PACA-ITS: A Multi-Agent System for Intelligent Virtual Laboratory Courses

Abstract

1. Introduction

2. Background Theory

3. Related Work

4. Materials and Methods

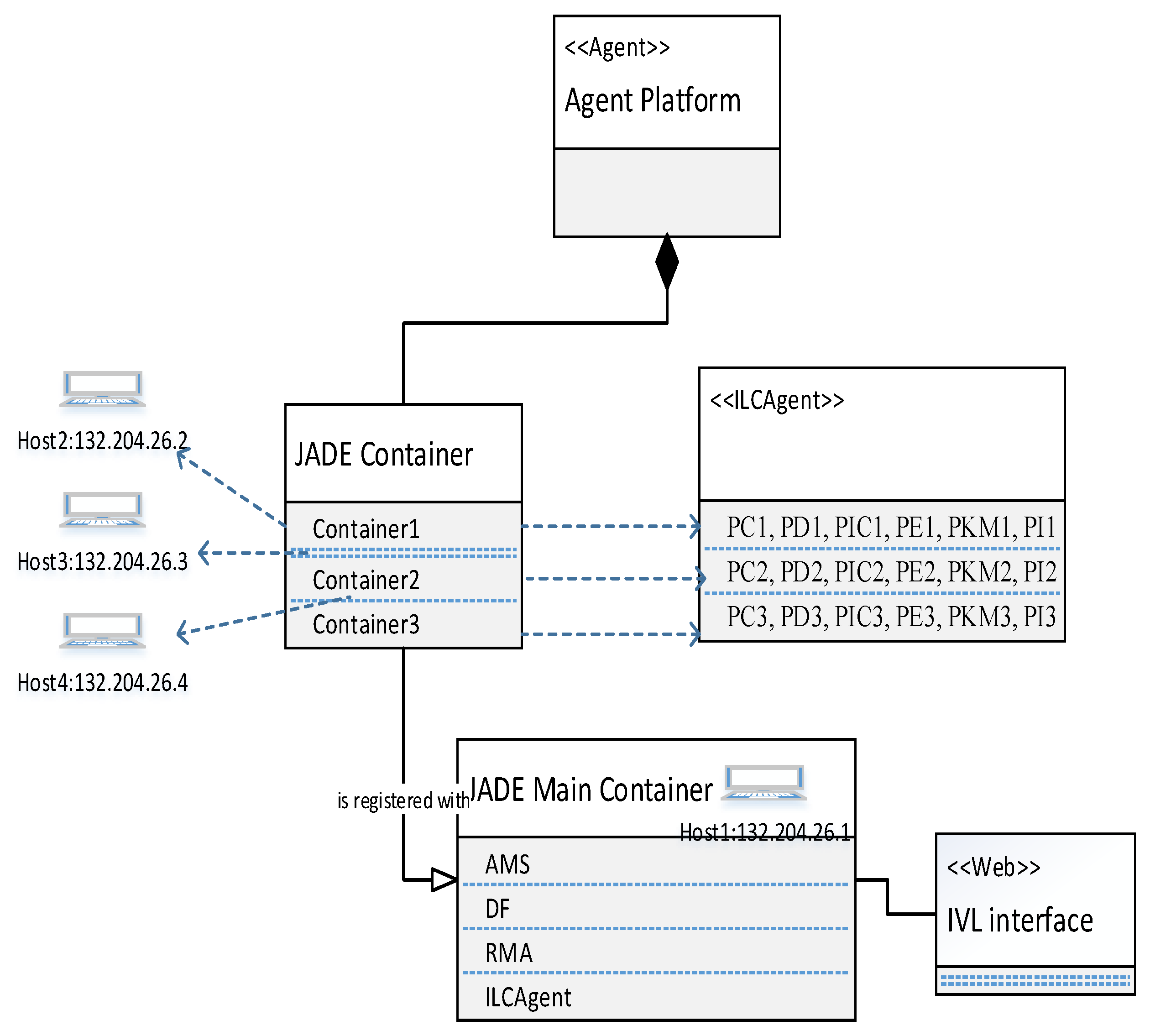

4.1. Identification of Multi-Agent Types and Responsibilities

Challenges to Implementing the System with the JADE Platform

4.2. Mapping the Implementation of PACA-ITS for C++ Programming

Integration Algorithm for Student–Agent Interaction

4.3. Domain/Expert Model

4.3.1. Long Term Memory (LTM)

4.3.2. Working Memory (WM)

4.3.3. Semantic Memory (SM)

4.4. Pedagogical Model

4.5. Learner/Student Model

4.6. Interface Model

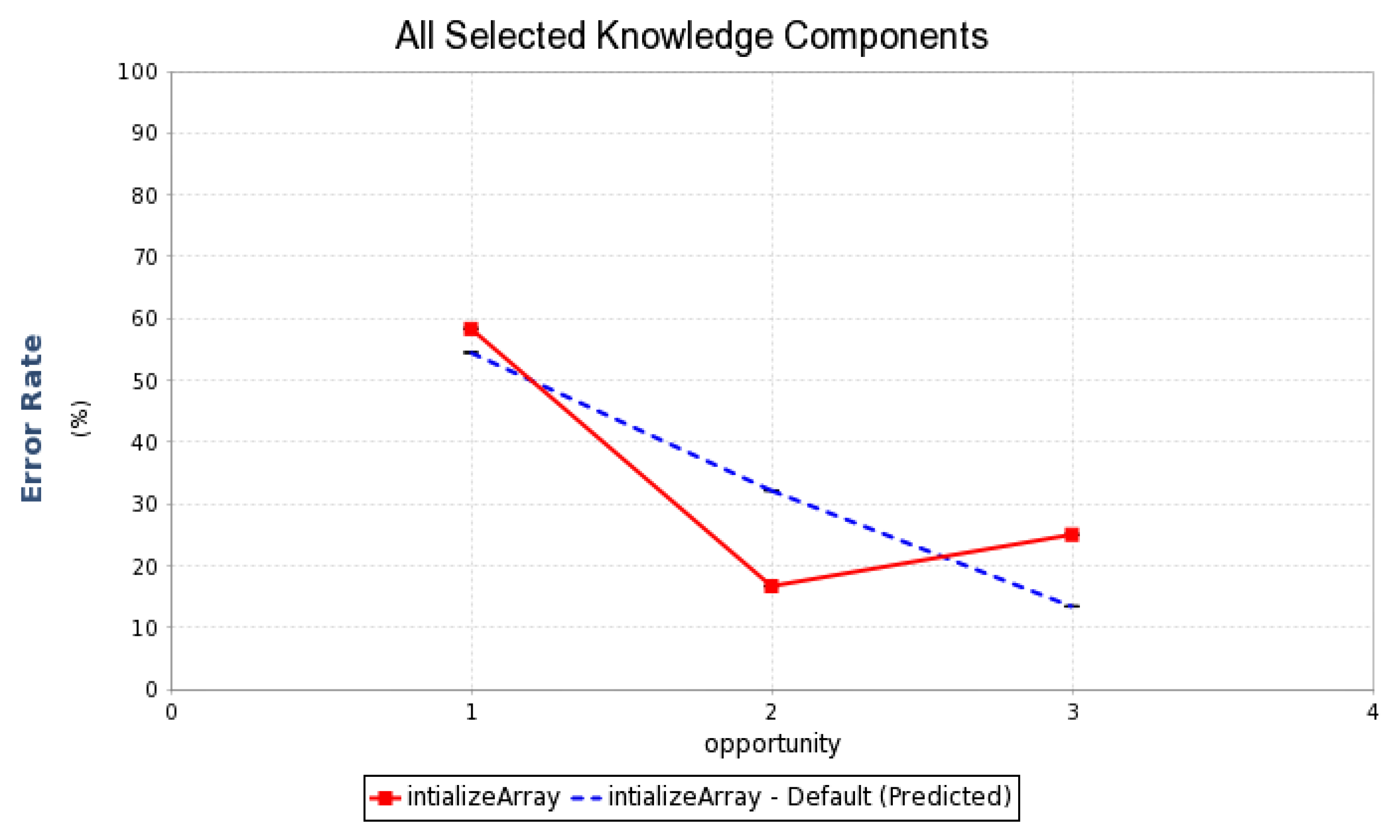

4.7. Reinforcement Learning

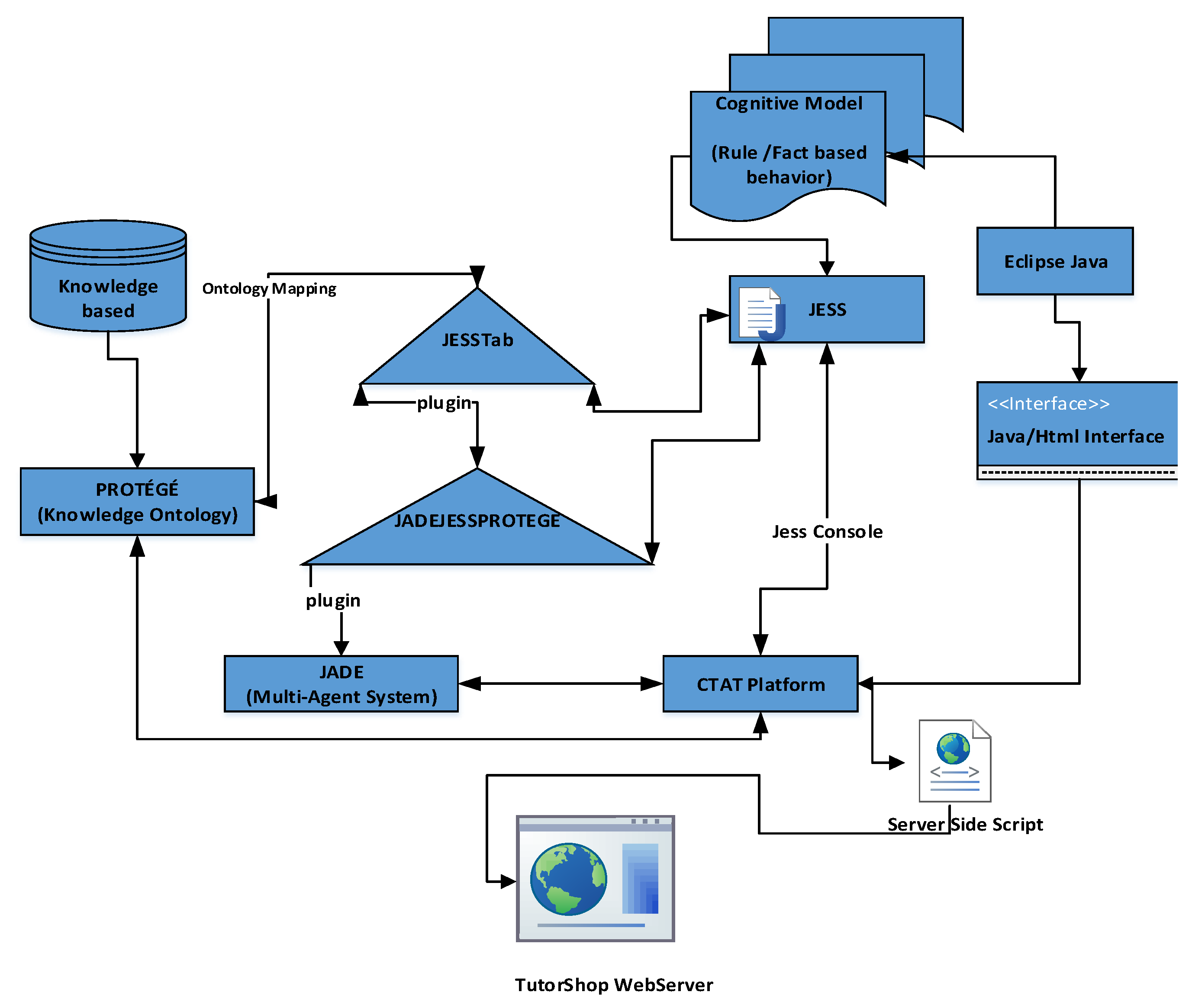

4.8. Infrastructure of Enabling Technologies Integration

5. Results

5.1. Working Memory Editor

5.2. Conflict Tree and Why Not Window

5.3. Jess Console and Behavior Recorder

5.4. Deployment through the TutorShop Webserver

6. Discussion

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

Appendix B

Appendix C

Appendix D

Appendix E

Appendix F

Appendix G

| Sr. No. | Architecture Design | Platform Type | Year | Reference |
|---|---|---|---|---|
| 1 | Virtual Laboratory | Multi-agent System | 2007 | [53] |
| 2 | Virtual Laboratory Platform | Virtual Simulation Experiment | 2008 | [54] |
| 3 | Online Virtual Laboratory (CyberLab) | Toolkit | 2008 | [55] |
| 4 | Virtual Laboratory Platform (VL-JM) | VL Platform | 2008 | [56] |
| 5 | Web-based Interactive Learning | Web Technologies | 2008 | [57] |
| 6 | Online Internet Laboratory (ONLINE I-LAB) | Internet-Assisted Laboratory | 2008 | [58] |
| 7 | Distributed Virtual Laboratory (DVL) | Architecture | 2009 | [59] |
| 8 | VLs and the Synchronous Collaborative Learning Practice | Web-Learning System Tool | 2009 | [60] |
| 9 | Virtual Laboratory Environment (VLE) | Software Tool | 2009 | [61] |
| 10 | Multi-agent Architecture | Experiment | 2010 | [62] |
| 11 | Architecture of the Remote Laboratory (LRA-ULE) | Educational Tool | 2010 | [63] |
| 12 | Virtual Laboratory for Robotics (VLR) | Virtual Tool | 2010 | [64] |
| 13 | Virtual Experiment | Multi-agent | 2010 | [65] |
| 14 | LAB Teaching Assistant (LABTA) | Agent-based | 2010 | [66] |
| 15 | Cyberinfrastructure (VLab) | Experiment and GUI Tool | 2011 | [67] |
| 16 | Artificial and Real Laboratory | Virtual Tool | 2011 | [68] |
| 17 | Virtual Laboratory Architecture | Architecture | 2011 | [68] |
| 18 | Web-Based Virtual Machine Laboratory | Semantic Web | 2011 | [69] |
| 19 | Virtual Computational Laboratory | Architecture | 2011 | [70] |
| 20 | Virtualized Infrastructure | Experiment | 2012 | [71] |
| 21 | Learning Environment (NoobLab) | Automated Assessment Tool | 2012 | [72] |
| 22 | Virtualized Infrastructure (V-Lab) | Experiment and GUI Tool | 2012 | [73] |
| 23 | Automatic Grading Platform | Automated Assessment Tool | 2012 | [74] |
| 24 | Real-Time Virtual Laboratory | Multi-agent System | 2012 | [75] |
| 25 | Virtual Embedded System Laboratory | Assessment Tool | 2012 | [76] |
| 26 | Virtual and Remote Laboratories | Automation Tool | 2012 | [77] |
| 27 | Educational Robotics Laboratories | Experiential Laboratories Tool | 2013 | [78] |
| 28 | VCL Virtual Laboratory | Virtual Tool | 2013 | [79] |
| 29 | Simulation Environment for a Virtual Laboratory | Simulation Tool | 2014 | [80] |
| 30 | Electronic Circuit Virtual Laboratory | System Architecture | 2014 | [81] |
| 31 | Collaborative Virtual Laboratory (VLAB-C) | Cloud-based Architecture | 2014 | [82] |
| 32 | Cloud-Based Virtual Laboratory (V-Lab) | Experiment | 2014 | [83] |
| 33 | Software Virtual and Robotics Tool | Experiment and GUI Tool | 2015 | [84] |
| 34 | IUVIRLAB | Virtual Tool | 2015 | [85] |
| 35 | Virtual Laboratory | Experiment | 2015 | [86] |
| 36 | Virtual Laboratory Platform | System Structure | 2015 | [87] |
| 37 | Virtual Laboratory Platform | Experiment and GUI Tool | 2015 | [88] |
| 38 | Virtual Laboratory for Computer Organization and Logic Design (COLDVL) | Experiment and GUI Tool | 2015 | [89] |
| 39 | Virtual Laboratories | Automata Model | 2015 | [90] |
| 40 | Cloud-Based Integrated Virtual Laboratories (CIVIL Model) | Model | 2016 | [91] |
| 41 | Virtual and Remote Labs | Analysis | 2016 | [92] |
| 42 | Virtual Laboratories for Education in Science, Technology, and Engineering | Analysis | 2016 | [93] |
| 43 | Virtual Laboratory Technology | Comparative Study Analysis | 2016 | [94] |
| 44 | Virtual Laboratory | Multi-agent System | 2016 | [95] |
| 45 | Virtual Laboratory of a Spider Crane | Simulink Model | 2016 | [96] |
| 46 | Virtual Laboratory Platform | Experiment and GUI Tool | 2016 | [97] |
| 47 | Virtual Laboratory HTML5 | Experiment and GUI Tool | 2016 | [98] |
| 48 | Collaborative Virtual Laboratory (VLAB-C) | Cloud Infrastructure | 2016 | [99] |
| 49 | Game Technologies VL | Platform | 2016 | [100] |
| 50 | Virtual Laboratory (VLs) | Tool | 2017 | [101] |
| 51 | CodeLab | Conversation-Based Educational Tool | 2018 | [102] |
| 52 | Web-based Remote FPGA Laboratory | Web-based Platform | 2019 | [103,104] |
| 53 | Network Virtual Lab | Framework of Virtual Laboratory System | 2019 | [104] |
| 54 | RoboSim | Robotics Simulation for Programming Virtual Linkbots | 2019 | [105] |
| 55 | ARCat | Programming Tool | 2019 | [106] |
| 56 | Virtual/Remote Labs | Virtual Reality Machines | 2019 | [107] |
| 57 | Remote Lab | Fully Open Integrated System (FOIS) | 2019 | [108] |

References

1. Ozana, S.; Docekal, T. The concept of virtual laboratory and PIL modeling with REX control system. In Proceedings of the 2017 21st International Conference on Process Control (PC), Štrbské Pleso, Slovakia, 6–9 June 2017; pp. 98–103.

2. Broisin, J.; Venant, R.; Vidal, P. Lab4CE: A remote laboratory for computer education. Int. J. Artif. Intell. Educ. 2017, 27, 154–180.

3. Diwakar, S.; Kumar, D.; Radhamani, R.; Sasidharakurup, H.; Nizar, N.; Achuthan, K.; Nedungadi, P.; Raman, R.; Nair, B.G. Complementing education via virtual labs: Implementation and deployment of remote laboratories and usage analysis in South Indian villages. Int. J. Online Eng. 2016, 12, 8–15.

4. Ahmed, S.; Karsiti, M.N.; Loh, R.N. Control Analysis and Feedback Techniques for Multi Agent Robots; IntechOpen: London, UK, 2009; p. 426. ISBN 978-3-902613-51-6.

5. Russell, S.J.; Norvig, P.; Canny, J.F.; Malik, J.M.; Edwards, D.D. Artificial Intelligence: A Modern Approach, 2nd ed.; Prentice Hall: Upper Saddle River, NJ, USA, 2003.

6. Franklin, S.; Graesser, A. Is it an Agent, or just a Program?: A Taxonomy for Autonomous Agents. In International Workshop on Agent Theories, Architectures, and Languages; Springer: Berlin/Heidelberg, Germany, 1996; pp. 21–35.

7. Wooldridge, M. An Introduction to Multiagent Systems; John Wiley & Sons: New York, NY, USA, 2009.

8. Odell, J.; Parunak, H.V.D.; Bauer, B. Extending UML for agents. Ann Arbor 2000, 1001, 48103.

9. Abushark, Y.; Thangarajah, J. AUML protocols: From specification to detailed design. In Proceedings of the 2013 International Conference on Autonomous Agents and Multi-Agent Systems, Saint Paul, MN, USA, 6–10 May 2013; pp. 1173–1174.

10. Bauer, B.; Müller, J.P.; Odell, J. Agent UML: A formalism for specifying multiagent software systems. Int. J. Softw. Eng. Knowl. Eng. 2001, 11, 207–230.

11. Da Silva, V.T.; Choren, R.; de Lucena, C.J. A UML based approach for modeling and implementing multi-agent systems. In Proceedings of the Third International Joint Conference on Autonomous Agents and Multiagent Systems, New York, NY, USA, 19–23 July 2004; Volume 2, pp. 914–921.

12. Bellifemine, F.L.; Caire, G.; Greenwood, D. Developing Multi-Agent Systems with JADE; John Wiley & Sons: Hoboken, NJ, USA, 2007; Volume 7.

13. Nikraz, M.; Caire, G.; Bahri, P.A. A Methodology for the Analysis and Design of Multi-Agent Systems using JADE. Comput. Syst. Sci. Eng. 2006, 21.

14. Da Silva, F.L.; Costa, A.H.R. Automatic Object-Oriented Curriculum Generation for Reinforcement Learning. In Proceedings of the 1st Workshop on Scaling-Up Reinforcement Learning (SURL), Skopje, North Macedonia, 18 September 2017; Available online: http://surl.tirl.info/proceedings/SURL-2017_paper_1.pdf (accessed on 1 October 2018).

15. Amir, O.; Doshi-Velez, F.; Sarne, D. Agent Strategy Summarization. In Proceedings of the 17th International Conference on Autonomous Agents and MultiAgent Systems, Stockholm, Sweden, 10–15 July 2018; pp. 1203–1207.

16. Meir, R.; Parkes, D. Playing the Wrong Game: Bounding Externalities in Diverse Populations of Agents. In Proceedings of the 17th International Conference on Autonomous Agents and MultiAgent Systems, Stockholm, Sweden, 10–15 July 2018; pp. 86–94.

17. Wu, J.; Ghosh, S.; Chollet, M.; Ly, S.; Mozgai, S.; Scherer, S. NADiA: Towards Neural Network Driven Virtual Human Conversation Agents. In Proceedings of the 17th International Conference on Autonomous Agents and MultiAgent Systems, Stockholm, Sweden, 10–15 July 2018; pp. 2262–2264.

18. Icarte, R.T.; Klassen, T.Q.; Valenzano, R.; McIlraith, S.A. Teaching multiple tasks to an RL agent using LTL. In Proceedings of the 17th International Conference on Autonomous Agents and MultiAgent Systems, Stockholm, Sweden, 10–15 July 2018; pp. 452–461.

19. Grover, A.; Al-Shedivat, M.; Gupta, J.K.; Burda, Y.; Edwards, H. Evaluating Generalization in Multiagent Systems using Agent-Interaction Graphs. In Proceedings of the International Conference on Autonomous Agents and MultiAgent Systems, Stockholm, Sweden, 10–15 July 2018.

20. Pöppel, J.; Kopp, S. Satisficing Models of Bayesian Theory of Mind for Explaining Behavior of Differently Uncertain Agents. In Proceedings of the 17th International Conference on Autonomous Agents and Multiagent Systems, Stockholm, Sweden, 10–15 July 2018.

21. Savarimuthu, B.T.R. Norm Learning in Multi-Agent Societies; University of Otago: Dunedin, New Zealand, 2011.

22. Sen, S. The Effects of Past Experience on Trust in Repeated Human-Agent Teamwork. In Proceedings of the 17th International Conference on Autonomous Agents and MultiAgent Systems, Stockholm, Sweden, 10–15 July 2018; pp. 514–522.

23. Metcalf, K.; Theobald, B.-J.; Apostoloff, N. Learning Sharing Behaviors with Arbitrary Numbers of Agents. In Proceedings of the 17th International Conference on Autonomous Agents and MultiAgent Systems, Stockholm, Sweden, 10–15 July 2018; pp. 1232–1240.

24. Nkambou, R.; Bourdeau, J.; Mizoguchi, R. Introduction: What are intelligent tutoring systems, and why this book? In Advances in Intelligent Tutoring Systems; Springer: Berlin/Heidelberg, Germany, 2010; Volume 308, pp. 1–12.

25. Nkambou, R. Modeling the domain: An introduction to the expert module. In Advances in Intelligent Tutoring Systems; Springer: Berlin/Heidelberg, Germany, 2010; Volume 308, pp. 15–32.

26. Mizoguchi, R.; Hayashi, Y.; Bourdeau, J. Ontology-based formal modeling of the pedagogical world: Tutor modeling. In Advances in Intelligent Tutoring Systems; Springer: Berlin/Heidelberg, Germany, 2010; Volume 308, pp. 229–247.

27. Woolf, B.P. Student modeling. In Advances in Intelligent Tutoring Systems; Springer: Berlin/Heidelberg, Germany, 2010; Volume 308, pp. 267–279.

28. Psyché, R.N.V. The Role of Ontologies in the Authoring of ITS: An Instructional Design Support Agent. 2008. Available online: https://www.researchgate.net/publication/327253898_The_Role_of_Ontologies_in_the_Authoring_of_ITS_an_Instructional_Design_Support_Agent (accessed on 1 October 2018).

29. Forgy, C.L. Rete: A fast algorithm for the many pattern/many object pattern match problem. In Readings in Artificial Intelligence and Databases; Elsevier: San Mateo, CA, USA, 1989; pp. 547–559.

30. Harry, E.; Edward, B. Making real virtual lab. Sci. Educ. Rev. 2005, 4, 2005.

31. Scanlon, E.; Morris, E.; di Paolo, T.; Cooper, M. Contemporary Approaches to Learning Science: Technologically-Mediated Practical Work. Stud. Sci. Educ. 2002, 38, 73–114.

32. Lewis, D.I. The pedagogical benefits and pitfalls of virtual tools for teaching and learning laboratory practices in the biological sciences. High. Educ. Acad. STEM York UK 2014.

33. Miyamoto, M.; Milkowski, D.M.; Young, C.D.; Lebowicz, L.A. Developing a Virtual Lab to Teach Essential Biology Laboratory Techniques. J. Biocommun. 2019, 43, 23–31.

34. Heradio, R.; de la Torre, L.; Dormido, S. Virtual and remote labs in control education: A survey. Annu. Rev. Control 2016, 42, 1–10.

35. Richter, T.; Tetour, Y.; Boehringer, D. Library of labs-a European project on the dissemination of remote experiments and virtual laboratories. In Proceedings of the 2011 IEEE International Symposium on Multimedia (ISM), Dana Point, CA, USA, 5–11 December 2011; pp. 543–548.

36. Lowe, D.; Conlon, S.; Murray, S.; Weber, L.; de la Villefromoy, M.; Lindsay, E. Labshare: Towards cross-institutional. In Internet Accessible Remote Laboratories: Scalable E-Learning Tools for Engineering and Science Disciplines; IGI Global: Hershey, PA, USA, 2011; Volume 453.

37. García-Zubia, J.; Angulo, I.; Hernández, U.; Orduña, P. Plug&Play remote lab for microcontrollers: WebLab-DEUSTO-PIC. In Proceedings of the 7th European Workshop on Microelectronics Education, San Jose, CA, USA, 28–30 May 2008; pp. 28–30.

38. De Jong, T.; Sotiriou, S.; Gillet, D. Innovations in STEM education: The Go-Lab federation of online labs. Smart Learn. Environ. 2014, 1, 3.

39. Lindsay, E.; Murray, S.; Stumpers, B.D. A toolkit for remote laboratory design & development. In Proceedings of the 2011 First Global Online Laboratory Consortium Remote Laboratories Workshop (GOLC), Cyberjaya, Malaysia, 19–20 December 2011; pp. 1–7.

40. Kaplan, J.; Yankelovich, N. Open wonderland: An extensible virtual world architecture. IEEE Internet Comput. 2011, 15, 38–45.

41. Achuthan, K.; Sreelatha, K.; Surendran, S.; Diwakar, S.; Nedungadi, P.; Humphreys, S.; Sreekala, S.C.O.; Pillai, Z.; Raman, R.; Deepthi, A.; et al. The VALUE@ Amrita Virtual Labs Project: Using web technology to provide virtual laboratory access to students. In Proceedings of the 2011 IEEE Global Humanitarian Technology Conference (GHTC), Seattle, WA, USA, 30 October–1 November 2011; pp. 117–121.

42. Rus, V.; Ştefănescu, D. Non-intrusive assessment of learners’ prior knowledge in dialogue-based intelligent tutoring systems. Smart Learn. Environ. 2016, 3, 2.

43. JAVA Agent DEvelopment Framework. 2018. Available online: http://jade.tilab.com/ (accessed on 1 October 2018).

44. Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 1998.

45. Dorça, F.A.; Lima, L.V.; Fernandes, M.A.; Lopes, C.R. Comparing strategies for modeling students learning styles through reinforcement learning in adaptive and intelligent educational systems: An experimental analysis. Expert Syst. Appl. 2013, 40, 2092–2101.

46. Watkins, C.J.; Dayan, P. Q-learning. Mach. Learn. 1992, 8, 279–292.

47. Aleven, V.; McLaren, B.M.; Sewall, J.; Koedinger, K.R. The cognitive tutor authoring tools (CTAT): Preliminary evaluation of efficiency gains. In Proceedings of the International Conference on Intelligent Tutoring Systems, Jhongli, Taiwan, 26–30 June 2006; pp. 61–70.

48. Koedinger, K.R.; Anderson, J.R.; Hadley, W.H.; Mark, M.A. Intelligent tutoring goes to school in the big city. Int. J. Artif. Intell. Educ. 1997, 8, 30–43.

49. Aleven, V.; Sewall, J.; Popescu, O.; Xhakaj, F.; Chand, D.; Baker, R.; Wang, Y.; Siemens, G.; Rosé, C.; Gasevic, D. The beginning of a beautiful friendship? Intelligent tutoring systems and MOOCs. In Proceedings of the International Conference on Artificial Intelligence in Education, Madrid, Spain, 22–26 June 2015; pp. 525–528.

50. Aravind, V.R.; Refugio, C. Efficient learning with intelligent tutoring across cultures. World J. Educ. Technol. Curr. Issues 2019.

51. Koedinger, K.R.; Baker, R.S.; Cunningham, K.; Skogsholm, A.; Leber, B.; Stamper, J. A data repository for the EDM community: The PSLC DataShop. In Handbook of Educational Data Mining; CRC Press: Boca Raton, FL, USA, 2010; Volume 43, pp. 43–56.

52. Jaber, M.Y.; Glock, C.H. A learning curve for tasks with cognitive and motor elements. Comput. Ind. Eng. 2013, 64, 866–871.

53. Mechta, D.; Harous, S.; Djoudi, M.; Douar, A. An Agent-based approach for designing and implementing a virtual laboratory. In Proceedings of the IIT’07 4th International Conference on Innovations in Information Technology, Dubai, United Arab Emirates, 18–20 November 2007; pp. 496–500.

54. Xie, W.; Yang, X.; Li, F. A virtual laboratory platform and simulation software based on web. In Proceedings of the ICARCV 2008 10th International Conference on Control, Automation, Robotics and Vision, Hanoi, Vietnam, 2–5 December 2008; pp. 1650–1654.

55. Zhao, K.; Evett, M.P. CyberLab: An Online Virtual Laboratory Toolkit for Non-programmers. In Proceedings of the ICALT’08. Eighth IEEE International Conference on Advanced Learning Technologies, Cantabria, Spain, 1–5 July 2008; pp. 316–318.

56. Sheng, Y.; Wang, W.; Wang, J.; Chen, J. A virtual laboratory platform based on integration of Java and MATLAB. In Proceedings of the International Conference on Web-Based Learning, Magdeburg, Germany, 23–25 September 2008; pp. 285–295.

57. Moon, I.; Han, S.; Choi, K.; Kim, D.; Jeon, C.; Lee, S. Virtual education system for the C programming language. In Proceedings of the International Conference on Web-Based Learning, Jinhua, China, 20–22 August 2008; pp. 196–207.

58. Salihbegovic, A.; Ribic, S. Development of online internet laboratory (online I-lab). In Innovative Techniques in Instruction Technology, E-learning, E-assessment, and Education; Springer: Dordrecht, The Netherlands, 2008; pp. 1–6.

59. Grimaldi, D.; Rapuano, S. Hardware and software to design virtual laboratory for education in instrumentation and measurement. Measurement 2009, 42, 485–493.

60. Jara, C.A.; Candelas, F.A.; Torres, F.; Dormido, S.; Esquembre, F.; Reinoso, O. Real-time collaboration of virtual laboratories through the Internet. Comput. Educ. 2009, 52, 126–140.

61. Quesnel, G.; Duboz, R.; Ramat, É. The Virtual Laboratory Environment–An operational framework for multi-modelling, simulation and analysis of complex dynamical systems. Simul. Model. Pract. Theory 2009, 17, 641–653.

62. Combes, M.; Buin, B.; Parenthoën, M.; Tisseau, J. Multiscale multiagent architecture validation by virtual instruments in molecular dynamics experiments. Procedia Comput. Sci. 2010, 1, 761–770.

63. Domínguez, M.; Reguera, P.; Fuertes, J.; Prada, M.; Alonso, S.; Morán, A.; Fernández, D. Development of an educational tool in LabVIEW and its integration in remote laboratory of automatic control. IFAC Proc. Vol. 2010, 42, 101–106.

64. Potkonjak, V.; Vukobratović, M.; Jovanović, K.; Medenica, M. Virtual Mechatronic/Robotic laboratory—A step further in distance learning. Comput. Educ. 2010, 55, 465–475.

65. Li, X.; Ma, F.; Zhong, S.; Tang, L.; Han, Z. Research on virtual experiment intelligent tutoring system based on multi-agent. In Proceedings of the International Conference on Technologies for E-Learning and Digital Entertainment, Changchun, China, 16–18 August 2010; Springer: Berlin/Heidelberg, Germany, 2010; Volume 6249, pp. 100–110.

66. Yang, C. LABTA: An Agent-Based Intelligent Teaching Assistant for Experiment Courses. In Proceedings of the International Conference on Web-Based Learning, Shanghai, China, 8–10 December 2010; pp. 309–317.

67. Da Silveira, P.R.; Valdez, M.N.; Wentzcovitch, R.M.; Pierce, M.; da Silva, C.R.; Yuen, D.A. Virtual laboratory for planetary materials (VLab): An updated overview of system service architecture. In Proceedings of the 2011 TeraGrid Conference: Extreme Digital Discovery, Salt Lake City, UT, USA, 18–21 July 2011; p. 33.

68. Logar, V.; Karba, R.; Papič, M.; Atanasijević-Kunc, M. Artificial and real laboratory environment in an e-learning competition. Math. Comput. Simul. 2011, 82, 517–524.

69. Salonen, J.; Nykänen, O.; Ranta, P.; Nurmi, J.; Helminen, M.; Rokala, M. An implementation of a semantic, web-based virtual machine laboratory prototyping environment. In Proceedings of the International Semantic Web Conference, Bonn, Germany, 23–27 October 2011; pp. 221–236.

70. Juszczyszyn, K.; Paprocki, M.; Prusiewicz, A.; Sieniawski, L. Personalization and content awareness in online lab–virtual computational laboratory. In Proceedings of the Asian Conference on Intelligent Information and Database Systems, Daegu, Korea, 20–22 April 2011; pp. 367–376.

71. Uludag, S.; Guler, E.; Karakus, M.; Turner, S.W. An affordable virtual laboratory infrastructure to complement a variety of computing classes. J. Comput. Sci. Coll. 2012, 27, 158–166.

72. Neve, P.; Hunter, G.; Livingston, D.; Orwell, J. NoobLab: An intelligent learning environment for teaching programming. In Proceedings of the 2012 IEEE/WIC/ACM International Joint Conferences on Web Intelligence and Intelligent Agent Technology, IEEE Computer Society, Washington, DC, USA, 4–7 December 2012; Volume 3, pp. 357–361.

73. Xu, L.; Huang, D.; Tsai, W.-T. V-lab: A cloud-based virtual laboratory platform for hands-on networking courses. In Proceedings of the 17th ACM Annual Conference on Innovation and Technology in Computer Science Education, Larnaca, Cyprus, 2–4 July 2012; pp. 256–261.

74. Sánchez, C.; Gómez-Estern, F.; de la Peña, D.M. A virtual lab with automatic assessment for nonlinear controller design exercises. IFAC Proc. Vol. 2012, 45, 172–176.

75. Al-hamdani, A.Y.H.; Altaie, A.M. Designing and implementation of a real time virtual laboratory based on multi-agents system. In Proceedings of the 2012 IEEE Conference on Open Systems (ICOS), Kuala Lumpur, Malaysia, 21–24 October 2012; pp. 1–6.

76. Mohanty, R.; Routray, A. Advanced virtual embedded system laboratory. In Proceedings of the 2012 2nd Interdisciplinary Engineering Design Education Conference (IEDEC), Santa Clara, CA, USA, 19–20 March 2012; pp. 92–95.

77. Leão, C.P.; Soares, F.; Rodrigues, H.; Seabra, E.; Machado, J.; Farinha, P.; Costa, S. Web-assisted laboratory for control education: Remote and virtual environments. In The Impact of Virtual, Remote, and Real Logistics Labs; Springer: Berlin/Heidelberg, Germany, 2012; pp. 62–72.

78. Caci, B.; Chiazzese, G.; D’Amico, A. Robotic and virtual world programming labs to stimulate reasoning and visual-spatial abilities. Procedia-Soc. Behav. Sci. 2013, 93, 1493–1497.

79. Jordá, J.M.M. Virtual Tools: Virtual Laboratories for Experimental science—An Experience with VCL Tool. Procedia-Soc. Behav. Sci. 2013, 106, 3355–3365.

80. Dias, F.; Matutino, P.M.; Barata, M. Virtual laboratory for educational environments. In Proceedings of the 2014 11th International Conference on Remote Engineering and Virtual Instrumentation (REV), Porto, Portugal, 26–28 February 2014; pp. 191–194.

81. Yang-Mei, L.; Bo, C. Electronic Circuit Virtual Laboratory Based on LabVIEW and Multisim. In Proceedings of the 2014 7th International Conference on Intelligent Computation Technology and Automation (ICICTA), Changsha, China, 25–26 October 2014; pp. 222–225.

82. Yu, J.; Dong, K. VLAB-C: A cloud service platform for collaborative virtual laboratory. In Proceedings of the 2014 IEEE International Conference on Services Computing (SCC), Anchorage, AK, USA, 27 June–2 July 2014; pp. 829–835.

83. Xu, L.; Huang, D.; Tsai, W.-T. Cloud-based virtual laboratory for network security education. IEEE Trans. Educ. 2014, 57, 145–150.

84. Esquembre, F. Facilitating the creation of virtual and remote laboratories for science and engineering education. IFAC-PapersOnLine 2015, 48, 49–58.

85. İnce, E.; Kırbaşlar, F.G.; Güneş, Z.Ö.; Yaman, Y.; Yolcu, Ö.; Yolcu, E. An innovative approach in virtual laboratory education: The case of “IUVIRLAB” and relationships between communication skills with the usage of IUVIRLAB. Procedia-Soc. Behav. Sci. 2015, 195, 1768–1777.

86. Achuthan, K.; Bose, L.S. Concept mapping and assessment of virtual laboratory experimental knowledge. In Proceedings of the 2015 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Kochi, India, 10–13 August 2015; pp. 887–893.

87. Chen, X. Research on User Identity Authentication Technology for Virtual Laboratory System. In Proceedings of the 2015 Sixth International Conference on Intelligent Systems Design and Engineering Applications (ISDEA), Guiyang, China, 18–19 August 2015; pp. 688–691.

88. Li, Y.; Xiao, L.; Sheng, Y. Virtual laboratory platform for computer science curricula. In Proceedings of the 2015 IEEE Frontiers in Education Conference (FIE), El Paso, TX, USA, 21–24 October 2015; pp. 1–7.

89. Roy, G.; Ghosh, D.; Mandal, C. A virtual laboratory for computer organisation and logic design (COLDVL) and its utilisation for MOOCs. In Proceedings of the 2015 IEEE 3rd International Conference on MOOCs, Innovation and Technology in Education (MITE), Amritsar, India, 1–2 October 2015; pp. 284–289.

90. Chezhin, M.S.; Efimchik, E.A.; Lyamin, A.V. Automation of variant preparation and solving estimation of algorithmic tasks for virtual laboratories based on automata model. In Proceedings of the International Conference on E-Learning, E-Education, and Online Training, Novedrate, Italy, 16–18 September 2015; pp. 35–43.

91. Erdem, M.B.; Kiraz, A.; Eski, H.; Çiftçi, Ö.; Kubat, C. A conceptual framework for cloud-based integration of Virtual laboratories as a multi-agent system approach. Comput. Ind. Eng. 2016, 102, 452–457.

92. Heradio, R.; de la Torre, L.; Galan, D.; Cabrerizo, F.J.; Herrera-Viedma, E.; Dormido, S. Virtual and remote labs in education: A bibliometric analysis. Comput. Educ. 2016, 98, 14–38.

93. Potkonjak, V.; Gardner, M.; Callaghan, V.; Mattila, P.; Guetl, C.; Petrović, V.M.; Jovanović, K. Virtual laboratories for education in science, technology, and engineering: A review. Comput. Educ. 2016, 95, 309–327.

94. Trnka, P.; Vrána, S.; Šulc, B. Comparison of Various Technologies Used in a Virtual Laboratory. IFAC-PapersOnLine 2016, 49, 144–149.

95. Castillo, L. A virtual laboratory for multiagent systems: Joining efficacy, learning analytics and student satisfaction. In Proceedings of the 2016 International Symposium on Computers in Education (SIIE), Salamanca, Spain, 13–15 September 2016; pp. 1–6.

96. Chacón, J.; Farias, G.; Vargas, H.; Dormido, S. Virtual laboratory of a Spider Crane: An implementation based on an interoperability protocol. In Proceedings of the 2016 IEEE Conference on Control Applications (CCA), Buenos Aires, Argentina, 19–22 September 2016; pp. 827–832.

97. Francis, S.P.; Kanikkolil, V.; Achuthan, K. Learning curve analysis for virtual laboratory experimentation. In Proceedings of the 2016 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Jaipur, India, 21–24 September 2016; pp. 1073–1078.

98. Sheng, Y.; Huang, J.; Zhang, F.; An, Y.; Zhong, P. A virtual laboratory based on HTML5. In Proceedings of the 2016 11th International Conference on Computer Science & Education (ICCSE), Nagoya, Japan, 23–25 August 2016; pp. 299–302.

99. Yu, J.; Dong, K.; Zheng, Y. VLAB-C: Collaborative Virtual Laboratory in Cloud Computing and Its Applications. In Big Data Applications and Use Cases; Springer: Berlin/Heidelberg, Germany, 2016; pp. 145–174.

100. Daineko, Y.; Ipalakova, M.; Muhamedyev, R.; Brodyagina, M.; Yunnikova, M.; Omarov, B. Use of Game Technologies for the Development of Virtual Laboratories for Physics Study. In Proceedings of the International Conference on Digital Transformation and Global Society, St. Petersburg, Russia, 22–24 June 2016; pp. 422–428.

101. Achuthan, K.; Francis, S.P.; Diwakar, S. Augmented reflective learning and knowledge retention perceived among students in classrooms involving virtual laboratories. Educ. Inf. Technol. 2017, 22, 2825–2855.

102. Mor, E.; Santanach, F.; Tesconi, S.; Casado, C. CodeLab: Designing a Conversation-Based Educational Tool for Learning to Code. In Proceedings of the International Conference on Human-Computer Interaction, Las Vegas, NV, USA, 15–20 July 2018; pp. 94–101.

103. Wan, H.; Liu, K.; Lin, J.; Gao, X. A Web-based Remote FPGA Laboratory for Computer Organization Course. In Proceedings of the 2019 on Great Lakes Symposium on VLSI, Tysons Corner, VA, USA, 9–11 May 2019; pp. 243–248.

104. Si, H.; Sun, C.; Chen, B.; Shi, L.; Qiao, H. Analysis of Socket Communication Technology Based on Machine Learning Algorithms Under TCP/IP Protocol in Network Virtual Laboratory System. IEEE Access 2019, 7, 80453–80464.

105. Gucwa, K.J.; Cheng, H.H. RoboSim: A simulation environment for programming virtual robots. Eng. Comput. 2018, 34, 475–485.

106. Deng, X.; Jin, Q.; Wang, D.; Sun, F. ARCat: A Tangible Programming Tool for DFS Algorithm Teaching. In Proceedings of the 18th ACM International Conference on Interaction Design and Children, Boise, ID, USA, 12–15 June 2019; pp. 533–537.

107. Morales-Menendez, R.; Ramírez-Mendoza, R.A. Virtual/Remote Labs for Automation Teaching: A Cost Effective Approach. IFAC-PapersOnLine 2019, 52, 266–271.

108. Sanchez-Herrera, R.; Mejías, A.; Márquez, M.; Andújar, J. The Remote Access to Laboratories: A Fully Open Integrated System. IFAC-PapersOnLine 2019, 52, 121–126.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Munawar, S.; Khalil Toor, S.; Aslam, M.; Aimeur, E. PACA-ITS: A Multi-Agent System for Intelligent Virtual Laboratory Courses. Appl. Sci. 2019, 9, 5084. https://doi.org/10.3390/app9235084

