Reward History Modulates the Processing of Task-Irrelevant Emotional Faces in a Demanding Task

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Design and Materials

2.3. Procedures

2.3.1. Value Learning Phase

2.3.2. Test Phase

2.4. EEG Recordings and Analyses

3. Results

3.1. Behavioral Results

3.1.1. Learning Task

3.1.2. Test Phase

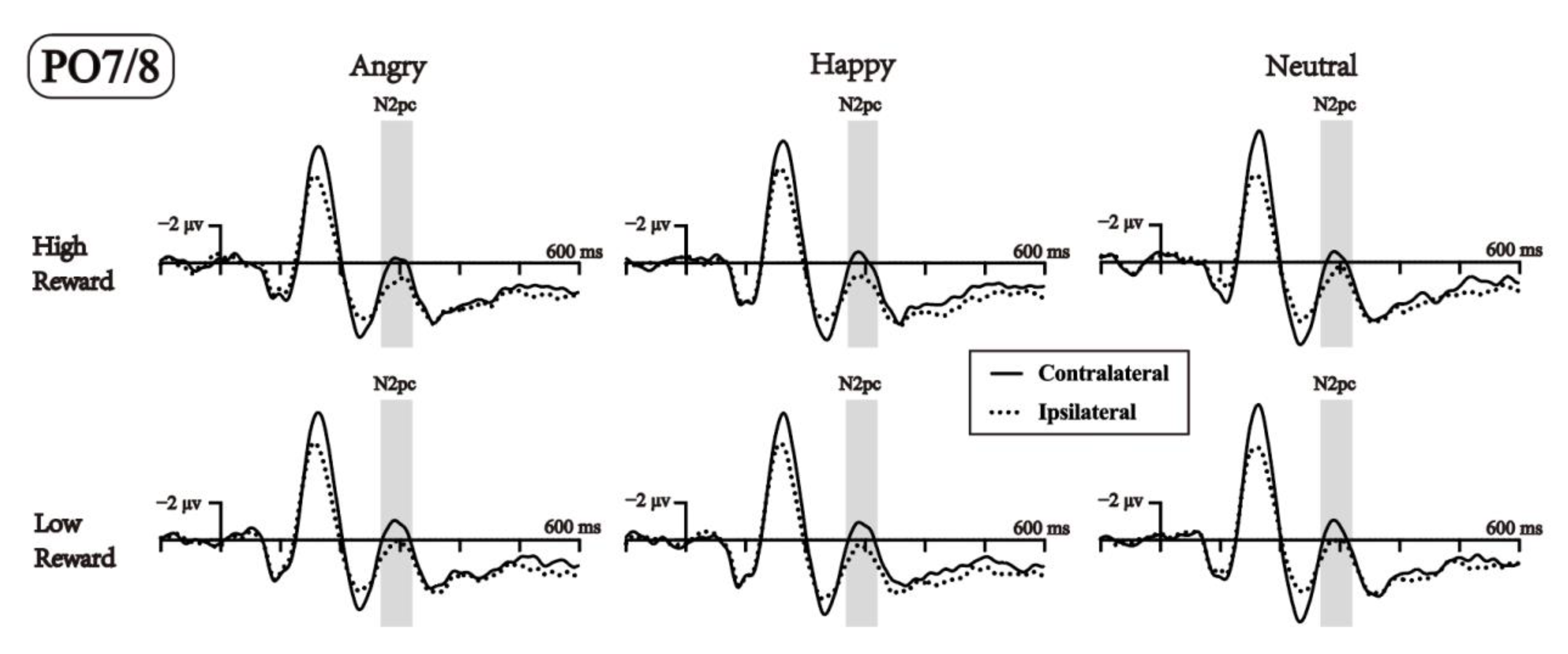

3.2. ERP Results during the Test Phase

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Measure | High-Reward Angry | High-Reward Happy | High-Reward Neutral | Low-Reward Angry | Low-Reward Happy | Low-Reward Neutral |
|---|---|---|---|---|---|---|
| RTs, ms (SE) | 715 (16) | 721 (17) | 716 (18) | 717 (18) | 717 (17) | 721 (17) |
| Accuracy, % (SE) | 77.6 (1.2) | 79.5 (1.2) | 76.5 (1.3) | 77.4 (1.2) | 78.7 (1.3) | 80.1 (1.1) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, N.-X.; Wei, P. Reward History Modulates the Processing of Task-Irrelevant Emotional Faces in a Demanding Task. Brain Sci. 2023, 13, 874. https://doi.org/10.3390/brainsci13060874
