The Impact of the Perception of Primary Facial Emotions on Corticospinal Excitability

Abstract

1. Introduction

2. Methods

2.1. Participants

2.2. Stimuli

2.3. Electromyographic Recordings and Transcranial Magnetic Stimulation (TMS)

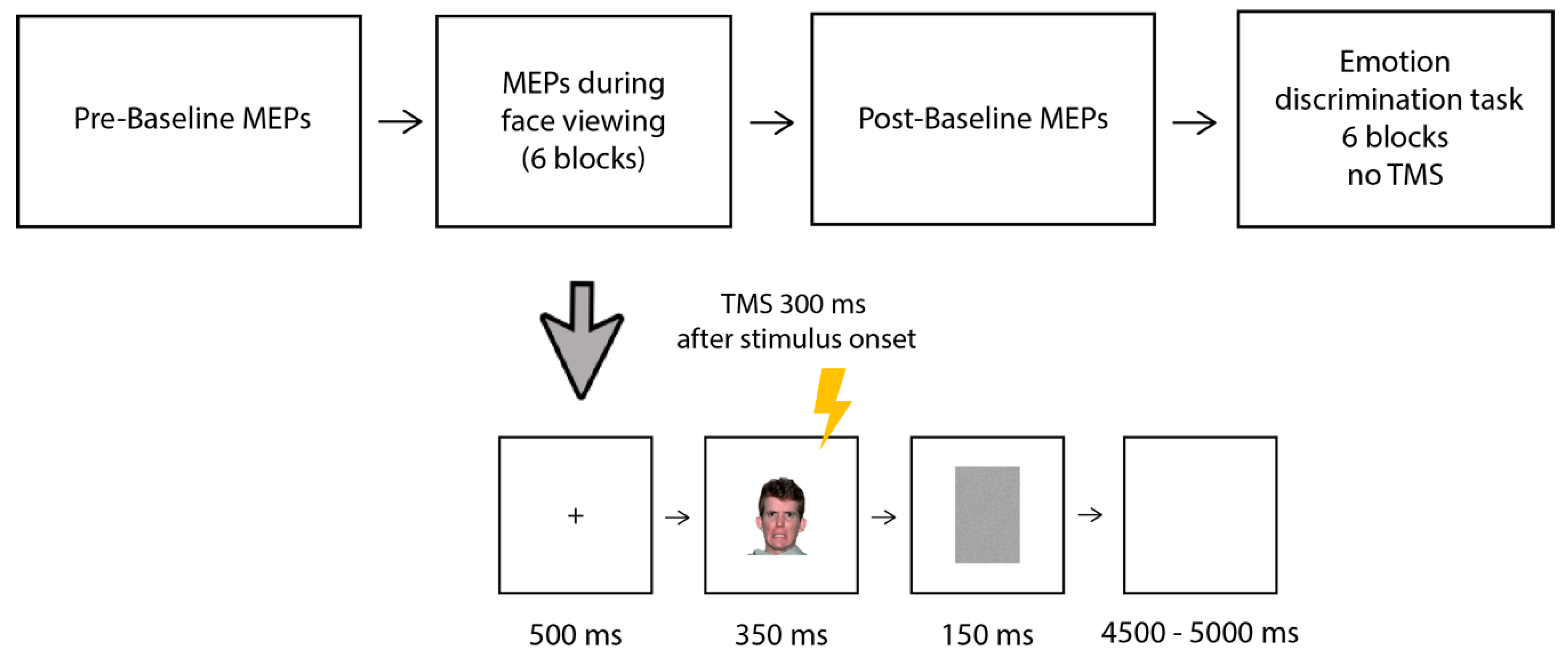

2.4. Procedure

3. Data Analyses and Results

3.1. Data Analyses

3.2. Results

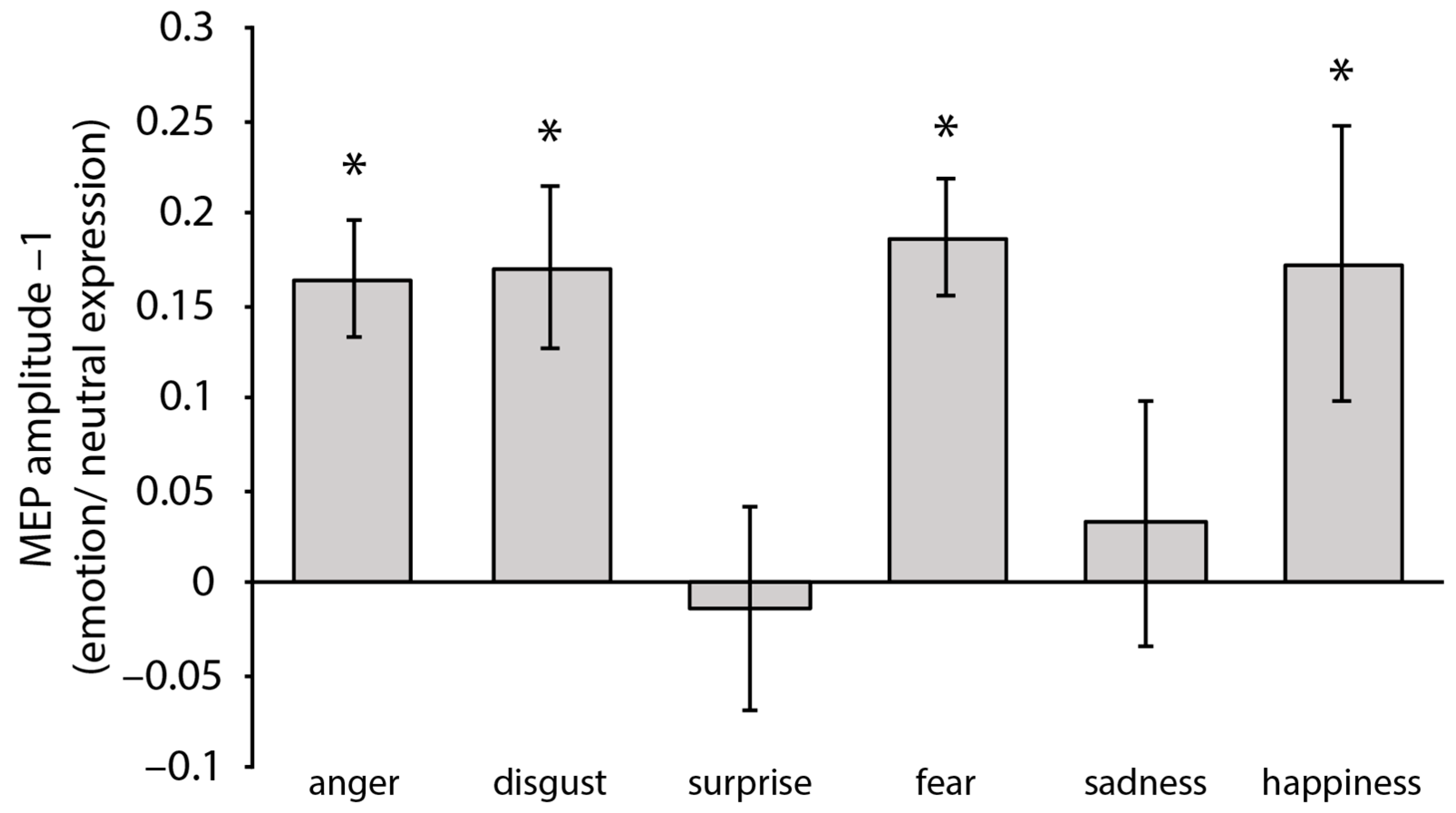

3.2.1. MEP

3.2.2. Emotion Discrimination Task (No TMS)

3.2.3. Online Ratings (No TMS)

3.3. Correlational Analyses

4. Discussion

4.1. Fear and Anger

4.2. Disgust

4.3. Happiness

4.4. Sadness and Surprise

4.5. General Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | Anger | Disgust | Surprise | Fear | Sadness | Happiness |
|---|---|---|---|---|---|---|
| Recognition accuracy (%) | 93.05 (9.60) | 92.86 (8.48) | 89.59 (11.56) | 89.91 (9.72) | 84.91 (12.37) | 91.95 (10.76) |

| | Anger | Disgust | Surprise | Fear | Sadness | Happiness | Neutral |
|---|---|---|---|---|---|---|---|
| Arousal | 6.65 (1.49) | 6.38 (1.51) | 5.49 (1.50) | 5.79 (1.48) | 4.82 (1.49) | 3.67 (2.14) | 2.45 (1.31) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

MDPI and ACS Style: Fiori, F.; Ciricugno, A.; Cattaneo, Z.; Ferrari, C. The Impact of the Perception of Primary Facial Emotions on Corticospinal Excitability. Brain Sci. 2023, 13, 1291. https://doi.org/10.3390/brainsci13091291

AMA Style: Fiori F, Ciricugno A, Cattaneo Z, Ferrari C. The Impact of the Perception of Primary Facial Emotions on Corticospinal Excitability. Brain Sciences. 2023; 13(9):1291. https://doi.org/10.3390/brainsci13091291

Chicago/Turabian Style: Fiori, Francesca, Andrea Ciricugno, Zaira Cattaneo, and Chiara Ferrari. 2023. "The Impact of the Perception of Primary Facial Emotions on Corticospinal Excitability" Brain Sciences 13, no. 9: 1291. https://doi.org/10.3390/brainsci13091291

APA Style: Fiori, F., Ciricugno, A., Cattaneo, Z., & Ferrari, C. (2023). The Impact of the Perception of Primary Facial Emotions on Corticospinal Excitability. Brain Sciences, 13(9), 1291. https://doi.org/10.3390/brainsci13091291