Effect of Sound Coding Strategies on Music Perception with a Cochlear Implant

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design

2.2. Population Characteristics

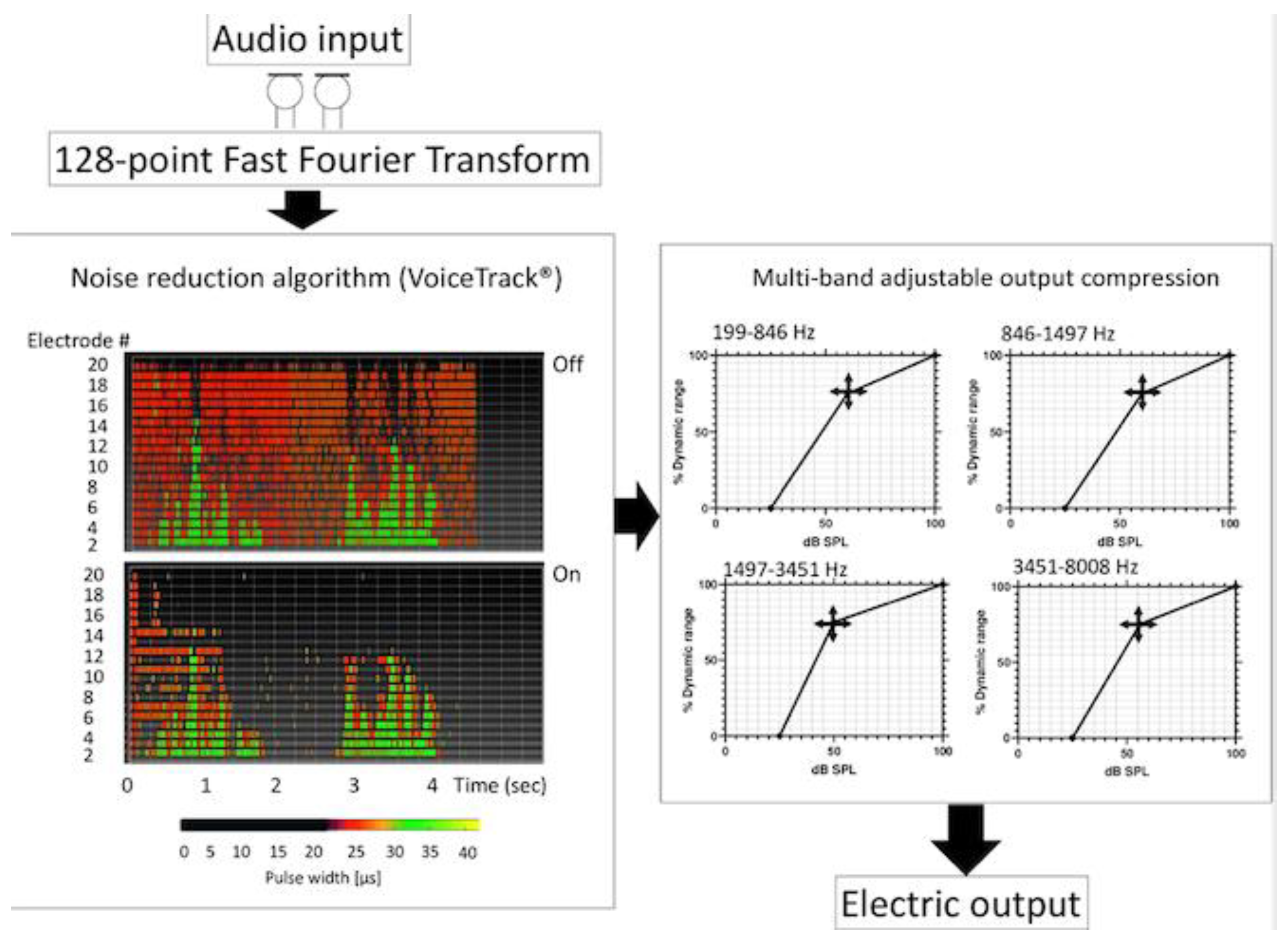

2.3. Coding and Sound Processing Strategies

2.4. Clinical Data

2.5. Questionnaires

2.6. Music Test

2.7. Statistical Tests

3. Results

3.1. CI and Sound Processing Strategies

3.2. Musical Experience

3.3. Music Test

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

| ID# | Sex | Age (years) | Etiology | Hearing Deprivation | CI Experience | CI Side | Processor | Active/Total Electrodes | Initial Strategy |
|---|---|---|---|---|---|---|---|---|---|
| 1 | M | 50 | Idiopathic | 3 | 5 | L | SP | 18/20 | Crystalis |
| 2 | M | 53 | Trauma | 1 | 7 | R | SP | 18/20 | Crystalis |
| 3 | M | 47 | Congenital | 1 | 3 | L | SP | 18/20 | Crystalis |
| 4 | M | 23 | Congenital | 1 | 4 | R | SP | 20/20 | Crystalis |
| 5 | F | 51 | Idiopathic | 1 | 4 | L | SP | 17/20 | Crystalis |
| 6 | F | 62 | Idiopathic | 1 | 19 | L | SP | 9/15 | Crystalis |
| 7 | M | 67 | Idiopathic | 1 | 11 | L | CX | 15/15 | Crystalis |
| 8 | M | 62 | Idiopathic | 48 | 3 | L | SP | 19/20 | Crystalis |
| 9 | F | 67 | Idiopathic | 37 | 17 | L | CX | 12/15 | MPIS |
| 10 | F | 58 | Otosclerosis | 14 | 19 | R | CX | 9/15 | MPIS |
| 11 | F | 74 | Otosclerosis | 1 | 10 | L | SP | 16/20 | MPIS |
| 12 | M | 63 | Idiopathic | 1 | 4 | R | SP | 20/20 | Crystalis |
| 13 | F | 54 | Idiopathic | 1 | 5 | BIN | SP | 12/12 + 12/12 | Crystalis |
| 14 | F | 55 | Idiopathic | 8 | 6 | BIN | SP | 12/12 + 12/12 | Crystalis |
| 15 | M | 69 | Ménière’s | 29 | 6 | L | SP | 16/20 | Crystalis |
| 16 | F | 56 | Congenital | 28 | 16 | R | CX | 11/15 | MPIS |
| 17 | F | 47 | Idiopathic | 3 | 3 | R | SP | 17/20 | Crystalis |
| 18 | F | 44 | Idiopathic | 2 | 2 | L | SP | 16/20 | Crystalis |
| 19 | F | 38 | Congenital | 1 | 6 | BIN | SP | 12/12 + 12/12 | MPIS |
| 20 | F | 38 | Idiopathic | 3 | 4 | BIL | SP | 16/20 + 18/20 | MPIS |
| 21 | M | 69 | Trauma | 1 | 4 | R | SP | 18/20 | Crystalis |
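The group sizes reported in the questionnaire tables (MPIS n = 6, Crystalis XDP n = 15) follow directly from the Initial Strategy column of the participant table. The short Python sketch below tallies that column to make the link explicit. It assumes the per-participant strategies are transcribed by hand from the table, with participant 10 read as MPIS; the names `INITIAL_STRATEGY` and `group_sizes` are hypothetical and are not part of the study's analysis.

```python
from collections import Counter

# Initial coding strategy per participant ID, transcribed by hand from the
# participant table (assumption: participant 10 is read as MPIS).
INITIAL_STRATEGY = {
    1: "Crystalis", 2: "Crystalis", 3: "Crystalis", 4: "Crystalis",
    5: "Crystalis", 6: "Crystalis", 7: "Crystalis", 8: "Crystalis",
    9: "MPIS", 10: "MPIS", 11: "MPIS", 12: "Crystalis",
    13: "Crystalis", 14: "Crystalis", 15: "Crystalis", 16: "MPIS",
    17: "Crystalis", 18: "Crystalis", 19: "MPIS", 20: "MPIS",
    21: "Crystalis",
}


def group_sizes(strategies: dict) -> Counter:
    """Count how many participants started with each coding strategy."""
    return Counter(strategies.values())


if __name__ == "__main__":
    sizes = group_sizes(INITIAL_STRATEGY)
    # Prints the MPIS and Crystalis group sizes, then the total.
    print(sizes["MPIS"], sizes["Crystalis"], sum(sizes.values()))
```

Run as a script, it prints `6 15 21`, matching the n values in the column headers of the questionnaire tables.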

| Item | Subitems/Choices | Before HL | Before CI | MPIS (n = 6) | Crystalis XDP (n = 15) |
|---|---|---|---|---|---|
| How important is music in your life? | | - | - | 3.7 ± 0.42 | 3.6 ± 0.34 |
| Do you attend musical events? | | - | - | 2 | 9 |
| Do you look for new musical releases? | | - | - | 3 | 2 |
| Do you read publications on music? | | - | - | 2 | 6 |
| How often do you listen to music? | Often | - | 10 | 2 | 3 |
| | Sometimes | - | 7 | 4 | 9 |
| | Never | - | 4 | 0 | 3 |
| How much music daily? | <30 min | 5 | 16 | 2 | 8 |
| | 30–60 min | 11 | 2 | 3 | 6 |
| | 1–2 h | 1 | 2 | 0 | 0 |
| | >2 h | 3 | 0 | 1 | 0 |
| | All day long | 1 | 1 | 0 | 1 |

| Item | Subitems/Choices | MPIS (n = 6) | Crystalis XDP (n = 15) |
|---|---|---|---|
| How does music sound with CI? | 0: Unnatural to 5: Natural | 4.0 ± 0.26 [3–5] | 2.9 ± 0.28 [1–5] |
| | 0: Unpleasant to 5: Pleasant | 4.5 ± 0.22 [4–5] | 3.5 ± 0.31 [1–5] |
| | 0: Unclear to 5: Clear | 3.0 ± 0.4 [1–4] | 2.6 ± 0.24 [1–4] |
| | 0: Metallic to 5: Not metallic | 3.33 ± 0.56 [1–5] | 3.1 ± 0.31 [1–5] |
| How do you listen to music? | As background | 2 | 1 |
| | Active listening | 2 | 8 |
| | Both | 3 | 4 |
| | Neither | 1 | 0 |
| Why do you listen to music? (answers not exclusive) | Pleasure | 6 | 12 |
| | Emotion | 0 | 4 |
| | Good mood | 1 | 2 |
| | Dance | 3 | 6 |
| | During work | 2 | 3 |
| | Relaxing | 3 | 5 |
| | Staying awake | 0 | 1 |
| | None of the above | 0 | 1 |
| When did you listen to music after CI? | Never | 0 | 1 |
| | <1 week after | 2 | 4 |
| | 1–6 months | 3 | 5 |
| | 7–12 months | 1 | 3 |
| | >12 months | 0 | 2 |
| Do you enjoy listening to solo instruments or orchestra? | Solo | 1 | 5 |
| | Orchestra | 0 | 1 |
| | Both | 2 | 8 |
| | None | 3 | 1 |
| What do you hear best or most? (answers not exclusive) | Pleasant sounds | 5 | 10 |
| | Rhythm | 6 | 13 |
| | Unpleasant sounds | 1 | 3 |
| | Melodies | 6 | 12 |
| | Voices | 2 | 9 |
| Can you detect wrong notes? | | 2 | 5 |
| | | 2 | 8 |
| | | 5 | 10 |
| | | 4 | 14 |
| | | 4 | 11 |
| | | 2 | 10 |
| | | 2 | 9 |
| | | 3 | 13 |
| | | 2 | 3 |
| | | 1 | 1 |
| Did you train with music and CI? | | 5 | 8 |

| | Total Score with MPIS (Yes) | Total Score with MPIS (No) | Total Score with Crystalis XDP (Yes) | Total Score with Crystalis XDP (No) |
|---|---|---|---|---|
| Player before CI (Yes: n = 7; No: n = 14) | 24.9 ± 1.34 [18.7–29.2] | 25.7 ± 1.36 [15.3–32.3] | 27.1 ± 1.03 [22.5–30.5] | 26.7 ± 1.40 [17.8–36.3] |
| Singer before CI (Yes: n = 10; No: n = 11) | 26.7 ± 0.92 [23–31.7] | 24.2 ± 1.67 [15.3–32.3] | 28.4 ± 1.14 [22.5–36.3] | 25.4 ± 1.46 [17.8–31.8] |
| Musical training with CI (Yes: n = 13; No: n = 8) | 25.5 ± 1.09 [18.7–32.3] | 25.3 ± 2.03 [15.3–31.7] | 26.9 ± 0.90 [19–30.5] | 26.8 ± 2.22 [17.8–36.3] |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Leterme, G.; Guigou, C.; Guenser, G.; Bigand, E.; Bozorg Grayeli, A. Effect of Sound Coding Strategies on Music Perception with a Cochlear Implant. J. Clin. Med. 2022, 11, 4425. https://doi.org/10.3390/jcm11154425