A Concert-Based Study on Melodic Contour Identification among Varied Hearing Profiles—A Preliminary Report

Abstract

1. Introduction

1.1. Music and Cochlear Implants

1.2. The Study

2. Methodology

2.1. Experimental Set-Up

2.2. Procedure

- Drums + Piano

- Drums + Bass + Piano

- Drums + Accordion

- Drums + Bass + Accordion

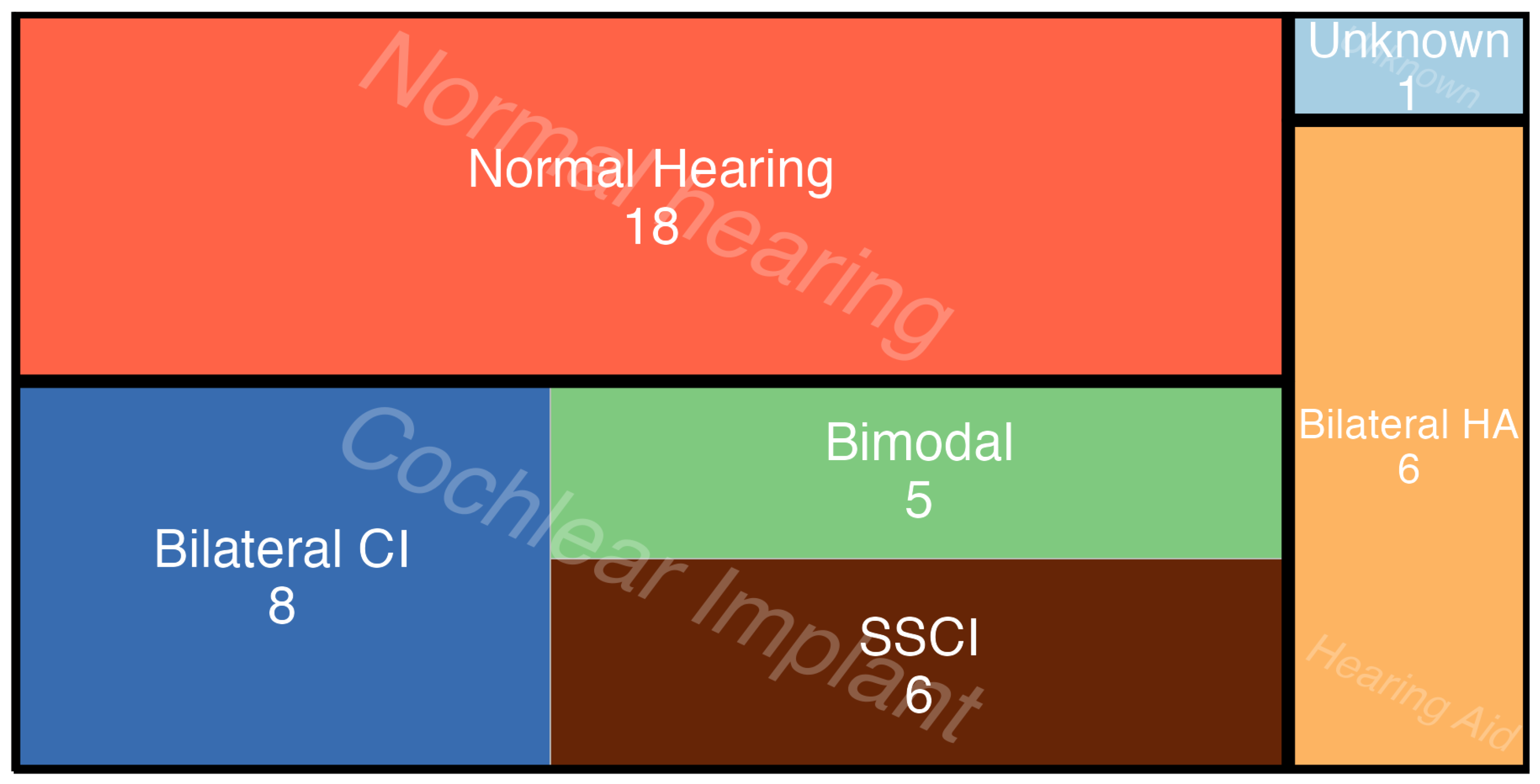

2.3. Participants

- Advertisements in Danish CI social media groups;

- Local and regional hearing associations (høreforening);

- Local and regional centers for Education and Communication (Center for Specialundervisning for Voksne, Roskilde og København);

- The Copenhagen Center for Hearing and Balance at the local hospital (Rigshospitalet);

- Personal invitations.

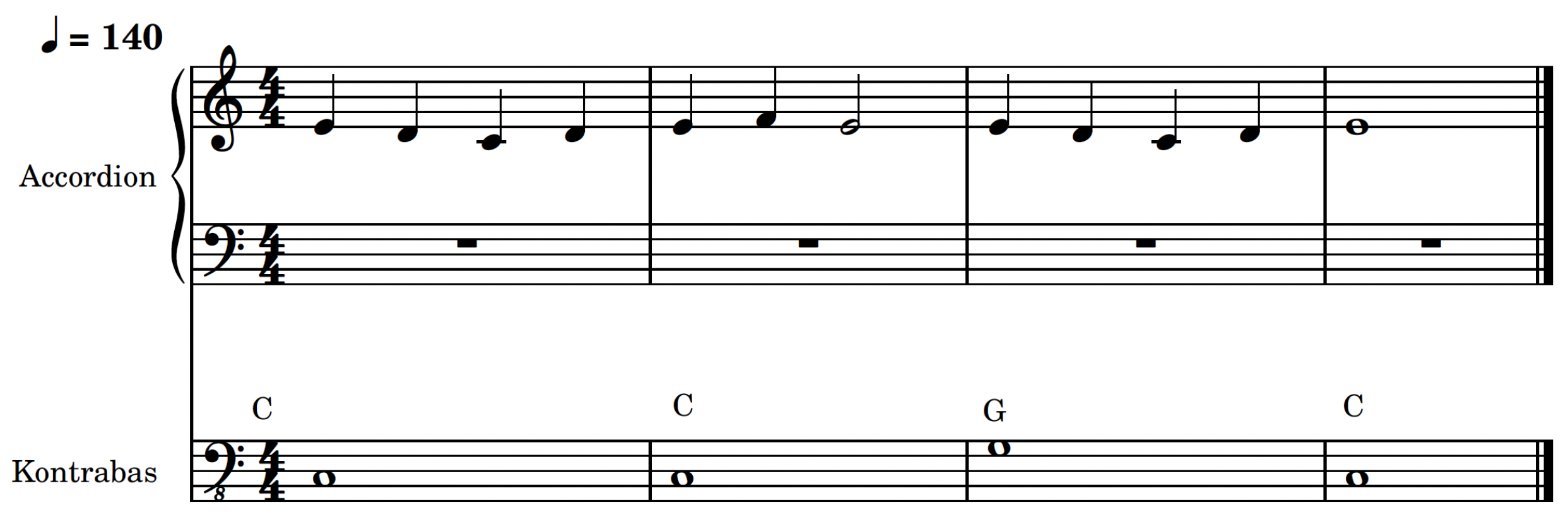

2.4. Stimuli

- Ascending: a consistently rising trajectory over a minimum of 12 semitones from the starting note (middle C), with the highest point reached near the end.

- Descending: a continuous downward trajectory over a minimum of 12 semitones from the starting note, with its lowest point near the end.

- Undulating: a mostly flat line oscillating around a single pitch (middle C), fluctuating by a maximum of 4 semitones up or down.

- Arching: a rise of at least 12 semitones from the starting note, followed by a descent that returns close to middle C. The authors of [46] argued that this type follows the “logical trajectory of thought”.
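The four contour definitions above can be expressed as a small classifier. This is only an illustrative sketch, not the procedure used to create the study's stimuli: it assumes notes are given as MIDI numbers (middle C = 60), and the constants `NEAR_END_NOTES` and `CLOSE_TO_START`, which interpret "near the end" and "close to middle C", are hypothetical choices. It also checks only the overall range and the position of the extreme note, not whether every step moves in the same direction.

```python
from typing import List

MIDDLE_C = 60        # MIDI note number for middle C (assumed representation)
NEAR_END_NOTES = 2   # hypothetical: extreme must fall within the last 2 notes
CLOSE_TO_START = 2   # hypothetical: "close to middle C" = within 2 semitones


def classify_contour(notes: List[int], start: int = MIDDLE_C) -> str:
    """Label a note sequence according to the four contour definitions."""
    offsets = [n - start for n in notes]      # semitones relative to the start
    peak, trough = max(offsets), min(offsets)
    peak_idx = offsets.index(peak)            # first position of the highest note
    trough_idx = offsets.index(trough)        # first position of the lowest note
    near_end = len(offsets) - NEAR_END_NOTES  # first index counted as "near the end"

    # Undulating: never strays more than 4 semitones from the starting pitch.
    if peak <= 4 and trough >= -4:
        return "undulating"
    # Ascending: spans at least 12 semitones upward, highest point near the end.
    if peak >= 12 and peak_idx >= near_end:
        return "ascending"
    # Descending: spans at least 12 semitones downward, lowest point near the end.
    if trough <= -12 and trough_idx >= near_end:
        return "descending"
    # Arching: rises at least 12 semitones, then returns close to the start.
    if peak >= 12 and abs(offsets[-1]) <= CLOSE_TO_START:
        return "arching"
    return "unclassified"


if __name__ == "__main__":
    examples = {
        "C major scale up": [60, 62, 64, 65, 67, 69, 71, 72],
        "C major scale down": [60, 58, 57, 55, 53, 52, 50, 48],
        "wobble around C": [60, 62, 60, 58, 60, 63, 60, 57],
        "up and back": [60, 64, 67, 72, 72, 67, 64, 60],
    }
    for name, notes in examples.items():
        print(name, "->", classify_contour(notes))
```

The undulating test runs first because it is the only category defined by a maximum excursion; the other three all require a span of at least 12 semitones and differ only in where the extreme falls.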

2.5. Statistical Analysis

- (a) Treats the individual factors (Hearing Profile + Instruments + Contours) as separate main effects and uses a random intercept to account for individual variation.

- (b) Similar to model (a) but adds an interaction term (Hearing Profile: Instruments + Contours) to examine the combined effect of Hearing Profile and Instruments. It uses the same random intercept to account for individual variation.

- (c) Takes a more complex approach, exploring the potential interaction among all variables simultaneously (Hearing Profile × Instruments × Contours). Like the other two models, it accounts for individual variation with a random intercept.
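One way to write the three model structures is sketched below. The notation is an assumption introduced here for illustration: $\eta_{ij}$ is the linear predictor for participant $i$ on trial $j$, $h(i)$ is the participant's hearing profile, $m(j)$ and $c(j)$ are the instrument combination and contour of the trial, and $u_i$ is the participant-level random intercept. The list above does not specify the response distribution or link function, so none is assumed, and keeping all main effects in model (b) is likewise an assumption.

```latex
\begin{align}
\text{(a)}\quad \eta_{ij} &= \beta_0 + \alpha_{h(i)} + \gamma_{m(j)} + \delta_{c(j)} + u_i \\
\text{(b)}\quad \eta_{ij} &= \beta_0 + \alpha_{h(i)} + \gamma_{m(j)} + \delta_{c(j)}
    + (\alpha\gamma)_{h(i)\,m(j)} + u_i \\
\text{(c)}\quad \eta_{ij} &= \beta_0 + \alpha_{h(i)} + \gamma_{m(j)} + \delta_{c(j)}
    + (\alpha\gamma)_{h(i)\,m(j)} + (\alpha\delta)_{h(i)\,c(j)} + (\gamma\delta)_{m(j)\,c(j)} \notag \\
  &\quad + (\alpha\gamma\delta)_{h(i)\,m(j)\,c(j)} + u_i \\
u_i &\sim \mathcal{N}\!\left(0, \sigma_u^2\right) \notag
\end{align}
```

Written this way, the models are nested: (a) is (b) with the Hearing Profile × Instruments term set to zero, and (b) is (c) with the remaining two-way and three-way terms set to zero.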

3. Results

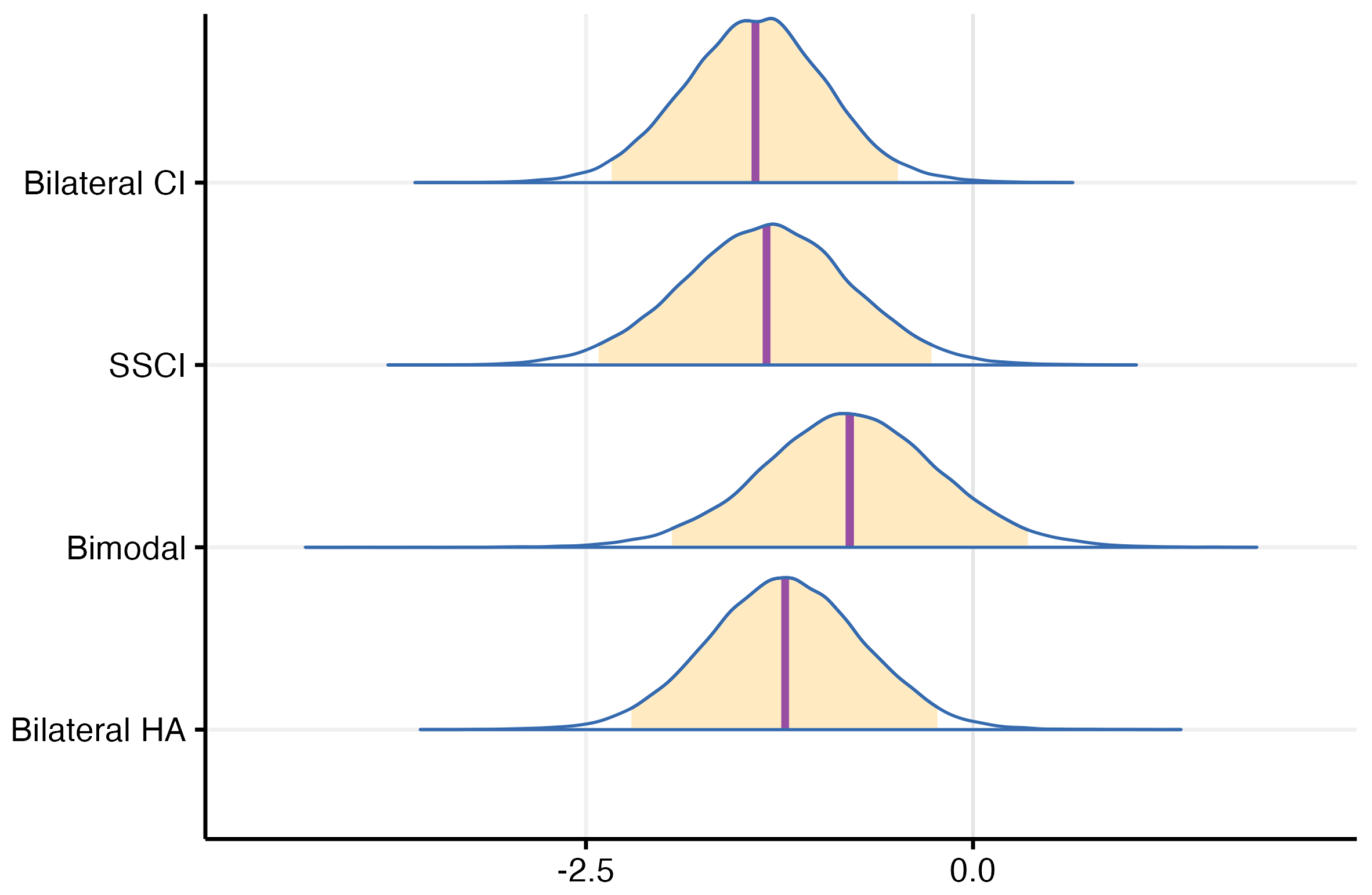

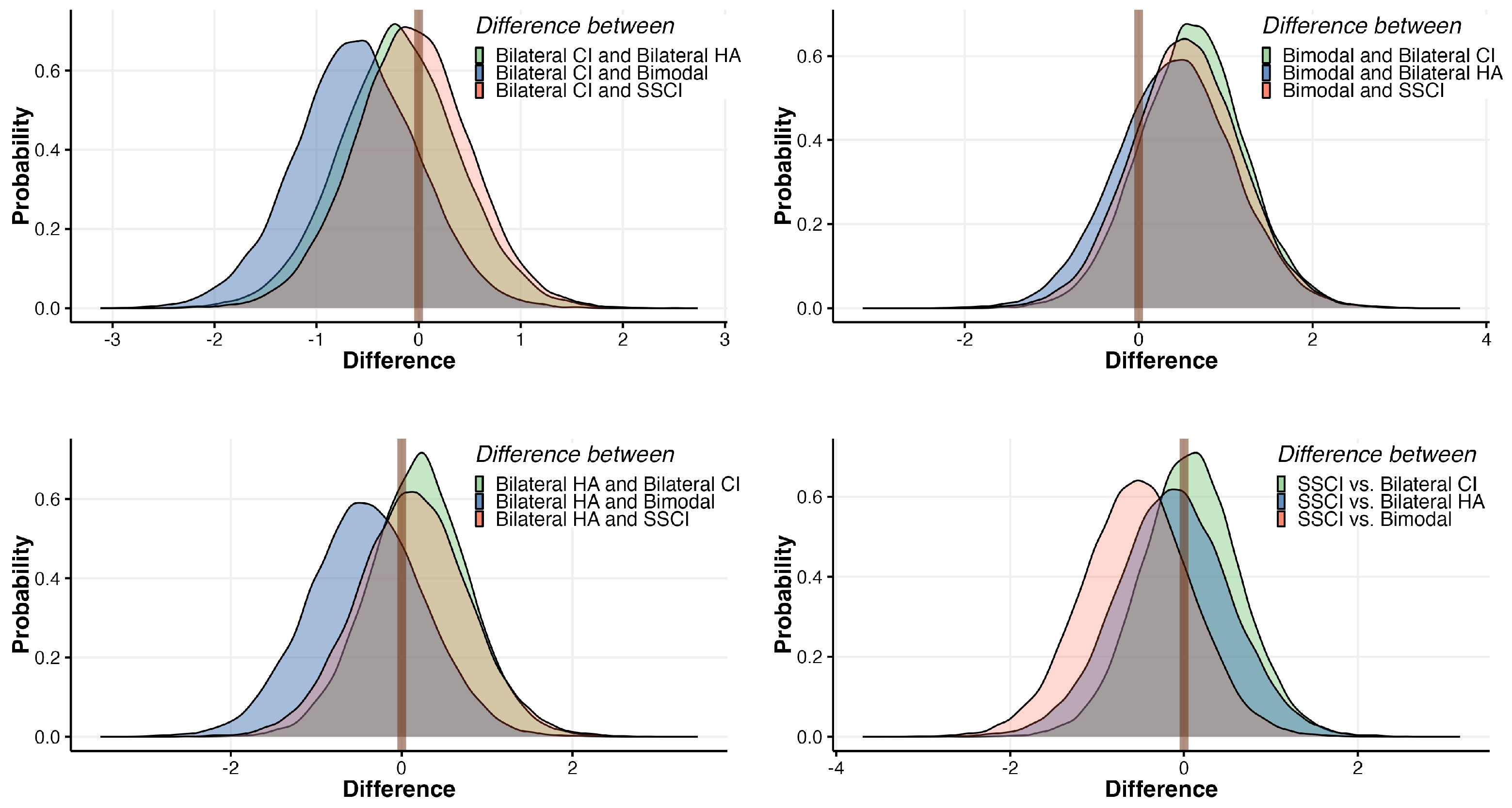

3.1. The Effect of Hearing Profiles on MCI Performance

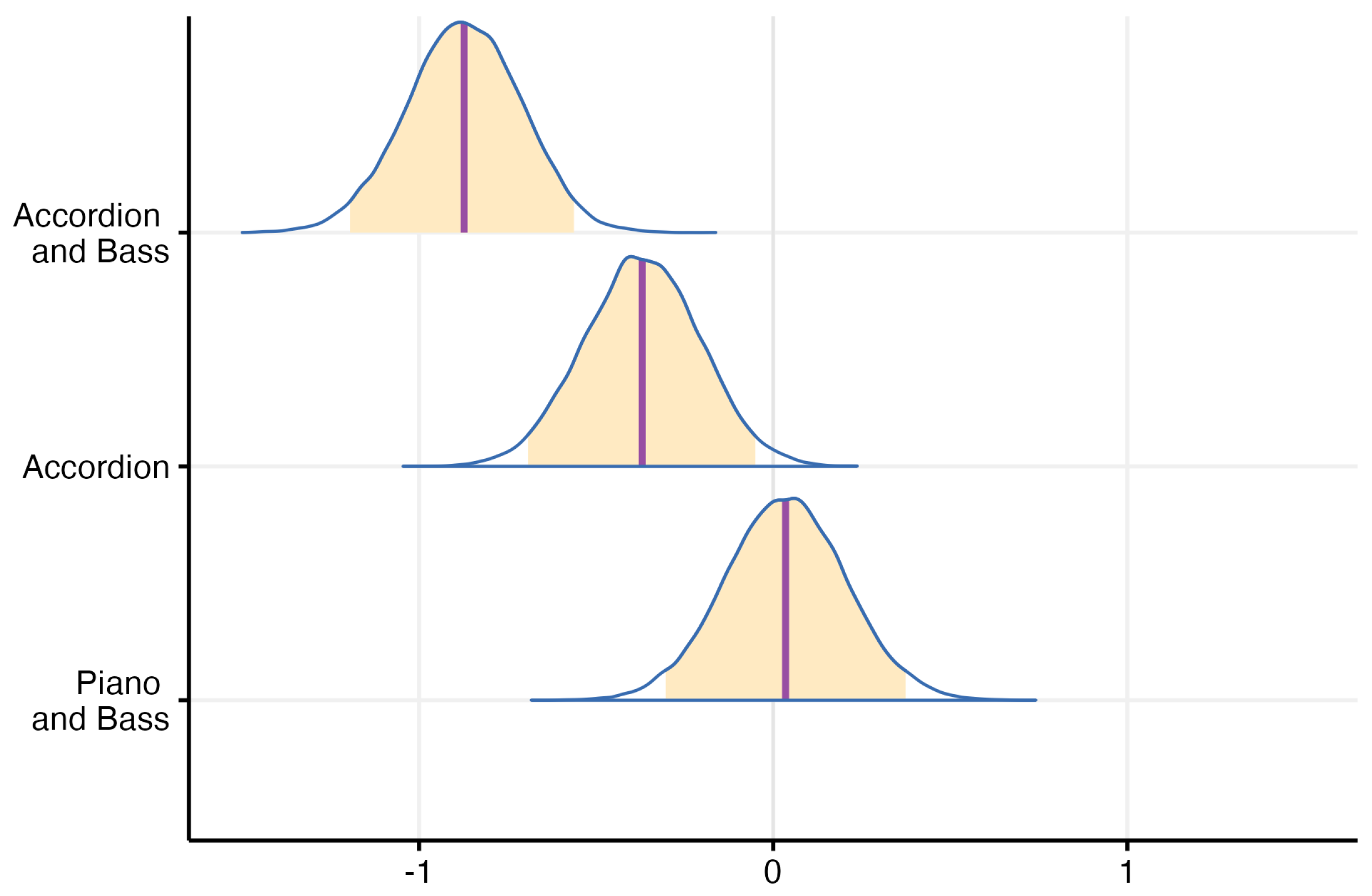

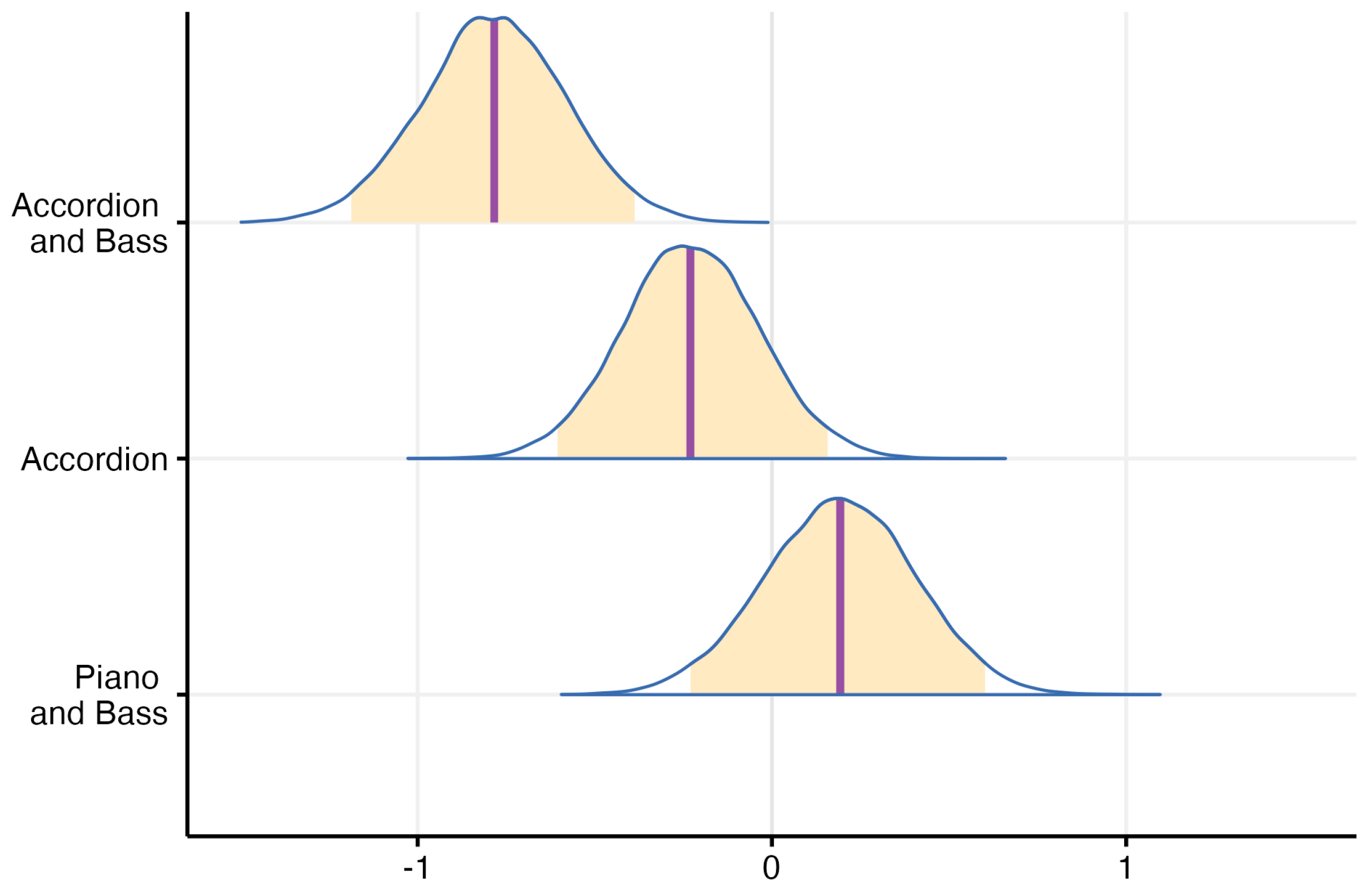

3.2. The Effect of Instrument Combinations on MCI Performance

4. Discussion

4.1. Masker Impact on MCI

4.2. Access to Acoustic Hearing and MCI

4.3. Further Discussion

4.4. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Altschuler, I.M. A Psychiatrist’s Experience with Music as a Therapeutic Agent. Nord. J. Music Ther. 2001, 10, 69–76.

2. Bruns, L.; Mürbe, D.; Hahne, A. Understanding music with cochlear implants. Sci. Rep. 2016, 6, 32026.

3. Fuller, C.; Free, R.; Maat, B.; Başkent, D. Self-reported music perception is related to quality of life and self-reported hearing abilities in cochlear implant users. Cochlear Implant. Int. 2022, 23, 1–10.

4. Dritsakis, G.; van Besouw, R.M.; O’Meara, A. Impact of music on the quality of life of cochlear implant users: A focus group study. Cochlear Implant. Int. 2017, 18, 207–215.

5. Cheng, X.; Liu, Y.; Shu, Y.; Tao, D.D.; Wang, B.; Yuan, Y.; Galvin, J.J.; Fu, Q.J.; Chen, B. Music Training Can Improve Music and Speech Perception in Pediatric Mandarin-Speaking Cochlear Implant Users. Trends Hear. 2018, 22.

6. Good, A.; Gordon, K.A.; Papsin, B.C.; Nespoli, G.; Hopyan, T.; Peretz, I.; Russo, F.A. Benefits of Music Training for Perception of Emotional Speech Prosody in Deaf Children with Cochlear Implants. Ear Hear. 2017, 38, 455–464.

7. Başkent, D.; Gaudrain, E. Musician advantage for speech-on-speech perception. J. Acoust. Soc. Am. 2016, 139, EL51–EL56.

8. Galvin, J.J.; Fu, Q.J.; Nogaki, G. Melodic contour identification by cochlear implant listeners. Ear Hear. 2007, 28, 302–319.

9. Galvin, J.; Eskridge, E.; Oba, S.; Fu, Q.J. Melodic contour identification training in cochlear implant users with and without a competing instrument. Semin. Hear. 2012, 33, 399–409.

10. Galvin, J.J.; Fu, Q.J.; Oba, S.I. Effect of a competing instrument on melodic contour identification by cochlear implant users. J. Acoust. Soc. Am. 2009, 125, EL98–EL103.

11. Galvin, J.J.; Fu, Q.J.; Oba, S. Effect of instrument timbre on melodic contour identification by cochlear implant users. J. Acoust. Soc. Am. 2008, 124, EL189–EL195.

12. Habibi, A.; Kreutz, G.; Russo, F.; Tervaniemi, M. Music-based interventions in community settings: Navigating the tension between rigor and ecological validity. Ann. N. Y. Acad. Sci. 2022, 1518, 47–57.

13. Tervaniemi, M. The neuroscience of music—Towards ecological validity. Trends Neurosci. 2023, 46, 355–364.

14. McAdams, S. Prolog. Music Percept. 2004, 22, 171.

15. Thompson, S. Audience responses to a live orchestral concert. Music. Sci. 2006, 10, 215–244.

16. Schubert, E.; Marozeau, J.; Stevens, C.J.; Innes-Brown, H. ‘Like Pots and Pans Falling Down the Stairs’. Experience of Music Composed for Listeners with Cochlear Implants in a Live Concert Setting. J. New Music Res. 2014, 43, 237–249.

17. Gfeller, K.; Driscoll, V.; Schwalje, A. Adult cochlear implant recipients’ perspectives on experiences with music in everyday life: A multifaceted and dynamic phenomenon. Front. Neurosci. 2019, 13, 469385.

18. Zeng, F.G. Trends in cochlear implants. Trends Amplif. 2004, 8, 1–34.

19. Kochkin, S. MarkeTrak VIII: Consumer satisfaction with hearing aids is slowly increasing. Hear. J. 2010, 63, 19–20.

20. McRackan, T.R.; Hand, B.N.; Velozo, C.A.; Dubno, J.R.; Golub, J.S.; Wilkinson, E.P.; Mills, D.; Carey, J.P.; Vorasubin, N.; Brunk, V.; et al. Cochlear implant quality of life (CIQOL): Development of a profile instrument (CIQOL-35 profile) and a global measure (CIQOL-10 Global). J. Speech, Lang. Hear. Res. 2019, 62, 3554–3563.

21. Gfeller, K.; Witt, S.; Adamek, M.; Mehr, M.; Rogers, J.; Stordahl, J.; Ringgenberg, S. Effects of training on timbre recognition and appraisal by postlingually deafened cochlear implant recipients. J. Am. Acad. Audiol. 2002, 13, 132–145.

22. Gfeller, K.E.; Olszewski, C.; Turner, C.; Gantz, B.; Oleson, J. Music perception with cochlear implants and residual hearing. Audiol. Neurotol. 2006, 11, 12–15.

23. Golub, J.S.; Won, J.H.; Drennan, W.R.; Worman, T.D.; Rubinstein, J.T. Spectral and temporal measures in hybrid cochlear implant users: On the mechanism of electroacoustic hearing benefits. Otol. Neurotol. 2012, 33, 147–153.

24. Gantz, B.J.; Dunn, C.; Walker, E.; Voorst, T.V.; Gogel, S.; Hansen, M. Outcomes of adolescents with a short electrode cochlear implant with preserved residual hearing. Otol. Neurotol. 2016, 37, e118–e125.

25. Middlebrooks, J.C.; Snyder, R.L. Auditory prosthesis with a penetrating nerve array. J. Assoc. Res. Otolaryngol. 2007, 8, 258–279.

26. Pinyon, J.; Tadros, S.; Froud, K.; Wong, A.; Tompson, I.; Crawford, E.; Ko, M.; Morris, R.; Klugmann, M.; Housley, G. Close-field electroporation gene delivery using the cochlear implant electrode array enhances the bionic ear. Sci. Transl. Med. 2014, 6, 233ra54.

27. Carlyon, R.P.; Goehring, T. Cochlear Implant Research and Development in the Twenty-first Century: A Critical Update. J. Assoc. Res. Otolaryngol. 2021, 22, 481–508.

28. Dieter, A.; Keppeler, D.; Moser, T. Towards the optical cochlear implant: Optogenetic approaches for hearing restoration. EMBO Mol. Med. 2020, 12, e11618.

29. Fletcher, M.D.; Thini, N.; Perry, S.W. Enhanced Pitch Discrimination for Cochlear Implant Users with a New Haptic Neuroprosthetic. Sci. Rep. 2020, 10, 10354.

30. Certo, M.V.; Kohlberg, G.D.; Chari, D.A.; Mancuso, D.M.; Lalwani, A.K. Reverberation time influences musical enjoyment with cochlear implants. Otol. Neurotol. 2015, 36, e46–e50.

31. Zeng, F.G.; Galvin, J.J. Amplitude mapping and phoneme recognition in cochlear implant listeners. Ear Hear. 1999, 20, 60–74.

32. Zeng, F.G.; Grant, G.; Niparko, J.; Galvin, J.; Shannon, R.; Opie, J.; Segel, P. Speech dynamic range and its effect on cochlear implant performance. J. Acoust. Soc. Am. 2002, 111, 377–386.

33. Lassaletta, L.; Castro, A.; Bastarrica, M.; Pérez-Mora, R.; Herrán, B.; Sanz, L.; de Sarriá, M.J.; Gavilán, J. Musical Perception and Enjoyment in Post-Lingual Patients with Cochlear Implants. Acta Otorrinolaringol. (Engl. Ed.) 2008, 59, 228–234.

34. Gfeller, K.; Christ, A.; Knutson, J.F.; Witt, S.; Murray, K.T.; Tyler, R.S. Musical backgrounds, listening habits, and aesthetic enjoyment of adult cochlear implant recipients. J. Am. Acad. Audiol. 2000, 11, 390–406.

35. Philips, B.; Vinck, B.; Vel, E.D.; Maes, L.; D’Haenens, W.; Keppler, H.; Dhooge, I. Characteristics and determinants of music appreciation in adult CI users. Eur. Arch. Oto-Rhino 2012, 269, 813–821.

36. Nemer, J.S.; Kohlberg, G.D.; Mancuso, D.M.; Griffin, B.M.; Certo, M.V.; Chen, S.Y.; Chun, M.B.; Spitzer, J.B.; Lalwani, A.K. Reduction of the harmonic series influences musical enjoyment with cochlear implants. Otol. Neurotol. 2017, 38, 31–37.

37. Au, A.; Marozeau, J.; Innes-Brown, H.; Schubert, E.; Stevens, C. Music for the cochlear implant: Audience response to six commissioned Compositions. Semin. Hear. 2012, 33, 335–345.

38. Buyens, W.; Dijk, B.V.; Moonen, M.; Wouters, J. Music mixing preferences of cochlear implant recipients: A pilot study. Int. J. Audiol. 2014, 53.

39. Buyens, W.; Dijk, B.V.; Moonen, M.; Wouters, J. Evaluation of a stereo music preprocessing scheme for cochlear implant users. J. Am. Acad. Audiol. 2018, 29, 035–043.

40. Gfeller, K.; Jiang, D.; Oleson, J.J.; Driscoll, V.; Olszewski, C.; Knutson, J.F.; Turner, C.; Gantz, B. The effects of musical and linguistic components in recognition of real-world musical excerpts by cochlear implant recipients and normal-hearing adults. J. Music Ther. 2012, 49, 68–101.

41. Magnusson, L. Comparison of the fine structure processing (FSP) strategy and the CIS strategy used in the MED-EL cochlear implant system: Speech intelligibility and music sound quality. Int. J. Audiol. 2011, 50, 279–287.

42. Fuller, C.D.; Galvin, J.J.; Maat, B.; Başkent, D.; Free, R.H. Comparison of Two Music Training Approaches on Music and Speech Perception in Cochlear Implant Users. Trends Hear. 2018, 22, 1–22.

43. Rouger, J.; Lagleyre, S.; Fraysse, B.; Deneve, S.; Deguine, O.; Barone, P. Evidence that cochlear-implanted deaf patients are better multisensory integrators. Proc. Natl. Acad. Sci. USA 2007, 104, 7295–7300.

44. Stein, B.; Stein, P.; Meredith, M. The Merging of the Senses; A Bradford Book; MIT Press: Cambridge, MA, USA, 1993.

45. Kelter, R. Bayesian and frequentist testing for differences between two groups with parametric and nonparametric two-sample tests. WIREs Comput. Stat. 2021, 13, e1523.

46. Adams, C.R. Melodic Contour Typology. Ethnomusicology 1976, 20, 179–215.

47. Gfeller, K.; Turner, C.; Oleson, J.; Zhang, X.; Gantz, B.; Froman, R.; Olszewski, C. Accuracy of cochlear implant recipients on pitch perception, melody recognition, and speech reception in noise. Ear Hear. 2007, 28, 412–423.

48. Looi, V.; McDermott, H.; McKay, C.; Hickson, L. Music perception of cochlear implant users compared with that of hearing aid users. Ear Hear. 2008, 29, 421–434.

49. Wong, T.T. Performance evaluation of classification algorithms by k-fold and leave-one-out cross validation. Pattern Recognit. 2015, 48, 2839–2846.

50. Parkinson, A.J.; Rubinstein, J.T.; Drennan, W.R.; Dodson, C.; Nie, K. Hybrid Music Perception Outcomes: Implications for Melody and Timbre Recognition in Cochlear Implant Recipients. Otol. Neurotol. 2019, 40, e283–e289.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Paisa, R.; Andersen, J.; Ganis, F.; Percy-Smith, L.M.; Serafin, S. A Concert-Based Study on Melodic Contour Identification among Varied Hearing Profiles—A Preliminary Report. J. Clin. Med. 2024, 13, 3142. https://doi.org/10.3390/jcm13113142