Artificial Intelligence for the Automatic Diagnosis of Gastritis: A Systematic Review

Abstract

1. Introduction

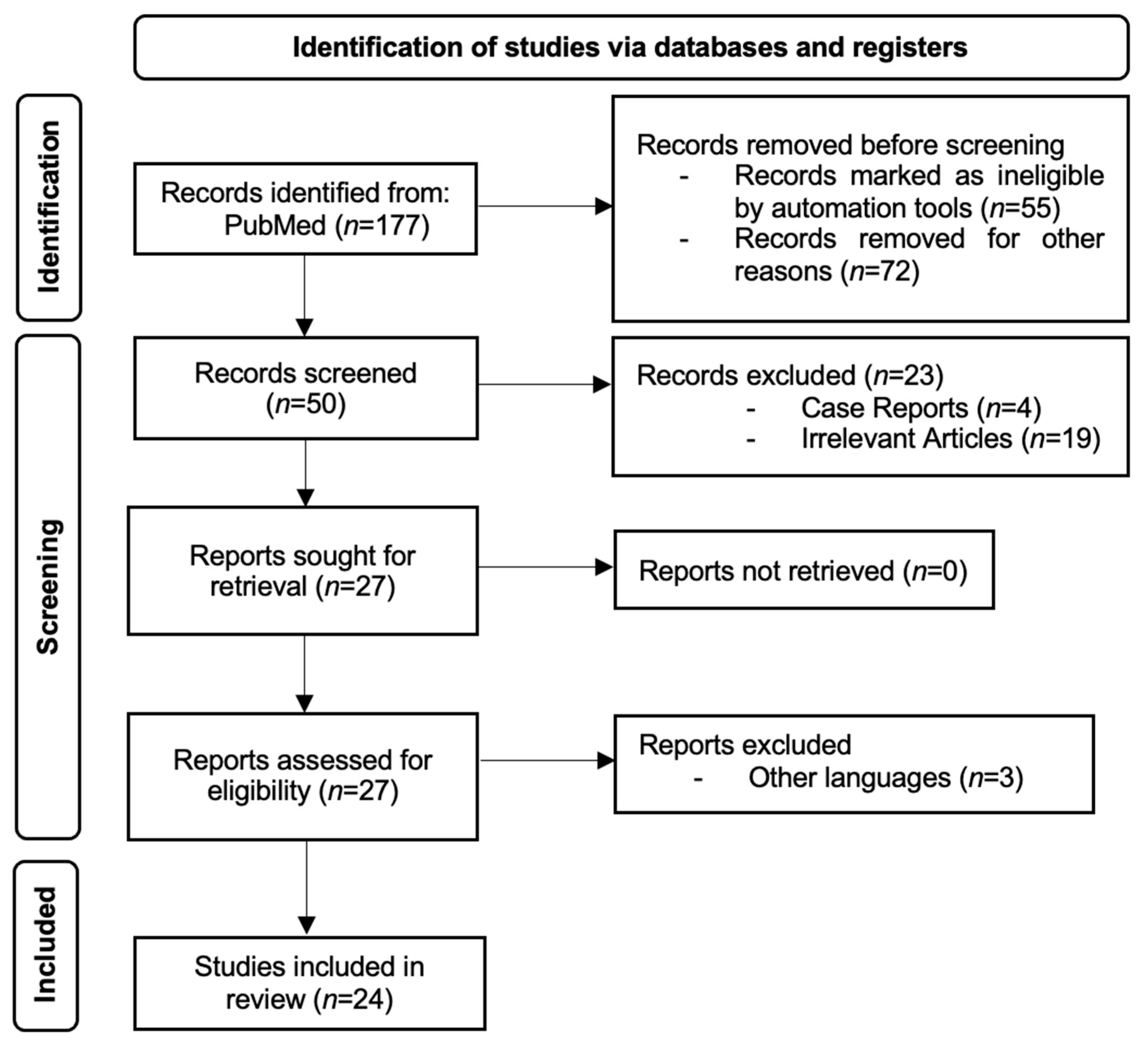

2. Materials and Methods

3. Results

3.1. Artificial Intelligence Based on Endoscopic Images

3.2. Artificial Intelligence Based on Pathology Slides

3.3. Artificial Intelligence Based on Double-Contrast UGI Barium X-rays

3.4. Artificial Intelligence Based on Clinical and Serological Findings

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AAG | Automatic Annotating Group |
| ABG | Atrophic Body Gastritis |
| AG | Atrophic Gastritis |
| AI | Artificial Intelligence |
| AuG | Autoimmune Gastritis |
| CAD | Computer-Aided Diagnosis |
| CAG | Chronic Atrophic Gastritis |
| CAcG | Chronic Active Gastritis |
| CAM | Class Activation Mapping |
| CGI | Corpus-Predominant Gastritis Index |
| CNAG | Chronic Non-Atrophic Gastritis |
| CNN | Convolutional Neural Networks |
| CSuG | Chronic Superficial Gastritis |
| DL | Deep Learning |
| DTL | Dual Transfer Learning |
| EGC | Early Gastric Cancer |
| GAM | Global Attention Mechanism |
| GC | Gastric Cancer |
| GIM | Gastric Intestinal Metaplasia |
| HP | Helicobacter Pylori |
| HPG | Helicobacter Pylori Gastritis |
| LAG | Local Attention Grouping |
| LCI | Linked-Color Imaging |
| LI | Low Inflammation |
| MAG | Manual Annotating Group |
| ME-NBI | Magnifying Endoscopy with Narrow Band Imaging |
| NBI | Narrow-Band Imaging |
| OLGA | Operative Link on Gastritis Assessment |
| PG | Pepsinogens |
| PSM | Propensity Score Matching |
| SI | Severe Inflammation |
| UGI | Upper Gastrointestinal |
| WLI | White Light Imaging |
| WSIs | Whole-Slide Images |

References

1. Alpers, D.H. Textbook of Gastroenterology, 5th ed.; Wiley-Blackwell: Hoboken, NJ, USA, 2009; Volumes 1–2.

2. Feyisa, Z.T.; Woldeamanuel, B.T. Prevalence and associated risk factors of gastritis among patients visiting Saint Paul Hospital Millennium Medical College, Addis Ababa, Ethiopia. PLoS ONE 2021, 16, e0246619.

3. Dixon, M.F.; Genta, R.M.; Yardley, J.H.; Correa, P. Classification and grading of gastritis. The updated Sydney System. International Workshop on the Histopathology of Gastritis, Houston 1994. Am. J. Surg. Pathol. 1996, 20, 1161–1181.

4. Crafa, P.; Russo, M.; Miraglia, C.; Barchi, A.; Moccia, F.; Nouvenne, A.; Leandro, G.; Meschi, T.; De’ Angelis, G.L.; Di Mario, F. From Sidney to OLGA: An overview of atrophic gastritis. Acta Biomed. 2018, 89, 93–99.

5. Lee, S.Y. Endoscopic gastritis: What does it mean? Dig. Dis. Sci. 2011, 56, 2209–2211.

6. Sumiyama, K.; Futakuchi, T.; Kamba, S.; Matsui, H.; Tamai, N. Artificial intelligence in endoscopy: Present and future perspectives. Dig. Endosc. 2021, 33, 218–230.

7. Kröner, P.T.; Engels, M.M.; Glicksberg, B.S.; Johnson, K.W.; Mzaik, O.; van Hooft, J.E.; Wallace, M.B.; El-Serag, H.B.; Krittanawong, C. Artificial intelligence in gastroenterology: A state-of-the-art review. World J. Gastroenterol. 2021, 27, 6794–6824.

8. Yakirevich, E.; Resnick, M.B. Pathology of gastric cancer and its precursor lesions. Gastroenterol. Clin. N. Am. 2013, 42, 261–284.

9. Sipponen, P.; Maaroos, H.I. Chronic gastritis. Scand. J. Gastroenterol. 2015, 50, 657–667.

10. Pasechnikov, V.; Chukov, S.; Fedorov, E.; Kikuste, I.; Leja, M. Gastric cancer: Prevention, screening and early diagnosis. World J. Gastroenterol. 2014, 20, 13842–13862.

11. Okagawa, Y.; Abe, S.; Yamada, M.; Oda, I.; Saito, Y. Artificial Intelligence in Endoscopy. Dig. Dis. Sci. 2022, 67, 1553–1572.

12. Shi, Y.; Wei, N.; Wang, K.; Wu, J.; Tao, T.; Li, N.; Lv, B. Deep learning-assisted diagnosis of chronic atrophic gastritis in endoscopy. Front. Oncol. 2023, 13, 1122247.

13. Jhang, J.Y.; Tsai, Y.C.; Hsu, T.C.; Huang, C.R.; Cheng, H.C.; Sheu, B.S. Gastric Section Correlation Network for Gastric Precancerous Lesion Diagnosis. IEEE Open J. Eng. Med. Biol. 2023, 5, 434–442.

14. Tao, X.; Zhu, Y.; Dong, Z.; Huang, L.; Shang, R.; Du, H.; Wang, J.; Zeng, X.; Wang, W.; Wang, J.; et al. An artificial intelligence system for chronic atrophic gastritis diagnosis and risk stratification under white light endoscopy. Dig. Liver Dis. 2024, 56, 1319–1326.

15. Zhao, Q.; Jia, Q.; Chi, T. U-Net deep learning model for endoscopic diagnosis of chronic atrophic gastritis and operative link for gastritis assessment staging: A prospective nested case-control study. Ther. Adv. Gastroenterol. 2023, 16.

16. Yang, J.; Ou, Y.; Chen, Z.; Liao, J.; Sun, W.; Luo, Y.; Luo, C. A Benchmark Dataset of Endoscopic Images and Novel Deep Learning Method to Detect Intestinal Metaplasia and Gastritis Atrophy. IEEE J. Biomed. Health Inform. 2023, 27, 7–16.

17. Chong, Y.; Xie, N.; Liu, X.; Zhang, M.; Huang, F.; Fang, J.; Wang, F.; Pan, S.; Nie, H.; Zhao, Q. A deep learning network based on multi-scale and attention for the diagnosis of chronic atrophic gastritis. Z. Gastroenterol. 2022, 60, 1770–1778.

18. Luo, J.; Cao, S.; Ding, N.; Liao, X.; Peng, L.; Xu, C. A deep learning method to assist with chronic atrophic gastritis diagnosis using white light images. Dig. Liver Dis. 2022, 54, 1513–1519.

19. Zhao, Q.; Chi, T. Deep learning model can improve the diagnosis rate of endoscopic chronic atrophic gastritis: A prospective cohort study. BMC Gastroenterol. 2022, 22, 133.

20. Zhao, Q.; Jia, Q.; Chi, T. Deep learning as a novel method for endoscopic diagnosis of chronic atrophic gastritis: A prospective nested case-control study. BMC Gastroenterol. 2022, 22, 352.

21. Lin, N.; Yu, T.; Zheng, W.; Hu, H.; Xiang, L.; Ye, G.; Zhong, X.; Ye, B.; Wang, R.; Deng, W.; et al. Simultaneous Recognition of Atrophic Gastritis and Intestinal Metaplasia on White Light Endoscopic Images Based on Convolutional Neural Networks: A Multicenter Study. Clin. Transl. Gastroenterol. 2021, 12, e00385.

22. Zhang, Y.; Li, F.; Yuan, F.; Zhang, K.; Huo, L.; Dong, Z.; Lang, Y.; Zhang, Y.; Wang, M.; Gao, Z.; et al. Diagnosing chronic atrophic gastritis by gastroscopy using artificial intelligence. Dig. Liver Dis. 2020, 52, 566–572.

23. Horiuchi, Y.; Aoyama, K.; Tokai, Y.; Hirasawa, T.; Yoshimizu, Z.; Ishiyama, A.; Yoshio, T.; Tsuchida, T.; Fujisaki, J.; Tada, T. Convolutional Neural Network for Differentiating Gastric Cancer from Gastritis Using Magnified Endoscopy with Narrow Band Imaging. Dig. Dis. Sci. 2020, 65, 1355–1363.

24. Franklin, M.M.; Schultz, F.A.; Tafoya, M.A.; Kerwin, A.A.; Broehm, C.J.; Fischer, E.G.; Gullapalli, R.R.; Clark, D.P.; Hanson, J.A.; Martin, D.R. A Deep Learning Convolutional Neural Network Can Differentiate Between Helicobacter Pylori Gastritis and Autoimmune Gastritis With Results Comparable to Gastrointestinal Pathologists. Arch. Pathol. Lab. Med. 2022, 146, 117–122.

25. Lin, Y.J.; Chen, C.C.; Lee, C.H.; Yeh, C.Y.; Jeng, Y.M. Two-tiered deep-learning-based model for histologic diagnosis of Helicobacter gastritis. Histopathology 2023, 83, 771–781.

26. Fang, S.; Liu, Z.; Qiu, Q.; Tang, Z.; Yang, Y.; Kuang, Z.; Du, X.; Xiao, S.; Liu, Y.; Luo, Y.; et al. Diagnosing and grading gastric atrophy and intestinal metaplasia using semi-supervised deep learning on pathological images: Development and validation study. Gastric Cancer 2024, 27, 343–354.

27. Ma, M.; Zeng, X.; Qu, L.; Sheng, X.; Ren, H.; Chen, W.; Li, B.; You, Q.; Xiao, L.; Wang, Y.; et al. Advancing Automatic Gastritis Diagnosis: An Interpretable Multilabel Deep Learning Framework for the Simultaneous Assessment of Multiple Indicators. Am. J. Pathol. 2024, 194, 1538–1549.

28. Ba, W.; Wang, S.H.; Liu, C.C.; Wang, Y.F.; Shi, H.Y.; Song, Z.G. Histopathological Diagnosis System for Gastritis Using Deep Learning Algorithm. Chin. Med. Sci. J. 2021, 36, 204–209.

29. Ma, B.; Guo, Y.; Hu, W.; Yuan, F.; Zhu, Z.; Yu, Y.; Zou, H. Artificial Intelligence-Based Multiclass Classification of Benign or Malignant Mucosal Lesions of the Stomach. Front. Pharmacol. 2020, 11, 572372.

30. Steinbuss, G.; Kriegsmann, K.; Kriegsmann, M. Identification of Gastritis Subtypes by Convolutional Neuronal Networks on Histological Images of Antrum and Corpus Biopsies. Int. J. Mol. Sci. 2020, 21, 6652.

31. Kanai, M.; Togo, R.; Ogawa, T.; Haseyama, M. Chronic atrophic gastritis detection with a convolutional neural network considering stomach regions. World J. Gastroenterol. 2020, 26, 3650–3659.

32. Li, Z.; Togo, R.; Ogawa, T.; Haseyama, M. Chronic gastritis classification using gastric X-ray images with a semi-supervised learning method based on tri-training. Med. Biol. Eng. Comput. 2020, 58, 1239–1250.

33. Togo, R.; Yamamichi, N.; Mabe, K.; Takahashi, Y.; Takeuchi, C.; Kato, M.; Sakamoto, N.; Ishihara, K.; Ogawa, T.; Haseyama, M. Detection of gastritis by a deep convolutional neural network from double-contrast upper gastrointestinal barium X-ray radiography. J. Gastroenterol. 2019, 54, 321–329.

34. Li, G.; Togo, R.; Ogawa, T.; Haseyama, M. Self-supervised learning for gastritis detection with gastric X-ray images. Int. J. Comput. Assist. Radiol. Surg. 2023, 18, 1841–1848.

35. Lahner, E.; Grossi, E.; Intraligi, M.; Buscema, M.; Corleto, V.D.; Delle Fave, G.; Annibale, B. Possible contribution of artificial neural networks and linear discriminant analysis in recognition of patients with suspected atrophic body gastritis. World J. Gastroenterol. 2005, 11, 5867–5873.

36. Shi, Y.; Wei, N.; Wang, K.; Tao, T.; Yu, F.; Lv, B. Diagnostic value of artificial intelligence-assisted endoscopy for chronic atrophic gastritis: A systematic review and meta-analysis. Front. Med. 2023, 10, 1134980.

37. Luo, Q.; Yang, H.; Hu, B. Application of artificial intelligence in the endoscopic diagnosis of early gastric cancer, atrophic gastritis, and Helicobacter pylori infection. J. Dig. Dis. 2022, 23, 666–674.

| Study | Year of Publication | Country | Study Design | Validation Method | Number of Images/Videos | Diagnosis | Main Findings |
|---|---|---|---|---|---|---|---|
| Shi et al. [12] | 2023 | China | Retrospective | Internal and External | 10,961 images and 118 videos | CAG | The GAM-EfficientNet model showed an accuracy of 93.5% in diagnosing CAG in images and 92.37% in videos. |
| Jhang et al. [13] | 2023 | Taiwan | Retrospective | Internal | 912 images | CGI | The model outperformed the five models it was compared with, reaching an AUC of 0.984, accuracy of 0.957, sensitivity of 0.938, and specificity of 0.962. |
| Tao et al. [14] | 2024 | China | Retrospective | Internal and External | 869 images and 119 videos | CAG | In the image competition, the model outperformed every group of endoscopists except the experts. In the video competition and in CAG stratification, it outperformed all groups of endoscopists. |
| Zhao et al. [15] | 2023 | China | Prospective | Internal | 5290 images | CAG, OLGA | The model performed better than endoscopists for all parameters (sensitivity, specificity, PPV, NPV, accuracy rate, Youden index, odds product, LR+, LR−, AUC, and kappa) and for diagnosing OLGA. |
| Yang et al. [16] | 2023 | China | Retrospective | Internal | 21,240 images | CAG and GIM | The AI model achieved state-of-the-art performance, with accuracies of 99.18% and 97.12% in identifying GIM and CAG, respectively. |
| Chong et al. [17] | 2022 | China | Retrospective | Internal | 5159 images | CAG | The sensitivity and specificity achieved by MWA-net were 90.19% and 94.15%, respectively, both higher than those of an expert group of endoscopists. |
| Luo et al. [18] | 2022 | China | Retrospective | Internal and External | 10,593 images | CAG | The deep learning model’s performance in recognizing CAG and its severity was similar to that of a group of gastroenterologists. |
| Zhao et al. [19] | 2022 | China | Prospective | Internal | 5290 images | CAG | The U-Net model identified more cases of moderate and severe CAG than a team of gastroscopists. |
| Zhao et al. [20] | 2022 | China | Prospective | Internal | 5290 images | CAG | With histopathological diagnosis as the gold standard, the real-time video assessment model showed superior results compared to human experts. |
| Lin et al. [21] | 2021 | China | Retrospective | Internal | 7037 images | CAG and GIM | The CNN, TResNet, attained high performance in recognizing AG and GIM. |
| Zhang et al. [22] | 2020 | China | Retrospective | Internal | 5470 images | CAG | DenseNet exhibited high accuracy in diagnosing mild, moderate, and severe gastritis: 93%, 95%, and 99%, respectively. |
| Horiuchi et al. [23] | 2019 | Japan | Retrospective | Internal | 2828 images | EGC and CAG | The overall accuracy of this CNN model in recognizing the two stomach pathologies was 85.3%. |
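Several of the parameters listed for Zhao et al. [15] (Youden index, LR+, LR−) are standard functions of sensitivity and specificity. The sketch below shows those textbook formulas, applied to the sensitivity and specificity reported for MWA-net by Chong et al. [17]. The function name `diagnostic_indices` is hypothetical, and the derived values are illustrative arithmetic only; they are not results reported by any of the reviewed studies.

```python
def diagnostic_indices(sensitivity: float, specificity: float) -> dict:
    """Derive Youden index and likelihood ratios from sensitivity/specificity.

    Both inputs are fractions strictly between 0 and 1 (e.g. 0.9019 for 90.19%).
    """
    if not (0.0 < sensitivity < 1.0 and 0.0 < specificity < 1.0):
        raise ValueError("sensitivity and specificity must lie strictly between 0 and 1")
    return {
        # Youden index J = sensitivity + specificity - 1
        "youden": sensitivity + specificity - 1.0,
        # Positive likelihood ratio LR+ = sensitivity / (1 - specificity)
        "lr_pos": sensitivity / (1.0 - specificity),
        # Negative likelihood ratio LR- = (1 - sensitivity) / specificity
        "lr_neg": (1.0 - sensitivity) / specificity,
    }


# Illustrative input: sensitivity 90.19% and specificity 94.15% (Chong et al. [17]).
indices = diagnostic_indices(0.9019, 0.9415)
for name, value in indices.items():
    print(f"{name}: {value:.3f}")
```

A higher Youden index and LR+ and a lower LR− indicate better discrimination, which is why these indices are convenient when comparing a model against endoscopists at a single operating point.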

| Study | Year of Publication | Country | Study Design | Validation Method | Number of Pathology Slides | Diagnosis | Main Findings |
|---|---|---|---|---|---|---|---|
| Franklin et al. [24] | 2022 | USA | Retrospective | Internal | 187 | HPG and CAG | The AI model performed on par with two expert pathologists, reaching 100% concordance with the gold standard diagnosis. |
| Lin et al. [25] | 2023 | China | Retrospective | Internal | 885 | HPG | The model had an AUC of 0.974, sensitivity of 0.933, and specificity of 0.901, compared to pathologists with a sensitivity of 0.933 and specificity of 0.842. |
| Fang et al. [26] | 2023 | China | Retrospective | Internal and External | 2725 | GA, IM | The model performed well, obtaining better results than most pathologists, but when used to assist them it improved only a few parameters (IM AUC, sensitivity and weighted kappa, and atrophy specificity). |
| Ma et al. [27] | 2024 | China | Retrospective | Internal | 1096 patients | GA, IM | The model performed well, with an AUC of 0.93 for evaluating activity, 0.97 for GA, and 0.93 for IM. It also reduced the time spent on each slide from 5.46 min to 2.85 min. |
| Ba et al. [28] | 2021 | China | Retrospective | Internal | 1250 | CSuG, CAcG, and CAG | For the three different types of chronic gastritis, the deep learning network showed an accuracy of 86.7%. |
| Ma et al. [29] | 2020 | China | Retrospective | Internal | 763 | Normal mucosa, CAG, GC | The highest accuracy for recognizing the three states of the gastric mucosa was 94.5%. After collecting follow-up data and fine-tuning the AI model with more features, the DL model had a survival prediction accuracy of 97.4%. |
| Steinbuss et al. [30] | 2020 | Germany | Retrospective | Internal | 135 | Autoimmune, bacterial, and chemical gastritis | The deep learning model diagnosed bacterial gastritis with a sensitivity of 100% and a specificity of 93%. |

| Study | Year of Publication | Country | Study Design | Validation Method | Number of X-rays | Diagnosis | Main Findings |
|---|---|---|---|---|---|---|---|
| Kanai et al. [31] | 2020 | Japan | Retrospective | Not specified | 815 | CAG | The AI model showed high performance in recognizing CAG, even when the number of manually annotated X-rays was limited. |
| Li et al. [32] | 2020 | Japan | Retrospective | Internal | 815 | CAG | High precision in diagnosing chronic gastritis was attained with a restricted number of annotated images. Using 100 annotated images, the best results were achieved with a sensitivity of 92.2% and a specificity of 90.7%. |
| Togo et al. [33] | 2018 | Japan | Retrospective | Internal | 6520 | CAG | The sensitivity, specificity, and harmonic mean were 96.2%, 98.3%, and 97.2%, respectively, which were similar to those obtained with the ABC (D) stratification. |
| Li et al. [34] | 2023 | Japan | Retrospective | Internal | 815 | Gastritis | The model had a harmonic mean of sensitivity and specificity of 0.875, 0.911, 0.915, 0.931, and 0.954 when using data from 10, 20, 30, 40, and 200 patients, respectively. |
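The X-ray studies above summarize performance with the harmonic mean of sensitivity and specificity. As a quick check of how that figure is obtained, the sketch below applies the standard harmonic mean formula to the sensitivity and specificity reported for Togo et al. [33]. The function name `harmonic_mean` is an illustrative choice, not something taken from the reviewed papers.

```python
def harmonic_mean(sensitivity: float, specificity: float) -> float:
    """Harmonic mean of two positive values: 2ab / (a + b).

    Works for fractions (0.962) or percentages (96.2), as long as
    both inputs use the same scale.
    """
    if sensitivity <= 0 or specificity <= 0:
        raise ValueError("both values must be positive")
    return 2.0 * sensitivity * specificity / (sensitivity + specificity)


# Sensitivity 96.2% and specificity 98.3%, as reported for Togo et al. [33].
hm = harmonic_mean(96.2, 98.3)
print(round(hm, 1))  # 97.2, matching the harmonic mean reported in the table
```

Because the harmonic mean is pulled toward the smaller of the two values, a model cannot score well on it by trading away sensitivity for specificity or the reverse.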

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Turtoi, D.C.; Brata, V.D.; Incze, V.; Ismaiel, A.; Dumitrascu, D.I.; Militaru, V.; Munteanu, M.A.; Botan, A.; Toc, D.A.; Duse, T.A.; et al. Artificial Intelligence for the Automatic Diagnosis of Gastritis: A Systematic Review. J. Clin. Med. 2024, 13, 4818. https://doi.org/10.3390/jcm13164818
