From Promise to Practice: Harnessing AI’s Power to Transform Medicine

References

- Baker, A.; Perov, Y.; Middleton, K.; Baxter, J.; Mullarkey, D.; Sangar, D.; Butt, M.; DoRosario, A.; Johri, S. A Comparison of Artificial Intelligence and Human Doctors for the Purpose of Triage and Diagnosis. Front. Artif. Intell. 2020, 3, 543405. [Google Scholar] [CrossRef] [PubMed]

- Gabriel, J.; Shafik, L.; Alanbuki, A.; Larner, T. The utility of the ChatGPT artificial intelligence tool for patient education and enquiry in robotic radical prostatectomy. Int. Urol. Nephrol. 2023, 55, 2717–2732. [Google Scholar] [CrossRef] [PubMed]

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118. [Google Scholar] [CrossRef] [PubMed]

- Huang, S.; Yang, J.; Fong, S.; Zhao, Q. Artificial intelligence in cancer diagnosis and prognosis: Opportunities and challenges. Cancer Letters 2020, 471, 61–71. [Google Scholar] [CrossRef] [PubMed]

- Borna, S.; Gomez-Cabello, C.A.; Pressman, S.M.; Haider, S.A.; Forte, A.J. Comparative Analysis of Large Language Models in Emergency Plastic Surgery Decision-Making: The Role of Physical Exam Data. J. Pers. Med. 2024, 14, 612. [Google Scholar] [CrossRef] [PubMed]

- Biswas, A.; Talukdar, W. Intelligent Clinical Documentation: Harnessing Generative AI for Patient-Centric Clinical Note Generation. arXiv 2024. [Google Scholar] [CrossRef]

- Kapoor, R.; Corrales, G.; Flores, M.P.; Feng, L.; Cata, J.P. Use of Neural Machine Translation Software for Patients With Limited English Proficiency to Assess Postoperative Pain and Nausea. JAMA Netw. Open 2022, 5, e221485. [Google Scholar] [CrossRef] [PubMed]

- Hossain, E.; Rana, R.; Higgins, N.; Soar, J.; Barua, P.D.; Pisani, A.R.; Turner, K. Natural Language Processing in Electronic Health Records in relation to healthcare decision-making: A systematic review. Comput. Biol. Med. 2023, 155, 106649. [Google Scholar] [CrossRef] [PubMed]

- Haider, S.A.; Ho, O.A.; Borna, S.; Gomez-Cabello, C.A.; Pressman, S.M.; Cole, D.; Sehgal, A.; Leibovich, B.C.; Forte, A.J. Use of Multimodal Artificial Intelligence in Surgical Instrument Recognition. Bioengineering 2025, 12, 72. [Google Scholar] [CrossRef] [PubMed]

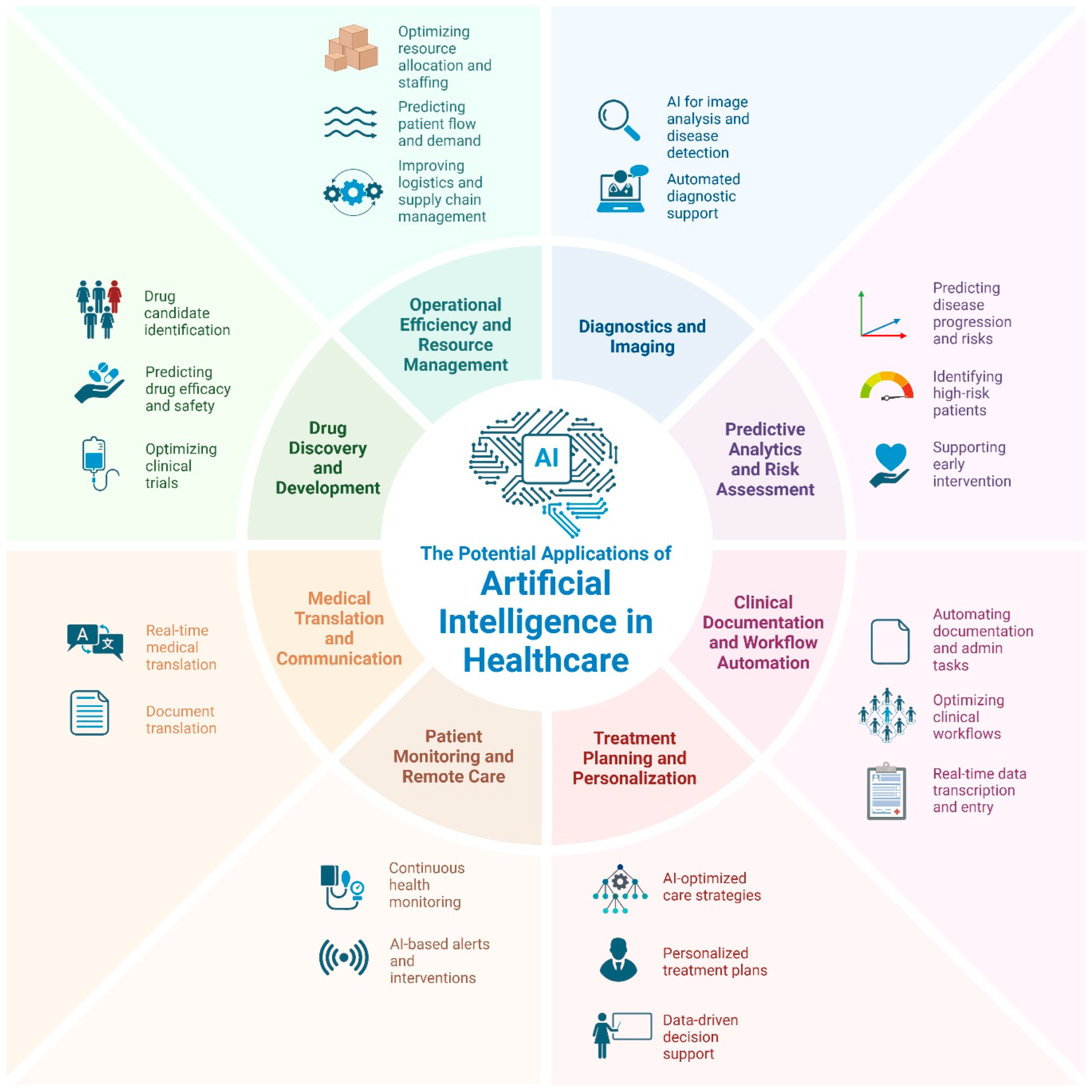

- Genovese, A. The Potential Applications of Artificial Intelligence in Healthcare. 2025. Available online: https://biorender.com/g74f622 (accessed on 1 February 2025).

- Franzoni, V. From Black Box to Glass Box: Advancing Transparency in Artificial Intelligence Systems for Ethical and Trustworthy AI. In Computational Science and Its Applications—ICCSA 2023 Workshops; Springer: Cham, Switzerland, 2023; pp. 118–130. [Google Scholar] [CrossRef]

- Loukas, O.; Chung, H.-R. Demographic Parity: Mitigating Biases in Real-World Data. arXiv 2023. [Google Scholar] [CrossRef]

- Norori, N.; Hu, Q.; Aellen, F.M.; Faraci, F.D.; Tzovara, A. Addressing bias in big data and AI for health care: A call for open science. Patterns 2021, 2, 100347. [Google Scholar] [CrossRef] [PubMed]

- Keogh, A.; Argent, R.; Doherty, C.; Duignan, C.; Fennelly, O.; Purcell, C.; Johnston, W.; Caulfield, B. Breaking down the Digital Fortress: The Unseen Challenges in Healthcare Technology—Lessons Learned from 10 Years of Research. Sensors 2024, 24, 3780. [Google Scholar] [CrossRef] [PubMed]

- Palaniappan, K.; Lin, E.Y.T.; Vogel, S. Global Regulatory Frameworks for the Use of Artificial Intelligence (AI) in the Healthcare Services Sector. Healthcare 2024, 12, 562. [Google Scholar] [CrossRef] [PubMed]

- Derek, C.; Angus, M. Randomized Clinical Trials of Artificial Intelligence. JAMA 2020, 323, 1043–1045. [Google Scholar] [CrossRef]

- Shah, S.M.M. Harnessing Electronic Patient Records for AI Innovation: Balancing Data Privacy and Diagnostic Advancement. J. Khyber Coll. Dent. 2024, 14, 1. [Google Scholar] [CrossRef]

- Murdoch, B. Privacy and artificial intelligence: Challenges for protecting health information in a new era. BMC Med. Ethics 2021, 22, 122. [Google Scholar] [CrossRef] [PubMed]

- Inukonda, J.; Tetala, V.R.R.; Hallur, J. Explainable Artificial Intelligence (XAI) in Healthcare: Enhancing Transparency and Trust. Int. J. Multidiscip. Res. 2024, 6, 30010. [Google Scholar] [CrossRef]

- Hellmeier, F.; Brosien, K.; Eickhoff, C.; Meyer, A. Beyond One-Time Validation: A Framework for Adaptive Validation of Prognostic and Diagnostic AI-based Medical Devices. arXiv 2024. [Google Scholar] [CrossRef]

- Myllyaho, L.; Raatikainen, M.; Männistö, T.; Mikkonen, T.; Nurminen, J.K. Systematic Literature Review of Validation Methods for AI Systems. arXiv 2021. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Genovese, A.; Borna, S.; Gomez-Cabello, C.A.; Haider, S.A.; Prabha, S.; Trabilsy, M.; Forte, A.J. From Promise to Practice: Harnessing AI’s Power to Transform Medicine. J. Clin. Med. 2025, 14, 1225. https://doi.org/10.3390/jcm14041225
