Ethics and Algorithms to Navigate AI’s Emerging Role in Organ Transplantation

Abstract

1. Introduction

2. Materials and Methods

- Studies not written in English;

- Conference abstracts, notes, letters, case reports, or animal studies;

- Duplicate studies.

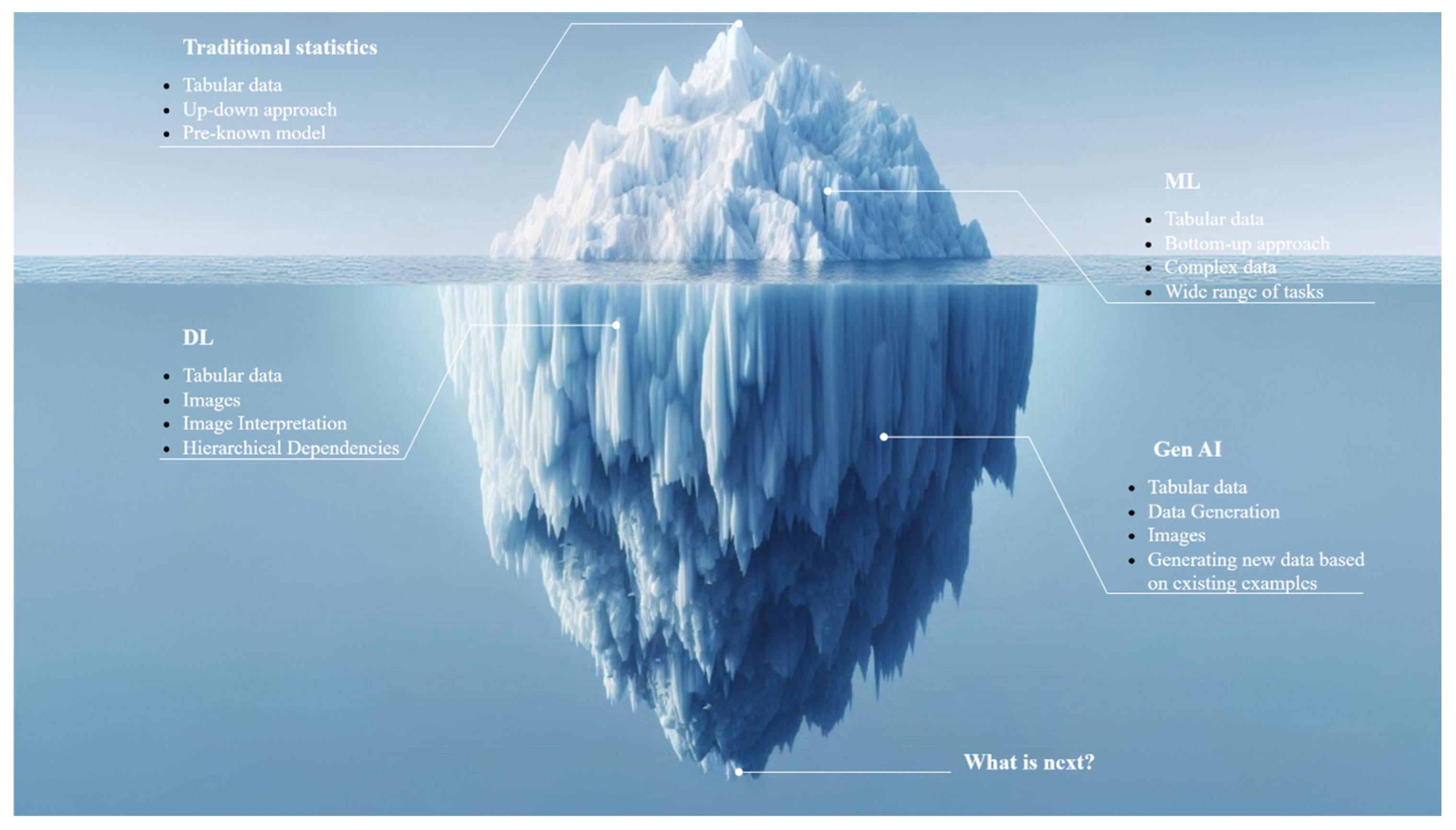

3. Evolution of Data Analysis—From Traditional Statistics to Generative AI

4. AI Tools in Transplantation

4.1. Kidney Transplantation

4.2. Heart Transplantation

4.3. Liver Transplantation

5. Ethical Aspects of AI Tools in Organ Transplantation

5.1. Promoting Equity by Addressing Bias and Fairness in AI Systems

5.2. Transparency and Explainability: Unveiling the Black Box

5.2.1. Transparency and Its Ethical Importance

- Data Transparency: The datasets used to train AI systems must be accessible, representative, and of high quality. This involves disclosing the sources of data and the methods of data collection, and addressing any potential biases. In transplantation, where patient outcomes can vary widely, transparent data practices help stakeholders understand the factors influencing AI recommendations [55].

- Algorithmic Transparency: The complexity of AI models, particularly deep learning algorithms, often results in what is termed a “black box” effect, where the internal workings of the model are opaque [56]. While it may not always be feasible to disclose every detail of the model’s operations, providing a high-level overview of how the model functions and the types of patterns it detects is crucial. This allows clinicians to better understand and trust AI-assisted decisions.
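As an illustration of the data transparency point, a dataset's composition can be reported alongside the model. The following is a minimal Python sketch, assuming records are plain dictionaries; the function name `composition_report`, the field names, and the sample values are hypothetical and are not drawn from any study cited here.

```python
from collections import Counter


def composition_report(records, attribute):
    """Share of records per value of `attribute`, counting missing values explicitly."""
    counts = Counter(r.get(attribute, "missing") for r in records)
    total = sum(counts.values())
    return {value: count / total for value, count in counts.items()}


# Hypothetical training records; field names and values are illustrative only.
training_records = [
    {"center": "A", "sex": "F"},
    {"center": "A", "sex": "M"},
    {"center": "A", "sex": "M"},
    {"center": "B", "sex": "M"},
    {"center": "B"},  # sex was not recorded for this patient
]

sex_share = composition_report(training_records, "sex")
center_share = composition_report(training_records, "center")

for name, share in (("sex", sex_share), ("center", center_share)):
    print(name, {k: round(v, 2) for k, v in share.items()})
```

Reporting missing values as their own category, rather than silently dropping them, is a deliberate choice here: gaps in data collection are themselves part of what stakeholders may need to see.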

5.2.2. Provide Explainability to Bridge the Gap Between Complexity and Comprehension

- Interpretable Models: One approach to enhancing explainability is using simpler, more interpretable models like decision trees or linear regression, which offer straightforward explanations. However, in complex domains like transplantation, where numerous factors influence outcomes, these simpler models might not be sufficiently accurate [57]. The challenge lies in balancing the need for interpretability with the accuracy required for clinical decision-making [58].

- Post-Hoc Explainability Tools: Techniques such as Local Interpretable Model-agnostic Explanations (LIME) [59] and SHapley Additive exPlanations (SHAP) [60] have been developed to provide post-hoc explanations for complex AI models. These tools allow clinicians to see how different features contributed to the AI’s decisions, offering insights that can increase confidence in AI-generated recommendations.

- Contextual Explanations: Tailoring explanations to the audience is essential. For clinicians, detailed explanations that align with medical reasoning are necessary, while for patients, explanations should focus on the implications for their health and treatment options [61,62]. Providing relevant and understandable information helps ensure that both patients and healthcare providers can make informed decisions based on AI outputs.
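To make the idea behind attribution-based explanations concrete, the sketch below computes exact Shapley values, the quantity on which SHAP [60] is built, for a toy risk score. It is an illustration under stated assumptions and not the SHAP library itself: the function `shapley_values`, the model `toy_risk`, its coefficients, and the feature names are all hypothetical, and features outside a coalition are simply set to a reference patient's values.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley attribution of predict(instance) - predict(baseline).

    Features absent from a coalition take their baseline value. The cost grows
    exponentially with the number of features, so this only suits toy models.
    """
    names = list(instance)
    n = len(names)
    values = {}
    for target in names:
        others = [f for f in names if f != target]
        total = 0.0
        for size in range(n):
            # Shapley weight shared by all coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                without = {
                    f: instance[f] if f in coalition else baseline[f] for f in names
                }
                with_target = dict(without)
                with_target[target] = instance[target]
                total += weight * (predict(with_target) - predict(without))
        values[target] = total
    return values


def toy_risk(x):
    """Hypothetical risk score; not a validated clinical model."""
    score = 0.02 * x["donor_age"] + 0.5 * x["cold_ischemia_h"] / 10
    if x["donor_age"] > 60 and x["cold_ischemia_h"] > 20:
        score += 1.0  # interaction that a plain coefficient table would hide
    return score


patient = {"donor_age": 65, "cold_ischemia_h": 24, "hla_mismatches": 3}
reference = {"donor_age": 45, "cold_ischemia_h": 12, "hla_mismatches": 3}

attributions = shapley_values(toy_risk, patient, reference)
for feature, value in attributions.items():
    print(f"{feature}: {value:+.2f}")
```

For this toy model the attributions sum to the difference between the patient's score and the reference score, and the unused feature receives zero. These two properties make such explanations easy to sanity-check, although the choice of reference patient remains a judgment call that changes the resulting numbers.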

5.2.3. The Ethical Imperative of Transparency and Explainability

- Autonomy and Informed Consent: Patients have the right to make informed decisions about their care, which requires a clear understanding of AI recommendations. Explainable AI respects patient autonomy by enabling informed choices based on a full understanding of the risks and benefits. Informed consent is a foundational principle of medical ethics, requiring that patients understand and agree to the treatments they receive. The use of AI in transplantation introduces new complexities to the process of obtaining informed consent. Patients may have a limited understanding of AI technology and its role in their care, which can hinder their ability to make fully informed decisions. This is particularly concerning when AI is used to predict outcomes or recommend specific treatments, as patients may not be aware of the limitations or uncertainties inherent in these tools. Healthcare providers must ensure that patients are adequately informed about the use of AI in their care, including the potential risks and benefits. This involves not only explaining how AI is used but also discussing the limitations of the technology and the potential for error. Ethical frameworks for informed consent in the era of AI must evolve to address these new challenges, ensuring that patients retain autonomy over their healthcare decisions [64].

- Non-Maleficence and Beneficence: Transparent and explainable AI systems help prevent harm by allowing for the identification and correction of errors or biases. This ensures that AI tools contribute positively to patient outcomes. While AI can provide valuable insights and support to healthcare providers, there is a risk that over-reliance on these tools could undermine the autonomy of both physicians and patients. Ethical concerns arise when AI recommendations are followed without critical evaluation or when they conflict with the clinical judgment of physicians [65]. The concept of “algorithmic authority” refers to the growing reliance on AI systems to make decisions traditionally made by humans. In transplantation, this could lead to situations where AI-driven decisions override human judgment, potentially leading to outcomes that are not in the best interest of the patient. To address these concerns, it is essential to establish clear guidelines that balance the use of AI with human oversight, ensuring that AI tools augment rather than replace clinical expertise [66,67].

- Justice: Transparency and explainability are critical for identifying and addressing potential biases in AI systems. Ensuring fairness and equity in AI applications is essential, especially in transplantation, where disparities in access and outcomes must be carefully managed. The adoption of AI in transplantation has the potential to exacerbate existing inequalities in healthcare access. Advanced AI tools are often developed and implemented in well-resourced healthcare settings, potentially leaving less affluent institutions and their patients at a disadvantage. This raises ethical questions about the equitable distribution of the benefits of AI, particularly in a field as critical as transplantation, where access to care can be a matter of life and death [68]. Efforts to promote equity in AI deployment include the development of policies and frameworks that ensure fair access to AI technologies, regardless of geographic location or socioeconomic status. Additionally, there is a need for global collaboration to address disparities in AI development and implementation, ensuring that the benefits of AI in transplantation are available to all patients [69,70].

- Safety and Privacy: The deployment of AI in transplantation relies on the analysis of large datasets, including sensitive patient information. This raises significant ethical concerns regarding data privacy and security. The potential for data breaches, unauthorized access, and misuse of patient information is a major risk that must be carefully managed. Moreover, the sharing of data across institutions and international borders for AI development and validation purposes introduces additional layers of complexity and risk [71]. Ethical guidelines for AI in healthcare emphasize the importance of robust data protection measures, including encryption, anonymization, and strict access controls. However, the dynamic nature of AI development, which often requires continuous data collection and analysis, poses ongoing challenges to maintaining patient privacy. Ensuring that patient data is used responsibly and securely is essential to maintaining public trust and safeguarding patient rights [72].
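One of the protection measures named above can be sketched in code. The example replaces patient identifiers with keyed hashes (HMAC-SHA256) before records are shared. This is pseudonymization, which is weaker than full anonymization because the remaining fields may still allow re-identification; the key, the field names, and the records are hypothetical.

```python
import hashlib
import hmac


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Return a keyed SHA-256 digest that stands in for the raw identifier."""
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical key; in practice it would be held in a secrets store, not in source code.
SITE_KEY = b"replace-with-a-secret-key"

records = [
    {"patient_id": "TX-0001", "egfr": 54},
    {"patient_id": "TX-0002", "egfr": 61},
    {"patient_id": "TX-0001", "egfr": 49},  # second visit of the first patient
]

# Drop the raw identifier and keep only the pseudonym plus the clinical value.
shared = [
    {"pid": pseudonymize(r["patient_id"], SITE_KEY), "egfr": r["egfr"]}
    for r in records
]

for row in shared:
    print(row["pid"][:12], row["egfr"])
```

Because the same identifier always maps to the same pseudonym under a given key, repeated visits can still be linked for longitudinal analysis, while a party without the key cannot recompute the mapping from a list of candidate identifiers.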

6. Discussion

6.1. Impact on Patient–Physician Relationships

6.2. Is AI Changing the Clinical Workflows in Organ Transplantation?

6.3. Future Perspectives

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. IRODaT—International Registry on Organ Donation and Transplantation. Available online: https://www.irodat.org/?p=publications (accessed on 20 August 2024).

2. Global Observatory on Donation and Transplantation. Available online: https://www.transplant-observatory.org (accessed on 20 August 2024).

3. Organ Donation Statistics. Available online: https://www.organdonor.gov/learn/organ-donation-statistics (accessed on 20 August 2024).

4. Al Moussawy, M.; Lakkis, Z.S.; Ansari, Z.A.; Cherukuri, A.R.; Abou-Daya, K.I. The transformative potential of artificial intelligence in solid organ transplantation. Front. Transplant. 2024, 3, 1361491.

5. Peloso, A.; Moeckli, B.; Delaune, V.; Oldani, G.; Andres, A.; Compagnon, P. Artificial Intelligence: Present and Future Potential for Solid Organ Transplantation. Transpl. Int. 2022, 35, 10640.

6. Rawashdeh, B. Artificial Intelligence in Organ Transplantation: Surveying Current Applications, Addressing Challenges and Exploring Frontiers; IntechOpen: London, UK, 2024.

7. Deshpande, R. Smart match: Revolutionizing organ allocation through artificial intelligence. Front. Artif. Intell. 2024, 7, 1364149.

8. Balch, J.A.; Delitto, D.; Tighe, P.J.; Zarrinpar, A.; Efron, P.A.; Rashidi, P.; Upchurch, G.R.; Bihorac, A.; Loftus, T.J. Machine Learning Applications in Solid Organ Transplantation and Related Complications. Front. Immunol. 2021, 12, 739728.

9. Basuli, D.; Roy, S. Beyond Human Limits: Harnessing Artificial Intelligence to Optimize Immunosuppression in Kidney Transplantation. J. Clin. Med. Res. 2023, 15, 391–398.

10. Kers, J.; Bülow, R.D.; Klinkhammer, B.M.; Breimer, G.E.; Fontana, F.; Abiola, A.A.; Hofstraat, R.; Corthals, G.L.; Peters-Sengers, H.; Djudjaj, S.; et al. Deep learning-based classification of kidney transplant pathology: A retrospective, multicentre, proof-of-concept study. Lancet Digit. Health 2022, 4, e18–e26.

11. Yi, Z.; Salem, F.; Menon, M.C.; Keung, K.; Xi, C.; Hultin, S.; Al Rasheed, M.R.H.; Li, L.; Su, F.; Sun, Z.; et al. Deep learning identified pathological abnormalities predictive of graft loss in kidney transplant biopsies. Kidney Int. 2022, 101, 288–298.

12. Gotlieb, N.; Azhie, A.; Sharma, D.; Spann, A.; Suo, N.J.; Tran, J.; Orchanian-Cheff, A.; Wang, B.; Goldenberg, A.; Chassé, M.; et al. The promise of machine learning applications in solid organ transplantation. npj Digit. Med. 2022, 5, 89.

13. Ley, C.; Martin, R.K.; Pareek, A.; Groll, A.; Seil, R.; Tischer, T. Machine learning and conventional statistics: Making sense of the differences. Knee Surg. Sports Traumatol. Arthrosc. 2022, 30, 753–757.

14. Aldoseri, A.; Al-Khalifa, K.N.; Hamouda, A.M. Re-thinking data strategy and integration for artificial intelligence: Concepts, opportunities, and challenges. Appl. Sci. 2023, 13, 7082.

15. Edwards, A.S.; Kaplan, B.; Jie, T. A Primer on Machine Learning. Transplantation 2021, 105, 699–703.

16. Bhat, M.; Rabindranath, M.; Chara, B.S.; Simonetto, D.A. Artificial intelligence, machine learning, and deep learning in liver transplantation. J. Hepatol. 2023, 78, 1216–1233.

17. Truchot, A.; Raynaud, M.; Kamar, N.; Naesens, M.; Legendre, C.; Delahousse, M.; Thaunat, O.; Buchler, M.; Crespo, M.; Linhares, K.; et al. Machine learning does not outperform traditional statistical modelling for kidney allograft failure prediction. Kidney Int. 2023, 103, 936–948.

18. Eckardt, J.N.; Hahn, W.; Röllig, C.; Stasik, S.; Platzbecker, U.; Müller-Tidow, C.; Serve, H.; Baldus, C.D.; Schliemann, C.; Schäfer-Eckart, K.; et al. Mimicking clinical trials with synthetic acute myeloid leukemia patients using generative artificial intelligence. npj Digit. Med. 2024, 7, 76.

19. Li, J.; Cairns, B.J.; Li, J.; Zhu, T. Generating synthetic mixed-type longitudinal electronic health records for artificial intelligent applications. npj Digit. Med. 2023, 6, 98.

20. Guillaudeux, M.; Rousseau, O.; Petot, J.; Bennis, Z.; Dein, C.A.; Goronflot, T.; Vince, N.; Limou, S.; Karakachoff, M.; Wargny, M.; et al. Patient-centric synthetic data generation, no reason to risk re-identification in biomedical data analysis. npj Digit. Med. 2023, 6, 37.

21. Deeb, M.; Gangadhar, A.; Rabindranath, M.; Rao, K.; Brudno, M.; Sidhu, A.; Wang, B.; Bhat, M. The emerging role of generative artificial intelligence in transplant medicine. Am. J. Transplant. 2024, 24, 1724–1730.

22. Assis de Souza, A.; Stubbs, A.; Hesselink, D.; Baan, C.C.; Boer, K. Cherry on Top or Real Need? A Review of Explainable Machine Learning in Kidney Transplantation. Transplantation 2025, 109, 123–132.

23. Connor, K.L.; O’Sullivan, E.D.; Marson, L.P.; Wigmore, S.J.; Harrison, E.M. The Future Role of Machine Learning in Clinical Transplantation. Transplantation 2021, 105, 723–735.

24. Ravindhran, B.; Chandak, P.; Schafer, N.; Kundalia, K.; Hwang, W.; Antoniadis, S.; Haroon, U.; Zakri, R.H. Machine learning models in predicting graft survival in kidney transplantation: Meta-analysis. BJS Open 2023, 7, zrad011.

25. Loupy, A.; Aubert, O.; Orandi, B.J.; Naesens, M.; Bouatou, Y.; Raynaud, M.; Divard, G.; Jackson, A.M.; Viglietti, D.; Giral, M.; et al. Prediction system for risk of allograft loss in patients receiving kidney transplants: International derivation and validation study. BMJ 2019, 366, l4923.

26. Impact For Patients: Transplant Therapeutics Consortium, C-Path. Available online: https://c-path.org/story/impact-for-patients-transplant-therapeutics-consortium/ (accessed on 20 August 2024).

27. Qualification Opinion for the iBox Scoring System as a Secondary Efficacy Endpoint in Clinical Trials Investigating Novel Immunosuppressive Medicines in Kidney Transplant Patients. 2022. Available online: https://www.ema.europa.eu/en/documents/scientific-guideline/qualification-opinion-ibox-scoring-system-secondary-efficacy-endpoint-clinical-trials-investigating-novel-immunosuppressive-medicines-kidney-transplant-patients_en.pdf (accessed on 20 August 2024).

28. Klein, A.; Loupy, A.; Stegall, M.; Helanterä, I.; Kosinski, L.; Frey, E.; Aubert, O.; Divard, G.; Newell, K.; Meier-Kriesche, H.-U.; et al. Qualifying a Novel Clinical Trial Endpoint (iBOX) Predictive of Long-Term Kidney Transplant Outcomes. Transpl. Int. 2023, 36, 11951.

29. Ali, H.; Mohammed, M.; Molnar, M.Z.; Fülöp, T.; Burke, B.; Shroff, S.; Shroff, A.; Briggs, D.; Krishnan, N. Live-Donor Kidney Transplant Outcome Prediction (L-TOP) using artificial intelligence. Nephrol. Dial. Transplant. 2024, 39, 2088–2099.

30. Ali, H.; Mohamed, M.; Molnar, M.Z.; Fülöp, T.; Burke, B.; Shroff, A.; Shroff, S.; Briggs, D.; Krishnan, N. Deceased-Donor Kidney Transplant Outcome Prediction Using Artificial Intelligence to Aid Decision-Making in Kidney Allocation. ASAIO J. 2024, 70, 808–818.

31. Schapranow, M.P.; Bayat, M.; Rasheed, A.; Naik, M.; Graf, V.; Schmidt, D.; Budde, K.; Cardinal, H.; Sapir-Pichhadze, R.; Fenninger, F.; et al. NephroCAGE—German-Canadian Consortium on AI for Improved Kidney Transplantation Outcome: Protocol for an Algorithm Development and Validation Study. JMIR Res. Protoc. 2023, 12, e48892.

32. Patzer, R.E.; Basu, M.; Larsen, C.P.; Pastan, S.O.; Mohan, S.; Patzer, M.; Konomos, M.M.; McClellan, W.M.; Lea, J.; Howard, D.; et al. iChoose Kidney: A Clinical Decision Aid for Kidney Transplantation Versus Dialysis Treatment. Transplantation 2016, 100, 630–639.

33. Gander, J.C.; Basu, M.; McPherson, L.; Garber, M.D.; Pastan, S.O.; Manatunga, A.; Arriola, K.J.; Patzer, R.E. iChoose Kidney for Treatment Options: Updated Models for Shared Decision Aid. Transplantation 2018, 102, e370–e371.

34. iChoose Kidney | Emory University | Atlanta GA. Available online: https://ichoosekidney.emory.edu/index.html (accessed on 20 August 2024).

35. Seferović, P.M.; Tsutsui, H.; McNamara, D.M.; Ristić, A.D.; Basso, C.; Bozkurt, B.; Cooper, L.T.; Filippatos, G.; Ide, T.; Inomata, T.; et al. Heart Failure Association, Heart Failure Society of America, and Japanese Heart Failure Society Position Statement on Endomyocardial Biopsy. J. Card. Fail. 2021, 27, 727–743.

36. Costanzo, M.R.; Dipchand, A.; Starling, R.; Anderson, A.; Chan, M.; Desai, S.; Fedson, S.; Fisher, P.; Gonzales-Stawinski, G.; Martinelli, L.; et al. The International Society of Heart and Lung Transplantation Guidelines for the care of heart transplant recipients. J. Heart Lung Transplant. 2010, 29, 914–956.

37. Caves, P.K.; Stinson, E.B.; Billingham, M.E.; Shumway, N.E. Serial transvenous biopsy of the transplanted human heart. Improved management of acute rejection episodes. Lancet 1974, 303, 821–826.

38. Bermpeis, K.; Esposito, G.; Gallinoro, E.; Paolisso, P.; Bertolone, D.T.; Fabbricatore, D.; Mileva, N.; Munhoz, D.; Buckley, J.; Wyffels, E.; et al. Safety of Right and Left Ventricular Endomyocardial Biopsy in Heart Transplantation and Cardiomyopathy Patients. JACC Heart Fail. 2022, 10, 963–973.

39. Adedinsewo, D.; Hardway, H.D.; Morales-Lara, A.C.; Wieczorek, M.A.; Johnson, P.W.; Douglass, E.J.; Dangott, B.J.; Nakhleh, R.E.; Narula, T.; Patel, P.C.; et al. Non-invasive detection of cardiac allograft rejection among heart transplant recipients using an electrocardiogram based deep learning model. Eur. Heart J. Digit. Health 2023, 4, 71–80.

40. Agasthi, P.; Buras, M.R.; Smith, S.D.; Golafshar, M.A.; Mookadam, F.; Anand, S.; Rosenthal, J.L.; Hardaway, B.W.; DeValeria, P.; Arsanjani, R. Machine learning helps predict long-term mortality and graft failure in patients undergoing heart transplant. Gen. Thorac. Cardiovasc. Surg. 2020, 68, 1369–1376.

41. Zare, A.; Zare, M.A.; Zarei, N.; Yaghoobi, R.; Zare, M.A.; Salehi, S.; Geramizadeh, B.; Malekhosseini, S.A.; Azarpira, N. A Neural Network Approach to Predict Acute Allograft Rejection in Liver Transplant Recipients Using Routine Laboratory Data. Hepat. Mon. 2017, 17, e55092.

42. Lau, L.; Kankanige, Y.; Rubinstein, B.; Jones, R.; Christophi, C.; Muralidharan, V.; Bailey, J. Machine-Learning Algorithms Predict Graft Failure After Liver Transplantation. Transplantation 2017, 101, e125–e132.

43. Chongo, G.; Soldera, J. Use of machine learning models for the prognostication of liver transplantation: A systematic review. World J. Transplant. 2024, 14, 88891.

44. Kang, J. Opportunities and challenges of machine learning in transplant-related studies. Am. J. Transplant. 2024, 24, 322–324.

45. van Giffen, B.; Herhausen, D.; Fahse, T. Overcoming the pitfalls and perils of algorithms: A classification of machine learning biases and mitigation methods. J. Bus. Res. 2022, 144, 93–106.

46. Obermeyer, Z.; Powers, B.; Vogeli, C.; Mullainathan, S. Dissecting racial bias in an algorithm used to manage the health of populations. Science 2019, 366, 447–453.

47. Rajkomar, A.; Hardt, M.; Howell, M.D.; Corrado, G.; Chin, M.H. Ensuring Fairness in Machine Learning to Advance Health Equity. Ann. Intern. Med. 2018, 169, 866–872.

48. González-Sendino, R.; Serrano, E.; Bajo, J. Mitigating bias in artificial intelligence: Fair data generation via causal models for transparent and explainable decision-making. Future Gener. Comput. Syst. 2024, 155, 384–401.

49. Alvarez, J.M.; Colmenarejo, A.B.; Elobaid, A.; Fabbrizzi, S.; Fahimi, M.; Ferrara, A.; Ghodsi, S.; Mougan, C.; Papageorgiou, I.; Reyero, P.; et al. Policy advice and best practices on bias and fairness in AI. Ethics Inf. Technol. 2024, 26, 31.

50. FERI: A Multitask-based Fairness Achieving Algorithm with Applications to Fair Organ Transplantation. AMIA Jt. Summits Transl. Sci. Proc. 2024, 2024, 593–602.

51. Lebret, A. Allocating organs through algorithms and equitable access to transplantation—A European human rights law approach. J. Law Biosci. 2023, 10, lsad004.

52. Chen, R.J.; Wang, J.J.; Williamson, D.F.K.; Chen, T.Y.; Lipkova, J.; Lu, M.Y.; Sahai, S.; Mahmood, F. Algorithm fairness in artificial intelligence for medicine and healthcare. Nat. Biomed. Eng. 2023, 7, 719–742.

53. Fairness and Machine Learning, MIT Press. Available online: https://mitpress.mit.edu/9780262048613/fairness-and-machine-learning/ (accessed on 20 August 2024).

54. Balasubramaniam, N.; Kauppinen, M.; Rannisto, A.; Hiekkanen, K.; Kujala, S. Transparency and explainability of AI systems: From ethical guidelines to requirements. Inf. Softw. Technol. 2023, 159, 107197.

55. Al Kuwaiti, A.; Nazer, K.; Al-Reedy, A.; Al-Shehri, S.; Al-Muhanna, A.; Subbarayalu, A.V.; Al Muhanna, D.; Al-Muhanna, F.A. A Review of the Role of Artificial Intelligence in Healthcare. J. Pers. Med. 2023, 13, 951.

56. Samek, W.; Wiegand, T.; Müller, K.R. Explainable artificial intelligence: Understanding, visualizing and interpreting deep learning models. arXiv 2017, arXiv:1708.08296. Available online: https://arxiv.org/pdf/1708.08296 (accessed on 20 August 2024).

57. Lipton, Z.C. The Mythos of Model Interpretability: In machine learning, the concept of interpretability is both important and slippery. Queue 2018, 16, 31–57.

58. Rudin, C. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 2019, 1, 206–215.

59. Zafar, M.R.; Khan, N. Deterministic Local Interpretable Model-Agnostic Explanations for Stable Explainability. Mach. Learn. Knowl. Extr. 2021, 3, 525–541.

60. Lundberg, S.; Lee, S.I. A Unified Approach to Interpreting Model Predictions. arXiv 2017, arXiv:1705.07874. Available online: https://arxiv.org/pdf/1705.07874 (accessed on 20 August 2024).

61. Schuler, K.; Sedlmayr, M.; Sedlmayr, B. Mapping Medical Context: Workshop-Driven Clustering of Influencing Factors on Medical Decision-Making. Stud. Health Technol. Inform. 2024, 316, 1754–1758.

62. Schoonderwoerd, T.A.J.; Jorritsma, W.; Neerincx, M.A.; van den Bosch, K. Human-centered XAI: Developing design patterns for explanations of clinical decision support systems. Int. J. Hum.-Comput. Stud. 2021, 154, 102684.

63. Hagendorff, T. The Ethics of AI Ethics: An Evaluation of Guidelines. Minds Mach. 2020, 30, 99–120.

64. Grote, T.; Berens, P. On the ethics of algorithmic decision-making in healthcare. J. Med. Ethics 2020, 46, 205–211.

65. Topol, E.J. High-performance medicine: The convergence of human and artificial intelligence. Nat. Med. 2019, 25, 44–56.

66. Danks, D.; London, A.J. Algorithmic Bias in Autonomous Systems. In Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence, Melbourne, VIC, Australia, 19–25 August 2017; pp. 4691–4697.

67. Char, D.S.; Shah, N.H.; Magnus, D. Implementing Machine Learning in Health Care—Addressing Ethical Challenges. N. Engl. J. Med. 2018, 378, 981–983.

68. Wiens, J.; Saria, S.; Sendak, M.; Ghassemi, M.; Liu, V.X.; Doshi-Velez, F.; Jung, K.; Heller, K.; Kale, D.; Saeed, M.; et al. Do no harm: A roadmap for responsible machine learning for health care. Nat. Med. 2019, 25, 1337–1340.

69. Vayena, E.; Blasimme, A.; Cohen, I.G. Machine learning in medicine: Addressing ethical challenges. PLoS Med. 2018, 15, e1002689.

70. Fjeld, J.; Achten, N.; Hilligoss, H.; Nagy, A.; Srikumar, M. Principled Artificial Intelligence: Mapping Consensus in Ethical and Rights-Based Approaches to Principles for AI. SSRN Electron. J. 2020.

71. Price, W.N. Big data and black-box medical algorithms. Sci. Transl. Med. 2018, 10, eaao5333.

72. Mittelstadt, B.D.; Floridi, L. The Ethics of Big Data: Current and Foreseeable Issues in Biomedical Contexts. Sci. Eng. Ethics 2016, 22, 303–341.

73. Drezga-Kleiminger, M.; Demaree-Cotton, J.; Koplin, J.; Savulescu, J.; Wilkinson, D. Should AI allocate livers for transplant? Public attitudes and ethical considerations. BMC Med. Ethics 2023, 24, 102.

74. Doshi-Velez, F.; Kortz, M.; Budish, R.; Bavitz, C.; Gershman, S.; O’Brien, D.F.; Scott, K.; Shieber, S.; Waldo, J.; Weinberger, D.; et al. Accountability of AI Under the Law: The Role of Explanation. arXiv 2019, arXiv:1711.01134. Available online: https://arxiv.org/pdf/1711.01134 (accessed on 20 August 2024).

75. Salybekov, A.A.; Wolfien, M.; Hahn, W.; Hidaka, S.; Kobayashi, S. Artificial Intelligence Reporting Guidelines’ Adherence in Nephrology for Improved Research and Clinical Outcomes. Biomedicines 2024, 12, 606.

76. Richardson, J.P.; Smith, C.; Curtis, S.; Watson, S.; Zhu, X.; Barry, B.; Sharp, R.R. Patient apprehensions about the use of artificial intelligence in healthcare. npj Digit. Med. 2021, 4, 140.

77. Robertson, C.; Woods, A.; Bergstrand, K.; Findley, J.; Balser, C.; Slepian, M.J. Diverse patients’ attitudes towards Artificial Intelligence (AI) in diagnosis. PLoS Digit. Health 2023, 2, e0000237.

78. Rawbone, R. Principles of Biomedical Ethics, 7th Edition. Occup. Med. 2015, 65, 88–89.

79. Hatherley, J.J. Limits of trust in medical AI. J. Med. Ethics 2020, 46, 478–481.

80. Transforming Healthcare with AI | IJGM. Available online: https://www.dovepress.com/transforming-healthcare-with-ai-promises-pitfalls-and-pathways-forward-peer-reviewed-fulltext-article-IJGM (accessed on 20 August 2024).

81. Kempt, H.; Heilinger, J.C.; Nagel, S.K. “I’m afraid I can’t let you do that, Doctor”: Meaningful disagreements with AI in medical contexts. AI Soc. 2023, 38, 1407–1414.

82. Yelne, S.; Chaudhary, M.; Dod, K.; Sayyad, A.; Sharma, R. Harnessing the Power of AI: A Comprehensive Review of Its Impact and Challenges in Nursing Science and Healthcare. Cureus 2023, 15, e49252.

83. Shamszare, H.; Choudhury, A. Clinicians’ Perceptions of Artificial Intelligence: Focus on Workload, Risk, Trust, Clinical Decision Making, and Clinical Integration. Healthcare 2023, 11, 2308.

84. London, A.J. Artificial intelligence in medicine: Overcoming or recapitulating structural challenges to improving patient care? Cell Rep. Med. 2022, 3, 100622.

85. Sage, A.T.; Donahoe, L.L.; Shamandy, A.A.; Mousavi, S.H.; Chao, B.T.; Zhou, X.; Valero, J.; Balachandran, S.; Ali, A.; Martinu, T.; et al. A machine-learning approach to human ex vivo lung perfusion predicts transplantation outcomes and promotes organ utilization. Nat. Commun. 2023, 14, 4810.

86. Michelson, A.P.; Oh, I.; Gupta, A.; Puri, V.; Kreisel, D.; Gelman, A.E.; Nava, R.; Witt, C.A.; Byers, D.E.; Halverson, L.; et al. Developing Machine Learning Models to Predict Primary Graft Dysfunction After Lung Transplantation. Am. J. Transplant. 2024, 24, 458–467.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Salybekov, A.A.; Yerkos, A.; Sedlmayr, M.; Wolfien, M. Ethics and Algorithms to Navigate AI’s Emerging Role in Organ Transplantation. J. Clin. Med. 2025, 14, 2775. https://doi.org/10.3390/jcm14082775
