Research on Walnut (Juglans regia L.) Yield Prediction Based on a Walnut Orchard Point Cloud Model

Abstract

1. Introduction

2. Materials and Methods

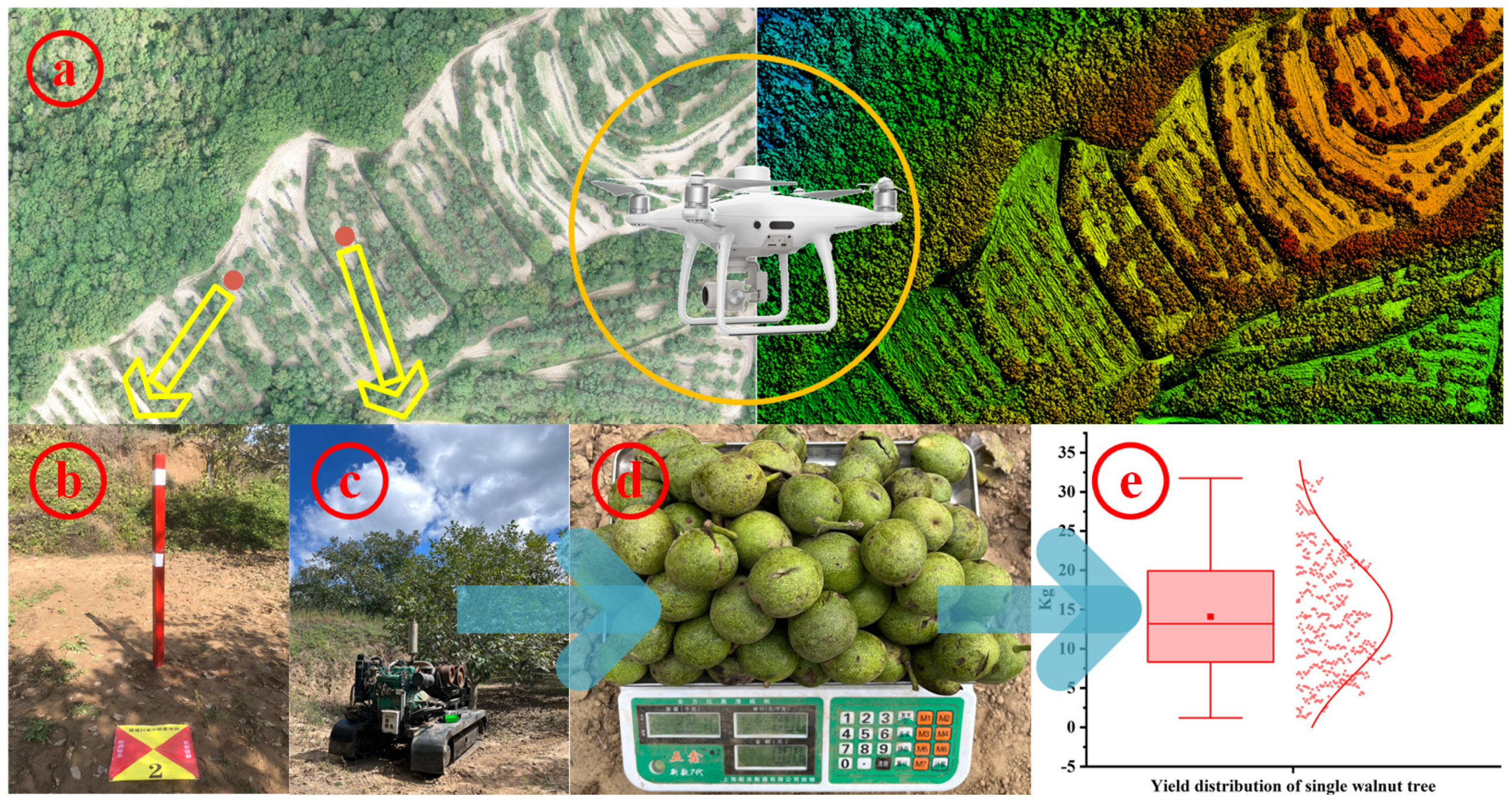

2.1. Data Acquisition and Application

2.2. Reconstruction of the Walnut Orchard Point Cloud Model Based on Neural Radiance Field

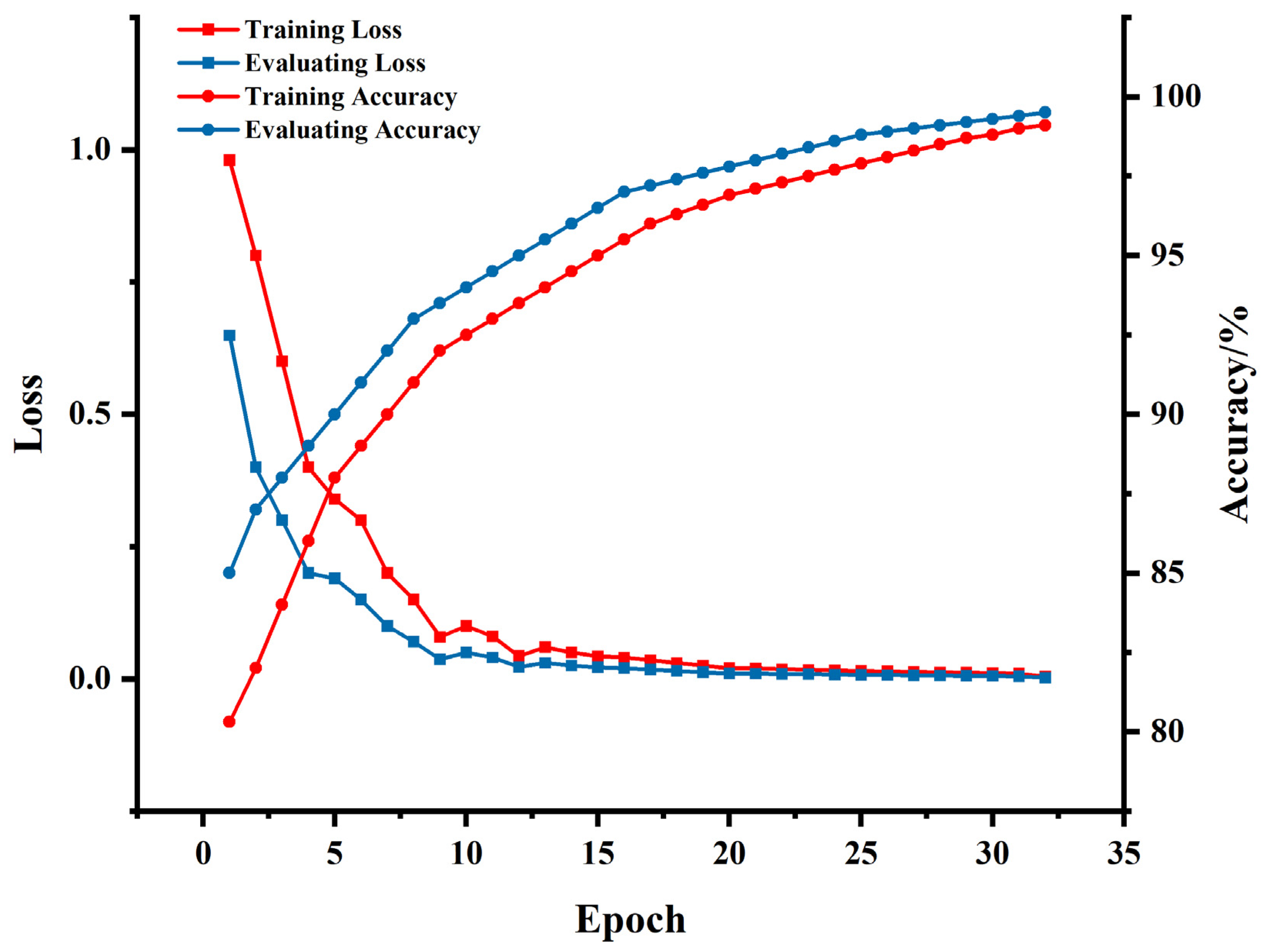

2.3. Semantic Segmentation of the Walnut Orchard Point Cloud Model Based on PointNet++

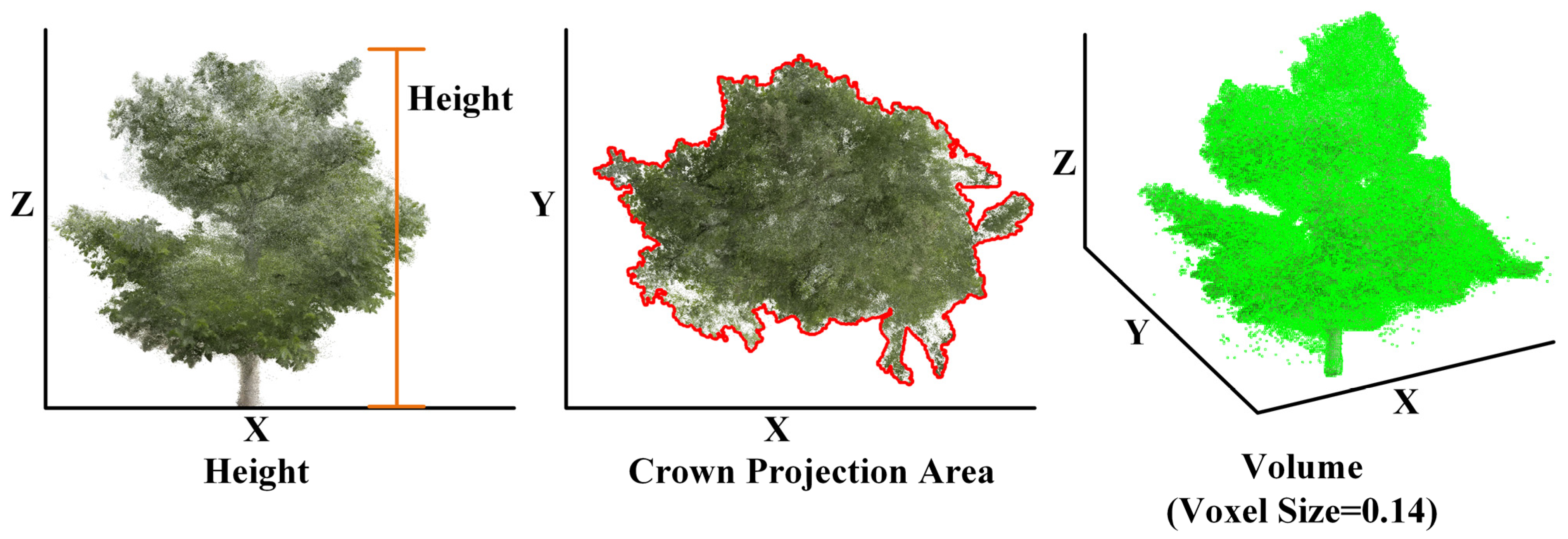

2.4. Calculation of Walnut Tree Morphological Features Based on the Walnut Tree Point Cloud Model

2.5. Yield Modeling and Evaluation Based on Walnut Tree Morphological Features

3. Results

3.1. Walnut Orchard Point Cloud Model Acquisition

3.2. Semantic Segmentation of the Walnut Tree Point Cloud Model

3.3. Distribution of Morphological Features of Individual Walnut Trees

3.4. Walnut Tree Yield Model Construction

4. Discussion

4.1. Advantages and Limitations of Walnut Orchard 3D Point Cloud Modeling

4.2. Physiological Mechanisms of Walnut Tree Morphological Features Affecting Yield

4.3. Comparison of Yield Prediction by Different Methods

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

| Tree Feature | Min. | Max. | Median | IQR | Q1 | Q3 | Mean | SD |
|---|---|---|---|---|---|---|---|---|
| H (m) | 2.23 | 8.56 | 5.97 | 1.56 | 5.12 | 6.68 | 5.87 | 1.11 |
| A (m²) | 3.63 | 28.60 | 14.15 | 6.34 | 11.15 | 17.49 | 14.44 | 4.89 |
| V (m³) | 4.42 | 57.15 | 22.66 | 16.71 | 15.71 | 32.42 | 24.61 | 11.73 |
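The summary statistics in the table above can be cross-checked with simple arithmetic: the interquartile range should equal Q3 − Q1, and the ratio SD / mean (coefficient of variation) gives a unit-free view of how widely each feature is spread. The sketch below uses only the values printed in the table; the dictionary layout and the helper name `iqr_consistent` are illustrative assumptions and are not taken from the paper.

```python
# Values copied from the table above, per feature:
# (IQR, Q1, Q3, mean, SD). The short keys H, A, V follow the table's row labels.
rows = {
    "H": (1.56, 5.12, 6.68, 5.87, 1.11),
    "A": (6.34, 11.15, 17.49, 14.44, 4.89),
    "V": (16.71, 15.71, 32.42, 24.61, 11.73),
}


def iqr_consistent(iqr, q1, q3, tol=0.005):
    """Return True if the reported IQR matches Q3 - Q1 within rounding tolerance."""
    return abs((q3 - q1) - iqr) <= tol


# Check that each reported IQR agrees with its quartiles.
checks = {name: iqr_consistent(v[0], v[1], v[2]) for name, v in rows.items()}

# Coefficient of variation (SD / mean) as a relative measure of spread.
cv = {name: v[4] / v[3] for name, v in rows.items()}

for name in rows:
    print(f"{name}: IQR consistent = {checks[name]}, CV = {cv[name]:.2f}")
```

All three reported IQR values agree with their quartiles, and the relative spread increases from H to A to V.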

| Method | MAE (kg) | MAPE (%) | RMSE (kg) | R² |
|---|---|---|---|---|
| MLR | 2.79 | 23.97 | 3.63 | 0.72 |
| SVR | 3.09 | 27.12 | 3.90 | 0.68 |
| RFR | 2.04 | 17.24 | 2.81 | 0.83 |
| XGBoost | 2.10 | 18.56 | 2.92 | 0.82 |
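The four columns in the table above are standard regression error measures. This excerpt does not show the paper's exact formulas, so the following is a minimal sketch that assumes the conventional definitions: mean absolute error, mean absolute percentage error, root mean squared error, and R² as the coefficient of determination. The function name `regression_metrics` and the sample yields are hypothetical and serve only to illustrate the arithmetic; they are not data from the study.

```python
import math


def regression_metrics(y_true, y_pred):
    """Compute MAE, MAPE (%), RMSE and R^2 using their conventional definitions."""
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]

    mae = sum(abs(e) for e in errors) / n
    # MAPE is undefined when a true value is zero; yields are assumed positive.
    mape = 100.0 * sum(abs(e) / abs(t) for e, t in zip(errors, y_true)) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)

    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)                  # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)   # total sum of squares
    r2 = 1.0 - ss_res / ss_tot

    return {"MAE": mae, "MAPE": mape, "RMSE": rmse, "R2": r2}


# Hypothetical per-tree yields in kg (illustrative only, not from the paper).
measured = [10.0, 12.0, 8.0, 15.0]
predicted = [11.0, 11.0, 9.0, 13.0]

metrics = regression_metrics(measured, predicted)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

Under these definitions, lower MAE, MAPE and RMSE and a higher R² indicate a better fit, which is the sense in which RFR has the strongest figures in the table.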

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, H.; Cao, J.; An, J.; Xu, Y.; Bai, X.; Xu, D.; Li, W. Research on Walnut (Juglans regia L.) Yield Prediction Based on a Walnut Orchard Point Cloud Model. Agriculture 2025, 15, 775. https://doi.org/10.3390/agriculture15070775
