A New Efficient Ship Detection Method Based on Remote Sensing Images by Device–Cloud Collaboration

Abstract

1. Introduction

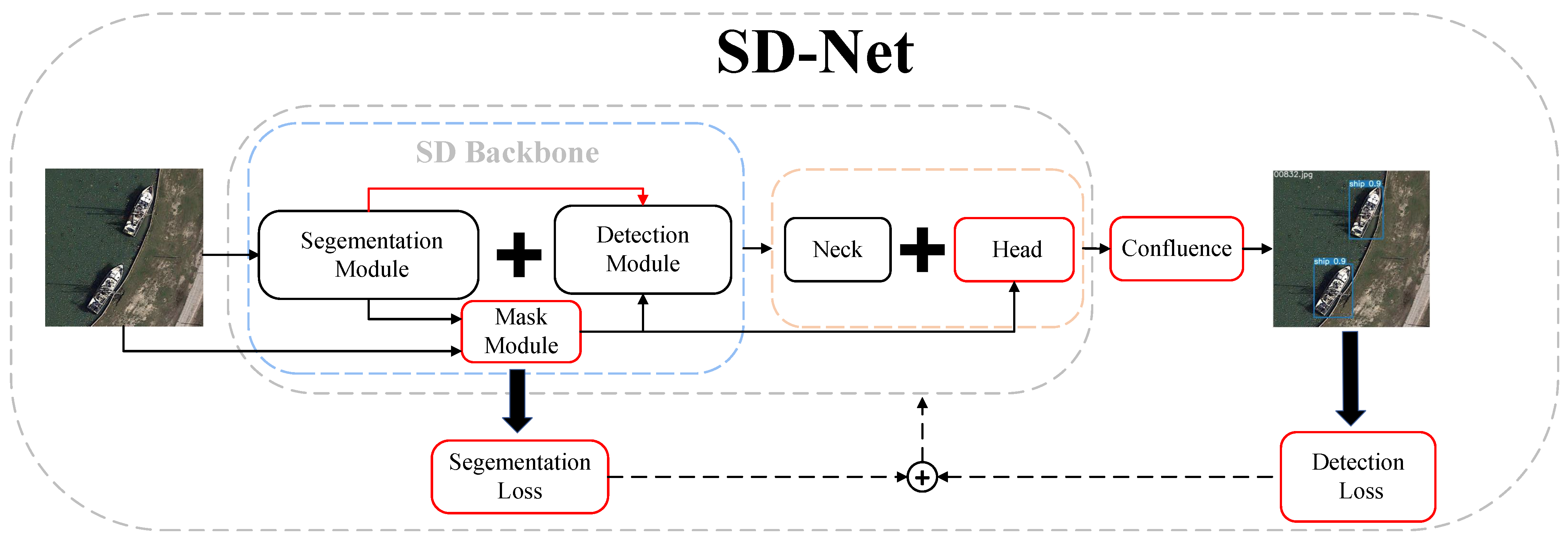

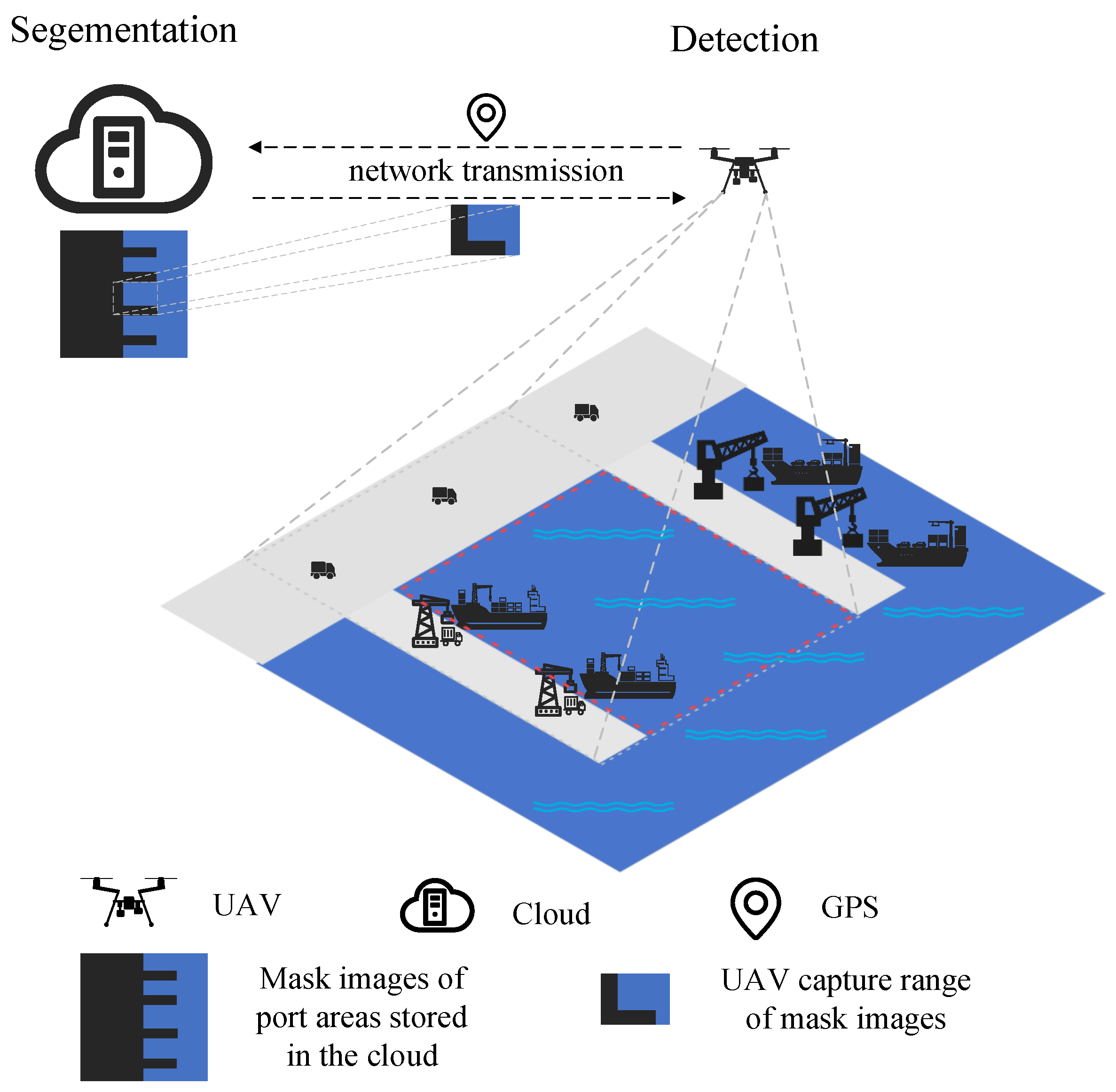

- A novel ship detection framework based on device–cloud collaboration is proposed, which integrates semantic segmentation and object detection. The segmentation module is migrated to the cloud, and its results are stored there for the device-side object detector to use, which optimizes the allocation and utilization of computational resources.

- A Mask module and an Anchor Head module are designed to reduce the network's computation and postprocessing time.

- The performance of semantic segmentation and ship detection is improved by joint training of semantic segmentation and object detection.

- The postprocessing step and loss function of the original YOLO model are improved. Additionally, a Coordinate Attention module is introduced to better adapt to environments with dense small objects and heavy occlusion.

- To verify the effectiveness of the proposed method, we conducted extensive ablation and comparison experiments. The results show that the device–cloud collaborative method improves detection efficiency while preserving accuracy: device-end time drops from 5.4 ms to 3.1 ms, and mAP@0.5 rises from 89.8% to 92.4% relative to the detection-only baseline.
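The division of labor described in the first two contributions can be sketched as follows. This is a minimal illustration, not the authors' implementation: the `CloudMaskStore` class, the grid-cell mask layout, the convention that `1` marks a region worth searching, and the `detect_cell` callback are all hypothetical names and assumptions introduced here.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Assumed convention: 1 = region that may contain ships, 0 = region to skip.
Mask = List[List[int]]
Cell = Tuple[int, int]


@dataclass
class CloudMaskStore:
    """Hypothetical cloud-side cache of segmentation masks, keyed by scene id."""
    masks: Dict[str, Mask] = field(default_factory=dict)

    def put(self, scene_id: str, mask: Mask) -> None:
        self.masks[scene_id] = mask

    def get(self, scene_id: str) -> Mask:
        return self.masks[scene_id]


def active_cells(mask: Mask) -> List[Cell]:
    """Return the (row, col) cells the device still has to process."""
    return [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v == 1]


def device_detect(scene_id: str,
                  store: CloudMaskStore,
                  detect_cell: Callable[[Cell], List[str]]) -> List[str]:
    """Run the (hypothetical) device-side detector only on unmasked cells."""
    mask = store.get(scene_id)  # one small download instead of on-device segmentation
    detections: List[str] = []
    for cell in active_cells(mask):
        detections.extend(detect_cell(cell))
    return detections


if __name__ == "__main__":
    store = CloudMaskStore()
    store.put("harbor-01", [[1, 0], [0, 1]])  # produced by the cloud segmentation model
    print(device_detect("harbor-01", store, lambda cell: [f"ship@{cell}"]))
```

The point of the sketch is the dependency direction: the device never runs segmentation itself, and the amount of detection work scales with the number of unmasked cells rather than with the full image.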

2. Related Work

2.1. Semantic Segmentation in Remote Sensing Images

2.2. Ship Detection in Remote Sensing Images

2.3. Cloud Computing and Device–Cloud Collaboration

3. Definition of the Problem

- Chip computing efficiency affects the speed of ship detection.

- Power consumption affects the working time of front-end equipment. For example, the maximum flight time of the DJI Mavic 3E is 45 min.

- Storage capacity affects the detection capacity and range of front-end equipment. For example, the storage capacity of the DJI Mavic 3E is usually 512 GB.

- Network capacity affects the speed at which information can be transmitted.
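To make the constraints above concrete, the following back-of-the-envelope sketch checks which budget is exhausted first during a single flight. Only the 45 min flight time and the 512 GB capacity come from the text; the capture rate (1 image per second) and the image size (20 MB) are illustrative assumptions, as are the function names.

```python
def frames_limited_by_time(flight_min: float, capture_hz: float) -> int:
    """Number of images that can be captured before the battery runs out."""
    return int(flight_min * 60 * capture_hz)


def frames_limited_by_storage(storage_gb: float, frame_mb: float) -> int:
    """Number of images that fit on the device (decimal units, 1 GB = 1000 MB)."""
    return int(storage_gb * 1000 // frame_mb)


def binding_constraint(flight_min: float, capture_hz: float,
                       storage_gb: float, frame_mb: float) -> str:
    """Name the resource that runs out first under the given assumptions."""
    by_time = frames_limited_by_time(flight_min, capture_hz)
    by_storage = frames_limited_by_storage(storage_gb, frame_mb)
    return "flight time" if by_time <= by_storage else "storage"


if __name__ == "__main__":
    # 45 min and 512 GB are from the text; 1 Hz and 20 MB are assumed values.
    print(frames_limited_by_time(45, 1.0))
    print(frames_limited_by_storage(512, 20))
    print(binding_constraint(45, 1.0, 512, 20))
```

Under these assumed values the flight time, not the storage, is the binding limit, which is one reason per-image processing cost on the device matters.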

4. Methodology

4.1. Device–Cloud Collaboration Framework

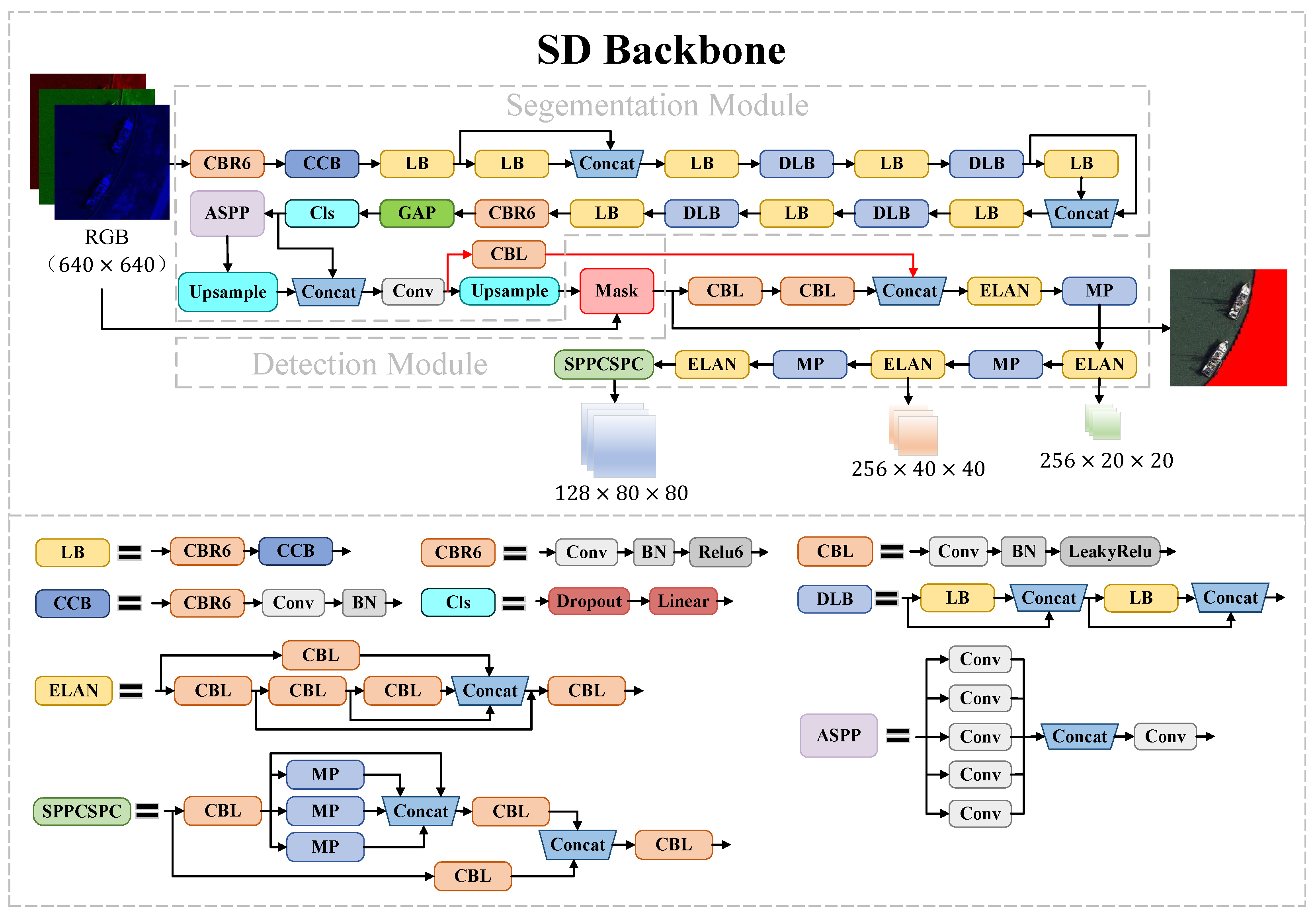

4.2. Backbone Structure

4.2.1. Basic Module

4.2.2. Mask Module

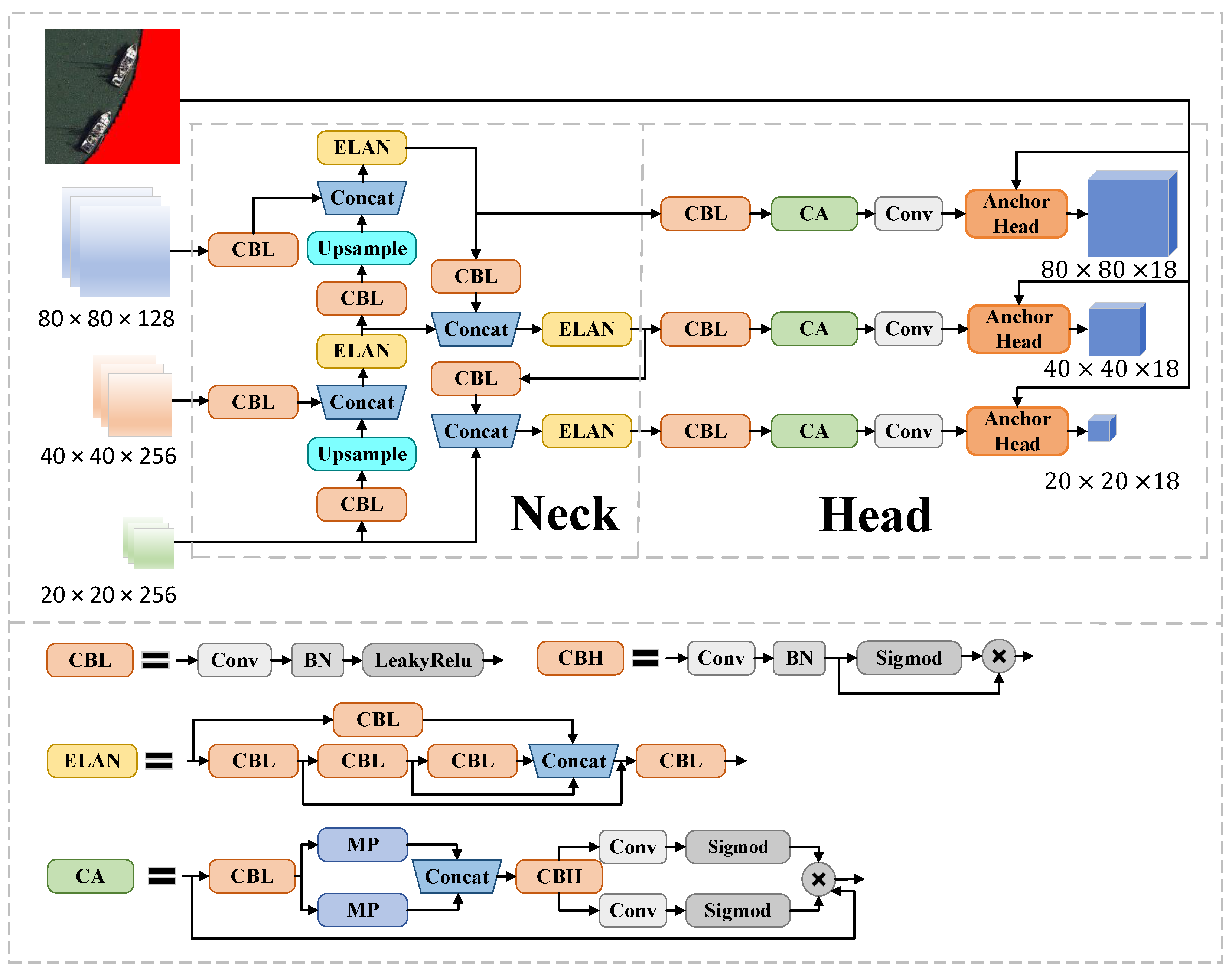

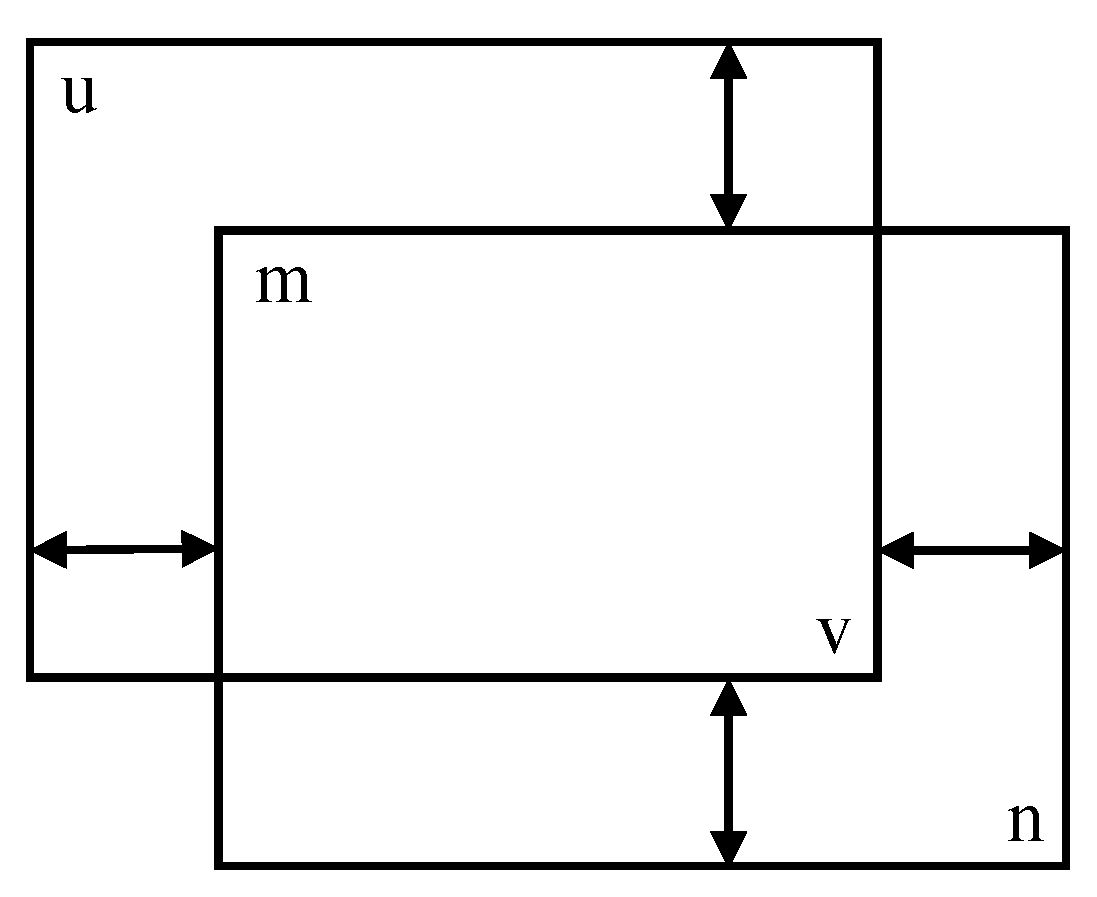

4.3. Neck and Head Structure

4.3.1. Basic Module

4.3.2. Anchor Head Module

4.4. Coordinate Attention Module

4.5. Postprocessing Design

4.6. IoU Loss Design

4.7. Joint Optimization

5. Experiments and Analysis

5.1. Implementation Details

5.1.1. Dataset

5.1.2. Model Training

5.2. Evaluation Indicators

5.3. Parameter Analysis

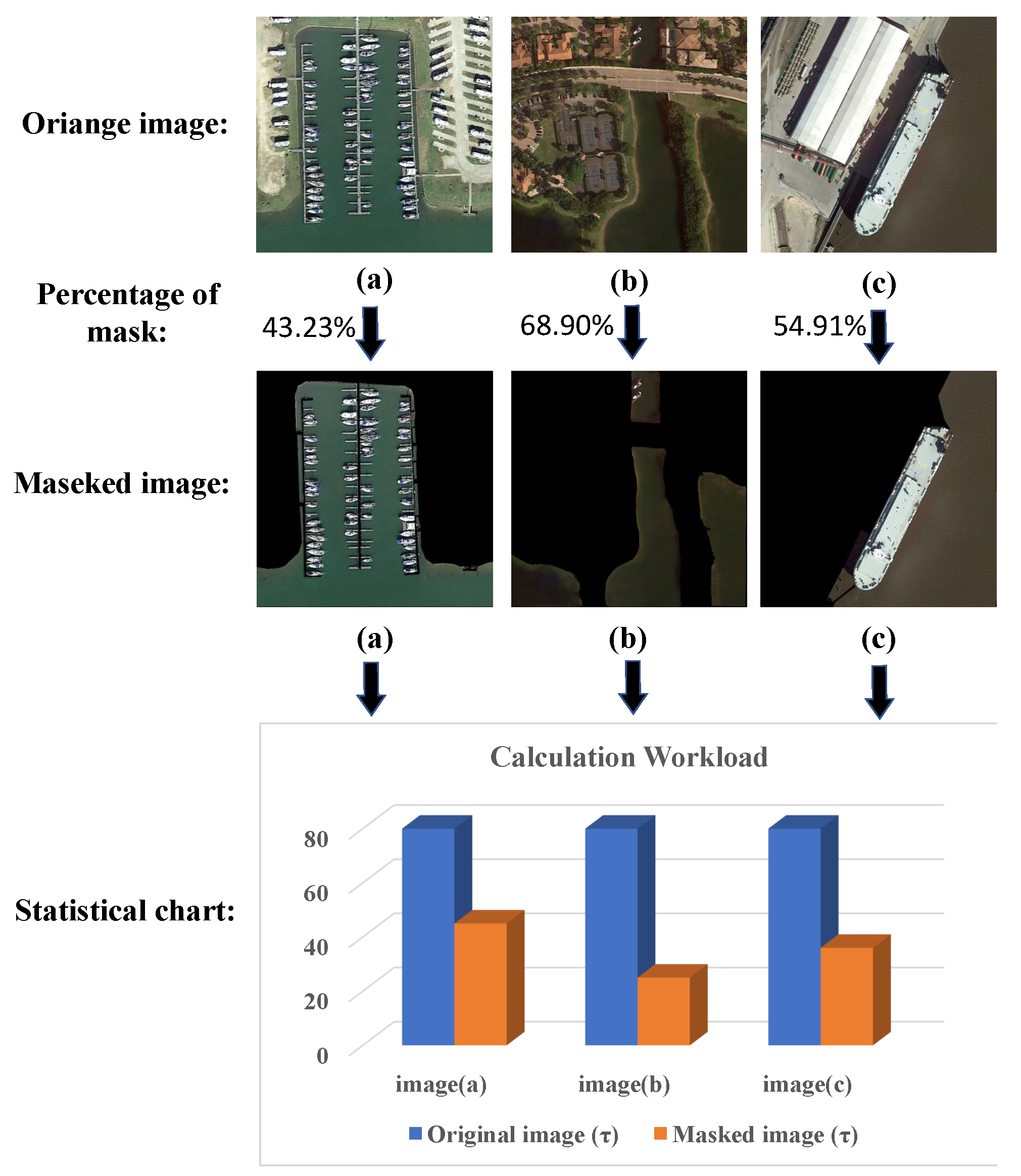

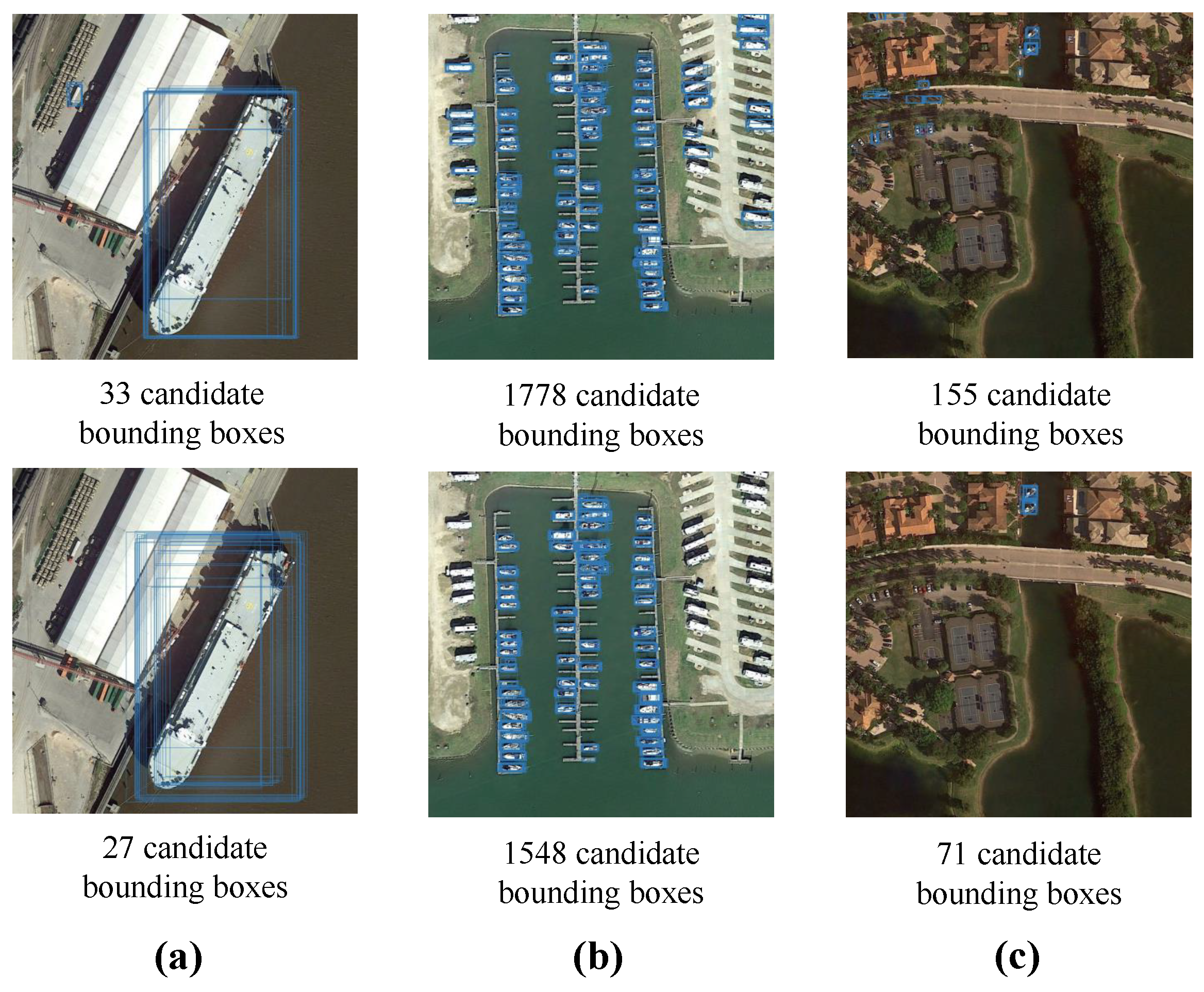

5.4. Analysis of the Calculation Amount

5.5. Comparison with Other Detection Models

5.6. Ablation Experiment

5.6.1. Postprocessing

5.6.2. Segmentation Module

5.6.3. Detection Module

5.7. Efficiency Analysis

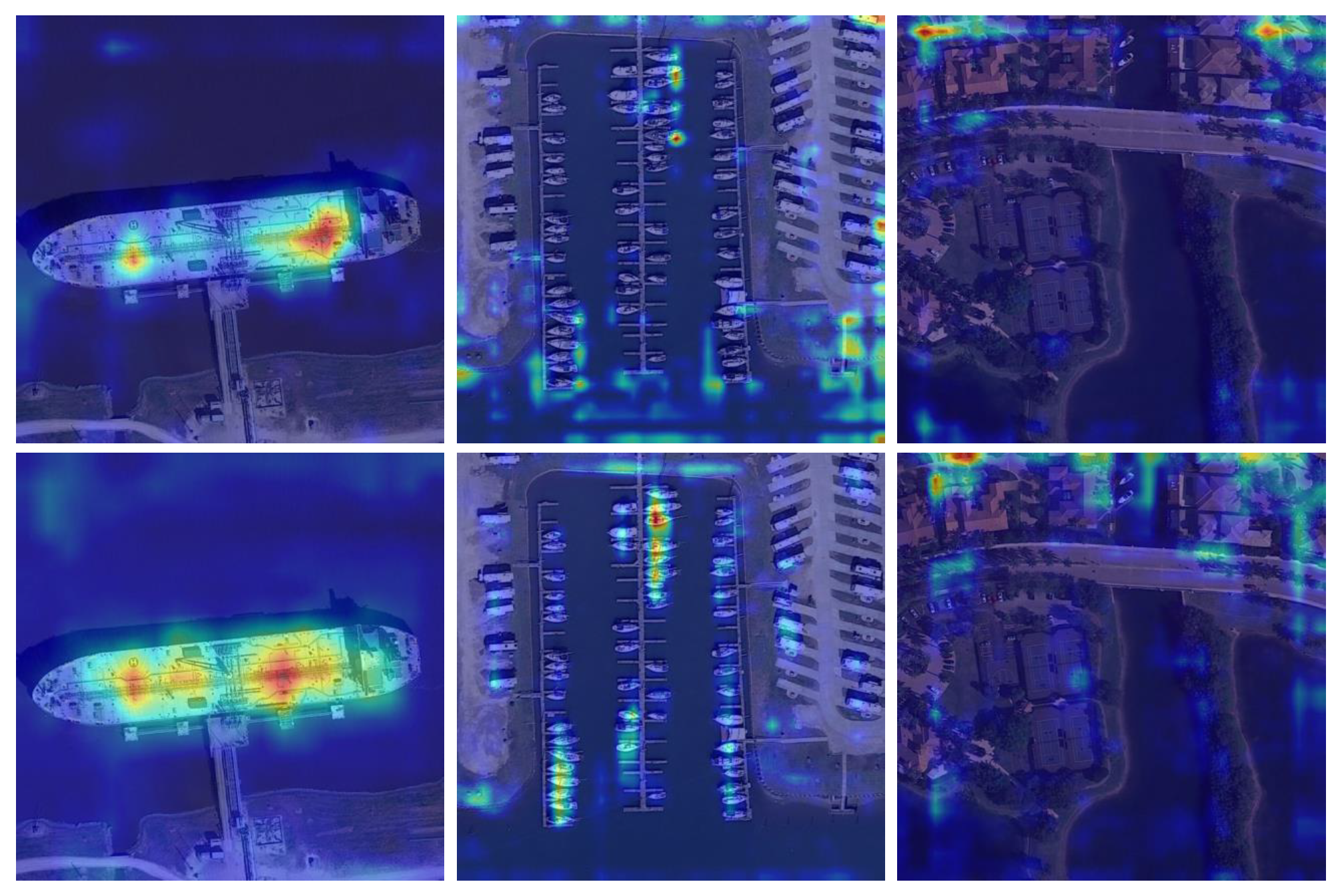

5.8. Attention Visualization Analysis

5.9. Visualization of Results

5.9.1. Semantic Segmentation

5.9.2. Object Detection

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Setting | Segmentation MIoU (%) | Segmentation MPA (%) | Segmentation Accuracy (%) | Detection mAP@0.5 (%) | Detection mAP@0.5:0.95 (%) |
|---|---|---|---|---|---|
| Constant at 0.5 | 93.0 | 96.2 | 97.0 | 92.3 | 52.9 |
| Increasing from 0.5 to 0.9 | 92.8 | 96.1 | 97.1 | 92.2 | 52.9 |
| Decreasing from 0.9 to 0.5 | 93.0 | 96.2 | 97.1 | 92.4 | 52.9 |
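The segmentation columns above (MIoU, MPA, Accuracy) are usually derived from a per-class confusion matrix. The sketch below uses the commonly used definitions; this section does not give the paper's exact formulas, so treat the definitions, the function name, and the choice to score an absent class as 0 as assumptions.

```python
from typing import Dict, List


def segmentation_metrics(conf: List[List[int]]) -> Dict[str, float]:
    """Compute MIoU, MPA, and overall accuracy.

    conf[i][j] is the number of pixels whose true class is i and
    whose predicted class is j.
    """
    n = len(conf)
    total = sum(sum(row) for row in conf)
    ious: List[float] = []
    pixel_accs: List[float] = []
    for k in range(n):
        tp = conf[k][k]
        fn = sum(conf[k]) - tp                         # true k, predicted otherwise
        fp = sum(conf[i][k] for i in range(n)) - tp    # predicted k, true otherwise
        union = tp + fp + fn
        # Assumption: a class with no pixels at all contributes 0 to the mean.
        ious.append(tp / union if union else 0.0)
        pixel_accs.append(tp / (tp + fn) if (tp + fn) else 0.0)
    return {
        "miou": sum(ious) / n,
        "mpa": sum(pixel_accs) / n,
        "accuracy": sum(conf[k][k] for k in range(n)) / total,
    }


if __name__ == "__main__":
    # Toy two-class example (e.g. background vs. foreground), not data from the paper.
    print(segmentation_metrics([[50, 10], [5, 35]]))
```

For the toy matrix, the per-class IoUs are 50/65 and 35/50, so MIoU is about 0.735, MPA is about 0.854, and accuracy is 0.85.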

| Models | mAP (%) | Params (M) | Weight File (MB) | GFLOPs |
|---|---|---|---|---|
| YOLOv3-tiny | 75.9 | 8.6 | 66.3 | 12.9 |
| YOLOv4-tiny | 73.2 | 6.1 | 46.3 | 16.5 |
| YOLOv5s | 89.1 | 7.0 | 54.2 | 15.8 |
| YOLOv6s | 81.5 | 18.5 | 154.3 | 45.7 |
| YOLOv7-tiny | 89.8 | 6.0 | 46.4 | 13.0 |
| YOLOv8s | 90.3 | 11.1 | 128.0 | 28.4 |
| RT-DETR-R18 | 80.5 | 20.0 | 154.3 | 60.0 |
| YOLOv5-ODConvNeXt | 92.0 | 7.0 | 54.0 | 14.8 |
| Ours (Detection) | 92.4 | 6.0 | 45.8 | 9.7 |
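The comparison above is easier to read as relative differences. The short script below only re-expresses the table's numbers; the dictionary layout and helper names are introduced here for illustration.

```python
# Rows copied from the comparison table: (mAP %, params M, weight file MB, GFLOPs).
MODELS = {
    "YOLOv3-tiny": (75.9, 8.6, 66.3, 12.9),
    "YOLOv4-tiny": (73.2, 6.1, 46.3, 16.5),
    "YOLOv5s": (89.1, 7.0, 54.2, 15.8),
    "YOLOv6s": (81.5, 18.5, 154.3, 45.7),
    "YOLOv7-tiny": (89.8, 6.0, 46.4, 13.0),
    "YOLOv8s": (90.3, 11.1, 128.0, 28.4),
    "RT-DETR-R18": (80.5, 20.0, 154.3, 60.0),
    "YOLOv5-ODConvNeXt": (92.0, 7.0, 54.0, 14.8),
    "Ours (Detection)": (92.4, 6.0, 45.8, 9.7),
}
OURS = "Ours (Detection)"


def gflops_reduction_pct(baseline: str) -> float:
    """Percentage of the baseline's GFLOPs saved by the proposed detector."""
    ours = MODELS[OURS][3]
    base = MODELS[baseline][3]
    return 100.0 * (base - ours) / base


def map_gain_points(baseline: str) -> float:
    """mAP difference in percentage points (proposed minus baseline)."""
    return MODELS[OURS][0] - MODELS[baseline][0]


if __name__ == "__main__":
    for name in MODELS:
        if name != OURS:
            print(f"{name:>18}: {gflops_reduction_pct(name):5.1f}% fewer GFLOPs, "
                  f"{map_gain_points(name):+.1f} mAP points")
```

Against YOLOv7-tiny, for example, the proposed detector needs about 25% fewer GFLOPs (9.7 vs. 13.0) while scoring 2.6 mAP points higher.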

| Methods | mAP@0.5 (%) | mAP@0.5:0.95 (%) |
|---|---|---|
| NMS | 90.3 | 51.9 |
| Soft NMS | 86.6 | 50.6 |
| DIoU NMS | 88.6 | 51.5 |
| Ours (Confluence) | 92.2 | 52.9 |
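For reference, the first baseline in the table above, classical greedy NMS, can be written in a few lines. This is the textbook algorithm, not the Confluence-based postprocessing used in the proposed method; the box format `(x1, y1, x2, y2)` and the default threshold of 0.5 are assumptions made for the example.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed corner format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes: Sequence[Box], scores: Sequence[float],
               iou_threshold: float = 0.5) -> List[int]:
    """Return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop every remaining box that overlaps the kept box too strongly.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    scores = [0.9, 0.8, 0.7]
    print(greedy_nms(boxes, scores))
```

Because suppression depends only on an IoU threshold, densely packed ships with heavily overlapping boxes can be removed along with true duplicates, which is the weakness that alternatives such as Soft NMS, DIoU NMS, and Confluence try to address.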

| Methods | MIoU (%) | MPA (%) | Accuracy (%) |
|---|---|---|---|
| Segmentation only | 92.0 | 95.5 | 96.6 |
| Segmentation (joint optimization) | 93.0 | 96.2 | 97.1 |

| Methods | Confluence | CA | -IoU | -IoU | Mask | Anchor Head | mAP@0.5 (%) | mAP@0.5:0.95 (%) |
|---|---|---|---|---|---|---|---|---|
| Detection-only | × | × | × | × | × | × | 89.8 | 49.5 |
| Detection (Joint optimization) | × | × | × | × | × | × | 90.6 | 50.3 |
| | ✓ | × | × | × | × | × | 91.0 | 51.8 |
| | ✓ | ✓ | × | × | × | × | 91.6 | 52.4 |
| | ✓ | ✓ | ✓ | × | × | × | 92.2 | 52.9 |
| | ✓ | ✓ | × | ✓ | × | × | 91.9 | 52.7 |
| | ✓ | ✓ | ✓ | × | ✓ | × | 92.4 | 52.9 |
| | ✓ | ✓ | ✓ | × | ✓ | ✓ | 92.4 | 52.9 |

| Methods | Postprocessing Time Consumption (ms) | Memory Peak (bytes) | Memory Used (bytes) |
|---|---|---|---|
| YOLO Model | 0.9 | 1099.0 | 195.0 |
| Ours | 0.8 | 1099.0 | 195.0 |

| Model | Confluence | CA | -IoU | -IoU | Mask | Anchor Head | Cloud (ms) | Transmission (ms) | End (ms) |
|---|---|---|---|---|---|---|---|---|---|
| Detection-only | × | × | × | × | × | × | × | × | 5.4 |
| Detection (Joint optimization) | × | × | × | × | × | × | 2.1 | 0.16 | 5.4 |
| | ✓ | × | × | × | × | × | 2.1 | 0.16 | 5.4 |
| | ✓ | ✓ | × | × | × | × | 2.1 | 0.16 | 5.4 |
| | ✓ | ✓ | ✓ | × | × | × | 2.1 | 0.16 | 5.4 |
| | ✓ | ✓ | × | ✓ | × | × | 2.1 | 0.16 | 5.4 |
| | ✓ | ✓ | ✓ | × | ✓ | × | 2.1 | 0.16 | 3.1 |
| | ✓ | ✓ | ✓ | × | ✓ | ✓ | 2.1 | 0.16 | 3.1 |
| YOLOv3-tiny | × | × | × | × | × | × | × | × | 1.8 |
| YOLOv4-tiny | × | × | × | × | × | × | × | × | 3.6 |
| YOLOv5s | × | × | × | × | × | × | × | × | 5.5 |
| YOLOv6s | × | × | × | × | × | × | × | × | 6.0 |
| YOLOv7-tiny | × | × | × | × | × | × | × | × | 5.4 |
| YOLOv8s | × | × | × | × | × | × | × | × | 5.3 |
| RT-DETR-R18 | × | × | × | × | × | × | × | × | 5.8 |
| YOLOv5-ODConvNeXt | × | × | × | × | × | × | × | × | 5.4 |
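The timing columns above can be combined in more than one way, depending on whether the cloud stage sits on the critical path. The sketch below computes both readings from the table's numbers; which reading applies at deployment time is not stated in this section, so the two scenarios (a fully serial pipeline and a mask that is already stored in the cloud) are assumptions.

```python
# Values copied from the efficiency table (milliseconds).
CLOUD_MS = 2.1
TRANSMISSION_MS = 0.16
END_BASELINE_MS = 5.4   # detection-only, no Mask module
END_OURS_MS = 3.1       # with the Mask module enabled


def device_saving_pct(baseline_ms: float, ours_ms: float) -> float:
    """Percentage reduction of device-end time."""
    return 100.0 * (baseline_ms - ours_ms) / baseline_ms


def serial_total_ms(cloud_ms: float, tx_ms: float, end_ms: float) -> float:
    """Assumed worst case: cloud, transmission, and device run strictly in sequence."""
    return cloud_ms + tx_ms + end_ms


def cached_mask_total_ms(tx_ms: float, end_ms: float) -> float:
    """Assumed best case: the mask is already stored in the cloud, so only
    the download and the device-end stage remain on the critical path."""
    return tx_ms + end_ms


if __name__ == "__main__":
    print(f"device-end saving: {device_saving_pct(END_BASELINE_MS, END_OURS_MS):.1f}%")
    print(f"serial total:      {serial_total_ms(CLOUD_MS, TRANSMISSION_MS, END_OURS_MS):.2f} ms")
    print(f"cached-mask total: {cached_mask_total_ms(TRANSMISSION_MS, END_OURS_MS):.2f} ms")
```

The device-end time falls by roughly 43% (5.4 ms to 3.1 ms). Even in the fully serial reading the total of 5.36 ms is slightly below the 5.4 ms detection-only baseline, and the cached-mask reading gives 3.26 ms.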


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, T.; Ye, Y.; Lei, Z.; Huo, Y.; Zhang, X.; Wang, F.; Sha, M.; Wu, H. A New Efficient Ship Detection Method Based on Remote Sensing Images by Device–Cloud Collaboration. J. Mar. Sci. Eng. 2024, 12, 1422. https://doi.org/10.3390/jmse12081422
