Forensic Facial Comparison: Current Status, Limitations, and Future Directions

Simple Summary

Abstract

1. Introduction

2. Development of an African Facial Image Database

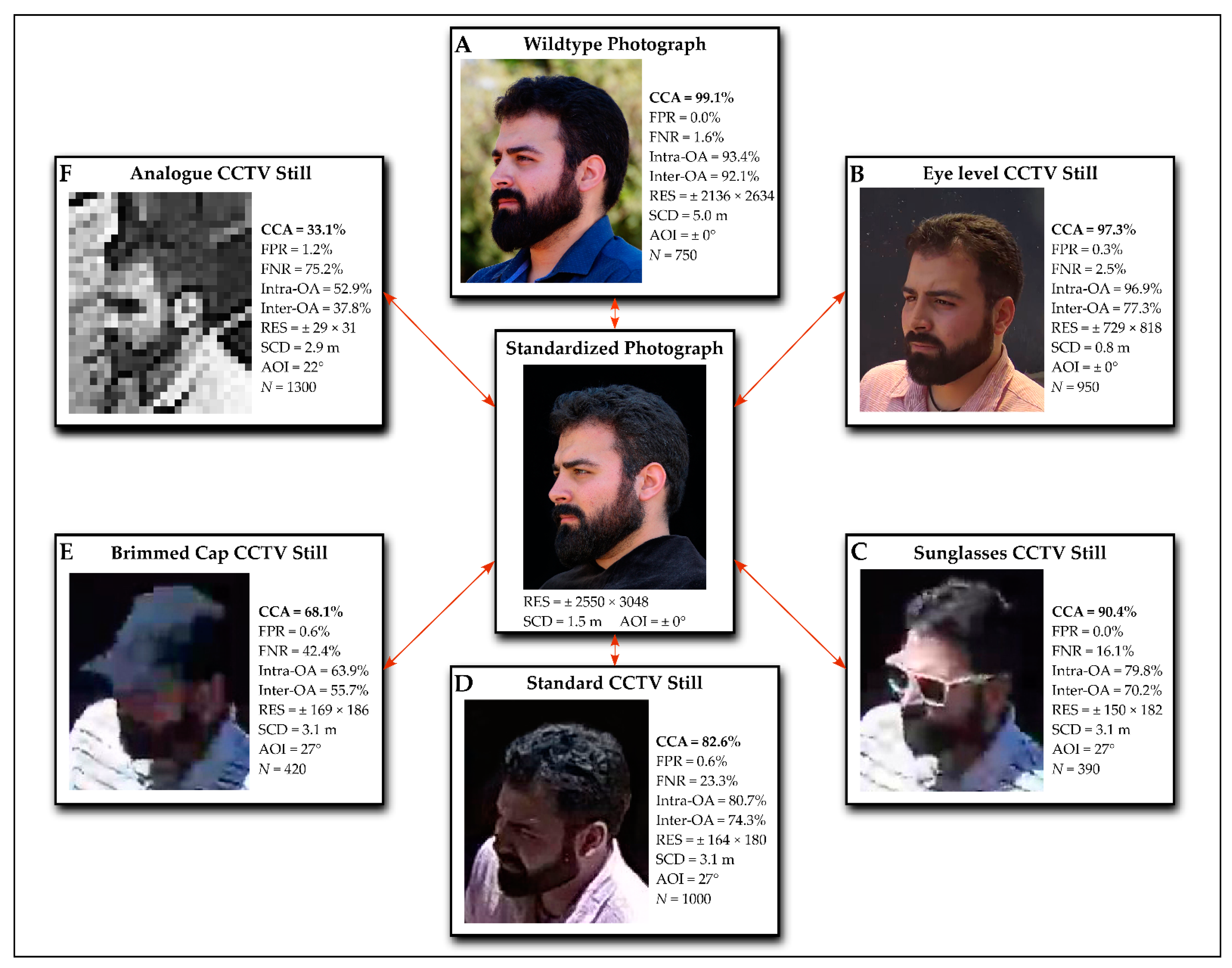

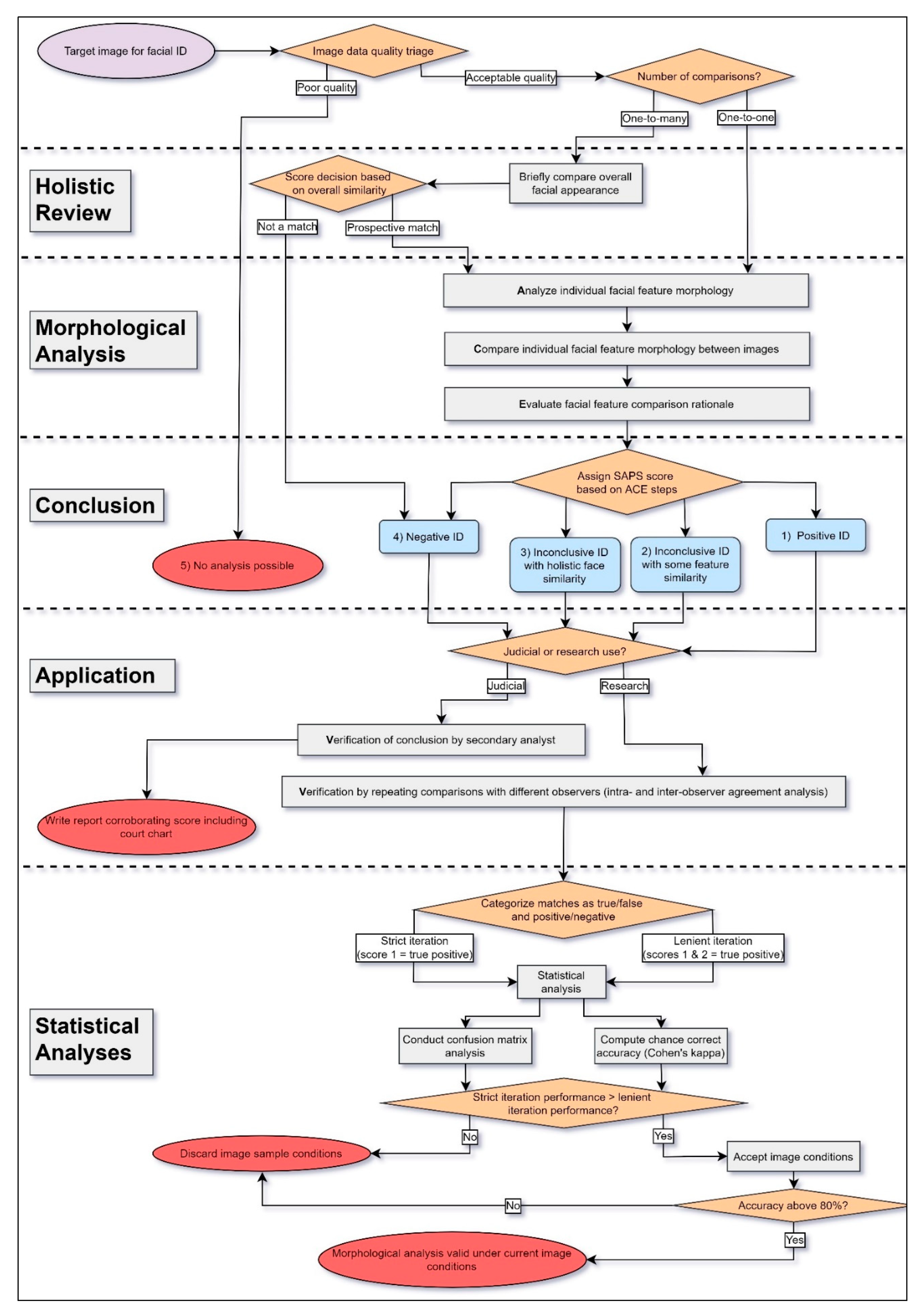

3. Outcomes of Validation Studies

4. Discussion

4.1. Influence of CCTV Installations

4.2. Feature List Usage, Disguises, and Training

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Jäger, J. Photography: A means of surveillance? Judicial photography, 1850 to 1900. Crime Hist. Sociétés 2001, 5, 27–51. [Google Scholar] [CrossRef] [Green Version]

- Bertillon, A.; McClaughry, R.W. Signaletic Instructions Including the Theory and Practice of Anthropometrical Identification; McClaughry, R.W., Ed.; The Werner Company: Chicago, IL, USA, 1896. [Google Scholar]

- Faigman, D.L. Anecdotal Forensics, Phrenology, and Other Abject Lessons from the History of Science. Hastings Law J. 2008, 59, 979–1000. [Google Scholar]

- Mokwena, R.J. The Value of Photography in the Investigation of Crime Scenes; University of South Africa: Pretoria, South Africa, 2012. [Google Scholar]

- Bell, A. Crime scene photography in England, 1895–1960. J. Br. Stud. 2018, 57, 53–78. [Google Scholar] [CrossRef] [Green Version]

- Lindegaard, M.R.; Bernasco, W. Lessons Learned from Crime Caught on Camera. J. Res. Crime Delinq. 2018, 55, 155–186. [Google Scholar] [CrossRef] [PubMed]

- Norris, C.; McCahill, M.; Wood, D. The Growth of CCTV: A global perspective on the international diffusion of video surveillance in publicly accessible space. Surveill. Soc. 2002, 2, 110–135. [Google Scholar] [CrossRef]

- Piza, E.L.; Welsh, B.C.; Farrington, D.P.; Thomas, A.L. CCTV surveillance for crime prevention: A 40-year systematic review with meta-analysis. Criminol. Public Policy 2019, 18, 135–159. [Google Scholar] [CrossRef] [Green Version]

- Jain, A.K.; Klare, B.; Park, U. Face Matching and Retrieval in Forensics Applications. IEEE Multimed. 2012, 19, 20. [Google Scholar] [CrossRef]

- Moyo, S. Evaluating the Use of CCTV Surveillance Systems for Crime Cotnrol and Prevention: Selected Case Studies from Johannesburg and Tshwane, Gauteng; University of South Africa: Pretoria, South Africa, 2019. [Google Scholar]

- Goold, B.; Loader, I.; Thumala, A. The banality of security: The curious case of surveillance cameras. Br. J. Criminol. 2013, 53, 977–996. [Google Scholar] [CrossRef]

- Duncan, J. How CCTV surveillance poses a threat to privacy in South Africa. Conversation 2018, 1–3. Available online: https://theconversation.com/how-cctv-surveillance-poses-a-threat-to-privacy-in-south-africa-97418 (accessed on 30 October 2021).

- Gill, M.; Spriggs, A. Assessing the Impact of CCTV: Home Office Research Study 292. 2005. Available online: https://techfak.uni-bielefeld.de/~iluetkeb/2006/surveillance/paper/social_effect/CCTV_report.pdf (accessed on 25 November 2021).

- Welsh, B.C.; Farrington, D.P. Effects of Closed Circuit Television Surveillance on Crime. Campbell Syst. Rev. 2008, 4, 1–73. [Google Scholar] [CrossRef]

- Ashby, M.P.J. The Value of CCTV Surveillance Cameras as an Investigative Tool: An Empirical Analysis. Eur. J. Crim. Policy Res. 2017, 23, 441–459. [Google Scholar] [CrossRef] [Green Version]

- Kleinberg, K.F.; Siebert, J.P. A study of quantitative comparisons of photographs and video images based on landmark derived feature vectors. Forensic Sci. Int. 2012, 219, 248–258. [Google Scholar] [CrossRef]

- Steyn, M.; Pretorius, M.; Briers, N.; Bacci, N.; Johnson, A.; Houlton, T.M.R. Forensic facial comparison in South Africa: State of the science. Forensic Sci. Int. 2018, 287, 190–194. [Google Scholar] [CrossRef]

- Jackson, A. The Admissibility of Identification Evidence Made on the Basis of Recognition from Photographs Taken at a Crime Scene. J. Crim. Law 2016, 80, 234–236. [Google Scholar] [CrossRef]

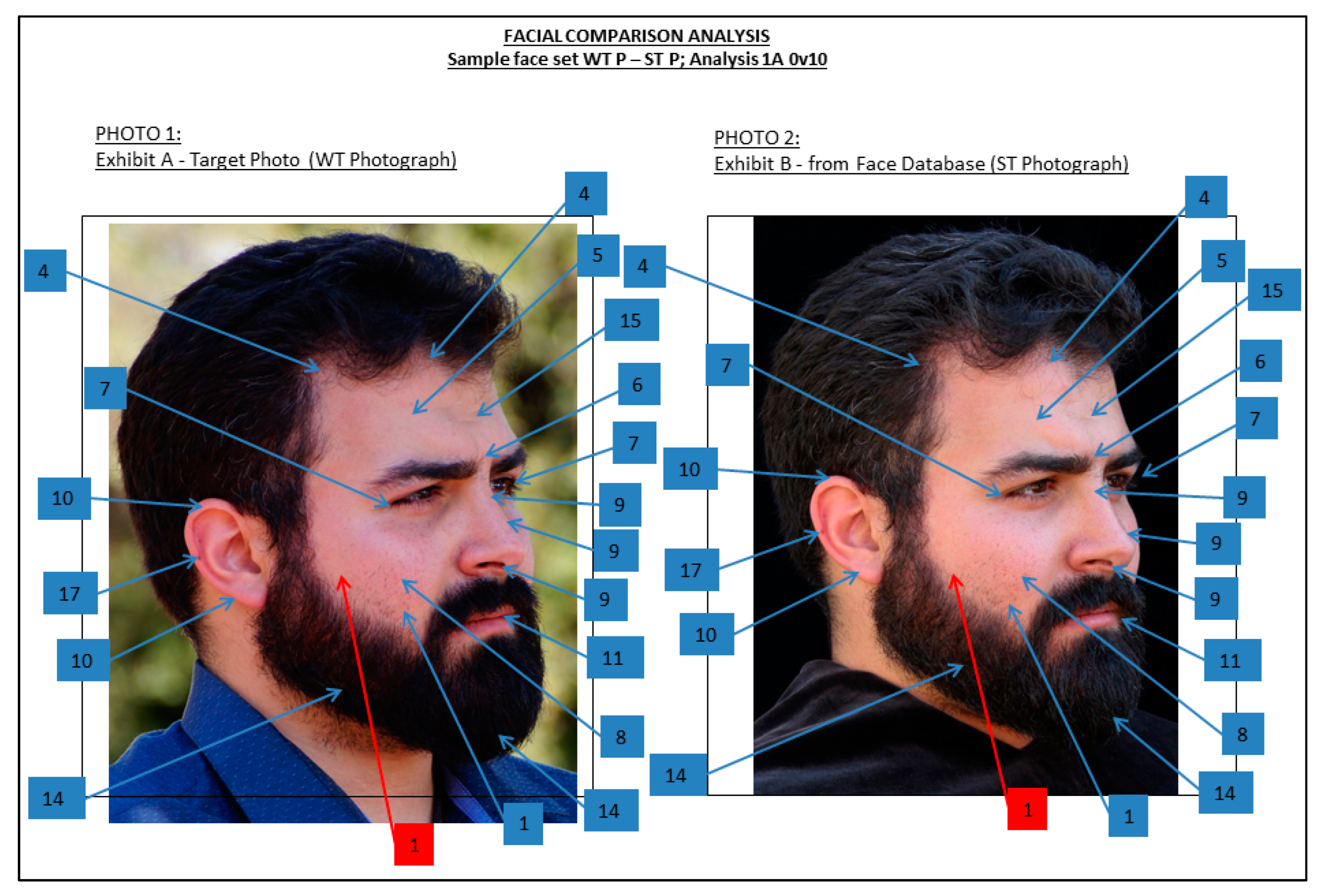

- Houlton, T.M.R.; Steyn, M. Finding Makhubu: A morphological forensic facial comparison. Forensic Sci. Int. 2018, 285, 13–20. [Google Scholar] [CrossRef]

- Stephan, C.N.; Caple, J.M.; Guyomarc’h, P.; Claes, P. An overview of the latest developments in facial imaging. Forensic Sci. Res. 2019, 4, 10–28. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Bacci, N.; Houlton, T.M.R.; Briers, N.; Steyn, M. Validation of forensic facial comparison by morphological analysis in photographic and CCTV samples. Int. J. Legal Med. 2021, 135, 1965–1981. [Google Scholar] [CrossRef] [PubMed]

- Schüler, G.; Obertová, Z. Visual identification of persons: Facial image comparison and morphological comparative analysis. In Statistics and Probability in Forensic Anthropology; Obertová, Z., Stewart, A., Cattaneo, C., Eds.; Elsevier Academic Press: London, UK, 2020; pp. 313–330. [Google Scholar]

- Behrman, B.W.; Davey, S.L. Eyewitness identification in actual criminal cases: An archival analysis. Law Hum. Behav. 2001, 25, 475–491. [Google Scholar] [CrossRef]

- Boyce, M.A.; Lindsay, D.S.; Brimacombe, C.A.E. Investigating investigators: Examining the impact of eyewitness identification evidence on student-investigators. Law Hum. Behav. 2008, 32, 439–453. [Google Scholar] [CrossRef] [PubMed]

- Davis, J.P.; Valentine, T.; Wilkinson, C. Facial image comparison. In Craniofacial Identification; Wilkinson, C., Rynn, C., Eds.; Cambridge University Press: New York, NY, USA, 2012; pp. 136–153. ISBN 9781139049566. [Google Scholar]

- Valentine, T.; Davis, J.P. Forensic Facial Identification: Theory and Practice of Identification from Eyewitnesses, Composites and CCTV; John Wiley & Sons, Ltd.: Chichester, UK, 2015; ISBN 9781118469538. [Google Scholar]

- Facial Identification Scientific Working Group. Facial Comparison Overview and Methodology Guidelines. 2019. Available online: https://fiswg.org/fiswg_facial_comparison_overview_and_methodology_guidelines_V1.0_20191025.pdf (accessed on 25 November 2021).

- Adjabi, I.; Ouahabi, A.; Benzaoui, A.; Taleb-Ahmed, A. Past, present, and future of face recognition: A review. Electronics 2020, 9, 1188. [Google Scholar] [CrossRef]

- Akhtar, Z.; Rattani, A. A Face in any Form: New Challenges and Opportunities for Face Recognition Technology. Computer 2017, 50, 80–90. [Google Scholar] [CrossRef]

- Lai, X.; Patrick Rau, P.L. Has facial recognition technology been misused? A user perception model of facial recognition scenarios. Comput. Hum. Behav. 2021, 124, 106894. [Google Scholar] [CrossRef]

- Kaur, P.; Krishan, K.; Sharma, S.K.; Kanchan, T. Facial-recognition algorithms: A literature review. Med. Sci. Law 2020, 60, 131–139. [Google Scholar] [CrossRef] [PubMed]

- Grother, P.; Ngan, M.; Hanaoka, K. Face Recognition Vendor Test (FRVT) Part 2: Identification; US Department of Commerce, National Institute of Standards & Technology: Gaithersburg, MD, USA, 2019.

- Dodd, V. UK Police Use of Facial Recognition Technology a Failure, Says Report. The Guardian, 15 May 2018. [Google Scholar]

- Grother, P.; Ngan, M.; Hanaoka, K. Face Recognition Vendor Test Part 3: Demographic Effects; US Department of Commerce, National Institute of Standards & Technology: Gaithersburg, MD, USA, December 2019.

- White, D.; Jonathon Phillips, P.; Hahn, C.A.; Hill, M.; O’Toole, A.J. Perceptual expertise in forensic facial image comparison. Proc. R. Soc. B Biol. Sci. 2015, 282, 20151292. [Google Scholar] [CrossRef] [Green Version]

- Davis, J.P.; Valentine, T.; Davis, R.E. Computer assisted photo-anthropometric analyses of full-face and profile facial images. Forensic Sci. Int. 2010, 200, 165–176. [Google Scholar] [CrossRef] [PubMed]

- Urbanová, P. Performance of distance-based matching algorithms in 3D facial identification. Egypt. J. Forensic Sci. 2016, 6, 135–151. [Google Scholar] [CrossRef] [Green Version]

- Press Association. Welsh Police Wrongly Identify Thousands as Potential Criminals. The Guardian, 5 May 2018. [Google Scholar]

- Mordor Intelligence. Global Facial Recognition Market (2021–2026). 2020. Available online: https://www.mordorintelligence.com/industry-reports/facial-recognition-market (accessed on 30 October 2021).

- Spaun, N.A. Facial comparisons by subject matter experts: Their role in biometrics and their training. Int. Conf. Biom. 2009, 5558, 161–168. [Google Scholar] [CrossRef] [Green Version]

- Bacci, N.; Davimes, J.; Steyn, M.; Briers, N. Development of the Wits Face Database: An African database of high-resolution facial photographs and multimodal closed-circuit television (CCTV) recordings. F1000Research 2021, 10, 131. [Google Scholar] [CrossRef]

- Wilkinson, C.; Evans, R. Are facial image analysis experts any better than the general public at identifying individuals from CCTV images? Sci. Justice 2009, 49, 191–196. [Google Scholar] [CrossRef]

- Valentine, T.; Davis, J.P. Forensic Facial Identification; John Wiley & Sons: Hoboken, NJ, USA, 2015; ISBN 9781118469118. [Google Scholar]

- White, D.; Dunn, J.D.; Schmid, A.C.; Kemp, R.I. Error Rates in Users of Automatic Face Recognition Software. PLoS ONE 2015, 10, e0139827. [Google Scholar] [CrossRef] [Green Version]

- Speckeis, C. Can ACE-V be validated? J. Forensic Identif. 2011, 61, 201–209. [Google Scholar]

- Norris, C. The success of failure. Accounting for the global growth of CCTV. In Routledge Handbook of Surveillance Studies; Ball, K., Haggerty, K.D., Lyon, D., Eds.; Routledge: London, UK; New York, NY, USA, 2012; pp. 251–258. [Google Scholar]

- Facial Identification Scientific Working Group. Facial Image Comparison Feature List for Morphological Analysis. 2018. Available online: https://fiswg.org/FISWG_Morph_Analysis_Feature_List_v2.0_20180911.pdf (accessed on 25 November 2021).

- Bacci, N.; Steyn, M.; Briers, N. Performance of forensic facial comparison by morphological analysis across optimal and suboptimal CCTV settings. Sci. Justice 2021, 61, 743–754. [Google Scholar] [CrossRef]

- Bacci, N.; Briers, N.; Steyn, M. Assessing the effect of facial disguises on forensic facial comparison by morphological analysis. J. Forensic Sci. 2021, 66, 1220–1233. [Google Scholar] [CrossRef]

- Martinez, A.M.; Benavente, R. The AR Face Database CVC Technical Report #24; Universitat Autònoma de Barcelona: Bellaterra, Spain, 1998; Volume 24. [Google Scholar]

- Phillips, P.J.; Moon, H.; Rizvi, S.A.; Rauss, P.J. The FERET evaluation methodology for face-recognition algorithms. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1090–1104. [Google Scholar] [CrossRef]

- Sim, T.; Baker, S.; Bsat, M. The CMU Pose, Illumination, and Expression (PIE) database. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 1615–1618. [Google Scholar]

- Calvo, M.G.; Lundqvist, D. Facial expressions of emotion (KDEF): Identification under different display-duration conditions. Behav. Res. Methods 2008, 40, 109–115. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Gao, W.; Cao, B.; Shan, S.; Chen, X.; Zhou, D.; Zhang, X.; Zhao, D. The CAS-PEAL large-scale chinese face database and baseline evaluations. IEEE Trans. Syst. Man Cybern. 2008, 38, 149–161. [Google Scholar]

- Milborrow, S.; Morkel, J.; Nicolls, F. The MUCT Landmarked Face Database. Pattern Recognit. Assoc. S. Afr. 2008. Available online: http://www.milbo.org/muct/ (accessed on 25 November 2021).

- Tottenham, N.; Tanaka, J.W.; Leon, A.C.; McCarry, T.; Nurse, M.; Hare, T.A.; Marcus, D.J.; Westerlund, A.; Casey, B.J.; Nelson, C. The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Res. 2009, 168, 242–249. [Google Scholar] [CrossRef] [Green Version]

- Grgic, M.; Delac, K.; Grgic, S. SCface—Surveillance cameras face database. Multimed. Tools Appl. 2011, 51, 863–879. [Google Scholar] [CrossRef]

- Kostinger, M.; Wohlhart, P.; Roth, P.M.; Bischof, H. Annotated Facial Landmarks in the Wild: A large-scale, real-world database for facial landmark localization. In Proceedings of the 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops), Barcelona, Spain, 6–13 November 2011; IEEE: Barcelona, Spain, 2011; pp. 2144–2151. [Google Scholar]

- Sagonas, C.; Antonakos, E.; Tzimiropoulos, G.; Zafeiriou, S.; Pantic, M. 300 Faces In-The-Wild Challenge: Database and results. Image Vis. Comput. 2015, 47, 3–18. [Google Scholar] [CrossRef] [Green Version]

- Karam, L.J.; Zhu, T. Quality labeled faces in the wild (QLFW): A database for studying face recognition in real-world environments. Hum. Vis. Electron. Imaging XX 2015, 9394, 93940. [Google Scholar] [CrossRef]

- Ma, D.S.; Correll, J.; Wittenbrink, B. The Chicago face database: A free stimulus set of faces and norming data. Behav. Res. Methods 2015, 47, 1122–1135. [Google Scholar] [CrossRef] [Green Version]

- Belhumeur, P.N.; Kriegman, D.J. Eigenfaces vs. Fisherfaces: Recognition Using Class Specific Linear Projection. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 711–720. [Google Scholar] [CrossRef] [Green Version]

- Georghiades, A.S.; Belhumeur, P.N.; Kriegman, D.J. From few to many: Illumination cone models for face recognition under variable lighting and pose. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 643–660. [Google Scholar] [CrossRef] [Green Version]

- Huang, G.B.; Ramesh, M.; Berg, T.; Learned-Miller, E. Labeled Faces in the Wild: A Database for Studying Face Recognition in Unconstrained Environments; University of Massachusetts: Amherst, MA, USA, 2007; Volume October, pp. 1–11. [Google Scholar]

- Gross, R. Face Databases. In Handbook Face Recognition; Springer: New York, NY, USA, 2005; pp. 301–327. [Google Scholar] [CrossRef]

- Shapiro, P.N.; Penrod, S. Meta-analysis of facial identification studies. Psychol. Bull. 1986, 100, 139–156. [Google Scholar] [CrossRef]

- Bothwell, R.K.; Brigham, J.C.; Malpass, R.S. Cross-Racial Identification. Pers. Soc. Psychol. Bull. 1989, 15, 19–25. [Google Scholar] [CrossRef]

- Anthony, T.; Copper, C.; Mullen, B. Cross-Racial Facial Identification: A Social Cognitive Integration. Pers. Soc. Psychol. Bull. 1992, 18, 296–301. [Google Scholar] [CrossRef]

- Meissner, C.A.; Brigham, J.C. Thirty years of investigating the own-race bias in memory for faces: A meta-analytic review. Psychol. Public Policy Law 2001, 7, 3–35. [Google Scholar] [CrossRef]

- Chiroro, P.M.; Tredoux, C.G.; Radaelli, S.; Meissner, C.A. Recognizing faces across continents: The effect of within-race variations on the own-race bias in face recognition. Psychon. Bull. Rev. 2008, 15, 1089–1092. [Google Scholar] [CrossRef] [PubMed]

- Kokje, E.; Bindemann, M.; Megreya, A.M. Cross-race correlations in the abilities to match unfamiliar faces. Acta Psychol. 2018, 185, 13–21. [Google Scholar] [CrossRef] [Green Version]

- Bacci, N.; Davimes, J.; Steyn, M.; Briers, N. Wits Face Database. Available online: https://hdl.handle.net/10539/29924 (accessed on 30 October 2021).

- Bromby, M. CCTV and Expert Evidence: Addressing the Reliability of New Sciences. Archbold News, 2 November 2006; 6–9. [Google Scholar]

- Kuhn, M. Caret: Classification and Regression Training R Package. 2020. Available online: https://cran.r-project.org/web/packages/caret/index.html (accessed on 30 October 2021).

- Ngan, M.; Grother, P.; Hanaoka, K. Ongoing Face Recognition Vendor Test (FRVT) Part 6A: Face Recognition Accuracy with Masks Using Pre-COVID-19 Algorithms; US Department of Commerce, National Institute of Standards & Technology: Gaithersburg, MD, USA, 2020.

- Ngan, M.; Grother, P.; Hanaoka, K. Ongoing Face Recognition Vendor Test (FRVT) Part 6B: Face Recognition Accuracy with Face Masks Using Post-COVID-19 Algorithms; US Department of Commerce, National Institute of Standards & Technology: Gaithersburg, MD, USA, 2020.

- Davis, J.P.; Valentine, T. CCTV on trial: Matching video images with the defendant in the dock. Appl. Cogn. Psychol. 2009, 23, 482–505. [Google Scholar] [CrossRef]

- Bruce, V.; Henderson, Z.; Greenwood, K.; Hancock, P.J.B.; Burton, A.M.; Miller, P. Verification of face identities from images captured on video. J. Exp. Psychol. Appl. 1999, 5, 339–360. [Google Scholar] [CrossRef]

- Kemp, R.I.; Caon, A.; Howard, M.; Brooks, K.R. Improving Unfamiliar Face Matching by Masking the External Facial Features. Appl. Cogn. Psychol. 2016, 30, 622–627. [Google Scholar] [CrossRef]

- Terry, R.L. How wearing eyeglasses affects facial recognition. Curr. Psychol. 1993, 12, 151–162. [Google Scholar] [CrossRef]

- Henderson, Z.; Bruce, V.; Burton, A.M. Matching the faces of robbers captured on video. Appl. Cogn. Psychol. 2001, 15, 445–464. [Google Scholar] [CrossRef]

- Lee, W.-L.; Wilkinson, C.; Memon, A.; Houston, K. Matching unfamiliar faces from poor quality closed-circuit television (CCTV) footage: An evaluation of the effect of training on facial identification ability. Axis Online J. CAHId 2009, 1, 19–28. [Google Scholar]

- Righi, G.; Peissig, J.J.; Tarr, M.J. Recognizing disguised faces. Vis. Cogn. 2012, 20, 143–169. [Google Scholar] [CrossRef]

- Towler, A.; White, D.; Kemp, R.I. Evaluating the feature comparison strategy for forensic face identification. J. Exp. Psychol. Appl. 2017, 23, 47–58. [Google Scholar] [CrossRef] [PubMed]

- Megreya, A.M.; Bindemann, M. Feature instructions improve face-matching accuracy. PLoS ONE 2018, 13, e0193455. [Google Scholar] [CrossRef] [PubMed]

- Megreya, A.M. Feature-by-feature comparison and holistic processing in unfamiliar face matching. PeerJ 2018, 6, e4437. [Google Scholar] [CrossRef] [Green Version]

- Goold, B.J. Open to all? Regulating open street CCTV and the case for “symmetrical surveillance”. Crim. Justice Ethics 2006, 25, 3–17. [Google Scholar] [CrossRef]

- Welsh, B.C.; Farrington, D.P. Public area CCTV and crime prevention: An updated systematic review and meta-analysis. Justice Q. 2009, 26, 716–745. [Google Scholar] [CrossRef]

- Kleinberg, K.F.; Vanezis, P.; Burton, A.M. Failure of anthropometry as a facial identification technique using high-quality photographs. J. Forensic Sci. 2007, 52, 779–783. [Google Scholar] [CrossRef] [PubMed]

- Keval, H.U.; Sasse, M.A. Can we ID from CCTV? Image quality in digital CCTV and face identification performance. Mob. Multimedia Image Process. Secur. Appl. 2008, 6982, 69820. [Google Scholar] [CrossRef] [Green Version]

- Smith, R.A.; MacLennan-Brown, K.; Tighe, J.F.; Cohen, N.; Triantaphillidou, S.; MacDonald, L.W. Colour analysis and verification of CCTV images under different lighting conditions. Image Qual. Syst. Perform. V 2008, 6808, 68080. [Google Scholar] [CrossRef] [Green Version]

- Bindemann, M.; Attard, J.; Leach, A.; Johnston, R.A. The effect of image pixelation on unfamiliar-face matching. Appl. Cogn. Psychol. 2013, 27, 707–717. [Google Scholar] [CrossRef] [Green Version]

- Burton, A.M.; Wilson, S.; Cowan, M.; Bruce, V. Face recognition in poor-quality video: Evidence from security surveillance. Psychol. Sci. 1999, 10, 243–248. [Google Scholar] [CrossRef]

- Ritchie, K.L.; White, D.; Kramer, R.S.S.; Noyes, E.; Jenkins, R.; Burton, A.M. Enhancing CCTV: Averages improve face identification from poor-quality images. Appl. Cogn. Psychol. 2018, 32, 671–680. [Google Scholar] [CrossRef] [Green Version]

- Fysh, M.C.; Bindemann, M. The Kent Face Matching Test. Br. J. Psychol. 2018, 109, 219–231. [Google Scholar] [CrossRef] [Green Version]

- Kramer, R.S.S.; Mohamed, S.; Hardy, S.C. Unfamiliar Face Matching With Driving Licence and Passport Photographs. Perception 2019, 48, 175–184. [Google Scholar] [CrossRef] [PubMed]

- Burton, A.M.; White, D.; McNeill, A. The Glasgow Face Matching Test. Behav. Res. Methods 2010, 42, 286–291. [Google Scholar] [CrossRef] [Green Version]

- Bindemann, M.; Attard, J.; Johnston, R.A. Perceived ability and actual recognition accuracy for unfamiliar and famous faces. Cogent Psychol. 2014, 1, 986903. [Google Scholar] [CrossRef]

- Damjanovski, V. CCTV from Light to Pixels, 3rd ed.; Elsevier: Oxford, UK, 2014; ISBN 9780124045576. [Google Scholar]

- Ward, D. Testing Camera Height vs. Image Quality; Pennsylvania, USA. 2013. Available online: https://ipvm.com/reports/testing-camera-height (accessed on 25 November 2021).

- Mileva, M.; Burton, A.M. Face search in CCTV surveillance. Cogn. Res. Princ. Implic. 2019, 4, 1–21. [Google Scholar] [CrossRef]

- Abudarham, N.; Yovel, G. Reverse engineering the face space: Discovering the critical features for face identification. J. Vis. 2016, 16, 40. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Stephan, C.N. Perspective distortion in craniofacial superimposition: Logarithmic decay curves mapped mathematically and by practical experiment. Forensic Sci. Int. 2015, 257, 520.e1–520.e8. [Google Scholar] [CrossRef] [Green Version]

- Stephan, C.N.; Armstrong, B. Scientific estimation of the subject-to-camera distance from facial photographs for craniofacial superimposition. Forensic Sci. Int. Rep. 2021, 4, 100238. [Google Scholar] [CrossRef]

- British Security Industry. Video Surveillance Systems for Use in Security Applications: System Requirements—General. 2014. Available online: https://www.bsia.co.uk/publications/video-surveillance/ (accessed on 30 October 2021).

- Cohen, N.; Gattuso, J.; MacLennan-Brown, K. CCTV Operational Requirements Manual; Cohen, N., Gattuso, J., MacLennan-Brown, K., Eds.; Home Office Scientific Development Branch: Sandridge, UK, 2009; ISBN 9781847269027. [Google Scholar]

- Surette, R. The thinking eye: Pros and cons of second generation CCTV surveillance systems. Policing 2005, 28, 152–173. [Google Scholar] [CrossRef]

- Kruegle, H. CCTV Surveillance: Analog and Digital Video Practices and Technology, 2nd ed.; Elsevier Butterworth–Heinemann: Burlington, MA, USA, 2007; ISBN 9780750677684. [Google Scholar]

- Blunden, B. Anti-Forensics: The Rootkit Connection. In Proceedings of the Black Hat USA 2009, Las Vegas, NV, USA, 25–30 July 2009; pp. 1–44. [Google Scholar]

- D’Orazio, C.; Ariffin, A.; Choo, K.-K.R. IOS Anti-Forensics: How Can We Securely Conceal, Delete and Insert Data? In Proceedings of the 47th Hawaii International Conference on System Sciences, Waikoloa, HI, USA, 13 October 2013; pp. 6–9. Available online: https://ssrn.com/abstract=2339819 (accessed on 30 October 2021).

- Kissel, R.; Regenscheid, A.; Scholl, M.; Stine, K. Guidelines for Media Sanitization; US Department of Commerce, National Institute of Standards and Technology: Gaithersburg, MD, USA, 2014; Volume 800.

- Ariffin, A.; Choo, K.K.; Yunos, Z. Forensic readiness: A case study on digital CCTV systems antiforensics. In Contemporary Digital Forensic Investigations of Cloud and Mobile Applications; Choo, K.-K.R., Dehghantanha, A., Eds.; Syngress: London, UK, 2017; pp. 147–162. [Google Scholar]

- Gibelli, D.; Obertová, Z.; Ritz-Timme, S.; Gabriel, P.; Arent, T.; Ratnayake, M.; De Angelis, D.; Cattaneo, C. The identification of living persons on images: A literature review. Leg. Med. 2016, 19, 52–60. [Google Scholar] [CrossRef]

- ENFSI. Best Practice Manual for Facial Image Comparison; ENFSI: Wiesbaden, Germany, 2018; Volume 1, Available online: https://enfsi.eu/wp-content/uploads/2017/06/ENFSI-BPM-DI-01.pdf (accessed on 30 October 2021).

- Smith, S. CCTV Market Outlook 2017. Cision PR Newswire, 15 May 2014. [Google Scholar]

- Wood, L. CCTV Cameras—Worldwide Market Outlook Report 2018–2026: Dome Cameras Dominate. Businesswire 2018. Available online: https://www.businesswire.com/news/home/20180913005519/en/CCTV-Cameras---Worldwide-Market-Outlook-Report-2018-2026-Dome-Cameras-Dominate---ResearchAndMarkets.com (accessed on 30 October 2021).

- Yip, A.W.; Sinha, P. Contribution of color to face recognition. Perception 2002, 31, 995–1003. [Google Scholar] [CrossRef]

- Bindemann, M.; Burton, A.M. The role of color in human face detection. Cogn. Sci. 2009, 33, 1144–1156. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Hunt, R.W.G. The Reproduction of Colour, 5th ed.; Fountain Press: London, UK, 1995. [Google Scholar]

- MacDonald, L.W. Fatal Flaws: Uncertainty in the Interpretation of Colour in CCTV Images. Ann. Br. Mach. Vis. Assoc. 2007, 7, 1–11. [Google Scholar]

- Bachmann, T. Identification of spatially quantised tachistoscopic images of faces: How many pixels does it take to carry identity? Eur. J. Cogn. Psychol. 1991, 3, 87–103. [Google Scholar] [CrossRef]

- Bruce, V.; Henderson, Z.; Newman, C.; Burton, A.M. Matching identities of familiar and unfamiliar faces caught on CCTV images. J. Exp. Psychol. Appl. 2001, 7, 207–218. [Google Scholar] [CrossRef]

- Costen, N.P.; Parker, D.M.; Craw, I. Spatial Content and Spatial Quantisation Effects in Face Recognition. Perception 1994, 23, 129–146. [Google Scholar] [CrossRef] [PubMed]

- Costen, N.P.; Parker, D.M.; Craw, I. Effects of high-pass and low-pass spatial filtering on face identification. Percept. Psychophys. 1996, 58, 602–612. [Google Scholar] [CrossRef] [PubMed]

- Viték, S.; Klíma, M.; Krasula, L. Video compression technique impact on efficiency of person identification in CCTV systems. In Proceedings of the Proceedings—International Carnahan Conference on Security Technology, Rome, Italy, 13–16 October 2014. [Google Scholar]

- Utochkin, S. The Principles of CCTV Design in VideoCAD Interframe Compression. 2011. Available online: https://cctvcad.com/Files/the_principles_of_cctv_design_in_videocad_part5.pdf (accessed on 30 October 2021).

- Stephan, C.N. Estimating the Skull-to-Camera Distance from Facial Photographs for Craniofacial Superimposition. J. Forensic Sci. 2017, 62, 850–860. [Google Scholar] [CrossRef]

- Klima, M.; Fliegel, K. Image compression techniques in the field of security technology: Examples and discussion. In Proceedings of the 38th Annual 2004 International Carnahan Conference on Security Technology, Albuquerque, NM, USA, 11–14 October 2004; IEEE: Albuquerque, NM, USA, 2014; pp. 278–284. [Google Scholar]

- Qi, X.; Liu, C. Mitigate compression artifacts for face in video recognition. In Proceedings of the Disruptive Technologies in Information Sciences IV, online. 27 April–8 May 2020; Blowers, M., Hall, R.D., Dasari, V.R., Eds.; SPIE: Bellingham, WA, USA, 2020; p. 25. [Google Scholar]

- Ghazali, N.N.A.N.; Zamani, N.A.; Abdullah, S.N.H.S.; Jameson, J. Super resolution combiantion methods for CCTV forensic interpretation. In Proceedings of the 12th International Conference on Intelligent Systems Design and Applications, Kochi, India, 27–29 November 2012; pp. 853–858. [Google Scholar]

- Stevenage, S.V.; Pitfield, C. Fact or friction: Examination of the transparency, reliability and sufficiency of the ACE-V method of fingerprint analysis. Forensic Sci. Int. 2016, 267, 145–156. [Google Scholar] [CrossRef] [Green Version]

- Norell, K.; Läthén, K.B.; Bergström, P.; Rice, A.; Natu, V.; O’Toole, A. The Effect of Image Quality and Forensic Expertise in Facial Image Comparisons. J. Forensic Sci. 2015, 60, 331–340. [Google Scholar] [CrossRef] [PubMed]

- FISWG. Guide for Role-Based Training in Facial Comparison; FISWG: Washington, DC, USA, 2020; Available online: https://www.fiswg.org/fiswg_guide_for_role-based_training_in_facial_comparison_v1.0_20200717.pdf (accessed on 30 October 2021).

- Towler, A.; Kemp, R.I.; Mike Burton, A.; Dunn, J.D.; Wayne, T.; Moreton, R.; White, D. Do professional facial image comparison training courses work? PLoS ONE 2019, 14, e0211037. [Google Scholar] [CrossRef] [PubMed]

- Davis, J.P.; Tamonyte, D. Masters of disguise: Super-recognisers’ superior memory for concealed unfamiliar faces. In Proceedings of the 2017 Seventh International Conference on Emerging Security Technologies (EST), Canterbury, UK, 6–8 September 2017; IEEE: Canterbury, UK, 2017; pp. 44–49. [Google Scholar]

- Russell, R.; Duchaine, B.; Nakayama, K. Super-recognizers: People with extraordinary face recognition ability. Psychon. Bull. Rev. 2009, 16, 252–257. [Google Scholar] [CrossRef] [PubMed]

| Database Cohort Organization | Unique Individuals | Photographs | Corresponding CCTV Recordings | Data Loss (%) |
|---|---|---|---|---|
| ST CCTV—ST Photographs | 98 | 980 | 89 | 9.2 |
| Eye-level CCTV—ST Photographs | 108 | 1080 | 76 | 29.6 |
| ST CCTV with Cap—ST Photographs | 45 | 450 | 34 | 24.4 |
| ST CCTV with Cap—ST Photographs | 41 | 410 | 31 | 24.4 |
| Total IP CCTV Data | 292 | 2920 | 230 | 21.2 |
| Analogue CCTV—ST Photographs | 111 | 1110 | 107 | 3.6 |
| CCTV Grand Totals | 403 | 4030 | 337 | 16.4 |
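The Data Loss (%) column appears to be the share of unique individuals in each cohort who have no corresponding CCTV recording. The source does not state this definition, so it is an assumption, but the minimal Python sketch below reproduces every published figure under it. The cohort labels are shortened for illustration, and the function names `data_loss_percent` and `totals` are hypothetical.

```python
# Figures copied from the table above: (unique individuals, CCTV recordings).
# Labels are shortened; the two "with cap" rows are kept separate as published.
IP_COHORTS = {
    "ST CCTV": (98, 89),
    "Eye-level CCTV": (108, 76),
    "ST CCTV with cap (first row)": (45, 34),
    "ST CCTV with cap (second row)": (41, 31),
}
ANALOGUE_COHORT = (111, 107)


def data_loss_percent(individuals: int, recordings: int) -> float:
    """Percentage of individuals without a recording (assumed definition)."""
    return round(100 * (individuals - recordings) / individuals, 1)


def totals(rows):
    """Sum individuals and recordings over an iterable of (n, r) pairs."""
    rows = list(rows)
    return sum(n for n, _ in rows), sum(r for _, r in rows)


# Per-cohort data loss.
for label, (n, r) in IP_COHORTS.items():
    print(f"{label}: {data_loss_percent(n, r)}%")

# Subtotal for the IP cohorts and the grand total including analogue CCTV.
ip_total = totals(IP_COHORTS.values())
grand_total = totals(list(IP_COHORTS.values()) + [ANALOGUE_COHORT])
print("Total IP:", ip_total, data_loss_percent(*ip_total))
print("Grand total:", grand_total, data_loss_percent(*grand_total))
```

Under this assumed definition, the subtotal and grand-total rows follow from summing the cohort counts first and then taking the percentage, not from averaging the per-cohort percentages.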

| General Limitations | Specific Limitations | Effects |
|---|---|---|
| Camera placement | | |
| Camera specifications | | |
| Lighting conditions | | |
| Image quality | | |
| Data loss and corruption | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bacci, N.; Davimes, J.G.; Steyn, M.; Briers, N. Forensic Facial Comparison: Current Status, Limitations, and Future Directions. Biology 2021, 10, 1269. https://doi.org/10.3390/biology10121269
