A Citizen Science Tool Based on an Energy Autonomous Embedded System with Environmental Sensors and Hyperspectral Imaging

Abstract

1. Introduction

2. Literature Review

2.1. Embedded Systems for Smart Agriculture

2.2. Spectral Imaging in Agriculture

2.3. Artificial Intelligence in Agriculture

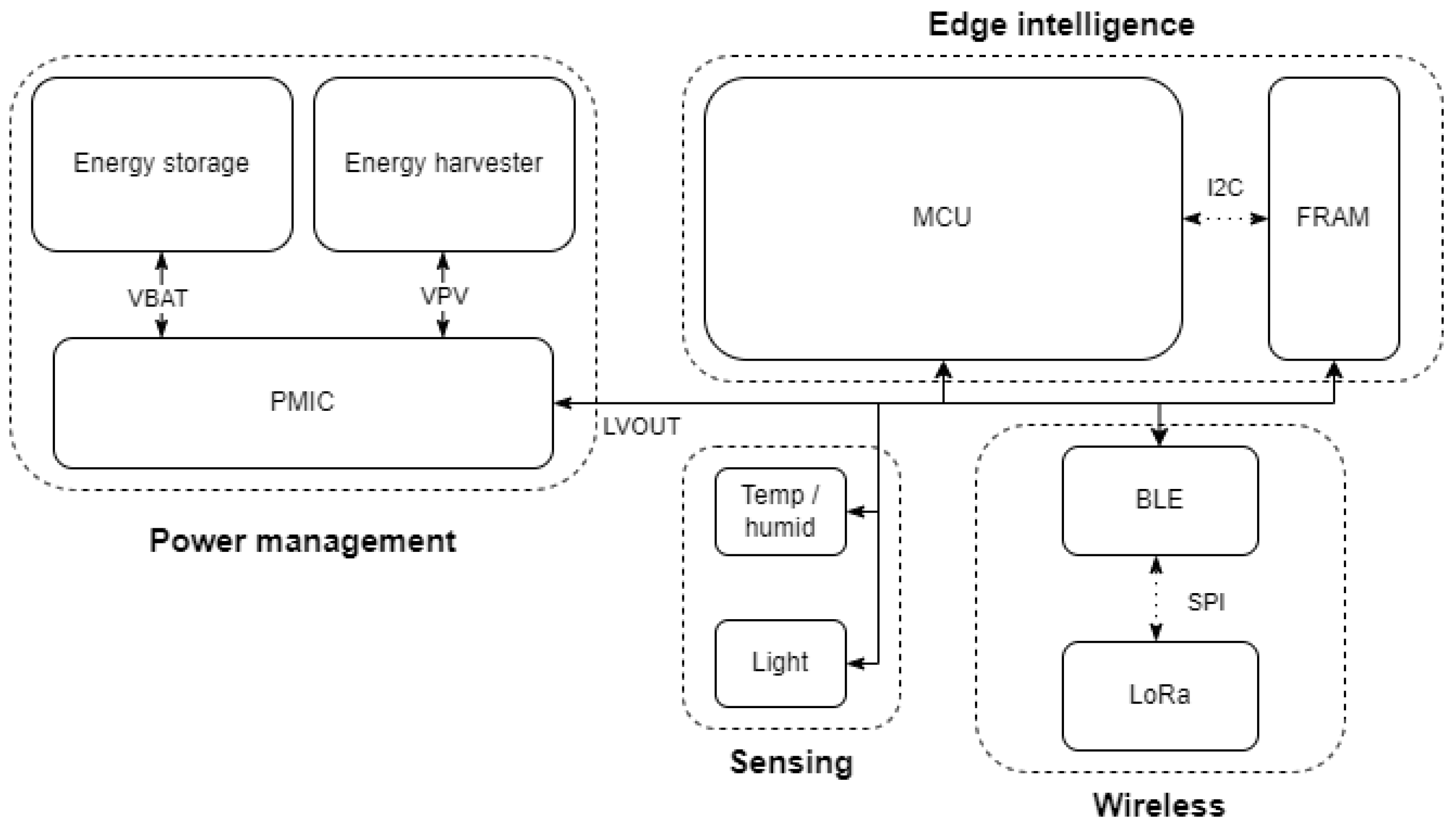

3. System Architecture

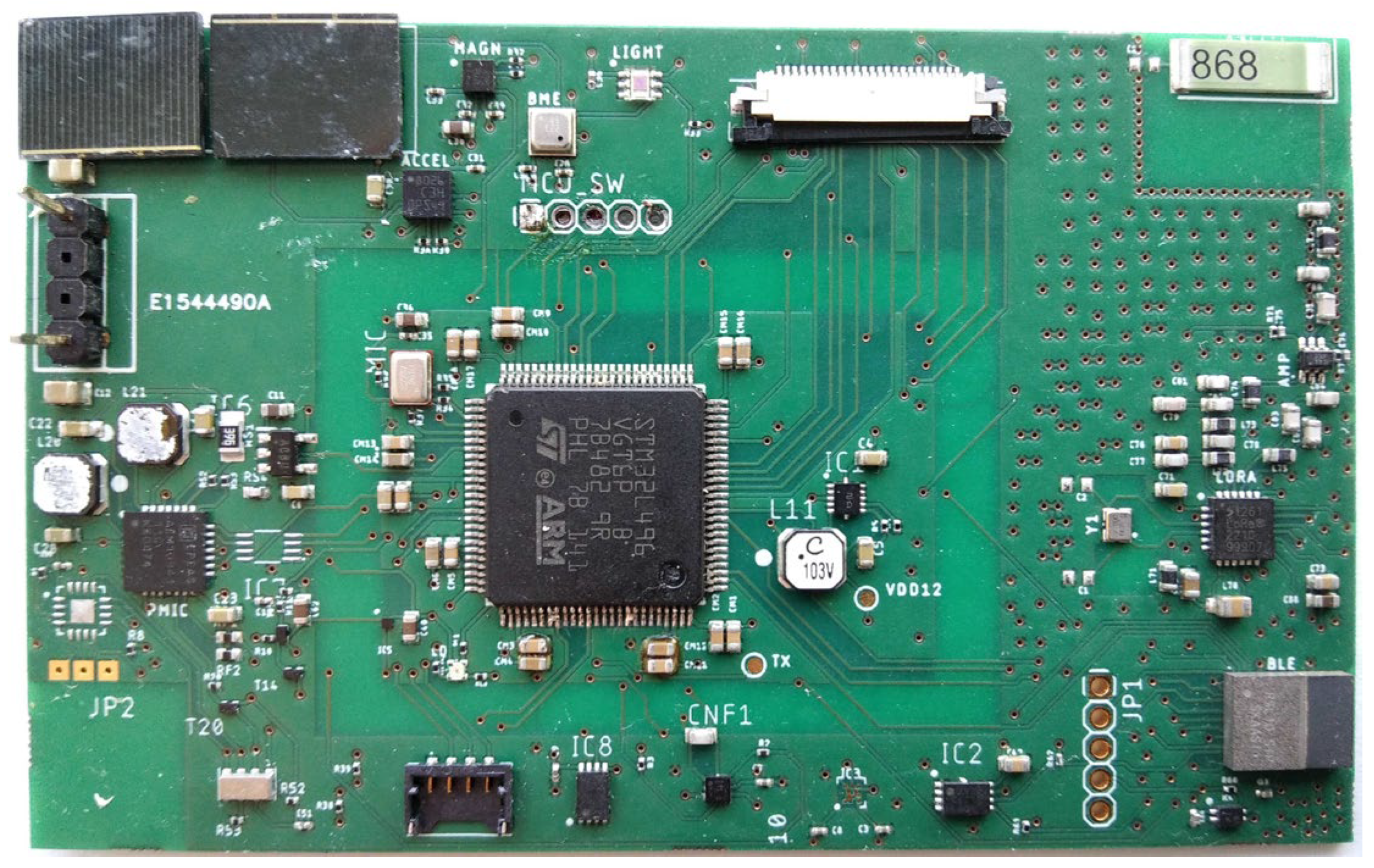

3.1. Hardware and Internet of Things Sensors

3.1.1. System Specifications

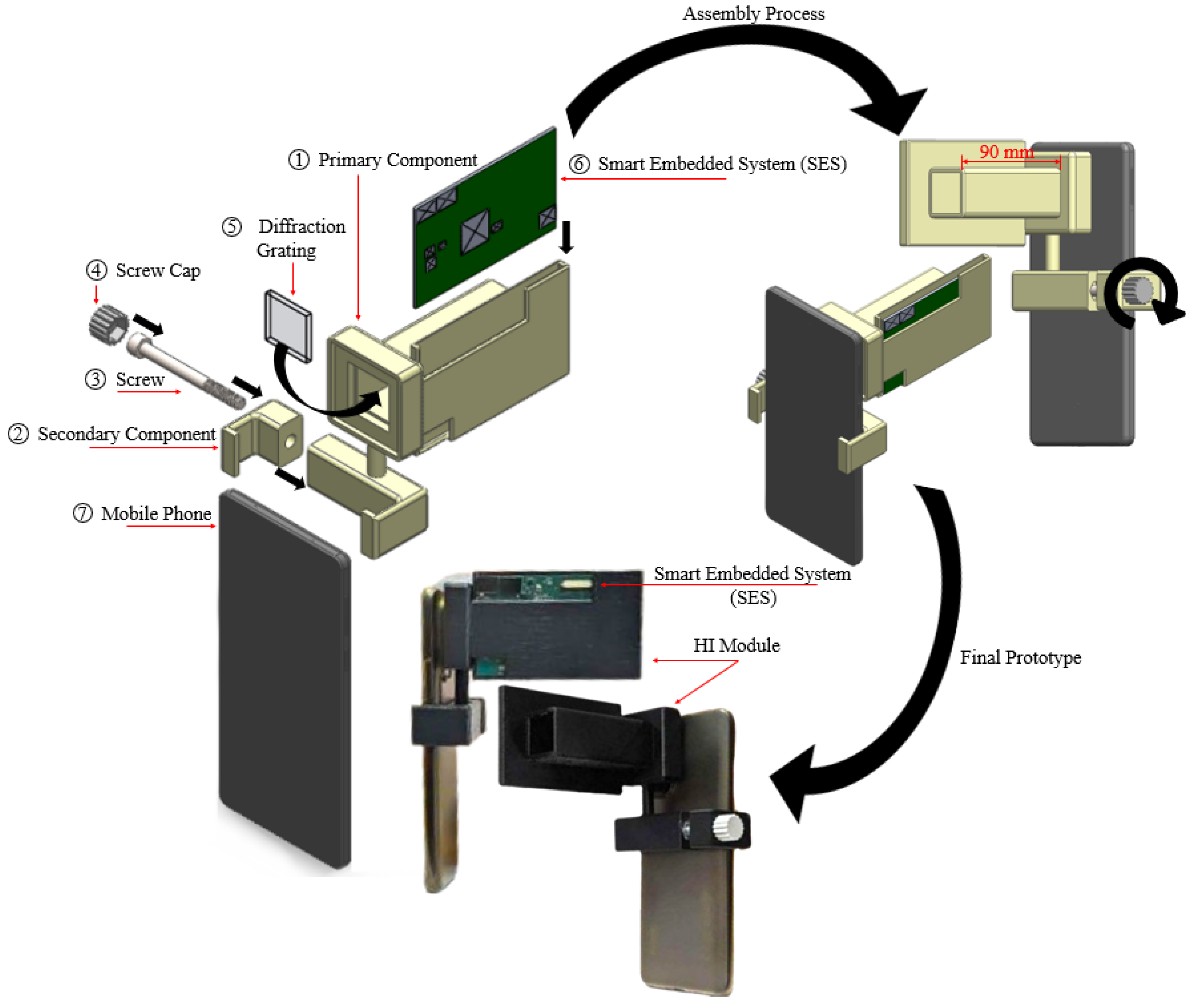

3.2. Diffraction Grating

3.3. Additive Manufacturing

3.4. Assembly Process

4. Deep Learning Integration

4.1. Dataset

4.2. Methodology

4.2.1. Sparse Training

4.2.2. Pruning

4.2.3. Fine-Tuning

4.3. Deep Learning Models

4.4. Model Training

4.5. Evaluation Metrics

4.6. Implementation in the Embedded System

5. Results and Discussion

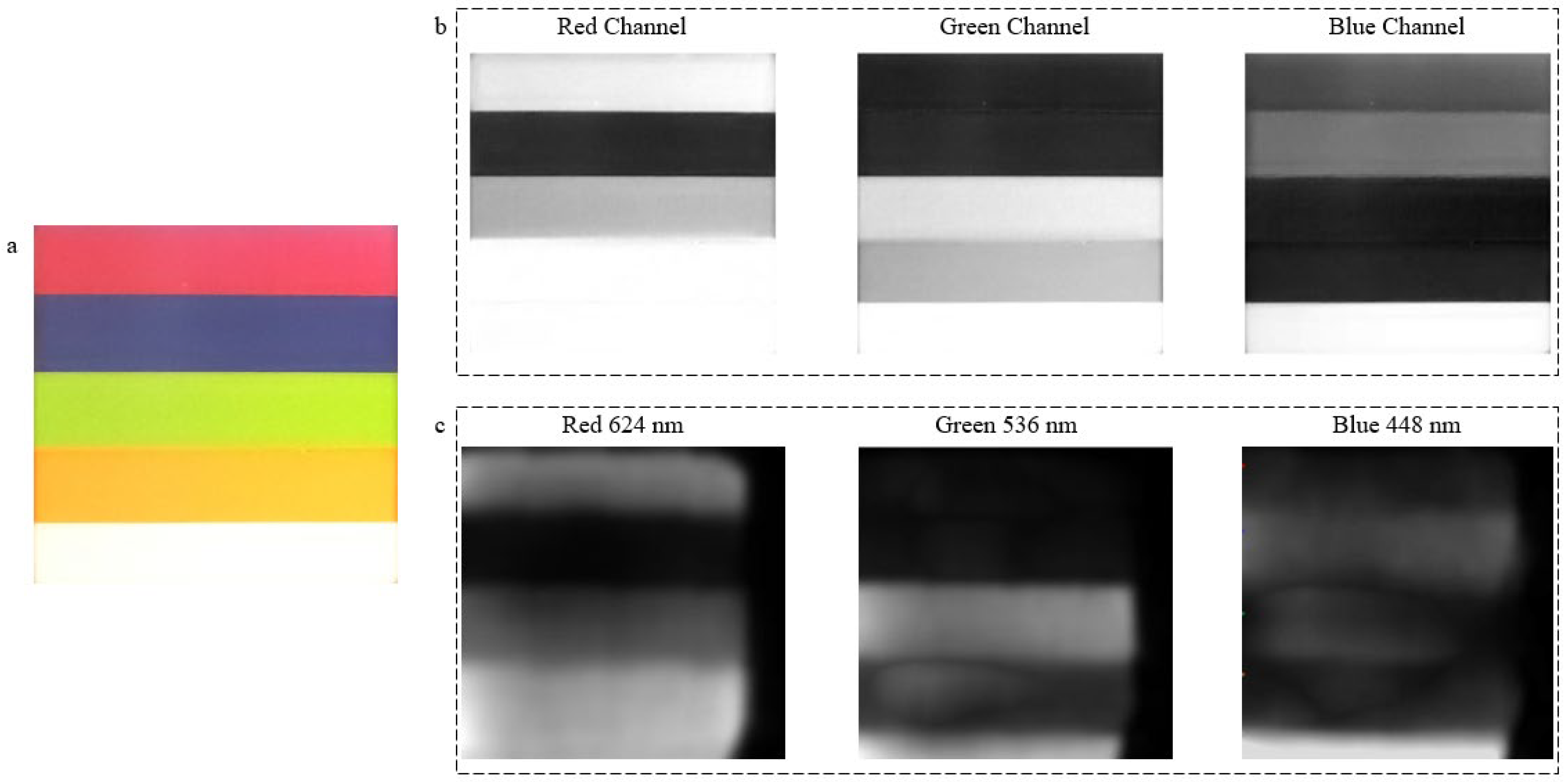

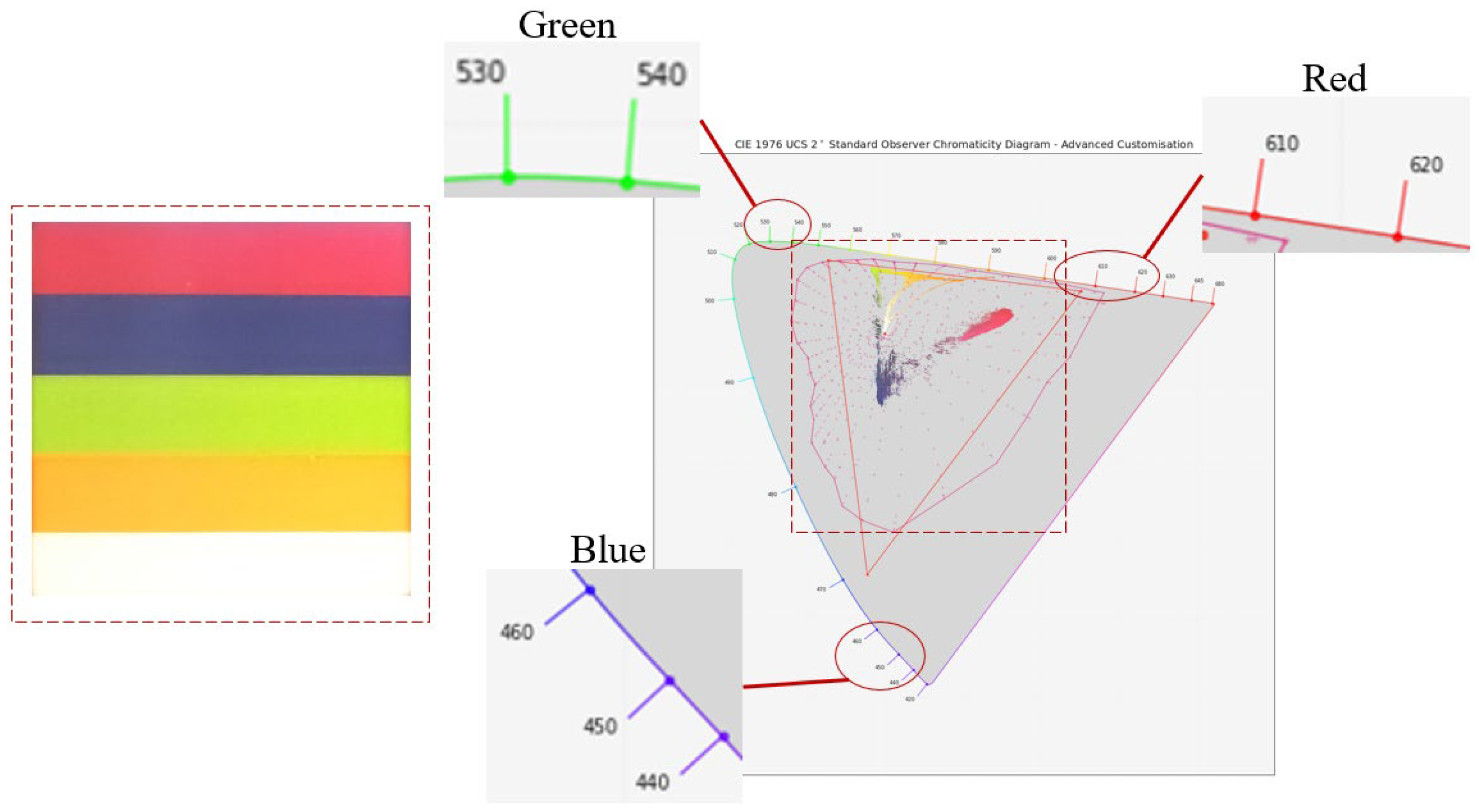

5.1. Proof of Concept Using Spectral Images from a 3D-Printed Multi-Color Box

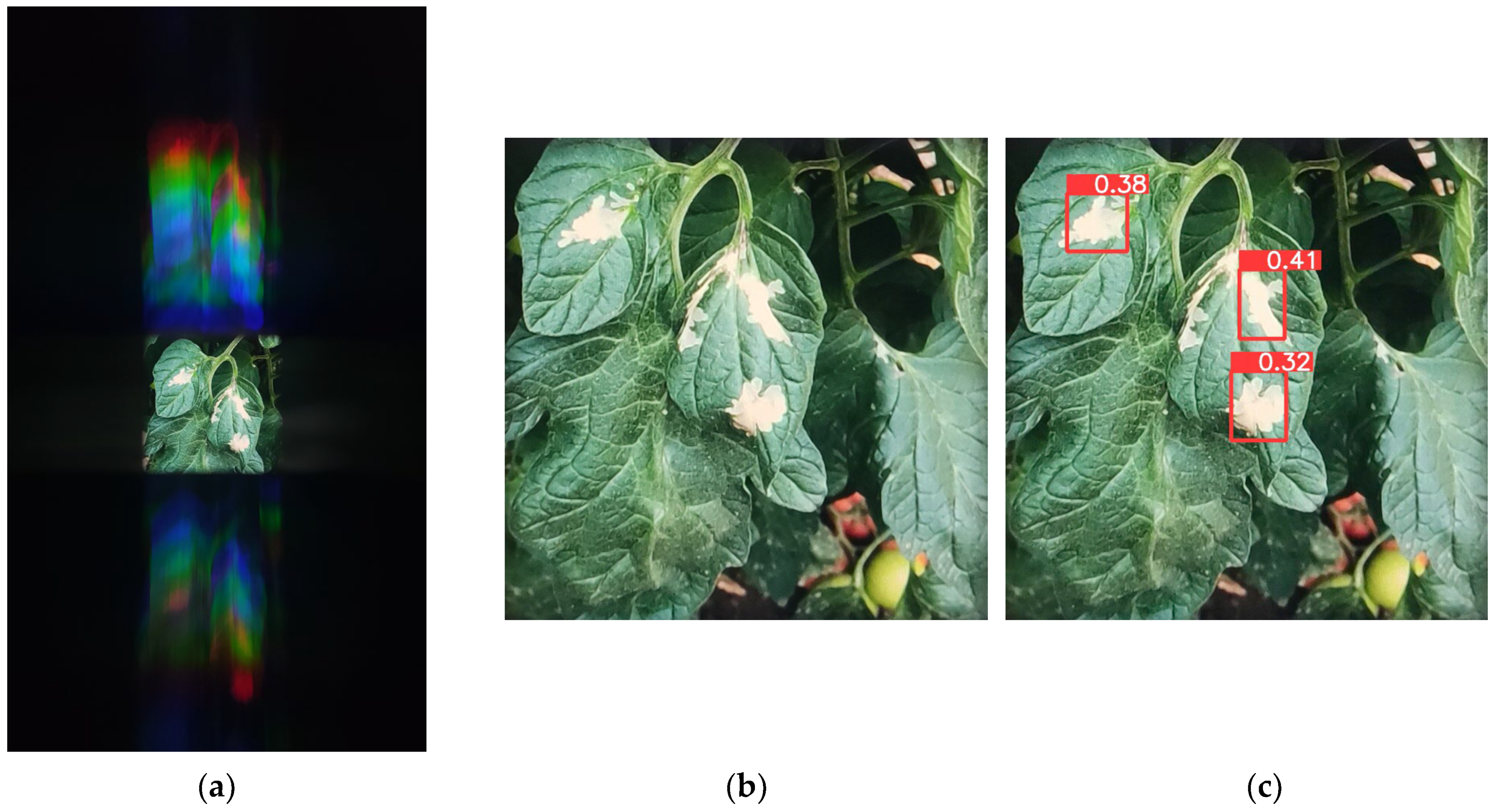

5.2. Proof of Concept Using Spectral Images from Agricultural Leaves

5.3. Deep Learning Models’ Results

5.4. Deep Learning Models’ Results on the Embedded System

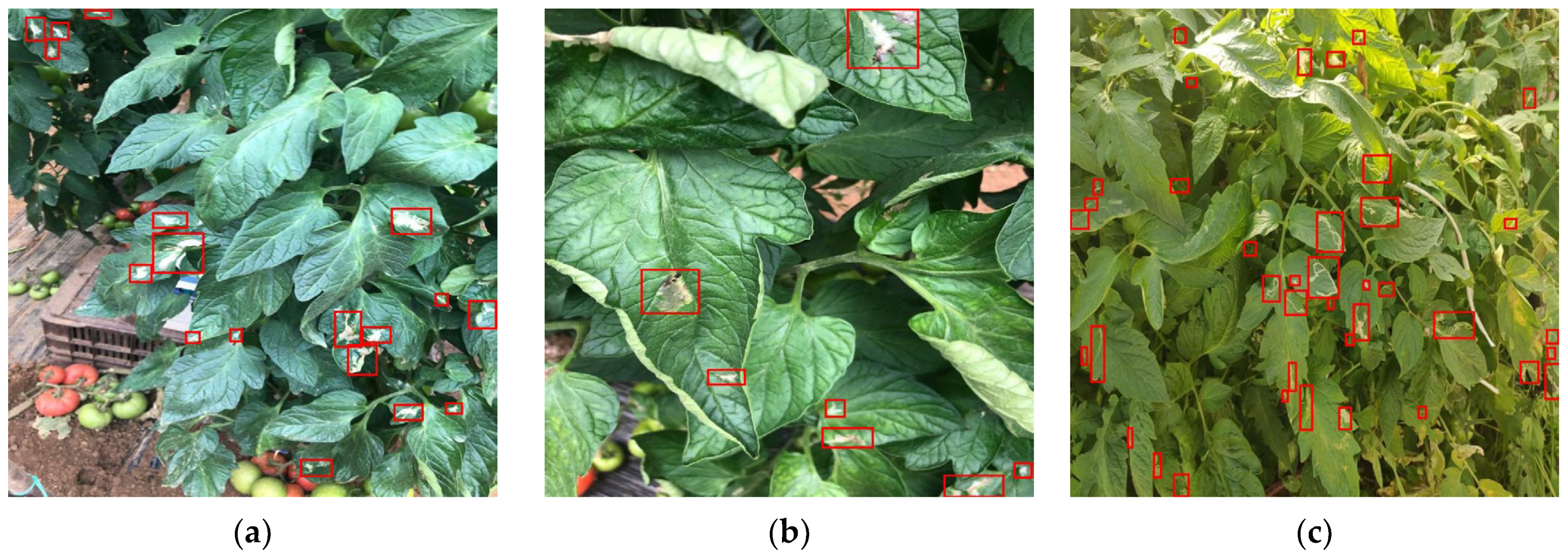

5.5. Proof of Concept in Detection of T. absoluta

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Sparsity | Pruning Ratio | Params | GFLOPs | mAP50 | Size |
|---|---|---|---|---|---|
| 0.00001 | 10% | 286,535 | 0.9 | 0.0134 | 753 KB |
| 0.00001 | 20% | 245,420 | 0.8 | 0.0032 | 673 KB |
| 0.00001 | 30% | 208,185 | 0.7 | 0.0023 | 600 KB |
| 0.00005 | 10% | 286,249 | 0.9 | 0.0451 | 753 KB |
| 0.00005 | 20% | 244,197 | 0.8 | 0.0042 | 670 KB |
| 0.00005 | 30% | 202,607 | 0.7 | 0.0026 | 588 KB |
| 0.0001 | 10% | 285,034 | 0.9 | 0.1708 | 751 KB |
| 0.0001 | 20% | 239,037 | 0.8 | 0.0061 | 660 KB |
| 0.0001 | 30% | 201,337 | 0.7 | 0.0053 | 586 KB |
| 0.0005 | 10% | 279,444 | 0.9 | 0.4483 | 740 KB |
| 0.0005 | 20% | 237,086 | 0.8 | 0.4482 | 657 KB |
| 0.0005 | 30% | 195,702 | 0.7 | 0.3757 | 575 KB |
| 0.001 | 10% | 282,376 | 0.9 | 0.4390 | 745 KB |
| 0.001 | 20% | 237,293 | 0.8 | 0.4533 | 657 KB |
| 0.005 | 10% | 288,219 | 0.9 | 0.4146 | 757 KB |

References

- Dehnen-Schmutz, K.; Foster, G.L.; Owen, L.; Persello, S. Exploring the role of smartphone technology for citizen science in agriculture. Agron. Sustain. Dev. 2016, 36, 25.

- Brown, N.; Pérez-Sierra, A.; Crow, P.; Parnell, S. The role of passive surveillance and citizen science in plant health. CABI Agric. Biosci. 2020, 1, 17.

- Carleton, R.D.; Owens, E.; Blaquière, H.; Bourassa, S.; Bowden, J.J.; Candau, J.-N.; DeMerchant, I.; Edwards, S.; Heustis, A.; James, P.M.A.; et al. Tracking insect outbreaks: A case study of community-assisted moth monitoring using sex pheromone traps. FACETS 2020, 5, 91–104.

- Malek, R.; Tattoni, C.; Ciolli, M.; Corradini, S.; Andreis, D.; Ibrahim, A.; Mazzoni, V.; Eriksson, A.; Anfora, G. Coupling Traditional Monitoring and Citizen Science to Disentangle the Invasion of Halyomorpha halys. ISPRS Int. J. Geo-Inf. 2018, 7, 171.

- Meentemeyer, R.K.; Dorning, M.A.; Vogler, J.B.; Schmidt, D.; Garbelotto, M. Citizen science helps predict risk of emerging infectious disease. Front. Ecol. Environ. 2015, 13, 189–194.

- Garbelotto, M.; Maddison, E.R.; Schmidt, D. SODmap and SODmap Mobile: Two Tools to Monitor the Spread of Sudden Oak Death. For. Phytophthoras 2014, 4.

- de Groot, M.; Pocock, M.J.O.; Bonte, J.; Fernandez-Conradi, P.; Valdés-Correcher, E. Citizen Science and Monitoring Forest Pests: A Beneficial Alliance? Curr. For. Rep. 2023, 9, 15–32.

- Mkonyi, L.; Rubanga, D.; Richard, M.; Zekeya, N.; Sawahiko, S.; Maiseli, B.; Machuve, D. Early identification of Tuta absoluta in tomato plants using deep learning. Sci. Afr. 2020, 10, e00590.

- Wang, C.; Liu, B.; Liu, L.; Zhu, Y.; Hou, J.; Liu, P.; Li, X. A review of deep learning used in the hyperspectral image analysis for agriculture. Artif. Intell. Rev. 2021, 54, 5205–5253.

- Khan, A.; Vibhute, A.D.; Mali, S.; Patil, C.H. A systematic review on hyperspectral imaging technology with a machine and deep learning methodology for agricultural applications. Ecol. Inform. 2022, 69, 101678.

- Lu, B.; Dao, P.D.; Liu, J.; He, Y.; Shang, J. Recent Advances of Hyperspectral Imaging Technology and Applications in Agriculture. Remote Sens. 2020, 12, 2659.

- Avola, G.; Matese, A.; Riggi, E. An Overview of the Special Issue on ‘Precision Agriculture Using Hyperspectral Images’. Remote Sens. 2023, 15, 1917.

- Barbedo, J.G.A. A review on the combination of deep learning techniques with proximal hyperspectral images in agriculture. Comput. Electron. Agric. 2023, 210, 107920.

- Wang, Y.M.; Ostendorf, B.; Gautam, D.; Habili, N.; Pagay, V. Plant Viral Disease Detection: From Molecular Diagnosis to Optical Sensing Technology—A Multidisciplinary Review. Remote Sens. 2022, 14, 1542.

- Rayhana, R.; Ma, Z.; Liu, Z.; Xiao, G.; Ruan, Y.; Sangha, J. A Review on Plant Disease Detection Using Hyperspectral Imaging. IEEE Trans. AgriFood Electron. 2023, 1, 108–134.

- Teke, M.; Deveci, H.S.; Haliloğlu, O.; Gürbüz, S.Z.; Sakarya, U. A short survey of hyperspectral remote sensing applications in agriculture. In Proceedings of the 2013 6th International Conference on Recent Advances in Space Technologies (RAST), Istanbul, Turkey, 12–14 June 2013; pp. 171–176.

- Adão, T.; Hruška, J.; Pádua, L.; Bessa, J.; Peres, E.; Morais, R.; Sousa, J.J. Hyperspectral Imaging: A Review on UAV-Based Sensors, Data Processing and Applications for Agriculture and Forestry. Remote Sens. 2017, 9, 1110.

- Eli-Chukwu, N.C. Applications of Artificial Intelligence in Agriculture: A Review. Eng. Technol. Appl. Sci. Res. 2019, 9, 4377–4383.

- Pechlivani, E.M.; Papadimitriou, A.; Pemas, S.; Giakoumoglou, N.; Tzovaras, D. Low-Cost Hyperspectral Imaging Device for Portable Remote Sensing. Instruments 2023, 7, 32.

- Ahmad, F.F.; Ghenai, C.; Bettayeb, M. Maximum power point tracking and photovoltaic energy harvesting for Internet of Things: A comprehensive review. Sustain. Energy Technol. Assess. 2021, 47, 101430.

- Pechlivani, E.M.; Gkogkos, G.; Giakoumoglou, N.; Hadjigeorgiou, I.; Tzovaras, D. Towards Sustainable Farming: A Robust Decision Support System’s Architecture for Agriculture 4.0. In Proceedings of the 2023 24th International Conference on Digital Signal Processing (DSP), Rhodes, Greece, 11–13 June 2023; pp. 1–5.

- Miranda, M.M.M.; Picanço, M.; Zanuncio, J.C.; Guedes, R.N.C. Ecological Life Table of Tuta absoluta (Meyrick) (Lepidoptera: Gelechiidae). Biocontrol Sci. Technol. 1998, 8, 597–606.

- Urbaneja, A.; González-Cabrera, J.; Arnó, J.; Gabarra, R. Prospects for the biological control of Tuta absoluta in tomatoes of the Mediterranean basin. Pest Manag. Sci. 2012, 68, 1215–1222.

- Qazi, S.; Khawaja, B.A.; Farooq, Q.U. IoT-Equipped and AI-Enabled Next Generation Smart Agriculture: A Critical Review, Current Challenges and Future Trends. IEEE Access 2022, 10, 21219–21235.

- Brunelli, D.; Albanese, A.; d’Acunto, D.; Nardello, M. Energy Neutral Machine Learning Based IoT Device for Pest Detection in Precision Agriculture. IEEE Internet Things Mag. 2019, 2, 10–13.

- Gia, T.N.; Qingqing, L.; Queralta, J.P.; Zou, Z.; Tenhunen, H.; Westerlund, T. Edge AI in Smart Farming IoT: CNNs at the Edge and Fog Computing with LoRa. In Proceedings of the 2019 IEEE AFRICON, Accra, Ghana, 25–27 September 2019; pp. 1–6.

- Shivling, D.V.; Sharma, S.K.; Ghanshyam, C.; Dogra, S.; Mokheria, P.; Kaur, R.; Arora, D. Low cost sensor based embedded system for plant protection and pest control. In Proceedings of the 2015 International Conference on Soft Computing Techniques and Implementations (ICSCTI), Faridabad, India, 8–10 October 2015; pp. 179–184.

- Materne, N.; Inoue, M. IoT Monitoring System for Early Detection of Agricultural Pests and Diseases. In Proceedings of the 2018 12th South East Asian Technical University Consortium (SEATUC), Yogyakarta, Indonesia, 12–13 March 2018; pp. 1–5.

- Yashodha, G.; Shalini, D. An integrated approach for predicting and broadcasting tea leaf disease at early stage using IoT with machine learning—A review. Mater. Today Proc. 2021, 37, 484–488.

- Terentev, A.; Dolzhenko, V.; Fedotov, A.; Eremenko, D. Current State of Hyperspectral Remote Sensing for Early Plant Disease Detection: A Review. Sensors 2022, 22, 757.

- Hussain, T.; Shah, B.S.; Ullah, I.U.; Shah, S.M.; Ali, F.; Park, S.H. Deep learning-based segmentation and classification of leaf images for detection of tomato plant disease. Front. Plant Sci. 2022, 13, 1031748.

- Giakoumoglou, N.; Pechlivani, E.M.; Sakelliou, A.; Klaridopoulos, C.; Frangakis, N.; Tzovaras, D. Deep learning-based multi-spectral identification of grey mould. Smart Agric. Technol. 2023, 4, 100174.

- Georgantopoulos, P.S.; Papadimitriou, D.; Constantinopoulos, C.; Manios, T.; Daliakopoulos, I.N.; Kosmopoulos, D. A Multispectral Dataset for the Detection of Tuta Absoluta and Leveillula Taurica in Tomato Plants. Smart Agric. Technol. 2023, 4, 100146.

- Fernández, C.I.; Leblon, B.; Wang, J.; Haddadi, A.; Wang, K. Detecting Infected Cucumber Plants with Close-Range Multispectral Imagery. Remote Sens. 2021, 13, 2948.

- Nagasubramanian, K.; Jones, S.; Singh, A.K.; Sarkar, S.; Singh, A.; Ganapathysubramanian, B. Plant disease identification using explainable 3D deep learning on hyperspectral images. Plant Methods 2019, 15, 98.

- Moghadam, P.; Ward, D.; Goan, E.; Jayawardena, S.; Sikka, P.; Hernandez, E. Plant Disease Detection Using Hyperspectral Imaging. In Proceedings of the 2017 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Sydney, Australia, 29 November–1 December 2017; pp. 1–8.

- Nguyen, C.; Sagan, V.; Maimaitiyiming, M.; Maimaitijiang, M.; Bhadra, S.; Kwasniewski, M.T. Early Detection of Plant Viral Disease Using Hyperspectral Imaging and Deep Learning. Sensors 2021, 21, 742.

- Feng, L.; Wu, B.; He, Y.; Zhang, C. Hyperspectral Imaging Combined with Deep Transfer Learning for Rice Disease Detection. Front. Plant Sci. 2021, 12, 693521.

- Bannerjee, G.; Sarkar, U.; Das, S.; Ghosh, I. Artificial intelligence in agriculture: A literature survey. Int. J. Sci. Res. Comput. Sci. Appl. Manag. Stud. 2018, 7, 1–6.

- Giakoumoglou, N.; Pechlivani, E.M.; Tzovaras, D. Generate-Paste-Blend-Detect: Synthetic dataset for object detection in the agriculture domain. Smart Agric. Technol. 2023, 5, 100258.

- Giakoumoglou, N.; Pechlivani, E.M.; Katsoulas, N.; Tzovaras, D. White Flies and Black Aphids Detection in Field Vegetable Crops using Deep Learning. In Proceedings of the 2022 IEEE 5th International Conference on Image Processing Applications and Systems (IPAS), Genova, Italy, 5–7 December 2022; pp. 1–6.

- Liu, J.; Wang, R.; Xie, C.; Yang, P.; Wang, F.; Sudirman, S.; Liu, W. PestNet: An End-to-End Deep Learning Approach for Large-Scale Multi-Class Pest Detection and Classification. IEEE Access 2019, 7, 45301–45312.

- Liu, J.; Wang, X. Tomato Diseases and Pests Detection Based on Improved Yolo V3 Convolutional Neural Network. Front. Plant Sci. 2020, 11, 898.

- Wang, R.; Liu, L.; Xie, C.; Yang, P.; Li, R.; Zhou, M. AgriPest: A Large-Scale Domain-Specific Benchmark Dataset for Practical Agricultural Pest Detection in the Wild. Sensors 2021, 21, 1601.

- Fuentes, A.; Yoon, S.; Kim, S.; Park, D. A Robust Deep-Learning-Based Detector for Real-Time Tomato Plant Diseases and Pests Recognition. Sensors 2017, 17, 2022.

- Loyani, L.K.; Bradshaw, K.; Machuve, D. Segmentation of Tuta absoluta’s Damage on Tomato Plants: A Computer Vision Approach. Appl. Artif. Intell. 2021, 35, 1107–1127.

- Mia, M.J.; Maria, S.K.; Taki, S.S.; Biswas, A.A. Cucumber disease recognition using machine learning and transfer learning. Bull. Electr. Eng. Inform. 2021, 10, 3432–3443.

- Rubanga, D.P.; Loyani, L.K.; Richard, M.; Shimada, S. A Deep Learning Approach for Determining Effects of Tuta Absoluta in Tomato Plants. arXiv 2020.

- Giakoumoglou, N.; Pechlivani, E.-M.; Frangakis, N.; Tzovaras, D. Enhancing Tuta absoluta Detection on Tomato Plants: Ensemble Techniques and Deep Learning. AI 2023, 4, 50.

- Kouzinopoulos, C.S.; Tzovaras, D.; Bembnowicz, P.; Meli, M.; Bellanger, M.; Kauer, M.; De Vos, J.; Pasero, D.; Schellenberg, M.; Vujicic, O. AMANDA: An Autonomous Self-Powered Miniaturized Smart Sensing Embedded System. In Proceedings of the 2019 IEEE 9th International Conference on Consumer Electronics (ICCE-Berlin), Berlin, Germany, 8–11 September 2019; pp. 324–329.

- Papaioannou, A.; Kouzinopoulos, C.S.; Ioannidis, D.; Tzovaras, D. An Ultra-low-power Embedded AI Fire Detection and Crowd Counting System for Indoor Areas. ACM Trans. Embed. Comput. Syst. 2023, 22, 1–20.

- Rosa, R.L.; Dehollain, C.; Costanza, M.; Speciale, A.; Viola, F.; Livreri, P. A Battery-Free Wireless Smart Sensor platform with Bluetooth Low Energy Connectivity for Smart Agriculture. In Proceedings of the 2022 IEEE 21st Mediterranean Electrotechnical Conference (MELECON), Palermo, Italy, 14–16 June 2022; pp. 554–558.

- Meli, M.L.; Favre, S.; Maij, B.; Stajic, S.; Boebel, M.; Poole, P.J.; Schellenberg, M.; Kouzinopoulos, C.S. Energy Autonomous Wireless Sensing Node Working at 5 Lux from a 4 cm² Solar Cell. J. Low Power Electron. Appl. 2023, 13, 12.

- Stuart, M.B.; McGonigle, A.J.S.; Davies, M.; Hobbs, M.J.; Boone, N.A.; Stanger, L.R.; Zhu, C.; Pering, T.D.; Willmott, J.R. Low-Cost Hyperspectral Imaging with A Smartphone. J. Imaging 2021, 7, 136.

- Biswas, P.C.; Rani, S.; Hossain, M.A.; Islam, M.R.; Canning, J. Multichannel Smartphone Spectrometer Using Combined Diffraction Orders. IEEE Sens. Lett. 2020, 4, 1–4.

- Pituła, E.; Koba, M.; Śmietana, M. Which smartphone for a smartphone-based spectrometer? Opt. Laser Technol. 2021, 140, 107067.

- Singh, S.; Singh, G.; Prakash, C.; Ramakrishna, S. Current status and future directions of fused filament fabrication. J. Manuf. Process. 2020, 55, 288–306.

- Harris, M.; Potgieter, J.; Archer, R.; Arif, K.M. Effect of Material and Process Specific Factors on the Strength of Printed Parts in Fused Filament Fabrication: A Review of Recent Developments. Materials 2019, 12, 1664.

- Varo-Martínez, M.; Ramírez-Faz, J.C.; López-Sánchez, J.; Torres-Roldán, M.; Fernández-Ahumada, L.M.; López-Luque, R. Design and 3D Manufacturing of an Improved Heliostatic Illuminator. Inventions 2022, 7, 127.

- Jocher, G.; Chaurasia, A.; Stoken, A.; Borovec, J.; Kwon, Y.; Michael, K.; Fang, J.; Yifu, Z.; Wong, C.; Montes, D.; et al. ultralytics/yolov5: v7.0—YOLOv5 SOTA Realtime Instance Segmentation. Zenodo 2022.

- Liu, Z.; Li, J.; Shen, Z.; Huang, G.; Yan, S.; Zhang, C. Learning Efficient Convolutional Networks through Network Slimming. arXiv 2017.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. arXiv 2015.

- Li, H.; Kadav, A.; Durdanovic, I.; Samet, H.; Graf, H.P. Pruning Filters for Efficient ConvNets. arXiv 2017, arXiv:1608.08710.

- Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. arXiv 2017.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. arXiv 2019.

- Bavali, A.; Parvin, P.; Tavassoli, M.; Mohebbifar, M.R. Angular distribution of laser-induced fluorescence emission of active dyes in scattering media. Appl. Opt. 2018, 57, B32–B38.

- Krechemer, F.D.S.; Foerster, L.A. Tuta absoluta (Lepidoptera: Gelechiidae): Thermal requirements and effect of temperature on development, survival, reproduction and longevity. Eur. J. Entomol. 2015, 112, 658–663.

| SoC | Sensors | Model | Wireless | Source |
|---|---|---|---|---|
| Raspberry Pi | Image | Deep Learning | LoRa | [25] |
| Raspberry Pi, AVR | Environmental, soil | - | LoRa, NRF24L01 | [26] |
| Raspberry Pi | Environmental | Beta regression | - | [27] |
| Raspberry Pi, Arduino Uno | Environmental, soil, lux, CO2 | k-nearest neighbor, logistic regression, random forest regression, linear regression | ZigBee | [28] |
| ARM RISC | Environmental | Custom YOLOv5 | BLE, LoRa | Ours |

| Part No | Functionality | Manufacturer |
|---|---|---|
| Processing | | |
| STM32U5A5ZJ | MCU | ST |
| MB85RC64TAPN-G-AMEWE1 | FRAM | Fujitsu Semiconductor |
| Sensors | | |
| MS1089 | Temperature | Microdul |
| BME680 | Environmental | Bosch |
| Power | | |
| EXL1-1V20 | PV harvester | Lightricity |
| AEM10941 | PMIC | E-Peas |
| GEB201212C | Battery | PowerStream |
| Communication | | |
| RSL10 | Bluetooth Low Energy | Onsemi |
| SX1261 | LoRa | Semtech |

| Sparsity | mAP50 | Precision | Recall | Prune Threshold |
|---|---|---|---|---|
| 0 | 0.460 | 0.546 | 0.467 | - |
| 0.00001 | 0.462 | 0.605 | 0.437 | 0.65710 |
| 0.00005 ¹ | 0.465 | 0.548 | 0.476 | 0.7344 |
| 0.0001 | 0.460 | 0.570 | 0.462 | 0.6234 |
| 0.0005 | 0.455 | 0.551 | 0.466 | 0.3746 |
| 0.001 | 0.453 | 0.536 | 0.467 | 0.2905 |
| 0.005 | 0.44 | 0.544 | 0.451 | 0.1471 |
| 0.01 | 0.433 | 0.563 | 0.425 | 0.0754 |

| Sparsity | Prune Ratio | Params | GFLOPs | Size | mAP50 | Precision | Recall |
|---|---|---|---|---|---|---|---|
| 0.0001 | 10% | 285,034 | 0.9 | 751 KB | 0.433 | 0.499 | 0.456 |
| 0.0005 | 10% | 279,444 | 0.9 | 740 KB | 0.442 | 0.499 | 0.474 |
| 0.0005 | 20% | 237,086 | 0.8 | 657 KB | 0.437 | 0.524 | 0.452 |
| 0.0005 ³ | 30% | 195,702 | 0.7 | 575 KB | 0.434 | 0.497 | 0.467 |
| 0.001 ² | 10% | 282,376 | 0.9 | 745 KB | 0.445 | 0.558 | 0.434 |
| 0.001 | 20% | 237,293 | 0.8 | 657 KB | 0.439 | 0.529 | 0.446 |
| 0.005 | 10% | 288,219 | 0.9 | 757 KB | 0.424 | 0.51 | 0.45 |

| Quantization Precision | mAP50 | Precision | Recall | Size | RAM Usage | Execution Time |
|---|---|---|---|---|---|---|
| 32-bit | 0.434 | 0.497 | 0.467 | 1,165 KB | 2,423 KB | 17.22 min |
| 16-bit | 0.415 | 0.476 | 0.442 | 655 KB | 1,945 KB | 8.55 min |
| 8-bit | 0.398 | 0.459 | 0.425 | 403 KB | 1,393 KB | 4.15 min |
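
The accuracy and latency trade-off in the quantization table above can be made explicit with a little arithmetic. The sketch below is illustrative only: it copies the mAP50 and execution-time columns from the table and reports, for each precision, the speed-up and the mAP50 loss relative to the 32-bit model. The names `RESULTS` and `tradeoff` are hypothetical helpers introduced here, not part of the authors' code.

```python
# Values copied from the quantization table (mAP50, execution time in minutes).
RESULTS = {
    "32-bit": {"map50": 0.434, "minutes": 17.22},
    "16-bit": {"map50": 0.415, "minutes": 8.55},
    "8-bit": {"map50": 0.398, "minutes": 4.15},
}


def tradeoff(results, baseline="32-bit"):
    """Return speed-up and mAP50 loss of each variant relative to the baseline."""
    base = results[baseline]
    summary = {}
    for name, row in results.items():
        summary[name] = {
            # Ratio of baseline execution time to this variant's execution time.
            "speedup": base["minutes"] / row["minutes"],
            # Absolute mAP50 lost compared with the baseline.
            "map50_drop": base["map50"] - row["map50"],
        }
    return summary


if __name__ == "__main__":
    for name, row in tradeoff(RESULTS).items():
        print(f"{name}: {row['speedup']:.2f}x faster, "
              f"mAP50 drop {row['map50_drop']:.3f}")
```

On these figures, 16-bit quantization roughly halves the execution time for a loss of about 0.019 mAP50, and 8-bit quantization is roughly four times faster than the 32-bit model for a loss of about 0.036 mAP50.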


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kouzinopoulos, C.S.; Pechlivani, E.M.; Giakoumoglou, N.; Papaioannou, A.; Pemas, S.; Christakakis, P.; Ioannidis, D.; Tzovaras, D. A Citizen Science Tool Based on an Energy Autonomous Embedded System with Environmental Sensors and Hyperspectral Imaging. J. Low Power Electron. Appl. 2024, 14, 19. https://doi.org/10.3390/jlpea14020019
