1. Introduction

The emergence of the Internet of Things (IoT) has promoted the rise of edge computing. In IoT applications, data processing, analysis, and storage are increasingly occurring at the edge of the network, close to where users and devices need to access information, which makes edge computing an important development direction.

Deep learning has already been applied in IoT. For example, deep learning was used to predict household electricity consumption from data collected by smart meters [1], and a load-balancing scheme based on deep learning in the IoT was introduced [2], in which the network load and processing configuration are measured by analyzing a large amount of user data and a deep belief network is adopted to achieve efficient load balancing. In [3], an IoT data analysis method based on deep learning algorithms and Apache Spark was proposed: the inference phase was executed on mobile devices, while Apache Spark was deployed on a cloud server to support training. This two-tier design is very similar to edge computing and shows that processing tasks can be offloaded from the cloud. In [4], it was shown that, because of the limited network performance available for data transmission, a centralized cloud computing structure can no longer process and analyze the large amount of data collected from IoT devices. In [5], the authors indicated that edge computing can offload computing tasks from the centralized cloud to the edge near IoT devices, greatly reducing the data transmitted during preprocessing. This makes edge computing another key technology for IoT services.

Data generated by IoT sensor terminals often need to be analyzed in real time with deep learning or used to train deep learning models. However, deep learning [6] inference and training require substantial computing resources to run quickly. Edge computing is a viable approach, as it places a large number of computing nodes close to the terminals to meet the high-computation, low-latency requirements of edge devices, and it performs well in terms of privacy, bandwidth efficiency, and scalability. Edge computing has been combined with deep learning for various purposes: fabric defect detection [7], fall detection in smart cities, street garbage detection and classification [8], multi-task partial computation offloading and network flow scheduling [9], road accident detection [10], and real-time video optimization [11].

Red, green, and blue (RGB) cameras classify objects mainly using red, green, and blue light; spectrally, they capture only three bands, all within the visible range. The spectrometer we use provides 1024 spectral bands, including some near-infrared bands, which is more helpful for accurate classification. For instance, in vegetation detection, the red-edge effect at the transition from red to near-infrared light can distinguish real leaves from plastic leaves. We therefore believe that increasing the number of spectral channels will make it easier to extend the system to further applications in the future.

Optical fiber spectrometers have been applied to photoluminescence property detection [12], smartphone spectral self-calibration [13], and phosphor thermometry [14]. At present, some imaging spectrometers can simultaneously obtain spatial images, depth information, and spectral data of objects [15]. However, most of the data processed by deep learning algorithms are images obtained by such imaging spectrometers; deep learning algorithms are rarely used to process reflectance spectra obtained by optical fiber spectrometers.

In hyperspectral remote sensing, deep learning algorithms have been widely applied to hyperspectral image classification. For example, in [16], a spatial-spectral feature extraction framework incorporating a 3D convolutional neural network was proposed for robust hyperspectral image classification; its overall test classification accuracy was 4.23% higher than that of SVM on the Pavia and Pines data sets. In [17], a new recurrent neural network architecture was designed whose test accuracy on the Pavia and Salinas HSI data sets was 11.52% higher than that of a long short-term memory network. In [18], a new recursive neural network structure was designed and an approach based on a deep belief network was introduced for hyperspectral image classification; compared with SVM, the overall classification accuracy on the Salinas, Pines, and Pavia data sets increased by 3.17%. Currently, hyperspectral imagers are mainly used to detect objects [19]. Although an optical fiber spectrometer is portable and convenient for collecting object spectra, it cannot image objects. However, deep learning algorithms are data-driven and support end-to-end feature learning. Combining deep learning algorithms with fiber optic spectrometers to process spectral data can therefore extend such spectrometers to object detection and research.

However, most spectrometers must be connected to a host computer via USB, which makes them inconvenient to carry. In this work, we designed and manufactured a portable optical fiber spectrometer. After testing the stability of the system, we collected the reflectance spectra of five fruit samples and proposed a convolutional neural network (CNN) deep learning method for spectral classification, which reaches a maximum classification accuracy of 94.78%. We thus combined the deep learning algorithm with the system to classify spectral data accurately. With this portable spectrometer, we use edge computing to speed up deep learning processing of the spectral data.

We designed the portable spectrometer with a built-in screen, so the system does not depend on a bulky host computer and enables real-time detection of fruit quality.

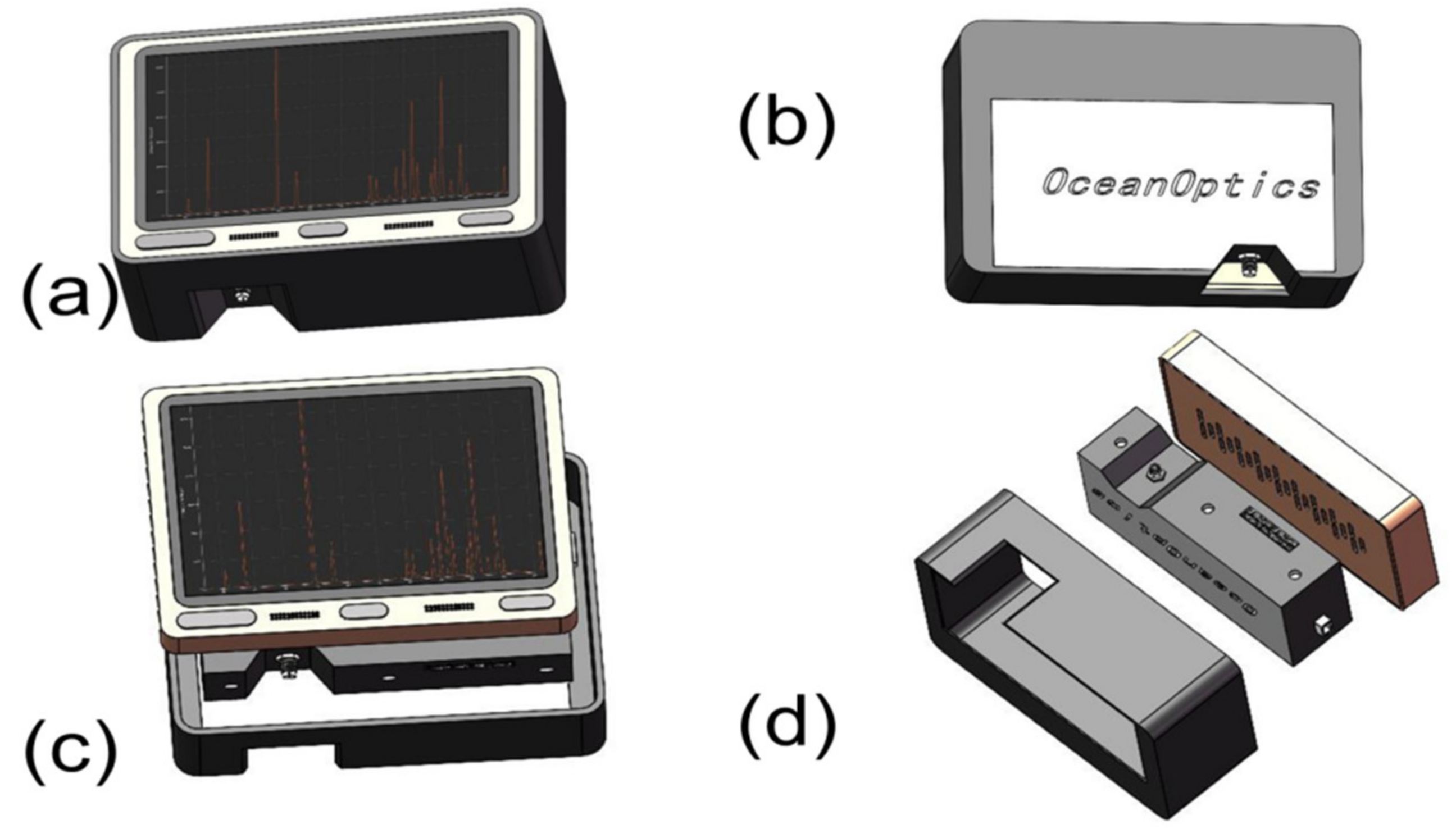

Our portable spectrometer is shown in Figure 1a. It has a 5-inch touch screen on which users can view the visualized sample spectrum in real time. As shown in Figure 1b, the system is equipped with an Ocean Optics USB2000+ spectrometer (Ocean Optics, Delray Beach, FL, USA), which gives the system a spectral resolution of 5 nm or better. As shown in Figure 1d, the system consists of a GOLE1 microcomputer, the Ocean Optics USB2000+ spectrometer, and a high-precision packaging fixture. The optical fiber is connected to the spectrometer through a fine-pitch mechanical thread. The overall structure is finished with electroplated black paint, which effectively blocks external light interference and greatly improves the signal-to-noise ratio of the spectral information. To measure a sample, we connect one end of the optical fiber to the spectrometer through the mechanical thread and hold the other end close to the sample. Light reflected from the sample surface enters the spectrometer through the optical fiber; the spectrometer converts the collected optical signal into an electrical signal and transmits it to the microcomputer over a USB cable, and the microcomputer displays the signal on the screen. Users can view, store, and transmit spectral information through the system's touch screen, unlike traditional spectrometers, which must be operated with the keyboard and mouse of a host computer.

To ensure accurate data acquisition and to demonstrate the system's detection capability, we tested the stability of the entire hyperspectral imaging system. First, we set the system to the same state as during data acquisition and collected 10 sets of sunlight spectral data with the spectrometer at 10 s intervals. We then set the system's display to red, green, and blue in turn and repeated these steps to obtain the corresponding data. Finally, we imported the 40 groups of data collected in this way into MATLAB and used two different processing methods to demonstrate the stability of the whole system.

In the first method, we extracted the data point at one fixed wavelength from each of the 10 groups of data of the same kind and plotted these points in acquisition order.

As shown in Figure 2, the intensity at the same wavelength fluctuates very little across the 10 groups of data. This shows that repeated acquisitions of the same object within a short time differ very little, indicating that the system has high measurement repeatability.
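The first method can also be summarized with a single number. The sketch below is a minimal illustration, assuming each acquisition is stored as a list of intensities on a common wavelength grid; it reports the relative standard deviation (RSD) of the intensity at one wavelength across repeated acquisitions. RSD is our illustrative choice of stability figure, not a metric reported in this work, and `fixed_wavelength_rsd` is a hypothetical name.

```python
import statistics


def fixed_wavelength_rsd(spectra, index):
    """Relative standard deviation (%) of one wavelength across repeated spectra.

    spectra: list of acquisitions, each a list of intensities on the same
             wavelength grid (assumed data layout).
    index:   position of the wavelength of interest in that grid.
    """
    # Pick the same wavelength from every acquisition, in acquisition order.
    series = [spectrum[index] for spectrum in spectra]
    mean = statistics.mean(series)
    # Sample standard deviation relative to the mean, as a percentage.
    return 100.0 * statistics.stdev(series) / mean


# Ten synthetic acquisitions with small fluctuations at index 2.
demo = [[1.0, 2.0, 100.0 + (i % 3) * 0.5, 4.0] for i in range(10)]
print(fixed_wavelength_rsd(demo, 2))
```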

In the second method, we plotted the full spectra of the 10 groups of data of the same kind on one graph, distinguishing them by color. Figure 3 shows two things: first, the spectra of the 10 groups of data of the same kind almost coincide; second, the spectra of sunlight and of blue, green, and red light have their expected characteristic shapes. These observations indicate that the system measures with high precision, responds well in each band, and is stable overall.
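Similarly, the overlay in the second method can be quantified by the largest deviation of any acquisition from the mean spectrum. This is a minimal sketch under the same data-layout assumption; the `min_signal` threshold, which skips near-dark channels to avoid dividing by values close to zero, is a hypothetical parameter, and the function is illustrative rather than the processing used in this work.

```python
def max_relative_deviation(spectra, min_signal=1e-9):
    """Largest |x - mean| / mean over all acquisitions and wavelengths.

    spectra: list of acquisitions on a common wavelength grid (assumed layout).
    Channels whose mean intensity is below min_signal are skipped.
    """
    n_runs = len(spectra)
    worst = 0.0
    # zip(*spectra) yields one tuple per wavelength across all acquisitions.
    for channel in zip(*spectra):
        mean = sum(channel) / n_runs
        if mean < min_signal:
            continue
        for value in channel:
            worst = max(worst, abs(value - mean) / mean)
    return worst


runs = [[0.0, 1.0, 2.0], [0.0, 1.0, 2.2]]  # synthetic data; first channel is dark
print(max_relative_deviation(runs))
```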

As discussed above, edge computing in the IoT has been combined with deep learning algorithms for tasks such as street garbage classification and fabric defect detection. We aim to combine edge computing with deep learning algorithms to classify more kinds of spectral data. Current mainstream spectral data processing algorithms still analyze one-dimensional spectra, which makes them incompatible with the image-oriented machine learning methods in wide use. As mentioned previously, deep learning algorithms have been studied in depth for image processing and achieve relatively high processing efficiency and classification accuracy. If the spectral data are first preprocessed into image form and then classified with deep learning algorithms, the efficiency and accuracy of spectral classification can be greatly improved.

In our work, we randomly selected five kinds of fruit for testing and obtained accurate classification results with our algorithm. In general, given enough spectral data and an effective algorithm, accurate classification can be achieved. Many studies have verified the effectiveness of classification based on spectral data; for instance, in [20], spectral-data-based classification was realized for different algae.

In this paper, we designed a portable optical fiber spectrometer with a screen and verified the stability, accuracy, and detection ability of the system using the two processing methods shown in Figure 2 and Figure 3. We used the spectrometer to collect one-dimensional reflectance spectra from five fruit samples, then reshaped each spectrum into 2D spectral data. Our proposed CNN algorithm extracted features from and classified the 2D spectral images of the five samples, reaching a maximum classification accuracy of 94.78% and an average accuracy of 92.94%, which is better than the traditional AlexNet, U-Net, and SVM. Our method makes spectral data analysis compatible with deep learning algorithms and applies deep learning to reflectance spectra from an optical fiber spectrometer.
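Because 1024 = 32 × 32, a 1024-point spectrum can be folded into a 32 × 32 array without padding or cropping. The sketch below assumes row-major folding and min-max scaling to [0, 1]; the exact reshaping and normalization used in this work may differ, and `spectrum_to_image` is a hypothetical name.

```python
def spectrum_to_image(spectrum, side=32):
    """Fold a 1D spectrum into a side x side 2D array (row-major).

    Assumes len(spectrum) == side * side (1024 = 32 * 32 here) and applies
    min-max scaling to [0, 1]; both choices are illustrative assumptions.
    """
    if len(spectrum) != side * side:
        raise ValueError(f"expected {side * side} points, got {len(spectrum)}")
    lo, hi = min(spectrum), max(spectrum)
    span = (hi - lo) or 1.0  # avoid division by zero for a flat spectrum
    scaled = [(v - lo) / span for v in spectrum]
    # Row r holds spectral channels r*side ... r*side + side - 1.
    return [scaled[r * side:(r + 1) * side] for r in range(side)]


image = spectrum_to_image([float(i) for i in range(1024)])
print(len(image), len(image[0]), image[0][0], image[-1][-1])  # → 32 32 0.0 1.0
```

Row-major folding keeps neighboring wavelengths adjacent within each row, so a small convolution kernel sees contiguous spectral segments.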

The remainder of this paper is organized as follows. Section 2 briefly introduces the optical detection experiment. Section 3 details the proposed spectral classification method. Section 4 reports our experiments and discusses the results. Finally, Section 5 concludes the work and presents some insights for further research.

3. Proposed Method

3.1. Model Description

Identifying spectral data with a CNN model involves two steps: first, features are extracted from the spectral images; then, a classifier assigns each image to a class. The recognition process is depicted in Figure 6.

In general, a convolutional neural network architecture consists of convolutional layers, pooling layers, and fully connected layers. Compared with other deep learning models, CNNs often show better classification performance on image data.

When a CNN performs a convolution operation, the feature maps of the previous layer are processed with convolution kernels, strides, padding, and activation functions, so that the output of each convolutional layer is obtained by convolving the output of the previous layer. The convolution operation on feature maps uses the following formula:

$$x_j^{l} = f\left(\sum_{i} x_i^{l-1} \ast w_{ij}^{l} + b_j^{l}\right) \tag{1}$$

where $f(\cdot)$ is the activation function, $x_i^{l-1}$ is the output value of the i-th neuron in the (l − 1)-th layer, $w_{ij}^{l}$ represents the weight connecting the i-th neuron of the l-th convolutional layer to the j-th neuron of the output layer, and $b_j^{l}$ represents the bias of the j-th neuron of the l-th convolutional layer.
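A minimal pure-Python sketch of Equation (1) for one output feature map follows, assuming square maps and kernels and ReLU as the activation f (the activation used in this work is not stated here). Like most CNN libraries, it computes cross-correlation (the kernel is not flipped); `conv2d` and `conv_layer_output` are hypothetical helper names.

```python
def relu(v):
    """Rectified linear unit, used here as an example activation f."""
    return v if v > 0.0 else 0.0


def conv2d(x, w, stride=1, padding=0):
    """Slide square kernel w over square map x (cross-correlation)."""
    n, k = len(x), len(w)
    size = n + 2 * padding
    # Zero-pad the input map on all four sides.
    padded = [[0.0] * size for _ in range(size)]
    for r in range(n):
        for c in range(n):
            padded[r + padding][c + padding] = x[r][c]
    out_n = (size - k) // stride + 1
    return [
        [
            sum(padded[r * stride + a][c * stride + b] * w[a][b]
                for a in range(k) for b in range(k))
            for c in range(out_n)
        ]
        for r in range(out_n)
    ]


def conv_layer_output(inputs, kernels, bias, f=relu, stride=1, padding=0):
    """One output map j: f(sum over i of x_i^{l-1} * w_ij^l, plus b_j^l)."""
    maps = [conv2d(x_i, w_ij, stride, padding) for x_i, w_ij in zip(inputs, kernels)]
    out_n = len(maps[0])
    # Sum the per-input-map responses position by position, add bias, activate.
    return [[f(sum(m[r][c] for m in maps) + bias) for c in range(out_n)]
            for r in range(out_n)]
```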

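The pooling (downsampling) operation is computed as

$$x_j^{l} = f\left(\beta_j^{l}\,\mathrm{down}\left(x_j^{l-1}\right) + b_j^{l}\right) \tag{2}$$

where $f(\cdot)$ is the activation function, $\mathrm{down}(\cdot)$ represents the downsampling function, $\beta_j^{l}$ is the constant applied when the feature map is downsampled, and $b_j^{l}$ represents the bias of the j-th neuron of the l-th layer.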
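The pooling operation described above (activation, downsampling function, sampling constant, and bias) can be sketched for a single feature map as follows. This assumes the downsampling function sums each non-overlapping 2 × 2 window, which the text does not specify; with the sampling constant (called `beta` here) set to 1/4, it reduces to ordinary 2 × 2 average pooling. The names `down` and `pooling_layer_output` are hypothetical.

```python
def down(x, size=2):
    """Sum each non-overlapping size x size block of square map x."""
    n = len(x) // size
    return [
        [sum(x[r * size + a][c * size + b] for a in range(size) for b in range(size))
         for c in range(n)]
        for r in range(n)
    ]


def pooling_layer_output(x, beta, bias, f=lambda v: v, size=2):
    """f(beta * down(x) + bias) for one feature map (identity f by default)."""
    return [[f(beta * v + bias) for v in row] for row in down(x, size)]


feature_map = [[4 * r + c + 1 for c in range(4)] for r in range(4)]  # values 1..16
pooled = pooling_layer_output(feature_map, beta=0.25, bias=0.0)  # 2 x 2 average pooling
```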

A convolutional neural network usually has fully connected layers as its last few layers. These layers combine the features produced by the preceding convolution and pooling layers and output a probability for each class, typically through a softmax function. In other words, the fully connected layers act as a classifier.
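For the final classification step, a softmax turns the scores of the last layer into class probabilities. The sketch below is a standard, numerically stable softmax; the five scores are made-up values, one per fruit class.

```python
import math


def softmax(scores):
    """Turn the n final-layer scores into class probabilities that sum to 1."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Five hypothetical scores, one per fruit class.
probs = softmax([2.0, 1.0, 0.1, -1.0, 0.5])
predicted_class = probs.index(max(probs))
```

Subtracting the maximum score leaves the probabilities unchanged but prevents `math.exp` from overflowing on large scores.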

Dropout [21] is used in CNNs to randomly hide some units so that they do not participate in training, which helps prevent overfitting. Without a Dropout layer, a convolutional layer is computed as

$$z_j^{l} = \sum_{i} x_i^{l-1} \ast w_{ij}^{l} + b_j^{l} \tag{3}$$

$$x_j^{l} = f\left(z_j^{l}\right) \tag{4}$$

The meanings of w, b, and f are the same as in Equation (1).

With a Dropout layer, each unit is kept or discarded according to a Bernoulli random variable, where p is the discard rate:

$$r_i^{l-1} \sim \mathrm{Bernoulli}(1 - p) \tag{5}$$

Each element of the vector r is independently set to 1 or 0 by a Bernoulli draw, so r is a random mask of 0s and 1s. During training, each neuron is therefore temporarily removed from the network with probability p; that is, its activation is set to zero (r = 0) with probability p.

Multiplying the inputs of the neurons element-wise by the mask from Equation (5) gives the input with the discard rate applied:

$$\tilde{x}^{l-1} = r^{l-1} \odot x^{l-1} \tag{6}$$

The output is then determined as

$$x_k^{l} = f\left(\sum_{i} \tilde{x}_i^{l-1} \ast w_{ik}^{l} + b_k^{l}\right) \tag{7}$$

Here, k indexes the output neurons.
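The Bernoulli mask, the masked input, and the resulting output can be sketched for a fully connected layer, where the convolution in the formulas reduces to an ordinary weighted sum. Consistent with this section, p is the probability of discarding a unit, so each mask entry is 1 with probability 1 − p. The test-time scaling by (1 − p) follows the original Dropout formulation; the function names are hypothetical and this is not the training code used in this work.

```python
import random


def relu(v):
    """Example activation f."""
    return v if v > 0.0 else 0.0


def bernoulli_mask(n, p, rng):
    """Each entry is 0 (unit dropped) with probability p, else 1."""
    return [1 if rng.random() >= p else 0 for _ in range(n)]


def dense_dropout_forward(x, weights, biases, p, rng, f=relu, training=True):
    """Fully connected layer with Dropout applied to its inputs.

    weights[k][i] connects input unit i to output neuron k.
    """
    if training:
        r = bernoulli_mask(len(x), p, rng)
        x_used = [r_i * x_i for r_i, x_i in zip(r, x)]  # masked (thinned) input
    else:
        # No units are dropped at test time; scale by the keep probability
        # so each output sees the same expected input as during training.
        x_used = [(1.0 - p) * x_i for x_i in x]
    return [f(sum(w_ki * x_i for w_ki, x_i in zip(w_k, x_used)) + b_k)
            for w_k, b_k in zip(weights, biases)]


rng = random.Random(0)  # fixed seed for reproducibility
out = dense_dropout_forward([1.0, 2.0, 3.0], [[0.5, -0.2, 0.1]], [0.0], p=0.15, rng=rng)
```

Many frameworks instead use "inverted" dropout, scaling the kept activations by 1/(1 − p) during training so that no rescaling is needed at inference; PyTorch's `torch.nn.Dropout` works this way.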

We first classified the 2D spectral data using AlexNet, but the recognition rate was low. We attribute this mainly to the following reasons:

- (1) Because the spectral data set collected in this experiment is small, the training set can hardly meet the needs of the deeper AlexNet for feature extraction, learning, and processing. Therefore, the traditional network architecture needed to be streamlined.

- (2) More convolution operations allow spectral features to be extracted more fully, but they also involve a large number of convolution kernels, which increases the computational burden. In addition, the long strides reduced classification accuracy, so the parameters of the traditional convolutional layers needed to be reduced.

- (3) An inappropriate pooling method reduces both the efficiency with which the network learns features and the accuracy of target classification. Therefore, the number of pooling layers in the traditional network needed to be reduced.

Therefore, we simplified the traditional AlexNet network architecture, decreased the parameters of the convolutional layers, reduced the number of pooling layers, and proposed a new CNN spectral classification model.

Figure 7 shows the framework of the proposed deep learning spectral classification model. In addition, we added a Dropout layer after each convolutional layer. In Figure 7, k denotes the size of the convolution or pooling kernel, s is the stride of the convolution or pooling operation, and p is the zero padding added to the edges of the feature map in the convolutional layer; typical padding values are 0, 1, or 2.
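With k, s, and p defined this way, the spatial size of a layer's output for an n × n input follows the standard relation ⌊(n + 2p − k)/s⌋ + 1. The sketch below applies it to the 32 × 32 input used here; the 11 × 11, stride-4 setting is AlexNet's first convolutional layer, while the other settings are illustrative and not the configuration shown in Figure 7.

```python
def output_size(n, k, s, p):
    """Spatial size after a convolution or pooling layer: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * p - k) // s + 1


print(output_size(32, 11, 4, 0))  # AlexNet-style first layer: 6
print(output_size(32, 3, 1, 1))   # hypothetical small-kernel layer: 32
print(output_size(32, 2, 2, 0))   # 2 x 2 pooling with stride 2: 16
```

The first line shows why a long stride is costly on small spectral images: a single AlexNet-style layer shrinks a 32 × 32 input to 6 × 6, discarding most of the spatial (here, spectral) resolution.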

Since the CNN model requires input images of uniform size, all spectral data images are normalized to 32 × 32. Because the spectral data are divided into n categories, the seventh layer outputs n × 1 × 1 neurons after the Dropout layer and the softmax activation; these represent the probabilities of the n categories.

3.2. Dropout Selection Principle

Dropout is a technique for training convolutional neural networks. In each training batch, it reduces overfitting by ignoring a fraction of the feature detectors (for example, half of them), which reduces interactions among hidden-layer nodes. In brief, during forward propagation, Dropout deactivates each neuron with a certain probability p.

In a deep learning model, if the model has too many parameters and too few training samples, the trained model is prone to overfitting, a problem often encountered when training neural networks. An overfitted model has a small loss and high prediction accuracy on the training data but a relatively large loss and low prediction accuracy on the test data, which makes it almost unusable. Dropout can effectively alleviate overfitting and acts, to some extent, as a regularizer. The value of the discard rate therefore plays an important role in a deep learning model: an appropriate value can reduce complex co-adaptation between neurons and help the model converge quickly.

During CNN training, convolution operations with different strides produce different numbers of output neurons, which reduces the dependence and correlation among them, whereas quantifying this correlation would increase their dependence. We therefore used the discard rate to narrow the range of correlation: we took successive discard-rate values within a narrow range and retrained and re-evaluated the network model for each value. This gives any two neurons in different states a higher correlation and improves the recognition accuracy of the model.
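The search over successive discard-rate values amounts to a one-dimensional sweep. The sketch below assumes a hypothetical `evaluate(rate)` callback that retrains the proposed CNN with that rate and returns a validation accuracy; the helper names and the evenly spaced grid are illustrative, not the exact procedure used in this work.

```python
def candidate_rates(lo, hi, steps):
    """Evenly spaced discard rates from lo to hi inclusive (steps >= 2)."""
    return [lo + (hi - lo) * i / (steps - 1) for i in range(steps)]


def sweep_dropout(rates, evaluate):
    """Evaluate each candidate rate and return (best_rate, all_results).

    evaluate(rate) is a hypothetical callback that retrains the model with the
    given discard rate and returns its validation accuracy.
    """
    results = {rate: evaluate(rate) for rate in rates}
    best_rate = max(results, key=results.get)
    return best_rate, results


# Toy stand-in for training: accuracy peaks at a discard rate of 0.15.
best, scores = sweep_dropout(candidate_rates(0.1, 0.2, 5), lambda r: 1.0 - abs(r - 0.15))
```

In practice each `evaluate` call is a full training run, so the interval is kept narrow and the grid coarse.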

When training the proposed CNN model, we visualized how the discard rate of the Dropout layers varied; Figure 8 presents this trend. Figure 8 shows that the discard rate is very unstable between 0.5 and 1, where the model is prone to over-fitting. In (0, 0.1) and (0.2, 0.5), the discard rate drops rapidly as the number of epochs increases, and the model is prone to under-fitting. In (0.1, 0.2), the discard rate gradually becomes stable and converges, indicating that values in this interval are more appropriate.