A Survey of Machine Learning Techniques for Video Quality Prediction from Quality of Delivery Metrics

Abstract

1. Introduction

1.1. Survey Methodology

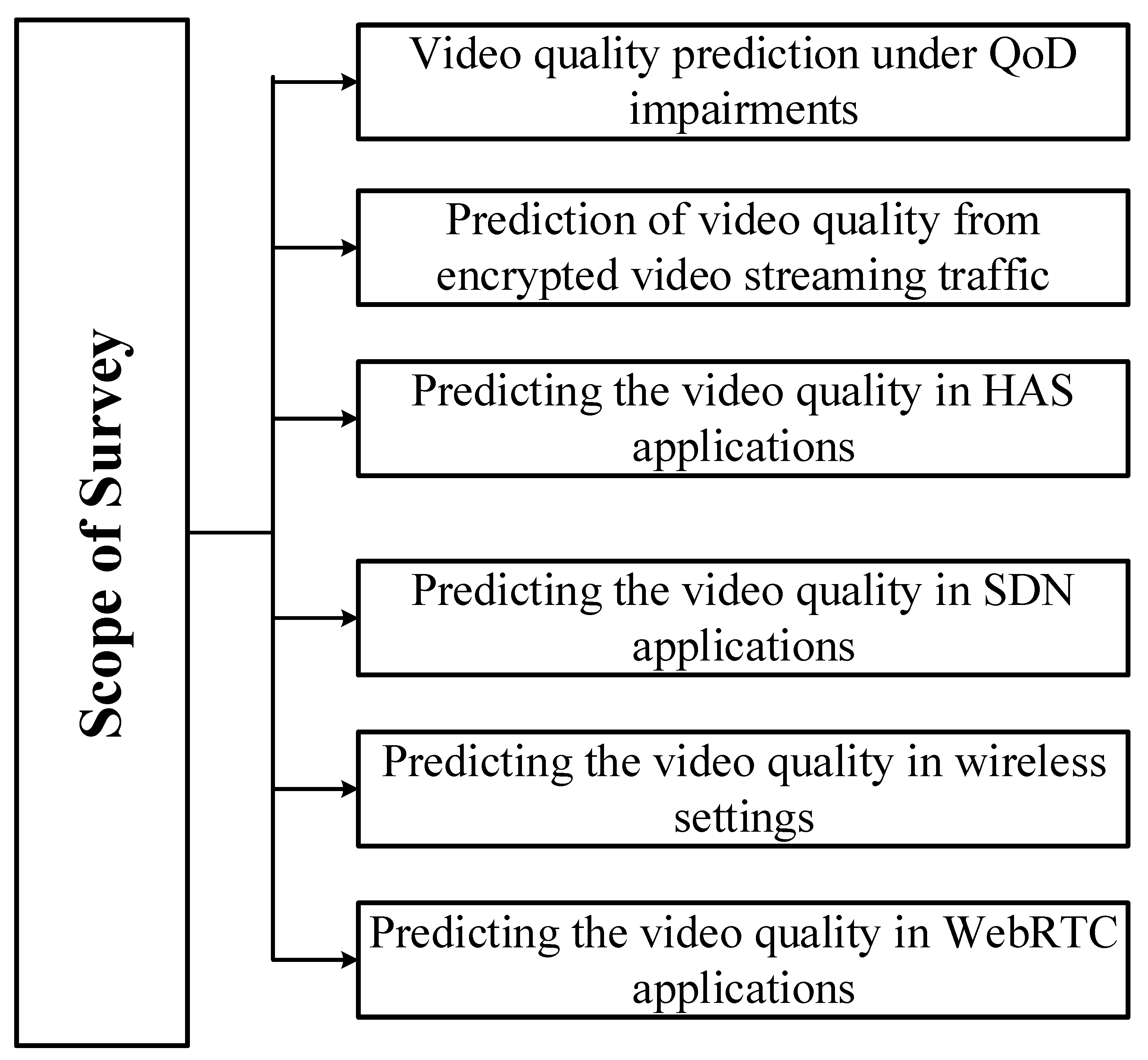

1.2. Scope

- An overview of ML applications in networking. This includes a review of related surveys, the learning paradigms used, and the applications considered.

- A background overview on video streaming. This includes the evolution of video streaming, modeling video quality, and common issues encountered in video streaming protocols.

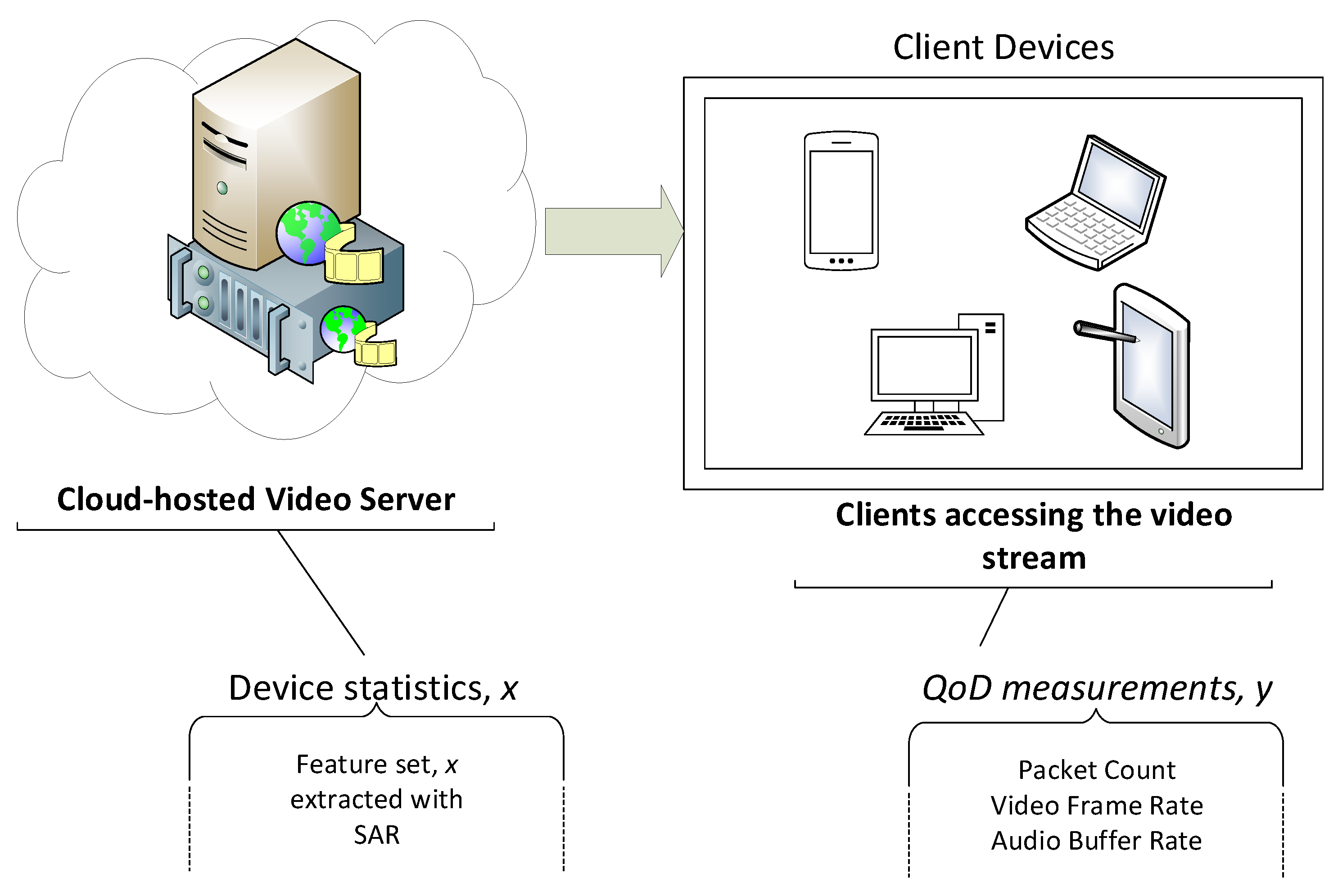

- A review of the applications of ML techniques for predicting QoD metrics with the aim of improving video quality. We also review works that leveraged ML algorithms for video quality prediction from QoD measurements. We discuss the ML techniques used in these studies and analyze their benefits and limitations. Figure 2 highlights the scope and areas considered in this survey.

- A discussion of the future challenges and opportunities in the use of ML techniques in video streaming applications.

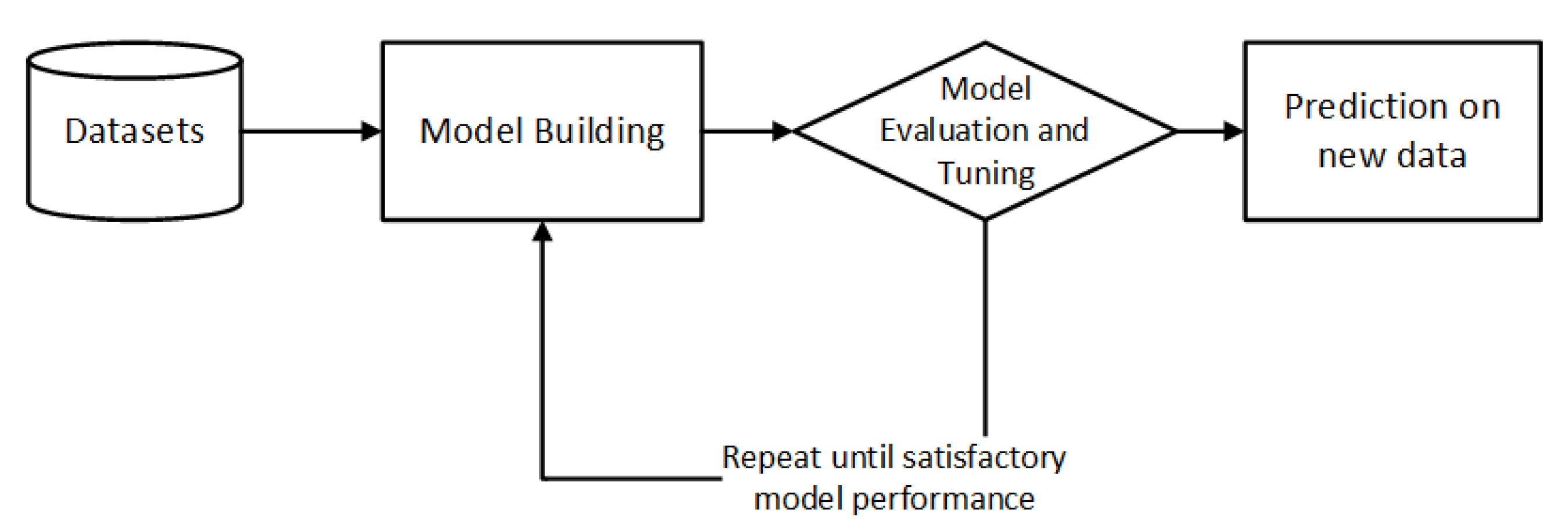

2. Overview of ML Applications in Networks

3. Background on Video Streaming

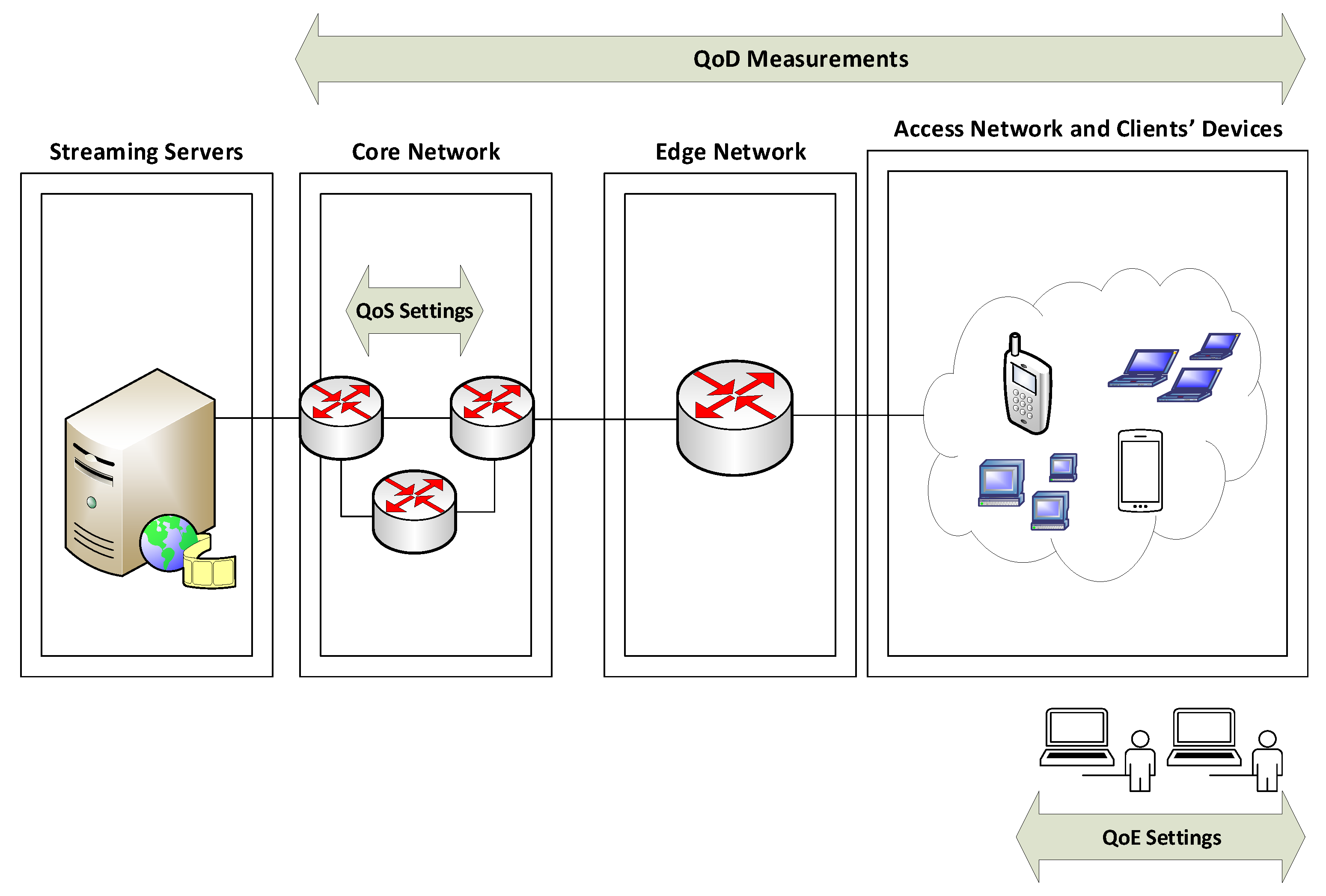

3.1. The Anatomy of a Video Stream

3.2. Video Streaming over IP Networks

- Use of a large playout buffer: A large receive (playout) buffer can help overcome temporary variations in network throughput, because the video player can keep decoding the pre-fetched data stored in the buffer while the throughput is low.

- Transcoding-based solutions: These solutions modify one or more parameters of the encoding applied to the raw video data in order to vary the resulting bitrate. Examples include varying the compression ratio, video resolution, or frame rate. However, transcoding is computationally intensive and requires complex hardware support.

- Scalable encoding solutions: These solutions operate on the encoded video data rather than on the raw data, so the video is adapted by exploiting the scalability features of the encoder. For example, the picture resolution or frame rate can be adapted by exploiting spatial and temporal scalability, respectively. However, these solutions require specialized servers to implement this additional processing.

- Stream switching solutions: This technique is the simplest to implement and is also used by content delivery networks (CDNs). The raw video data are preprocessed to produce multiple encoded streams at different bitrates, i.e., multiple versions of the same content. During transmission, a client-side adaptive algorithm then selects the most appropriate bitrate for the current network conditions. Stream switching does not need specialized servers and uses the least processing power. However, it requires more storage, as well as a finer granularity of encoded bitrates.
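
The client-side adaptive algorithm used in stream switching can be illustrated with a minimal sketch. It is not the algorithm of any particular player or standard: the bitrate ladder, the harmonic-mean throughput estimate over the last five segments, and the 0.8 safety factor are assumptions chosen only for clarity.

```python
from statistics import harmonic_mean


def select_bitrate(ladder_kbps, throughput_samples_kbps, safety_factor=0.8, window=5):
    """Choose the next segment's bitrate from a ladder of pre-encoded versions.

    Assumed rule (illustrative only): estimate throughput as the harmonic mean
    of the last `window` segment-download throughputs, discount it by
    `safety_factor`, and pick the highest bitrate that fits the budget.
    """
    if not throughput_samples_kbps:
        # No measurements yet (start-up): begin with the lowest bitrate.
        return min(ladder_kbps)
    # The harmonic mean is dominated by low samples, so a single slow
    # download pulls the estimate down quickly.
    estimate = harmonic_mean(throughput_samples_kbps[-window:])
    budget = safety_factor * estimate
    feasible = [b for b in ladder_kbps if b <= budget]
    # Fall back to the lowest bitrate if even that exceeds the budget.
    return max(feasible) if feasible else min(ladder_kbps)


# Hypothetical bitrate ladder (kbit/s) for one piece of content.
LADDER_KBPS = [235, 750, 1750, 4300, 5800]

print(select_bitrate(LADDER_KBPS, []))                             # start-up
print(select_bitrate(LADDER_KBPS, [6000] * 5))                     # fast link
print(select_bitrate(LADDER_KBPS, [5000, 5000, 5000, 5000, 500]))  # one slow segment
```

Comparing the last two calls shows why a conservative estimator is often chosen: one slow download is enough to move the selection down the ladder. Deployed players typically also take buffer occupancy into account, which this sketch ignores.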

3.3. Evolution of Video Streaming

3.3.1. Client–Server Video Streaming

3.3.2. Peer-to-Peer (P2P) Streaming

3.3.3. Hypertext Transfer Protocol (HTTP) Video Streaming

3.4. Common Challenges in Video Streaming

- HAS Multiplayer Competition and Stability: HAS clients should not switch bitrates frequently, as unstable bitrate selection can lead to video stalls that degrade the perceived video quality. In a multiplayer HAS environment, fairness is also crucial: clients competing for the available bandwidth should share network resources fairly, taking into account their viewers, content, and device characteristics.

- Consistent Streaming Quality: Studies on video quality analysis have shown that the relationship between video bitrate and perceptual quality is non-linear [108]. In general, it is therefore preferable to stream video at a consistent quality rather than at a consistent bitrate, since this results in fewer oscillations in perceptual quality [109].

- Frequent Switches: Depending on the network conditions and/or buffer status, the rate adaptation algorithm switches the video quality. While quality switching is a useful feature of HAS that helps reduce the frequency of stalls, frequent quality switching may frustrate users.

- Throughput: In general, a TCP throughput of roughly twice the video bitrate is needed for good streaming performance [110], which highlights a fundamental shortcoming of HAS applications.

- Media Session: A media session spans from the start of video playback until its end. It includes the effects of initial loading times, rebuffering events, and quality switches, if applicable. Consequently, any of these events makes the media session longer than the video/audiovisual playback time.

- Initial Delay: Initial delay is the duration between the video request by the client and the actual time when video playback commences. It is also referred to as initial buffering.

- Playback Quality Changes: This refers to changes in quality over the course of video playback, also known as rate or quality adaptation.

- Quality Switching Frequency: The rate at which the quality changes during media playback is referred to as quality switching frequency.

- Stalling: This occurs when video playback is interrupted. In cases where the network throughput is insufficient for the content to be downloaded faster than it is consumed, the buffer depletes, and playback is forced to pause until more data are downloaded and the buffer is refilled.

- Rebuffering: This refers to cases in which the data in the buffer are depleted, causing video playback to stall. Rebuffering events in a streaming session are normally indicated by a spinning wheel, a loading sign, or sometimes a frozen frame.

- Rebuffering Frequency or Ratio: The number of rebuffering incidents per unit of time is referred to as the rebuffering frequency.

- Rebuffering Duration: This is the total duration of all rebuffering incidents in a single media session.
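
Several of the QoD metrics defined above can be computed from a player's event log. The sketch below assumes a hypothetical, time-ordered log of (timestamp in seconds, event name) pairs using the event names 'request', 'play', 'stall_start', 'stall_end', 'switch', and 'end'; real players expose different event schemas. Following the definition of a media session above, the session is measured from the request to the end of playback, so it includes the initial delay.

```python
from dataclasses import dataclass


@dataclass
class QoDMetrics:
    initial_delay_s: float
    rebuffer_count: int
    rebuffer_duration_s: float
    rebuffer_frequency_per_min: float
    switch_frequency_per_min: float
    session_duration_s: float


def compute_qod_metrics(events):
    """Compute session-level QoD metrics from a time-ordered event log.

    `events` is a list of (timestamp_s, name) tuples using the hypothetical
    event names 'request', 'play', 'stall_start', 'stall_end', 'switch', 'end'.
    """
    first = {}
    for t, name in events:
        first.setdefault(name, t)  # timestamp of the first occurrence of each event
    request_t, play_t, end_t = first["request"], first["play"], first["end"]

    rebuffer_count, rebuffer_duration, switches = 0, 0.0, 0
    stall_start = None
    for t, name in events:
        if name == "stall_start":
            rebuffer_count += 1
            stall_start = t
        elif name == "stall_end" and stall_start is not None:
            rebuffer_duration += t - stall_start
            stall_start = None
        elif name == "switch":
            switches += 1
    if stall_start is not None:
        # The session ended while the player was still stalled.
        rebuffer_duration += end_t - stall_start

    session_s = end_t - request_t
    minutes = session_s / 60.0
    return QoDMetrics(
        initial_delay_s=play_t - request_t,
        rebuffer_count=rebuffer_count,
        rebuffer_duration_s=rebuffer_duration,
        rebuffer_frequency_per_min=rebuffer_count / minutes,
        switch_frequency_per_min=switches / minutes,
        session_duration_s=session_s,
    )


# Hypothetical two-minute session with two stalls and two quality switches.
log = [
    (0.0, "request"), (2.0, "play"),
    (30.0, "stall_start"), (33.0, "stall_end"),
    (45.0, "switch"),
    (70.0, "stall_start"), (71.5, "stall_end"),
    (90.0, "switch"), (120.0, "end"),
]
print(compute_qod_metrics(log))
```

In this sketch the initial buffering period is reported only as the initial delay and is not counted as a rebuffering event; whether to count it is a modeling choice that may differ between studies.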

4. Review of Video QoD Prediction via ML

4.1. Video Quality Prediction under QoD Impairments

4.2. Prediction of Video Quality from Encrypted Video Streaming Traffic

4.2.1. Real-Time Video Quality Prediction

4.2.2. Session-Level Video Quality Prediction

4.3. QoD Prediction for HAS and DASH

4.4. Software-Defined Networking (SDN)

4.5. Predicting the Video Quality in Wireless Settings

4.6. Predicting Video Quality in WebRTC

5. Discussion and Future Directions

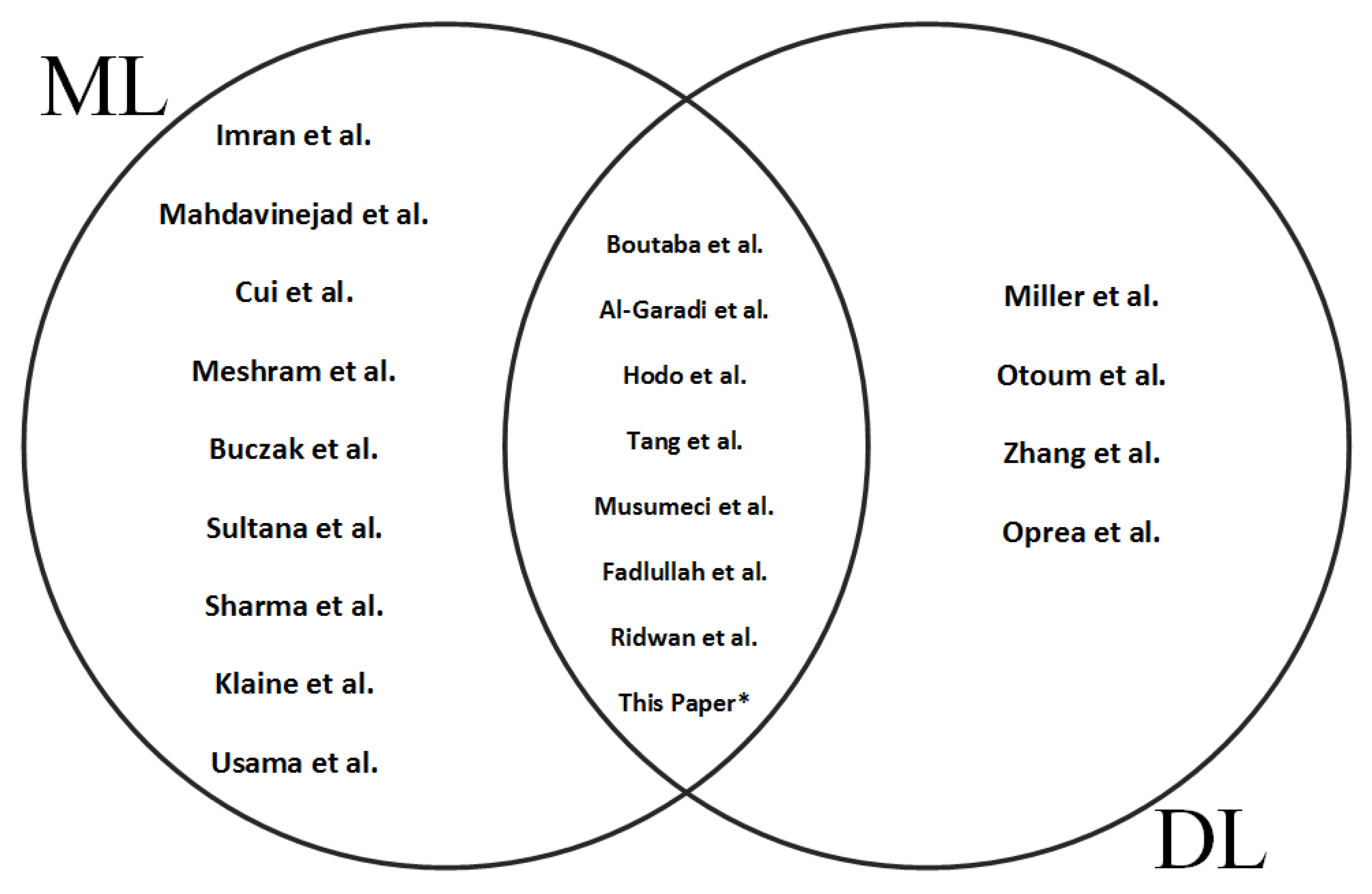

- (A) ML Dominance over DL: The applications of ML-based techniques for video quality prediction from QoD measurements show much promise, given that these ML models can make accurate predictions from video stream data. As we observed in this study, the vast majority of the studies surveyed used conventional ML rather than DL in video streaming networks. Most of these studies were based on offline models, for which the authors trained the ML algorithms on batches of data before applying them to decision making. Video streaming networks, however, often exhibit dynamic variations over time, e.g., due to network state changes or QoD degradation [189]. Network state information acquired via QoD metrics can be fed into ML models at the same pace as the service changes [190]. We envisage that, once an algorithm has been trained on past samples, various types of ML algorithms could be run in an online fashion [191], gradually incorporating new input data as the network makes them available. During the training of an offline model, the model's parameters and weights are updated to optimize a cost function [192] over the data it was trained on. In online learning, by contrast, the learned parameters depend on the samples currently seen and, possibly, on the current state of the model. As a result, the model continuously learns from new data and refines its parameters, which makes the learning framework adaptive. Integrated SDN and ML frameworks could be used in cases where the dynamics of online learning pose challenges, as demonstrated in [73,193]. These studies make a case for an adaptive learning framework that takes the real-time constraints of video streaming into account.

- (B) Adaptive Deep Learning for Improved Video Delivery: Advances in DL technology present new possibilities that could transform the video delivery system. Recent developments in big data, algorithm design, virtualization, and cloud computing have enabled DL to be used in a range of applications, such as computer vision and speech recognition. In the studies surveyed, the dominant DL architectures for video quality prediction were LSTM networks and CNNs. LSTM networks have been used in time-series problems [194] and offer the possibility of pooling inputs. They can also exploit temporal dependencies between impairment events in a sequence by means of memory [195]. Their capability to retain knowledge of previous states makes LSTMs well suited to most of the prediction tasks described in this survey. LSTM, a variant of the recurrent neural network (RNN), provides an effective solution to the problem of vanishing gradients during backpropagation of errors in an RNN. The vanishing gradient problem occurs when the error signal used to train the network shrinks as it is propagated backwards through the network (in an RNN, backwards through time). As a consequence, the layers closer to the input, or the earlier time steps, are barely trained. An LSTM mitigates this with a gating mechanism that controls what is stored in its memory cell: information is written, retained, or read by opening and closing the input, forget, and output gates. Previous studies show the feasibility of LSTM networks for real-time video quality prediction [196] and service response time prediction [197]. CNNs are very effective at image processing and computer vision [198,199] and have also proven useful for video streaming services [200]. The studies in [200,201] presented some directions in which DL could be applied to improve the quality of video delivery. The authors of [200] proposed the use of a CNN to enable parallel encoding of video for HAS.
In parallel encoding, frames of a compressed video serve as a reference to define future frames, which speeds up the encoding of multiple representations of the video data. Most state-of-the-art techniques use the highest-quality representation as the reference for encoding. The authors hypothesized that using the lowest-quality representation as the reference instead would improve the encoding process. The authors of [201] demonstrated how, by using DNNs and the increased computational power of client devices, their technique could leverage redundant information in video data to boost streaming quality when bandwidth was constrained. DNNs allow important features to be extracted from images; the authors proposed a content-aware DNN model that significantly enhances image resolution on the client device, thereby improving video quality. These studies highlight the potential of DL in the video delivery system. Given the glut of video data and the high computational requirements of DL-based models, it is imperative that these techniques support real-time, online, and adaptive analysis of video data. The performance of trained network models may degrade over time due to changes in the video data, in network conditions, or even in unknown features. In such cases, the inputs seen after deployment may differ significantly from those used to train the network. An adaptive DL model would enable on-the-fly learning, since such a model detects and reacts to changes in high-dimensional data streams after deployment. The studies in [202,203] proposed adaptive DL frameworks for dynamic image classification in IoT environments and for real-time image classification, respectively. However, enabling real-time DL poses some challenges: additional network layers may increase accuracy, but they require considerably more compute power and memory.
At present, conventional ML has the advantage of having been evaluated first for video QoD prediction; it is therefore already running in current deployments, and further deployments of ML-based solutions are underway. The slower uptake of DL solutions can be attributed to (1) the complexity of the models, which makes them extremely expensive to train; (2) difficulties in interpreting their results; and (3) the need to retrain and up-skill network engineers.

- (C) Computational Cost and Interpretability: Many of the studies surveyed used DT, RF, NB, and SVM. These four ML algorithms appear to be popular because they are simpler and easier to interpret than DL models. RF is becoming increasingly popular in batch settings because it offers good learning performance while requiring little input preparation and hyper-parameter tuning [204]. In the majority of cases, these models achieved the best prediction and classification accuracies. Interpretability has been emphasized alongside accuracy in the literature [205], and some authors note the importance of comparing criteria other than accuracy when two models exhibit the same accuracy [206,207]. They have attempted to establish a link between the interpretability and the usability of models, arguing that it is beneficial for ML and network practitioners to work with easy-to-understand ML models. This may be important in model selection, in feature engineering, and in trusting the prediction outcomes [208]. These algorithms also require shorter training times than DL, which makes them well suited to these prediction tasks. Another possible reason for the dominance of ML over DL is the significant computational requirements of DL in terms of power, memory, and other resources. In centralized networks without tight resource constraints, such as SDNs, DL can be implemented by leveraging the centralized controller [209]. In settings with limited storage, such as IoT, implementing DL can be challenging. The network provider therefore has to trade off computational requirements, accuracy, and interpretability. Future research should focus on identifying ways to transfer knowledge between tasks so that models can be adapted to changing network environments and contexts [210].

- (D) Self-Healing Networks and Failure Recovery: ML applications with SDN control offer some innovative possibilities for network failure recovery in video streaming services. Smart routing has been proposed to tackle some of the challenges posed by link failures [211]. In contrast to existing approaches, the proposed approach allows the SDN controller to reconfigure the network before a link is expected to fail. This approach not only reduces interruptions caused by link failures but can also significantly increase the availability of the video streaming service. In the studies we surveyed, popular QoD KPIs include rebuffering, quality switching, video resolution, and initial startup delay. High availability and smart routing mechanisms can help the network reduce or mitigate the video artifacts that may arise from rebuffering events, quality switches, and stalling. Integrating ML with SDN in this manner can provide the intelligence needed to ensure that the streaming service continues uninterrupted.
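
Item (A) contrasts offline (batch) training with online learning, in which the parameters are updated from each newly seen sample and the model's current state. The sketch below illustrates that idea under stated assumptions: a plain linear model trained by stochastic gradient descent (SGD) on squared error, with hypothetical QoD features, a synthetic noise-free "ground truth", and a learning rate chosen only for illustration. It is not the method of any of the cited studies.

```python
import random


class OnlineLinearRegressor:
    """Linear model trained one sample at a time with stochastic gradient descent."""

    def __init__(self, n_features, learning_rate=0.05):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, x):
        return self.bias + sum(w * xi for w, xi in zip(self.weights, x))

    def update(self, x, y):
        """Take one SGD step on the squared error 0.5 * (prediction - y) ** 2.

        The new parameters depend only on the current sample and the model's
        current state; no training set is stored.
        """
        error = self.predict(x) - y
        self.weights = [w - self.learning_rate * error * xi
                        for w, xi in zip(self.weights, x)]
        self.bias -= self.learning_rate * error
        return error


def synthetic_qod_stream(n_samples, seed=0):
    """Yield (features, quality) pairs from an assumed, noise-free linear relationship.

    Hypothetical features: a normalized rebuffering ratio and switch rate in [0, 1);
    hypothetical target: a quality score on a 1-5 scale.
    """
    rng = random.Random(seed)
    for _ in range(n_samples):
        x = [rng.random(), rng.random()]
        yield x, 4.5 - 2.0 * x[0] - 1.0 * x[1]


model = OnlineLinearRegressor(n_features=2)
for features, quality in synthetic_qod_stream(5000):
    model.update(features, quality)  # learn from each sample as it arrives
print([round(w, 2) for w in model.weights], round(model.bias, 2))
```

In a batch setting, the same model would instead be fitted once to a stored training set. In the online version, a change in the underlying relationship is tracked gradually as new samples arrive, at a rate governed by the learning rate.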

6. Conclusions

Funding

Conflicts of Interest

References

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 1–74.

- What is Deep Learning?|IBM. Available online: https://www.ibm.com/cloud/learn/deep-learning (accessed on 14 October 2021).

- Khan, A.I.; Al-Habsi, S. Machine Learning in Computer Vision. Procedia Comput. Sci. 2020, 167, 1444–1451.

- Zhiyan, H.; Jian, W. Speech Emotion Recognition Based on Deep Learning and Kernel Nonlinear PSVM. In Proceedings of the 2019 Chinese Control and Decision Conference (CCDC), Nanchang, China, 3–5 June 2019; pp. 1426–1430.

- Padmanabhan, J.; Johnson Premkumar, M.J. Machine Learning in Automatic Speech Recognition: A Survey. IETE Tech. Rev. 2015, 32, 240–251.

- Haseeb, K.; Ahmad, I.; Awan, I.I.; Lloret, J.; Bosch, I. A Machine Learning SDN-Enabled Big Data Model for IoMT Systems. Electronics 2021, 10, 2228.

- Hashima, S.; ElHalawany, B.M.; Hatano, K.; Wu, K.; Mohamed, E.M. Leveraging Machine-Learning for D2D Communications in 5G/Beyond 5G Networks. Electronics 2021, 10, 169.

- Najm, I.A.; Hamoud, A.K.; Lloret, J.; Bosch, I. Machine learning prediction approach to enhance congestion control in 5G IoT environment. Electronics 2019, 8, 607.

- Izima, O.; de Fréin, R.; Davis, M. Video Quality Prediction Under Time-Varying Loads. In Proceedings of the 2018 IEEE International Conference on Cloud Computing Technology and Science (CloudCom), Nicosia, Cyprus, 10–13 December 2018; pp. 129–132.

- Vega, M.T.; Perra, C.; Liotta, A. Resilience of Video Streaming Services to Network Impairments. IEEE Trans. Broadcast. 2018, 64, 220–234.

- Cisco Visual Networking Index: Forecast and Trends, 2017–2022, White Paper. Available online: https://tinyurl.com/29rtya2b (accessed on 1 October 2021).

- ITU-T Recommendation P.910: Subjective Video Quality Assessment Methods for Multimedia Applications. International Telecommunication Union, Telecommunication Standardization Sector, 1999. Available online: https://www.itu.int/rec/T-REC-P.910-200804-I (accessed on 1 October 2021).

- Chikkerur, S.; Sundaram, V.; Reisslein, M.; Karam, L.J. Objective Video Quality Assessment Methods: A classification, Review, and Performance Comparison. IEEE Trans. Broadcast. 2011, 57, 165–182.

- ITU-T Recommendation G.1011: Reference Guide to Quality of Experience Assessment Methodologies. 2013. Available online: https://www.itu.int/rec/T-REC-G.1011-201607-I/en (accessed on 1 October 2021).

- Bentaleb, A.; Taani, B.; Begen, A.C.; Timmerer, C.; Zimmermann, R. A Survey on Bitrate Adaptation Schemes for Streaming Media over HTTP. IEEE Commun. Surv. Tutor. 2018, 21, 562–585.

- Duanmu, Z.; Zeng, K.; Ma, K.; Rehman, A.; Wang, Z. A Quality-of-Experience Index for Streaming Video. IEEE J. Sel. Top. Signal Process. 2016, 11, 154–166.

- Huynh-Thu, Q.; Ghanbari, M. Scope of Validity of PSNR in Image/Video Quality Assessment. Electron. Lett. 2008, 44, 800–801.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image Quality Assessment: From Error Visibility to Structural Similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Wolf, S.; Pinson, M. Reference Algorithm for Computing Peak Signal to Noise Ratio (PSNR) of a Video Sequence with a Constant Delay. ITU-T Contribution COM9-C6-E. Geneva Switzerland, 2–6 February 2009. Available online: https://www.its.bldrdoc.gov/publications/details.aspx?pub=2571 (accessed on 4 October 2021).

- Varela, M.; Skorin-Kapov, L.; Ebrahimi, T. Quality of Service versus Quality of Experience. In Quality of Experience; Springer: Berlin/Heidelberg, Germany, 2014; pp. 85–96.

- E.800: Definitions of Terms Related to Quality of Service. Available online: https://www.itu.int/rec/T-REC-E.800-200809-I (accessed on 5 October 2021).

- ETSI TR 102 157—V1.1.1—Satellite Earth Stations and Systems (SES); Broadband Satellite Multimedia; IP Interworking over Satellite; Performance, Availability and Quality of Service. Available online: https://tinyurl.com/kpu7w3m (accessed on 6 October 2021).

- Minhas, T.N. Network Impact on Quality of Experience of Mobile Video. Ph.D. Thesis, Blekinge Institute of Technology, Karlshamn, Sweden, 2012.

- Fiedler, M.; Zepernick, H.J.; Lundberg, L.; Arlos, P.; Pettersson, M.I. QoE-based Cross-layer Design of Mobile Video Systems: Challenges and Concepts. In Proceedings of the 2009 IEEE-RIVF International Conference on Computing and Communication Technologies, Danang, Vietnam, 13–17 July 2009; pp. 1–4.

- Shinde, P.P.; Shah, S. A Review of Machine Learning and Deep Learning Applications. In Proceedings of the 2018 Fourth International Conference on Computing Communication Control and Automation (ICCUBEA), Pune, India, 16–18 August 2018; pp. 1–6.

- Abiodun, O.I.; Jantan, A.; Omolara, A.E.; Dada, K.V.; Umar, A.M.; Linus, O.U.; Arshad, H.; Kazaure, A.A.; Gana, U.; Kiru, M.U. Comprehensive Review of Artificial Neural Network Applications to Pattern Recognition. IEEE Access 2019, 7, 158820–158846.

- Brink, H.; Richards, J.; Fetherolf, M. Real-World Machine Learning; Manning Publications: Shelter Island, NY, USA, 2017.

- Weerts, H.J.; Mueller, A.C.; Vanschoren, J. Importance of Tuning Hyperparameters of Machine Learning Algorithms. arXiv 2020, arXiv:2007.07588.

- Wang, M.; Cui, Y.; Wang, X.; Xiao, S.; Jiang, J. Machine Learning for Networking: Workflow, Advances and Opportunities. IEEE Netw. 2017, 32, 92–99.

- Seber, G.A.; Lee, A.J. Linear Regression Analysis; John Wiley & Sons: Hoboken, NJ, USA, 2012; Volume 329.

- Quinlan, J.R. Decision Trees and Decision-making. IEEE Trans. Syst. Man Cybern. 1990, 20, 339–346.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Wang, B.; Zou, D.; Ding, R. Support Vector Regression based Video Quality Prediction. In Proceedings of the 2011 IEEE International Symposium on Multimedia, Dana Point, CA, USA, 5–7 December 2011; pp. 476–481.

- de Fréin, R. Effect of System Load on Video Service Metrics. In Proceedings of the 2015 26th Irish Signals and Systems Conference (ISSC), Carlow, Ireland, 24–25 June 2015; pp. 1–6.

- Xu, R.; Wunsch, D. Survey of Clustering Algorithms. IEEE Trans. Neural Net. 2005, 16, 645–678.

- Hartigan, J.A.; Wong, M.A. Algorithm AS 136: A K-means Clustering Algorithm. J. R. Stat. Soc. Ser. C 1979, 28, 100–108.

- Van Hulle, M.M. Self-Organizing Maps; 2012; pp. 585–622.

- Moon, T.K. The Expectation-Maximization Algorithm. IEEE Signal Process. Mag. 1996, 13, 47–60.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. Adv. Neural Inf. Process. Syst. 2014, 27.

- de Fréin, R. Source Separation Approach to Video Quality Prediction in Computer Networks. IEEE Commun. Lett. 2016, 20, 1333–1336.

- Kaelbling, L.P.; Littman, M.L.; Moore, A.W. Reinforcement Learning: A Survey. J. Artif. Intell. Res. 1996, 4, 237–285.

- Mammeri, Z. Reinforcement Learning based Routing in Networks: Review and Classification of Approaches. IEEE Access 2019, 7, 55916–55950.

- Watkins, C.J.; Dayan, P. Q-learning. Mach. Learn. 1992, 8, 279–292.

- Li, Y. Deep Reinforcement Learning: An Overview. arXiv 2017, arXiv:1701.07274.

- Imran; Ghaffar, Z.; Alshahrani, A.; Fayaz, M.; Alghamdi, A.M.; Gwak, J. A Topical Review on Machine Learning, Software Defined Networking, Internet of Things Applications: Research Limitations and Challenges. Electronics 2021, 10, 880.

- Al-Garadi, M.A.; Mohamed, A.; Al-Ali, A.K.; Du, X.; Ali, I.; Guizani, M. A Survey of Machine and Deep Learning Methods for Internet of Things (IoT) Security. IEEE Commun. Surv. Tutor. 2020, 22, 1646–1685.

- Mahdavinejad, M.S.; Rezvan, M.; Barekatain, M.; Adibi, P.; Barnaghi, P.; Sheth, A.P. Machine learning for Internet of Things Data Analysis: A Survey. Digit. Commun. Netw. 2018, 4, 161–175.

- Cui, L.; Yang, S.; Chen, F.; Ming, Z.; Lu, N.; Qin, J. A Survey on Application of Machine Learning for Internet of Things. Int. J. Mach. Learn. Cybern. 2018, 9, 1399–1417.

- Miller, D.J.; Xiang, Z.; Kesidis, G. Adversarial Learning Targeting Deep Neural Network Classification: A Comprehensive Review of Defenses Against Attacks. Proc. IEEE 2020, 108, 402–433.

- Tang, L.; Mahmoud, Q.H. A Survey of Machine Learning-Based Solutions for Phishing Website Detection. Mach. Learn. Knowl. Extr. 2021, 3, 672–694.

- Meshram, A.; Haas, C. Anomaly Detection in Industrial Networks using Machine Learning: A Roadmap. In Machine Learning for Cyber Physical Systems; Springer: Berlin/Heidelberg, Germany, 2017; pp. 65–72.

- Hodo, E.; Bellekens, X.; Hamilton, A.; Tachtatzis, C.; Atkinson, R. Shallow and Deep Networks Intrusion Detection System: A Taxonomy and Survey. arXiv 2017, arXiv:1701.02145.

- Sultana, N.; Chilamkurti, N.; Peng, W.; Alhadad, R. Survey on SDN based Network Intrusion Detection System Using Machine Learning Approaches. Peer-to-Peer Netw. Appl. 2019, 12, 493–501.

- Buczak, A.L.; Guven, E. A Survey of Data Mining and Machine Learning Methods for Cyber Security Intrusion Detection. IEEE Commun. Surv. Tutor. 2016, 18, 1153–1176.

- Otoum, S.; Kantarci, B.; Mouftah, H.T. On the Feasibility of Deep Learning in Sensor Network Intrusion Detection. IEEE Netw. Lett. 2019, 1, 68–71.

- Sharma, H.; Haque, A.; Blaabjerg, F. Machine Learning in Wireless Sensor Networks for Smart Cities: A Survey. Electronics 2021, 10, 1012.

- Zhang, C.; Patras, P.; Haddadi, H. Deep Learning in Mobile and Wireless Networking: A Survey. IEEE Commun. Surv. Tutor. 2019, 21, 2224–2287.

- Klaine, P.V.; Imran, M.A.; Onireti, O.; Souza, R.D. A Survey of Machine Learning Techniques Applied to Self-Organizing Cellular Networks. IEEE Commun. Surv. Tutor. 2017, 19, 2392–2431.

- Musumeci, F.; Rottondi, C.; Nag, A.; Macaluso, I.; Zibar, D.; Ruffini, M.; Tornatore, M. An Overview on Application of Machine Learning Techniques in Optical Networks. IEEE Commun. Surv. Tutor. 2019, 21, 1383–1408.

- Usama, M.; Qadir, J.; Raza, A.; Arif, H.; Yau, K.L.A.; Elkhatib, Y.; Hussain, A.; Al-Fuqaha, A. Unsupervised Machine Learning for Networking: Techniques, Applications and Research Challenges. IEEE Access 2019, 7, 65579–65615.

- Fadlullah, Z.M.; Tang, F.; Mao, B.; Kato, N.; Akashi, O.; Inoue, T.; Mizutani, K. State-of-the-Art Deep Learning: Evolving Machine Intelligence Toward Tomorrow’s Intelligent Network Traffic Control Systems. IEEE Commun. Surv. Tutor. 2017, 19, 2432–2455.

- Boutaba, R.; Salahuddin, M.A.; Limam, N.; Ayoubi, S.; Shahriar, N.; Estrada-Solano, F.; Caicedo, O.M. A Comprehensive Survey on Machine Learning for Networking: Evolution, Applications and Research Opportunities. J. Internet Serv. Appl. 2018, 9, 1–99.

- Izima, O.; de Fréin, R.; Davis, M. Evaluating Load Adjusted Learning Strategies for Client Service Levels Prediction from Cloud-hosted Video Servers. In Proceedings of the 26th AIAI Irish Conference on Artificial Intelligence and Cognitive Science, Dublin, Ireland, 6–7 December 2018; Volume 2259, pp. 198–209.

- Ridwan, M.A.; Radzi, N.A.M.; Abdullah, F.; Jalil, Y.E. Applications of Machine Learning in Networking: A Survey of Current Issues and Future Challenges. IEEE Access 2021, 9, 52523–52556.

- Oprea, S.; Martinez-Gonzalez, P.; Garcia-Garcia, A.; Castro-Vargas, J.A.; Orts-Escolano, S.; Rodríguez, J.G.; Argyros, A.A. A Review on Deep Learning Techniques for Video Prediction. IEEE Trans. Pattern Anal. Mach. Intell. 2020.

- Aroussi, S.; Mellouk, A. Survey on Machine Learning-based QoE-QoS Correlation Models. In Proceedings of the 2014 International Conference on Computing, Management and Telecommunications (ComManTel), Da Nang, Vietnam, 27–29 April 2014; pp. 200–204.

- Khokhar, M.J.; Ehlinger, T.; Barakat, C. From Network Traffic Measurements to QoE for Internet Video. In Proceedings of the 2019 IFIP Networking Conference (IFIP Networking), Warsaw, Poland, 22–22 May 2019; pp. 1–9.

- Cheng, Y.; Geng, J.; Wang, Y.; Li, J.; Li, D.; Wu, J. Bridging Machine Learning and Computer Network Research: A Survey. CCF Trans. Netw. 2019, 1, 1–5.

- Marquardt, D.W.; Snee, R.D. Ridge regression in practice. Am. Stat. 1975, 29, 3–20.

- Kukreja, S.L.; Löfberg, J.; Brenner, M.J. A Least Absolute Shrinkage and Selection Operator (LASSO) for Nonlinear System identification. IFAC Proc. Vol. 2006, 39, 814–819.

- Zou, H.; Hastie, T. Regularization and Variable Selection via the Elastic Net. J. R. Stat. Soc. Ser. B 2005, 67, 301–320.

- Izima, O.; de Fréin, R.; Davis, M. Predicting Quality of Delivery Metrics for Adaptive Video Codec Sessions. In Proceedings of the 2020 IEEE 9th International Conference on Cloud Networking (CloudNet), Piscataway, NJ, USA, 9–11 November 2020; pp. 1–7.

- Izima, O.; de Fréin, R.; Malik, A. Codec-Aware Video Delivery Over SDNs. In Proceedings of the 2021 IFIP/IEEE International Symposium on Integrated Network Management (IM), Bordeaux, France, 17–21 May 2021; pp. 732–733.

- Hartsell, T.; Yuen, S.C.Y. Video Streaming in Online Learning. AACE J. 2006, 14, 31–43.

- Li, X.; Darwich, M.; Salehi, M.A.; Bayoumi, M. A Survey on Cloud-based Video Streaming Services. In Advances in Computers; Elsevier: Amsterdam, The Netherlands, 2021; Volume 123, pp. 193–244.

- Lao, F.; Zhang, X.; Guo, Z. Parallelizing Video Transcoding Using Map-Reduce-based Cloud Computing. In Proceedings of the 2012 IEEE International Symposium on Circuits and Systems (ISCAS), Seoul, Korea, 20–23 May 2012; pp. 2905–2908.

- Varma, S. Internet Congestion Control, 1st ed.; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2015; pp. 173–203.

- Wu, D.; Hou, Y.T.; Zhu, W.; Zhang, Y.Q.; Peha, J.M. Streaming Video Over the Internet: Approaches and Directions. IEEE Trans. Circuits Syst. Video Technol. 2001, 11, 282–300.

- Pereira, R.; Pereira, E. Video Streaming: Overview and Challenges in the Internet of Things. In Pervasive Computing; Intelligent Data-Centric Systems, Academic Press: Boston, MA, USA, 2016; pp. 417–444.

- RFC 3550—RTP: A Transport Protocol for Real-Time Applications. Available online: https://tools.ietf.org/html/rfc3550 (accessed on 2 October 2021).

- RFC 2326—Real Time Streaming Protocol (RTSP). Available online: https://tools.ietf.org/html/rfc2326 (accessed on 2 October 2021).

- Handley, M.; Jacobson, V.; Perkins, C. SDP: Session Description Protocol. 1998. Available online: https://www.hjp.at/doc/rfc/rfc4566.html (accessed on 3 October 2021).

- Friedman, T.; Caceres, R.; Clark, A. RFC 3611—RTP Control Protocol Extended Reports (RTCP XR). 2003. Available online: https://tools.ietf.org/html/rfc3611 (accessed on 3 October 2021).

- RFC 7825—A Network Address Translator (NAT) Traversal Mechanism for Media Controlled by the Real-Time Streaming Protocol (RTSP). Available online: https://tools.ietf.org/html/rfc7825 (accessed on 3 October 2021).

- Kamvar, S.D.; Schlosser, M.T.; Garcia-Molina, H. The Eigentrust Algorithm for Reputation Management in P2P Networks. In Proceedings of the 12th International Conference on World Wide Web, Budapest, Hungary, 20–24 May 2003; pp. 640–651.

- Camarillo, G. RFC 5694: Peer-to-Peer (P2P) Architecture: Definition, Taxonomies, Examples, and Applicability. Network Working Group, IETF. 2009. Available online: https://datatracker.ietf.org/doc/rfc5694/ (accessed on 4 October 2021).

- Ramzan, N.; Park, H.; Izquierdo, E. Video Streaming over P2P Networks: Challenges and Opportunities. Signal Process. Image Commun. 2012, 27, 401–411.

- Chu, Y.H.; Rao, S.G.; Seshan, S.; Zhang, H. A Case for End System Multicast. IEEE J. Sel. Areas Commun. 2002, 20, 1456–1471.

- Gifford, D.; Johnson, K.L.; Kaashoek, M.F.; O’Toole, J.W., Jr. Overcast: Reliable Multicasting with An Overlay Network. In Proceedings of the USENIX Symposium on OSDI, San Diego, CA, USA, 23–25 October 2000.

- Magharei, N.; Rejaie, R. Prime: Peer-to-Peer Receiver-driven Mesh-based Streaming. IEEE/ACM Trans. Netw. 2009, 17, 1052–1065.

- Pai, V.; Kumar, K.; Tamilmani, K.; Sambamurthy, V.; Mohr, A.E. Chainsaw: Eliminating Trees from Overlay Multicast. In International Workshop on Peer-to-Peer Systems; Springer: Berlin/Heidelberg, Germany, 2005; pp. 127–140.

- Stutzbach, D.; Rejaie, R. Understanding Churn in Peer-to-Peer Networks. In Proceedings of the 6th ACM SIGCOMM Conference on Internet Measurement, New York, NY, USA, 25–27 October 2006; pp. 189–202.

- Liu, Y.; Guo, Y.; Liang, C. A Survey on Peer-to-Peer Video Streaming Systems. Peer-to-Peer Netw. Appl. 2008, 1, 18–28.

- Sani, Y.; Mauthe, A.; Edwards, C. Adaptive Bitrate Selection: A Survey. IEEE Commun. Surv. Tutor. 2017, 19, 2985–3014.

- Pantos, R. HTTP Live Streaming, 1 May 2009. Internet Engineering Task Force. Available online: https://datatracker.ietf.org/doc/html/draft-pantos-http-live-streaming (accessed on 5 October 2021).

- Robinson, D. Live Streaming Ecosystems. Adv. Content Deliv. Stream. Cloud Serv. 2014, 2014, 33–49.

- Sodagar, I. The MPEG-DASH Standard for Multimedia Streaming Over the Internet. IEEE Multimed. 2011, 18, 62–67.

- Kua, J.; Armitage, G.; Branch, P. A Survey of Rate Adaptation Techniques for Dynamic Adaptive Streaming Over HTTP. IEEE Commun. Surv. Tutor. 2017, 19, 1842–1866.

- Huang, T.Y.; Johari, R.; McKeown, N.; Trunnell, M.; Watson, M. A Buffer-based Approach To Rate Adaptation: Evidence from a Large Video Streaming Service. In Proceedings of the 2014 ACM Conference on SIGCOMM, Chicago, IL, USA, 17–22 August 2014; pp. 187–198.

- Spiteri, K.; Urgaonkar, R.; Sitaraman, R.K. BOLA: Near-optimal Bitrate Adaptation for Online Videos. IEEE/ACM Trans. Netw. 2020, 28, 1698–1711.

- Li, Z.; Zhu, X.; Gahm, J.; Pan, R.; Hu, H.; Begen, A.C.; Oran, D. Probe and Adapt: Rate Adaptation for HTTP Video Streaming at Scale. IEEE J. Sel. Areas Commun. 2014, 32, 719–733.

- Jiang, J.; Sekar, V.; Zhang, H. Improving Fairness, Efficiency, and Stability in HTTP-based Adaptive Video Streaming with Festive. In Proceedings of the 8th International Conference on Emerging Networking Experiments and Technologies, Nice, France, 10–13 December 2012; pp. 97–108.

- Yousef, H.; Feuvre, J.L.; Storelli, A. ABR Prediction Using Supervised Learning Algorithms. In Proceedings of the 2020 IEEE 22nd International Workshop on Multimedia Signal Processing (MMSP), Tampere, Finland, 20–23 September 2020; pp. 1–6.

- Yin, X.; Jindal, A.; Sekar, V.; Sinopoli, B. A Control-theoretic Approach for Dynamic Adaptive Video Streaming Over HTTP. In Proceedings of the 2015 ACM Conference on Special Interest Group on Data Communication, London, UK, 17–21 August 2015; pp. 325–338.

- Microsoft Silverlight Smooth Streaming. Available online: https://mssilverlight.azurewebsites.net/silverlight/smoothstreaming/ (accessed on 8 October 2021).

- Live video streaming online | Adobe HTTP Dynamic Streaming. Available online: https://business.adobe.com/ie/products/primetime/adobe-media-server/hds-dynamic-streaming.html (accessed on 8 October 2021).

- Apple HTTP Live Streaming (HLS), Apple. Available online: https://developer.apple.com/streaming/ (accessed on 8 October 2021).

- Cermak, G.; Pinson, M.; Wolf, S. The Relationship Among Video Quality, Screen Resolution, and Bit Rate. IEEE Trans. Broadcast. 2011, 57, 258–262.

- Li, Z.; Begen, A.C.; Gahm, J.; Shan, Y.; Osler, B.; Oran, D. Streaming Video Over HTTP with Consistent Quality. In Proceedings of the 5th ACM Multimedia Systems Conference, Singapore, 19–21 March 2014; pp. 248–258.

- Wang, B.; Kurose, J.; Shenoy, P.; Towsley, D. Multimedia streaming via TCP: An Analytic Performance Study. ACM Trans. Multimed. Comput. Commun. Appl. 2008, 4, 1–22.

- Yu, S.S.; Zhang, J.; Zhou, J.L.; Zhou, X. A Flow Control Scheme in Video Surveillance Applications. Comput. Eng. Sci. 2005, 9, 3–5.

- Frnda, J.; Voznak, M.; Sevcik, L. Impact of Packet Loss and Delay Variation on the Quality of Real-time Video Streaming. Telecommun. Syst. 2016, 62, 265–275.

- Seufert, M.; Egger, S.; Slanina, M.; Zinner, T.; Hoßfeld, T.; Tran-Gia, P. A Survey on Quality of Experience of HTTP Adaptive Streaming. IEEE Commun. Surv. Tutor. 2015, 17, 469–492.

- Vega, M.T.; Mocanu, D.C.; Liotta, A. Unsupervised Deep Learning for Real-Time Assessment of Video Streaming Services. Multimed. Tools Appl. 2017, 76, 22303–22327.

- Hinton, G.E. Training Products of Experts by Minimizing Contrastive Divergence. Neural Comput. 2002, 14, 1771–1800.

- de Fréin, R.; Olariu, C.; Song, Y.; Brennan, R.; McDonagh, P.; Hava, A.; Thorpe, C.; Murphy, J.; Murphy, L.; French, P. Integration of QoS Metrics, Rules and Semantic Uplift for Advanced IPTV Monitoring. J. Netw. Syst. Manag. 2015, 23, 673–708.

- Raca, D.; Zahran, A.H.; Sreenan, C.J.; Sinha, R.K.; Halepovic, E.; Jana, R.; Gopalakrishnan, V. On Leveraging Machine and Deep Learning for Throughput Prediction in Cellular Networks: Design, Performance, and Challenges. IEEE Commun. Mag. 2020, 58, 11–17.

- Bentaleb, A.; Timmerer, C.; Begen, A.C.; Zimmermann, R. Bandwidth Prediction in Low-Latency Chunked Streaming. In Proceedings of the 29th ACM Workshop on Network and Operating Systems Support for Digital Audio and Video, Amherst, MA, USA, 21 June 2019; pp. 7–13.

- Essaili, A.E.; Lohmar, T.; Ibrahim, M. Realization and Evaluation of an End-to-End Low Latency Live DASH System. In Proceedings of the 2018 IEEE International Symposium on Broadband Multimedia Systems and Broadcasting (BMSB), Valencia, Spain, 6–8 June 2018; pp. 1–5.

- Engel, Y.; Mannor, S.; Meir, R. The Kernel Recursive Least-squares Algorithm. IEEE Trans. Signal Process. 2004, 52, 2275–2285.

- Video Quality of Service (QoS) Tutorial—Cisco. Available online: https://tinyurl.com/vw92pypc (accessed on 10 October 2021).

- Mao, H.; Netravali, R.; Alizadeh, M. Neural Adaptive Video Streaming with Pensieve. In Proceedings of the Conference of the ACM Special Interest Group on Data Communication, Los Angeles, CA, USA, 21–25 August 2017; pp. 197–210.

- Mao, H.; Chen, S.; Dimmery, D.; Singh, S.; Blaisdell, D.; Tian, Y.; Alizadeh, M.; Bakshy, E. Real-world Video Adaptation with Reinforcement Learning. arXiv 2020, arXiv:2008.12858.

- Zhao, Y.; Shen, Q.W.; Li, W.; Xu, T.; Niu, W.H.; Xu, S.R. Latency Aware Adaptive Video Streaming Using Ensemble Deep Reinforcement Learning. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 2647–2651.

- Hosmer, D.W., Jr.; Lemeshow, S.; Sturdivant, R.X. Applied Logistic Regression; John Wiley & Sons: Hoboken, NJ, USA, 2013; Volume 398.

- Hastie, T.; Rosset, S.; Zhu, J.; Zou, H. Multi-class AdaBoost. Stat. Its Interface 2009, 2, 349–360.

- Friedman, J.H. Stochastic Gradient Boosting. Comput. Stat. Data Anal. 2002, 38, 367–378.

- Rish, I. An Empirical Study of the Naive Bayes Classifier. In Proceedings of the IJCAI 2001 Workshop on Empirical Methods in Artificial Intelligence, Seattle, WA, USA, 4–10 August 2001; Volume 3, pp. 41–46.

- Kataria, A.; Singh, M. A Review of Data Classification Using K-nearest Neighbour Algorithm. Int. J. Emerg. Technol. Adv. Eng. 2013, 3, 354–360.

- Sani, Y.; Raca, D.; Quinlan, J.J.; Sreenan, C.J. SMASH: A Supervised Machine Learning Approach to Adaptive Video Streaming over HTTP. In Proceedings of the 2020 Twelfth International Conference on Quality of Multimedia Experience (QoMEX), Athlone, Ireland, 26–28 May 2020; pp. 1–6.

- Srivastava, S.; Gupta, M.R.; Frigyik, B.A. Bayesian Quadratic Discriminant Analysis. J. Mach. Learn. Res. 2007, 8, 1277–1305.

- Piramuthu, S.; Shaw, M.J.; Gentry, J.A. A Classification Approach Using Multi-layered Neural Networks. Decis. Support Syst. 1994, 11, 509–525.

- Mok, R.K.; Chan, E.W.; Chang, R.K. Measuring the Quality of Experience of HTTP Video Streaming. In Proceedings of the 12th IFIP/IEEE International Symposium on Integrated Network Management (IM 2011) and Workshops, Dublin, Ireland, 23–27 May 2011; pp. 485–492.

- Feamster, N.; Rexford, J. Why (and how) Networks Should Run Themselves. arXiv 2017, arXiv:1710.11583.

- Naylor, D.; Finamore, A.; Leontiadis, I.; Grunenberger, Y.; Mellia, M.; Munafò, M.; Papagiannaki, K.; Steenkiste, P. The Cost of the “S” in HTTPS. In Proceedings of the 10th ACM International on Conference on Emerging Networking Experiments and Technologies, Sydney, NSW, Australia, 2–5 December 2014; pp. 133–140.

- Jarmoc, J. SSL/TLS Interception Proxies and Transitive Trust. Black Hat Europe, March 2012. Available online: https://www.semanticscholar.org/paper/SSL%2FTLS-Interception-Proxies-and-Transitive-Trust-Jarmoc/bd1e35fc81e8d3d1751f1d7443fef2dfdbdc2394#citing-papers (accessed on 5 October 2021).

- Sherry, J.; Lan, C.; Popa, R.A.; Ratnasamy, S. Blindbox: Deep Packet Inspection Over Encrypted Traffic. In Proceedings of the 2015 ACM Conference on Special Interest Group on Data Communication, London, UK, 17–21 August 2015; pp. 213–226.

- Orsolic, I.; Pevec, D.; Suznjevic, M.; Skorin-Kapov, L. YouTube QoE Estimation Based on the Analysis of Encrypted Network Traffic Using Machine Learning. In Proceedings of the 2016 IEEE Globecom Workshops (GC Wkshps), Washington, DC, USA, 4–8 December 2016; pp. 1–6.

- Buddhinath, G.; Derry, D. A Simple Enhancement To One Rule Classification; Department of Computer Science and Software Engineering, University of Melbourne: Melbourne, Australia, 2006; p. 40.

- Platt, J. Sequential Minimal Optimization: A Fast Algorithm for Training Support Vector Machines. 1998. Available online: https://tinyurl.com/9xt2zkaf (accessed on 11 October 2021).

- Mathuria, M. Decision Tree Analysis on J48 Algorithm for Data Mining. Int. J. Adv. Res. Comput. Sci. Softw. Eng. 2013, 3, 1114–1119.

- Dimopoulos, G.; Leontiadis, I.; Barlet-Ros, P.; Papagiannaki, K. Measuring Video QoE from Encrypted Traffic. In Proceedings of the 2016 Internet Measurement Conference, Santa Monica, CA, USA, 14–16 November 2016; pp. 513–526.

- Casas, P.; Seufert, M.; Wamser, F.; Gardlo, B.; Sackl, A.; Schatz, R. Next to You: Monitoring Quality of Experience in Cellular Networks from the End-devices. IEEE Trans. Netw. Serv. Manag. 2016, 13, 181–196.

- Wassermann, S.; Wehner, N.; Casas, P. Machine Learning Models for YouTube QoE and User Engagement Prediction in Smartphones. SIGMETRICS 2019, 46, 155–158.

- Pal, K.; Patel, B.V. Data Classification with K-fold Cross Validation and Holdout Accuracy Estimation Methods with 5 Different Machine Learning Techniques. In Proceedings of the 2020 Fourth International Conference on Computing Methodologies and Communication (ICCMC), Erode, India, 11–13 March 2020; pp. 83–87.

- Didona, D.; Romano, P. On Bootstrapping Machine Learning Performance Predictors via Analytical Models. arXiv 2014, arXiv:1410.5102.

- Wassermann, S.; Seufert, M.; Casas, P.; Gang, L.; Li, K. I See What You See: Real Time Prediction of Video Quality from Encrypted Streaming Traffic. In Proceedings of the 4th Internet-QoE Workshop on QoE-based Analysis and Management of Data Communication Networks, Los Cabos, Mexico, 21 October 2019; pp. 1–6.

- Saeed, U.; Jan, S.U.; Lee, Y.D.; Koo, I. Fault Diagnosis based on Extremely Randomized Trees in Wireless Sensor Networks. Reliab. Eng. Syst. Saf. 2021, 205, 107284.

- Breiman, L. Bagging Predictors. Mach. Learn. 1996, 24, 123–140.

- Chakravarthy, A.D.; Bonthu, S.; Chen, Z.; Zhu, Q. Predictive Models with Resampling: A Comparative Study of Machine Learning Algorithms and their Performances on Handling Imbalanced Datasets. In Proceedings of the 2019 18th IEEE International Conference on Machine Learning and Applications (ICMLA), Boca Raton, FL, USA, 16–19 December 2019; pp. 1492–1495.

- Wassermann, S.; Seufert, M.; Casas, P.; Gang, L.; Li, K. Let Me Decrypt Your Beauty: Real-time Prediction of Video Resolution and Bitrate for Encrypted Video Streaming. In Proceedings of the 2019 Network Traffic Measurement and Analysis Conference (TMA), Paris, France, 19–21 June 2019; pp. 199–200.

- Gutterman, C.; Guo, K.; Arora, S.; Wang, X.; Wu, L.; Katz-Bassett, E.; Zussman, G. Requet: Real-Time QoE Detection for Encrypted YouTube Traffic. In Proceedings of the 10th ACM Multimedia Systems Conference, Amherst, MA, USA, 18–21 June 2019; pp. 48–59.

- Gutterman, C.; Guo, K.; Arora, S.; Gilliland, T.; Wang, X.; Wu, L.; Katz-Bassett, E.; Zussman, G. Requet: Real-Time QoE Metric Detection for Encrypted YouTube Traffic. ACM Trans. Multimed. Comput. Commun. Appl. 2020, 16, 1–28.

- Seufert, M.; Casas, P.; Wehner, N.; Gang, L.; Li, K. Stream-based Machine Learning for Real-time QoE analysis of Encrypted Video Streaming Traffic. In Proceedings of the 2019 22nd Conference on Innovation in Clouds, Internet and Networks and Workshops (ICIN), Paris, France, 19–21 February 2019; pp. 76–81.

- Seufert, M.; Casas, P.; Wehner, N.; Gang, L.; Li, K. Features That Matter: Feature Selection for On-line Stalling Prediction in Encrypted Video Streaming. In Proceedings of the IEEE INFOCOM 2019-IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), Paris, France, 29 April–2 May 2019; pp. 688–695.

- Krishnamoorthi, V.; Carlsson, N.; Halepovic, E.; Petajan, E. BUFFEST: Predicting Buffer Conditions and Real-Time Requirements of HTTP(S) Adaptive Streaming Clients. In Proceedings of the 8th ACM on Multimedia Systems Conference, Taipei, Taiwan, 20–23 June 2017; pp. 76–87.

- Mazhar, M.H.; Shafiq, Z. Real-time Video Quality of Experience Monitoring for HTTPS and QUIC. In Proceedings of the IEEE INFOCOM 2018-IEEE Conference on Computer Communications, Honolulu, HI, USA, 15–19 April 2018; pp. 1331–1339.

- Bronzino, F.; Schmitt, P.; Ayoubi, S.; Martins, G.; Teixeira, R.; Feamster, N. Inferring Streaming Video Quality from Encrypted Traffic: Practical Models and Deployment Experience. Proc. ACM Meas. Anal. Comput. Syst. 2019, 3, 1–25.

- Pandey, S.; Choi, M.J.; Yoo, J.H.; Hong, J.W.K. Streaming Pattern Based Feature Extraction for Training Neural Network Classifier to Predict Quality of VOD services. In Proceedings of the 2021 IFIP/IEEE International Symposium on Integrated Network Management (IM), Bordeaux, France, 17–21 May 2021; pp. 551–557.

- Schwarzmann, S.; Cassales Marquezan, C.; Bosk, M.; Liu, H.; Trivisonno, R.; Zinner, T. Estimating Video Streaming QoE in the 5G Architecture Using Machine Learning. In Proceedings of the 4th Internet-QoE Workshop on QoE-Based Analysis and Management of Data Communication Networks, Los Angeles, CA, USA, 21 August 2019; pp. 7–12.

- Baraković, S.; Skorin-Kapov, L. Survey and Challenges of QoE Management Issues in Wireless Networks. J. Comput. Netw. Commun. 2013, 2013.

- Bartolec, I.; Orsolic, I.; Skorin-Kapov, L. In-network YouTube Performance Estimation in Light of End User Playback-Related Interactions. In Proceedings of the 2019 Eleventh International Conference on Quality of Multimedia Experience (QoMEX), Berlin, Germany, 5–7 June 2019; pp. 1–3.

- Orsolic, I.; Suznjevic, M.; Skorin-Kapov, L. YouTube QoE Estimation From Encrypted Traffic: Comparison of Test Methodologies and Machine Learning Based Models. In Proceedings of the 2018 Tenth International Conference on Quality of Multimedia Experience (QoMEX), Sardinia, Italy, 29–31 May 2018; pp. 1–6.

- Oršolić, I.; Rebernjak, P.; Sužnjević, M.; Skorin-Kapov, L. In-network QoE and KPI Monitoring of Mobile YouTube Traffic: Insights for Encrypted iOS Flows. In Proceedings of the 2018 14th International Conference on Network and Service Management (CNSM), Rome, Italy, 5–9 November 2018; pp. 233–239.

- Sun, Y.; Yin, X.; Jiang, J.; Sekar, V.; Lin, F.; Wang, N.; Liu, T.; Sinopoli, B. CS2P: Improving Video Bitrate Selection and Adaptation with Data-Driven Throughput Prediction. In Proceedings of the 2016 ACM SIGCOMM Conference, Florianopolis, Brazil, 22–26 August 2016; pp. 272–285.

- Claeys, M.; Latré, S.; Famaey, J.; De Turck, F. Design and Evaluation of a Self-Learning HTTP Adaptive Video Streaming Client. IEEE Commun. Lett. 2014, 18, 716–719.

- de Fréin, R. Take off a load: Load-Adjusted Video Quality Prediction and Measurement. In Proceedings of the 2015 IEEE International Conference on Computer and Information Technology; Ubiquitous Computing and Communications; Dependable, Autonomic and Secure Computing; Pervasive Intelligence and Computing, Liverpool, UK, 26 October 2015; pp. 1886–1894.

- Rossi, L.; Chakareski, J.; Frossard, P.; Colonnese, S. A Poisson Hidden Markov Model for Multiview Video Traffic. IEEE/ACM Trans. Netw. 2014, 23, 547–558.

- Bampis, C.G.; Bovik, A.C. Feature-based Prediction of Streaming Video QoE: Distortions, Stalling and Memory. Signal Process. Image Commun. 2018, 68, 218–228.

- Tran, H.T.T.; Nguyen, D.V.; Ngoc, N.P.; Thang, T.C. Overall Quality Prediction for HTTP Adaptive Streaming Using LSTM Network. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 3212–3226.

- Wang, Y.; Jiang, L.; Yang, M.H.; Li, L.J.; Long, M.; Fei-Fei, L. Eidetic 3D LSTM: A Model for Video Prediction and Beyond. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Dinaki, H.E.; Shirmohammadi, S.; Janulewicz, E.; Côté, D. Forecasting Video QoE With Deep Learning From Multivariate Time-Series. IEEE Open J. Signal Process. 2021, 2, 512–521.

- Kirkpatrick, K. Software-Defined Networking. Commun. ACM 2013, 56, 16–19.

- Xie, J.; Yu, F.R.; Huang, T.; Xie, R.; Liu, J.; Wang, C.; Liu, Y. A Survey of Machine Learning Techniques Applied to Software Defined Networking (SDN): Research Issues and Challenges. IEEE Commun. Surv. Tutor. 2019, 21, 393–430.

- Carner, J.; Mestres, A.; Alarcón, E.; Cabellos, A. Machine Learning-based Network Modeling: An Artificial Neural Network Model vs. a Theoretical Inspired Model. In Proceedings of the 2017 Ninth International Conference on Ubiquitous and Future Networks (ICUFN), Milan, Italy, 4–7 July 2017; pp. 522–524.

- Jain, S.; Khandelwal, M.; Katkar, A.; Nygate, J. Applying Big Data Technologies to Manage QoS in an SDN. In Proceedings of the 2016 12th International Conference on Network and Service Management (CNSM), Montreal, QC, Canada, 31 October–4 November 2016; pp. 302–306.

- Malik, A.; de Fréin, R.; Aziz, B. Rapid Restoration Techniques for Software-Defined Networks. Appl. Sci. 2020, 10, 3411.

- Pasquini, R.; Stadler, R. Learning End-to-End Application QoS from Openflow Switch Statistics. In Proceedings of the 2017 IEEE Conference on Network Softwarization (NetSoft), Bologna, Italy, 3–7 July 2017; pp. 1–9.

- Ben Letaifa, A. Adaptive QoE Monitoring Architecture in SDN Networks: Video Streaming Services Case. In Proceedings of the 2017 13th International Wireless Communications and Mobile Computing Conference (IWCMC), Valencia, Spain, 26–30 June 2017; pp. 1383–1388.

- Petrangeli, S.; Wu, T.; Wauters, T.; Huysegems, R.; Bostoen, T.; De Turck, F. A Machine Learning-Based Framework for Preventing Video Freezes in HTTP Adaptive Streaming. J. Netw. Comput. Appl. 2017, 94, 78–92.

- Seiffert, C.; Khoshgoftaar, T.M.; Van Hulse, J.; Napolitano, A. RUSBoost: A Hybrid Approach to Alleviating Class Imbalance. IEEE Trans. Syst. Man Cybern. Part A Syst. Humans 2009, 40, 185–197.

- Da Hora, D.; Van Doorselaer, K.; Van Oost, K.; Teixeira, R. Predicting the Effect of Home Wi-Fi Quality on QoE. In Proceedings of the IEEE INFOCOM 2018-IEEE Conference on Computer Communications, Honolulu, HI, USA, 15–19 April 2018; pp. 944–952.

- Wamser, F.; Casas, P.; Seufert, M.; Moldovan, C.; Tran-Gia, P.; Hossfeld, T. Modeling the YouTube stack: From Packets to Quality of Experience. Comput. Netw. 2016, 109, 211–224.

- Zinner, T.; Hohlfeld, O.; Abboud, O.; Hoßfeld, T. Impact of Frame Rate and Resolution on Objective QoE Metrics. In Proceedings of the 2010 Second International Workshop on Quality of Multimedia Experience (QoMEX), Trondheim, Norway, 21–23 June 2010; pp. 29–34.

- Ligata, A.; Perenda, E.; Gacanin, H. Quality of Experience Inference for Video Services in Home WiFi Networks. IEEE Commun. Mag. 2018, 56, 187–193.

- Bhattacharyya, R.; Xia, B.; Rengarajan, D.; Shakkottai, S.; Kalathil, D. Flowbazaar: A Market-Mediated Software Defined Communications Ecosystem at the Wireless Edge. arXiv 2018, arXiv:1801.00825.

- Ammar, D.; De Moor, K.; Skorin-Kapov, L.; Fiedler, M.; Heegaard, P.E. Exploring the Usefulness of Machine Learning in the Context of WebRTC Performance Estimation. In Proceedings of the 2019 IEEE 44th Conference on Local Computer Networks (LCN), Osnabrück, Germany, 14–17 October 2019; pp. 406–413.

- Yan, S.; Guo, Y.; Chen, Y.; Xie, F. Predicting Freezing of WebRTC Videos in WiFi Networks. In International Conference on Ad Hoc Networks; Springer: Berlin/Heidelberg, Germany, 2018; pp. 292–301.

- Reiter, U.; Brunnström, K.; De Moor, K.; Larabi, M.C.; Pereira, M.; Pinheiro, A.; You, J.; Zgank, A. Factors Influencing Quality of Experience. In QoE; Springer: Berlin/Heidelberg, Germany, 2014; pp. 55–72.

- de Fréin, R. State Acquisition in Computer Networks. In Proceedings of the 2018 IFIP Networking Conference (IFIP Networking) and Workshops, Zurich, Switzerland, 14–16 May 2018; pp. 1–9.

- Suto, J.; Oniga, S.; Lung, C.; Orha, I. Comparison of Offline and Real-time Human Activity Recognition Results Using Machine Learning Techniques. Neural Comput. Appl. 2020, 32, 15673–15686.

- Ayodele, T.O. Types of Machine Learning Algorithms. New Adv. Mach. Learn. 2010, 3, 19–48.

- Malik, A.; de Fréin, R. A Proactive-Restoration Technique for SDNs. In Proceedings of the 2020 IEEE Symposium on Computers and Communications (ISCC), Rennes, France, 7–10 July 2020.

- Siami-Namini, S.; Tavakoli, N.; Namin, A.S. The Performance of LSTM and BiLSTM in Forecasting Time Series. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019; pp. 3285–3292.

- Längkvist, M.; Karlsson, L.; Loutfi, A. A Review of Unsupervised Feature Learning and Deep Learning for Time-series Modeling. Pattern Recognit. Lett. 2014, 42, 11–24.

- Eswara, N.; Ashique, S.; Panchbhai, A.; Chakraborty, S.; Sethuram, H.P.; Kuchi, K.; Kumar, A.; Channappayya, S.S. Streaming Video QoE Modeling and Prediction: A Long Short-Term Memory Approach. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 661–673.

- White, G.; Palade, A.; Clarke, S. Forecasting QoS Attributes Using LSTM Networks. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–8.

- Zhang, Z.; Cui, P.; Zhu, W. Deep Learning on Graphs: A Survey. IEEE Trans. Knowl. Data Eng. 2020, 1, 5555.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Çetinkaya, E.; Amirpour, H.; Timmerer, C.; Ghanbari, M. FaME-ML: Fast Multirate Encoding for HTTP Adaptive Streaming Using Machine Learning. In Proceedings of the 2020 IEEE International Conference on Visual Communications and Image Processing (VCIP), Munich, Germany, 5–8 December 2020; pp. 87–90.

- Yeo, H.; Do, S.; Han, D. How Will Deep Learning Change Internet Video Delivery? In Proceedings of the 16th ACM Workshop on Hot Topics in Networks, Palo Alto, CA, USA, 30 November–1 December 2017; pp. 57–64.

- Jameel, S.M.; Hashmani, M.A.; Rehman, M.; Budiman, A. An Adaptive Deep Learning Framework for Dynamic Image Classification in the Internet of Things Environment. Sensors 2020, 20, 5811.

- Chai, F.; Kang, K.D. Adaptive Deep Learning for Soft Real-Time Image Classification. Technologies 2021, 9, 20.

- Gomes, H.M.; Bifet, A.; Read, J.; Barddal, J.P.; Enembreck, F.; Pfahringer, B.; Holmes, G.; Abdessalem, T. Adaptive Random Forests for Evolving Data Stream Classification. Mach. Learn. 2017, 106, 1469–1495.

- Bibal, A.; Frénay, B. Interpretability of Machine Learning Models and Representations: An Introduction. In Proceedings of the ESANN 2016 Proceedings, European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning, Bruges, Belgium, 27–29 April 2016.

- Yin, M.; Wortman Vaughan, J.; Wallach, H. Understanding the Effect of Accuracy on Trust in Machine Learning Models. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–12.

- Carvalho, D.V.; Pereira, E.M.; Cardoso, J.S. Machine Learning interpretability: A Survey on Methods and Metrics. Electronics 2019, 8, 832.

- Ribeiro, M.T.; Singh, S.; Guestrin, C. Model-Agnostic Interpretability of Machine Learning. arXiv 2016, arXiv:1606.05386.

- Malik, A.; de Fréin, R.; Al-Zeyadi, M.; Andreu-Perez, J. Intelligent SDN Traffic Classification Using Deep Learning: Deep-SDN. In Proceedings of the 2020 2nd International Conference on Computer Communication and the Internet (ICCCI), Nagoya, Japan, 26–29 June 2020; pp. 184–189.

- Greenwald, H.S.; Oertel, C.K. Future Directions in Machine Learning. Front. Robot. AI 2017, 3, 79.

- Malik, A.; Aziz, B.; Adda, M.; Ke, C.H. Smart Routing: Towards Proactive Fault Handling of Software-Defined Networks. Comput. Netw. 2020, 170, 107104.

- Orsolic, I.; Skorin-Kapov, L. A Framework for in-Network QoE Monitoring of Encrypted Video Streaming. IEEE Access 2020, 8, 74691–74706.

- Wares, S.; Isaacs, J.; Elyan, E. Data Stream Mining: Methods and Challenges for Handling Concept Drift. SN Appl. Sci. 2019, 1, 1–19.

- Yoon, J.; Jordon, J.; Van Der Schaar, M. Missing Data Imputation Using Generative Adversarial Nets. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018.

- Gondara, L.; Wang, K. Multiple imputation using deep denoising autoencoders. arXiv 2017, arXiv:1705.02737.

- Frey, B.J. Graphical Models for Machine Learning and Digital Communication; MIT Press: Cambridge, MA, USA, 1998.

- You, J.; Ma, X.; Ding, D.Y.; Kochenderfer, M.; Leskovec, J. Handling Missing Data with Graph Representation Learning. arXiv 2020, arXiv:2010.16418.

- de Fréin, R. Load-adjusted video quality prediction methods for missing data. In Proceedings of the 2015 10th International Conference for Internet Technology and Secured Transactions (ICITST), London, UK, 14–16 December 2015; pp. 314–319.

- Chen, Y.; Wu, K.; Zhang, Q. From QoS to QoE: A Tutorial on Video Quality Assessment. IEEE Commun. Surv. Tutor. 2014, 17, 1126–1165.

- Part 3: How to Compete With Broadcast Latency Using Current Adaptive Bitrate Technologies | AWS Media Blog. Available online: https://tinyurl.com/8wmrv5cr (accessed on 12 October 2021).

- Balachandran, A.; Sekar, V.; Akella, A.; Seshan, S.; Stoica, I.; Zhang, H. Developing a Predictive Model of Quality of Experience for Internet Video. ACM SIGCOMM Comput. Commun. Rev. 2013, 43, 339–350.

| Area of Focus and Application | Survey Paper |
|---|---|
| Network Security/IDS | Al-Garadi et al. [46], Cui et al. [48], Miller et al. [49], Tang and Mahmoud [50], Meshram and Haas [51], Hodo et al. [52], Sultana et al. [53], Buczak and Guven [54], Otoum et al. [55], Usama et al. [60] |
| IoT | Imran et al. [45], Al-Garadi et al. [46], Mahdavinejad et al. [47], Cui et al. [48] |
| Smart Cities | Mahdavinejad et al. [47], Sharma et al. [56] |
| WSN / Mobile / Cellular Networks | Otoum et al. [55], Sharma et al. [56], Zhang et al. [57], Klaine et al. [58] |
| Networking, Network Operations & Optical Networks | Usama et al. [60], Fadlullah et al. [61], Boutaba et al. [62], Ridwan et al. [64] |
| SDN | Imran et al. [45], Cui et al. [48], Sultana et al. [53], This Paper |
| Video Frame Prediction | Oprea et al. [65] |
| Video Prediction from QoD measurements | This Paper |

| Author, Year | Objective, ML Techniques | Features | Key Results |
|---|---|---|---|
| Vega et al. [114] | | | |
| Raca et al. [117] | | | |
| Bentaleb et al. [118] | | | |
| Mao et al. [122] | | | |
| Zhao et al. [124] | | | |
| Yousef et al. [103] | | | |
| Sani et al. [130] | | | |

| Author, Year | Objective, ML Techniques | Features | Key Results |
|---|---|---|---|
| Oršolić et al. [138] | | | |
| Dimopoulos et al. [142] | | | |
| Wassermann et al. [144] | | | |
| Wassermann et al. [147] | | | |
| Wassermann et al. [151] | | | |
| Gutterman et al. [152,153] | | | |
| Seufert et al. [154,155] | | | |
| Krishnamoorthi et al. [156] | | | |
| Mazhar and Shafiq [157] | | | |
| Bronzino et al. [158] | | | |
| Pandey et al. [159] | | | |
| Schwarzmann et al. [160] | | | |
| Bartolec et al. [162] | | | |
| Oršolić et al. [163,164] | | | |

| Author, Year | Objective, ML Techniques | Features | Key Results |
|---|---|---|---|
| Sun et al. [165] | | | |
| Bampis and Bovik [169] | | | |
| Tran et al. [170] | | | |
| Dinaki et al. [172] | | | |

| Author, Year | Objective, ML Techniques | Features | Key Results |
|---|---|---|---|
| Pasquini and Stadler [178] | | | |
| Ben Letaifa [179] | | | |
| Petrangeli et al. [180] | | | |

| Author, Year | Objective, ML Techniques | Features | Key Results |
|---|---|---|---|
| Da Hora et al. [182] | | | |
| Ligata et al. [185] | | | |
| Bhattacharyya et al. [186] | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Izima, O.; de Fréin, R.; Malik, A. A Survey of Machine Learning Techniques for Video Quality Prediction from Quality of Delivery Metrics. Electronics 2021, 10, 2851. https://doi.org/10.3390/electronics10222851
