Data Augmentation and Deep Learning Methods in Sound Classification: A Systematic Review

Abstract

:1. Introduction

- Insufficient sound or audio data makes it extremely difficult to train deep neural networks, as efficient training and evaluation of audio/sound systems are only dependent on large training data [35].

- Traditional audio feature extraction methods lack strong abilities to effectively identifying better feature representations, thus affecting the performance of sound recognition [36].

- Robustness and generalization are the key challenges in building a high-performance sound recognition system, and some of the existing systems degrade due to scenario mismatch due to some factors such as reverberations, noise types, channels, etc. [37].

- Dependency on expert knowledge for reliable annotation of audio data [38].

- Are existing papers based on the sound classification task applied to specific and established data sources for experimentation?

- What are the data repository or dataset sources used?

- Which feature extraction methods are used and which data are extracted?

- What are the different data augmentation techniques applied in sound classification?

- How can we measure the importance of data augmentation techniques in learning algorithms?

- What is the future research recommendation for augmentation techniques?

- What obstacles are identified in the application of data augmentation for sound classification?

2. Methodology of Literature Search and Selection

3. Results of SLR

4. Discussion of the Results of Review

4.1. Sound Datasets

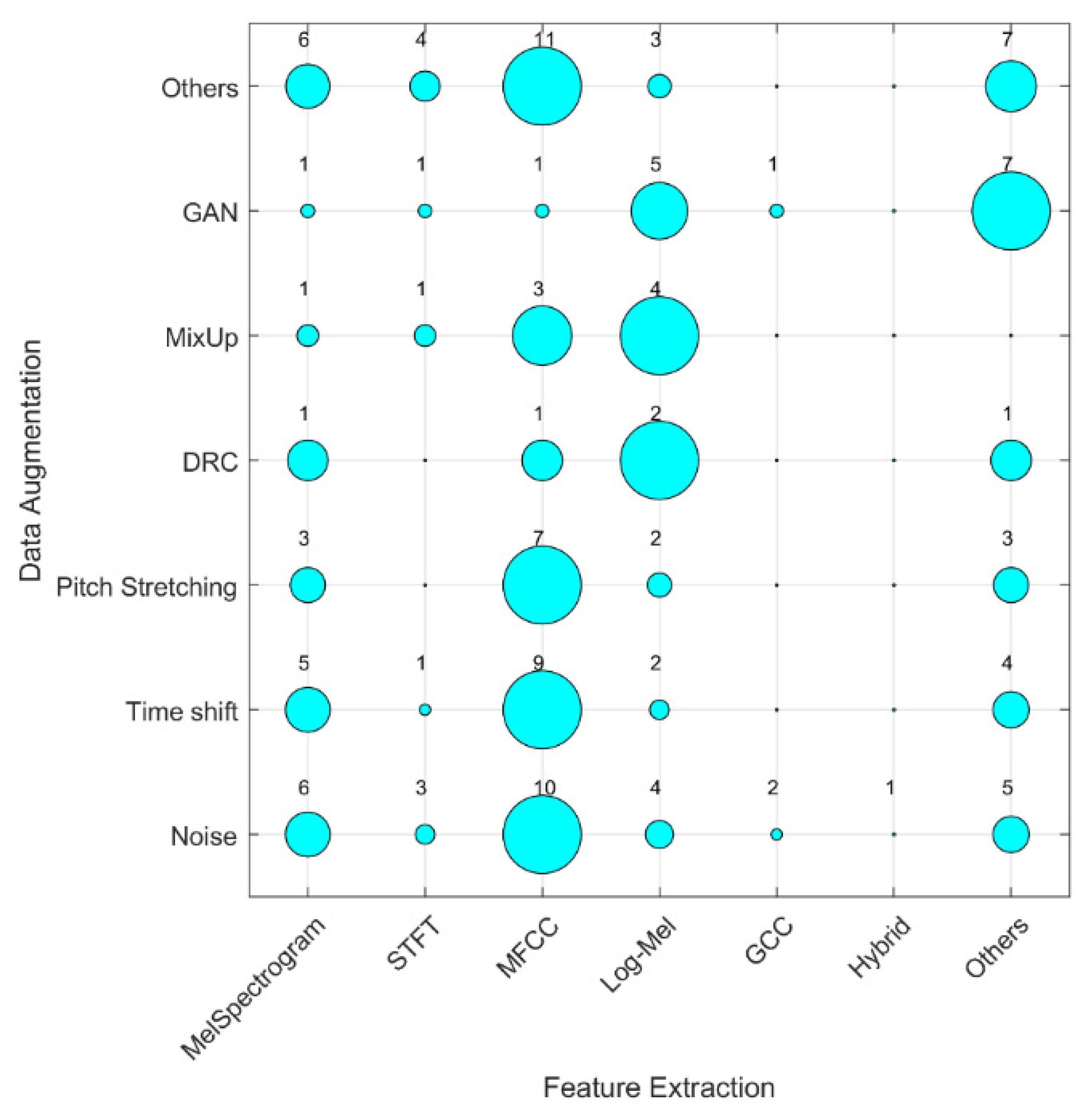

4.2. Feature Extraction Methods in Sound Classification

4.3. Data Augmentation Methods in Sound Classification

4.4. Classification Methods for Sound Classification

4.5. What Are the Obstacles in Application of Data Augmentation in Sound Classification?

| Obstacles | References | No of Papers |

|---|---|---|

| Limited amount of data volume | Garcia-Ceja et al. [58], Lee and Lee [67], Zhang et al. [101], Ykhlef et al. [100] | 4 |

| Lack generalization between data classes | Jeong et al. [61] | 1 |

| Noisy dataset/poor sound quality | Jeong et al. [61], Rituerto-González et al. [87], Lu et al. [71], Mertes et al. [74], Tran and Tsai [92], Wang et al. [96] | 6 |

| High computational complexity (Training time) | Lella and Pja [68], Zheng et al. [106], Vryzas et al. [94], Mushtaq and Su [75], Zhao et al. [103], Kadyan et al. [62], Mushtaq et al. [76], Padovese et al. [82], Pervaiz et al. [83], Sugiura et al. [91], Singh and Joshi [90], Wyatt et al. [97] | 12 |

| High Misclassification errors | Vecchiotti et al. [93], Kathania et al. [63], Lalitha et al. [66] | 3 |

| Over-smoothing effect | Esmaeilpour et al. [57] | 1 |

| Poor performance of classifier | Yang et al. [98], Vryzas et al. [94], Zhang et al. [102], Salamon et al. [88], Novotny et al. [78], Zhao et al. [103], Long et al. [70] | 7 |

| Degradation of synthetic data | Shahnawazuddin et al. [89], Chanane and Bahoura [54] | 2 |

| Class imbalance | Chanane and Bahoura [54] | 1 |

| Overfitting | Padhy et al. [81], Chanane and Bahoura [54] | 2 |

4.6. Summary of Results

4.7. Recommendations

4.8. Potential Threats to Validity

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Patrício, D.I.; Rieder, R. Computer vision and artificial intelligence in precision agriculture for grain crops: A systematic review. Comput. Electron. Agric. 2018, 153, 69–81. [Google Scholar] [CrossRef] [Green Version]

- Le Glaz, A.; Haralambous, Y.; Kim-Dufor, D.H.; Lenca, P.; Billot, R.; Ryan, T.C.; Marsh, J.; DeVylder, J.; Walter, M.; Berrouiguet, S.; et al. Machine Learning and Natural Language Processing in Mental Health: Systematic Review. J. Med. Internet Res. 2021, 23, e15708. [Google Scholar] [CrossRef] [PubMed]

- Rong, G.; Mendez, A.; Bou Assi, E.; Zhao, B.; Sawan, M. Artificial intelligence in healthcare: Review and prediction case studies. Engineering 2020, 6, 291–301. [Google Scholar] [CrossRef]

- Liu, R.; Yang, B.; Zio, E.; Chen, X. Artificial intelligence for fault diagnosis of rotating machinery: A review. Mech. Syst. Signal Process. 2018, 108, 33–47. [Google Scholar] [CrossRef]

- Zinemanas, P.; Rocamora, M.; Miron, M.; Font, F.; Serra, X. An Interpretable Deep Learning Model for Automatic Sound Classification. Electronics 2021, 10, 850. [Google Scholar] [CrossRef]

- Crocco, M.; Cristani, M.; Trucco, A.; Murino, V. Audio surveillance: A systematic review. ACM Comput. Surv. 2016, 48, 1–46. [Google Scholar] [CrossRef]

- Azimi, M.; Roedig, U. Room Identification with Personal Voice Assistants (Extended Abstract). In Computer Security, Lecture Notes in Computer Science, Proceedings of the ESORICS 2021 International Workshops, Online, 4–8 October 2021; Katsikas, S., Zheng, Y., Yuan, X., Yi, X., Eds.; Springer: Cham, Switzerland, 2022; Volume 13106, p. 13106. [Google Scholar] [CrossRef]

- Kapočiūtė-Dzikienė, J. A Domain-Specific Generative Chatbot Trained from Little Data. Appl. Sci. 2020, 10, 2221. [Google Scholar] [CrossRef] [Green Version]

- Shah, S.K.; Tariq, Z.; Lee, Y. Audio IoT Analytics for Home Automation Safety. In Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; pp. 5181–5186. [Google Scholar] [CrossRef]

- Gholizadeh, S.; Lemana, Z.; Baharudinb, B.T.H.T. A review of the application of acoustic emission technique in engineering. Struct. Eng. Mech. 2015, 54, 1075–1095. [Google Scholar] [CrossRef]

- Henriquez, P.; Alonso, J.B.; Ferrer, M.A.; Travieso, C.M. Review of automatic fault diagnosis systems using audio and vibration signals. IEEE Trans. Syst. Man Cybern. Syst. 2014, 44, 642–652. [Google Scholar] [CrossRef]

- Lozano, H.; Hernáez, I.; Picón, A.; Camarena, J.; Navas, E. Audio Classification Techniques in Home Environments for Elderly/Dependant People. In Computers Helping People with Special Needs. Lecture Notes in Computer Science, Proceedings of the 12th International Conference on Computers Helping People, Vienna, Austria, 14–16 July 2010; Miesenberger, K., Klaus, J., Zagler, W., Karshmer, A., Eds.; Springer: Cham, Switzerland, 2010; Volume 6179, p. 6179. [Google Scholar] [CrossRef]

- Bear, H.L.; Heittola, T.; Mesaros, A.; Benetos, E.; Virtanen, T. City Classification from Multiple Real-World Sound Scenes. In Proceedings of the 2019 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), New Paltz, NY, USA, 20–23 October 2019; pp. 11–15. [Google Scholar] [CrossRef] [Green Version]

- Callai, S.C.; Sangiorgi, C. A review on acoustic and skid resistance solutions for road pavements. Infrastructures 2021, 6, 41. [Google Scholar] [CrossRef]

- Blumstein, D.T.; Mennill, D.J.; Clemins, P.; Girod, L.; Yao, K.; Patricelli, G.; Kirschel, A.N.G. Acoustic monitoring in terrestrial environments using microphone arrays: Applications, technological considerations and prospectus. J. Appl. Ecol. 2011, 48, 758–767. [Google Scholar] [CrossRef]

- Bountourakis, V.; Vrysis, L.; Papanikolaou, G. Machine learning algorithms for environmental sound recognition: Towards soundscape semantics. In Proceedings of the ACM International Conference Proceeding Series, Guangzhou, China, 7–9 October 2015. [Google Scholar] [CrossRef]

- Najafabadi, M.M.; Villanustre, F.; Khoshgoftaar, T.M.; Naemm, S.; Wald, R.; Muharemagic, E. Deep learning applications and challenges in big data analytics. J. Big Data 2015, 2, 1. [Google Scholar] [CrossRef] [Green Version]

- Marin, I.; Kuzmanic Skelin, A.; Grujic, T. Empirical Evaluation of the Effect of Optimization and Regularization Techniques on the Generalization Performance of Deep Convolutional Neural Network. Appl. Sci. 2020, 10, 7817. [Google Scholar] [CrossRef]

- Bergstra, J.; Yamins, D.; Cox, D.D. Making a science of model search: Hyperparameter optimization in hundreds of dimensions for vision architectures. In Proceedings of the 30th International Conference on Machine Learning Part 1 (ICML), Baltimore, MD, USA, 17–23 July 2013; pp. 115–123. [Google Scholar]

- Khalid, R.; Javaid, N. A survey on hyperparameters optimization algorithms of forecasting models in smart grid. Sustain. Cities Soc. 2020, 61, 2275. [Google Scholar] [CrossRef]

- Kalliola, J.; Kapočiūte-Dzikiene, J.; Damaševičius, R. Neural network hyperparameter optimization for prediction of real estate prices in helsinki. PeerJ Comput. Sci. 2021, 7, 1–25. [Google Scholar] [CrossRef] [PubMed]

- Połap, D.; Woźniak, M.; Hołubowski, W.; Damaševičius, R. A heuristic approach to the hyperparameters in training spiking neural networks using spike-timing-dependent plasticity. Neural Comput. Appl. 2021, 34, 13187–13200. [Google Scholar] [CrossRef]

- Saeed, N.; Nyberg, R.G.; Alam, M.; Dougherty, M.; Jooma, D.; Rebreyend, P. Classification of the Acoustics of Loose Gravel. Sensors 2021, 21, 4944. [Google Scholar] [CrossRef]

- Castro Martinez, A.M.; Spille, C.; Roßbach, J.; Kollmeier, B.; Meyer, B.T. Prediction of speech intelligibility with DNN-based performance measures. Comput. Speech Lang. 2022, 74, 1329. [Google Scholar] [CrossRef]

- Han, D.; Kong, Y.; Han, J.; Wang, G. A survey of music emotion recognition. Front. Comput. Sci. 2022, 16, 166335. [Google Scholar] [CrossRef]

- Alonso Hernández, J.B.; Barragán Pulido, M.L.; Gil Bordón, J.M.; Ferrer Ballester, M.Á.; Travieso González, C.M. Speech evaluation of patients with alzheimer’s disease using an automatic interviewer. Expert Syst. Appl. 2022, 192, 6386. [Google Scholar] [CrossRef]

- Tagawa, Y.; Maskeliūnas, R.; Damaševičius, R. Acoustic Anomaly Detection of Mechanical Failures in Noisy Real-Life Factory Environments. Electronics 2021, 10, 2329. [Google Scholar] [CrossRef]

- Qurthobi, A.; Maskeliūnas, R.; Damaševičius, R. Detection of Mechanical Failures in Industrial Machines Using Overlapping Acoustic Anomalies: A Systematic Literature Review. Sensors 2022, 22, 3888. [Google Scholar] [CrossRef] [PubMed]

- Domingos, L.C.F.; Santos, P.E.; Skelton, P.S.M.; Brinkworth, R.S.A.; Sammut, K. A survey of underwater acoustic data classification methods using deep learning for shoreline surveillance. Sensors 2022, 22, 2181. [Google Scholar] [CrossRef]

- Ji, C.; Mudiyanselage, T.B.; Gao, Y.; Pan, Y. A review of infant cry analysis and classification. Eurasip. J. Audio Speech Music. Process. 2021, 2021, 1975. [Google Scholar] [CrossRef]

- Qian, K.; Janott, C.; Schmitt, M.; Zhang, Z.; Heiser, C.; Hemmert, W.; Schuller, B.W. Can machine learning assist locating the excitation of snore sound? A review. IEEE J. Biomed. Health Inform. 2021, 25, 1233–1246. [Google Scholar] [CrossRef]

- Meyer, J.; Dentel, L.; Meunier, F. Speech Recognition in Natural Background Noise. PLoS ONE 2013, 8, e79279. [Google Scholar] [CrossRef] [Green Version]

- Bahle, G.; Fortes Rey, V.; Bian, S.; Bello, H.; Lukowicz, P. Using Privacy Respecting Sound Analysis to Improve Bluetooth Based Proximity Detection for COVID-19 Exposure Tracing and Social Distancing. Sensors 2021, 21, 5604. [Google Scholar] [CrossRef]

- Holzapfel, A.; Sturm, B.L.; Coeckelbergh, M. Ethical Dimensions of Music Information Retrieval Technology. Trans. Int. Soc. Music. Inf. Retriev. 2018, 1, 44–55. [Google Scholar] [CrossRef]

- Tsalera, E.; Papadakis, A.; Samarakou, M. Comparison of Pre-Trained CNNs for Audio Classification Using Transfer Learning. J. Sens. Actuator Netw. 2021, 10, 72. [Google Scholar] [CrossRef]

- Alías, F.; Socoró, J.C.; Sevillano, X. A Review of Physical and Perceptual Feature Extraction Techniques for Speech, Music and Environmental Sounds. Appl. Sci. 2016, 6, 143. [Google Scholar] [CrossRef] [Green Version]

- Wang, J.; Lee, Y.; Lin, C.; Siahaan, E.; Yang, C. Robust environmental sound recognition with fast noise suppression for home automation. IEEE Trans. Autom. Sci. Eng. 2015, 12, 1235–1242. [Google Scholar] [CrossRef]

- Steinfath, E.; Palacios-Muñoz, A.; Rottschäfer, J.R.; Yuezak, D.; Clemens, J. Fast and accurate annotation of acoustic signals with deep neural networks. eLife 2021, 10, e68837. [Google Scholar] [CrossRef] [PubMed]

- Sun, Y.; Wong, A.K.C.; Kamel, M.S. Classification of Imbalanced Data: A Review. Int. J. Pattern Recognit. Artif. Intell. 2009, 23, 687–719. [Google Scholar] [CrossRef]

- Dong, X.; Yin, B.; Cong, Y.; Du, Z.; Huang, X. Environment sound event classification with a two-stream convolutional neural network. IEEE Access 2020, 8, 125714–125721. [Google Scholar] [CrossRef]

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaria, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 53. [Google Scholar] [CrossRef] [PubMed]

- Zhao, Y.X.; Li, Y.; Wu, N. Data augmentation and its application in distributed acoustic sensing data denoising. Geophys. J. Int. 2021, 228, 119–133. [Google Scholar] [CrossRef]

- Abeßer, J. A review of deep learning based methods for acoustic scene classification. Appl. Sci. 2020, 10, 20. [Google Scholar] [CrossRef] [Green Version]

- Bahmei, B.; Birmingham, E.; Arzanpour, S. CNN-RNN and data augmentation using deep convolutional generative adversarial network for environmental sound classification. IEEE Signal Process. Lett. 2022, 29, 682–686. [Google Scholar] [CrossRef]

- Horwath, J.P.; Zakharov, D.N.; Mégret, R.; Stach, E.A. Understanding important features of deep learning models for segmentation of high-resolution transmission electron microscopy images. NPJ Comput. Mater. 2006, 108, 2–9. [Google Scholar] [CrossRef]

- Feurer, M.; Hutter, F. Hyperparameter Optimization. In Automated Machine Learning. The Springer Series on Challenges in Machine Learning; Hutter, F., Kotthoff, L., Vanschoren, J., Eds.; Springer: Cham, Switzerland, 2019. [Google Scholar] [CrossRef] [Green Version]

- Van Wee, B.; Banister, D. How to write a literature review paper? Transp. Rev. 2016, 36, 278–288. [Google Scholar]

- Kitchenham, B.; Brereton, O.P.; Budgen, D.; Turner, M.; Bailey, J.; Linkman, S. Systematic literature reviews in software engineering—A systematic literature review. Inf. Softw. Technol. 2009, 51, 7–15. [Google Scholar] [CrossRef]

- Badampudi, D.; Wohlin, C.; Petersen, K. Experiences from using snowballing and database searches in systematic literature studies. In Proceedings of the ACM International Conference Proceeding Series, Edinbourgh, UK, 27–29 April 2015. [Google Scholar] [CrossRef] [Green Version]

- Liberati, A.; Altman, D.G.; Tetzlaff, J.; Mulrow, C.; Gøtzsche, P.C.; Ioannidis, J.P.; Moher, D. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: Explanation and elaboration. J. Clin. Epidemiol. 2009, 62, e1–e34. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Basu, V.; Rana, S. Respiratory diseases recognition through respiratory sound with the help of deep neural network. In Proceedings of the 2020 4th International Conference on Computational Intelligence and Networks (CINE), Online, 2–5 September 2020; pp. 1–6. [Google Scholar]

- Billah, M.M.; Nishimura, M. A data augmentation-based technique to classify chewing and swallowing using LSTM. In Proceedings of the 2020 IEEE 2nd Global Conference on Life Sciences and Technologies (LifeTech), Kyoto, Japan, 10–12 March 2020; pp. 84–85. [Google Scholar]

- Celin, M.T.A.; Nagarajan, T.; Vijayalakshmi, P. Data augmentation using virtual microphone array synthesis and multi-resolution feature extraction for isolated word dysarthric speech recognition. IEEE J. Sel. Top. Signal Process. 2020, 14, 346–354. [Google Scholar] [CrossRef]

- Chanane, H.; Bahoura, M. Convolutional Neural Network-based Model for Lung Sounds Classification. In Proceedings of the 2021 IEEE International Midwest Symposium on Circuits and Systems (MWSCAS), East Lansing, MI, USA, 7–10 August 2021; pp. 555–558. [Google Scholar]

- Davis, N.; Suresh, K. Environmental sound classification using deep convolutional neural networks and data augmentation. In Proceedings of the 2018 IEEE Recent Advances in Intelligent Computational Systems (RAICS), Thiruvananthapuram, India, 6–8 December 2018; pp. 41–45. [Google Scholar]

- Diffallah, Z.; Ykhlef, H.; Bouarfa, H.; Ykhlef, F. Impact of Mixup Hyperparameter Tunning on Deep Learning-based Systems for Acoustic Scene Classification. In Proceedings of the 2021 International Conference on Recent Advances in Mathematics and Informatics (ICRAMI), Tebessa, Algeria, 21–22 September 2021; pp. 1–6. [Google Scholar]

- Esmaeilpour, M.; Cardinal, P.; Koerich, A.L. Unsupervised feature learning for environmental sound classification using Weighted Cycle-Consistent Generative Adversarial Network. Appl. Soft Comput. 2019, 86, 105912. [Google Scholar] [CrossRef]

- Garcia-Ceja, E.; Riegler, M.; Kvernberg, A.K.; Torresen, J. User-adaptive models for activity and emotion recognition using deep transfer learning and data augmentation. User Model. User-Adapt. Interact. 2020, 30, 365–393. [Google Scholar] [CrossRef]

- Greco, A.; Petkov, N.; Saggese, A.; Vento, M. Aren: A deep learning approach for sound event recognition using a brain inspired representation. IEEE Trans. Inf. Forens. Secur. 2020, 15, 3610–3624. [Google Scholar] [CrossRef]

- Imoto, K. Acoustic Scene Classification Using Multichannel Observation with Partially Missing Channels. In Proceedings of the 2021 29th European Signal Processing Conference (EUSIPCO), Dublin, Ireland, 23–27 August 2021; pp. 875–879. [Google Scholar]

- Jeong, Y.; Kim, J.; Kim, D.; Kim, J.; Lee, K. Methods for improving deep learning-based cardiac auscultation accuracy: Data augmentation and data generalization. Appl. Sci. 2021, 11, 4544. [Google Scholar] [CrossRef]

- Kadyan, V.; Bawa, P.; Hasija, T. In domain training data augmentation on noise robust punjabi children speech recognition. J. Ambient. Intell. Humaniz. Comput. 2021, 13, 03468. [Google Scholar] [CrossRef]

- Kathania, H.K.; Kadiri, S.R.; Alku, P.; Kurimo, M. Using data augmentation and time-scale modification to improve ASR of children’s speech in noisy environments. Appl. Sci. 2021, 11, 8420. [Google Scholar] [CrossRef]

- Koike, T.; Qian, K.; Schuller, B.W.; Yamamoto, Y. Transferring cross-corpus knowledge: An investigation on data augmentation for heart sound classification. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine Biology Society (EMBC), Guadalajara, Mexico, 26 July 2021; pp. 1976–1979. [Google Scholar]

- Koszewski, D.; Kostek, B. Musical instrument tagging using data augmentation and effective noisy data processing. AES J. Audio Eng. Soc. 2020, 68, 57–65. [Google Scholar] [CrossRef]

- Lalitha, S.; Gupta, D.; Zakariah, M.; Alotaibi, Y.A. Investigation of multilingual and mixed-lingual emotion recognition using enhanced cues with data augmentation. Appl. Acoust. 2020, 170, 107519. [Google Scholar] [CrossRef]

- Lee, H.; Lee, J. Neural network prediction of sound quality via domain knowledge-based data augmentation and bayesian approach with small data sets. Mech. Syst. Signal Process. 2021, 157, 107713. [Google Scholar] [CrossRef]

- Lella, K.K.; Pja, A. Automatic COVID-19 disease diagnosis using 1D convolutional neural network and augmentation with human respiratory sound based on parameters: Cough, breath, and voice. AIMS Public Health 2021, 8, 240–264. [Google Scholar] [CrossRef] [PubMed]

- Leng, Y.; Zhao, W.; Lin, C.; Sun, C.; Wang, R.; Yuan, Q.; Li, D. LDA-based data augmentation algorithm for acoustic scene classification. Knowl.-Based Syst. 2020, 195, 105600. [Google Scholar] [CrossRef]

- Long, Y.; Li, Y.; Zhang, Q.; Wei, S.; Ye, H.; Yang, J. Acoustic data augmentation for mandarin-english code-switching speech recognition. Appl. Acoust. 2020, 161, 107175. [Google Scholar] [CrossRef]

- Lu, R.; Duan, Z.; Zhang, C. Metric learning based data augmentation for environmental sound classification. In Proceedings of the 2017 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), New Paltz, NY, USA, 15–18 October 2017; pp. 1–5. [Google Scholar]

- Ma, X.; Shao, Y.; Ma, Y.; Zhang, W.Q. Deep Semantic Encoder-Decoder Network for Acoustic Scene Classification with Multiple Devices. In Proceedings of the 2020 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Auckland, New Zealand, 7–10 December 2020; pp. 365–370. [Google Scholar]

- Madhu, A.; Kumaraswamy, S. Data augmentation using generative adversarial network for environmental sound classification. In Proceedings of the 2019 27th European Signal Processing Conference (EUSIPCO), Coruna, Spain, 2–6 September 2019; pp. 1–5. [Google Scholar]

- Mertes, S.; Baird, A.; Schiller, D.; Schuller, B.W.; André, E. An evolutionary-based generative approach for audio data augmentation. In Proceedings of the 2020 IEEE 22nd International Workshop on Multimedia Signal Processing (MMSP), Online, 21–24 September 2020; pp. 1–6. [Google Scholar]

- Mushtaq, Z.; Su, S. Environmental sound classification using a regularized deep convolutional neural network with data augmentation. Appl. Acoust. 2020, 167, 107389. [Google Scholar] [CrossRef]

- Mushtaq, Z.; Su, S.; Tran, Q. Spectral images based environmental sound classification using CNN with meaningful data augmentation. Appl. Acoust. 2021, 172, 107581. [Google Scholar] [CrossRef]

- Nanni, L.; Maguolo, G.; Paci, M. Data augmentation approaches for improving animal audio classification. Ecol. Inform. 2020, 57, 101084. [Google Scholar] [CrossRef] [Green Version]

- Novotný, O.; Plchot, O.; Glembek, O.; Černocký, J.; Burget, L. Analysis of DNN speech signal enhancement for robust speaker recognition. Comput. Speech Lang. 2019, 58, 403–421. [Google Scholar] [CrossRef] [Green Version]

- Nugroho, K.; Noersasongko, E.; Purwanto; Muljono; Setiadi, D.R.I.M. Enhanced indonesian ethnic speaker recognition using data augmentation deep neural network. J. King Saud Univ. Comput. Inf. Sci. 2021, 34, 4375–4384. [Google Scholar] [CrossRef]

- Ozer, I.; Ozer, Z.; Findik, O. Lanczos kernel based spectrogram image features for sound classification. Procedia Comput. Sci. 2017, 111, 137–144. [Google Scholar] [CrossRef]

- Padhy, S.; Tiwari, J.; Rathore, S.; Kumar, N. Emergency signal classification for the hearing impaired using multi-channel convolutional neural network architecture. In Proceedings of the 2019 IEEE Conference on Information and Communication Technology, Surabaya, Indonesia, 18 July 2019; pp. 1–6. [Google Scholar]

- Padovese, B.; Frazao, F.; Kirsebom, O.S.; Matwin, S. Data augmentation for the classification of north atlantic right whales upcalls. J. Acoust. Soc. Am. 2021, 149, 2520–2530. [Google Scholar] [CrossRef] [PubMed]

- Pervaiz, A.; Hussain, F.; Israr, H.; Tahir, M.A.; Raja, F.R.; Baloch, N.K.; Zikria, Y.B. Incorporating noise robustness in speech command recognition by noise augmentation of training data. Sensors 2020, 20, 2326. [Google Scholar] [CrossRef] [Green Version]

- Praseetha, V.M.; Joby, P.P. Speech emotion recognition using data augmentation. Int. J. Speech Technol. 2021, 9883. [Google Scholar] [CrossRef]

- Qian, Y.; Hu, H.; Tan, T. Data augmentation using generative adversarial networks for robust speech recognition. Speech Commun. 2019, 114, 1–9. [Google Scholar] [CrossRef]

- Ramesh, V.; Vatanparvar, K.; Nemati, E.; Nathan, V.; Rahman, M.M.; Kuang, J. CoughGAN: Generating synthetic coughs that improve respiratory disease classification. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine Biology Society (EMBC), Online, 20–24 July 2020; pp. 5682–5688. [Google Scholar]

- Rituerto-González, E.; Mínguez-Sánchez, A.; Gallardo-Antolín, A.; Peláez-Moreno, C. Data augmentation for speaker identification under stress conditions to combat gender-based violence. Appl. Sci. 2019, 9, 2298. [Google Scholar] [CrossRef] [Green Version]

- Salamon, J.; Bello, J.P. Deep convolutional neural networks and data augmentation for environmental sound classification. IEEE Signal Process. Lett. 2017, 24, 279–283. [Google Scholar] [CrossRef]

- Shahnawazuddin, S.; Adiga, N.; Kathania, H.K.; Sai, B.T. Creating speaker independent ASR system through prosody modification based data augmentation. Pattern Recognit. Lett. 2020, 131, 213–218. [Google Scholar] [CrossRef]

- Singh, J.; Joshi, R. Background sound classification in speech audio segments. In Proceedings of the 2019 International Conference on Speech Technology and Human-Computer Dialogue (SpeD), Timisoara, Romania, 10–12 October 2019; pp. 1–6. [Google Scholar]

- Sugiura, T.; Kobayashi, A.; Utsuro, T.; Nishizaki, H. Audio Synthesis-based Data Augmentation Considering Audio Event Class. In Proceedings of the 2021 IEEE 10th Global Conference on Consumer Electronics (GCCE), Online, 12–15 October 2021. [Google Scholar]

- Tran, V.T.; Tsai, W.H. Stethoscope-Sensed Speech and Breath-Sounds for Person Identification with Sparse Training Data. IEEE Sens. J. 2019, 20, 848–859. [Google Scholar] [CrossRef]

- Vecchiotti, P.; Pepe, G.; Principi, E.; Squartini, S. Detection of activity and position of speakers by using deep neural networks and acoustic data augmentation. Expert Syst. Appl. 2019, 134, 53–65. [Google Scholar] [CrossRef]

- Vryzas, N.; Kotsakis, R.; Liatsou, A.; Dimoulas, C.; Kalliris, G. Speech emotion recognition for performance interaction. AES: J. Audio Eng. Soc. 2018, 66, 457–467. [Google Scholar] [CrossRef]

- Wang, E.K.; Yu, J.; Chen, C.; Kumari, S.; Rodrigues, J.J.P.C. Data augmentation for internet of things dialog system. Mob. Netw. Appl. 2020, 27, 1–14. [Google Scholar] [CrossRef] [Green Version]

- Wang, S.; Yang, Y.; Wu, Z.; Qian, Y.; Yu, K. Data augmentation using deep generative models for embedding based speaker recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2020, 28, 2598–2609. [Google Scholar] [CrossRef]

- Wyatt, S.; Elliott, D.; Aravamudan, A.; Otero, C.E.; Otero, L.D.; Anagnostopoulos, G.C.; Lam, E. Environmental sound classification with tiny transformers in noisy edge environments. In Proceedings of the 2021 IEEE 7th World Forum on Internet of Things (WF-IoT), Online, 26 July 2021; pp. 309–314. [Google Scholar]

- Yang, L.; Tao, L.; Chen, X.; Gu, X. Multi-scale semantic feature fusion and data augmentation for acoustic scene classification. Appl. Acoust. 2020, 163, 107238. [Google Scholar] [CrossRef]

- Yella, N.; Rajan, B. Data Augmentation using GAN for Sound based COVID 19 Diagnosis. In Proceedings of the 2021 11th IEEE International Conference on Intelligent Data Acquisition and Advanced Computing Systems: Technology and Applications (IDAACS), Cracow, Poland, 22–25 September 2021; Volume 2, pp. 606–609. [Google Scholar]

- Ykhlef, H.; Ykhlef, F.; Chiboub, S. Experimental Design and Analysis of Sound Event Detection Systems: Case Studies. In Proceedings of the 2019 6th International Conference on Image and Signal Processing and their Applications (ISPA), Mostaganem, Algeria, 24–25 November 2019; pp. 1–6. [Google Scholar]

- Zhang, Z.; Han, J.; Qian, K.; Janott, C.; Guo, Y.; Schuller, B. Snore-GANs: Improving automatic snore sound classification with synthesized data. IEEE J. Biomed. Health Inform. 2019, 24, 300–310. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Zhang, Z.; Xu, S.; Zhang, S.; Qiao, T.; Cao, S. Learning attentive representations for environmental sound classification. IEEE Access 2019, 7, 130327–130339. [Google Scholar] [CrossRef]

- Zhao, X.; Shao, Y.; Mai, J.; Yin, A.; Xu, S. Respiratory Sound Classification Based on BiGRU-Attention Network with XGBoost. In Proceedings of the 2020 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Seoul, Republic of Korea, 16–19 December 2020; pp. 915–920. [Google Scholar]

- Zhao, Y.; Togneri, R.; Sreeram, V. Replay anti-spoofing countermeasure based on data augmentation with post selection. Comput. Speech Lang. 2020, 64, 1115. [Google Scholar] [CrossRef]

- Zheng, Q.; Zhao, P.; Li, Y.; Wang, H.; Yang, Y. Spectrum interference-based two-level data augmentation method in deep learning for automatic modulation classification. Neural Comput. Appl. 2021, 33, 7723–7745. [Google Scholar] [CrossRef]

- Zheng, X.; Zhang, C.; Chen, P.; Zhao, K.; Jiang, H.; Jiang, Z.; Jia, W. A CRNN System for Sound Event Detection Based on Gastrointestinal Sound Dataset Collected by Wearable Auscultation Devices. IEEE Access 2020, 8, 157892–157905. [Google Scholar] [CrossRef]

- Ismail, A.; Abdlerazek, S.; El-Henawy, I.M. Development of Smart Healthcare System Based on Speech Recognition Using Support Vector Machine and Dynamic Time Warping. Sustainability 2020, 12, 2403. [Google Scholar] [CrossRef] [Green Version]

- Takahashi, N.; Gygli, M.; Van Gool, L. AENet: Learning deep audio features for video analysis. IEEE Trans. Multimed. 2018, 20, 513–524. [Google Scholar] [CrossRef] [Green Version]

- Meltzner, G.S.; Heaton, J.T.; Deng, Y.; De Luca, G.; Roy, S.H.; Kline, J.C. Silent speech recognition as an alternative communication device for persons with laryngectomy. IEEE/ACM Trans. Audio Speech Lang. Process. 2017, 25, 2386–2398. [Google Scholar] [CrossRef] [PubMed]

- Borsky, M.; Mehta, D.D.; Van Stan, J.H.; Gudnason, J. Modal and nonmodal voice quality classification using acoustic and electroglottographic features. IEEE/ACM Trans. Audio Speech Lang. Process. 2017, 25, 2281–2291. [Google Scholar] [CrossRef] [PubMed]

- Lech, M.; Stolar, M.; Best, C.; Bolia, R. Real-Time Speech Emotion Recognition Using a Pre-trained Image Classification Network: Effects of Bandwidth Reduction and Companding. Front. Comput. Sci. 2020, 2, 14. [Google Scholar] [CrossRef]

- Schuller, B.; Steidl, S.; Batliner, A.; Bergelson, E.; Krajewski, J.; Janott, C.; Zafeiriou, S. The interspeech 2017 computational paralinguistics challenge: Addressee, cold snoring. In Computational Paralinguistics Challenge (ComParE); Interspeech: London, UK, 2017; pp. 3442–3446. [Google Scholar]

- Ferrer, L.; Bratt, H.; Burget, L.; Cernocky, H.; Glembek, O.; Graciarena, M.; Lawson, A.; Lei, Y.; Matejka, P.; Pichot, O.; et al. Promoting robustness for speaker modeling in the community: The PRISM evaluation set. In Proceedings of the NIST Speaker Recognition Analysis Workshop (SRE11), Atlanta, GA, USA, 1–3 March 2011; pp. 1–7. [Google Scholar]

- Sun, H.; Ma, B. The NIST SRE summed channel speaker recognition system. In Interspeech 2014; ISCA: New York, NY, USA, 2014. [Google Scholar] [CrossRef]

- Xie, H.; Virtanen, T. Zero-Shot Audio Classification Via Semantic Embeddings. IEEE/ACM Trans. Audio Speech Lang. Process. 2021, 29, 1233–1242. [Google Scholar] [CrossRef]

- Johnson, J.M.; Khoshgoftaar, T.M. Survey on deep learning with class imbalance. J. Big Data 2019, 6, 1925. [Google Scholar] [CrossRef] [Green Version]

- Papakostas, M.; Spyrou, E.; Giannakopoulos, T.; Siantikos, G.; Sgouropoulos, D.; Mylonas, P.; Makedon, F. Deep Visual Attributes vs. Hand-Crafted Audio Features on Multidomain Speech Emotion Recognition. Computation 2017, 5, 26. [Google Scholar] [CrossRef] [Green Version]

- Ye, J.; Kobayashi, T.; Murakawa, M. Urban sound event classification based on local and global features aggregation. Appl. Acoust. 2017, 117, 246–256. [Google Scholar] [CrossRef]

- Lachambre, H.; Ricaud, B.; Stempfel, G.; Torrésani, B.; Wiesmeyr, C.; Onchis-Moaca, D. Optimal Window and Lattice in Gabor Transform. Application to Audio Analysis. In Proceedings of the International Symposium on Symbolic and Numeric Algorithms for Scientific Computing, Timisoara, Romania, 21–24 September 2015; Volume 17, pp. 109–112. [Google Scholar] [CrossRef] [Green Version]

- Schmitt, M.; Janott, C.; Pandit, V.; Qian, K.; Heiser, C.; Hemmert, W.; Schuller, B. A Bag-of-Audio-Words Approach for Snore Sounds’ Excitation Localisation. In Proceedings of the 12th ITG Symposium on Speech Communication, Paderborn, Germany, 5–7 October 2016; pp. 1–5. [Google Scholar]

- Valero, X.; Alías, F. Narrow-band autocorrelation function features for the automatic recognition of acoustic environments. J. Acoust. Soc. Am. 2013, 134, 880–890. [Google Scholar] [CrossRef]

- Iwana, B.K.; Uchida, S. An empirical survey of data augmentation for time series classification with neural networks. PLoS ONE 2021, 16, e0254841. [Google Scholar] [CrossRef]

- Ko, T.; Peddinti, V.; Povey, D.; Khudanpur, S. Audio augmentation for speech recognition. In Proceedings of the Sixteenth Annual Conference of the International Speech Communication Association, Dresden, Germany, 6–10 September 2015. [Google Scholar]

- Ozmen, G.C.; Gazi, A.H.; Gharehbaghi, S.; Richardson, K.L.; Safaei, M.; Whittingslow, D.C.; Inan, O.T. An Interpretable Experimental Data Augmentation Method to Improve Knee Health Classification Using Joint Acoustic Emissions. Ann. Biomed. Eng. 2021, 49, 2399–2411. [Google Scholar] [CrossRef] [PubMed]

- Rocha, B.M.; Filos, D.; Mendes, L.; Serbes, G.; Ulukaya, S.; Kahya, Y.P.; Jakovljevic, N.; Turukalo, T.L.; Vogiatzis, I.M.; Perantoni, E.; et al. An open access database for the evaluation of respiratory sound classification algorithms. Physiol. Meas. 2019, 40, 035001. [Google Scholar]

- Wei, S.; Zou, S.; Liao, F.; Lang, W. A Comparison on Data Augmentation Methods Based on Deep Learning for Audio Classification. J. Phys. Conf. Ser. 2020, 1453, 012085. [Google Scholar] [CrossRef]

- Araújo, T.; Aresta, G.; Mendoca, L.; Penas, S.; Maia, C.; Carneiro, A.; Campilho, A.; Mendonca, A.M. Data Augmentation for Improving Proliferative Diabetic Retinopathy Detection in Eye Fundus Images. IEEE Access 2020, 8, 182462–182474. [Google Scholar] [CrossRef]

| Database | Search (Title, Abstract or Keyword) | No. of Results | Filtered by Exclusion Criteria | Forward Snowballing | Backward Snowballing | Final No. |

|---|---|---|---|---|---|---|

| Scopus | T-A-K: ((Sound OR Audio OR Voice OR Speech)) AND T: (Data Augmentation) AND (L-T: (SRCTYPE, “j”)) AND (L-T (PY: 2021) OR L-T (PY: 2020) OR L-T (PY: 2019) OR L-T (PY: 2018) OR L-T (PY: 2017)) AND (L-T (LANG, “English”)) | 42 | 32 | 4 | 2 | 38 |

| Web of Science | T: ((Sound OR Audio OR Voice OR Speech)) AND T: (Data Augmentation) AND ((PY:2021) OR (PY:2020) OR (PY: 2019) OR (PY: 2018) OR (PY = 2017)) | 34 | 28 | - | 4 | 32 |

| IEEE Xplore | T: ((Sound OR Audio OR Voice OR Speech)) AND T: (Data Augmentation) AND ((PY:2021) OR (PY:2020) OR (PY: 2019) OR (PY: 2018) OR (PY = 2017)) | 55 | 20 | - | - | 20 |

| Datasets | Number of Categories | Number of Samples (Training/Test/Validation) | References |

|---|---|---|---|

| Speech datasets | |||

| Acted Emotional Speech Dynamic Database | Five emotion classes (anger, disgust, fear, happiness, sadness) | 600 phrases | Vryzas et al. [94] |

| AMI (meeting transcription) | n/a | 100 h of meeting recordings | Qian et al. [85] |

| ASVspoof 2017 corpus | Two utterance classes (bona fide, spoofing) | 3014/1710/13,306 | Zhao et al. [103] |

| Dysarthric Speech Corpus in Tamil | n/a | 22 dysarthric speakers, 262 sentences and 103 words | Celin et al. [53] |

| Baum-1a | 13 emotional & mental states | 1184 clips | Lalitha et al. [66] |

| Indian Institute of Technology Kharagpur Simulated Emotion Speech Corpus (IITKGP-SESC) | 8 emotions | 40 sentences | Lalitha et al. [66] |

| Indonesian Ethnic Speaker Recognition | 70 classes | 280 records | Nugroho et al. [79] |

| OC16-CE80 Mandarin-English mix lingual speech | 50 speakers | 80 h | Long et al. [70] |

| Punjabi Children speech corpus | n/a | 1887 utterances (39 speakers)/and 485 (6 speakers) | Kadyan et al. [62] |

| Speech Command Dataset v1, v2 | 30 words | V1: 28,410 (22,236/3093/3081) V2: 46,258 (36,923/445/4890) | Pervaiz et al. [83] |

| Toronto Emotional Speech Set (TESS) | 7 emotion classes | 200 target words | Praseetha and Joby [84] |

| WSJCAM0 adults’ speech corpus | 92/20 speakers | 16 h of records | Kathania et al. [63], Vecchiotti et al. [93] |

| PF-STAR children’s speech corpus | 122/60 speakers | 9.4 h | Kathania et al. [63], Vecchiotti et al. [93] |

| EmotionDB 1999 | 7 emotion classes | 3188 records | Garcia-Ceja et al. [58] |

| Surrey Audio Visual Expressed Emotion (SAVEE) database | 7 emotions | 4 subjects, 480 utterances | Lalitha et al. [66], Vryzas et al. [94] |

| VOCE Corpus Database | Stress levels | 38 raw recordings (638 min of speech) | Shahnawazuddin et al. [89] |

| Universal Access research (UA-corpus) | Two health classes (palsy/no palsy) | 19 speakers | Celin et al. [53] |

| Medical sound datasets | |||

| Gastrointestinal Sound Dataset | 6 kinds of body sounds | 43,200 audio segments | Zheng et al. [106] |

| PhysioNet CinC Dataset | 2 classes (normal/abnormal) | 3240 audio files | Jeong et al. [61], Koike et al. [64] |

| PASCAL Heart Sound Challenge (HSC) A and B datasets | 5 classes of heart sounds | A: 176 records B: 656 records | Jeong et al. [61] |

| Munich-Passau Snore Sound Corpus (MPSSC) | 219 subjects | 828 snore events | Zhang et al. [101] |

| ICBHI Challenge database | 4 classes | 6898 cycles (5.5 h) | Basu and Rana [51], Chanane and Bahoura [54], Zhao et al. [104], Rituerto-González et al. [87] |

| Natural datasets | |||

| BIRDZ | 12 classes | 3101 samples | Nanni et al. [77] |

| CAT | 10 sound classes | 3000 samples | Nanni et al. [77] |

| NARW calls dataset | 2 classes | 24 h | Padovese et al. [82] |

| Environmental sound datasets | |||

| Audioset dataset | 632 audio event classes | 2,084,320 sound clips | Padhy et al. [81] |

| DCASE dataset | 11 sound classes | 20 sound files | Wyatt et al. [97], Ykhlef et al. [100], Zhang et al. [102], Esmaeilpour et al. [57], Imoto [60] |

| Emotional Soundscapes database | na | 1213 clips | Mertes et al. [74] |

| TAU Urban Acoustic Scenes | 10 acoustic scenes | 64 h of audio | Diffallah et al. [56], Ma et al. [72] |

| Mivia Road Audio Events Dataset | 2 classes (car crash/tire skidding) | 400 records | Greco et al. [59] |

| Urbansound8K (US8K) | 10 sound event classes | 302 labeled sound recordings | Davis and Suresh [55], Esmaeilpour et al. [57], Lu et al. [71], Madhu and Kumaraswamy [73], Singh and Joshi [90], Mushtaq and Su [75], Mushtaq et al. [76], Salamon et al. [88] |

| ESC-10, ESC-50 | 50 classes | 2000 (ESC-50) | Esmaeilpour et al. [57], Mushtaq and Su [75], Mushtaq et al. [76], Zhang et al. [102], Wyatt et al. [97] |

| Real Word Computing Partnership Sound Scene Database (RWCP-SSD) | 105 kinds of environmental sounds | 155,568 words | Ozer et al. [80] |

| Sound Events for Surveillance Applications (SESA) | 4 sound classes | 585 (480/105) | Greco et al. [59] |

| TUT acoustic scenes | 15 acoustic scenes | 312 segments (52 min) | Leng et al. [69], Yang et al. [98] |

| YBSS-200 | 10 sound classes | 2000 (1600/400) | Singh and Joshi [90] |

| Features/Feature Extraction Methods | Application | References |

|---|---|---|

| BoAW | Medical sound recognition | Zhang et al. [101] |

| cepstral mean, variance normalization (CMVN) | Speech recognition | Pervaiz et al. [83] |

| chromagram, spectral contrast, spectral centroid, spectral roll-off | Speech and breathing sound recognition | Tran and Tsai [92] |

| Constant Q transform (CQT) | Lung (respiratory) sound classification | Chanane and Bahoura [54], Zhao et al. [103] |

| Data De-noising Auto Encoder | Respiratory sound classification | Lella and Pja [68] |

| Empirical mode decomposition (EMD) | Lung (respiratory) sound classification | Chanane and Bahoura [54] |

| Filter-bank features | Speech recognition | Long et al. [70] |

| Gammatonegram | Sound event recognition | Greco et al. [59] |

| GCC-PHAT Pattern features | Speaker recognition | Wang, Yu et al. [96] |

| IFFT | Speaker recognition | Zheng et al. [105] |

| log-gammatone spectrogram | Environmental sound classification | Zhang et al. [102] |

| Log-Mel | Multiple applications | Leng et al. [69], Qian et al. [85], Salamon et al. [88], Sugiura et al. [91], Wang, Yang et al. [95], Diffallah et al. [56], Ma et al. [72], Wang, Yu et al. [96], Yang et al. [98], Yella and Rajan [99], Lu et al. [71], Rituerto-González et al. [87], Koszewski and Kostek [65], Mushtaq and Su [75], Singh and Joshi [90] |

| Mel filter bank energy | Speech emotion recognition | Praseetha and Joby [84] |

| Mel Frequency Cepstral Coefficient (MFCC) | Mushtaq and Su [75], Basu and Rana [51], Vecchiotti et al. [93], Zheng et al. [106], Davis and Suresh [55], Novotny et al. [78], Nugroho et al. [79], Padovese et al. [82], Pervaiz et al. [83], Shahnawazuddin et al. [89], Ykhlef et al. [100], Imoto [60], Ramesh et al. [86], Tran and Tsai [92], Zhao et al. [104], Wang, Yang et al. [95], Koszewski and Kostek [65], Garcia-Ceja et al. [58] | |

| Mel spectrogram | Multiple applications | Mushtaq and Su [75], Mushtaq et al. [76], Padhy et al. [81], Billah and Nishimura [52], Tran and Tsai [92], Vryzas et al. [94], Madhu and Kumaraswamy [73], Wyatt et al. [97] |

| Mel-Frequency Cepstral Coefficient, Gammatone frequency cepstral coefficient (MF-GFCC) | Speech recognition | Kadyan et al. [62] |

| Mel-STFT | Lung sound classification | Chanane and Bahoura [54] |

| MFCC, inverted MFCC (IMFCC), extended MFCC, extended IMFCC, LPC, Mel, Bank filter bank-derived features | Speech recognition | Lalitha et al. [66] |

| Multi-resolution feature extraction | Speech recognition | Celin et al. [53] |

| Short Time Fourier Transform (STFT) | Medical sound recognition, speech recognition | Greco et al. [59], Jeong et al. [61], Kathania et al. [63] |

| SIFT | Sound classification | Ozer et al. [80] |

| Spectral features (Spectral Centroid, RMS, Spectral Bandwidth, Spectral Contrast, Spectral Flatness, Spectral Roll-off) | Speaker recognition | Mertes et al. [74], Zhao et al. [104] |

| Spectrogram | Heart sound recognition, sound quality evaluation, animal audio classification | Koike et al. [64], Lee and Lee [67], Nanni et al. [77] |

| Speed Up Robust Feature (SURF) | Environmental sound classification | Esmaeilpour et al. [57] |

| STFT | Lung sounds classification | Chanane and Bahoura [54], Zheng et al. [105] |

| Waveform based features | Music processing | Koszewski and Kostek [65] |

| Zero crossing rate, energy, entropy of energy, spectral centroid, spectral spread, spectral entropy, spectral flux, spectral roll-off | Emotion recognition, respiratory sound classification | Garcia-Ceja et al. [58], Ramesh et al. [86] |

| Reference | Data Augmentation Methods | Applications |

|---|---|---|

| Basu and Rana [51] | random noise, time stretching | Respiratory sound classification |

| Billah and Nishimura [52] | mixup | Chewing and swallowing sound classification |

| Celin et al. [53] | multi resolution | Speech recognition |

| Chanane and Bahoura [54] | Time stretching, spectrogram flipping, Vocal tract length perturbation (VTLP), | Lung sounds classification |

| Davis and Suresh [55] | Time Stretching, pitch shifting, Dynamic range compression (DRC), background noise, Linear prediction cepstral coefficients (LPCC) | Environmental sound classification |

| Diffallah et al. [56] | Mix up | Acoustic scene classification |

| Esmaeilpour et al. [57] | Weighted Cycle-Consistent Generative Adversarial Network (WCCGAN) | Environmental sound classification |

| Garcia-Ceja et al. [58] | Random oversampling | Emotion recognition |

| Greco et al. [59] | Adding noise attenuating or amplifying the energy | Sound event recognition |

| Imoto [60] | Mask, overwrite, random copy, swap | Acoustic scene classification |

| Jeong et al. [61] | random noise, salt, pepper noise, SpecAugmentation (random frequency masking, time masking) | Cardiac sound classification |

| Kadyan et al. [62] | Adding noise (factory, babble, white) | Speech recognition |

| Kathania et al. [63] | Adding noise (factory, babble, white, volvo) | Speech recognition |

| Koike et al. [64] | trimming, scaling frequency masking, time masking, isation, random erase | Heart sound classification |

| Koszewski and Kostek [65] | mixup approach (linear interpolation), scale augmentation | Music classification |

| Lalitha et al. [66] | Synthetic Minority Oversampling Technique (SMOTE) | Emotion recognition |

| Lee and Lee [67] | Bayesian approach, grayscale | Sound quality evaluation |

| Lella and Pja [68] | Stretching Time, Shift Pitch, Compression of Range Dynamically, Background of Noise | Respiratory sound classification |

| Leng et al. [69] | Topic model-LDA (Latent Dirichlet Allocation) | Acoustic scene classification |

| Long et al. [70] | speed, volume, noise perturbation; SpecAugment | Speech recognition |

| Lu et al. [71] | Time stretch, pitch shift 1, pitch shift, dynamic range compression, background noise | Environmental sound classification |

| Ma et al. [72] | Mix-up, Image Data Generator, temporal corp | Acoustic scene classification |

| Madhu and Kumaraswamy [73] | GAN, time stretching, Pitch shifting, background noise (BG), Dynamic range compression (DRC) | Environmental sound classification |

| Mertes et al. [74] | WaveGAN | Soundscape classification |

| Mushtaq and Su [75] | Offline augmentation (pitch shifting, silence trimming, time stretch, adding white noise) | Environmental sound classification |

| Mushtaq et al. [76] | Augmentation 1: (Zoom, Width shift, Fill mode, Brightness, Rotation, Height shift, Shear, Horizontal flip). Augmentation 2: (pitch shift, time stretch, trim silences) | Environmental sound classification |

| Nanni et al. [77] | Audiogmenter, image augmentation (Reflection, Rotation, Translation); Signal augmentation (speed scaling, pitch shift, volume Gain range, random noise, Time shift); Spectrogram augmentation (Randomshift, SameClass Sum, VTLN, Equalized Mixture Data Augmentation, Timeshift, random Image Warp) | Animal audio classification |

| Novotny et al. [78] | Reverberation, MUSAN noises, music, Babble noise, static noise | Speaker recognition |

| Nugroho et al. [79] | Adding white noise, pitch shifting, time stretching | Speaker recognition |

| Ozer et al. [80] | Adding noise: Destroyer Control Room, Speech Babble, Factory Floor-1, Jet Cockpit-1 | Sound event recognition |

| Padhy et al. [81] | Background white noise, Time shifting, Speed Tuning, Mixing white noise with stretching or shifting, Mixup | Audio classification |

| Padovese et al. [82] | SpecAugment (Time warping, Masking; time, Frequency masking), Mixup | Animal sound classification |

| Pervaiz et al. [83] | Noise (six types) | Speech recognition |

| Praseetha and Joby [84] | Time stretching, embedding noise | Speech emotion recognition |

| Qian et al. [85] | GAN under all noisy condition, additive noise, channel distortion, reverberation. | Speech recognition |

| Ramesh et al. [86] | GAN | Respiratory sound classification |

| Rituerto-González et al. [87] | Time Domain, Time-Frequency Domain (Vocal tract length perturbation (VTLP), volume adjusting, noise addition, pitch adjusting, speed adjusting | Speaker identification |

| Salamon et al. [88] | offline augmentation (pitch shifting, time shifting, dynamic range compression (DRC), background noise) | Environmental sound classification |

| Shahnawazuddin et al. [89] | oversampling (SMOTE), Pitch, Speed Modifications | Speech recognition |

| Singh and Joshi [90] | Background noise | Sound classification |

| Sugiura et al. [91] | Mixup, synthetic noise (shouting, brass) | Audio classification |

| Tran and Tsai [92] | Background noise addition, time-shifting, time-stretching, value augmentation, a combination | Speaker identification |

| Vecchiotti et al. [93] | Pitch modification, time shift | Speaker identification |

| Vryzas et al. [94] | (1) Pitch alterations with constant tempo; (2) Overlapping windows | Speech emotion recognition |

| Wang, Yang et al. [95] | Generative adversarial network (GAN) and variational autoencoder (VAE) | Speech recognition |

| Wang, Yu et al. [96] | Room Impulse Response generator | Speaker recognition |

| Wyatt et al. [97] | noise | Environment sound classification |

| Yang et al. [98] | label smoothing mixup (spatial-mixup) technique | Acoustic scene classification |

| Yella and Rajan [99] | waveGAN | Respiratory sound recognition |

| Ykhlef et al. [100] | not disclosed | Sound event detection |

| Zhang et al. [101] | semi-supervised conditional Generative Adversarial Networks (scGANs) | Snore sound classification |

| Zhang et al. [102] | time, frequency masking, mixup | Environmental sound classification |

| Zhao et al. [103] | Auxiliary classifier generative adversarial network (AC-GAN), shifting, stretching traditional method | Respiratory sound classification |

| Zhao et al. [104] | GriffinLim, WORLD Vocode | Speaker recognition |

| Zheng et al. [105] | spectrum interference-based data augmentation (random cropping, random label, soft label, amplitude interference, spectrum interference | Radio signal classification |

| Zheng et al. [106] | data sampling, class balance sampling, audio transformation | Biomedical sound detection |

| Classification Method | References | Application |

|---|---|---|

| Adaboost | Ykhlef et al. [100] | Sound event detection |

| AlexNet | Diffallah et al. [56], Esmaeilpour et al. [57], Mushtaq et al. [76] | Environmental sound and acoustic scene classification |

| Audio Event Recognition Network (AReN) | Greco et al. [59] | Sound event recognition |

| Autoencoder DNN | Ma et al. [72], Novotny et al. [78] | Acoustic scene classification, speaker recognition |

| Bidirectional Encoder Representations from Transformers (BERT) | Wyatt et al. [97] | Environmental sound classification |

| Bidirectional Gated Recurrent Neural Networks (BiGRU) | Zhao et al. [104], Zheng et al. [106] | Sound event detection, speaker recognition |

| Convolutional Neural Network (CNN) | Chanane and Bahoura [54], Davis and Suresh [55], Jeong et al. [61], Koike et al. [64], Lee and Lee [67], Lella and Pja [68], Lu et al. [71], Pervaiz et al. [83], Salamon et al. [88], Sugiura et al. [91], Tran and Tsai [92], Vryzas et al. [94], Wang, Yu et al. [96], Yella and Rajan [99], Ykhlef et al. [100], Zhao et al. [103], Zheng et al. [106] | Various applications |

| Deep CNN (DCNN) | Madhu and Kumaraswamy [73], Mushtaq and Su [75], Zheng et al. [105] | Various applications |

| Deep neural network (DNN) | Kadyan et al. [62], Nugroho et al. [79], Padovese et al. [82], Pervaiz et al. [83], Shahnawazuddin et al. [89] | Various applications |

| DenseNet | Koszewski and Kostek [65], Mushtaq et al. [76] | Music classification, environmental sound classification |

| DNN-hidden Markov model (HMM) | Celin et al. [53], Kathania et al. [63] | Speech recognition |

| DNN trained with Restricted Boltzmann Machine | Ozer et al. [80] | Sound classification |

| Ensemble CNN | Rituerto-González et al. [87] | Speaker identification |

| Gated Recurrent Unit (GRU) | Basu and Rana [51], Praseetha and Joby [84], Zhang et al. [101] | Respiratory sound classification, speech emotion recognition, snore sound classification |

| GoogLeNet | Esmaeilpour et al. [57], Nanni et al. [77] | Environmental sound classification |

| LSTM | Billah and Nishimura [52], Leng et al. [69], Long et al. [70], Pervaiz et al. [83], Vecchiotti et al. [93], Zheng et al. [106] | Multiple applications |

| Multi-channel CNN | Padhy et al. [81] | Audio sound recognition |

| Multilayer perceptron (MLP) | Lalitha et al. [66], Leng et al. [69], Tran and Tsai [92] | Speech emotion recognition, acoustic scene classification, medical sound classification |

| Random forest (RF) | Garcia-Ceja et al. [58], Lalitha et al. [66], Ramesh et al. [86], Ykhlef et al. [100] | Speech emotion recognition, medical sound classification, sound event detection |

| Recurrent Neural Network (RNN) | Praseetha and Joby [84], Zhang et al. [102], Zheng et al. [106] | Speech emotion recognition, environmental sound classification, sound event detection |

| REPTree (RT) | Lalitha et al. [66] | Speech emotion recognition |

| ResNet | Diffallah et al. [56], Imoto [60], Mushtaq et al. [76], Wang, Yang et al. [95] | Acoustic scene classification, environmental sound classification |

| SqueezeNet | Mushtaq et al. [76] | Environmental sound classification |

| Support Vector Machine (SVM) | Lalitha et al. [66], Mertes et al. [74], Ramesh et al. [86], Tran and Tsai [92], Ykhlef et al. [100], Zhang et al. [101] | Speech emotion recognition, audio classification, sound event detection, biomedical sound classification |

| Time-Delay Neural Network (TDNN) | Long et al. [70], Wang, Yang et al. [95] | Speech recognition |

| VGG | Imoto [60], Mushtaq et al. [76], Nanni et al. [77], Singh and Joshi [90], Leng et al. [69] | Acoustic scene and environmental sound classification, animal sound classification |

| Xception | Yang et al. [98] | Acoustic scene classification |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Abayomi-Alli, O.O.; Damaševičius, R.; Qazi, A.; Adedoyin-Olowe, M.; Misra, S. Data Augmentation and Deep Learning Methods in Sound Classification: A Systematic Review. Electronics 2022, 11, 3795. https://doi.org/10.3390/electronics11223795

Abayomi-Alli OO, Damaševičius R, Qazi A, Adedoyin-Olowe M, Misra S. Data Augmentation and Deep Learning Methods in Sound Classification: A Systematic Review. Electronics. 2022; 11(22):3795. https://doi.org/10.3390/electronics11223795

Chicago/Turabian StyleAbayomi-Alli, Olusola O., Robertas Damaševičius, Atika Qazi, Mariam Adedoyin-Olowe, and Sanjay Misra. 2022. "Data Augmentation and Deep Learning Methods in Sound Classification: A Systematic Review" Electronics 11, no. 22: 3795. https://doi.org/10.3390/electronics11223795

APA StyleAbayomi-Alli, O. O., Damaševičius, R., Qazi, A., Adedoyin-Olowe, M., & Misra, S. (2022). Data Augmentation and Deep Learning Methods in Sound Classification: A Systematic Review. Electronics, 11(22), 3795. https://doi.org/10.3390/electronics11223795