Abstract

Internet of Things (IoT) has emerged as an enabling platform for smart cities. In this paper, the joint optimization of the IoT devices' offloading decisions and transmit powers and the MEC servers' CPU frequencies is investigated for a cellular network with multiple mobile edge computing (MEC) servers and multiple IoT devices. An optimization problem is formulated to minimize the weighted sum of the computing pressure on the primary MEC server (PMS), the sum energy consumption of the network, and the task dropping cost. The formulated problem is a mixed integer nonlinear programming (MINLP) problem, which is difficult to solve because it contains strongly coupled constraints and discrete integer variables. Taking the dynamics of the environment into account, a deep reinforcement learning (DRL)-based optimization algorithm is developed to solve this nonconvex problem. Simulation results demonstrate the correctness and effectiveness of the proposed algorithm.

1. Introduction

The smart city is a promising urban paradigm that improves citizens' quality of experience (QoE) through advanced information and communication technology (ICT) infrastructure and massive numbers of Internet of Things (IoT) devices [1,2,3]. A practical problem is that IoT devices are usually low-cost, with limited computing power and storage capacity. Therefore, it is hard for IoT devices to complete compute-intensive and latency-sensitive tasks on their own. An intuitive way to alleviate this problem is to adopt cloud computing for remote task computation. However, most cloud computing servers are deployed far away from the IoT devices, so offloading the IoT devices' tasks to them causes severe transmission delay. Hence, traditional cloud computing can hardly satisfy the latency requirements of smart city applications. To solve this issue, researchers have proposed the concept of mobile edge computing (MEC).

In MEC systems, MEC servers are deployed at the edge of the network to provide cloud-like computing services for IoT devices [4,5]. IoT devices offload their compute-intensive tasks to the MEC servers for execution. Since MEC servers are deployed close to the IoT devices, the offloading latency is significantly reduced compared with cloud computing. Hence, MEC has been considered a promising solution for providing ultra-low-latency computation services in smart cities [1,2,3].

Many works have studied resource allocation and caching problems in MEC systems for IoT and IoT-related applications. A multi-user MEC network consisting of one MEC server and multiple wireless devices was considered in [6], where the weighted sum computation rate of all the wireless devices was maximized. The computing mode selection and the system resource allocation were jointly optimized by an algorithm based on the alternating direction method of multipliers (ADMM) decomposition technique. In [7], a MEC system with a multi-antenna access point (AP) and K single-antenna users was studied. The beamforming vector of the AP, the CPU frequencies, the numbers of offloaded bits, and the time allocation of the users were jointly optimized to minimize the energy consumption of the AP. A device-to-device (D2D)-MEC system including one MEC server and multiple user devices was considered in [8], with the goal of maximizing the number of devices served by the system under communication and computation resource constraints. Unlike cloud servers, a MEC server has limited computing power. Hence, in single base station (BS) or single MEC server scenarios, IoT devices may suffer from long service response times and even service failures during periods of peak demand. The authors in [9] studied a heterogeneous network consisting of a multi-antenna macro-cell BS and multiple small-cell BSs. The offloading decisions and the offloading and computation resource allocation were optimized to minimize the total energy consumption of the devices within the coverage of the BSs. In [10], a dense small-cell network with multiple MEC servers was considered, and the spatial demand coupling, service heterogeneity, and decentralized coordination problems were addressed by a collaborative service placement algorithm. In [11], the weighted sum of the differences between the observed delay and the corresponding delay requirement of each slice was minimized by optimizing the users' offloading decisions and the communication and computing resource allocation in a multi-cell MEC network.

The above works focused on MEC problems in static environments, which are a special case of dynamic environments. In a dynamic environment, the MEC system state changes randomly and unpredictably, which better reflects practical scenarios. In static environments, MEC systems are mainly concerned with short-term utility, whereas in dynamic environments, long-term utility is of concern. Edge intelligence empowered by artificial intelligence (AI) is a promising way to optimize system performance in smart city IoT [12,13,14]. In [15], the power control and computing resource allocation problem in an Industrial Internet of Things MEC network was studied, and a deep reinforcement learning (DRL)-based dynamic resource management algorithm was proposed to minimize the long-term average delay of the tasks. In [16], a content caching problem was investigated, and an actor-critic DRL-based algorithm was proposed to maximize the cache hit rate. In [17], the task migration problem was studied in a multi-MEC server and multi-user network, and a multi-agent DRL task migration algorithm was proposed to solve the formulated problem. In [18], a multi-user end-edge-cloud orchestrated network was proposed, and a DRL-based computation offloading and resource allocation strategy was designed to minimize the energy consumption of the system.

In practical scenarios, many metrics can be used to measure the performance of a MEC system; hence, system requirements are usually multifaceted. The works mentioned above mainly considered single-objective optimization, which may not generalize to some practical MEC systems. Motivated by these facts, in this paper we propose a multi-MEC server and multi-IoT device cellular network structure and investigate the minimization of a weighted sum of multiple objectives. Weighted-sum multi-objective optimization problems in dynamic MEC systems were also studied in [19,20,21], but their optimization objectives and system models differ from ours. The key differences between the relevant works and our work are shown in Table 1. The main contributions of this paper are summarized as follows:

Table 1.

Comparison of relevant works.

- (1) A multi-MEC server and multi-IoT device cellular network structure is proposed. A high-cost, high-performance primary MEC server (PMS) with relatively strong computing power is deployed at the BS, and multiple low-cost secondary MEC servers (SMSs) with relatively weak computing power are deployed within the coverage area of the BS.

- (2) An optimization problem is formulated to minimize the weighted sum of multiple objectives, namely, the computing pressure on the PMS, the sum energy consumption of the network, and the task dropping cost. The formulated problem is a nonconvex mixed integer nonlinear programming (MINLP) problem, which is solved by the proposed DRL-based optimization algorithm.

- (3) Simulation results are presented to evaluate the performance of the proposed algorithm and to demonstrate its correctness and effectiveness.

The remainder of this paper is organized as follows. Section 2 presents the system model and formulates the optimization problem. The proposed DRL-based optimization algorithm is described in Section 3. The complexity and convergence analysis is given in Section 4. Simulation results are provided in Section 5. Finally, Section 6 concludes this paper.

2. System Model

In this section, we first introduce the proposed multi-MEC server and multi-IoT device cellular network, the channel model, and the computation model. Based on these models, we then formulate the optimization problem.

2.1. Network and Channel Model

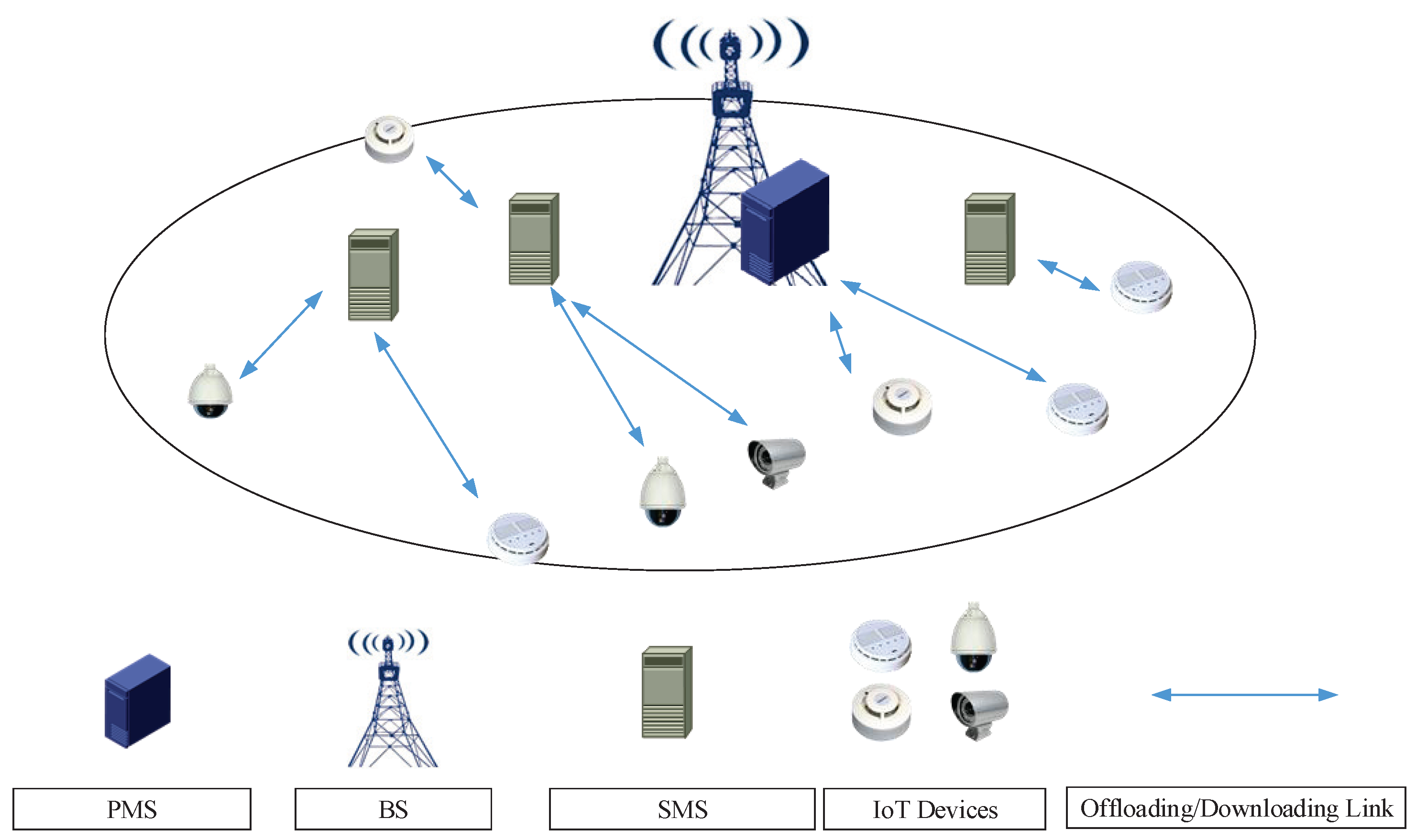

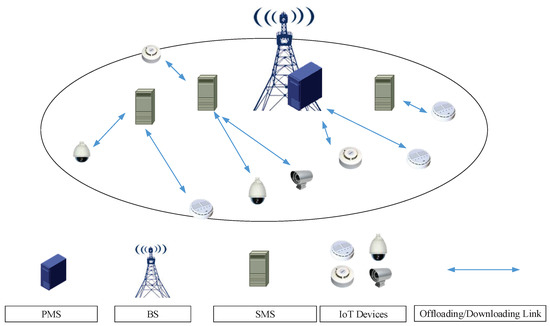

As shown in Figure 1, a multi-MEC server and multi-IoT device cellular network is considered, which consists of a high-performance PMS, M SMSs with relatively weak computing power, and K IoT devices. The computing power of the PMS is much stronger than that of the SMSs. The PMS is deployed at the BS, and the SMSs are deployed at APs distributed at different locations within the coverage area of the BS. One example of this network is a UAV-assisted MEC system, in which the PMS is located at the BS and the SMSs are UAVs equipped with MEC servers of limited computing power. For brevity, the notation below does not distinguish between the PMS and the BS, or between an SMS and its AP. We assume that each SMS is low-cost and can easily be deployed and removed according to the requirements of the MEC system. Let denote the set of the MEC servers, where 0 denotes the PMS and the others denote the SMSs. Let denote the set of the IoT devices. We assume that all IoT devices and MEC servers are equipped with a single antenna. By adopting multi-antenna technologies, our work can be extended to multi-antenna scenarios [7,9,22,23].

Figure 1.

The illustration of the multi-MEC server cellular network.

It is assumed that the system operates in a time-slotted manner with time-slot length . In this paper, we are concerned with the long-term return over T consecutive time-slots. The set of time-slots is denoted as . Let denote the channel power gain between IoT device k and MEC server m at time-slot t. Similar to [7,24], we assume that the wireless channels between the IoT devices and the MEC servers remain constant within each time-slot and vary across time-slots. Motivated by the works in [25,26], we adopt a -element channel power gain state set to capture the time-varying characteristics of the , denoted as , i.e., .
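The following sketch illustrates the time-varying channel. It simulates the channel power gain of a single device-to-server link as a finite-state Markov chain over a discrete gain set, in the spirit of [25,26]. The gain values and the transition matrix are hypothetical placeholders, not the settings used in this paper. The gain is held constant within a time-slot and may change only between slots.

```python
import random

# Hypothetical discrete channel power gain states (linear scale), low to high.
GAIN_STATES = [1e-7, 5e-7, 1e-6]

# Hypothetical transition matrix: row i gives P(next state = j | current state = i).
TRANSITIONS = [
    [0.6, 0.3, 0.1],
    [0.2, 0.6, 0.2],
    [0.1, 0.3, 0.6],
]


def simulate_gains(num_slots, start_state=0, seed=None):
    """Return one channel power gain per time-slot.

    The gain stays fixed within a slot and moves to a new state between
    slots according to TRANSITIONS (a finite-state Markov chain).
    """
    rng = random.Random(seed)
    state = start_state
    gains = []
    for _ in range(num_slots):
        gains.append(GAIN_STATES[state])
        # Draw the next state from the row of the current state.
        state = rng.choices(range(len(GAIN_STATES)), weights=TRANSITIONS[state])[0]
    return gains


# Example: gains of one IoT device to MEC server link over T = 40 slots.
trace = simulate_gains(40, seed=1)
```

In a full simulator, one such chain would be kept per device-server pair.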

2.2. Computation Task Model

At time-slot t, the computation task of IoT device k is denoted as , which is defined by a tuple , where denotes the size (in bits) of task , denotes the number of CPU cycles required to compute 1 bit of the task (i.e., the computational complexity), and is the latency requirement of task . Similar to the assumption in [27], we assume that at the beginning of each time-slot t, each IoT device k has a new task arrival whose size is randomly generated from the set , and whose computational complexity belongs to the set . For simplicity, the latency requirement of each task is set to .
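The per-slot task arrivals described above can be sketched as follows. The sketch assumes that each task size and complexity is drawn uniformly at random from its candidate set. The candidate sets below are hypothetical, because the paper's sets are not reproduced here. The 100 ms slot length is the value used in Section 5. As an additional assumption, each task's latency requirement is set equal to the slot length.

```python
import random
from dataclasses import dataclass

SLOT_LENGTH_S = 0.1  # 100 ms time-slot, as in Section 5

# Hypothetical candidate sets (the paper's actual sets are not reproduced here).
TASK_SIZES_BITS = [100e3, 200e3, 300e3]
CYCLES_PER_BIT = [500, 1000]


@dataclass(frozen=True)
class Task:
    size_bits: float       # size of the task in bits
    cycles_per_bit: float  # CPU cycles needed per bit (computational complexity)
    deadline_s: float      # latency requirement (assumed equal to the slot length)

    @property
    def total_cycles(self) -> float:
        return self.size_bits * self.cycles_per_bit


def generate_tasks(num_devices, rng):
    """One new task per IoT device at the beginning of a time-slot."""
    return [
        Task(rng.choice(TASK_SIZES_BITS), rng.choice(CYCLES_PER_BIT), SLOT_LENGTH_S)
        for _ in range(num_devices)
    ]


tasks = generate_tasks(num_devices=5, rng=random.Random(0))
```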

To facilitate resource management, a virtual system operator (VSO) is deployed at the BS. The VSO is responsible for collecting network information (e.g., the channel state information and the size and computational complexity of each IoT device's task) and for allocating computation resources to the IoT devices. As the computing power of the IoT devices is typically weak, we assume that the task of each IoT device must be entirely offloaded to one MEC server for computation through a wireless link. The task offloading decision variable of IoT device k is denoted as , where denotes that IoT device k's computation task is offloaded to MEC server m for execution at time-slot t.

Let denote the time duration of task offloading from IoT device k to MEC server m at time-slot t. The offloaded task is then processed by MEC server m in the remaining time duration . The rate at which IoT device k offloads task data to MEC server m at time-slot t can be expressed as

where denotes the available channel bandwidth between IoT device k and MEC server m, denotes the transmit power of IoT device k at time-slot t, and is the noise power at MEC server m. The energy consumption of IoT device k for task offloading at time-slot t is expressed as

The corresponding computation energy consumption of the MEC server m can be expressed as

where denotes the CPU frequency of the MEC server m allocated to the IoT device k’s task at time-slot t; is the effective capacitance coefficient of the MEC server m at time-slot t, which is determined by the chip architecture [6,7].
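The rate and energy expressions are not reproduced in this section. The sketch below therefore uses forms that are standard in the MEC literature (e.g., [6,7]) and consistent with the definitions above:

- a Shannon-type offloading rate B log2(1 + p h/σ²);
- a transmission energy equal to transmit power times offloading time;
- a computation energy of κ f² per CPU cycle.

These forms, and all numeric values, are assumptions for illustration and may differ from the paper's exact equations.

```python
import math


def offloading_rate(bandwidth_hz, tx_power_w, gain, noise_w):
    """Achievable rate (bit/s), assuming r = B * log2(1 + p * h / sigma^2)."""
    return bandwidth_hz * math.log2(1.0 + tx_power_w * gain / noise_w)


def transmission_energy(tx_power_w, offload_time_s):
    """Energy (J) spent by the IoT device: transmit power times offloading time."""
    return tx_power_w * offload_time_s


def computation_energy(kappa, cpu_freq_hz, total_cycles):
    """Energy (J) at the MEC server, assuming kappa * f^2 joules per CPU cycle."""
    return kappa * cpu_freq_hz ** 2 * total_cycles


# Hypothetical example: 200 kHz bandwidth, 0.1 W transmit power, received SNR of 100.
rate = offloading_rate(200e3, 0.1, 1e-6, 1e-9)
e_tx = transmission_energy(0.1, 0.05)
e_cp = computation_energy(1e-27, 1e9, 1e8)
```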

2.3. Problem Formulation

If the computing pressure of the PMS is too high, i.e., if the VSO allocates too many tasks to the PMS, the PMS is more likely to crash. As the PMS has much stronger computing power than the SMSs, a crash of the PMS seriously affects the reliability of the MEC system. Meanwhile, the energy consumption and the task completion rate are also very important to the MEC system. Therefore, in this paper, we aim to minimize the weighted sum of the computing pressure of the PMS, the sum energy consumption of the MEC servers and the IoT devices, and the task dropping cost. The corresponding optimization problem is formulated as

where the first term of the objective function represents the computing pressure of the PMS, and the second term represents the sum energy consumption of the network plus the task dropping cost. and are the weights of these two terms, respectively: means that the corresponding objective is not considered, and means that it is considered. are the normalization factors for the terms. is the discount factor, which reflects the relative importance of future rewards versus the present reward [28]. and denote the maximal transmit power of IoT device k and the maximal available CPU frequency of MEC server m, respectively. is the task dropping cost of IoT device k at time-slot t, and is the indicator function, which is given as

(4b) is the offloading decision constraint, which ensures that each IoT device's task is allocated to exactly one MEC server. (4c) and (4d) are the computation task constraints, which ensure that each IoT device's task can be offloaded and completed. (4e) is the transmit power constraint of the IoT devices. (4f) is the CPU frequency constraint, where we assume that the IoT devices equally share the CPU of MEC server m, . (4g) and (4h) are the task offloading time and weight constraints, respectively.
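A minimal sketch of the per-slot objective and its discounted sum over T slots is given below. It rests on three assumptions:

- the computing pressure of the PMS is measured by the number of tasks offloaded to it, which is the quantity plotted in Section 5;
- a dropped task contributes a fixed dropping cost instead of energy;
- the discount is applied as γ^t, with the first slot undiscounted.

The function names and example values are hypothetical. Consistent with Section 3, the DRL reward is taken as the negative of the per-slot cost.

```python
def slot_cost(decisions, energies_j, dropped, w_pressure, w_energy_drop,
              norm_pressure, norm_energy_drop, drop_cost):
    """Weighted cost of one time-slot (lower is better).

    decisions[k]: index of the MEC server chosen for device k (0 = PMS)
    energies_j[k]: device plus server energy (J) if device k's task completes
    dropped[k]: True if device k's task cannot meet its constraints
    Assumptions: PMS pressure = number of tasks sent to the PMS, and a
    dropped task adds its dropping cost instead of its energy.
    """
    pressure = sum(1 for m in decisions if m == 0)
    energy = sum(e for e, d in zip(energies_j, dropped) if not d)
    dropping = drop_cost * sum(1 for d in dropped if d)
    return (w_pressure * pressure / norm_pressure
            + w_energy_drop * (energy + dropping) / norm_energy_drop)


def discounted_cost(slot_costs, gamma):
    """Discounted sum of per-slot costs over T consecutive slots."""
    return sum(gamma ** t * c for t, c in enumerate(slot_costs))


# Example slot: devices 0 and 2 use the PMS, device 3's task is dropped.
example = slot_cost([0, 1, 0, 2], [0.1, 0.2, 0.3, 0.4], [False, False, False, True],
                    w_pressure=1, w_energy_drop=1, norm_pressure=4,
                    norm_energy_drop=1.0, drop_cost=1.0)
reward = -example  # the DRL agent maximizes the negative cost
```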

Due to the binary variables and the highly coupled constraints, problem is a nonconvex MINLP problem. Furthermore, the computation tasks of the IoT devices and the channel gains vary randomly over the T consecutive time-slots. Hence, the problem cannot be solved at the beginning of the T consecutive time-slots, when these future values are still unknown. Thus, traditional optimization-based methods are not suitable for solving problem .

3. Proposed DRL-Based Optimization Algorithm

To address the above issue, we propose a DRL-based optimization algorithm in this section. Specifically, we utilize the importance-sampling-based parameterized policy gradient approach (PPGA) DRL algorithm [28]. To apply the DRL-based algorithm, we first define the system state, action, reward, and policy of the MEC system as follows:

(1) System state : The system state at time-slot t is characterized by the channel power gains, the sizes (in bits) of the computation tasks, and the corresponding task complexities, i.e., .

(2) Action : is the set of the offloading decisions of the IoT devices, i.e., .
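The state and action defined above can be represented concretely as follows. This is one possible encoding, assumed for illustration:

- the state flattens the K×(M+1) channel gains, followed by the K task sizes and the K complexities;
- a joint offloading decision (one server index per device, with 0 for the PMS) is mapped to a single integer, so that a categorical policy can output it.

The joint action space therefore has (M+1)^K elements, which grows exponentially with the number of devices.

```python
def state_vector(gains, sizes_bits, cycles_per_bit):
    """Flatten the system state: gains[k][m] for every device/server pair,
    followed by the task sizes and the complexities of all K devices."""
    flat_gains = [g for row in gains for g in row]
    return flat_gains + list(sizes_bits) + list(cycles_per_bit)


def encode_action(decisions, num_servers):
    """Map per-device server choices (0 = PMS, 1..M = SMSs) to one integer in
    [0, num_servers ** K), using the decisions as base-num_servers digits."""
    index = 0
    for m in reversed(decisions):
        index = index * num_servers + m
    return index


def decode_action(index, num_servers, num_devices):
    """Inverse of encode_action: recover one server index per device."""
    decisions = []
    for _ in range(num_devices):
        index, m = divmod(index, num_servers)
        decisions.append(m)
    return decisions


# Example: PMS plus 2 SMSs (3 servers), 3 devices choosing SMS 2, PMS, SMS 1.
action_id = encode_action([2, 0, 1], num_servers=3)
```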

(3) Reward : After executing an action under system state at time-slot t, the VSO receives a reward . The reward of a DRL model is directly related to the optimization objective of the system. Therefore, the reward of our DRL model is determined by the objective function value of the problem at time-slot t. For a given (), the optimization problem that determines the reward is given as

Since DRL is conventionally formulated to maximize the accumulated reward, we add a minus sign before the . When are determined, the computing pressure of the PMS is also determined. Therefore, solving the problem is equivalent to solving the following problem

Based on primal decomposition theory [29], the problem can be decomposed into K subproblems. Specifically, for IoT device k and MEC server m, , the corresponding subproblem can be expressed as

If the problem is solvable, i.e., there exist feasible , , and that satisfy constraints (4c)–(4g), the optimal value of is equal to the optimal objective value of . Otherwise, if no feasible , , and exist, we set the objective value of equal to . It is worth noting that if not all of the IoT devices' tasks can be completed, our approach cannot be used to minimize the sum energy consumption of the system. According to , if we set , the optimal solution of is to set , which is a trivial and meaningless solution. Hence, the conditions and must be satisfied simultaneously when . If the problem has feasible solutions, it is transformed into , which is given as

Problem is still nonconvex and intractable due to the complex coupling among the variables , , and . To address this issue, we adopt the block coordinate descent (BCD) method to optimize , , and alternately. For any given feasible , the optimization problem is transformed into , which is given as

The above optimization problem can be further decomposed into the following two manageable sub-problems, namely,

Theorem 1.

For a given , the optimal and can be given as

respectively.

Proof.

It can readily be shown that problems and are both convex; hence, they can be solved efficiently via the Karush-Kuhn-Tucker (KKT) conditions [30]. □
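The following sketch shows what such closed-form solutions look like under the commonly used models. The models are assumptions and may differ from the paper's exact expressions:

- the offloading rate is B log2(1 + p h/σ²);
- the transmission energy is p τ;
- the server spends κ f² joules per CPU cycle.

For a given offloading time τ, both energies increase with their own variable. The optimal transmit power and CPU frequency are therefore the smallest values that still meet the rate and deadline constraints. All numbers are hypothetical.

```python
def optimal_power(size_bits, bandwidth_hz, gain, noise_w, offload_time_s):
    """Smallest transmit power that delivers size_bits within offload_time_s.

    Transmission energy p * tau grows with p, so the rate constraint is tight:
    p* = (sigma^2 / h) * (2^(L / (B * tau)) - 1).
    """
    return noise_w / gain * (2.0 ** (size_bits / (bandwidth_hz * offload_time_s)) - 1.0)


def optimal_frequency(total_cycles, slot_s, offload_time_s):
    """Lowest CPU frequency that finishes total_cycles in the remaining slot time.

    Energy kappa * f^2 * C grows with f, so the deadline constraint is tight.
    """
    return total_cycles / (slot_s - offload_time_s)


def is_feasible(p, f, p_max, f_max):
    """The task completes only if both closed-form values respect their caps."""
    return p <= p_max and f <= f_max


# Hypothetical task: 20 kbit, 1000 cycles/bit, 200 kHz, 100 ms slot, tau = 50 ms.
p_star = optimal_power(20e3, 200e3, 1e-6, 1e-9, 0.05)
f_star = optimal_frequency(20e3 * 1000, 0.1, 0.05)
```

If either value exceeds its cap, no feasible solution exists for this τ. The task is then treated as dropped, as described for the infeasible case above.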

Substituting the above results into , we have

where

According to Theorem 1, the problem has closed-form optimal solutions that are determined by the value of . Therefore, if , solving the problem is equivalent to solving problem , which has a single optimization variable . If , the problem is unsolvable.

Theorem 2.

The optimization problem is convex.

Proof.

See Appendix A. □

Based on the convexity established in Theorem 2, we adopt the bisection method to solve the problem . The bisection-based algorithm for solving problem is summarized in Algorithm 1, where denotes the objective function of .
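The bisection step can be sketched as follows. The objective here is not taken from the paper. It assumes a Shannon-type rate B log2(1 + p h/σ²), transmission energy p τ, and computation energy κ f² per cycle, with power and frequency set to their tight values for a given offloading time τ. This gives E(τ) = (σ²/h) τ (2^{L/(Bτ)} − 1) + κ C³/(Δ − τ)², where C is the total number of CPU cycles and Δ is the slot length.

Under these assumptions E is convex in τ. Bisection on the sign of dE/dτ, over the interval allowed by the power and frequency caps, therefore finds the minimizer. The `Link` container and all numbers are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    size_bits: float     # L, task size
    total_cycles: float  # C = L * cycles per bit
    bandwidth_hz: float  # B
    gain: float          # h, channel power gain
    noise_w: float       # sigma^2, noise power
    kappa: float         # effective capacitance coefficient
    slot_s: float        # Delta, time-slot length
    p_max: float         # maximal transmit power of the device
    f_max: float         # CPU frequency available to this task


def energy(tau, k):
    """Device plus server energy with power and frequency set to their tight values."""
    a = k.size_bits / k.bandwidth_hz
    e_tx = k.noise_w / k.gain * tau * (2.0 ** (a / tau) - 1.0)
    e_cp = k.kappa * k.total_cycles ** 3 / (k.slot_s - tau) ** 2
    return e_tx + e_cp


def d_energy(tau, k):
    """Derivative of energy() with respect to tau; increasing because E is convex."""
    x = k.size_bits / k.bandwidth_hz / tau
    d_tx = k.noise_w / k.gain * (2.0 ** x * (1.0 - x * math.log(2.0)) - 1.0)
    d_cp = 2.0 * k.kappa * k.total_cycles ** 3 / (k.slot_s - tau) ** 3
    return d_tx + d_cp


def solve_offload_time(k, tol=1e-12, max_iter=200):
    """Bisection on the sign of d_energy; returns None if no tau is feasible."""
    a = k.size_bits / k.bandwidth_hz
    lo = a / math.log2(1.0 + k.p_max * k.gain / k.noise_w)  # keeps p* <= p_max
    hi = k.slot_s - k.total_cycles / k.f_max                 # keeps f* <= f_max
    if lo > hi:
        return None  # constraints cannot be met: the task is dropped
    if d_energy(lo, k) >= 0.0:
        return lo    # energy already increasing at the lower end
    if d_energy(hi, k) <= 0.0:
        return hi    # energy still decreasing at the upper end
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        if d_energy(mid, k) > 0.0:
            hi = mid
        else:
            lo = mid
        if hi - lo <= tol:
            break
    return 0.5 * (lo + hi)


link = Link(size_bits=20e3, total_cycles=2e7, bandwidth_hz=200e3, gain=1e-6,
            noise_w=1e-9, kappa=1e-27, slot_s=0.1, p_max=0.1, f_max=1e9)
tau_star = solve_offload_time(link)
```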

(4) Policy : The policy denotes the mapping from the state to the action of the MEC system, i.e., , where is the parameter of the policy.

The parameter of the policy is obtained through a gradient-based method. The performance measure of the PPGA is defined as [28]

where is the value function for policy starting from initial state , and is the parameter of the policy. An analytic expression for the gradient of is provided by the policy gradient theorem [28], which is given as

where is the on-policy distribution over states, is the value of taking action in state under policy .

| Algorithm 1: A Bisection Algorithm for Solving |
|---|
| 1: Initialization: |
| 2: The bisection algorithm iteration index , maximum number of iterations , , , the tolerance errors . |
| 3: for: : |
| 4: Update . |
| 5: if then |
| 6: The optimal value of is ; |
| 7: break; |
| 8: end if |
| 9: if then |
| 10: Update ; |
| 11: end if |
| 12: if then |
| 13: Update ; |
| 14: end if |
| 15: if or then |
| 16: The optimal value of is ; |
| 17: end if |
| 18: end for |

An action-independent baseline is commonly introduced to reduce the variance of the gradient estimate during training. The policy gradient with a baseline is then given by

Off-policy methods use an exploratory behavior policy to generate behavior, while the target policy is learned from that behavior and eventually becomes the optimal policy. Importance sampling is widely used in off-policy methods; it weights the returns by the importance-sampling ratio [28]. The parameter is updated as

where is the learning rate, G is the return following time-slot t, is the estimate of at time-slot t. We adopt the estimate of the state value as the baseline, where is the weight vector of the state value function. Then, the DRL-based algorithm is summarized in Algorithm 2.
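The update described above can be sketched for a linear-softmax policy over a discrete action set with a linear state-value baseline. The linear parameterization is an assumption for illustration; the paper uses small neural networks for both the policy and the baseline. The sketch uses the per-step ratio ρ = π(a|s)/b(a|s) and the update θ ← θ + α ρ (G − v̂(s, w)) ∇ ln π(a|s, θ). This follows the importance-weighted REINFORCE-with-baseline form in [28], omitting for brevity the γ^t factor of the episodic form there. Importance-weighting the baseline update as well is a further assumption.

```python
import math


def softmax(logits):
    """Numerically stable softmax."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def policy_probs(theta, s):
    """Linear-softmax target policy: theta[a] is the weight vector of action a."""
    return softmax([sum(t * x for t, x in zip(row, s)) for row in theta])


def is_pg_update(theta, w, s, a, G, behavior_prob, alpha, beta):
    """One importance-weighted policy-gradient step with a linear baseline.

    theta: per-action weight vectors of the target policy
    w: weight vector of the baseline v(s, w) = w . s
    s: state features; a: action chosen by the behavior policy
    G: return following this time-slot; behavior_prob: b(a | s)
    Returns updated copies of (theta, w).
    """
    probs = policy_probs(theta, s)
    rho = probs[a] / behavior_prob                   # importance-sampling ratio
    delta = G - sum(wi * x for wi, x in zip(w, s))   # return minus baseline
    # Baseline step (assumed to be importance-weighted as well).
    new_w = [wi + beta * rho * delta * x for wi, x in zip(w, s)]
    new_theta = []
    for b, row in enumerate(theta):
        # Gradient of ln pi(a|s) with respect to theta[b] is (1[a == b] - pi(b|s)) * s.
        coeff = (1.0 if b == a else 0.0) - probs[b]
        new_theta.append([t + alpha * rho * delta * coeff * x for t, x in zip(row, s)])
    return new_theta, new_w


# Example: two actions; the behavior policy picked action 0 with probability 0.5.
theta1, w1 = is_pg_update([[0.0, 0.0], [0.0, 0.0]], [0.0, 0.0], [1.0, 0.0],
                          a=0, G=1.0, behavior_prob=0.5, alpha=0.1, beta=0.1)
```

After this positive-advantage step, the target policy assigns a higher probability to the action that was taken.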

| Algorithm 2: The proposed DRL-based algorithm. |
|---|
| 1: Initialization: |
| 2: , , target policy , behavior policy , maximal number of iterations , discount factor , the learning rate of the policy , the learning rate of the baseline ; |
| 3: for : |
| 4: Use and Algorithm 1 to generate trajectory , by behavior policy ; |
| 5: for : |
| 6: Update G: ; |
| 7: Update : ; |
| 8: Update by (18) with ; |
| 9: end for |
| 10: end for |
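Step 6 of Algorithm 2 accumulates the return backwards along each sampled trajectory, so each return reuses the one computed after it. The following is a minimal sketch. It assumes that G starts at zero at the end of the trajectory and that the per-slot rewards (the negative per-slot costs) are stored in time order.

```python
def discounted_returns(rewards, gamma):
    """Return G_t = R_(t+1) + gamma * G_(t+1) for every step of one trajectory.

    rewards[t] is the reward received after the action at time-slot t; the
    loop runs backwards so each return reuses the one computed after it.
    """
    returns = [0.0] * len(rewards)
    g = 0.0
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g
        returns[t] = g
    return returns


# Example: three slots with unit reward and gamma = 0.5.
example_returns = discounted_returns([1.0, 1.0, 1.0], 0.5)
```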

4. Complexity and Convergence Analysis

According to [31], the computational complexity of one training step of a fully connected deep neural network (DNN) is , where J is the number of layers and is the number of neurons in the j-th layer. Considering Algorithm 1 and Algorithm 2, the total complexity of the proposed algorithm is , where U is the total number of training episodes.
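As a worked example of this count, the sketch below assumes the usual O(Σ_j n_j n_{j+1}) multiply-accumulate cost of a forward pass through a fully connected network. It evaluates that cost for a hypothetical policy network with the following layers:

- an input that stacks the channel gains, task sizes, and complexities;
- one hidden layer of 10 neurons, the size used in Section 5;
- one output per joint offloading decision.

The input and output sizes are assumptions for illustration. With this output design, the last layer grows as (M+1)^K.

```python
def dense_macs(layer_sizes):
    """Multiply-accumulate count of one forward pass through a fully connected
    network: the sum of n_j * n_(j+1) over consecutive layers."""
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical policy network: K = 4 devices, M = 2 SMSs (3 servers in total).
K, M = 4, 2
state_dim = K * (M + 1) + 2 * K  # gains + task sizes + complexities = 20
num_actions = (M + 1) ** K       # one output per joint offloading decision = 81
print(dense_macs([state_dim, 10, num_actions]))  # prints 1010 (20*10 + 10*81)
```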

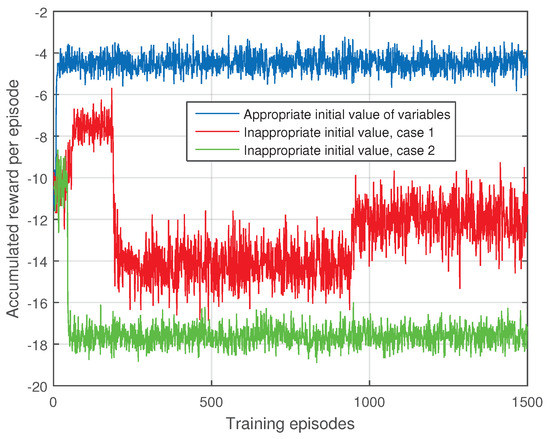

Guaranteeing the convergence of DRL algorithms is still an open issue [27], since convergence is influenced by many factors, such as the hyperparameter settings and the initial values of the DNN parameters. The convergence performance of the proposed algorithm is evaluated numerically in Section 5.

5. Simulation Results

In this section, simulation results are provided to evaluate the performance of the proposed DRL-based algorithm. We conduct the simulations in Python 3.8 with TensorFlow 2.5.0. A fully connected hidden layer with 10 neurons is employed in both the baseline and the policy networks. The learning rates and are set to and , respectively. The channel bandwidth between each IoT device and each MEC server is 200 kHz. The maximum CPU frequencies of each SMS and of the PMS are set to 1 GHz and 5 GHz, respectively. The time-slot length is set to 100 ms. is set to . Without loss of generality, are all set to 1000. is set to . is set to bits, . T is set to 40.

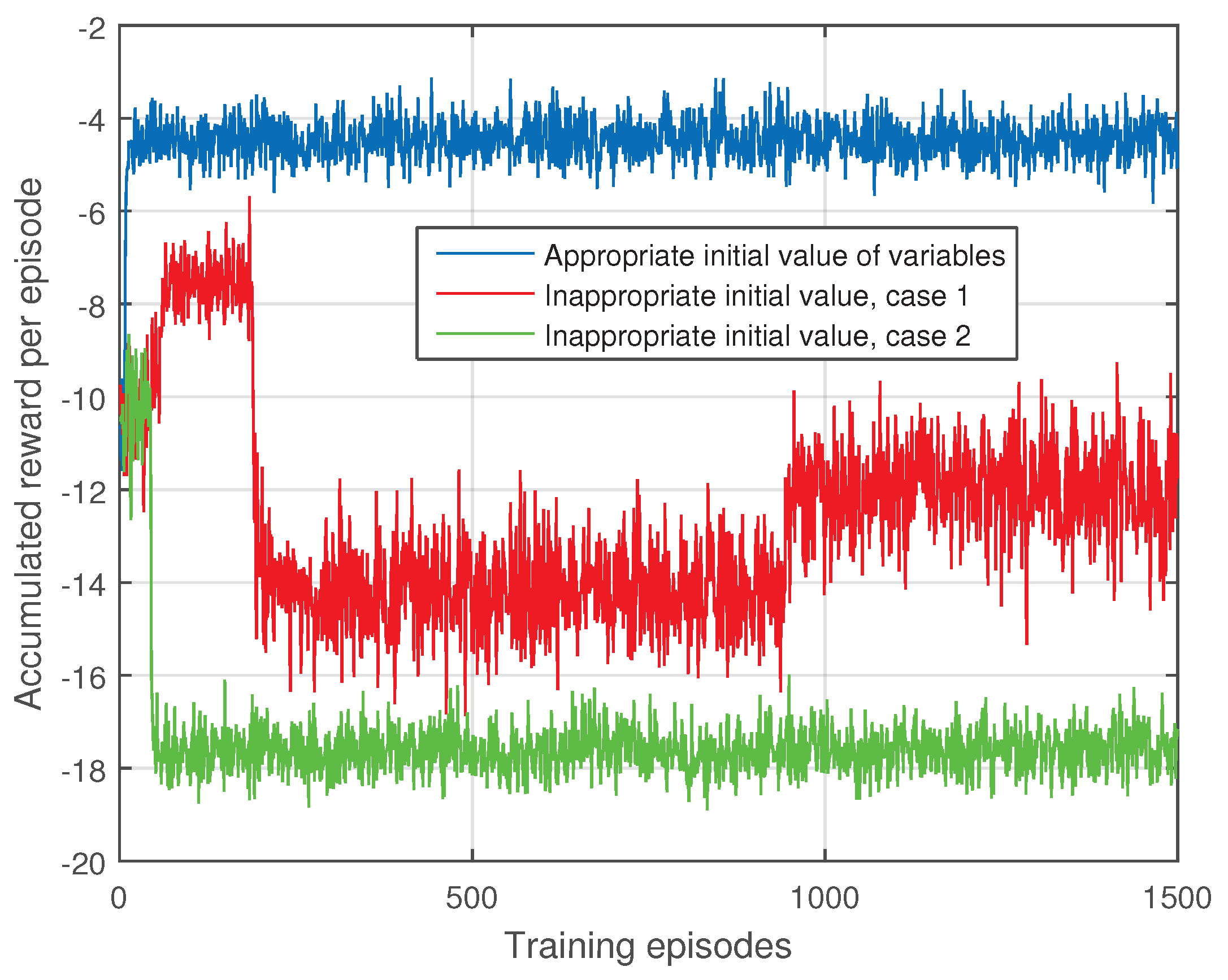

Figure 2 shows the impact of the initial values of and on the accumulated reward. Iterative algorithms are sensitive to the initial values of their variables. In this paper, the initial values of and are set randomly, which is common practice for DRL algorithms. It can be seen from Figure 2 that different initial values of and strongly influence the convergence performance of our DRL-based algorithm. To ensure good performance, we run the algorithm multiple times and select the run with the best performance as the final output.

Figure 2.

The impacts of the initial value of and on the accumulated reward.

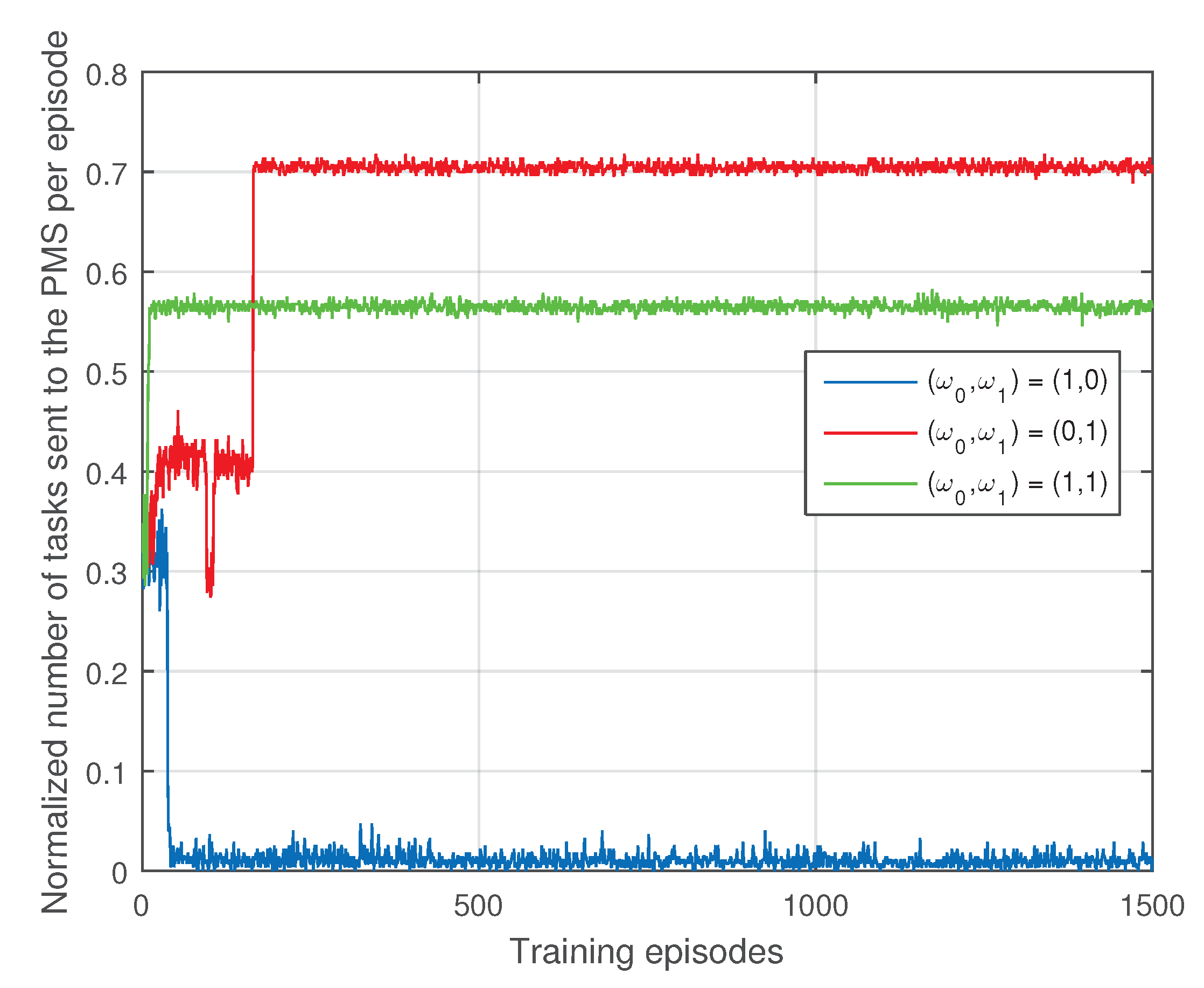

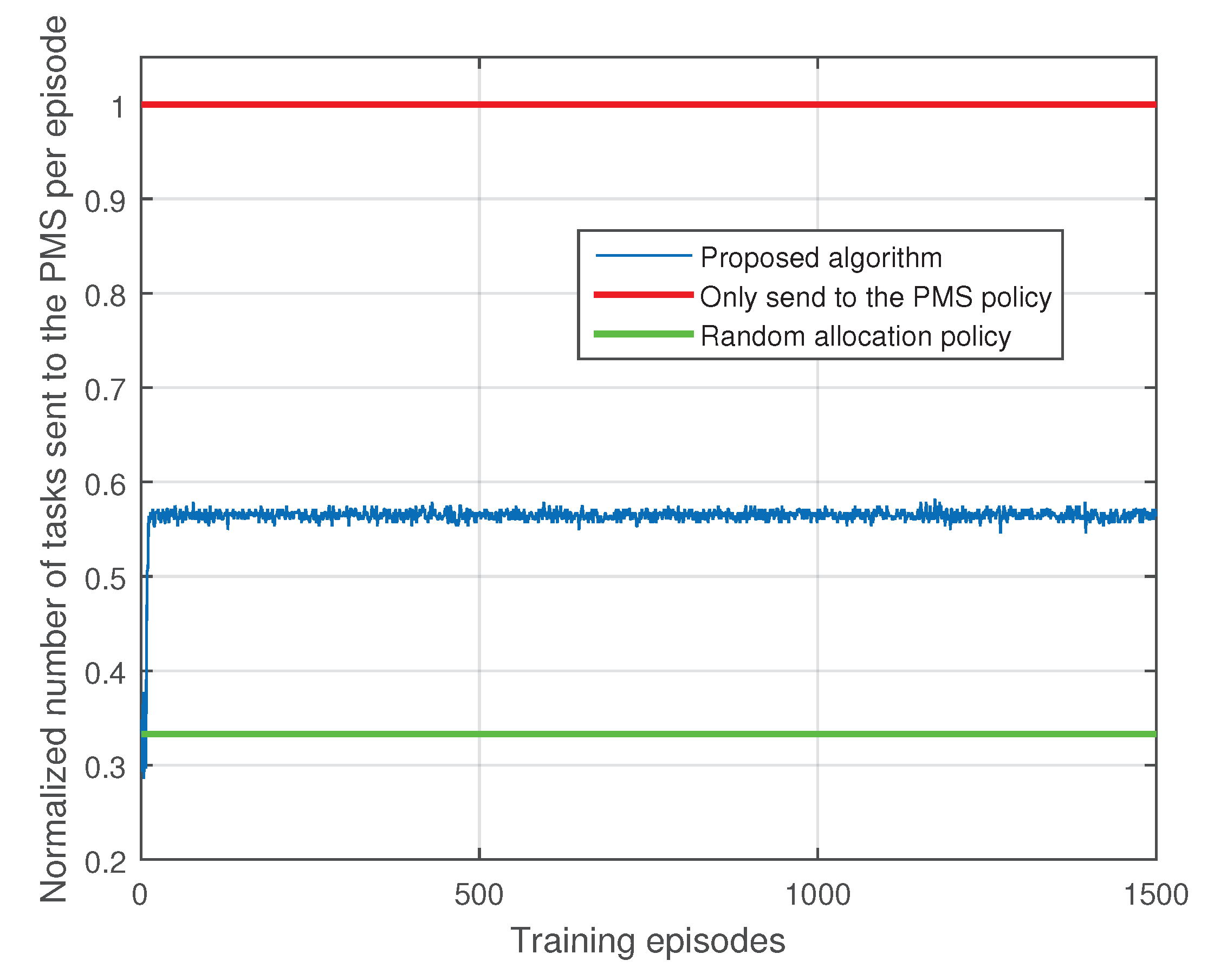

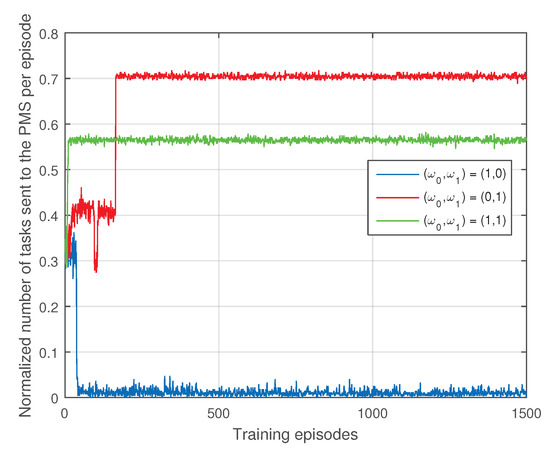

Figure 3 shows the impact of the weights on the normalized number of tasks sent to the PMS per episode. It can be seen from Figure 3 that the case has the smallest number of tasks sent to the PMS. This is because the VSO considers only the computing pressure of the PMS when . When , the VSO mainly tries to find a policy that minimizes the number of dropped tasks, namely, one that makes the problem solvable. As the PMS has the strongest computing power, the VSO allocates many tasks to the PMS, as confirmed by Figure 3. Finally, when , the VSO must make a trade-off between the computing pressure on the PMS and the task dropping cost. Hence, when , the number of tasks sent to the PMS per episode is higher than in the case but lower than in the case .

Figure 3.

The impact of the weights on the normalized number of tasks sent to the PMS per episode.

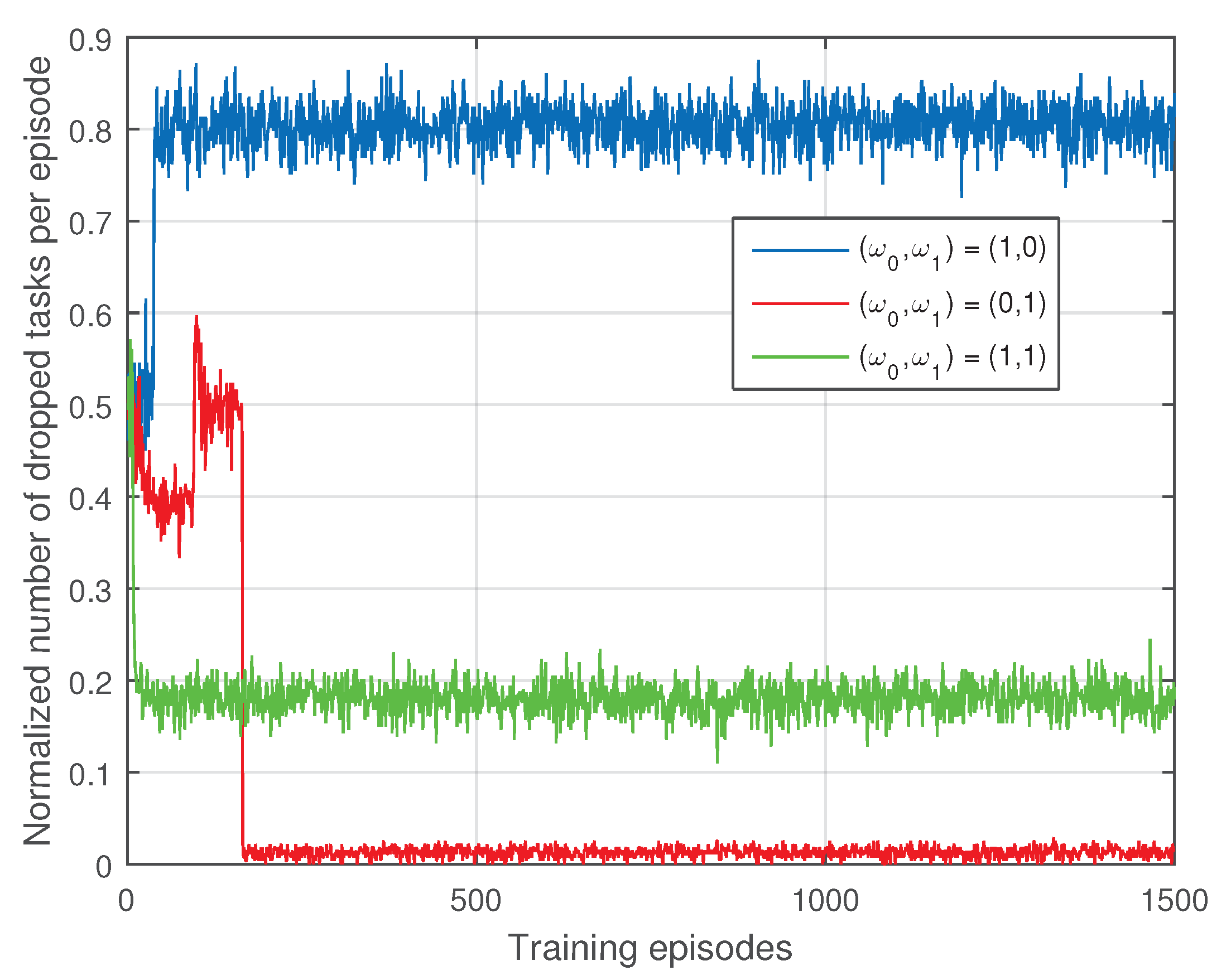

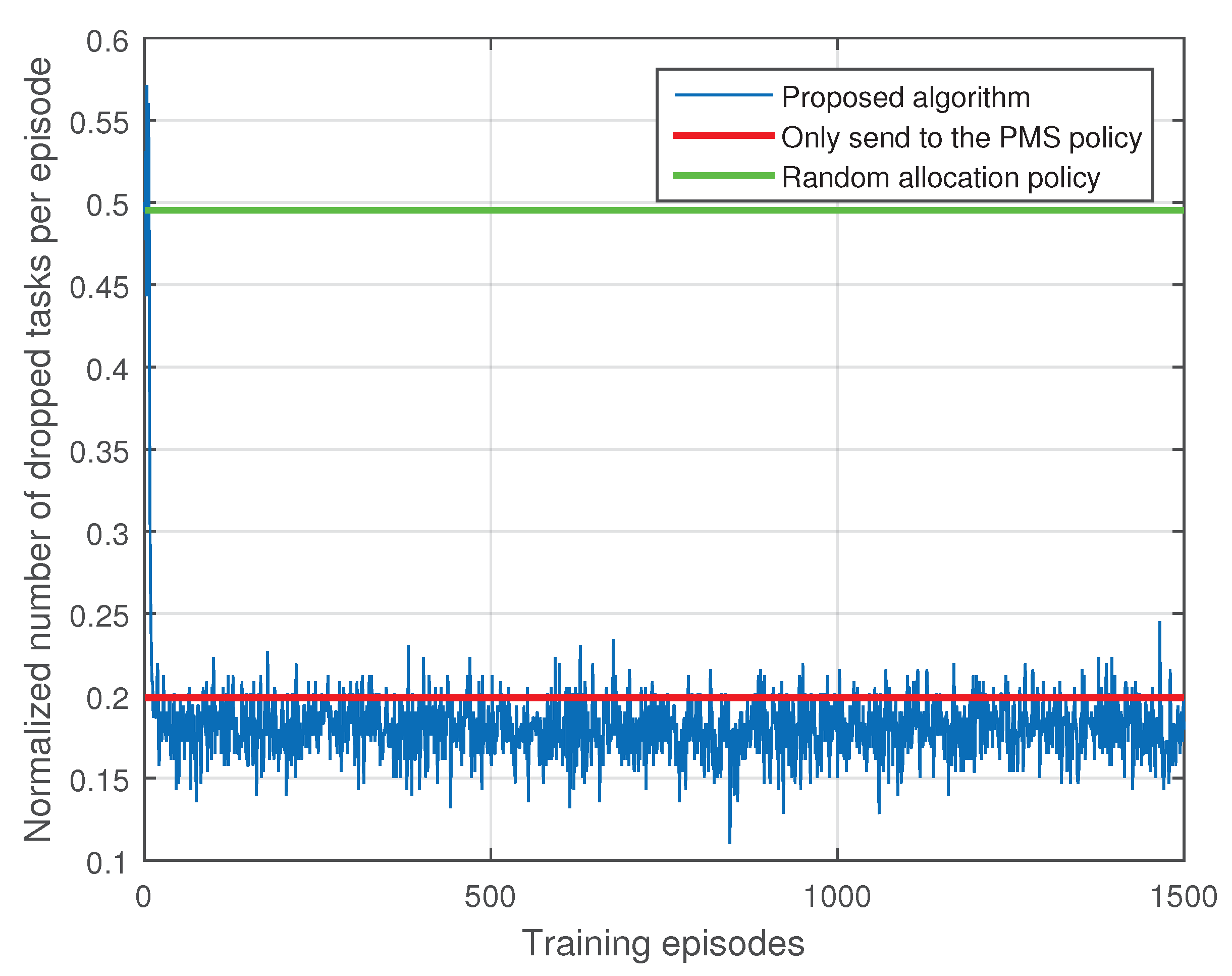

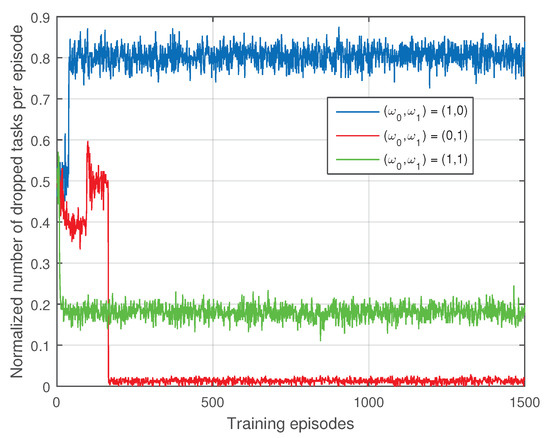

Figure 4 shows the impact of the weights on the normalized number of dropped tasks per episode. When , most of the tasks are allocated to the SMSs to reduce the computing pressure of the PMS. Since the computing power of the SMSs is weak, many tasks may be dropped. The case has the smallest normalized number of dropped tasks per episode, for the reason given in the discussion of Figure 3. Similar to Figure 3, the case yields an intermediate number of dropped tasks per episode.

Figure 4.

The impact of the weights on the normalized number of dropped tasks per episode.

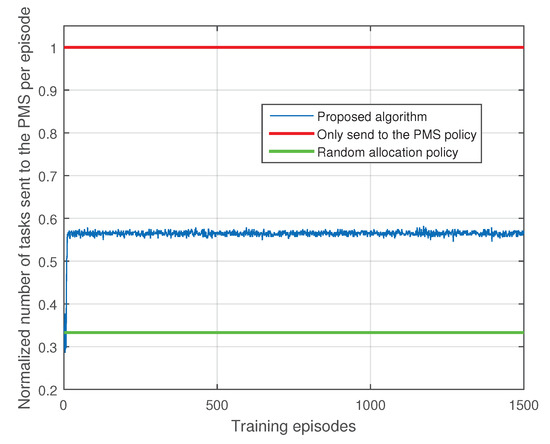

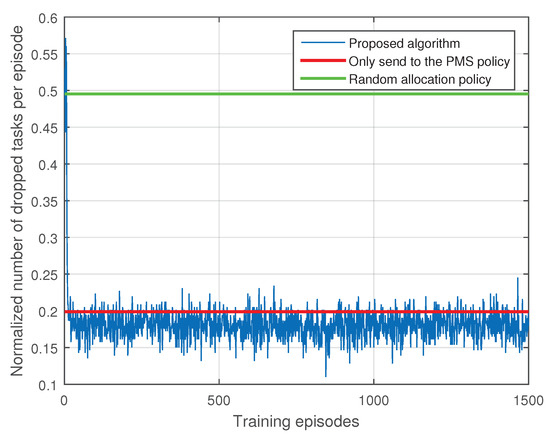

Figure 5 and Figure 6 compare the proposed algorithm () with two benchmark policies: the only-send-to-PMS policy and the random allocation policy. The only-send-to-PMS policy allocates all the IoT devices' tasks to the PMS, as in a typical single MEC server deployment. The random allocation policy allocates each IoT device's task to a randomly chosen MEC server. Since both benchmark policies are short-term optimization policies, Figure 5 and Figure 6 plot the mean values of the normalized number of tasks sent to the PMS and of dropped tasks per episode. As shown in Figure 5 and Figure 6, the proposed algorithm achieves both lower computing pressure on the PMS and a lower task dropping cost than the only-send-to-PMS policy. To obtain a lower task dropping cost, our algorithm places more computing pressure on the PMS than the random allocation policy does. However, it achieves a significant gain in terms of task dropping cost over the random allocation policy. Hence, the proposed algorithm is more practical than the random allocation policy.

Figure 5.

Comparison with benchmark policies in terms of the normalized number of tasks sent to the PMS per episode.

Figure 6.

Comparison with benchmark policies in terms of the normalized number of dropped tasks per episode.

6. Conclusions

We studied the problem of making a trade-off among the computing pressure on the PMS, the sum energy consumption of the IoT devices and all the MEC servers, and the task dropping cost. The formulated MINLP problem was solved by the proposed DRL-based optimization algorithm. Simulation results demonstrated the validity of the proposed algorithm.

Author Contributions

Conceptualization, K.T. and B.L.; methodology, software, validation, K.T., H.C., Y.L. and B.L.; writing—review and editing, K.T. and B.L.; supervision, B.L. All authors have read and agreed to the published version of the manuscript.

Funding

The research reported in this article was supported by the Research Program of China Mobile System Integration Co., Ltd. under Grant ZYJC-Shaanxi-202110-B-CB-001.

Acknowledgments

We thank Wei Zhang for his valuable comments and discussion.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Proof of Theorem 2.

We begin by deriving the first- and second-order derivatives of the objective function of to establish its convexity. Specifically, let denote the objective function of , namely,

Thus, its first and second order derivatives can be respectively given as

The second-order derivative of the objective function is positive for any feasible ; hence, the objective function is convex. Thus, the optimization problem has a convex objective function and a linear constraint, and is therefore a convex optimization problem. This completes the proof. □

References

- Zhao, Y.; Xu, K.; Wang, H.; Li, B.; Qiao, M.; Shi, H. MEC-enabled hierarchical emotion recognition and perturbation-aware defense in smart cities. IEEE Internet Things J. 2021, 8, 16933–16945.

- Khan, L.U.; Yaqoob, I.; Tran, N.H.; Kazmi, S.M.A.; Dang, T.N.; Hong, C.S. Edge-computing-enabled smart cities: A comprehensive survey. IEEE Internet Things J. 2020, 7, 10200–10232.

- Wu, H.; Zhang, Z.; Guan, C.; Wolter, K.; Xu, M. Collaborate edge and cloud computing with distributed deep learning for smart city internet of things. IEEE Internet Things J. 2020, 7, 8099–8110.

- Mao, Y.; You, C.; Zhang, J.; Huang, K.; Letaief, K.B. A survey on mobile edge computing: The communication perspective. IEEE Commun. Surv. Tutor. 2017, 19, 2322–2358.

- Ryu, J.W.; Pham, Q.V.; Luan, H.N.T.; Hwang, W.J.; Kim, J.D.; Lee, J.T. Multi-access edge computing empowered heterogeneous networks: A novel architecture and potential works. Symmetry 2019, 11, 842.

- Bi, S.; Zhang, Y.J. Computation rate maximization for wireless powered mobile-edge computing with binary computation offloading. IEEE Trans. Wirel. Commun. 2018, 17, 4177–4190.

- Wang, F.; Xu, J.; Wang, X.; Cui, S. Joint offloading and computing optimization in wireless powered mobile-edge computing systems. IEEE Trans. Wirel. Commun. 2018, 17, 1784–1797.

- He, Y.; Ren, J.; Yu, G.; Cai, Y. D2D communications meet mobile edge computing for enhanced computation capacity in cellular networks. IEEE Trans. Wirel. Commun. 2019, 18, 1750–1763.

- El Haber, E.; Nguyen, T.M.; Assi, C.; Ajib, W. Macro-cell assisted task offloading in mec-based heterogeneous networks with wireless backhaul. IEEE Trans. Netw. Serv. Manag. 2019, 16, 1754–1767.

- Chen, L.; Shen, C.; Zhou, P.; Xu, J. Collaborative service placement for edge computing in dense small cell networks. IEEE Trans. Mob. Comput. 2021, 20, 377–390.

- Zarandi, S.; Tabassum, H. Delay minimization in sliced multi-cell mobile edge computing (mec) systems. IEEE Commun. Lett. 2021, 25, 1964–1968.

- Lim, W.Y.B.; Ng, J.S.; Xiong, Z.; Jin, J.; Zhang, Y.; Niyato, D.; Leung, C.S.; Miao, C. Decentralized Edge Intelligence: A Dynamic Resource Allocation Framework for Hierarchical Federated Learning. IEEE Trans. Parallel Distrib. Syst. 2022, 33, 536–550.

- Lim, W.Y.B.; Ng, J.S.; Xiong, Z.; Niyato, D.; Miao, C.; Kim, D.I. Dynamic Edge Association and Resource Allocation in Self-Organizing Hierarchical Federated Learning Networks. IEEE J. Sel. Areas Commun. 2021, 39, 3640–3653.

- Yang, H.; Zhao, J.; Xiong, Z.; Lam, K.-Y.; Sun, S.; Xiao, L. Privacy-Preserving Federated Learning for UAV-Enabled Networks: Learning-Based Joint Scheduling and Resource Management. IEEE J. Sel. Areas Commun. 2021, 39, 3144–3159.

- Chen, Y.; Liu, Z.; Zhang, Y.; Wu, Y.; Chen, X.; Zhao, L. Deep reinforcement learning-based dynamic resource management for mobile edge computing in industrial internet of things. IEEE Trans. Ind. Informat. 2021, 17, 4925–4934.

- Zhong, C.; Gursoy, M.C.; Velipasalar, S. Deep reinforcement learning-based edge caching in wireless networks. IEEE Trans. Cogn. Commun. Netw. 2020, 6, 48–61.

- Liu, C.; Tang, F.; Hu, Y.; Li, K.; Tang, Z.; Li, K. Distributed task migration optimization in mec by extending multi-agent deep reinforcement learning approach. IEEE Trans. Parallel Distrib. Syst. 2021, 32, 1603–1614.

- Dai, A.; Zhang, K.; Maharjan, S.; Zhang, Y. Edge intelligence for energy-efficient computation offloading and resource allocation in 5G beyond. IEEE Trans. Veh. Technol. 2020, 69, 12175–12186.

- Hu, H.; Wang, Q.; Hu, R.Q.; Zhu, H. Mobility-Aware Offloading and Resource Allocation in a MEC-Enabled IoT Network With Energy Harvesting. IEEE Internet Things J. 2021, 8, 17541–17556.

- Ale, L.; Zhang, N.; Fang, X.; Chen, X.; Wu, S.; Li, L. Delay-aware and energy-efficient computation offloading in mobile-edge computing using deep reinforcement learning. IEEE Trans. Cogn. Commun. Netw. 2021, 7, 881–892.

- Temesgene, D.A.; Miozzo, M.; Gündüz, D.; Dini, P. Distributed deep reinforcement learning for functional split control in energy harvesting virtualized small cells. IEEE Trans. Sustain. Comput. 2021, 6, 626–640.

- Han, H.; Fang, L.; Lu, W.; Zhai, W.; Li, Y.; Zhao, J. A GCICA Grant-Free Random Access Scheme for M2M Communications in Crowded Massive MIMO Systems. IEEE Internet Things J. 2021, early access.

- Han, H.; Fang, L.; Lu, W.; Chi, K.; Zhai, W.; Zhao, J. A Novel Grant-Based Pilot Access Scheme for Crowded Massive MIMO Systems. IEEE Trans. Veh. Technol. 2021, 70, 11111–11115.

- Mao, Y.; Zhang, J.; Letaief, K.B. Dynamic Computation offloading for mobile-edge computing with energy harvesting devices. IEEE J. Sel. Areas Commun. 2016, 34, 3590–3605.

- Liu, Y.; Xie, S.; Zhang, Y. Cooperative offloading and resource management for uav-enabled mobile edge computing in power iot system. IEEE Trans. Veh. Technol. 2020, 69, 12229–12239.

- Wang, H.; Yu, F.R.; Zhu, L.; Tang, T.; Ning, B. Finite-state markov modeling for wireless channels in tunnel communication-based train control systems. IEEE Trans. Intell. Transp. Syst. 2014, 15, 1083–1090.

- Tang, M.; Wong, V.W.S. Deep reinforcement learning for task offloading in mobile edge computing systems. IEEE Trans. Mob. Comput. 2020, in press.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction, 2nd ed.; The MIT Press: Cambridge, MA, USA, 2018.

- Palomar, D.P.; Chiang, M. A tutorial on decomposition methods for network utility maximization. IEEE J. Sel. Areas Commun. 2006, 24, 1439–1451.

- Boyd, S.; Vandenberghe, L. Convex Optimization; Cambridge University Press: Cambridge, UK, 2004.

- Li, C.; Xia, J.; Liu, F.; Li, D.; Fan, L.; Karagiannidis, G.K.; Nallanathan, A. Dynamic Offloading for Multiuser Muti-CAP MEC Networks: A Deep Reinforcement Learning Approach. IEEE Trans. Veh. Technol. 2021, 70, 2922–2927.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).