Multimodal Natural Language Explanation Generation for Visual Question Answering Based on Multiple Reference Data

Abstract

1. Introduction

- We introduce multiple reference sources into the explanation generation model to address the lack of informativeness in generated explanations, and we analyze how each type of reference information affects model performance.

- We retrieve outside knowledge using the image and the question directly, which avoids the retrieval failures caused by the caption-based retrieval method of the previous work [26].

- We use a simple and efficient feature fusion method to combine the features of multiple multimodal reference sources.

- The experimental results show that the generated answers are more accurate and the generated explanations are more comprehensive and reasonable than those produced by other state-of-the-art models.
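
The retrieval idea in the second contribution can be illustrated with a minimal sketch. It assumes that the image, the question, and every knowledge entry have already been embedded in a shared vector space. The function names, the summed query, and the toy data are hypothetical and are not taken from the implementation behind this paper; the sketch only shows that a query can be formed without an intermediate caption.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale vectors to unit length so dot products equal cosine similarity."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def retrieve_knowledge(image_feat, question_feat, knowledge_feats, k=3):
    """Return indices of the k knowledge entries most similar to the query.

    Assumption: the query is the normalized sum of the image and question
    features, so no generated caption is needed to build it.
    """
    query = l2_normalize(l2_normalize(image_feat) + l2_normalize(question_feat))
    scores = l2_normalize(knowledge_feats) @ query  # cosine similarity per entry
    return np.argsort(-scores)[:k]  # highest similarity first


# Toy data: 100 random knowledge embeddings; entry 42 is the relevant one.
rng = np.random.default_rng(0)
knowledge_feats = rng.normal(size=(100, 16))
image_feat = knowledge_feats[42] + 0.05 * rng.normal(size=16)
question_feat = knowledge_feats[42] + 0.05 * rng.normal(size=16)

top = retrieve_knowledge(image_feat, question_feat, knowledge_feats, k=3)
print(top)
```

At scale, the exhaustive dot product would normally be replaced by an approximate nearest-neighbor index; that substitution does not change how the query is built.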

2. Related Works

2.1. Visual Question Answering

2.2. Natural Language Explanation Generation for the Visual Question Answering

2.3. Large-Scale Language Models

3. Multimodal Explanation Generation Model Based on Multiple Reference Information

3.1. Generation of Multiple References

3.1.1. Generation of Multiple Image Captions

3.1.2. Generation of Outside Knowledge

3.1.3. Generation of Object Information

3.2. Answer and Explanation Generation

4. Experiments

4.1. Experimental Settings

4.2. Evaluation

4.3. Experimental Results

4.3.1. Quantitative Analysis

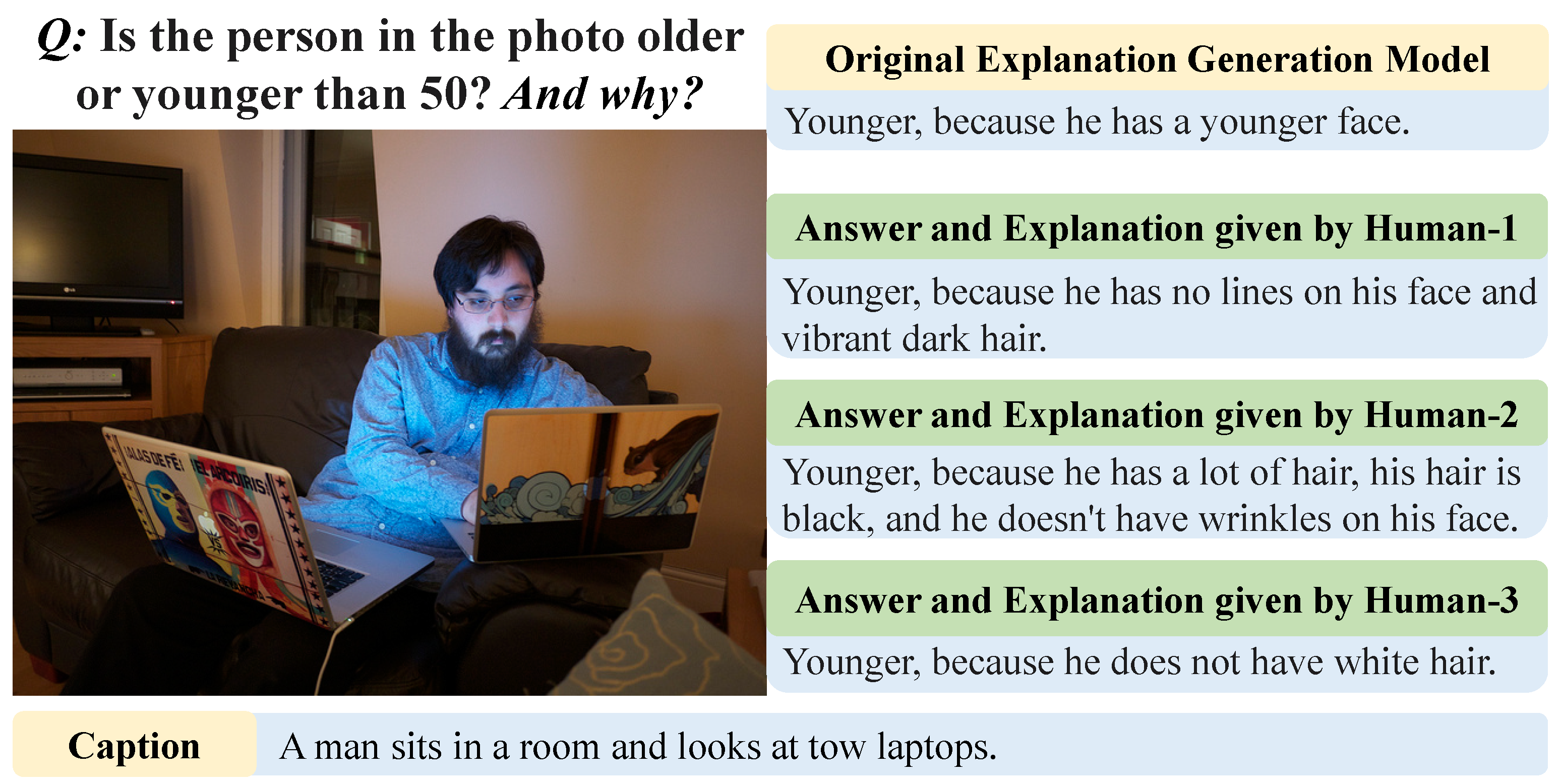

4.3.2. Qualitative Analysis

5. Discussions

5.1. Limitations

5.2. Future Works

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

References

1. Makridakis, S. The forthcoming Artificial Intelligence (AI) revolution: Its impact on society and firms. Futures 2017, 90, 46–60.

2. Kang, J.S.; Kang, J.; Kim, J.J.; Jeon, K.W.; Chung, H.J.; Park, B.H. Neural Architecture Search Survey: A Computer Vision Perspective. Sensors 2023, 23, 1713.

3. Khurana, D.; Koli, A.; Khatter, K.; Singh, S. Natural language processing: State of the art, current trends and challenges. Multimed. Tools Appl. 2023, 82, 3713–3744.

4. Antol, S.; Agrawal, A.; Lu, J.; Mitchell, M.; Batra, D.; Zitnick, C.L.; Parikh, D. VQA: Visual question answering. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2425–2433.

5. Czimmermann, T.; Ciuti, G.; Milazzo, M.; Chiurazzi, M.; Roccella, S.; Oddo, C.M.; Dario, P. Visual-based defect detection and classification approaches for industrial applications—A survey. Sensors 2020, 20, 1459.

6. Dhar, T.; Dey, N.; Borra, S.; Sherratt, R.S. Challenges of Deep Learning in Medical Image Analysis—Improving Explainability and Trust. IEEE Trans. Technol. Soc. 2023, 4, 68–75.

7. Zhu, H.; Togo, R.; Ogawa, T.; Haseyama, M. Diversity Learning Based on Multi-Latent Space for Medical Image Visual Question Generation. Sensors 2023, 23, 1057.

8. Huang, X. Safety and Reliability of Deep Learning: (Brief Overview). In Proceedings of the 1st International Workshop on Verification of Autonomous & Robotic Systems, Philadelphia, PA, USA, 23 May 2021.

9. Molnar, C. Interpretable Machine Learning: A Guide for Making Black Box Models Explainable; Lean Publishing: Victoria, BC, Canada, 2020.

10. Lipton, Z.C. The mythos of model interpretability: In machine learning, the concept of interpretability is both important and slippery. Queue 2018, 61, 31–57.

11. Berg, T.; Belhumeur, P.N. How Do You Tell a Blackbird from a Crow? In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013.

12. Doersch, C.; Singh, S.; Gupta, A.; Sivic, J.; Efros, A. What makes Paris look like Paris? ACM Trans. Graph. 2012, 31, 101.

13. Park, D.H.; Hendricks, L.A.; Akata, Z.; Rohrbach, A.; Schiele, B.; Darrell, T.; Rohrbach, M. Multimodal explanations: Justifying decisions and pointing to the evidence. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8779–8788.

14. Wu, J.; Mooney, R. Faithful Multimodal Explanation for Visual Question Answering. In Proceedings of the ACL Workshop BlackboxNLP: Analyzing and Interpreting Neural Networks for NLP, Florence, Italy, 1 August 2019; pp. 103–112.

15. Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9.

16. Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, Online, 18–24 July 2021; pp. 8748–8763.

17. Marasović, A.; Bhagavatula, C.; Park, J.S.; Le Bras, R.; Smith, N.A.; Choi, Y. Natural Language Rationales with Full-Stack Visual Reasoning: From Pixels to Semantic Frames to Commonsense Graphs. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP, Online, 16–20 November 2020; pp. 2810–2829.

18. Kayser, M.; Camburu, O.M.; Salewski, L.; Emde, C.; Do, V.; Akata, Z.; Lukasiewicz, T. E-ViL: A dataset and benchmark for natural language explanations in vision-language tasks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 1244–1254.

19. Sammani, F.; Mukherjee, T.; Deligiannis, N. NLX-GPT: A Model for Natural Language Explanations in Vision and Vision-Language Tasks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8322–8332.

20. Li, X.; Zhou, Y.; Wu, T.; Socher, R.; Xiong, C. Learn to Grow: A Continual Structure Learning Framework for Overcoming Catastrophic Forgetting. Proc. Mach. Learn. Res. 2019, 97, 3925–3934.

21. Zhu, H.; Togo, R.; Ogawa, T.; Haseyama, M. A multimodal interpretable visual question answering model introducing image caption processor. In Proceedings of the IEEE 11th Global Conference on Consumer Electronics, Osaka, Japan, 18–21 October 2022; pp. 805–806.

22. Hu, X.; Gu, L.; Kobayashi, K.; An, Q.; Chen, Q.; Lu, Z.; Su, C.; Harada, T.; Zhu, Y. Interpretable Medical Image Visual Question Answering via Multi-Modal Relationship Graph Learning. arXiv 2023, arXiv:2302.09636.

23. Zhao, Z.Q.; Zheng, P.; Xu, S.T.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Networks Learn. Syst. 2019, 30, 3212–3232.

24. Marino, K.; Rastegari, M.; Farhadi, A.; Mottaghi, R. OK-VQA: A visual question answering benchmark requiring external knowledge. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3195–3204.

25. Vrandečić, D.; Krötzsch, M. Wikidata: A free collaborative knowledgebase. Commun. ACM 2014, 57, 78–85.

26. Zhu, H.; Togo, R.; Ogawa, T.; Haseyama, M. Interpretable Visual Question Answering Referring to Outside Knowledge. arXiv 2023, arXiv:2303.04388.

27. Ben-Younes, H.; Cadene, R.; Thome, N.; Cord, M. BLOCK: Bilinear superdiagonal fusion for visual question answering and visual relationship detection. Proc. AAAI Conf. Artif. Intell. 2019, 33, 8102–8109.

28. Jiang, H.; Misra, I.; Rohrbach, M.; Learned-Miller, E.; Chen, X. In defense of grid features for visual question answering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10267–10276.

29. Li, X.; Song, J.; Gao, L.; Liu, X.; Huang, W.; He, X.; Gan, C. Beyond RNNs: Positional self-attention with co-attention for video question answering. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 8658–8665.

30. Tang, R.; Ma, C.; Zhang, W.E.; Wu, Q.; Yang, X. Semantic equivalent adversarial data augmentation for visual question answering. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 437–453.

31. Escorcia, V.; Niebles, J.C.; Ghanem, B. On the relationship between visual attributes and convolutional networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1256–1264.

32. Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 818–833.

33. Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Object detectors emerge in Deep Scene CNNs. arXiv 2014, arXiv:1412.6856.

34. Liu, P.; Yuan, W.; Fu, J.; Jiang, Z.; Hayashi, H.; Neubig, G. Pre-Train, Prompt, and Predict: A Systematic Survey of Prompting Methods in Natural Language Processing. ACM Comput. Surv. 2023, 55, 1–35.

35. Allen, J. Natural Language Understanding; Benjamin-Cummings Publishing Co., Inc.: Menlo Park, CA, USA, 1995.

36. Reiter, E.; Dale, R. Building applied natural language generation systems. Nat. Lang. Eng. 1997, 3, 57–87.

37. Ranathunga, S.; Lee, E.S.A.; Prifti Skenduli, M.; Shekhar, R.; Alam, M.; Kaur, R. Neural machine translation for low-resource languages: A survey. ACM Comput. Surv. 2023, 55, 1–37.

38. Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training. Technical Report, OpenAI. 2018. Available online: https://openai.com/ (accessed on 25 March 2023).

39. Dai, Y.; Gieseke, F.; Oehmcke, S.; Wu, Y.; Barnard, K. Attentional feature fusion. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2021; pp. 3560–3569.

40. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

41. Young, P.; Lai, A.; Hodosh, M.; Hockenmaier, J. From image descriptions to visual denotations: New similarity metrics for semantic inference over event descriptions. Trans. Assoc. Comput. Linguist. 2014, 2, 67–78.

42. Wang, J.; Yang, Z.; Hu, X.; Li, L.; Lin, K.; Gan, Z.; Liu, Z.; Liu, C.; Wang, L. GIT: A Generative Image-to-text Transformer for Vision and Language. arXiv 2022, arXiv:2205.14100.

43. Li, J.; Li, D.; Xiong, C.; Hoi, S. BLIP: Bootstrapping language-image pre-training for unified vision-language understanding and generation. In Proceedings of the International Conference on Machine Learning, PMLR, Baltimore, MD, USA, 17–23 July 2022; pp. 12888–12900.

44. Yu, J.; Wang, Z.; Vasudevan, V.; Yeung, L.; Seyedhosseini, M.; Wu, Y. CoCa: Contrastive Captioners are Image-Text Foundation Models. arXiv 2022, arXiv:2205.01917.

45. Li, J.; Li, D.; Savarese, S.; Hoi, S. BLIP-2: Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models. arXiv 2023, arXiv:2301.12597.

46. Gui, L.; Wang, B.; Huang, Q.; Hauptmann, A.; Bisk, Y.; Gao, J. KAT: A knowledge augmented transformer for vision-and-language. arXiv 2021, arXiv:2112.08614.

47. Johnson, J.; Douze, M.; Jégou, H. Billion-scale similarity search with GPUs. IEEE Trans. Big Data 2019, 7, 535–547.

48. Jocher, G.; Stoken, A.; Borovec, J.; NanoCode012; Stan, C.; Liu, C.; Hogan, A.; Diaconu, L.; Ingham, D.; Gupta, N.; et al. ultralytics/yolov5: v3.1—Bug Fixes and Performance Improvements 2020. Available online: https://zenodo.org/record/4154370#.ZFo7os5BxPY (accessed on 25 March 2023).

49. Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 7–12 July 2002; pp. 311–318.

50. Banerjee, S.; Lavie, A. METEOR: An automatic metric for MT evaluation with improved correlation with human judgments. In Proceedings of the ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization, Ann Arbor, MI, USA, 29 June 2005; pp. 65–72.

51. Lin, C.Y.; Hovy, E. Automatic evaluation of summaries using n-gram co-occurrence statistics. In Proceedings of the Human Language Technology Conference of the North American Chapter of the Association for Computational Linguistics, Edmonton, AB, Canada, 27 May–1 June 2003; pp. 150–157.

52. Anderson, P.; Fernando, B.; Johnson, M.; Gould, S. SPICE: Semantic propositional image caption evaluation. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 382–398.

53. Vedantam, R.; Zitnick, C.L.; Parikh, D. CIDEr: Consensus-based image description evaluation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4566–4575.

54. Chen, X.; Fang, H.; Lin, T.Y.; Vedantam, R.; Gupta, S.; Dollár, P.; Zitnick, C.L. Microsoft COCO Captions: Data collection and evaluation server. arXiv 2015, arXiv:1504.00325.

55. Nijkamp, E.; Ruffolo, J.; Weinstein, E.N.; Naik, N.; Madani, A. Progen2: Exploring the boundaries of protein language models. arXiv 2022, arXiv:2206.13517.

56. Jozefowicz, R.; Vinyals, O.; Schuster, M.; Shazeer, N.; Wu, Y. Exploring the limits of language modeling. arXiv 2016, arXiv:1602.02410.

57. Fernández-González, D.; Gómez-Rodríguez, C. Dependency parsing with bottom-up Hierarchical Pointer Networks. Inf. Fusion 2023, 91, 494–503.

58. Chen, S.; Zhao, Q. Rex: Reasoning-aware and grounded explanation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 15586–15595.

59. OpenAI. GPT: Language Models. 2021. Available online: https://openai.com/language/models/gpt-3/ (accessed on 25 March 2023).

60. Li, H.; Srinivasan, D.; Zhuo, C.; Cui, Z.; Gur, R.E.; Gur, R.C.; Oathes, D.J.; Davatzikos, C.; Satterthwaite, T.D.; Fan, Y. Computing personalized brain functional networks from fMRI using self-supervised deep learning. Med. Image Anal. 2023, 85, 102756.

61. Rudovic, O.; Lee, J.; Dai, M.; Schuller, B.; Picard, R.W. Personalized machine learning for robot perception of affect and engagement in autism therapy. Sci. Robot. 2018, 3, eaao6760.

| Symbol | Model |
|---|---|
| | Language encoder |
| | Vision encoder |
| | Image captioning model |
| | Object recognition model |
| R | Outside knowledge retrieval model |
| | MLP for language features |
| | MLP for image features |
| D | Decoder |

| Method | Overview |
|---|---|
| CM1 [17] | The questions are answered directly without reference to the image information, and the corresponding explanations are generated. The model uses a separate question-answering model and explanation generation model. |
| CM2 [17] | The initial study primarily concerned generating natural language justifications for complex visual reasoning tasks, such as VQA, visual–textual entailment, and commonsense reasoning. It uses a separate question-answering model and explanation generation model. |
| CM3 [18] | The method serves as a benchmark for evaluating explainable vision–language tasks. It introduces a unified evaluation framework and comprehensively compares existing approaches that generate natural language explanations for vision–language tasks. |
| CM4 [19] | A compact, general language model that is faithful to the underlying data. It predicts answers and provides explanations simultaneously, and it introduces large-scale vision and language models to generate explanations and achieve good performance. |
| PM1 [26] | The proposed method introduces only multiple image captions as an additional reference. |
| PM2 | The proposed method introduces only outside knowledge as an additional reference. |
| PM3 | The proposed method introduces only object information as an additional reference. |
| PM4 | The proposed method uses four different MLPs with small hidden space sizes to handle the different types of reference information. |
| PM5 | The proposed method uses concatenation rather than summation when extracting caption and outside knowledge features from the set. |
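
The PM5 row contrasts concatenation with summation when a set of caption or outside knowledge features is reduced to a single feature. The following minimal sketch shows the difference between the two reductions, assuming each set element is already encoded as a fixed-size vector. The function names, the set size of 3, and the feature size of 4 are hypothetical and chosen only for illustration.

```python
import numpy as np


def fuse_by_sum(set_feats: np.ndarray) -> np.ndarray:
    """Collapse a set of n feature vectors with shape (n, d) into shape (d,)."""
    return set_feats.sum(axis=0)


def fuse_by_concat(set_feats: np.ndarray) -> np.ndarray:
    """Flatten a set of n feature vectors with shape (n, d) into shape (n * d,)."""
    return set_feats.reshape(-1)


# Hypothetical set of 3 caption features, each of dimension 4.
captions = np.arange(12, dtype=np.float32).reshape(3, 4)
summed = fuse_by_sum(captions)
concat = fuse_by_concat(captions)

# Reordering the set leaves the sum unchanged but changes the concatenation.
shuffled = captions[[2, 0, 1]]
print(summed.shape, concat.shape)
print(np.allclose(fuse_by_sum(shuffled), summed))
print(np.array_equal(fuse_by_concat(shuffled), concat))
```

Summation keeps the fused feature size independent of the number of set elements and of their order, whereas concatenation grows with the set size and depends on ordering.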

| | Explanation | | | | | | | | Answer |
|---|---|---|---|---|---|---|---|---|---|
| Method | BLEU1 | BLEU2 | BLEU3 | BLEU4 | ROUGE-L | METEOR | CIDEr | SPICE | Acc. |
| CM1 | 59.3 | 44.0 | 32.3 | 23.9 | 47.4 | 20.6 | 91.8 | 17.9 | 63.4 |
| CM2 | 59.4 | 43.4 | 31.1 | 22.3 | 46.6 | 20.1 | 84.4 | 17.3 | 62.7 |
| CM3 | 52.6 | 36.6 | 24.9 | 17.2 | 40.3 | 19.0 | 51.8 | 15.7 | 56.8 |
| CM4 | 64.0 | 48.6 | 36.4 | 27.2 | 50.7 | 22.6 | 104.7 | 21.6 | 67.1 |
| PM | 65.0 | 50.0 | 38.0 | 28.8 | 51.4 | 23.3 | 110.0 | 22.0 | 69.5 |
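
To make the margin over the strongest comparison method easier to read, the short script below computes the per-metric difference between the PM and CM4 rows of the table above. The values are copied from those two rows; the variable names are illustrative only.

```python
# Metric names and scores copied from the CM4 and PM rows of the table above.
metrics = ["BLEU1", "BLEU2", "BLEU3", "BLEU4", "ROUGE-L",
           "METEOR", "CIDEr", "SPICE", "Acc."]
cm4 = [64.0, 48.6, 36.4, 27.2, 50.7, 22.6, 104.7, 21.6, 67.1]
pm = [65.0, 50.0, 38.0, 28.8, 51.4, 23.3, 110.0, 22.0, 69.5]

# Absolute gain of PM over CM4, rounded to one decimal to match the table.
gains = {m: round(p - c, 1) for m, c, p in zip(metrics, cm4, pm)}

for name, gain in gains.items():
    print(f"{name:8s} +{gain:.1f}")
```

In this table, PM scores higher than CM4 on every metric, with the largest absolute differences on CIDEr (5.3 points) and answer accuracy (2.4 points).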

| | Explanation | | | | | | | | Answer |
|---|---|---|---|---|---|---|---|---|---|
| Method | BLEU1 | BLEU2 | BLEU3 | BLEU4 | ROUGE-L | METEOR | CIDEr | SPICE | Acc. |
| CM3 | 30.1 | 19.9 | 13.7 | 9.6 | 27.8 | 19.6 | 85.9 | 34.5 | 79.5 |
| CM4 | 35.7 | 24.0 | 16.8 | 11.9 | 33.4 | 18.1 | 114.7 | 32.1 | - |
| PM | 36.4 | 24.6 | 17.1 | 12.2 | 33.9 | 18.4 | 117.2 | 32.5 | 74.2 |

| | Explanation | | | | | | | | Answer |
|---|---|---|---|---|---|---|---|---|---|
| Method | BLEU1 | BLEU2 | BLEU3 | BLEU4 | ROUGE-L | METEOR | CIDEr | SPICE | Acc. |
| PM1 | 64.0 | 49.1 | 37.2 | 28.3 | 51.3 | 23.1 | 109.8 | 21.3 | 68.5 |
| PM2 | 63.4 | 48.6 | 36.8 | 28.2 | 51.1 | 22.7 | 107.2 | 21.1 | 66.4 |
| PM3 | 63.4 | 48.6 | 36.6 | 27.4 | 51.1 | 22.5 | 106.2 | 21.1 | 66.5 |
| PM4 | 64.4 | 49.0 | 36.6 | 27.2 | 50.9 | 22.6 | 104.0 | 20.9 | 66.5 |
| PM5 | 64.4 | 49.1 | 37.0 | 27.8 | 51.2 | 23.0 | 105.3 | 21.7 | 67.0 |
| PM | 65.0 | 50.0 | 38.0 | 28.8 | 51.4 | 23.3 | 110.0 | 22.0 | 69.5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhu, H.; Togo, R.; Ogawa, T.; Haseyama, M. Multimodal Natural Language Explanation Generation for Visual Question Answering Based on Multiple Reference Data. Electronics 2023, 12, 2183. https://doi.org/10.3390/electronics12102183
