1. Introduction

Sentiment analysis (SA), a pivotal field in natural language processing (NLP), is closely related to hate speech and offensive language detection. Pang et al. [1] described sentiment analysis as the process of identifying and evaluating emotional tones in text to understand and assess opinions and attitudes. SA has gained significant prominence in recent years for its ability to decipher human emotions expressed in textual data. As communication increasingly moves to digital platforms, analyzing sentiment has become essential for businesses, researchers, and organizations seeking to understand public opinion, customer feedback, and societal trends. Sentiment analysis aims to find opinions, identify the feelings that people express, and classify their polarity. The goal of sentence-level sentiment analysis is to determine whether each sentence expresses a positive or a negative opinion.

A survey paper by Medhat et al. [2] provided an overview of recent updates in sentiment analysis algorithms and applications, categorizing and summarizing fifty-four recently published and cited articles. These articles contributed to various SA-related fields, and the survey emphasized the ongoing research opportunities in enhancing sentiment classification and feature selection algorithms. The study identified Naïve Bayes and Support Vector Machines as the machine learning algorithms most frequently used for sentiment classification and underscored the growing interest in languages other than English, emphasizing the need for resources and studies in these languages. Corso et al. [3] introduced an improved version of PArameter-free Solutions for Textual Analysis (PASTA), a distributed self-tuning engine, and applied it to a collection of crisis tweets to automatically organize the corpus into cohesive and distinct clusters with minimal intervention from analysts. Wankhade et al. [4] examined sentiment analysis and its techniques, focusing on classification methods and their pros and cons. Their survey covered the various levels of sentiment analysis and procedures such as data collection and feature selection, and it highlighted the prevalence of supervised machine learning methods, particularly Naïve Bayes (NB) and Support Vector Machines (SVM), owing to their simplicity and high accuracy. It also addressed common application areas and discussed the challenges of sentiment analysis, including domain dependence. Jiang et al. [5] introduced a significant innovation by using different data ratios to compare multiple methods concurrently, which allowed performance to be assessed across varying amounts of data. In their experiments, traditional machine learning methods performed well when data were limited, whereas deep learning achieved superior results on larger datasets. Bidirectional Recurrent Neural Networks (BiRNNs) yielded the most favorable outcomes among the methods considered. The study thus highlighted the nuanced impact of data ratios on the performance of different methods. Vigna et al. [6] aimed to address and prevent the spread of hate campaigns on public Italian Facebook pages. To this end, the authors introduced hate categories to distinguish different types of hate, and human annotators labeled the comments according to a defined taxonomy. Two classifiers for the Italian language, based on different learning algorithms, were then designed and tested for their effectiveness in recognizing hate speech on the first manually annotated Italian hate speech corpus of social media text.

Sentiment analysis is frequently used for marketing and forecasting purposes. Several studies indicate that stock market prices do not follow a random walk and can, to some extent, be predicted [7,8,9,10,11]. For instance, Gruhl et al. [12] demonstrated that online chat activity correlates with, and can help predict, book sales. Liu et al. [13] used a Probabilistic Latent Semantic Analysis (PLSA) model to extract sentiment indicators from blogs in order to forecast product sales. Similarly, Mishne and de Rijke [14] used blog sentiment to anticipate movie sales. In the same way, a company with a web portal can analyze the reviews posted under its products to find out what people think of them, what needs to be improved, etc. Politicians use a similar tactic when they analyze the comments under their posts, for example, on social networks, to learn about voters’ preferences and opinions [15,16,17].

In our study, we conducted a comprehensive comparison of sentiment analysis methods for the Slovak language, assessing the precision, accuracy, F1 score, recall, and training time of diverse language models. We examined rule-based systems, traditional machine learning algorithms, and advanced deep learning models for their efficacy in handling the nuances of Slovak. The evaluation was performed on three distinct Slovak text datasets. In the Introduction, we outline the diverse applications of sentiment analysis and its pivotal role in the broader field of natural language processing. Next, we review related works, highlighting key advancements in sentiment analysis and providing context for our study. We then introduce the methods and datasets used in our experimental framework, describing our chosen approaches and data sources transparently. Finally, we present a detailed examination of the achieved results together with a comparative analysis that provides insights into the relative efficacy of the applied methods. Through this structured approach, our study contributes to the evolving discourse on sentiment analysis methods and their practical implications.

The Introduction (Section 1) familiarizes readers with the issue of sentiment analysis and describes our motivation and goals. Section 2 presents related works, and Section 3 characterizes the research methodology. Section 4 describes the proposed approach, training datasets, and methods used. Section 5 provides a detailed account of the experiments and results for the two tasks. Section 6 discusses and compares the achieved results. Finally, Section 7 draws conclusions and proposes directions for future work.

Motivation

Sentiment analysis is a technical tool that helps us better understand and respond to the thoughts, feelings, and opinions of individuals and societies [1,18]. It has far-reaching implications across various domains, offering improved decision-making, enhanced user experiences, and the potential to drive positive social change [19]. Investing in sentiment analysis is therefore not only beneficial but essential in our data-driven world [20,21].

To our knowledge, only a few works have reported results in this area for the Slovak language, and in our opinion, there is still room for improvement. The primary scientific contribution and motivation of this paper is the release of a novel Slovak-language dataset, “SentiSK”, which was annotated by a specifically chosen scientific cohort. Notably, it is the largest dataset documented to date in research on the Slovak language. We evaluated “SentiSK” experimentally, along with the other datasets selected for the study. To this end, we examined publicly available Slovak datasets and applied several of the classification methods most often used for sentiment analysis. We then compared the achieved results with those of other studies that have addressed this task for Slovak as well as for other languages.

Apart from publishing the “SentiSK” dataset, this study aimed to compare the results obtained on our dataset with those obtained on other existing Slovak datasets. This comparison was intended to determine how our data compare with existing resources and whether our approach to data collection was sound.

4. Proposed Approach

The primary objective of our research was to compare existing Slovak sentiment analysis datasets using various classification algorithms and models. We used three Slovak datasets in our experiments. These datasets were chosen because, at the time of our experiments, no other Slovak datasets were publicly accessible. Additionally, we aimed to compare datasets that were not machine-translated and that were very similar in structure and annotation. The selected datasets are described in Section 4.1, and the classification algorithms and models we used are described in Section 4.2.

4.1. Training Datasets

As mentioned earlier, we used three different datasets in our experiments. The first was our own manually annotated Slovak dataset, which we named “SentiSK”; it contains 34,006 comments from the Facebook social network. The comments were collected with a Python web-scraping tool from under the posts of three Slovak politicians. Preprocessing consisted of removing unwanted characters and deleting blank lines, empty spaces, dots, etc.; for this step, we used the NLTK library. We annotated the data with the Prodigy annotation tool provided by our Department of Electronics and Multimedia Communications, labeling each comment as negative, neutral, or positive. In total, the dataset contains 20,655 negative, 9573 neutral, and 3778 positive comments. Because the data were collected from the posts of Slovak politicians, negative comments predominate, and the dataset is therefore class-imbalanced.
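To make the degree of imbalance concrete, the short Python sketch below computes each class’s share of “SentiSK” from the counts given above, together with “balanced” class weights n_samples / (n_classes × class_count), the heuristic scikit-learn applies when `class_weight="balanced"` is set. This is only an illustration; the text does not state that class weighting was used in our experiments.

```python
# Class counts reported for SentiSK in the paragraph above
counts = {"negative": 20655, "neutral": 9573, "positive": 3778}

n_samples = sum(counts.values())  # 34,006 comments in total
n_classes = len(counts)

# Fraction of the dataset belonging to each class
shares = {label: c / n_samples for label, c in counts.items()}

# "Balanced" weighting heuristic: rarer classes receive larger weights
weights = {label: n_samples / (n_classes * c) for label, c in counts.items()}

for label in counts:
    print(f"{label:>8}: {counts[label]:6d} comments, "
          f"{shares[label]:6.1%} of data, weight {weights[label]:.2f}")
```

With these counts, positive comments make up only about 11% of the data and would receive roughly five times the weight of negative comments.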

After a brief search, we identified a freely accessible Slovak dataset [28] on the Internet. Its creators developed and used the dataset for a task involving multilevel text preprocessing to address the intricacies of user-generated social content. The original dataset was divided into five categories. For ease of comparison with our dataset, we merged the “strongly negative” category into the “negative” category and the “strongly positive” category into the “positive” category, leaving the “neutral” category unchanged. In its final form, the dataset comprised a total of 1584 comments, with 709 comments labeled as “negative”, 573 as “positive”, and 306 as “neutral”. Named “Sentigrade” by its authors, this dataset was compiled from Seesame’s Facebook pages and the comments on these pages.
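The five-to-three category merge described above amounts to a simple label mapping. The sketch below assumes the labels are stored as the English strings quoted in the text; the actual Sentigrade files may encode the categories differently (e.g., as integers), so the `FIVE_TO_THREE` dictionary and the `merge_labels` helper are hypothetical.

```python
from collections import Counter

# Hypothetical label strings; adjust to the encoding used in the original files
FIVE_TO_THREE = {
    "strongly negative": "negative",
    "negative": "negative",
    "neutral": "neutral",
    "positive": "positive",
    "strongly positive": "positive",
}


def merge_labels(labels):
    """Map five-level sentiment labels onto the three SentiSK classes."""
    return [FIVE_TO_THREE[label] for label in labels]


example = ["strongly negative", "negative", "neutral", "positive", "strongly positive"]
merged = merge_labels(example)
print(merged)
print(Counter(merged))  # class distribution after merging
```

Using a dictionary lookup means any unexpected label raises a `KeyError` instead of being silently mislabeled.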

The third dataset in this experiment was the “Slovak dataset for SA”, which was created by Machova during her research [32] and is available online [55]. The dataset contains 2669 negative and 2573 positive comments.

The datasets we obtained from the Internet were significantly smaller in terms of the number of comments; on the other hand, they were more class-balanced. The selected datasets are described in more detail in Table 4.

4.2. Used Methods

We used the Scikit-learn library to train the models. Scikit-learn, often referred to as sklearn, is a widely used open-source machine learning library for the Python programming language. It contains efficient implementations of many popular machine learning algorithms, such as Support Vector Machines, Random Forests, and k-nearest neighbors [56]. In the following paragraphs, we briefly describe all the methods that we applied in our experiments.
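A minimal illustrative sketch (not the code used in our experiments) of how the scikit-learn classifiers described below can be trained and timed on text is shown here. The TF-IDF features, the hyperparameters, and the tiny Slovak toy corpus are assumptions chosen only for illustration; the feature extraction used in our experiments is not specified in this section.

```python
import time

from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.naive_bayes import MultinomialNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.svm import SVC

# Hypothetical toy corpus (not taken from any of the three datasets)
train_texts = [
    "skvelý film odporúčam", "veľmi dobrý výkon", "super páči sa mi to", "výborná práca",
    "hrozný film neodporúčam", "veľmi zlý výkon", "vôbec sa mi to nepáči", "otrasná práca",
]
train_labels = ["positive"] * 4 + ["negative"] * 4
test_texts = ["dobrý film", "zlý film"]
test_labels = ["positive", "negative"]

classifiers = {
    "RFC": RandomForestClassifier(random_state=0),
    "MLP": MLPClassifier(max_iter=500, random_state=0),
    "LR": LogisticRegression(max_iter=1000),
    "SVC": SVC(),
    "KNN": KNeighborsClassifier(n_neighbors=3),
    "MNB": MultinomialNB(),
}

results = {}
for name, clf in classifiers.items():
    model = make_pipeline(TfidfVectorizer(), clf)  # TF-IDF features -> classifier
    start = time.perf_counter()
    model.fit(train_texts, train_labels)
    train_time = time.perf_counter() - start       # training time in seconds
    accuracy = accuracy_score(test_labels, model.predict(test_texts))
    results[name] = (accuracy, train_time)
    print(f"{name}: accuracy={accuracy:.2f}, training time={train_time:.3f} s")
```

Wrapping the vectorizer and classifier in one pipeline ensures that the vocabulary is learned only from the training texts, which avoids leaking test data into the features.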

The Random Forest Classifier (RFC) is an ensemble machine learning algorithm for classification tasks. During training, the algorithm constructs multiple decision trees and outputs the mode of the classes (for classification) or the average prediction (for regression) of the individual trees. By combining the predictions of many decision trees, it produces a more accurate and robust model than a single decision tree. Randomness is introduced by training each tree independently on a random sample of the data and by considering only a random subset of features when splitting nodes. This randomness mitigates overfitting and improves the model’s ability to generalize. At prediction time, the algorithm aggregates the results of all trees to produce the final prediction [57].
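The mechanism described above (bootstrap samples, random feature subsets, majority vote) can be sketched by hand on top of scikit-learn’s `DecisionTreeClassifier`. This is a simplified illustration with hypothetical helper names (`fit_forest`, `predict_forest`) and toy 2-D data, not the `RandomForestClassifier` implementation itself.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier


def fit_forest(X, y, n_trees=25, seed=0):
    """Train each tree on a bootstrap sample; splits consider a random feature subset."""
    rng = np.random.default_rng(seed)
    n_samples = X.shape[0]
    trees = []
    for i in range(n_trees):
        idx = rng.integers(0, n_samples, size=n_samples)  # sample rows with replacement
        tree = DecisionTreeClassifier(max_features="sqrt", random_state=i)
        trees.append(tree.fit(X[idx], y[idx]))
    return trees


def predict_forest(trees, X):
    """Return the mode (majority vote) of the individual tree predictions."""
    votes = np.stack([tree.predict(X) for tree in trees])  # shape: (n_trees, n_samples)
    predictions = []
    for column in votes.T:
        values, counts = np.unique(column, return_counts=True)
        predictions.append(values[np.argmax(counts)])
    return np.array(predictions)


# Toy data: two well-separated clusters labeled 0 and 1
X = np.array([[0.0, 0.0], [0.2, 0.1], [0.1, 0.3], [3.0, 3.0], [3.2, 2.9], [2.9, 3.1]])
y = np.array([0, 0, 0, 1, 1, 1])
forest = fit_forest(X, y)
pred = predict_forest(forest, np.array([[0.1, 0.1], [3.1, 3.0]]))
print(pred)
```

Note that scikit-learn’s `RandomForestClassifier` combines its trees by averaging their predicted class probabilities rather than by counting hard votes, which usually gives the same answer but can differ on close calls.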

Strengths: RFCs are highly adaptable and can be used for diverse tasks such as classification, regression, and outlier detection. Compared with single decision trees, RFCs are more resistant to overfitting, because aggregating the predictions of many trees lowers the model’s variance. Because they handle non-linear data well, RFCs can model complex relationships between variables, a significant advantage when the data are not linearly separable. RFCs can also estimate the importance of individual features, which is useful both for understanding the relationships among variables and for feature selection.

Weaknesses: RFCs are less interpretable than simpler algorithms such as decision trees, because the ensemble obscures how individual predictions are made. With high-dimensional data, RFCs tend to be slower in both the training and prediction phases, since numerous trees must be constructed and evaluated. RFCs also require larger training datasets than algorithms such as single decision trees, because building multiple trees requires more data to generalize effectively [58].

The Multilayer Perceptron (MLP) classifier is a feedforward neural network used for classification tasks: it processes data in one direction, from input to output. An MLP consists of an input layer, one or more hidden layers of nodes (neurons), and an output layer. Each node computes a weighted sum of its inputs followed by a non-linear activation function, and the outputs of each layer are passed as inputs to the next layer until the output layer is reached [59].
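The forward pass described above (a weighted sum followed by a non-linear activation, layer by layer) can be sketched in a few lines of NumPy. The layer sizes and random weights below are hypothetical, training by backpropagation is not shown, and ReLU is assumed as the activation (it is also the default in scikit-learn’s `MLPClassifier`).

```python
import numpy as np


def relu(z):
    """Non-linear activation: max(0, z) element-wise."""
    return np.maximum(z, 0.0)


def softmax(z):
    """Convert output scores to class probabilities (row-wise)."""
    z = z - z.max(axis=1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def mlp_forward(X, layers):
    """Feedforward pass: each hidden layer applies a weighted sum and then ReLU."""
    h = X
    for W, b in layers[:-1]:
        h = relu(h @ W + b)           # hidden layer output becomes next layer's input
    W_out, b_out = layers[-1]
    return softmax(h @ W_out + b_out)  # output layer: class probabilities


rng = np.random.default_rng(0)
# Hypothetical untrained weights: 5 input features -> 8 hidden neurons -> 3 classes
layers = [
    (rng.normal(size=(5, 8)), np.zeros(8)),
    (rng.normal(size=(8, 3)), np.zeros(3)),
]
X = rng.normal(size=(4, 5))  # 4 examples with 5 features each
probs = mlp_forward(X, layers)
print(probs.round(3))
print(probs.argmax(axis=1))  # predicted class for each example
```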

Strengths: MLPs are capable of learning complex relationships between input and output variables, even when the relationships are non-linear. This makes them well-suited for tasks such as image recognition and natural language processing. MLPs can be trained on large datasets, which can help them to capture more complex patterns in the data.

Weaknesses: MLPs can be prone to overfitting, which means that they may fit the training data too closely and perform poorly on new data; this is a particular problem when training on small datasets. MLPs also require significant computational resources and can be expensive to train, especially on large datasets, which can make them impractical for some applications. Finally, the performance of an MLP can be sensitive to the choice of hyperparameters, such as the number of hidden layers and the number of neurons in each layer, which can make MLPs difficult to tune for a particular task [60].

Logistic Regression (LR) is a machine learning algorithm that models the probability that an event will occur. It uses the logistic function (also known as the sigmoid function) to map a weighted combination of the input features to a probability between 0 and 1. If the probability exceeds a threshold (usually 0.5), the event is predicted to occur; otherwise, it is predicted not to occur [61].
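The prediction rule described above can be written directly: compute a weighted sum of the features, pass it through the sigmoid, and compare the result with the threshold. The weights and bias below are hypothetical values standing in for parameters that training (maximum-likelihood fitting) would produce.

```python
import math


def sigmoid(z):
    """Logistic (sigmoid) function: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(x, weights, bias):
    """Probability of the positive class for feature vector x."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return sigmoid(z)


def predict(x, weights, bias, threshold=0.5):
    """Predict 1 if the probability exceeds the threshold, otherwise 0."""
    return 1 if predict_proba(x, weights, bias) > threshold else 0


weights, bias = [2.0, -1.0], 0.0  # hypothetical, already-learned parameters
print(predict_proba([1.0, 1.0], weights, bias))  # z = 1, probability about 0.73
print(predict([1.0, 1.0], weights, bias), predict([0.0, 1.0], weights, bias))
```

A probability of exactly 0.5 corresponds to z = 0, so the 0.5 threshold is equivalent to checking the sign of the weighted sum.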

Strengths: LR stands out for its simplicity and efficiency, making it easy to implement and train while being computationally light. It provides probabilistic outcomes, offering insights into the likelihood of each class for specific data points, which is particularly valuable in tasks like risk assessment. LR is effective in determining the relationship between input features and the target variable.

Weaknesses: LR assumes a linear relationship between the input features and the log-odds of the output, which can lead to poor results when the relationship is non-linear. In such cases, LR may not perform as well as algorithms capable of modeling non-linear relationships, such as decision trees or Support Vector Machines with non-linear kernels. In its basic form, LR is a binary classifier; multiclass tasks require extensions such as one-vs-rest schemes or Multinomial Logistic Regression, or alternative algorithms such as Random Forests [62].

A Support Vector Classifier (SVC) searches for the best hyperplane to split input data into different classes. The hyperplane is selected to maximize the margin (i.e., the distance between the hyperplane and the closest data point of each class). The data points on the margin are called support vectors.
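The margin idea above can be illustrated with scikit-learn’s `SVC` using a linear kernel on four hypothetical 2-D points; a large `C` approximates the hard-margin case. For a linear kernel, the fitted hyperplane is exposed through `coef_` (the normal vector w) and `intercept_`, and the distance from the hyperplane to the closest points of each class is 1/‖w‖. For these points, the maximum-margin hyperplane is the vertical line at x = 1.5, so that distance should come out close to 1.5.

```python
import numpy as np
from sklearn.svm import SVC

# Toy data: two classes separated along the first feature
X = np.array([[0.0, 0.0], [0.0, 1.0], [3.0, 0.0], [3.0, 1.0]])
y = np.array([0, 0, 1, 1])

# A large C approximates a hard margin (margin violations are heavily penalized)
clf = SVC(kernel="linear", C=1000.0).fit(X, y)

w = clf.coef_[0]       # normal vector of the separating hyperplane
b = clf.intercept_[0]  # offset of the hyperplane
distance = 1.0 / np.linalg.norm(w)  # hyperplane to the closest point of each class

print("w =", w, "b =", b)
print("distance to closest points:", distance, "margin width:", 2 * distance)
print("support vectors:\n", clf.support_vectors_)
print(clf.predict([[0.5, 0.5], [2.5, 0.5]]))
```

Only the support vectors determine the hyperplane; moving any other training point (without crossing the margin) would leave the solution unchanged.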

Strengths: SVCs perform well in high-dimensional spaces. Using the kernel trick, they implicitly map the data into a higher-dimensional space in which the classes may become linearly separable, so they can handle both linearly and non-linearly separable data. The choice of kernel gives SVCs the flexibility to adapt to the structure of the data, and the soft-margin formulation makes them tolerant of some noise and outliers. SVCs are also known for their strong generalization to unseen data, which stems from a training objective that balances minimizing training errors against model complexity.

Weaknesses: Training SVCs can be computationally demanding, particularly for large datasets, because it involves solving a quadratic optimization problem. The efficacy of SVCs depends heavily on the choice of kernel function and hyperparameters, which require careful tuning for optimal results. SVCs are inherently binary classifiers; multiclass tasks must be decomposed into several binary problems (e.g., one-vs-one or one-vs-rest), and inherently multiclass algorithms such as Random Forests may be more convenient in such scenarios [63].

The K-Neighbors Classifier (KNN) assigns a new data point the class held by the majority of its k nearest neighbors in the training data. During training, it simply stores the training data points in a data structure that allows an efficient nearest-neighbor search [64].
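The classification rule described above can be sketched as a brute-force search: compute the distance from the new point to every training point, take the k closest, and return the most common label among them. Euclidean distance, k = 3, and the `knn_predict` helper are assumptions for illustration; a library implementation would typically use a KD-tree or ball tree to speed up the search.

```python
from collections import Counter

import numpy as np


def knn_predict(X_train, y_train, X_new, k=3):
    """Predict by majority vote among the k nearest training points (Euclidean)."""
    X_train = np.asarray(X_train, dtype=float)
    predictions = []
    for x in np.asarray(X_new, dtype=float):
        distances = np.linalg.norm(X_train - x, axis=1)  # distance to every training point
        nearest = np.argsort(distances)[:k]              # indices of the k closest points
        votes = Counter(y_train[i] for i in nearest)
        predictions.append(votes.most_common(1)[0][0])
    return predictions


# Toy data: two clusters with string labels
X_train = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
y_train = ["neg", "neg", "neg", "pos", "pos", "pos"]
result = knn_predict(X_train, y_train, [[0.5, 0.5], [5.5, 5.5]])
print(result)
```

Because every prediction scans all stored points, the cost of this brute-force version grows linearly with the size of the training set.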

Strengths: KNN is renowned for its simplicity in both understanding and implementation, and it lacks a complex training phase, which makes it well suited to beginners in machine learning. As an instance-based learning algorithm, KNN does not build a conventional model but instead stores the entire training set and consults it at classification time. Consequently, new labeled data can be incorporated without retraining, which allows KNN to adjust to new data and shifts in the data distribution, a significant advantage in scenarios where data are continually evolving.

Weaknesses: Classifying new data points with KNN can be computationally heavy, particularly with larger datasets, because each new point must be compared with the points in the training set. The algorithm is also highly sensitive to feature scaling: because it relies on distance measurements to determine the similarity between data points, poorly scaled features can severely impair its effectiveness. Finally, KNN’s efficacy diminishes in high-dimensional spaces, where the distances between points become increasingly similar (the so-called curse of dimensionality), making it difficult to identify the most relevant neighbors and often leading to suboptimal classification results [65].

Multinomial Naïve Bayes (MNB) is based on Bayes’ theorem and assumes that features are conditionally independent of one another given the class. It models the probability of each class (e.g., different categories of text documents) based on the frequency of occurrence of different features (e.g., words or phrases) in the input data, assuming that these feature frequencies follow a multinomial distribution [66].
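A from-scratch sketch of the model described above is shown below: class priors and per-class word probabilities are estimated from word counts with add-one (Laplace) smoothing, and a new document is assigned the class with the highest log-probability. Whitespace tokenization, the smoothing value, the helper names, and the toy Slovak documents are assumptions for illustration; this is not the scikit-learn `MultinomialNB` code.

```python
import math
from collections import Counter, defaultdict


def train_mnb(docs, labels, alpha=1.0):
    """Estimate log priors and smoothed log word probabilities per class."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocab = set()
    for doc, label in zip(docs, labels):
        tokens = doc.split()
        word_counts[label].update(tokens)
        vocab.update(tokens)
    log_priors = {c: math.log(n / len(docs)) for c, n in class_counts.items()}
    log_likelihoods = {}
    for c in class_counts:
        total = sum(word_counts[c].values()) + alpha * len(vocab)
        log_likelihoods[c] = {w: math.log((word_counts[c][w] + alpha) / total) for w in vocab}
    return log_priors, log_likelihoods, vocab


def predict_mnb(model, doc):
    """Pick the class maximizing log P(c) + sum of log P(word | c) (independence assumption)."""
    log_priors, log_likelihoods, vocab = model
    scores = {}
    for c in log_priors:
        scores[c] = log_priors[c] + sum(
            log_likelihoods[c][w] for w in doc.split() if w in vocab  # skip unseen words
        )
    return max(scores, key=scores.get)


docs = ["skvelý dobrý film", "dobrý výkon", "zlý hrozný film", "hrozný výkon"]
labels = ["pos", "pos", "neg", "neg"]
model = train_mnb(docs, labels)
print(predict_mnb(model, "dobrý skvelý"), predict_mnb(model, "zlý hrozný"))
```

Working with log-probabilities avoids numerical underflow when many small word probabilities are multiplied.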

Strengths: MNB is efficient in both training and classification, making it particularly suitable for large datasets. It is highly effective for text classification, where the number of unique words (features) can be exceedingly high, because it copes well with such large feature spaces.

Weaknesses: A fundamental limitation of MNB is its assumption of feature independence, which is often violated in real-world data and can lead to poor classification performance. The algorithm can also struggle with imbalanced datasets in which one class significantly outnumbers the others, because MNB’s reliance on feature frequencies when assigning class probabilities can skew results toward the more prevalent class. Finally, MNB is designed for discrete data, such as word counts, and its effectiveness diminishes with continuous features [67].

Multilingual BERT (mBERT) is a variant of BERT that is pretrained on a large corpus of texts in multiple languages, allowing it to perform well on multilingual natural language processing tasks. Because it is trained to jointly model the language-specific and shared features of many languages, mBERT can transfer knowledge between languages and improve performance on low-resource languages. The mBERT architecture is based on the Transformer model, which uses attention mechanisms that allow the model to focus on different parts of the input sequence at each layer. The mBERT model has 110 million parameters [68].
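The attention mechanism mentioned above can be illustrated with the standard scaled dot-product formulation, softmax(QKᵀ/√d_k)V, for a single head. This NumPy sketch uses random toy embeddings and projection matrices and omits multiple heads, masking, and everything else in a full Transformer layer; it is a generic illustration, not mBERT’s implementation.

```python
import numpy as np


def softmax(z, axis=-1):
    """Row-wise softmax with max subtraction for numerical stability."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(Q, K, V):
    """Single-head attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # how strongly each token attends to each other token
    weights = softmax(scores, axis=-1)  # each row is a probability distribution over tokens
    return weights @ V, weights


rng = np.random.default_rng(0)
seq_len, d_model = 4, 8                  # toy sizes: 4 tokens, 8-dimensional embeddings
X = rng.normal(size=(seq_len, d_model))  # stand-in for token embeddings
W_q, W_k, W_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))
output, weights = scaled_dot_product_attention(X @ W_q, X @ W_k, X @ W_v)
print(weights.round(2))
```

Each output row is a weighted mixture of the value vectors of all tokens, which is what lets the model take the surrounding context of a word into account.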

Strengths: mBERT is a versatile multilingual neural network model, proficient in processing and understanding text across multiple languages. This capability renders it highly valuable for applications like machine translation, cross-lingual sentiment analysis, and question answering in various languages. The model excels in grasping deep contextual relationships within sentences, enabling it to comprehend the contextual meaning of words. This feature is crucial for complex tasks such as natural language understanding and effective machine translation. mBERT offers the flexibility to be fine-tuned for specific tasks and domains, allowing for customization to meet the specific requirements of different applications.

Weaknesses: One major drawback of mBERT is its size and complexity, which necessitate substantial computational resources for training and fine-tuning and can limit its practicality in some scenarios. This complexity can also make the model harder to understand and implement, potentially making it less accessible to certain users. Effective fine-tuning of mBERT demands specialized knowledge of machine learning and natural language processing, which can be a barrier for users without such expertise [68].

SlovakBERT is a variant of BERT that was trained specifically for the Slovak language. It is trained to capture the context of Slovak words in sentences and performs well on various natural language processing tasks. SlovakBERT has 110 million parameters in its largest version and 30 million in its basic version. It is an open-source model and can be fine-tuned for specific natural language processing tasks using relatively small amounts of task-specific data [34].

Strengths: SlovakBERT’s specialization in processing Slovak text, thanks to its training on a vast corpus of the Slovak language, enables it to adeptly capture linguistic nuances. This attribute makes it particularly effective for tasks like sentiment analysis, named entity recognition, and machine translation involving the Slovak language. The model is designed to understand contextual meanings within sentences, a key feature for comprehensive natural language understanding and accurate machine translation in Slovak. SlovakBERT offers adaptability, allowing for fine-tuning to cater to specific tasks and domains. This customization potential enables it to meet the unique needs of various applications involving the Slovak language.

Weaknesses: Its exclusive focus on the Slovak language limits its applicability: SlovakBERT is not suitable for tasks involving other languages. Due to its size and complexity, SlovakBERT demands significant computational resources for training and fine-tuning, which may make it impractical for certain applications or for settings with limited resources. Achieving optimal performance on specific tasks requires careful fine-tuning, a process that can be time-consuming and requires expertise in machine learning and natural language processing [34].

The purpose of the experiment was to compare the accuracy, precision, recall, F1 score, and running time of the classification algorithms. The test hardware comprised an Intel Core i7-920 CPU (2.67 GHz), 32 GB of RAM, two NVIDIA GeForce 1080 GPUs, and 12 GB of VRAM. We used Scikit-learn to train the statistical classifiers and the Hugging Face Transformers library to fine-tune the neural language models.
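For reference, the evaluation metrics listed above can be computed from scratch as shown below. The section does not state how precision, recall, and F1 were averaged over classes; this sketch assumes macro averaging (an unweighted mean of the per-class scores), and the `evaluate` helper and toy labels are hypothetical. On this input, the values should agree with scikit-learn’s `precision_recall_fscore_support(..., average="macro")`.

```python
def evaluate(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall, and F1 score."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precisions, recalls, f1s = [], [], []
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)  # true positives
        fp = sum(t != label and p == label for t, p in pairs)  # false positives
        fn = sum(t == label and p != label for t, p in pairs)  # false negatives
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    n = len(labels)
    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


y_true = ["neg", "neg", "pos", "pos", "neu"]
y_pred = ["neg", "pos", "pos", "pos", "neu"]
scores = evaluate(y_true, y_pred)
print(scores)
```

Macro averaging gives every class equal weight, so on an imbalanced dataset such as “SentiSK” it penalizes poor performance on the rare positive class more than accuracy does.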

6. Discussion

Overall, our results for the Slovak language were satisfactory. The results of the first experimental task (Section 5.1) were slightly lower than those of the second (Section 5.2). In most cases, we outperformed the results of other studies focused on the Slovak language, which we described in Section 2.2. Unlike for other languages (mainly English), few tools are available for preprocessing Slovak social network comments. Since processing Slovak texts is significantly more demanding than processing English texts, we consider our results to be very favorable. The methods we employed were straightforward; however, our primary objective was a comparative analysis of the outcomes obtained on our dataset and those obtained on other existing datasets. For objectivity, we evaluated all the selected datasets with the same source code of our own, ensuring a consistent and rigorous evaluation process. The annotation could also be improved by double or triple checking: although the data were annotated by a specifically chosen scientific cohort, each sentence was annotated only once, so no cross-checking was performed. Additionally, methods based on embedding and vectorizing the data could be explored. In our upcoming work, we will focus on embeddings to more effectively identify the words that influence the sentiment of a sentence; our primary criterion for this will be the frequency of each word’s occurrence.

Compared with [29], where the authors achieved an accuracy of 76.22% with a Support Vector Machine (SVM) for two-class classification, we achieved a higher accuracy (88.20%). For three-class classification, on the other hand, we achieved an accuracy of 65.60%, very similar to the 66.25% reported by those authors.

Further, in [30], the authors achieved an F1 score of 69.78% in three-class classification with a BiLSTM model. With the SVM model, they achieved an F1 score of 68.40%, compared with our 60.26%.

Ref. [32] achieved an accuracy of 74.30% with a lexicon approach using BBPSO labeling representation and an accuracy of 80.70% with a lexicon approach combined with Naïve Bayes, also using BBPSO labeling representation (both for two-class classification). We achieved similar or better results using the RFC, LR, SVC, mBERT, and SlovakBERT models for two-class classification. Notably, using the same data but different training methods, we achieved results that were better by up to 24%.

In [36], the highest F1 scores for sentiment analysis were 67.20% for two-class classification and 70.50% for three-class classification, both obtained with the SlovakBERT model. Using the same model, we achieved F1 scores of 90.65% for two-class classification and 71.53% for three-class classification.

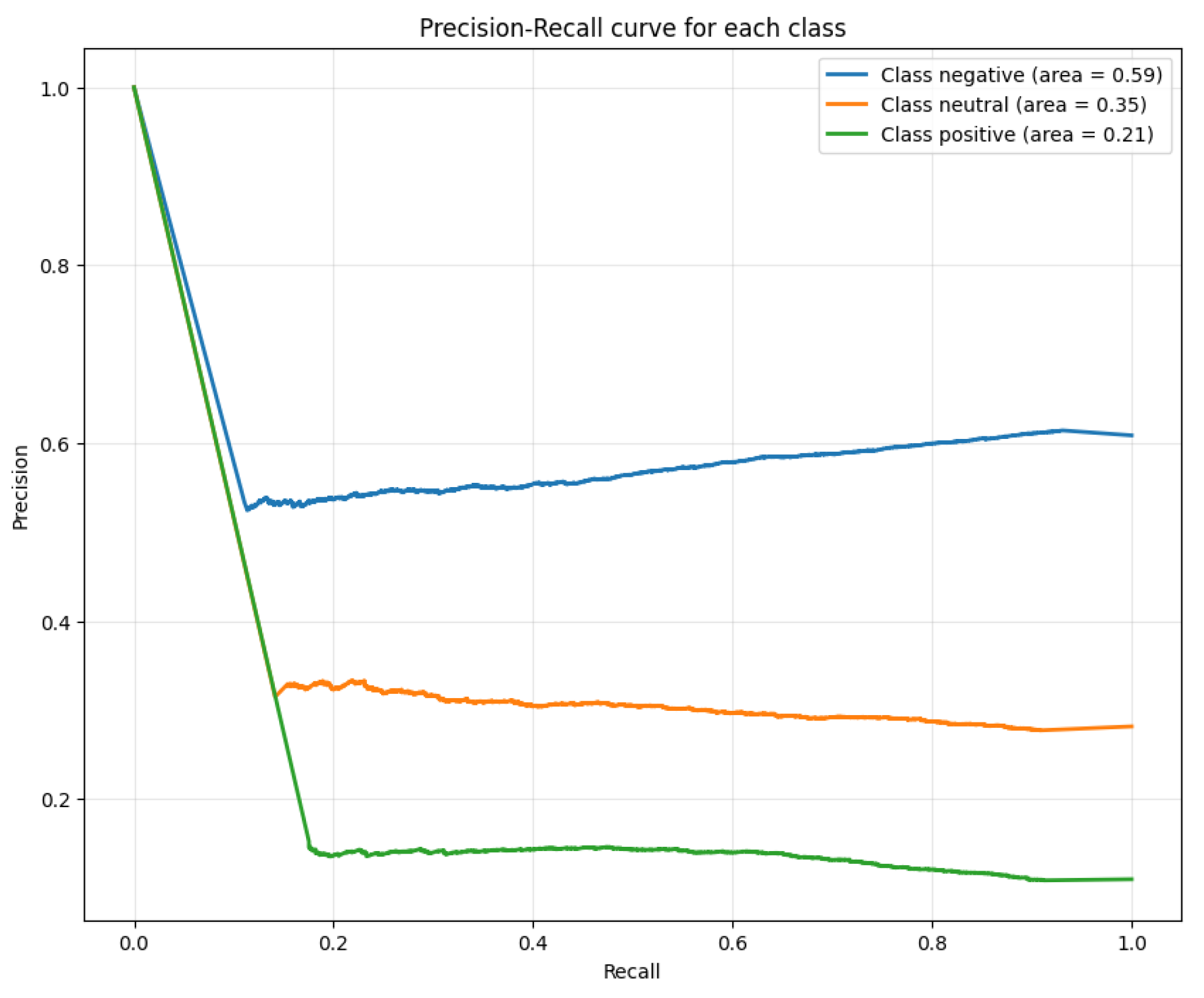

It is also interesting to observe how the results for individual classes (see Table 6 and Table 8) correlate with the class balance of the individual datasets (see Table 4). This underscores the importance of paying attention to dataset balance, and we will apply these observations in our further research.

7. Conclusions

In this paper, we reviewed recent research on sentiment analysis of social network content in the Slovak language. In our experiments, we tested three Slovak datasets containing comments from social networks. In the first task, with three classes (positive/neutral/negative), we achieved F1 scores ranging from 67.68% to 71.53% with the SlovakBERT model on the “SentiSK” and “Sentigrade” datasets. In the second task, with only two classes (positive/negative), we achieved F1 scores ranging from 75.35% to 95.04% with the mBERT and SlovakBERT models on the “SentiSK”, “Sentigrade”, and “Slovak dataset for SA” datasets. Eight algorithms or models were used to test the datasets, namely RFC, MLP, LR, SVC, KNN, Multinomial NB, mBERT, and SlovakBERT. Our primary objective was not to introduce novel insights into the domain of sentiment analysis; our central aim was to contribute a manually annotated dataset and make it available to others. We then applied basic classification methods to our annotated dataset and compared the outcomes with those obtained on existing freely accessible Slovak datasets. Our work was limited by the scarcity of Slovak datasets for comparing our results and by the class imbalance of the corpus we created. Although our paper is primarily aimed at Slovak audiences and researchers, it is also relevant to researchers working on other languages. Once we publish the dataset, researchers can repeat and validate our experiments and try to achieve similar or better results. Researchers can also translate the datasets into other languages, for example by means of machine translation.

In the near future, we plan to collect new sentiment data and compare them with the results obtained herein. We plan to publish both the dataset and the training code used in this article so that other researchers can reproduce the experiments, and we plan to create a web interface for sentiment detection. We also plan to address the issue of hate speech in the Slovak language: our approach involves creating and manually annotating a balanced Slovak dataset and subsequently developing an automatic annotator tailored to hate speech and offensive language in Slovak. In subsequent research, we will explore combinations of multiple classifiers. Finally, we aim to machine-translate an existing dataset into Slovak and compare the performance of various models across different languages.