MeMPA: A Memory Mapped M-SIMD Co-Processor to Cope with the Memory Wall Issue

Abstract

1. Introduction

- The MeMPA design builds on a previous work named Hybrid-SIMD [2]. Hybrid-SIMD is a SIMD vector co-processor that combines memory and computational capabilities to reduce the Memory Wall overhead for highly parallel data-intensive applications. Yet, Hybrid-SIMD supports only a small number of very specific operations, essentially because extending the set would increase complexity and degrade performance. Hence, the MeMPA co-processor was designed to improve the computing and programming capabilities by organizing the processing elements (PEs) in a matrix fashion instead of a vector one and by carefully designing the PE's internal structure.

- The PE structure was derived from a statistical analysis of different benchmarks: the algorithms were profiled, and the most recurrent instructions were estimated and later integrated inside MeMPA.

- The MeMPA concept stresses programming generality even more. Since the Hybrid-SIMD could not efficiently execute sequential portions of the algorithms because of its intrinsic structural limitations, the MeMPA PEs matrix was enriched with different programmable interconnections, drastically dropping the algorithm execution time and leading to significant energy savings.

- To push even more toward a maximized parallel execution, the computing paradigm of the MeMPA co-processor was designed to refer to the Multiple-SIMD (M-SIMD) approach to enable the execution of different instructions on different datasets at the same time.

- MeMPA was compared with Hybrid-SIMD in terms of execution time and energy for the same set of benchmarks used in [2] to demonstrate the improvements achieved by the MeMPA structure.

- Finally, MeMPA was inserted inside a CPU-Memory context. Two systems were evaluated: CPU-Mem, based on a classical structure with a RISC-V core, and CPU-Mem-MeMPA, which adds MeMPA to that structure. In this work, other Beyond von Neumann Computing (BvNC) solutions presented in the literature are not used as terms of comparison, because the focus is on the improvements of MeMPA with respect to Hybrid-SIMD and on the impact of MeMPA in a classical von Neumann CPU-Memory system.

2. Algorithm Profiling

- Choose the reference Instruction Set Architecture (ISA). This paper used a RISC-V-based system, which requires cross-compiling the benchmarks for a RISC-V ISA. The RISC-V GNU Toolchain from [22] was configured with the base integer, multiplication/division, and atomic extensions, but not with the floating-point one, since the MeMPA architecture does not support floating-point calculations. The built toolchain was used to compile the benchmarks and generate the executable files.

- Run the benchmarks and trace the algorithm execution. For this purpose, the Gem5 Simulator [23] was used in system-call emulation mode. Gem5 executes the SPLASH-2 benchmarks with the instruction trace feature enabled. In this way, for each algorithm, the simulator prints a disassembled version, reporting the actual instructions executed by the core. These data are saved into a file named program.out.

- Estimate the instruction occurrences. The program.out file was parsed by a Python script that counts the number of instructions for each algorithm. A final plot is shown in Figure 1, which reports the contribution of each instruction as a percentage over all benchmarks. For example, considering the addi instruction, its value was obtained as the sum of the number of addi instructions for each benchmark (or test) divided by the total number of instructions of each benchmark (which is ∼64 M), following Equation (1).
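The counting step above can be sketched in Python as follows. This is a minimal illustration, not the script used in the paper: the trace line layout (fields separated by ` : `, disassembly in the third field) and the function names are assumptions, and the percentage is computed under one reading of Equation (1), namely per-instruction counts summed over all benchmarks and divided by the total instruction count over all benchmarks.

```python
from collections import Counter


def mnemonic_of(trace_line):
    """Return the mnemonic of one trace line, or None if it has no disassembly.

    Assumed (hypothetical) layout: fields separated by ' : ', with the
    disassembled instruction in the third field, e.g.
    '1000: cpu: T0 : 0x10078 : addi a0, a0, 1 : IntAlu'.
    """
    fields = trace_line.split(" : ")
    if len(fields) < 3:
        return None
    disassembly = fields[2].split()
    return disassembly[0] if disassembly else None


def count_instructions(lines):
    """Count how many times each mnemonic appears in one benchmark trace."""
    counts = Counter()
    for line in lines:
        mnemonic = mnemonic_of(line)
        if mnemonic is not None:
            counts[mnemonic] += 1
    return counts


def occurrence_percentages(per_benchmark_counts):
    """Merge per-benchmark counts and express each mnemonic as a percentage."""
    total = Counter()
    for counts in per_benchmark_counts:
        total.update(counts)
    n_instructions = sum(total.values())
    return {m: 100.0 * k / n_instructions for m, k in total.items()}


if __name__ == "__main__":
    # Tiny made-up traces standing in for two program.out files.
    bench_a = ["1: cpu: T0 : 0x0 : addi a0, a0, 1 : IntAlu"] * 3 + [
        "4: cpu: T0 : 0xc : lw a1, 0(a0) : MemRead"
    ]
    bench_b = ["1: cpu: T0 : 0x0 : addi a0, a0, 1 : IntAlu"] + [
        "2: cpu: T0 : 0x4 : mul a2, a0, a1 : IntMult"
    ] * 3
    shares = occurrence_percentages(
        [count_instructions(bench_a), count_instructions(bench_b)]
    )
    for mnemonic, share in sorted(shares.items()):
        print(f"{mnemonic}: {share:.1f}%")
```

In practice, each benchmark's program.out would be opened and its lines passed to `count_instructions`; only `mnemonic_of` needs adapting to the real trace layout.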

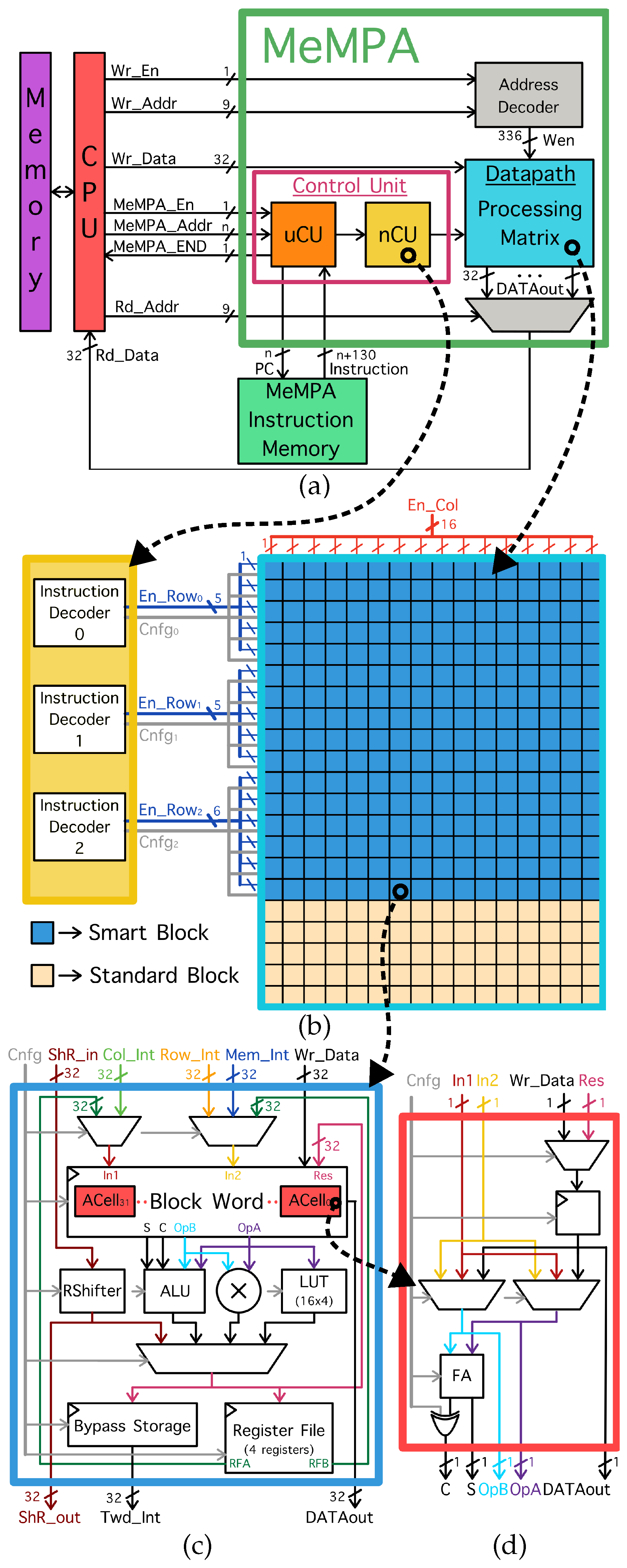

3. Architecture

3.1. System Overview

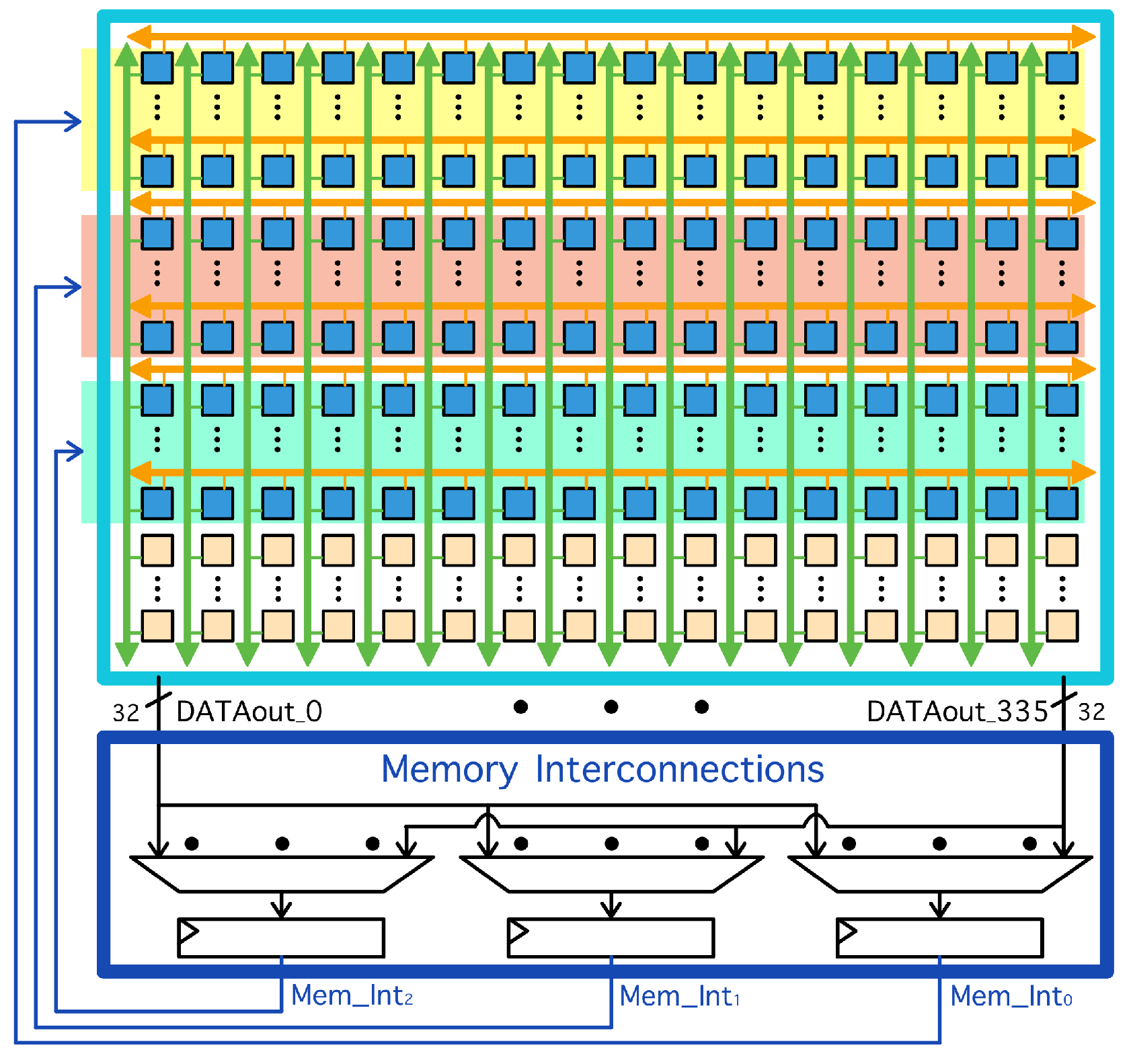

3.2. Datapath: The Processing Matrix

3.2.1. Routing Network

3.2.2. Smart Block

3.3. Instructions Organization

- EN_ROW: contains the enabling signals (En_Row) of the Processing Matrix rows as shown in Figure 2b;

- OPCODE: tells whether the operation to be performed is a load or an arithmetical one and, in this last case, specifies by which of the arithmetic-logic blocks, among RShifter, ALU, Multiplier, and LUT, that operation has to be carried out;

- SOURCE_OP: selects which is the operand or couple of operands and their order for the required operation processing, choosing among data coming from Column Interconnections, Row Interconnections, Memory Interconnections, Register File, or Block Word;

- DEST_OP: indicates where the operation result should be stored in the Smart Block among Block Word, Bypass Storage, and Register File;

- ADDR_S1: specifies the address of the data to be elaborated when one of the operands selected through the SOURCE_OP field comes from the Column Interconnections, or the first output port of the Register File (RFA);

- ADDR_S2: specifies the address of the data to be elaborated when one of the operands selected through the SOURCE_OP field comes from the Row Interconnections, the Memory Interconnection, or the second output port of the Register File (RFB);

- ADDR_D: complements the DEST_OP field in case the Register File is selected as destination storage, holding the specific address of the register involved;

- FUNC: is used to further detail which among the operations implemented by the arithmetic-logic block selected through the OPCODE field has to be performed.
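To make the role of the fields above more concrete, the following Python sketch packs them into a single instruction word and unpacks them again. The field order follows the list, but the bit widths (and therefore the 32-bit total) are hypothetical placeholders chosen only for illustration; they are not MeMPA's actual encoding.

```python
# Field names follow the list above; the widths are HYPOTHETICAL placeholders.
FIELDS = [
    ("EN_ROW", 8),     # one enable bit per row (assumed 8 rows)
    ("OPCODE", 3),     # load or arithmetic block selection
    ("SOURCE_OP", 4),  # operand source selection and order
    ("DEST_OP", 2),    # Block Word, Bypass Storage, or Register File
    ("ADDR_S1", 4),    # address for the first operand source
    ("ADDR_S2", 4),    # address for the second operand source
    ("ADDR_D", 4),     # destination register address
    ("FUNC", 3),       # operation within the selected block
]


def pack(values):
    """Pack a dict of field values into one integer, first field in the MSBs."""
    word = 0
    for name, width in FIELDS:
        value = values[name]
        if not 0 <= value < (1 << width):
            raise ValueError(f"{name}={value} does not fit in {width} bits")
        word = (word << width) | value
    return word


def unpack(word):
    """Split an instruction word back into its fields."""
    values = {}
    for name, width in reversed(FIELDS):
        values[name] = word & ((1 << width) - 1)
        word >>= width
    return values


if __name__ == "__main__":
    instruction = {
        "EN_ROW": 0b00001111, "OPCODE": 2, "SOURCE_OP": 5, "DEST_OP": 1,
        "ADDR_S1": 3, "ADDR_S2": 7, "ADDR_D": 9, "FUNC": 4,
    }
    word = pack(instruction)
    print(f"{word:#010x}")
    print(unpack(word) == instruction)
```

Keeping the layout in a single `FIELDS` table means that changing a width or reordering fields only touches one place, which is convenient while the real encoding is unknown.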

4. Performance

- Area occupation: 1.55 mm².

- Maximum clock frequency (fclk): 257.77 MHz.

- Worst-case power: 670.48 mW @fclk = 250 MHz.

5. Benchmarks Mapping

MVM

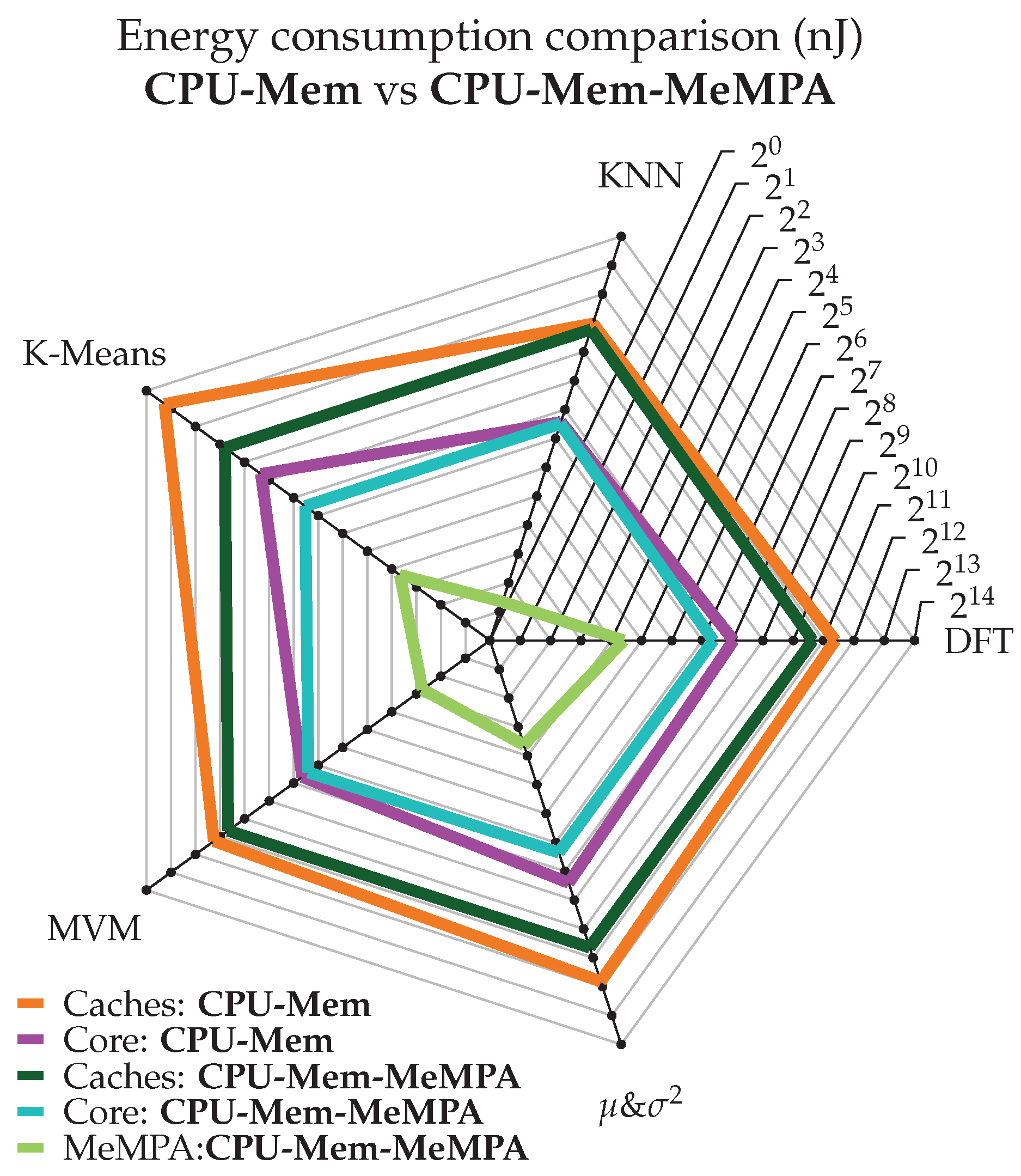

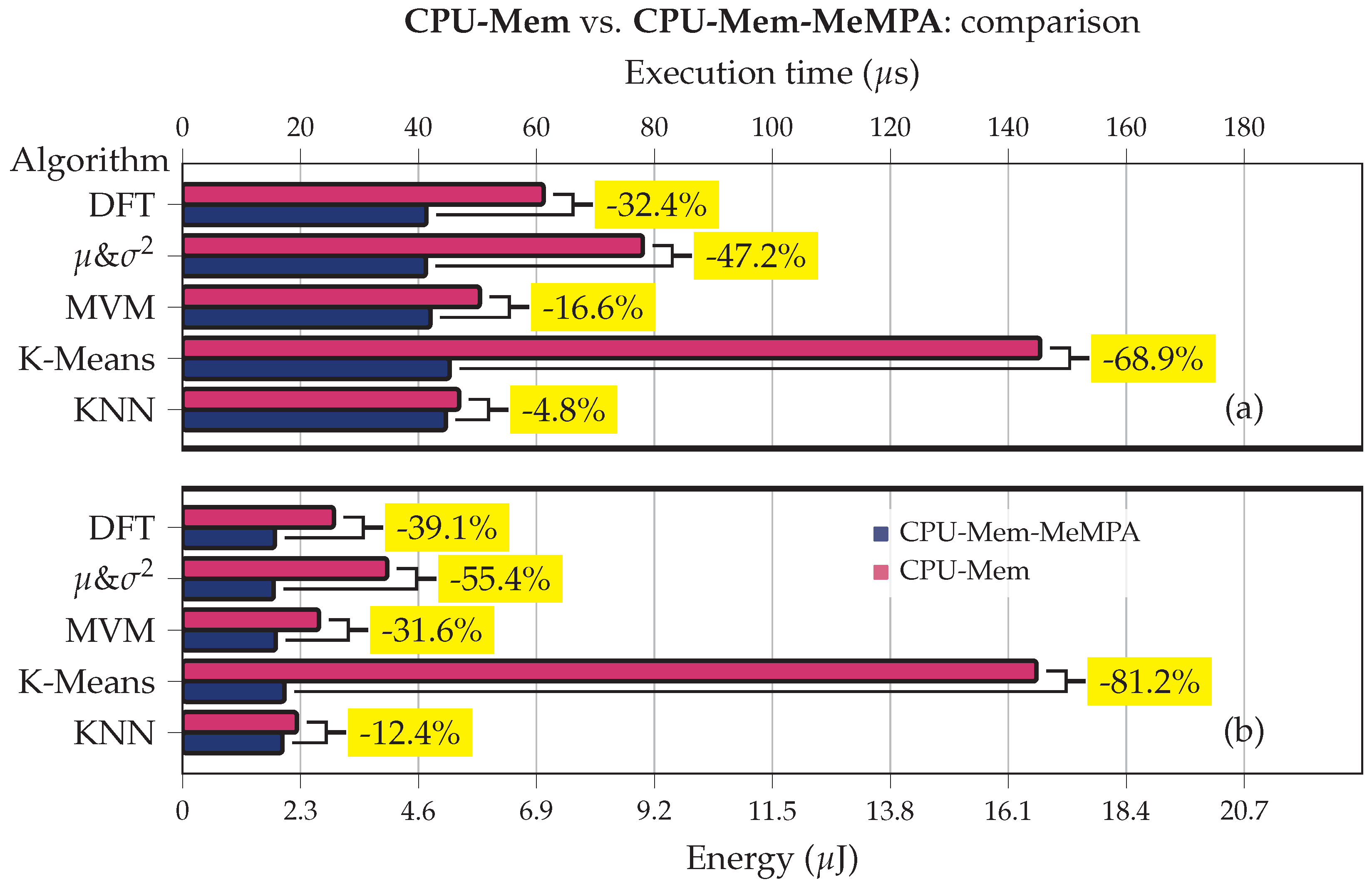

6. Performance Comparisons on Benchmarks

6.1. MeMPA vs. Hybrid-SIMD

6.2. RISC-V with Normal Memory

- Implementation of the algorithms in C. The CPU-Mem solution implements the whole algorithm in the core, while the CPU-Mem-MeMPA solution simply transfers data from the caches into MeMPA sequentially.

- Compilation of the benchmarks with the RISC-V GNU Toolchain and simulation with Gem5. The CPU is an in-order model (TimingSimpleCPU) that runs in system-call emulation mode.

- Analysis of the stats.txt output file. At the end of the Gem5 simulation, an output file is generated containing statistics such as the number of memory accesses for each cache and the total number of executed instructions.

- Memory energy consumption estimation with CACTI by HP [24]. CACTI is a tool that models caches very precisely. It outputs parameters such as the energy per access, starting from some essential memory characteristics (e.g., the size, the memory type, the associativity, and the technology node). The memory energy consumption is obtained by multiplying the energy per access of each memory by the total number of accesses to that memory, which is read from stats.txt.
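The last two steps can be sketched in Python as below. This is an illustrative sketch under stated assumptions: the parser expects the usual `name value # description` layout of a statistics file, while the statistic names (`l1d.accesses`, `l2.accesses`) and the energy-per-access figures are made-up placeholders rather than values from the paper, Gem5, or CACTI.

```python
def parse_stats(text):
    """Parse 'name value # description' lines into a {name: value} dict.

    Lines that do not carry a numeric value (banners, blank lines) are skipped.
    """
    stats = {}
    for line in text.splitlines():
        body = line.split("#", 1)[0].split()
        if len(body) < 2:
            continue
        try:
            stats[body[0]] = float(body[1])
        except ValueError:
            continue
    return stats


def memory_energy_nj(accesses, energy_per_access_nj):
    """Total energy: sum over memories of (energy per access) x (accesses)."""
    return sum(
        accesses[memory] * energy
        for memory, energy in energy_per_access_nj.items()
    )


if __name__ == "__main__":
    # HYPOTHETICAL statistic names and values, for illustration only.
    sample = """
---------- Begin Simulation Statistics ----------
l1d.accesses    1000    # number of L1 data cache accesses
l2.accesses      200    # number of L2 cache accesses
"""
    stats = parse_stats(sample)
    accesses = {"l1d": stats["l1d.accesses"], "l2": stats["l2.accesses"]}
    # HYPOTHETICAL energy per access in nJ, standing in for CACTI outputs.
    energy_per_access_nj = {"l1d": 0.5, "l2": 2.0}
    print(memory_energy_nj(accesses, energy_per_access_nj), "nJ")
```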

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Hennessy, J.L.; Patterson, D.A. Computer Architecture: A Quantitative Approach; Elsevier: Amsterdam, The Netherlands, 2017.

2. Coluccio, A.; Casale, U.; Guastamacchia, A.; Turvani, G.; Vacca, M.; Roch, M.R.; Zamboni, M.; Graziano, M. Hybrid-SIMD: A Modular and Reconfigurable approach to Beyond von Neumann Computing. IEEE Trans. Comput. 2021, 71, 2287–2299.

3. Akyel, K.C.; Charles, H.P.; Mottin, J.; Giraud, B.; Suraci, G.; Thuries, S.; Noel, J.P. DRC 2: Dynamically Reconfigurable Computing Circuit based on memory architecture. In Proceedings of the 2016 IEEE International Conference on Rebooting Computing (ICRC), San Diego, CA, USA, 17–19 October 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–8.

4. Lin, Z.; Zhan, H.; Li, X.; Peng, C.; Lu, W.; Wu, X.; Chen, J. In-Memory Computing with Double Word Lines and Three Read Ports for Four Operands. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2020, 28, 1316–1320.

5. Ali, M.F.; Jaiswal, A.; Roy, K. In-Memory Low-Cost Bit-Serial Addition Using Commodity DRAM Technology. IEEE Trans. Circuits Syst. I Regul. Pap. 2019, 67, 155–165.

6. Seshadri, V.; Lee, D.; Mullins, T.; Hassan, H.; Boroumand, A.; Kim, J.; Kozuch, M.A.; Mutlu, O.; Gibbons, P.B.; Mowry, T.C. Ambit: In-memory accelerator for bulk bitwise operations using commodity DRAM technology. In Proceedings of the 2017 50th Annual IEEE/ACM International Symposium on Microarchitecture (MICRO), Cambridge, MA, USA, 14–18 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 273–287.

7. Jaiswal, A.; Chakraborty, I.; Agrawal, A.; Roy, K. 8T SRAM cell as a multibit dot-product engine for beyond von Neumann computing. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2019, 27, 2556–2567.

8. Wang, H.; Yan, X. Overview of resistive random access memory (RRAM): Materials, filament mechanisms, performance optimization, and prospects. Phys. Status Solidi (RRL)-Rapid Res. Lett. 2019, 13, 1900073.

9. Kvatinsky, S.; Belousov, D.; Liman, S.; Satat, G.; Wald, N.; Friedman, E.G.; Kolodny, A.; Weiser, U.C. MAGIC—Memristor-aided logic. IEEE Trans. Circuits Syst. II Express Briefs 2014, 61, 895–899.

10. Durlam, M.; Naji, P.; DeHerrera, M.; Tehrani, S.; Kerszykowski, G.; Kyler, K. Nonvolatile RAM based on magnetic tunnel junction elements. In Proceedings of the 2000 IEEE International Solid-State Circuits Conference. Digest of Technical Papers (Cat. No. 00CH37056), San Francisco, CA, USA, 9 February 2000; IEEE: Piscataway, NJ, USA, 2000; pp. 130–131.

11. Rakin, A.S.; Angizi, S.; He, Z.; Fan, D. Pim-tgan: A processing-in-memory accelerator for ternary generative adversarial networks. In Proceedings of the 2018 IEEE 36th International Conference on Computer Design (ICCD), Orlando, FL, USA, 7–10 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 266–273.

12. Sebastian, A.; Le Gallo, M.; Khaddam-Aljameh, R.; Eleftheriou, E. Memory devices and applications for in-memory computing. Nat. Nanotechnol. 2020, 15, 529–544.

13. Giannopoulos, I.; Sebastian, A.; Le Gallo, M.; Jonnalagadda, V.; Sousa, M.; Boon, M.; Eleftheriou, E. 8-bit precision in-memory multiplication with projected phase-change memory. In Proceedings of the 2018 IEEE International Electron Devices Meeting (IEDM), San Francisco, CA, USA, 1–5 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 27.7.1–27.7.4.

14. Akin, B.; Franchetti, F.; Hoe, J.C. Data reorganization in memory using 3D-stacked DRAM. ACM SIGARCH Comput. Archit. News 2015, 43, 131–143.

15. Jeddeloh, J.; Keeth, B. Hybrid memory cube new DRAM architecture increases density and performance. In Proceedings of the 2012 Symposium on VLSI Technology (VLSIT), Honolulu, HI, USA, 12–14 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 87–88.

16. Pan, B.; Wang, G.; Zhang, H.; Kang, W.; Zhao, W. A Mini Tutorial of Processing in Memory: From Principles, Devices to Prototypes. IEEE Trans. Circuits Syst. II Express Briefs 2022, 69, 3044–3050.

17. Mu, J.; Kim, H.; Kim, B. SRAM-Based In-Memory Computing Macro Featuring Voltage-Mode Accumulator and Row-by-Row ADC for Processing Neural Networks. IEEE Trans. Circuits Syst. I Regul. Pap. 2022, 69, 2412–2422.

18. Morad, A.; Yavits, L.; Ginosar, R. GP-SIMD processing-in-memory. ACM Trans. Archit. Code Optim. 2015, 11, 1–26.

19. Kuon, I.; Tessier, R.; Rose, J. FPGA Architecture: Survey and Challenges; Now Publishers Inc.: Delft, The Netherlands, 2008.

20. Vassiliadis, S.; Soudris, D. Fine-and Coarse-Grain Reconfigurable Computing; Springer: Dordrecht, The Netherlands, 2007; Volume 16.

21. Woo, S.C.; Ohara, M.; Torrie, E.; Singh, J.P.; Gupta, A. The SPLASH-2 programs: Characterization and methodological considerations. ACM SIGARCH Comput. Archit. News 1995, 23, 24–36.

22. Riscv-Collab. RISCV-Collab/RISCV-GNU-Toolchain: GNU Toolchain for RISC-V, Including GCC. Available online: https://github.com/riscv-collab/riscv-gnu-toolchain (accessed on 19 February 2024).

23. Binkert, N.; Beckmann, B.; Black, G.; Reinhardt, S.K.; Saidi, A.; Basu, A.; Hestness, J.; Hower, D.R.; Krishna, T.; Sardashti, S.; et al. The gem5 simulator. ACM SIGARCH Comput. Archit. News 2011, 39, 1–7.

24. Muralimanohar, N.; Balasubramonian, R.; Jouppi, N.P. CACTI 6.0: A tool to model large caches. HP Lab. 2009, 27, 28.

25. Gautschi, M.; Schiavone, P.D.; Traber, A.; Loi, I.; Pullini, A.; Rossi, D.; Flamand, E.; Gürkaynak, F.K.; Benini, L. Near-Threshold RISC-V Core With DSP Extensions for Scalable IoT Endpoint Devices. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2017, 25, 2700–2713.

| Benchmark | Data | Algorithm | Parameter | Power | |

|---|---|---|---|---|---|

| Initialization | Execution | [mW] | |||

| # Clock Cycles | # Clock Cycles | @4ns | |||

| K-NN | of N samples | 7 | 72.95 | ||

| K-means | of K centroids, of N samples: | + | 74.48 | ||

| , assign each to the nearest centroid | |||||

| MVM | 62.64 | ||||

| μ& | , | N | 65.77 | ||

| DFT | 94.44 |

| Algorithm | Memory Accesses (L1&L2): CPU-Mem | Memory Accesses (L1&L2): CPU-Mem-MeMPA | Reduction (%) |
|---|---|---|---|
| KNN | 19,799 | 16,702 | 15.6 |
| K-Means | 103,362 | 16,946 | 83.6 |
| MVM | 24,153 | 15,479 | 35.9 |
| & | 36,606 | 15,090 | 58.8 |
| DFT | 26,599 | 15,133 | 43.1 |
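The Reduction (%) column of the memory-access table above is consistent with the relative difference between the two access counts, (CPU-Mem − CPU-Mem-MeMPA) / CPU-Mem × 100. The short Python check below recomputes it from the tabulated values; the formula is inferred from the numbers rather than stated in the text, and the row labels simply mirror the table.

```python
# (CPU-Mem accesses, CPU-Mem-MeMPA accesses, reported reduction in %),
# copied from the table; row labels mirror the table as printed.
ACCESSES = {
    "KNN": (19_799, 16_702, 15.6),
    "K-Means": (103_362, 16_946, 83.6),
    "MVM": (24_153, 15_479, 35.9),
    "&": (36_606, 15_090, 58.8),
    "DFT": (26_599, 15_133, 43.1),
}


def reduction_percent(baseline, with_mempa):
    """Relative reduction in memory accesses with respect to the baseline."""
    return 100.0 * (baseline - with_mempa) / baseline


if __name__ == "__main__":
    for name, (cpu_mem, cpu_mem_mempa, reported) in ACCESSES.items():
        computed = round(reduction_percent(cpu_mem, cpu_mem_mempa), 1)
        print(f"{name}: computed {computed}%, reported {reported}%")
```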

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Guastamacchia, A.; Coluccio, A.; Riente, F.; Turvani, G.; Graziano, M.; Zamboni, M.; Vacca, M. MeMPA: A Memory Mapped M-SIMD Co-Processor to Cope with the Memory Wall Issue. Electronics 2024, 13, 854. https://doi.org/10.3390/electronics13050854
