1. Introduction

Approximately 3% of colorectal cancers (CRC) arise in the context of Lynch syndrome (LS), where the patient has a germline mutation in a DNA mismatch repair (MMR) gene [1]. Historically, CRC patients were tested for LS if they were at high risk according to clinical criteria, e.g., aged under 50 years or with a strong family history. Several clinicopathologic criteria (e.g., Amsterdam criteria, revised Bethesda guidelines) were used to identify individuals at risk for Lynch syndrome or eligible for tumor-based microsatellite instability (MSI) testing [2]. However, a large proportion of LS patients were missed by this strategy [3]. Current diagnostic guidance recommends that all patients with newly diagnosed CRC be screened for LS. Universal tumor-based screening for Lynch syndrome, with MSI testing by PCR or detection of defective MMR (dMMR) by immunohistochemistry (IHC) in all CRCs regardless of age, has greater sensitivity for identifying Lynch syndrome than other strategies [1]. The pathway includes testing tumour tissue for dMMR by either MSI testing or IHC for the MMR proteins MLH1, PMS2, MSH2 and MSH6. Tumours showing MSI or MLH1 loss should subsequently undergo BRAF mutation testing, followed by MLH1 promoter methylation analysis in the absence of a BRAF mutation. Patients with tumours showing MSH2, MSH6 or isolated PMS2 loss, or MLH1 loss/MSI with no evidence of BRAF mutation/MLH1 promoter hypermethylation, are referred for germline testing if clinically appropriate.

MSI is not specific for LS, and approximately 15 percent of all sporadic CRCs and 5 to 10 percent of metastatic CRCs demonstrate MSI due to hypermethylation of MLH1 [3,4,5]. Sporadic microsatellite instability-high (MSI-H) CRCs typically develop through a methylation pathway called CpG island methylator phenotype (CIMP), which is characterized by aberrant patterns of DNA methylation and frequently by mutations in the BRAF gene. These cancers develop somatic promoter methylation of MLH1, leading to loss of MLH1 function and resultant MSI. The prevalence of loss of MLH1 expression in CRC increases markedly with aging and this trend is particularly evident in women [6].

While guidelines set forth by multiple professional societies recommend universal testing for dMMR/MSI [7], these methods require additional resources and are not available at all medical facilities, so many CRC patients are not currently tested [8].

Over the last two decades, several histology-based prediction models relying on hand-crafted clinicopathologic features (such as age < 50, female sex, right-sided location, size >= 60 mm, BRAF mutation, tumor-infiltrating lymphocytes (TILs), a peritumoral lymphocytic reaction, mucinous morphology and increased stromal plasma cells) have reported encouraging performance, but this has not been sufficient to supersede universal testing for MSI/dMMR [9]. Measuring the variables for MSI prediction requires significant effort and expertise from pathologists, and inter-rater differences may affect the perceived reliability of histology-based scoring systems [9]. However, this work is fundamental to the premise that MSI can be predicted from histology, which was recently proposed as a task for deep learning from digital pathology [10,11].

Research on deep learning methods to predict MSI directly from hematoxylin and eosin (H&E) stained slides of CRC has proliferated in recent years [10,11,12,13,14,15,16,17], with reported accuracy improving rapidly in the most recent works [14,15].

If successful, this approach could have significant benefits, including reducing cost and resource utilization and increasing the proportion of CRC patients who are tested for MSI. A further potential benefit is an improved capability to detect MSI compared with current methods alone. Some tumors are either dMMR or MSI-H but not both, so testing tumors with only immunohistochemistry (IHC) or polymerase chain reaction (PCR) will falsely exclude some patients from immunotherapy [18]. A system trained on both techniques could overcome this limitation, obviating co-testing with both MMR IHC and MSI PCR as a screening strategy for evaluating eligibility for immunotherapy.

Among current limitations, the systems developed so far cannot distinguish between somatic and germline etiology of MSI, so confirmatory testing is required. Another important limitation is generalizability due to batch effects: while those systems have shown excellent performance on well-curated cohorts similar to the training data, their performance is not robust to differing patient and tissue characteristics. This limitation is evident from the performance deterioration when systems trained on a single data source are tested on external datasets [14]. It can be mitigated by training on larger multi-institutional datasets, as shown by [15], which estimated 5000 to be the optimal number of patients needed for this specific task. Nonetheless, compiling such large-scale international datasets is costly, unfeasible in most cases, and does not eliminate batch effects. Regardless of the dataset size and number of contributing sites, the propensity of digital histology models to overfit to site-level characteristics is incompletely characterized and is infrequently accounted for in internal validation of deep learning models [19]. For example, assessments of stain normalization and augmentation techniques have focused on the performance of models in validation sets, rather than on true elimination of batch effects [15,20]. In addition to staining techniques, batch effects have multiple other origins, such as slide digitization, variations due to scanner calibration, and the choice of resolution and magnification. Batch effects in training, validation and testing must be accounted for to ensure equitable application of deep learning (DL). Batch effects lead to overoptimistic estimates of model performance, and methods that not only mitigate but directly abrogate this bias are needed [19].

Herein, we present a novel approach in digital pathology to eliminate batch effects at the level of the deep learning architecture, following the methodology described in [21], where, by means of an adversarial training and bias distillation regime, the model avoids learning undesirable characteristics of datasets such as the hospital or other protected variables. Adversarial training has previously been explored to improve the generalizability of predictive models of MSI status to tumor types not observed in training [10]; nonetheless, this technique has not yet been applied to removing multiple batch effects in digital pathology. We extend the methodology described in [21] by systematically assessing and quantifying the spurious associations of protected variables (biases) on the network and then incorporating a multiple bias-ablation architecture in the model.

The remainder of the paper is organized as follows. Section 2 describes the methodology employed, including the study population, the image preprocessing module, partitioning methods and deep learning architecture, the identification of biases, the bias-ablation system and training regime. Section 3 first describes the results of the tissue classifier module, the image dataset obtained after preprocessing, the results of the bias identification, the results of the MSI-status classifier module at image level with the demonstration of batch effect distillation, and the performance results at patient level, analysing the impact of the tissue types and image magnifications included, as well as the explainability of predictions. Finally, Section 4 addresses the discussion, conclusions and future work.

2. Material and Methods

2.1. Study Population, Data Collection and Ground Truth Ascertainment

The study population initially consisted of the H&E images of 57 tissue microarrays (TMAs) that included cylindrical tissue samples of 1 mm diameter each (spots), in duplicate, from all patients prospectively collected in the EPICOLON project. TMAs were constructed from tumor paraffin blocks from each hospital. For the present project, the paraffin blocks from each hospital were sectioned and the same hematoxylin and eosin staining was performed on all the cases included. In this technique, each TMA consists of multiple spots placed on one microscope glass slide. The same glass slide vendor was used for all the TMAs. The EPICOLON project was a population-based, observational cohort study which included 1705 patients with CRC from two Spanish nationwide multi-center studies: EPICOLON I and EPICOLON II. EPICOLON I included consecutive patients with a new diagnosis of CRC between November 2000 and October 2001, with the main goal of estimating the incidence of LS in Spain [22]. EPICOLON II also included consecutive patients with newly diagnosed CRC, between March 2006 and December 2007 and from 2009 to 2013, with the aim of investigating different aspects related to the diagnosis of hereditary CRC [23]. For both cohorts, the inclusion criteria were a de novo histologically confirmed diagnosis of colorectal adenocarcinoma and attendance at one of 25 teaching and community hospitals across Spain during the recruiting periods covered by EPICOLON I and EPICOLON II, as previously detailed. Exclusion criteria for both studies were CRC that developed in the context of familial adenomatous polyposis or inflammatory bowel disease, and patient or family refusal to participate in the study. It was assumed that the EPICOLON population was representative of the Spanish population, due to the large number of participating centres (most of them referral centres of each area), their homogeneous distribution throughout the country, and the lack of ethnic differences among regions [22]. Both studies were approved by the institutional review boards of the participating hospitals. The overall MSI frequency in the EPICOLON project was 7.4%.

The population was further expanded with 283 additional patients retrospectively obtained from the Hospital Universitario de Alicante, Spain (HGUA) from 2017 onwards, which, once preprocessed, added 66 MSI-H and 177 microsatellite stable (MSS) cases to the final study population, as shown in Figure 1. The demographic and main clinical characteristics of the final study sample after preprocessing are summarized in Table 1. The corresponding H&E images were provided in 15 TMAs that included 1 mm spots in duplicate for each patient.

For the task of MSI prediction, each patient and the corresponding spots were labeled as MSI-H vs. MSS. MSI-H was defined as tumour tissue testing positive for defective MMR (dMMR) by either microsatellite instability (MSI) testing or immunohistochemistry (IHC) for the MMR proteins MLH1, PMS2, MSH2 or MSH6. Tumours showing MSI or MLH1 loss underwent BRAF mutation testing, followed by MLH1 promoter methylation analysis in the absence of a BRAF mutation. Briefly, patients with tumours showing MSH2, MSH6 or isolated PMS2 loss, or MLH1 loss/MSI with no evidence of BRAF mutation (regardless of the presence or absence of MLH1 promoter hypermethylation), were labeled as MSI-H and all others as MSS.
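The labeling rule above can be written as a small decision function. This is only an illustrative sketch: the `TumourTests` record, its field names and the `msi_label` function are hypothetical and not part of the study code, and a tumour with no recorded BRAF result is assumed to count as "no evidence of BRAF mutation".

```python
from dataclasses import dataclass


@dataclass
class TumourTests:
    """Hypothetical per-patient record of tumour test results."""
    msi_by_pcr: bool = False                # MSI detected by PCR
    lost_proteins: frozenset = frozenset()  # subset of {"MLH1", "PMS2", "MSH2", "MSH6"}
    braf_mutated: bool = False              # assumption: untested counts as no evidence


def msi_label(t: TumourTests) -> str:
    """Return "MSI-H" or "MSS" following the rule described in the text."""
    lost = set(t.lost_proteins)
    # MSH2 loss, MSH6 loss, or isolated PMS2 loss
    if "MSH2" in lost or "MSH6" in lost or lost == {"PMS2"}:
        return "MSI-H"
    # MLH1 loss or MSI with no evidence of BRAF mutation;
    # MLH1 promoter methylation status is deliberately not consulted
    if (t.msi_by_pcr or "MLH1" in lost) and not t.braf_mutated:
        return "MSI-H"
    return "MSS"
```

Note that MLH1 promoter methylation does not appear in the function, mirroring the "regardless of the presence or absence of MLH1 promoter hypermethylation" clause.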

TMAs were scanned with the VENTANA ROCHE iSCAN scanner at magnification × 40 corresponding to a maximal resolution of 0.25 microns per pixel (MPP).

This project was approved by the institutional research committee CEIm PI2019-029 from ISABIAL, and both the images and the associated clinical information were previously anonymized. The data from the EPICOLON project and HGUA are not publicly available, in accordance with the research group and institutional requirements governing human subject privacy protection.

The NCT-CRC-HE-100K and CRC-VAL-HE-7K datasets were used to train a model on the task of tissue class prediction. They consist of 100,000 non-overlapping image patches from hematoxylin & eosin (H&E) stained histological images of human colorectal cancer (CRC) and normal tissue labeled with 9 tissue classes: adipose (ADI), background without tissue (BACK), debris (DEB), lymphocytes (LYM), mucus (MUC), smooth muscle (MUS), normal colon epithelium (NORM), cancer-associated stroma (STR) and colorectal adenocarcinoma epithelium (TUM). All images were 224 × 224 pixels (px) at 0.5 MPP. All images were color-normalized using Macenko’s method [24]. All image tiles for the NCT-CRC-HE-100K and CRC-VAL-HE-7K datasets are available online at https://zenodo.org/record/1214456#.XcNpCpNKjyw (accessed on 25 October 2019).

2.2. Preprocessing Module

The image preprocessing module consisted of a TMA-customized dynamic extractor of 400 × 400 pixel tiles, corresponding to adjacent non-overlapping regions and parameterized at different magnifications (×40, ×20, ×10, ×5, ×0), linked to a DL tissue classifier (described in Section 2.3) that filtered out spots without viable colorectal adenocarcinoma epithelium and, at the same time, selected the regions of interest based on tissue type.
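To make the tiling geometry concrete, the following sketch enumerates adjacent, non-overlapping tile positions for one spot. It assumes that a 400 × 400 pixel tile at a lower magnification covers a proportionally larger footprint of the ×40 scan, and that incomplete tiles at the right and bottom edges are dropped. Both are assumptions for illustration; the function name `tile_grid` and its parameters are hypothetical.

```python
def tile_grid(width, height, tile_px=400, base_mag=40, target_mag=20):
    """List (left, top, size) squares, in base-scan pixels, of adjacent
    non-overlapping tiles for a region of the given width and height.

    Each square would later be resampled to tile_px x tile_px pixels.
    """
    # Footprint of one tile measured in pixels of the base (x40) scan
    step = int(round(tile_px * base_mag / target_mag))
    tiles = []
    for top in range(0, height - step + 1, step):
        for left in range(0, width - step + 1, step):
            tiles.append((left, top, step))
    return tiles


# A 1 mm spot scanned at 0.25 microns per pixel spans about 4000 px
for mag in (40, 20, 10, 5):
    print(mag, len(tile_grid(4000, 4000, target_mag=mag)))
```

Under these assumptions a single spot yields many more tiles at ×40 than at ×5, which is one reason a per-patient balancing step is useful downstream.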

2.3. Deep Learning Architecture

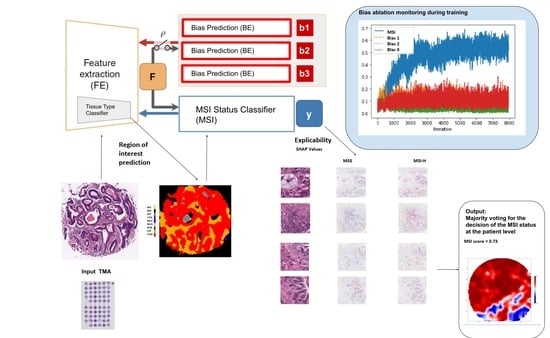

The DL architecture (see Figure 2) consisted of an end-to-end deep learning system linking three types of modules: (1) a classifier of 9 types of tissue at tile level: adipose (ADI), background without tissue (BACK), debris (DEB), lymphocytes (LYM), mucus (MUC), smooth muscle (MUS), normal colon epithelium (NORM), cancer-associated stroma (STR) and colorectal adenocarcinoma epithelium (TUM); (2) the MSI status classifier; and (3) the batch effect learner modules, which comprise as many learners or heads as required to account for multiple biases.

The tissue classifier had a ResNet34 pre-trained on ImageNet as its convolutional backbone, followed by two hidden layers of dimension 512 with ReLU as the activation function and 9 output features. This module was trained with the NCT-CRC-HE-100K and CRC-VAL-HE-7K datasets on the final task of learning the 9 types of tissue. Once trained, it was used both to select the regions of interest (ROIs) based on tissue type in the preprocessing module and, with its head removed, as the feature extractor backbone in the end-to-end system, connected to the MSI status classifier and batch effect learner modules. The feature extractor yielded 512 intermediate features.

Both the MSI status classifier and all batch effect learner modules have two hidden layers of dimension 512 with ReLU as the activation function and 2 output features.

The DL system without the batch effect learner modules is referred to herein as the baseline model and, when including them, as the bias-ablated model.

All code was written in Python. Machine and deep learning methods were implemented using FastAI [25] and PyTorch. QuPath v0.2.3 [26] was used for annotation purposes.

2.4. Bias Identification

To systematically identify and quantify the effect of potential biases interfering with the MSI prediction task, the baseline model, which as described in Section 2.3 included only the feature extractor backbone connected to the MSI status classifier module, was trained on the study sample using 5-fold cross-validation for 3 epochs. The squared distance correlation [27] was computed at each batch iteration between the features extracted by the feature extractor and three putative biases (study project, patient, and glass), followed by backward selection to uncover hidden interactions between biases. Distance correlation is a measure of dependence between random vectors analogous to product-moment covariance and correlation but, unlike the classical definition of correlation, distance correlation is zero only if the random vectors are statistically independent [27].
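For readers unfamiliar with the statistic, the sketch below computes the sample squared distance correlation between two sets of vectors using double-centered pairwise Euclidean distance matrices. It is a plain-Python illustration rather than the study's implementation: the function names are hypothetical, it uses the simple (biased) sample estimator, and a categorical bias such as the project is assumed to be passed as numeric vectors (for example one-hot encoded).

```python
import math


def _centered_distances(points):
    """Pairwise Euclidean distance matrix, double-centered."""
    n = len(points)
    d = [[math.dist(p, q) for q in points] for p in points]
    row_mean = [sum(r) / n for r in d]  # equals column means (d is symmetric)
    grand_mean = sum(row_mean) / n
    return [[d[i][j] - row_mean[i] - row_mean[j] + grand_mean
             for j in range(n)] for i in range(n)]


def squared_distance_correlation(xs, ys):
    """Sample squared distance correlation between paired vectors xs and ys."""
    n = len(xs)
    a, b = _centered_distances(xs), _centered_distances(ys)

    def mean_prod(u, v):
        return sum(u[i][j] * v[i][j] for i in range(n) for j in range(n)) / n ** 2

    dcov2 = mean_prod(a, b)                                # squared distance covariance
    scale = math.sqrt(mean_prod(a, a) * mean_prod(b, b))   # product of distance variances
    return dcov2 / scale if scale > 0 else 0.0


features = [(0.0,), (1.0,), (2.0,), (3.0,)]
bias = [(0.0,), (2.0,), (4.0,), (6.0,)]
print(squared_distance_correlation(features, bias))  # close to 1: fully dependent
```

In a monitoring setting, `xs` would hold the extracted feature vectors of one batch and `ys` the encoded bias values of the same tiles.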

The three biases considered for testing were the study project, the patient and the TMA glass, based on the following reasoning. First, MSI-H patients were significantly over-represented in the subset of cases obtained from a single hospital (34% in HGUA vs. 7.4% in EPICOLON), and samples were obtained in different years in each project (2017 onwards in HGUA vs. prior to 2013 in EPICOLON). In this study the contributing project therefore becomes a potential task bias; i.e., prediction of MSI status may depend on the project instead of on the image biomarkers of MSI status. Second, tiles from the same patient commonly share observable visual patterns originating from tissue characteristics, slicing direction and staining differences in laboratory procedures. As the model is designed to be trained at tile level, even if no patient appears in both the training and validation sets, the model could learn those patterns as shortcuts for the MSI task, making them a potential cause of overfitting. Overfitting was indeed verified when the baseline model was trained for more than 3 epochs: the training loss kept decreasing towards zero with no improvement in the validation loss. Third, each TMA aggregates sample spots from tens of patients, which are placed between an underlying glass slide and a cover glass. The unique characteristics associated with the digitization of each TMA glass may be statistically associated with MSI status if the distribution of classes across TMAs is not uniform.

2.5. Bias Ablation

Once biases were identified and quantitatively characterized, the ablation of each bias was implemented through an adversarial training and bias distillation regime following the approach described in [21] (see Figure 2). Unlike that work, however, the ablation was not limited to a single bias but extended to all the biases identified.

Namely, given the input image X, the feature extractor module extracts a feature vector F, on top of which the MSI classifier module predicts the class label y. To guarantee that F is not biased by the multiple protected variables, each corresponding bias learner module back-propagates its loss to the feature extractor adversarially, one bias after another. This results in features that minimize the classification loss of the MSI classifier module while maintaining the least statistical dependence on each of the biases.

Each of the bias learner modules is trained on a y-conditioned cohort, i.e., samples of the training data whose y values (MSI labels) are confined to a specific group (see Figure 2). Consequently, the features learned by the system are predictive of y while being conditionally independent of the batch effect originating from each of the biases. In the implemented architecture, the adversarial training of each bias learner module was performed only on the MSS group, so that the batch effects are modeled correctly on those samples while the system learns to separate MSI-H from MSS samples.

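During the end-to-end system training, a min-max game is defined between two networks. The classification loss $L_c$ is defined by a cross-entropy:

$$L_c(X, y) = -\frac{1}{N}\sum_{i=1}^{N}\sum_{k=1}^{M} y_{i,k}\,\log \hat{y}_{i,k}$$

where X and y are the input images and corresponding MSI target labels, respectively, N is the number of training pairs (X, y), M is the number of classes to predict (two, MSI-H vs. MSS) and $\hat{y}$ is the predicted MSI label.

Each batch effect or bias loss $L_b$ is based on the squared Pearson correlation $\mathrm{corr}^2$:

$$L_b(X, b) = -\mathrm{corr}^2(b, \hat{b})$$

where $b$ defines the vector of the bias across all N training inputs and $\hat{b}$ is the corresponding prediction of the bias learner module. The statistical dependence is removed by pursuing a zero correlation between $b$ and $\hat{b}$ through adversarial training.

The overall objective of the end-to-end network is defined as:

$$\min_{\theta_F,\,\theta_C}\;\max_{\theta_1,\dots,\theta_B}\; L_c(X, y) - \sum_{b=1}^{B} L_b(X, b)$$

where $\theta_F$, $\theta_C$ and $\theta_b$ denote the parameters of the feature extractor, the MSI classifier and the bias learner module for bias b, respectively, and B is the number of protected variables or biases b.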

Specifically, in each iteration, we first back-propagate the classification loss to update the feature extractor and the MSI classifier. Second, for each bias, we fix the feature extractor and minimize the bias loss to update the corresponding bias learner module. Finally, we fix the bias learner modules and maximize the bias losses to update the feature extractor, hence distilling all the biases from the learned features. In this study, each bias loss depends on the correlation operation, which is a population-based operation, as opposed to individual-level error metrics such as cross-entropy or MSE losses. Therefore, we calculate the correlations over each training batch as a batch-level operation. In conclusion, the feature extractor learns features that minimize the classification criterion while ‘fooling’ all bias learner modules (i.e., making each incapable of predicting its corresponding bias). Hence, the saddle point for this objective is obtained when the feature extractor parameters minimize the classification loss while maximizing the loss of all the bias learner modules.
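The batch-level nature of the bias loss can be illustrated with a few lines of plain Python. The sketch assumes that the bias loss is the negative squared Pearson correlation between the true bias values and the bias learner's predictions within one batch, which is consistent with the update order described above (the bias learner minimizes it, the feature extractor maximizes it). The function names are hypothetical and no gradient computation is shown.

```python
def squared_pearson(b, b_hat):
    """Squared Pearson correlation between two equal-length sequences."""
    n = len(b)
    mean_b, mean_h = sum(b) / n, sum(b_hat) / n
    cov = sum((x - mean_b) * (y - mean_h) for x, y in zip(b, b_hat))
    var_b = sum((x - mean_b) ** 2 for x in b)
    var_h = sum((y - mean_h) ** 2 for y in b_hat)
    if var_b == 0 or var_h == 0:
        return 0.0  # a constant vector carries no correlation signal
    return cov * cov / (var_b * var_h)


def bias_loss(b, b_hat):
    """Batch-level bias loss: lower means the bias is better predicted."""
    return -squared_pearson(b, b_hat)


# One batch: true bias codes and two hypothetical sets of predictions
batch_bias = [0, 1, 0, 1]
print(bias_loss(batch_bias, [0.1, 0.9, 0.2, 0.8]))  # near -1: bias still recoverable
print(bias_loss(batch_bias, [1, 1, 0, 0]))          # 0 (or -0.0): features carry no bias signal
```

Because the statistic is defined over a set of samples, it cannot be evaluated per tile; a sufficiently large batch is needed for the estimate to be stable.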

2.6. Partitions, Model Training and Metrics

For model training, the dataset was split 80/20 for training and validation, applying 5-fold cross-validation and guaranteeing in each fold that the images of each patient belonged to only one set (either training or validation, but not both). To address imbalance in MSI status as well as in the number of tiles per patient, we applied a composite weighted random sampling for both criteria, resulting in a training set balanced for patient and MSI label simultaneously. Tiles were resized to 224 × 224 pixels and color was normalized following Macenko’s method [24]. In addition to Macenko normalization, experiments were done with and without an additional color normalization using statistics computed from the EPICOLON image dataset, which included 25 different hospitals. Data augmentation at training time consisted of random rotations up to 90°, dihedral flips with probability 0.5, perspective warping of maximum 0.2, and hue variations of maximum 0.15. Of note, training sets included all magnifications so that the network could be trained simultaneously on higher-level tissue architecture patterns as well as on cellular-level features, including nuclear characteristics. The batch size was 512 images.
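A minimal sketch of the two partitioning ideas in this paragraph follows: assigning folds per patient so that no patient's tiles are split across training and validation, and computing per-tile sampling weights that balance both MSI label and patient. The weighting formula is one plausible reading of "composite weighted random sampling" and is an assumption, as are the function names; each patient is assumed to carry a single MSI label.

```python
import random
from collections import Counter


def patient_folds(patient_ids, k=5, seed=0):
    """Assign every tile the fold of its patient (one fold per patient)."""
    patients = sorted(set(patient_ids))
    random.Random(seed).shuffle(patients)
    fold_of = {p: i % k for i, p in enumerate(patients)}
    return [fold_of[p] for p in patient_ids]


def composite_weights(patient_ids, labels):
    """Per-tile weights giving each label equal total mass and, within a
    label, each patient equal mass regardless of its number of tiles."""
    tiles_per_patient = Counter(patient_ids)
    label_of_patient = dict(zip(patient_ids, labels))
    patients_per_label = Counter(label_of_patient.values())
    n_labels = len(patients_per_label)
    return [1.0 / (n_labels * patients_per_label[y] * tiles_per_patient[p])
            for p, y in zip(patient_ids, labels)]


tile_patient = ["a", "a", "a", "b", "c", "c"]
tile_label = ["MSS", "MSS", "MSS", "MSS", "MSI-H", "MSI-H"]
weights = composite_weights(tile_patient, tile_label)
# Draw a training batch with replacement according to the weights
batch = random.Random(0).choices(range(len(weights)), weights=weights, k=4)
```

With k = 5, holding out one fold for validation gives the 80/20 split described above. The same weights could be handed to a weighted sampler in a deep learning framework instead of `random.choices`.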

The statistical dependency between learned features and each of the selected biases was monitored during model training with the squared distance correlation. Principal component analysis (PCA) was used to assess how the spatial representations of the learned features were affected by the protected (bias) variables before and after bias ablation. One-way ANOVA was used to compare the false positive rates and false negative rates of the different tissue types and magnifications on the MSI classification task. Metrics used to assess the performance of the MSI classifier include AUC, balanced accuracy, sensitivity, specificity, positive predictive value, negative predictive value, false positive rate and false negative rate. Metrics dependent on MSI-H prevalence were calculated assuming a prevalence of 15% in the real population.
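Positive and negative predictive values depend on prevalence, so they can be recomputed for an assumed population prevalence from sensitivity and specificity alone using Bayes' rule. The sketch below shows that calculation; the function names are hypothetical and the sensitivity and specificity in the usage line are arbitrary illustrative values, not results of this study.

```python
def predictive_values(sensitivity, specificity, prevalence=0.15):
    """Return (PPV, NPV) for a given prevalence via Bayes' rule."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    true_neg = specificity * (1.0 - prevalence)
    false_neg = (1.0 - sensitivity) * prevalence
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


def balanced_accuracy(sensitivity, specificity):
    """Mean of sensitivity and specificity; independent of prevalence."""
    return (sensitivity + specificity) / 2.0


# Illustrative values only
ppv, npv = predictive_values(sensitivity=0.9, specificity=0.8, prevalence=0.15)
print(round(ppv, 3), round(npv, 3))
```

Sensitivity, specificity and balanced accuracy are unaffected by this adjustment, which is why only the predictive values need the assumed prevalence.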

2.7. Explainable Methods

SHAP (SHapley Additive exPlanations) [28] values were used to provide a means of visually interpreting the topology and morphology of features that influence predictions. The goal of SHAP is to explain the prediction of an instance by computing the contribution of each feature to the prediction. The SHAP explanation method computes Shapley values from coalitional game theory.
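As background on the underlying quantity, the following sketch computes exact Shapley values for a tiny coalitional game by averaging each player's marginal contribution over all orderings. It is a didactic example only: practical SHAP explainers approximate these values for image models, and the toy value function and player names below are made up.

```python
from itertools import permutations


def shapley_values(players, value):
    """Exact Shapley values; value maps a frozenset of players to a number."""
    totals = {p: 0.0 for p in players}
    orders = list(permutations(players))
    for order in orders:
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            # Marginal contribution of p when joining the current coalition
            totals[p] += value(with_p) - value(coalition)
            coalition = with_p
    return {p: total / len(orders) for p, total in totals.items()}


def toy_value(coalition):
    """Made-up game: 'a' and 'b' contribute alone and interact; 'c' is inert."""
    v = 0.0
    if "a" in coalition:
        v += 1.0
    if "b" in coalition:
        v += 2.0
    if "a" in coalition and "b" in coalition:
        v += 3.0
    return v


phi = shapley_values(["a", "b", "c"], toy_value)
print(phi)
```

The values sum to the worth of the full coalition, and the interaction term is split equally between the two players that create it; in the image setting, players correspond to input features and the value function to the model output.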

4. Discussion

We present a system for the prediction of MSI from H&E images using artificial vision techniques that incorporates an end-to-end, TMA-customized image preprocessing module, which tiles samples at multiple magnifications in the regions of interest guided by the automatically detected tissue types, and a multiple bias distiller system integrated with the MSI predictor.

In the present work we find that TMAs have special characteristics, not reported for WSIs, which make them especially challenging for the application of DL methods in digital pathology, emphasizing the relevance of addressing biases.

A systematic study of biases at tile level demonstrated three hidden variables interfering with the model’s learned representations: the project of origin of the samples, the patient’s spot and the TMA glass where each spot was placed. Although it would be preferable to control for these types of biases at the dataset level, this entails obtaining more tissue and/or re-allocating spots in new TMA glasses, which was generally unfeasible. Instead, we reused the TMAs as they were provided for research purposes, given that, first, optimal management of tissue samples that avoids sample waste is always desirable and, second, the presence of spurious or otherwise undesirable associations that are exploited by DL models, rather than being an odd problem affecting only our work, is a general, common and not yet resolved challenge in medical datasets used to train AI systems. Consequently, we decided to dedicate our efforts to systematically studying and addressing the biases at the learning stage. For this purpose, a novel multiple bias rejecting technique was implemented in the deep learning architecture to directly avoid learning the batch effects introduced by protected variables.

The implemented method achieved a significant reduction in the dependence of the learned features on the project bias and patient bias in the overall study population, but did not reduce the glass bias dependence in the HGUA project, where we found that most of the TMAs included samples of only a single target class. We observed that the ablation method is highly effective for mitigating bias in datasets where representative samples exist for all possible pairs of protected variable classes and target classes, even if heavily imbalanced. In addition, the performance in MSI classification improved in terms of AUC and balanced accuracy for the population meeting this condition (EPICOLON cohorts). Conversely, when this condition was not met (as in the case of HGUA for the glass bias, where the target and protected variable had an unequivocal association in the samples), the statistical dependence was not eliminated and the classification performance in the target task still seemed to exploit the bias, maintaining a marked predictive advantage in comparison with the classification results in the EPICOLON cohorts. We observed that the performance of the models was tightly associated with the presence of batch effects, and their presence had a larger impact on performance than the number of patients available for training in each cohort. In essence, as illustrated in Figure 6, even though the number of patients was smaller in the HGUA cohort, the model performance on this cohort was higher than that achieved in the EPICOLON project, which was explained by the model’s exploitation of the TMA-glass bias in the HGUA cohort. When the whole study population was analyzed, the learned features from the bias-ablated model had maximum discriminative power with respect to the task and minimal mean statistical dependence on the biases.

In contrast to other population-based cohorts where MSI prevalence is around 15%, the cohorts in the EPICOLON project had a mismatch repair deficiency in only 7.4% of patients. In EPICOLON I, only 91 patients (7.4%) had a mismatch repair deficiency, with tumors exhibiting either genetic microsatellite instability (n = 83) or loss of protein expression (n = 81) [2]. This difference is attributed to the fact that the EPICOLON project was a population-based study, while CRC cohorts with a 14–15% dMMR prevalence usually correspond to registries with a higher percentage of patients with a family history, such as the Cancer Family Registry [1].

Regarding the classification of MSI status at case level, we observed that performance consistently increased in all experiments when not only tumor epithelium but also the mucinous and lymphocytic infiltrate regions were included. Those regions proved to be nonspecific at image level but increased the sensitivity at patient level, which is a desirable characteristic for screening purposes.

Also, a ×20 magnification achieved the highest specificity, while reducing the magnification down to ×10 contributed to higher sensitivity of the models. This observation suggests that the best approach is to include different magnifications, helping the model to learn both low- and high-level tissue architectural patterns at the same time. Moreover, including all magnifications during training was treated in this project as a data augmentation technique.

The tissue classifier module reached an AUC of 0.98 in the validation set. This module was capable of classifying the different regions of the image: tumor epithelium, stroma, normal epithelium, mucin, muscular fibers, lymphocytic infiltrates, debris, adipose tissue and background. Regarding the final task of MSI status prediction, the AUC at tile level, including all three selected tissues (tumor epithelium, mucin and lymphocytic regions) and all magnifications, was 0.87 ± 0.03 and increased to 0.9 ± 0.03 at patient level.

Limitations of the study are as follows. After applying the image preprocessing pipeline, we observed a reduction in the final available MSI population, altering its original frequency in the EPICOLON project. Specifically, due to a lack of epithelial tumor regions in the spots, up to 9% (n = 159 patients) of the EPICOLON population were excluded from the final analysis, and the largest proportion of excluded patients corresponded to the MSI-H arm, reducing its frequency from the expected 7.4% in EPICOLON to 5.8% (n = 105). This was explained by more intensive molecular tissue testing performed in MSI-H cases in the context of the EPICOLON research project, which exhausted a subset of spots and left no viable regions of epithelial tumor. Consequently, the final available MSI-H population was no longer considered a representative sample of the original EPICOLON MSI-H population. To overcome this limitation, the dataset was further enriched with cases from a single hospital, as shown in Figure 1. On the one hand, this enrichment increased the exposure of the MSI classifier to additional unselected MSI-H cases and, on the other hand, the bias rejecting technique implemented successfully addressed the batch effect introduced by the project of origin. Finally, result metrics that are affected by disease prevalence were calculated considering the MSI-H prevalence in the real population (15%), so as to approximate performance in the clinical setting.

As future work, once the remaining TMA-glass bias has been addressed at the data level, testing the generalizability of the system in an independent and prospective test will be necessary. In addition, multimodal variables including age, stage, location and Bethesda criteria could be included in the model to explore their potential to improve its predictive capacity.