1. Introduction

Currently, bionic hands are widely applied in many fields [1], such as grasping tasks [2,3], industrial applications [4], human–robot interaction [5], and delicate operations in dangerous situations [6]. As multi-degree-of-freedom (DoF) end-effectors, bionic hands offer excellent flexibility and versatility. Moreover, their anthropomorphic structure enables bionic hands to perform complicated, dangerous, and otherwise inaccessible tasks in a human-inspired manner. Hence, bionic hands have gained widespread attention in a variety of practical applications.

Bionic hands can be divided into fully actuated and underactuated ones according to their mechanical structure [7]. Fully actuated bionic hands benefit from high dexterity, since each degree of freedom can be controlled independently to perform more complex operations [8]. The redundant DoFs allow the robot to address optimisation and hierarchical control problems. However, full actuation also leads to bulkier and more complex mechatronic systems and control algorithms. Underactuated hands, instead, benefit from a lightweight, portable design and a simple driver structure, which is easier to build and deploy [9]. Furthermore, underactuation gives the mechanical structure better compliance. However, because of the underactuated and nonlinear motion characteristics, developing an accurate mathematical model is difficult [10]. Learning-based methods have also been investigated to program the motion of bionic hands [11] and robots [12]. However, for tasks in dynamic or unstructured environments, pre-defined bionic hand motions are sometimes not suitable or robust enough. Although underactuated bionic hands have numerous advantages, controlling them in a more natural and precise manner remains a challenging issue [13].

Humans are capable of dealing with complicated tasks, even in unstructured or dynamic environments. By incorporating human intelligence, teleoperation is considered a promising solution for robot control [14,15]. For example, a novel data glove that was stable, compact, and portable was designed and employed for the teleoperation control of a robotic hand–arm system [16]. Specifically, in the teleoperation of an underactuated bionic hand, a real-time mapping from the human hand to the bionic hand can be realised by sensing the human hand posture and sending it to the bionic hand [17]. First, human hand motion must be detected in real time, and many solutions, such as gloves, exoskeletons, bending sensors, and vision-tracking-based methods, have been investigated [18,19,20].

Among human-hand-tracking methods, wearable mechanical sensor systems (fabric gloves, exoskeletons) and vision-tracking-based methods (Kinect camera, Leap Motion, Vicon tracking system, etc.) are the most widely used. In wearable solutions, flexion sensors and potentiometers are most frequently used to detect the bending angles of human hand joints, which are then mapped to the bionic hand. These methods outperform vision-tracking-based methods in terms of stability and robustness and, in particular, do not suffer from line-of-sight occlusion [21]. A typical wearable-based teleoperation framework was implemented by NASA, which used two CyberGloves to teleoperate a robot performing maintenance tasks such as threading nuts onto bolts and tying knots [22]. In addition, a wearable-glove-based method has been introduced into virtual interaction scenarios for 3D modelling and task training [23]. Despite these advantages, the cumbersome donning process, limited size adaptability, and poor comfort still hinder the wider application of wearable sensors. Moreover, unergonomic structural designs reduce intuitiveness and transparency during operation.

Compared with glove- or exoskeleton-based methods, vision-tracking-based methods are much more natural and can be personalised by measuring the joint positions of the human hand. Vision-based tracking systems are primarily adopted to extract important human hand features and send commands to the robotic hand after motion mapping. In [24], Artal proposed a real-time gesture recognition technique based on a Leap Motion camera to control a 5-DoF bionic hand. Similarly, in Madina's study [25], Leap Motion was used as an input device for hand rehabilitation training in a virtual training scenario. In addition, camera-based and infrared tracking systems have also been used to realise hand posture recognition [26,27]. Nonetheless, the tracking performance of vision-tracking-based methods depends heavily on environmental factors, such as uneven illumination, cluttered backgrounds, and objects with a colour similar to that of the hand. In addition, vision-tracking-based methods have a limited workspace and usually suffer from self-occlusion. Although the characteristics of the different control methods are evident, there is still no systematic study comparing the performance of both in practical applications that could guide the selection of an appropriate method.

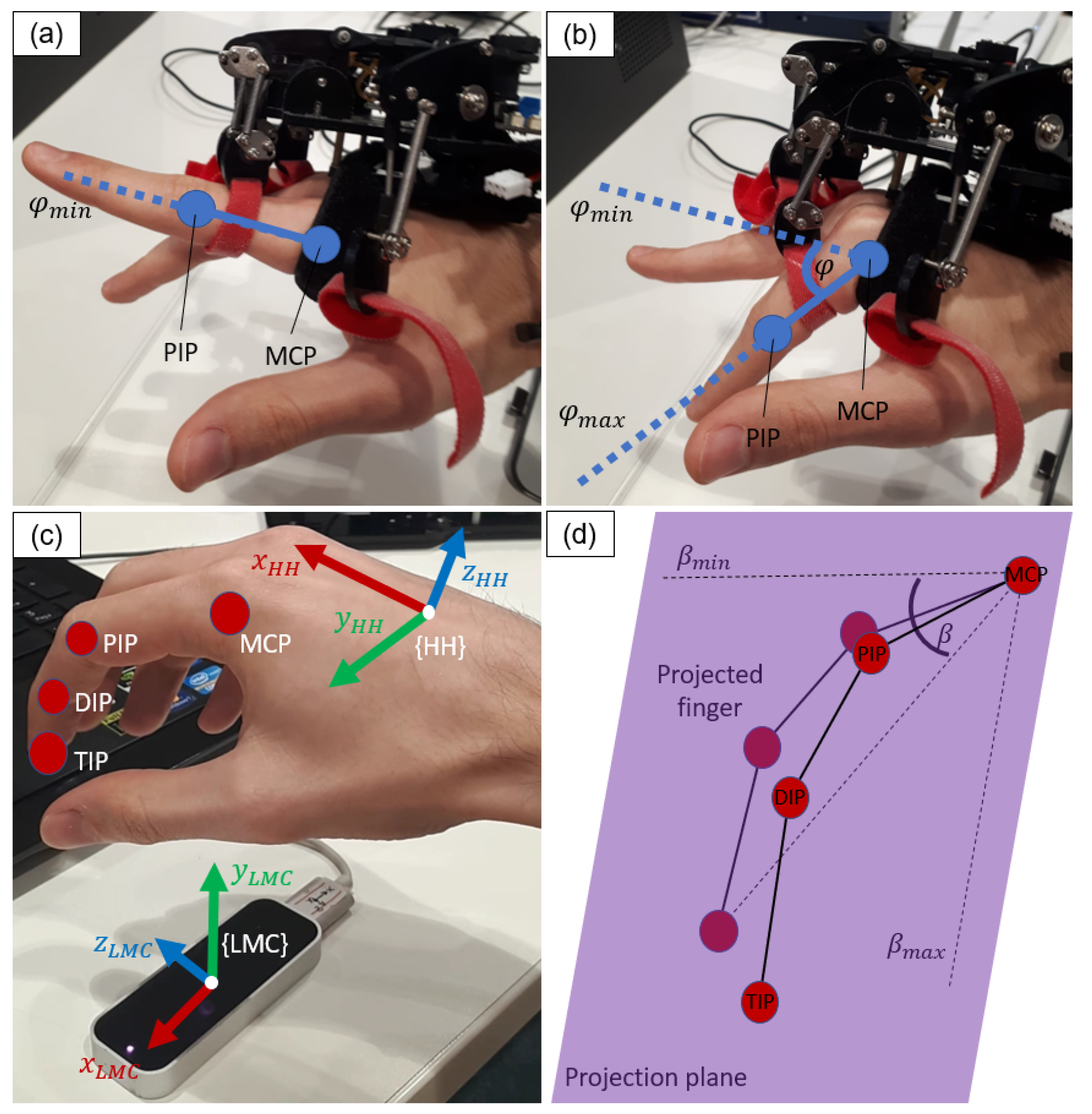

The main aim of this paper is to achieve intuitive and natural teleoperation control of an underactuated bionic hand. To this end, two control frameworks are developed, based on a wearable glove and on a vision-tracking system, respectively. The main contributions are summarised as follows: (1) Calibration results obtained with an external ground-truth tracking system are employed to formulate the inverse kinematics model of the underactuated bionic hand, which takes its underactuated and nonlinear characteristics into account. (2) Two novel features describing the motion of the human fingers are defined, namely the flexion angle and the bending angle. These quantities are measured by the two tracking systems, respectively, normalised over their own ranges, and mapped to the bionic hand motion to provide intuitive teleoperation control. (3) The two proposed methods are compared in terms of accuracy in both static and dynamic environments. A user study and practical grasping tasks are designed to demonstrate the effectiveness of the two methods and to further compare their performance in terms of success rate and subjective evaluation.

The remainder of this paper is organised as follows. Section 2 describes the research background and the calibration procedures for the underactuated bionic hand kinematics. Section 3 presents the wearable-glove-based and vision-tracking-based methods used to detect human hand motion in real time. Section 4 then details the teleoperation control frameworks, together with the algorithm that applies the inverse kinematics model. Section 5 describes the experimental setup and the metrics used to evaluate the proposed methods, and Section 6 reports the experimental results. Finally, Section 7 concludes this work.

4. Teleoperation Control Framework

This section shows how the teleoperation control methods are implemented using the wearable-glove-based and the vision-based tracking methods, respectively. As depicted in Figure 7, the actuation control stage is the same for both teleoperation systems, while the motion tracking stage is the one that differs.
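The split between a distinctive tracking stage and a shared actuation stage can be illustrated with a minimal Python sketch. It assumes a hypothetical `FingerTracker` interface with a `read_features()` method returning one dimensionless value per finger; `StaticTracker` and `control_step` are illustrative names, not part of the described system, and the shared stage here only scales each feature by a per-finger angle range.

```python
from typing import Protocol


class FingerTracker(Protocol):
    """Hypothetical interface for the distinctive stage: any front end that
    returns one dimensionless feature in [0, 1] per finger."""

    def read_features(self) -> list[float]:
        ...


class StaticTracker:
    """Stand-in front end returning fixed values; a glove or vision tracker
    would implement the same read_features() method."""

    def __init__(self, levels: list[float]) -> None:
        self.levels = levels

    def read_features(self) -> list[float]:
        return list(self.levels)


def control_step(tracker: FingerTracker, angle_ranges: list[float]) -> list[float]:
    """Shared actuation stage: unchanged whichever tracker is plugged in.
    Here it only scales each finger feature by that finger's angle range."""
    return [f * r for f, r in zip(tracker.read_features(), angle_ranges)]


glove_like = StaticTracker([0.5, 1.0])
print(control_step(glove_like, [90.0, 80.0]))  # [45.0, 80.0]
```

Swapping the glove front end for the vision front end then only requires another object with a `read_features()` method; `control_step` stays untouched.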

As described in Section 3.1 and Section 3.2, each tracking system extracts, for each finger, one dimensionless human motion feature ranging from 0 to 1 that represents the finger motion, namely the flexion level and the bending level, respectively. A preliminary step for teleoperation is mapping this human feature to the corresponding robot feature, namely the robot bending angle. Since the correspondence between human and robot gestures must be intuitively understandable and usable without mental effort or lengthy training, the resulting robot movement must be highly semantically correlated with the human one, while accounting for the kinematic differences between human and robot structures. Thanks to the conciseness and meaningfulness of the motion features extracted by the two tracking systems, and to the underactuated mechanics of the bionic hand, a simple and intuitive mapping can be used: the output of the tracking system in use, whether the flexion level in the case of the wearable system or the bending level in the case of the vision system, is multiplied by the bending angle range measured during the bionic hand calibration described in Section 2.2.
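The mapping step amounts to one multiplication per finger. A minimal Python sketch follows; the min-max `normalise` helper is only an assumption about how a raw reading might reach the [0, 1] range (the actual normalisation is described in Section 3), the clamp is an added safeguard, and the numeric values are made up for illustration.

```python
def normalise(raw: float, raw_min: float, raw_max: float) -> float:
    """Hypothetical min-max normalisation of a raw tracker reading to [0, 1].

    raw_min and raw_max stand for per-user calibration extremes (assumption).
    """
    if raw_max <= raw_min:
        raise ValueError("raw_max must be greater than raw_min")
    return (raw - raw_min) / (raw_max - raw_min)


def feature_to_bending_angle(level: float, angle_range: float) -> float:
    """Scale a dimensionless flexion/bending level by the calibrated bending-angle range.

    Clamping to [0, 1] is an added safeguard, not something stated in the text.
    """
    level = min(max(level, 0.0), 1.0)
    return level * angle_range


# Made-up numbers: a reading halfway through its calibrated range,
# mapped onto a hypothetical 90-degree bending-angle range.
print(feature_to_bending_angle(normalise(150.0, 100.0, 200.0), 90.0))  # 45.0
```

Because both features share the same [0, 1] scale, the same function serves the wearable and the vision pipeline.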

Once the bending angles are computed, a ROS node applies the inverse kinematics model synthesised in Section 2.2. To avoid sudden switching from one inverse kinematic function to the other, and hence instability, the average bending angle over the last 40 frames (a moving average over 1 second) is used to improve robustness. A change of direction is detected only if the bending angle deviates from this average by more than a predefined percentage of the bending angle range of motion (ROM). Then, according to the current direction (extension or flexion), the corresponding inverse kinematic function (the extension or the flexion function, respectively) is applied to the bending angle to obtain the motor control signal value. The details of the implemented inverse kinematics algorithm are summarised in Algorithm 1.
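The smoothing and direction-switching logic can be sketched in plain Python, independent of ROS. This is one reading of the description under stated assumptions: the deviation is taken between the newest sample and the 40-frame moving average, `threshold_frac` is a hypothetical fraction of the ROM (its actual value is not given here), the selected function is applied to the smoothed angle, and `f_flexion`/`f_extension` are placeholders for the calibrated inverse kinematic functions of Section 2.2.

```python
from collections import deque
from typing import Callable


class DirectionAwareIK:
    """Moving-average smoothing plus threshold-based direction switching.

    Assumptions (not stated in the text): the deviation is measured between the
    newest bending angle and the moving average, threshold_frac is a hypothetical
    fraction of the ROM, and the selected function is applied to the smoothed angle.
    """

    def __init__(
        self,
        f_flexion: Callable[[float], float],
        f_extension: Callable[[float], float],
        rom: float,
        threshold_frac: float,
        window: int = 40,  # 40 frames, i.e. about 1 s as in the text
    ) -> None:
        self.f_flexion = f_flexion
        self.f_extension = f_extension
        self.threshold = threshold_frac * rom
        self.history = deque(maxlen=window)  # keeps only the last `window` samples
        self.direction = "flexion"  # hypothetical initial direction

    def update(self, angle: float) -> float:
        """Consume one bending-angle sample and return a motor control value."""
        self.history.append(angle)
        mean = sum(self.history) / len(self.history)
        deviation = angle - mean
        # Switch direction only on a large enough deviation, so that small
        # oscillations do not toggle between the two inverse kinematic functions.
        if deviation > self.threshold:
            self.direction = "flexion"
        elif deviation < -self.threshold:
            self.direction = "extension"
        f = self.f_flexion if self.direction == "flexion" else self.f_extension
        return f(mean)
```

The threshold acts as hysteresis: a larger value suppresses spurious switches caused by tracking noise at the cost of reacting later to a genuine reversal of finger motion.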

Then, the communication handler node packs the control signals into a TCP/IP message and sends it to the bionic hand over an Ethernet link. The bionic hand processor unpacks the control messages and drives the motors accordingly. A user interface opens and closes the communication link on the user's command, with input given through a keyboard and a mouse.
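The packing and unpacking steps can be sketched with Python's standard `struct` and `socket` modules. The frame layout below (a one-byte message identifier, a one-byte value count, then one big-endian float32 per motor) is entirely hypothetical, since the actual message format of the bionic hand is not specified here; host and port are placeholders as well.

```python
import socket
import struct

MSG_ID_MOTOR_CMD = 0x01  # hypothetical message identifier


def pack_motor_command(values: list[float]) -> bytes:
    """Pack motor control values into a hypothetical binary frame:
    [msg id: uint8][count: uint8][values: float32 each], network byte order."""
    return struct.pack(f"!BB{len(values)}f", MSG_ID_MOTOR_CMD, len(values), *values)


def unpack_motor_command(frame: bytes) -> list[float]:
    """Inverse of pack_motor_command, as the hand-side processor might do it."""
    msg_id, count = struct.unpack_from("!BB", frame)
    if msg_id != MSG_ID_MOTOR_CMD:
        raise ValueError(f"unexpected message id {msg_id}")
    return list(struct.unpack_from(f"!{count}f", frame, offset=2))


def send_command(host: str, port: int, values: list[float]) -> None:
    """Open a TCP connection and send one frame (host and port are placeholders)."""
    with socket.create_connection((host, port), timeout=1.0) as sock:
        sock.sendall(pack_motor_command(values))
```

A fixed binary layout keeps each frame small and trivially parseable on an embedded processor; the one-byte count limits a frame to 255 values, which is ample for one motor per finger.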

Algorithm 1: Inverse kinematics modelling of the underactuated bionic hand.