Continuous Online Semantic Implicit Representation for Autonomous Ground Robot Navigation in Unstructured Environments

Abstract

1. Introduction

2. Related Work

- The presence and nature of an intermediate representation between the sensor data and the navigation structure, which affects scalability and computational performance.

- The continuous or discrete nature of the navigation structure, which determines the kinds of planning methods that can be applied and how they operate.

- Whether it targets large-scale navigation tasks or local planning.

- Whether semantics are used to enrich environment understanding and improve navigation.

- Whether an uncertainty measurement is provided, which we consider key to guaranteeing robot safety.

2.1. Semantic Mapping for Robot Navigation

2.2. Implicit Representations for Robot Navigation

2.3. Summary of Contributions

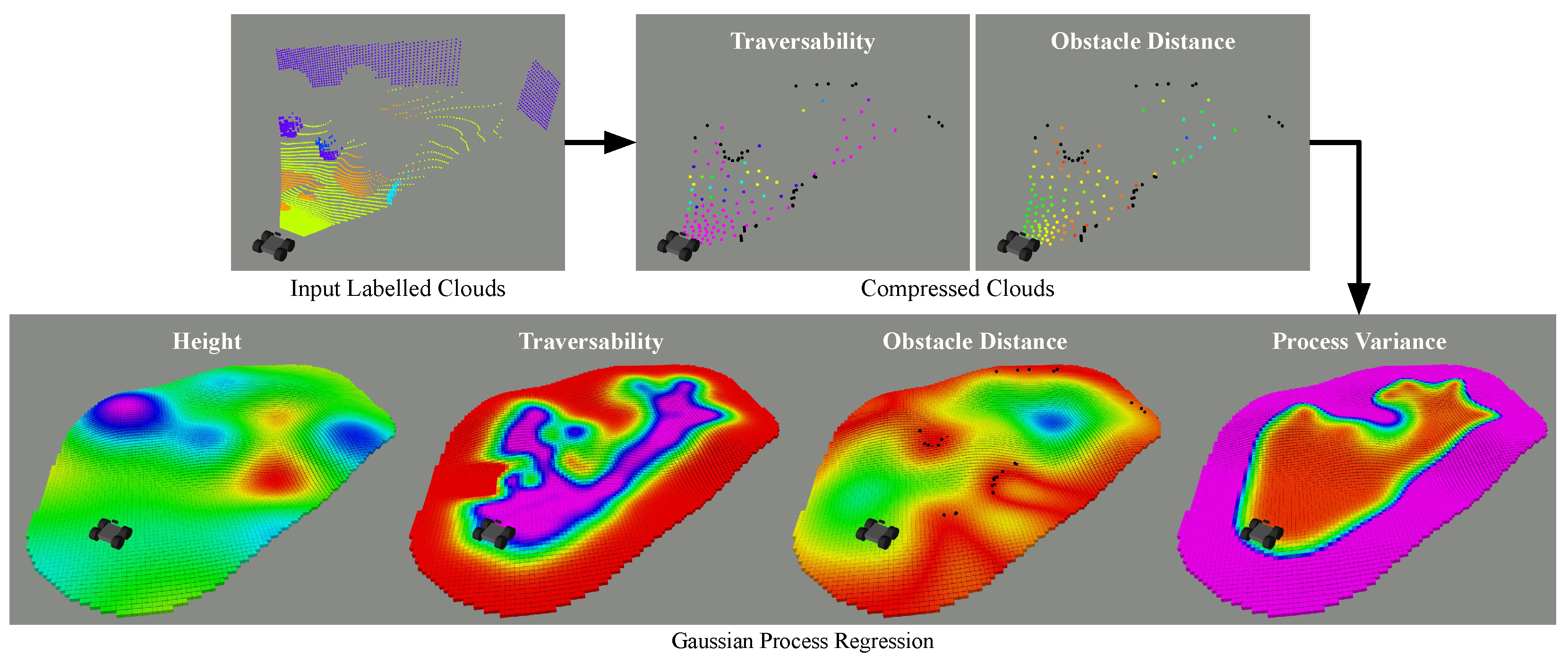

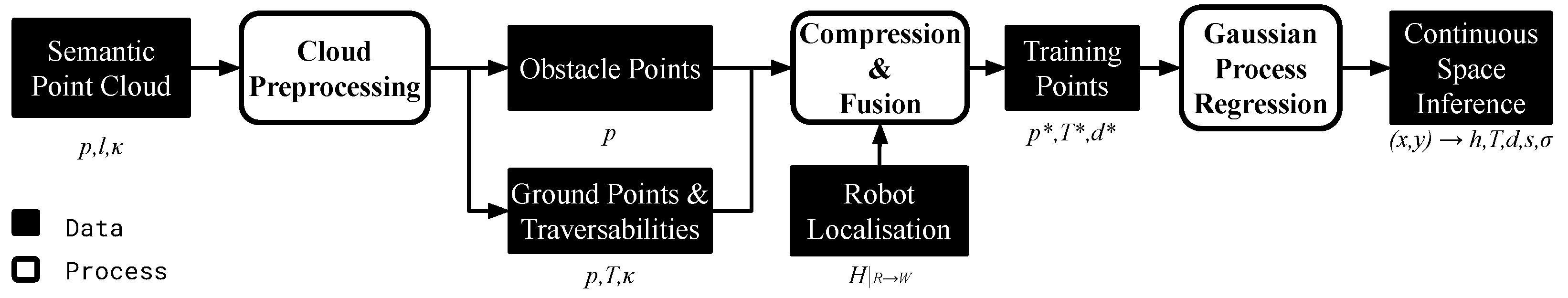

3. GPR Online Implicit Environment Representation

3.1. Input Data and Preprocessing

3.2. Point Clouds Compression and Fusion

3.2.1. Grid Clustering Compression

3.2.2. Two-Step k-Means Clustering Compression

3.2.3. Obstacle Distance Computation

3.3. Gaussian Process Regression

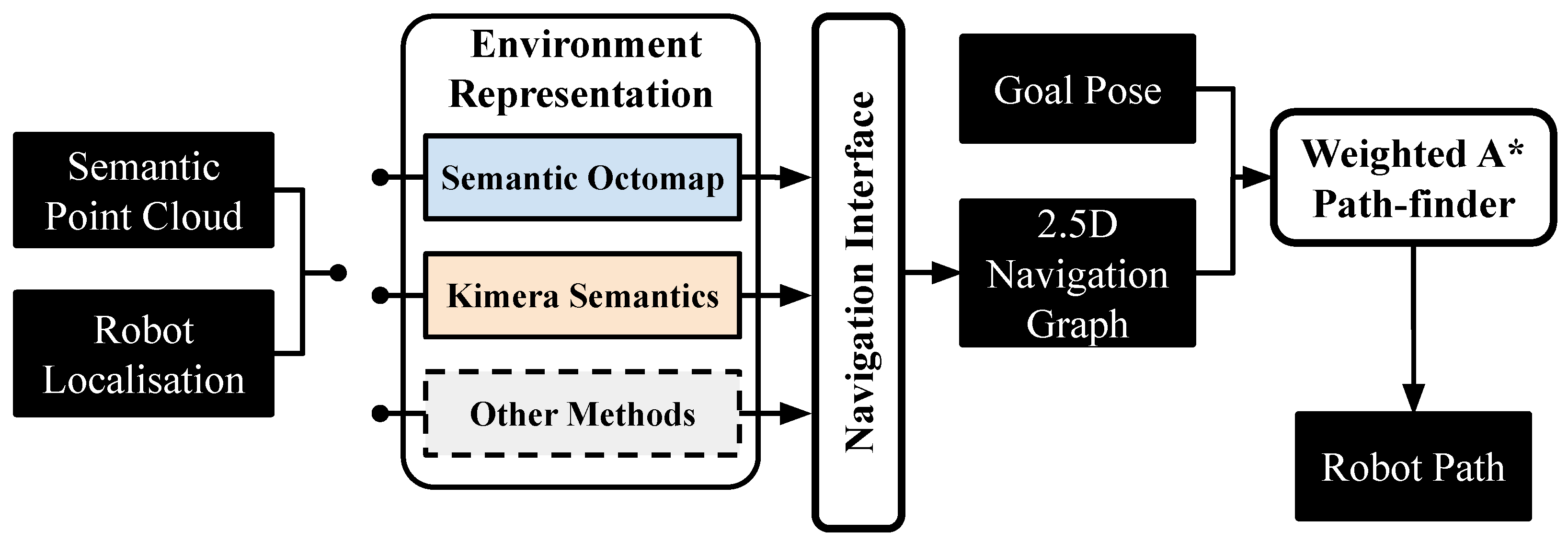

4. The COSMAu-Nav Architecture

4.1. Implementation of the GPR Environment Representation Method

4.2. Smooth Path Planning in the GPR Environment Representation

- the loss term and coefficient relating to the path length

- the loss term and coefficient relating to terrain traversability

- the loss term and coefficient relating to the process variance

- the loss term and coefficient relating to obstacle collision

- the loss term and coefficient relating to the path curvature
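Section 4.2 combines these five weighted terms into a single path cost; their exact expressions are not reproduced in this excerpt. The sketch below assumes a plain weighted sum, takes the cost factors, safety radius and minimum curvature radius from the real-world planning parameter table, and uses simple hypothetical forms for each term (sum of segment lengths, one minus traversability, raw process variance, squared safety-radius violation, squared excess curvature). The `traversability`, `variance` and `obstacle_distance` callables stand in for GPR queries and, like `path_loss`, are hypothetical names.

```python
import numpy as np

# Cost factors from the real-world planning parameter table.
WEIGHTS = {"length": 1.0, "trav": 25.0, "var": 1e5, "coll": 1e6, "curv": 100.0}
SAFETY_RADIUS = 1.0    # m, GPR safety radius in the same table
MIN_CURV_RADIUS = 1.0  # m, minimum accepted path curvature radius


def path_loss(path, traversability, variance, obstacle_distance, weights=WEIGHTS):
    """Weighted sum of five (hypothetical) loss terms for a sampled 2D path.

    path: (N, 2) waypoints sampled along the path (assumed pairwise distinct).
    traversability, variance, obstacle_distance: callables mapping an (N, 2)
    array to an (N,) array, standing in for GPR predictions.
    Returns (total weighted loss, dict of unweighted terms).
    """
    path = np.asarray(path, dtype=float)
    seg = np.diff(path, axis=0)
    seg_len = np.linalg.norm(seg, axis=1)

    terms = {}
    terms["length"] = seg_len.sum()
    # Penalise poorly traversable terrain (1 = fully traversable).
    terms["trav"] = np.sum(1.0 - traversability(path))
    # Penalise poorly observed space through the GPR process variance.
    terms["var"] = np.sum(variance(path))
    # Penalise waypoints closer to obstacles than the safety radius.
    gap = np.maximum(0.0, SAFETY_RADIUS - obstacle_distance(path))
    terms["coll"] = np.sum(gap ** 2)
    # Discrete curvature: turning angle over the mean length of adjacent segments.
    cross = seg[:-1, 0] * seg[1:, 1] - seg[:-1, 1] * seg[1:, 0]
    dot = np.sum(seg[:-1] * seg[1:], axis=1)
    turn = np.abs(np.arctan2(cross, dot))
    kappa = turn / (0.5 * (seg_len[:-1] + seg_len[1:]))
    terms["curv"] = np.sum(np.maximum(0.0, kappa - 1.0 / MIN_CURV_RADIUS) ** 2)

    total = sum(weights[k] * terms[k] for k in terms)
    return total, terms
```

For a straight, obstacle-free, fully traversable and well-observed path, only the length term remains. Minimising this cost with Adam, as listed in the parameter table, would require a differentiable version (for instance written with an autodiff library), which this NumPy sketch does not provide.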

5. Experimental Evaluations

5.1. Gazebo Simulation Evaluation Setup

5.2. Comparison of Point Cloud Compression Methods

- The grid resolution r for grid clustering compression at 0.2 m, 0.25 m, 0.3 m, 0.35 m and 0.4 m.

- The number of clusters for the k-means spatial compression step at 50, 100, 150, 200 and 250.

- The number of clusters for the k-means temporal compression step at 50, 100, 150 and 200.

- The GPR inference resolution at 0.0 m (no local inference), 0.05 m, 0.1 m, 0.15 m, 0.2 m and 0.25 m.
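To make the role of the grid resolution r concrete, here is a minimal sketch of grid clustering compression. It assumes, since this excerpt does not detail the method, that all points falling in the same cubic cell of side r are replaced by their centroid, with any extra per-point channels (for example semantic probabilities) averaged as well; the function name `grid_cluster_compress` is hypothetical.

```python
import numpy as np


def grid_cluster_compress(points, r):
    """Replace all points lying in the same r x r x r cell by their mean.

    points: (N, D) array whose first three columns are x, y, z; any further
    columns (e.g. class probabilities) are averaged along with the coordinates.
    Returns an (M, D) array with one row per occupied cell, M <= N.
    """
    points = np.asarray(points, dtype=float)
    cells = np.floor(points[:, :3] / r).astype(np.int64)
    _, inverse, counts = np.unique(
        cells, axis=0, return_inverse=True, return_counts=True
    )
    inverse = inverse.reshape(-1)  # guard against shape differences across NumPy versions
    sums = np.zeros((counts.size, points.shape[1]))
    np.add.at(sums, inverse, points)  # unbuffered per-cell accumulation
    return sums / counts[:, None]
```

A coarser r merges more points per cell, which reduces the number of GPR training points at the cost of geometric detail; that is the trade-off the sweep above explores.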

5.3. Baseline Discrete Semantic Mapping and Navigation Architecture (SMaNA)

5.3.1. Discrete 3D Semantic Mapping Methods

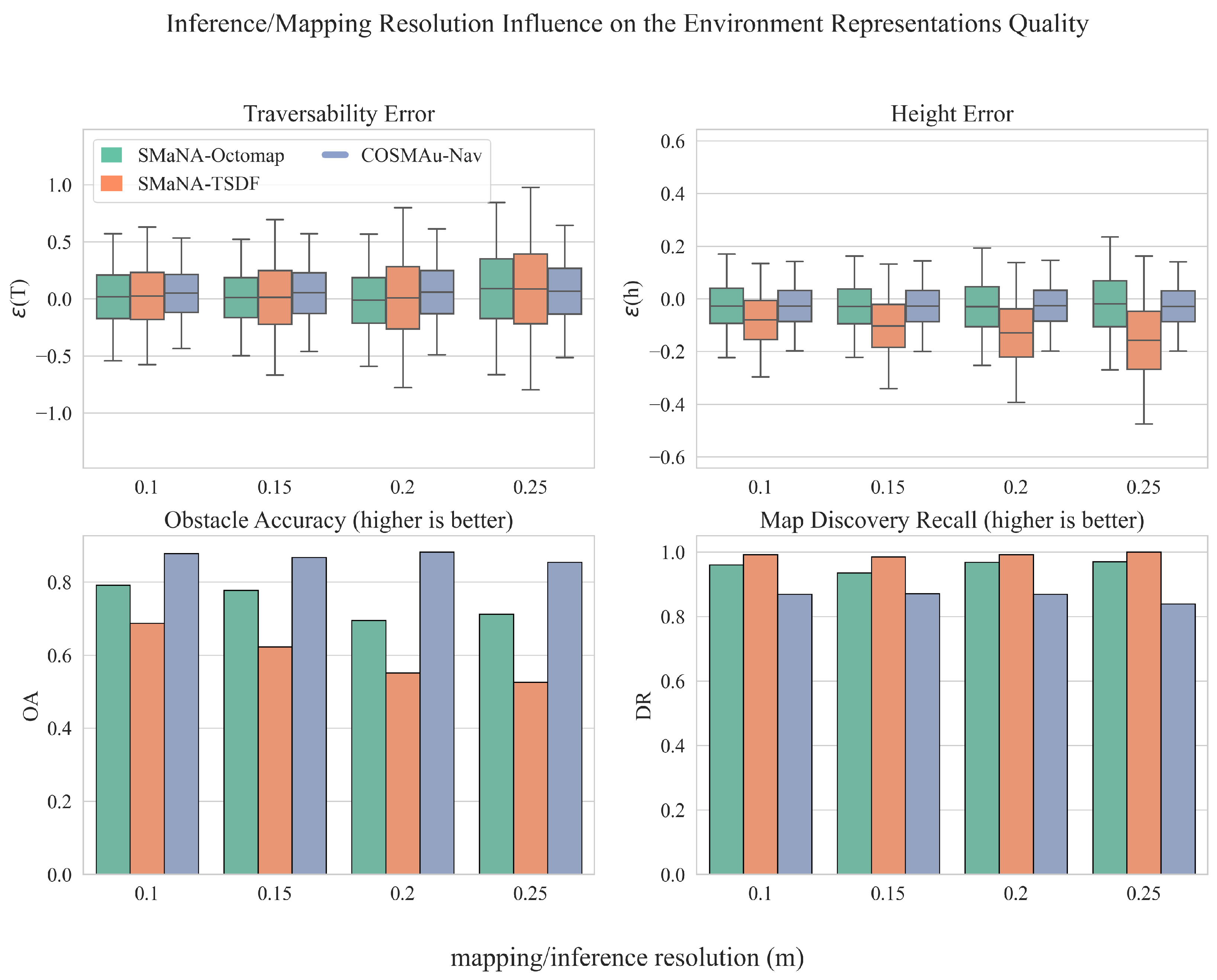

5.4. Comparison of the GPR Environment Representation with the SMaNA Baseline

5.5. Analysis of Computational Performance on the Rellis-3D Real-World Dataset

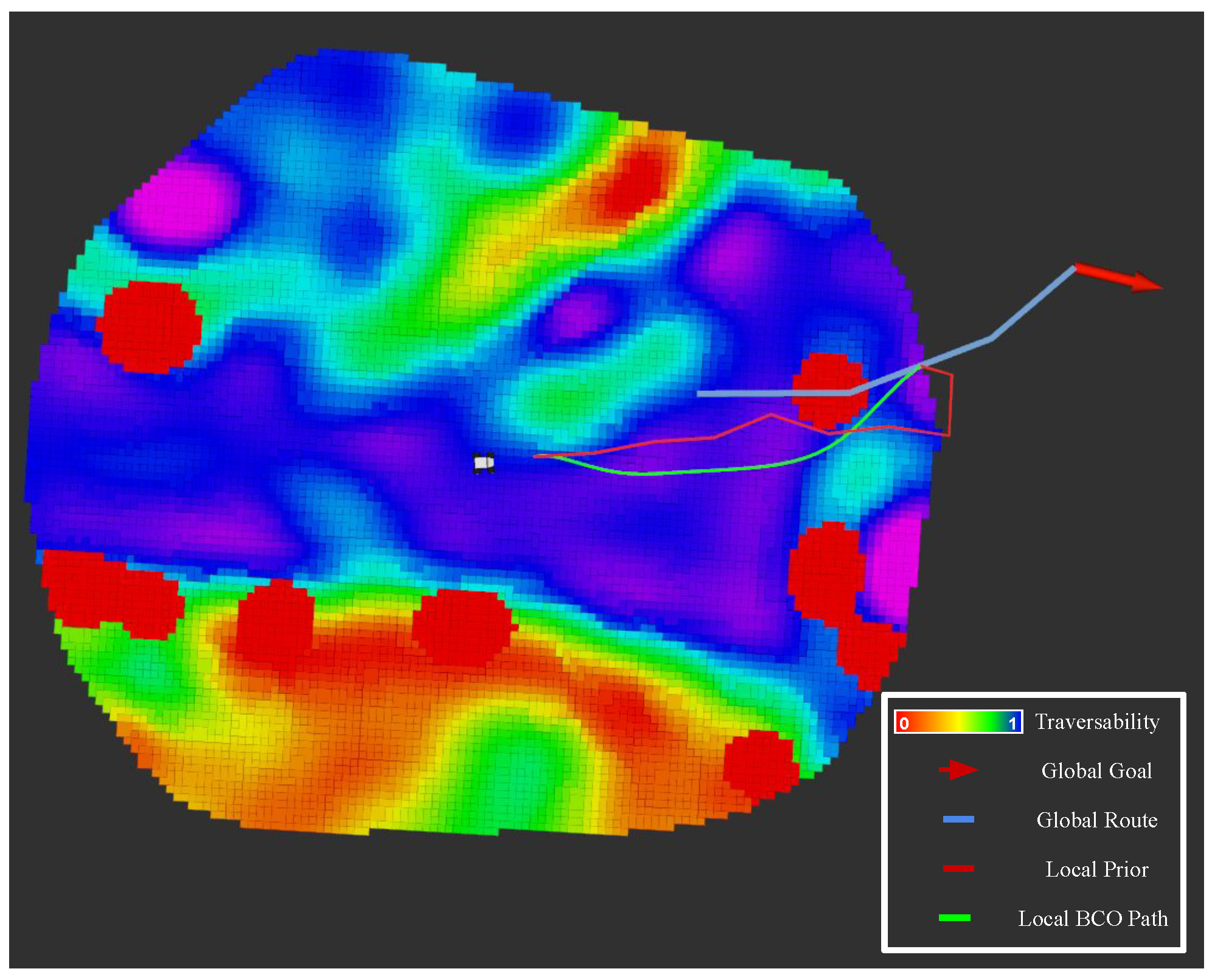

6. Application to a Real-World Autonomous Navigation Mission

6.1. Embedding COSMAu-Nav Onboard a Ground Robot

6.2. Adapting GPR Representation for Multi-Scale Navigation

6.2.1. Keyframe Storage

6.2.2. Global GPR Inference

6.2.3. Navigation in the Global Environment Representation

6.3. Experiments and Results

7. Conclusions and Perspectives

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| CNN | Convolutional Neural Network |

| COSMAu-Nav | Continuous Online Semantic iMplicit representation for Autonomous Navigation |

| CPU | Central Processing Unit |

| GPR | Gaussian Process Regression |

| GPU | Graphics Processing Unit |

| LOVE | Lanczos Variance Estimator |

| RAM | Random Access Memory |

| ROS | Robot Operating System |

| RRT | Rapidly-exploring Random Tree |

| SLAM | Simultaneous Localisation And Mapping |

| SMaNA | Semantic Mapping and Navigation Architecture |

| TSDF | Truncated Signed Distance Function |

| T-RRT | Transition-based Rapidly-exploring Random Tree |

| UGV | Unmanned Ground Vehicle |

Appendix A

References

- Jaillet, L.; Cortes, J.; Simeon, T. Transition-based RRT for path planning in continuous cost spaces. In Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008; pp. 2145–2150.

- Serdel, Q.; Moras, J.; Marzat, J. SMaNA: Semantic Mapping and Navigation Architecture for Autonomous Robots. In Proceedings of the 20th International Conference on Informatics in Control, Automation and Robotics (ICINCO), Rome, Italy, 13–15 November 2023.

- Tylecek, R.; Sattler, T.; Le, H.A.; Brox, T.; Pollefeys, M.; Fisher, R.B.; Gevers, T. 3D Reconstruction Meets Semantics: Challenge Results Discussion; Technical report, ECCV Workshops; 2018; Available online: https://openaccess.thecvf.com/content_eccv_2018_workshops/w18/html/Tylecek_The_Second_Workshop_on_3D_Reconstruction_Meets_Semantics_Challenge_Results_ECCVW_2018_paper.html (accessed on 1 May 2024).

- Jiang, P.; Osteen, P.; Wigness, M.; Saripalli, S. RELLIS-3D dataset: Data, benchmarks and analysis. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 1110–1116.

- Wermelinger, M.; Fankhauser, P.; Diethelm, R.; Krüsi, P.; Siegwart, R.; Hutter, M. Navigation planning for legged robots in challenging terrain. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 1184–1189.

- Xuan, Z.; David, F. Real-Time Voxel Based 3D Semantic Mapping with a Hand Held RGB-D Camera. 2018. Available online: https://github.com/floatlazer/semantic_slam (accessed on 1 May 2024).

- Rosinol, A.; Abate, M.; Chang, Y.; Carlone, L. Kimera: An Open-Source Library for Real-Time Metric-Semantic Localization and Mapping. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020.

- Maturana, D.; Chou, P.W.; Uenoyama, M.; Scherer, S. Real-time Semantic Mapping for Autonomous Off-Road Navigation. In Proceedings of the 11th International Conference on Field and Service Robotics (FSR), Zurich, Switzerland, 12–15 September 2017; pp. 335–350.

- Ewen, P.; Li, A.; Chen, Y.; Hong, S.; Vasudevan, R. These Maps Are Made For Walking: Real-Time Terrain Property Estimation for Mobile Robots. IEEE Robot. Autom. Lett. 2022, 7, 7083–7090.

- Camps, G.S.; Dyro, R.; Pavone, M.; Schwager, M. Learning Deep SDF Maps Online for Robot Navigation and Exploration. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022.

- Jadidi, M.G.; Miro, J.V.; Dissanayake, G. Gaussian processes autonomous mapping and exploration for range-sensing mobile robots. Auton. Robot. 2017, 42, 273–290.

- Morelli, J.; Zhu, P.; Doerr, B.; Linares, R.; Ferrari, S. Integrated Mapping and Path Planning for Very Large-Scale Robotic (VLSR) Systems. In Proceedings of the International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 3356–3362.

- Liu, J.; Chen, X.; Xiao, J.; Lin, S.; Zheng, Z.; Lu, H. Hybrid Map-Based Path Planning for Robot Navigation in Unstructured Environments. arXiv 2023, arXiv:2303.05304.

- Lombard, C.D.; van Daalen, C.E. Stochastic triangular mesh mapping: A terrain mapping technique for autonomous mobile robots. Robot. Auton. Syst. 2020, 127, 103449.

- Fankhauser, P.; Bloesch, M.; Hutter, M. Probabilistic Terrain Mapping for Mobile Robots with Uncertain Localization. IEEE Robot. Autom. Lett. 2018, 3, 3019–3026.

- Poudel, R.P.K.; Liwicki, S.; Cipolla, R. Fast-SCNN: Fast Semantic Segmentation Network. arXiv 2019, arXiv:1902.04502.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the IEEE conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Maturana, D. Semantic Mapping for Autonomous Navigation and Exploration. Ph.D. Thesis, Carnegie Mellon University, Pittsburgh, PA, USA, 2022.

- Zaganidis, A.; Magnusson, M.; Duckett, T.; Cielniak, G. Semantic-assisted 3D normal distributions transform for scan registration in environments with limited structure. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 4064–4069.

- Crespo, J.; Castillo, J.C.; Mozos, O.M.; Barber, R. Semantic Information for Robot Navigation: A Survey. Appl. Sci. 2020, 10, 497.

- Chen, D.; Zhuang, M.; Zhong, X.; Wu, W.; Liu, Q. RSPMP: Real-Time Semantic Perception and Motion Planning for Autonomous Navigation of Unmanned Ground Vehicle in off-Road Environments. Appl. Intell. 2022, 53, 4979–4995.

- Belter, D.; Wietrzykowski, J.; Skrzypczyński, P. Employing Natural Terrain Semantics in Motion Planning for a Multi-Legged Robot. J. Intell. Robot. Syst. 2019, 93, 723–743.

- Ono, M.; Fuchs, T.J.; Steffy, A.; Maimone, M.; Yen, J. Risk-aware planetary rover operation: Autonomous terrain classification and path planning. In Proceedings of the IEEE Aerospace Conference, Big Sky, MT, USA, 7–14 March 2015; pp. 1–10.

- Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. Commun. ACM 2021, 65, 99–106.

- Hedman, P.; Srinivasan, P.P.; Mildenhall, B.; Barron, J.T.; Debevec, P. Baking Neural Radiance Fields for Real-Time View Synthesis. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021.

- Kerbl, B.; Kopanas, G.; Leimkühler, T.; Drettakis, G. 3D Gaussian Splatting for Real-Time Radiance Field Rendering. ACM Trans. Graph. 2023, 42, 139:1–139:14.

- Sucar, E.; Liu, S.; Ortiz, J.; Davison, A. iMAP: Implicit Mapping and Positioning in Real-Time. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021.

- Ramos, F.; Ott, L. Hilbert maps: Scalable continuous occupancy mapping with stochastic gradient descent. Int. J. Robot. Res. 2016, 35, 1717–1730.

- Jin, L.; Rückin, J.; Kiss, S.H.; Vidal-Calleja, T.; Popović, M. Adaptive-resolution field mapping using Gaussian process fusion with integral kernels. IEEE Robot. Autom. Lett. 2022, 7, 7471–7478.

- Snelson, E.; Ghahramani, Z. Sparse Gaussian Processes using Pseudo-inputs. In Advances in Neural Information Processing Systems; Weiss, Y., Schölkopf, B., Platt, J., Eds.; MIT Press: Cambridge, MA, USA, 2005; Volume 18.

- Jadidi, M.G.; Gan, L.; Parkison, S.A.; Li, J.; Eustice, R.M. Gaussian Processes Semantic Map Representation. arXiv 2017, arXiv:1707.01532.

- Zobeidi, E.; Koppel, A.; Atanasov, N. Dense incremental metric-semantic mapping for multiagent systems via sparse Gaussian process regression. IEEE Trans. Robot. 2022, 38, 3133–3153.

- Garnelo, M.; Schwarz, J.; Rosenbaum, D.; Viola, F.; Rezende, D.; Eslami, S.; Teh, Y. Neural Processes. In Proceedings of the Theoretical Foundations and Applications of Deep Generative Models Workshop, International Conference on Machine Learning (ICML), Stockholm, Sweden, 10–15 July 2018.

- Eder, M.; Prinz, R.; Schöggl, F.; Steinbauer-Wagner, G. Traversability analysis for off-road environments using locomotion experiments and earth observation data. Robot. Auton. Syst. 2023, 168, 104494.

- Yu, C.; Gao, C.; Wang, J.; Yu, G.; Shen, C.; Sang, N. BiSeNet V2: Bilateral Network with Guided Aggregation for Real-Time Semantic Segmentation. Int. J. Comput. Vis. 2020, 129, 3051–3068.

- Zürn, J.; Burgard, W.; Valada, A. Self-supervised visual terrain classification from unsupervised acoustic feature learning. IEEE Trans. Robot. 2020, 37, 466–481.

- Sculley, D. Web-Scale k-Means Clustering. In Proceedings of the 19th International Conference on World Wide Web, Raleigh, NC, USA, 26–30 April 2010; pp. 1177–1178.

- Neal, R.M. Monte Carlo Implementation of Gaussian Process Models for Bayesian Regression and Classification; Technical Report 9702; Department of Statistics, University of Toronto: Toronto, ON, Canada, 1997.

- Virtanen, P.; Gommers, R.; Oliphant, T.E.; Haberland, M.; Reddy, T.; Cournapeau, D.; Burovski, E.; Peterson, P.; Weckesser, W.; Bright, J.; et al. SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python. Nat. Methods 2020, 17, 261–272.

- Gardner, J.R.; Pleiss, G.; Bindel, D.; Weinberger, K.Q.; Wilson, A.G. GPyTorch: Blackbox Matrix-Matrix Gaussian Process Inference with GPU Acceleration. In Proceedings of the Advances in Neural Information Processing Systems, Montréal, QC, Canada, 3–8 December 2018.

- Pleiss, G.; Gardner, J.R.; Weinberger, K.Q.; Wilson, A.G. Constant-Time Predictive Distributions for Gaussian Processes. arXiv 2018, arXiv:1803.06058.

- Serdel, Q.; Marzat, J.; Moras, J. Smooth Path Planning Using a Gaussian Process Regression Map for Mobile Robot Navigation. In Proceedings of the 13th International Workshop on Robot Motion and Control (RoMoCo), Poznań, Poland, 2–4 July 2024.

- Achat, S.; Marzat, J.; Moras, J. Path Planning Incorporating Semantic Information for Autonomous Robot Navigation. In Proceedings of the 19th International Conference on Informatics in Control, Automation and Robotics (ICINCO), Lisbon, Portugal, 14–16 July 2022.

- Bartolomei, L.; Teixeira, L.; Chli, M. Perception-aware Path Planning for UAVs using Semantic Segmentation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October–24 January 2020; pp. 5808–5815.

- Oleynikova, H.; Taylor, Z.; Fehr, M.; Siegwart, R.; Nieto, J. Voxblox: Incremental 3D Euclidean Signed Distance Fields for On-Board MAV Planning. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017.

- Nießner, M.; Zollhöfer, M.; Izadi, S.; Stamminger, M. Real-time 3D Reconstruction at Scale using Voxel Hashing. ACM Trans. Graph. (TOG) 2013, 32, 169.

- Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Susstrunk, S. SLIC Superpixels Compared to State-of-the-Art Superpixel Methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.

- Wigness, M.; Eum, S.; Rogers, J.G.; Han, D.; Kwon, H. A RUGD Dataset for Autonomous Navigation and Visual Perception in Unstructured Outdoor Environments. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019.

- Furgale, P.; Rehder, J.; Siegwart, R. Unified temporal and spatial calibration for multi-sensor systems. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 1280–1286.

- Koide, K.; Oishi, S.; Yokozuka, M.; Banno, A. General, Single-shot, Target-less, and Automatic LiDAR-Camera Extrinsic Calibration Toolbox. arXiv 2023, arXiv:2302.05094.

- Koide, K.; Miura, J.; Menegatti, E. A portable three-dimensional LIDAR-based system for long-term and wide-area people behavior measurement. Int. J. Adv. Robot. Syst. 2019, 16, 1729881419841532.

- Biber, P.; Strasser, W. The normal distributions transform: A new approach to laser scan matching. In Proceedings of the 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003) (Cat. No.03CH37453), Las Vegas, NV, USA, 27–31 October 2003; Volume 3, pp. 2743–2748.

- Zhang, W.; Qi, J.; Wan, P.; Wang, H.; Xie, D.; Wang, X.; Yan, G. An Easy-to-Use Airborne LiDAR Data Filtering Method Based on Cloth Simulation. Remote Sens. 2016, 8, 501.

- Torroba, I.; Chella, M.; Teran, A.; Rolleberg, N.; Folkesson, J. Online Stochastic Variational Gaussian Process Mapping for Large-Scale SLAM in Real Time. arXiv 2022, arXiv:2211.05601.

| Method | Navigation Structure | Intermediate Representation | Representation Storage | Domain | Scale | Employs Semantics | Uncertainty Measurement |
|---|---|---|---|---|---|---|---|
| Wermelinger et al. [5] | 2D MLG | Ø | Ø | Discrete | Global | no | no |
| SMaNA [2] with [6] | 2D MLG | Octomap | Explicit | Discrete | Global | yes | no |
| SMaNA [2] with [7] | 2D MLG | TSDF | Semi-Implicit | Discrete | Global | yes | no |
| Maturana et al. [8] | 2D MLG | Ø | Ø | Discrete | Local | yes | yes |
| Ewen et al. [9] | 2.5D Triangle Mesh | Ø | Ø | Discrete | Local | yes | no |
| Sznaier et al. [10] | Sampled SDF | Local MLPs | Implicit | Continuous | Global | no | no |
| Ghaffari et al. [11] | 2D MLG | GPR | Implicit | Continuous | Global | no | yes |
| Morelli et al. [12] | Sampled 2D Graph | Hilbert Map | Implicit | Continuous | Global | no | no |
| COSMAu-Nav (ours) | GPR | Compressed Points | Implicit | Continuous | Hybrid | yes | yes |

| Class | sky | grass | terrain | hedge | topiary | rose | obstacle | tree |
|---|---|---|---|---|---|---|---|---|
| Traversability | 0 | 0.2 | 1 | 0 | 0 | 0 | 0 | 0 |
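The table assigns a traversability score to each semantic class. One plausible way to turn a per-point class probability distribution into a single traversability value is to take its expectation under this table. This fusion rule and the name `expected_traversability` are assumptions for illustration, not the paper's stated method.

```python
# Traversability score per semantic class, copied from the table above.
TRAVERSABILITY = {
    "sky": 0.0, "grass": 0.2, "terrain": 1.0, "hedge": 0.0,
    "topiary": 0.0, "rose": 0.0, "obstacle": 0.0, "tree": 0.0,
}


def expected_traversability(class_probs):
    """Expected traversability of a point given its class probabilities.

    class_probs: dict mapping class name -> probability. Probabilities are
    renormalised so that slightly unnormalised network outputs are tolerated.
    """
    total = sum(class_probs.values())
    if total <= 0.0:
        raise ValueError("class probabilities must have a positive sum")
    return sum(p * TRAVERSABILITY[c] for c, p in class_probs.items()) / total
```

A point split evenly between grass and terrain would then score 0.5 × 0.2 + 0.5 × 1 = 0.6, whereas taking only the most likely class would make the value jump discontinuously between 0.2 and 1.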

| Parameter | Value |
|---|---|
| Grid Clustering Compression | |
| Point integration max distance | 3.0 m |
| Resolution of the clustering grid | 0.25 m |
| Distance from the robot beyond which a cluster is discarded | 15 m |
| k-Means Clustering Compression | |
| Number of clusters for k-means spatial compression | 100 |
| Number of clusters for k-means temporal compression | 200 |
| Number of scans in the temporal integration window | 10 |
| GPR | |
| Safety radius: threshold distance from obstacles below which a point is considered occupied | 0.25 m |
| Inference resolution for online visualisation | 0.1 m |
| Threshold process variance above which the space is considered unobserved | 2.5 × 10⁻³ |
| Distance from the robot up to which inference is performed for visualisation | 3.0 m |
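The GPR parameters above combine two thresholds: a queried point is treated as occupied when its predicted obstacle distance is below the safety radius, and as unobserved when the process variance exceeds the variance threshold. The sketch below illustrates this with an exact zero-mean GPR written directly in NumPy. The RBF kernel, its hyperparameters (`LENGTH_SCALE`, `SIGNAL_VAR`, `NOISE_VAR`) and the helper names are hypothetical choices; the paper's own implementation, which cites GPyTorch and LOVE variance estimates, is not reproduced here.

```python
import numpy as np

SAFETY_RADIUS = 0.25   # m, from the table above
VAR_THRESHOLD = 2.5e-3  # process variance above which space is unobserved

# Hypothetical kernel hyperparameters (not given in this excerpt).
LENGTH_SCALE = 0.5
SIGNAL_VAR = 1.0
NOISE_VAR = 1e-4


def rbf(a, b):
    """Squared-exponential kernel matrix between point sets a (N, d) and b (M, d)."""
    d2 = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return SIGNAL_VAR * np.exp(-0.5 * d2 / LENGTH_SCALE ** 2)


def gpr_predict(x_train, y_train, x_query):
    """Exact zero-mean GPR posterior mean and variance at x_query."""
    k_tt = rbf(x_train, x_train) + NOISE_VAR * np.eye(len(x_train))
    chol = np.linalg.cholesky(k_tt)  # k_tt = chol @ chol.T
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))
    k_qt = rbf(x_query, x_train)
    mean = k_qt @ alpha
    v = np.linalg.solve(chol, k_qt.T)
    var = SIGNAL_VAR - np.sum(v ** 2, axis=0)
    return mean, np.maximum(var, 0.0)  # clip tiny negative values from round-off


def classify(mean_dist, var):
    """Label each query point as 'unobserved', 'occupied' or 'free'."""
    return np.where(var > VAR_THRESHOLD, "unobserved",
                    np.where(mean_dist < SAFETY_RADIUS, "occupied", "free"))
```

The variance check is applied first on purpose: with a zero prior mean, the predicted distance decays towards 0 far from the data, which would otherwise be misread as an obstacle.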

| Parameter | Value |
|---|---|
| Semantic Octomap | |
| Maximum point integration range | 3.0 m |
| Resolution of the octree leaf voxels | 0.1 m |
| Ray casting range | 3.0 m |
| Occupancy threshold | 0.5 |
| Kimera Semantics | |
| Maximum point integration range | 3.0 m |
| Voxel size | 0.1 m |
| Voxels per block side | 16 |
| Voxel update method | “fast” |
| Navigation Graph Building | |
| Safety radius: threshold distance from obstacles below which a cell is considered occupied | 0.25 m |
| Navigation graph grid cell resolution | 0.1 m |

| Parameter | Value |
|---|---|
| Grid Clustering Compression | |
| Point integration max distance | 12.0 m |
| Resolution of the clustering grid | 0.5 m |
| Distance from the robot beyond which a compressed point is discarded | 12.0 m |
| Distance from the last key-pose at which a new keyframe is created | 12.0 m |
| GPR | |
| Safety radius: threshold distance from obstacles below which a point is considered occupied | 1.0 m |
| Inference resolution for online visualisation | 0.25 m |
| Threshold process variance above which the space is considered unobserved | 2 × 10⁻² |
| Distance from the robot up to which inference is performed for visualisation | 12.0 m |
| Planning | |
| Prior T-RRT step size | 1.0 m |
| Resolution of the sampling along the path for optimisation | 0.25 m |
| Minimum accepted path curvature radius | 1.0 m |
| Adam optimiser initial learning rate | 0.1 |
| Path length cost factor | 1 |
| Terrain traversability cost factor | 25 |
| Process variance cost factor | 1 × 10⁵ |
| Obstacle collision avoidance cost factor | 1 × 10⁶ |
| Path curvature cost factor | 100 |
| Number of closest keyframes for global inference | 3 |
| Global route planning step size | 5.0 m |
| Max robot speed | 0.5 m/s |
| Path collision check rate | 1 Hz |
| Local planning update rate | 0.5 Hz |
| Global planning update rate | 0.1 Hz |
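The keyframe parameters in this table (a new keyframe once the robot is 12.0 m from the last key-pose, and global inference from the 3 closest keyframes) suggest a simple storage scheme, sketched below. What each keyframe holds, here the latest fused local cloud of compressed points, overwritten on each update, is an assumption, and `Keyframe` and `KeyframeStore` are hypothetical names.

```python
import math
from dataclasses import dataclass, field

KEYFRAME_DISTANCE = 12.0  # m, distance from the last key-pose triggering a new keyframe
N_CLOSEST = 3             # number of closest keyframes used for global inference


@dataclass
class Keyframe:
    pose: tuple                                 # key-pose position, e.g. (x, y)
    points: list = field(default_factory=list)  # compressed points attached to it


class KeyframeStore:
    def __init__(self, spacing=KEYFRAME_DISTANCE):
        self.spacing = spacing
        self.keyframes = []

    def update(self, position, compressed_points):
        """Attach the current compressed cloud to a keyframe; return its index.

        A new keyframe is created when the robot is farther than `spacing`
        from the last key-pose. Assumption: the current keyframe keeps only
        the latest fused local cloud, which is overwritten on each update.
        """
        if not self.keyframes or math.dist(position, self.keyframes[-1].pose) > self.spacing:
            self.keyframes.append(Keyframe(pose=tuple(position)))
        self.keyframes[-1].points = list(compressed_points)
        return len(self.keyframes) - 1

    def closest(self, position, k=N_CLOSEST):
        """Return the k keyframes whose key-poses are nearest to `position`."""
        return sorted(self.keyframes, key=lambda kf: math.dist(position, kf.pose))[:k]
```

Restricting global inference to a few nearby keyframes bounds the number of GPR training points per query, regardless of how long the mission runs.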

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Serdel, Q.; Marzat, J.; Moras, J. Continuous Online Semantic Implicit Representation for Autonomous Ground Robot Navigation in Unstructured Environments. Robotics 2024, 13, 108. https://doi.org/10.3390/robotics13070108