Ginkgo biloba Sex Identification Methods Using Hyperspectral Imaging and Machine Learning

Abstract

1. Introduction

2. Materials and Methods

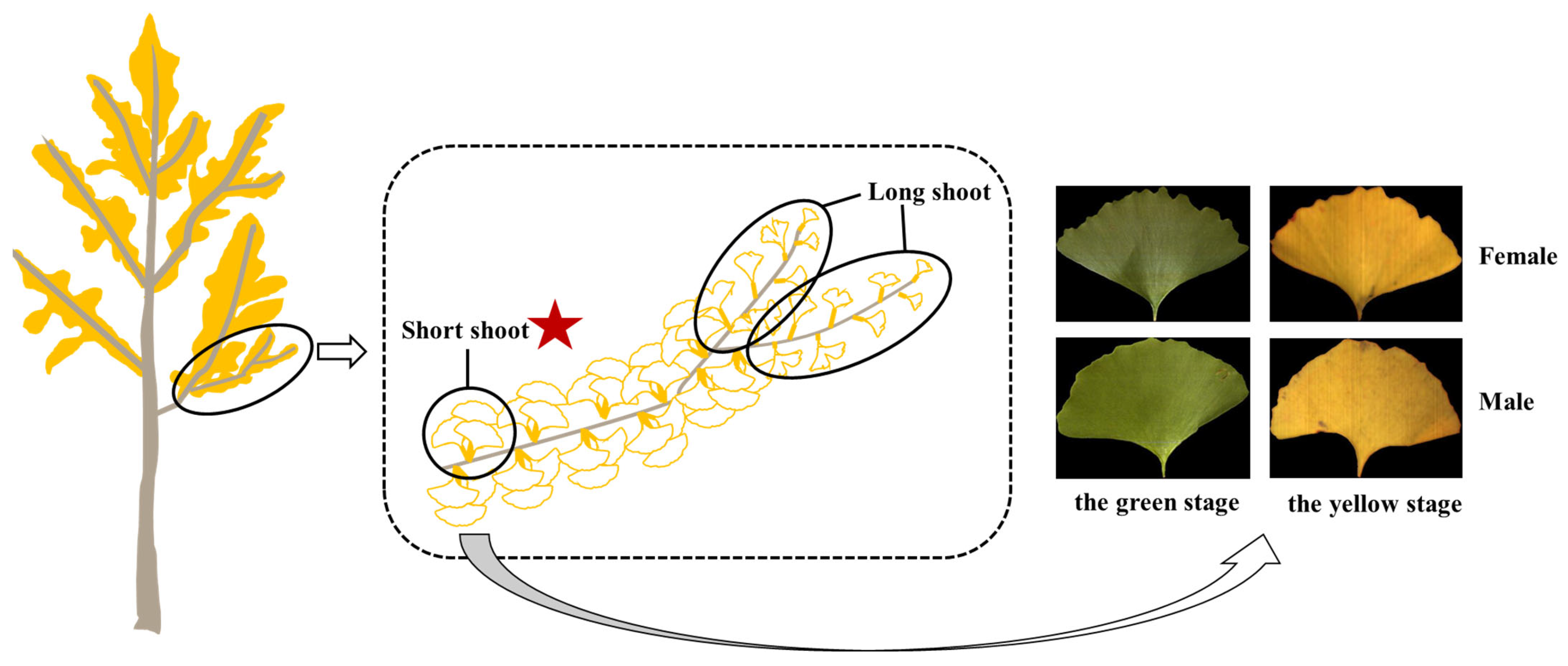

2.1. Data Acquisition

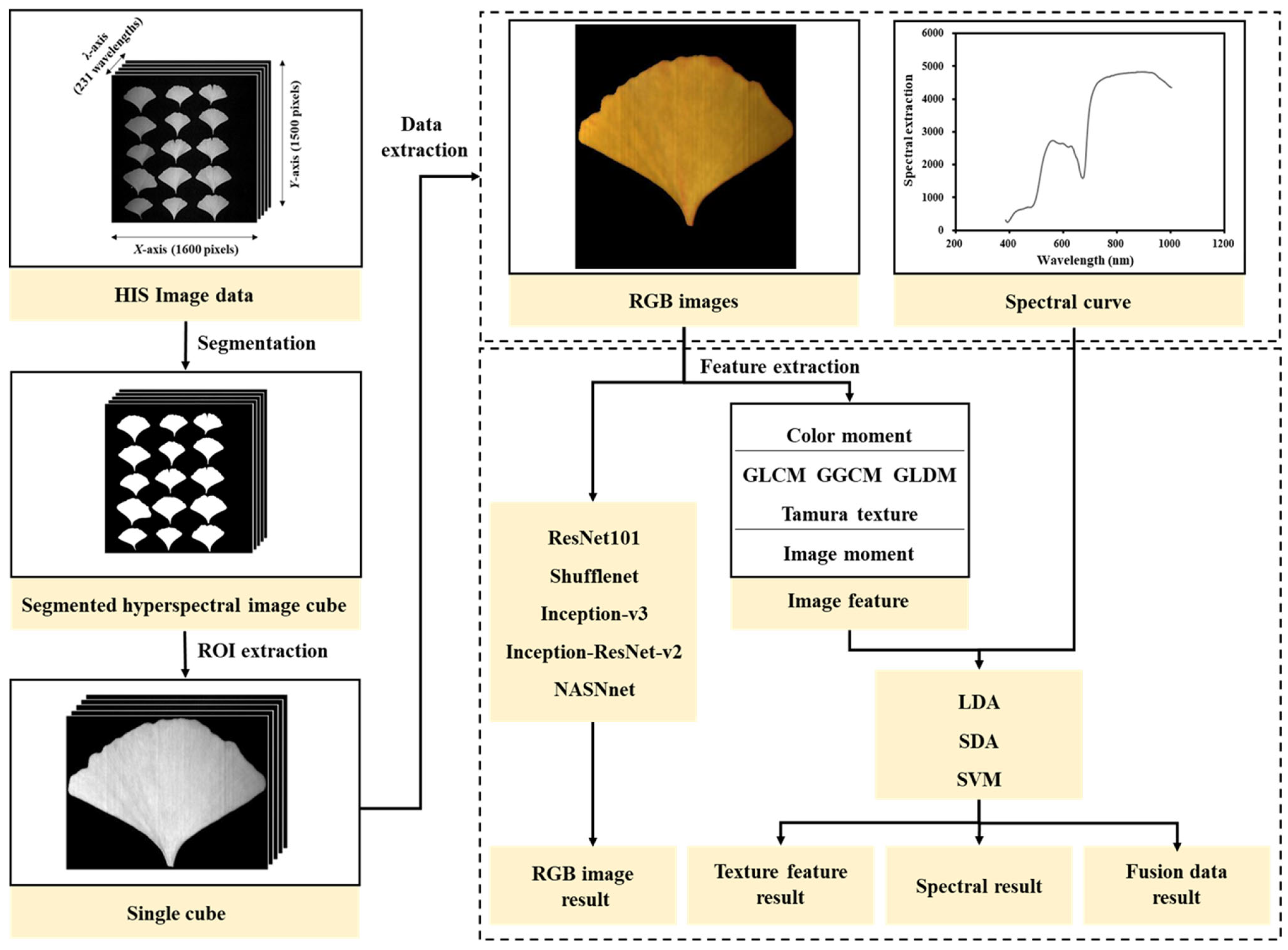

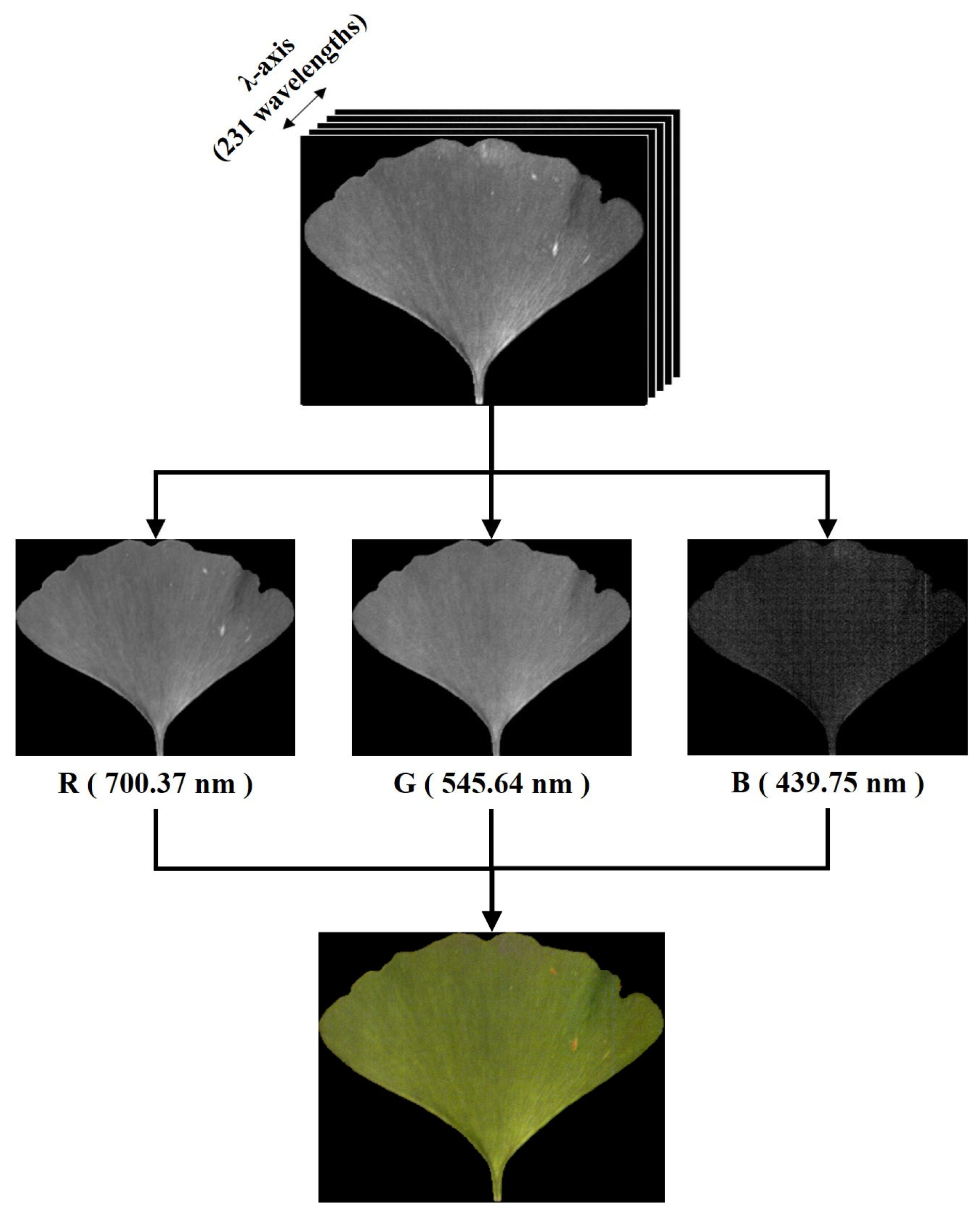

2.2. Sex Identification Method

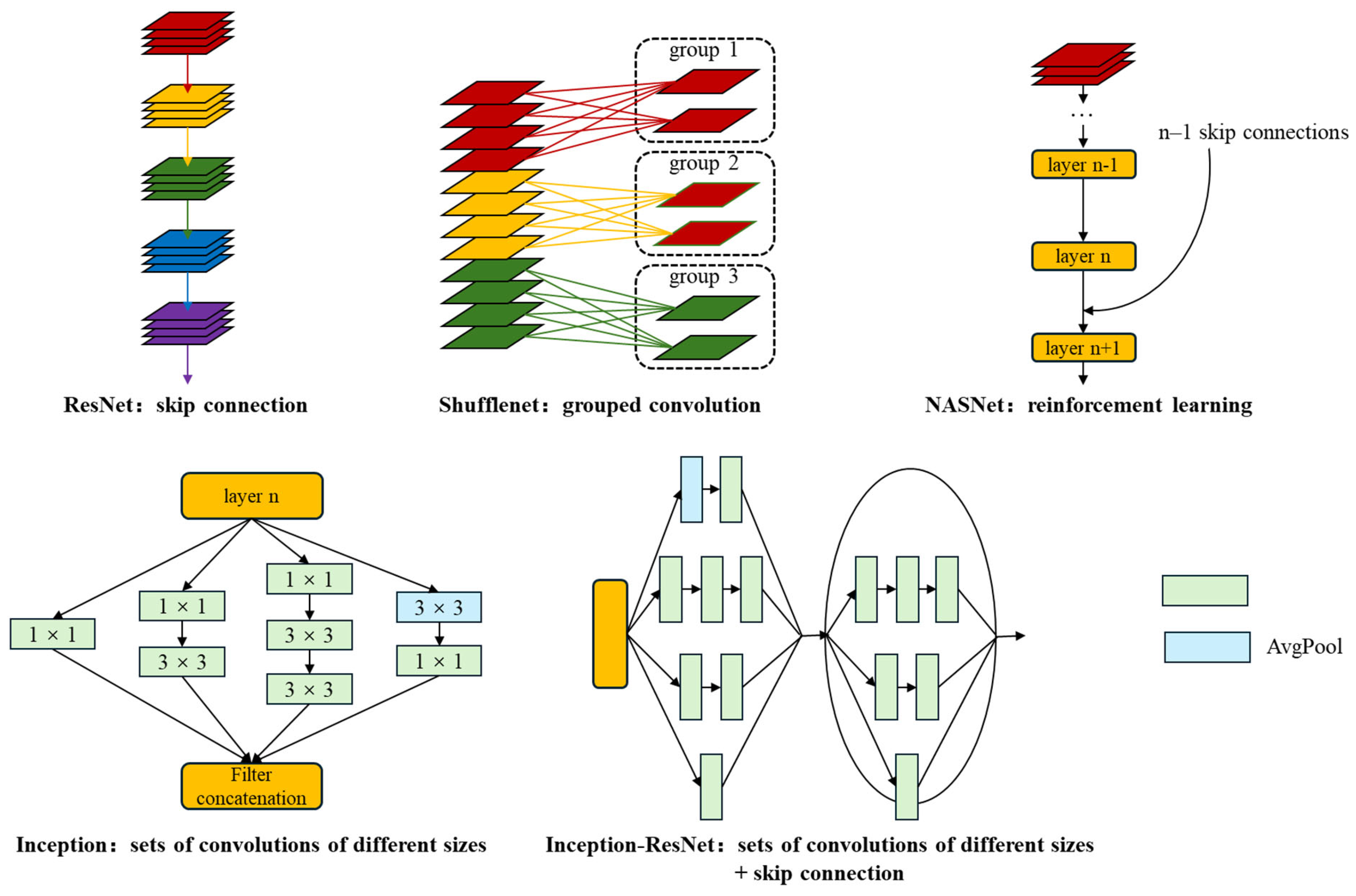

2.2.1. Deep Learning Model

2.2.2. One-Dimensional Data Modeling Methods

2.2.3. Image Feature Extraction

2.3. Data Training and Model Evaluation

2.3.1. Dataset Construction

2.3.2. Modeling Setup

2.3.3. Model Evaluation

3. Results

3.1. RGB Classification Results Based on Deep Learning

3.1.1. Model Selection

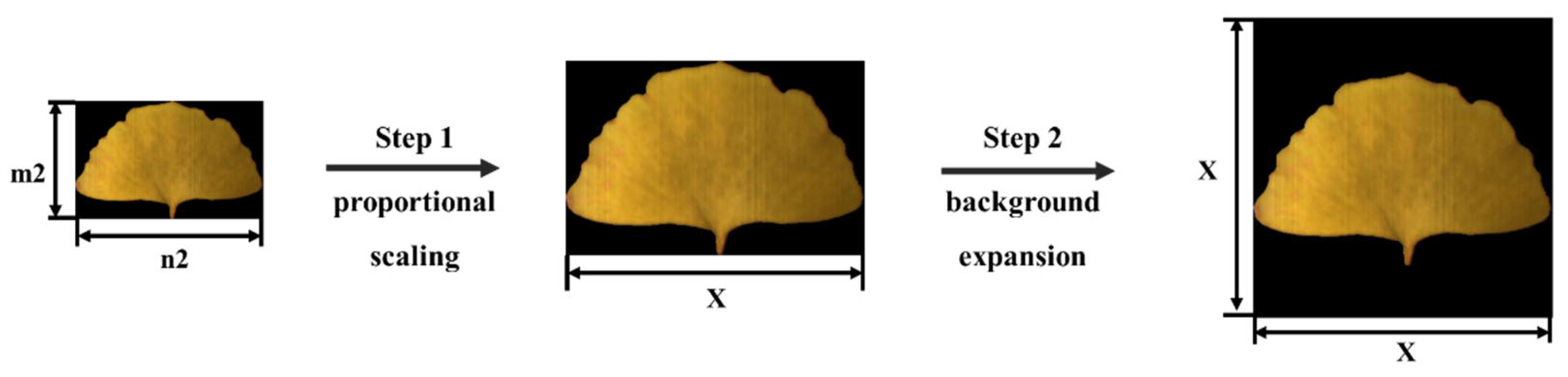

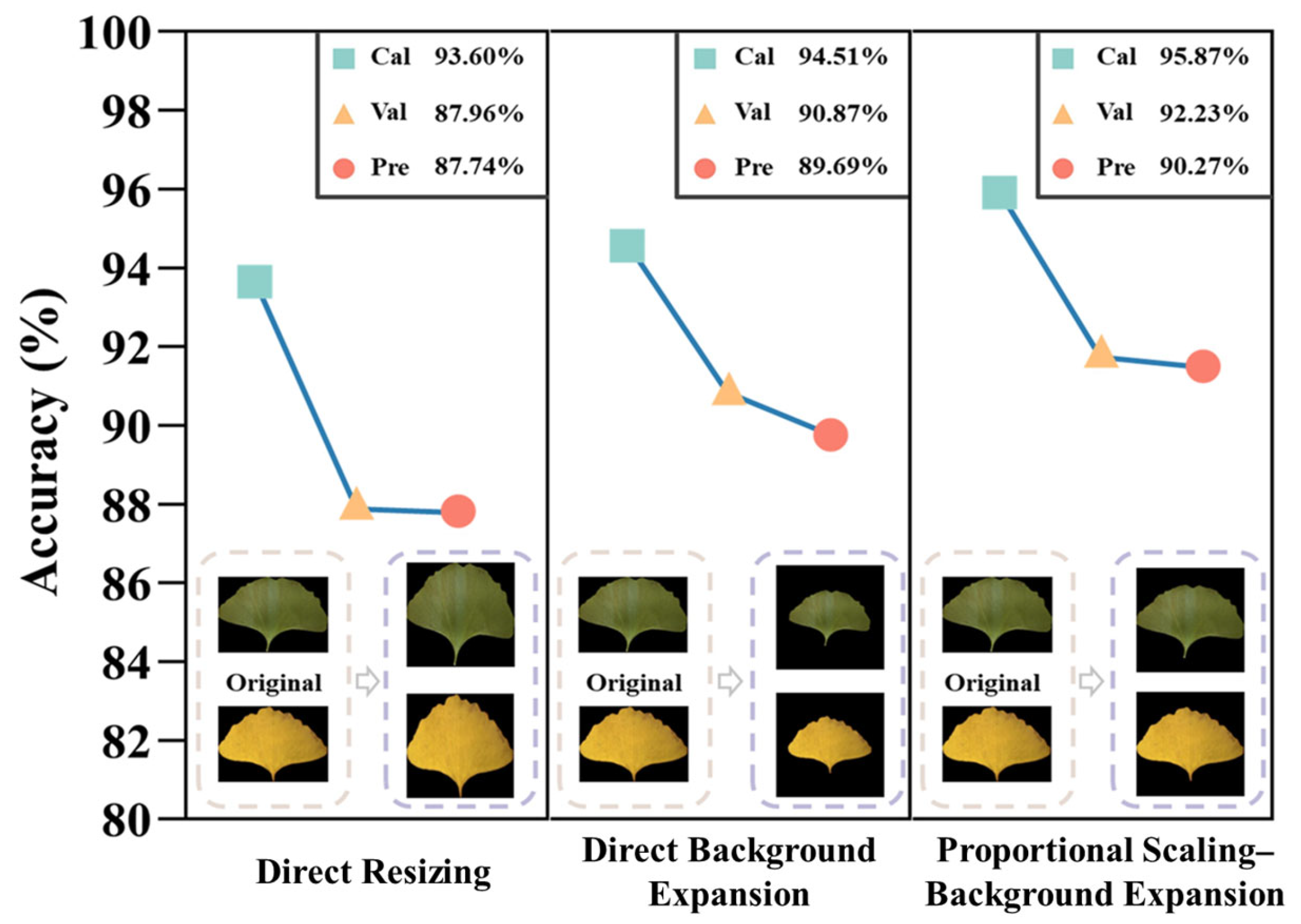

3.1.2. Background Expansion

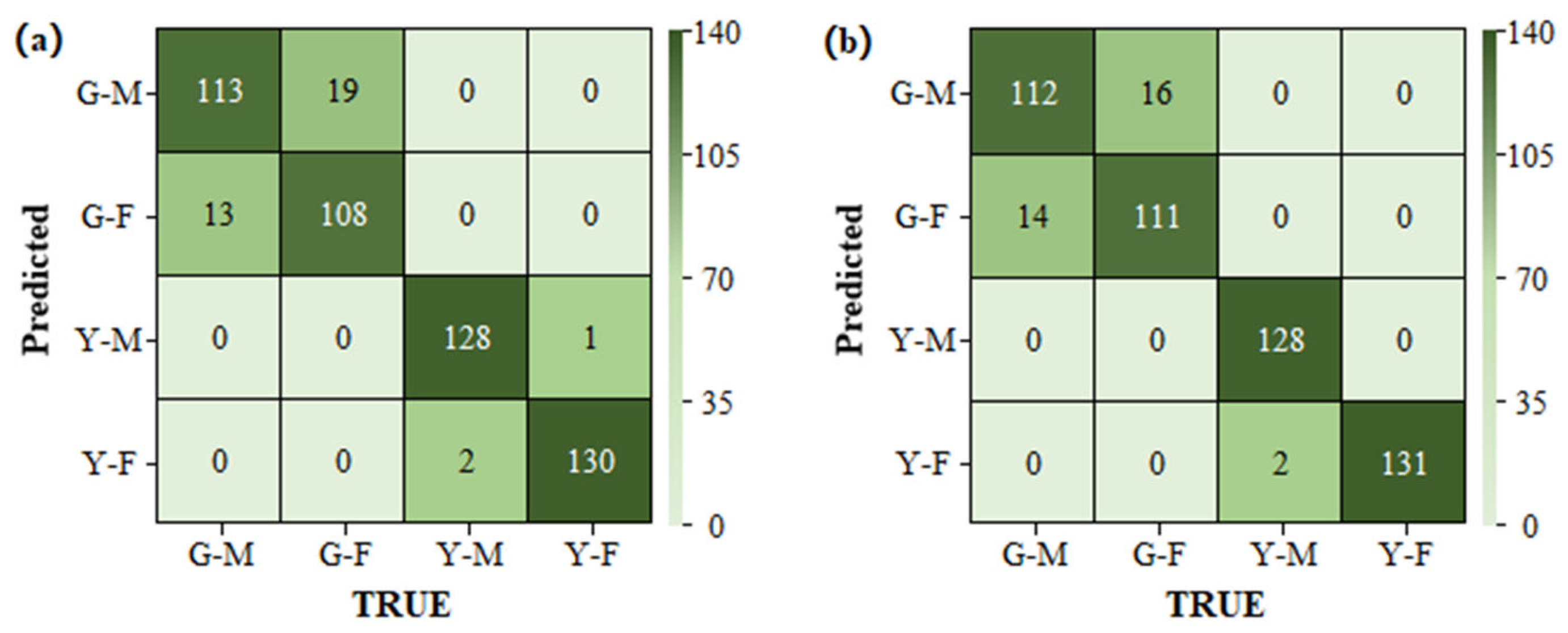

3.1.3. Verification Using Images Taken with Mobile Phones

3.2. Classification Results Based on Spectral Information

3.2.1. Spectral Feature

3.2.2. Effects of Leaf Stage

3.2.3. Model Results Based on the Two-Stage Period-Predetermined Method

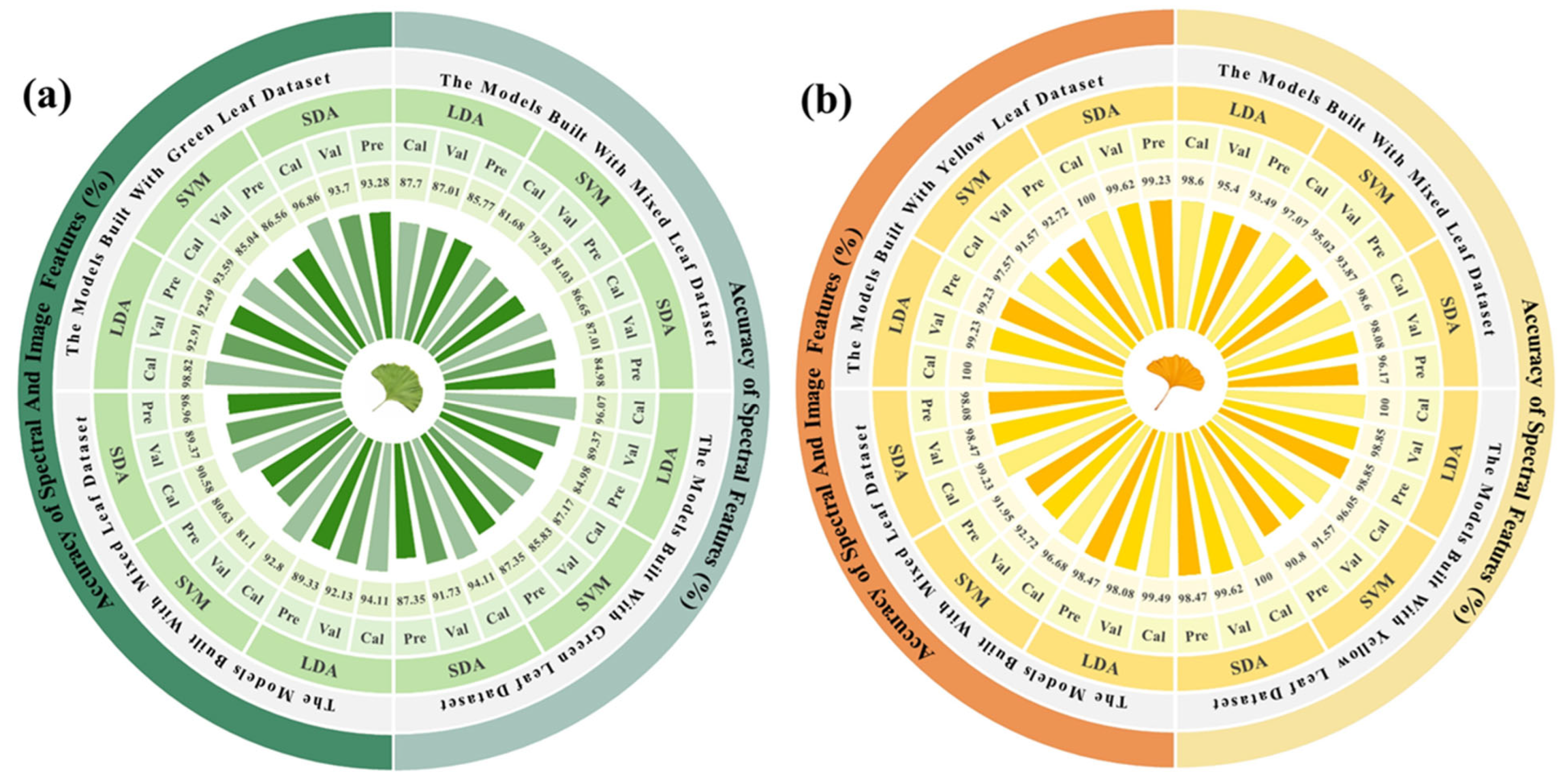

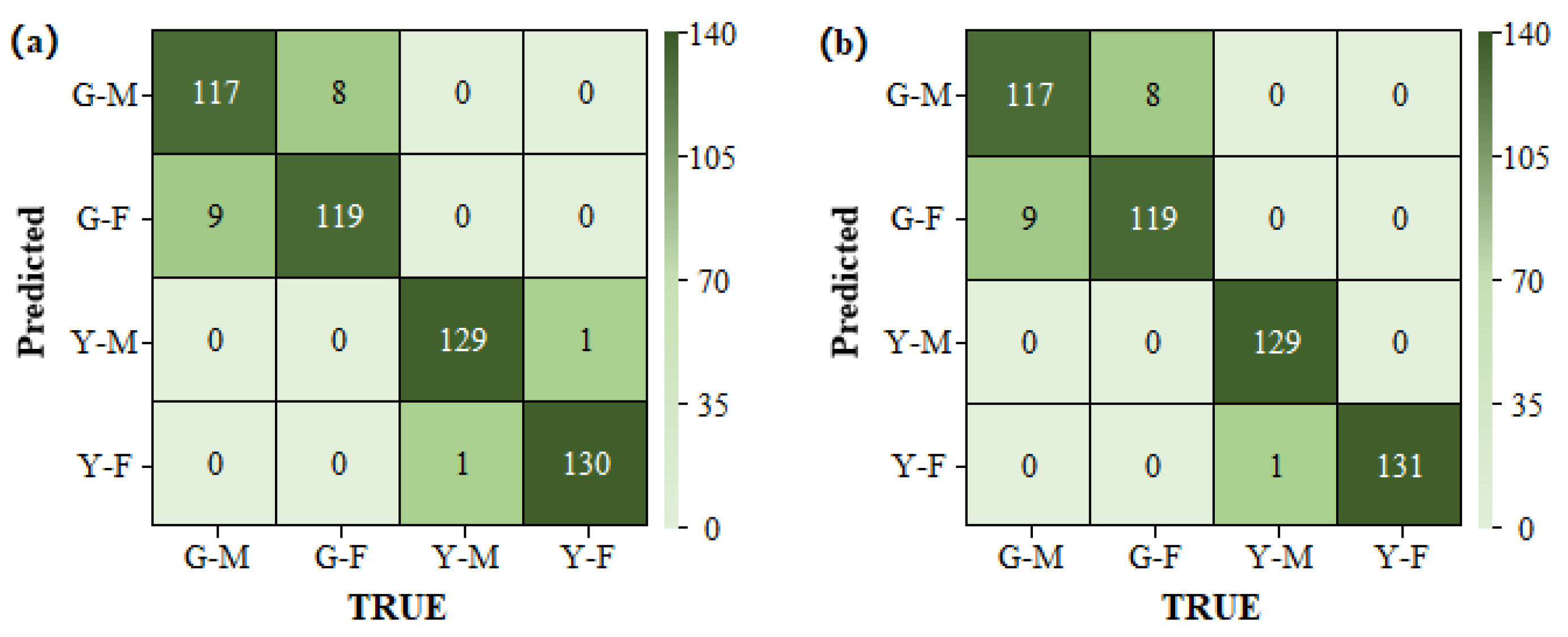

3.3. Classification Results Based on Fusion Information

3.3.1. Results Based on Image Information

3.3.2. Results Based on Fused Data

3.3.3. Model Results Based on the PP Method

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Lin, H.; Li, W.; Lin, C.; Wu, H.; Zhao, Y. International biological flora: Ginkgo biloba. J. Ecol. 2022, 110, 951–982.
2. Crane, P. An evolutionary and cultural biography of ginkgo. Plants People Planet 2019, 1, 32–37.
3. Chen, W.; Shen, H. Research on ginkgo cultivation technology. Mod. Agric. Res. 2016, 9, 58.
4. Hosoda, S.; Kawazoe, Y.; Shiba, T.; Numazawa, S.; Manabe, A. Anti-obesity effect of ginkgo vinegar, a fermented product of ginkgo seed coat, in mice fed a high-fat diet and 3T3-L1 preadipocyte cells. Nutrients 2020, 12, 230.
5. Lu, Y.; Wang, L.; Wang, D.; Wang, Y.; Zhang, M.; Jin, B.; Chen, P. Male cone morphogenesis, pollen development and pollen dispersal mechanism in Ginkgo biloba L. Can. J. Plant Sci. 2011, 91, 971–981.
6. Jin, B.; Wang, D.; Lu, Y.; Jiang, X.; Zhang, M.; Zhang, L.; Wang, L. Female short shoot and ovule development in Ginkgo biloba L. with emphasis on structures associated with wind pollination. Int. Scholarly Res. Not. 2012, 2, 230685.
7. Liu, H.; Zhang, C.; Meng, Y.; Zhang, F.; Huang, N.; Wang, J.; Li, Y. Hydraulic and Economical Traits in Short- and Long-Shoot Leaves of Ginkgo biloba Males and Females. Forests 2023, 14, 535.
8. Wu, Z.; Lin, C.; Wang, S.; Gong, Y.; Fu, Y.; Tang, J.; Boeck, H.; Vitasse, Y.; Zhao, Y. The sensitivity of ginkgo leaf unfolding to the temperature and photoperiod decreases with increasing elevation. Agric. For. Meteorol. 2022, 315, 108840.
9. Rasheid, N.; Sofi, P.; Masoodi, T. Phenodynamics of Ginkgo biloba L.-A Living Fossil under threat under temperate conditions of Kashmir Himalayas, India. Chem. Sci. Rev. Lett. 2018, 7, 469–473.
10. Huang, Q.; Liu, J.; Cao, M.; Yang, X. Advance of gender characteristics expression and identification of Ginkgo biloba. J. Fruit Sci. 2013, 30, 1065–1071.
11. Guo, Y.; Wang, M.; Gao, C.; Fu, F.; El-Kassaby, Y.; Wang, T.; Wang, G. Spatial prediction and delineation of Ginkgo biloba production areas under current and future climatic conditions. Ind. Crops Prod. 2021, 166, 113444.
12. Jiang, L.; You, R.; Li, M.; Shi, C. Identification of a Sex-Associated RAPD Marker in Ginkgo biloba. J. Integr. Plant Biol. 2003, 45, 742–747.
13. Hong, Y.; Lee, J. Development of SCAR marker for identifying male trees of Ginkgo biloba using multiplex PCR. J. Korean For. Soc. 2016, 105, 422–428.
14. Zhao, Y.; Gu, K.; Sun, Y.; Fan, G.; Zhang, R. A Molecular Marker, Primer Pair and Method for Sex Identification of Ginkgo biloba. China Patent ZL201911204191.2, 4 January 2020.
15. Fu, L.; Su, W.; Chen, F.; Zhao, S.; Zhang, H.; Karimi-Maleh, H.; Yu, A.; Yu, J.; Lin, C. Early sex determination of Ginkgo biloba based on the differences in the electrocatalytic performance of extracted peroxidase. Bioelectrochemistry 2021, 140, 107829.
16. Guo, Y.; Wang, M.; Gao, C.; Fu, F.; Wang, T.; El-Kassaby, Y.; Wang, G. Amino acid metabolism reprogramming in response to changing growth environment in Ginkgo biloba leaves. LWT 2021, 144, 111276.
17. Elakremi, M.; Sillero, L.; Ayed, L.; Mosbah, M.; Labidi, J.; Salem, R.; Moussaoui, Y. Pistacia vera L. leaves as a renewable source of bioactive compounds via microwave assisted extraction. Sustain. Chem. Pharm. 2022, 29, 100815.
18. Holman, A.; Goff, N.; Juárez, I.; Higgins, S.; Rodriguez, A.; Bagavathiannan, M.; Kurouski, D.; Subramanian, N. Elucidation of sex from mature palmer amaranth (Amaranthus palmeri) leaves using a portable raman spectrometer. RSC Adv. 2024, 14, 1833–1837.
19. Khan, A.; Al-Harrasi, A.; Numan, M.; AbdulKareem, N.; Mabood, F.; Al-Rawahi, A. Spectroscopic and molecular methods to differentiate gender in immature date palm (Phoenix dactylifera L.). Plants 2021, 10, 536.
20. Li, Q.; Hu, Y. Kinetic models of peroxidase activity in potato leaves infected with late blight based on hyperspectral data. Int. J. Agric. Biol. Eng. 2019, 12, 160–165.
21. Elsherbiny, O.; Fan, Y.; Zhou, L.; Qiu, Z. Fusion of feature selection methods and regression algorithms for predicting the canopy water content of rice based on hyperspectral data. Agriculture 2021, 11, 51.
22. Wang, T.; Gao, M.; Cao, C.; You, J.; Zhang, X.; Shen, L. Winter wheat chlorophyll content retrieval based on machine learning using in situ hyperspectral data. Comput. Electron. Agric. 2022, 193, 106728.
23. Liu, H.; Bruning, B.; Garnett, T.; Berger, B. Hyperspectral imaging and 3D technologies for plant phenotyping: From satellite to close-range sensing. Comput. Electron. Agric. 2020, 175, 105621.
24. Blanco, M.; Villarroya, I. NIR spectroscopy: A rapid-response analytical tool. TRAC-Trend Anal. Chem. 2002, 21, 240–250.
25. Cozzolino, D. Infrared Spectroscopy as a Versatile Analytical Tool for the Quantitative Determination of Antioxidants in Agricultural Products, Foods and Plants. Antioxidants 2015, 4, 482–497.
26. Jin, J.; Jiang, H.; Yu, S.; Zhou, G. Sex-linked photosynthetic physiologic research and the evolutionary ecological analysis in living fossil plant, Ginkgo biloba L. Acta Ecol. Sin. 2008, 28, 1128–1136.
27. Wang, D.; Zhang, Y.; Pan, Y.; Peng, B.; Liu, H.; Ma, R. An automated inspection method for the steel box girder bottom of long-span bridges based on deep learning. IEEE Access 2020, 8, 94010–94023.
28. Nawaz, M.; Nazir, T.; Javed, A.; Tariq, U.; Yong, H.; Khan, M.; Cha, J. An efficient deep learning approach to automatic glaucoma detection using optic disc and optic cup localization. Sensors 2022, 22, 434.
29. Rolfe, M.; Hayes, S.; Smith, M.; Owen, M.; Spruth, M.; McCarthy, C.; Forkan, A.; Banerjee, A.; Hocking, R. An AI based smart-phone system for asbestos identification. J. Hazard. Mater. 2024, 463, 132853.
30. Mehmood, M.; Alshammari, N.; Alanazi, S.; Basharat, A.; Ahmad, F.; Sajjad, M.; Junaid, K. Improved colorization and classification of intracranial tumor expanse in MRI images via hybrid scheme of Pix2Pix-cGANs and NASNet-large. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 4358–4374.
31. Qadri, A.; Raza, A.; Eid, F.; Abualigah, L. A novel transfer learning-based model for diagnosing malaria from parasitized and uninfected red blood cell images. Decis. Anal. J. 2023, 9, 100352.
32. Chen, Z.; Yang, J.; Chen, L.; Jiao, H. Garbage classification system based on improved ShuffleNet v2. Resour. Conserv. Recycl. 2022, 178, 106090.
33. Karthik, R.; Joshua, A.; Joel, K. Inception-based global context attention network for the classification of coffee leaf diseases. Ecol. Inform. 2023, 77, 102213.
34. Gao, J.; Westergaard, J.; Sundmark, E.; Bagge, M.; Liljeroth, E.; Alexandersson, E. Automatic late blight lesion recognition and severity quantification based on field imagery of diverse potato genotypes by deep learning. Knowl.-Based Syst. 2021, 214, 106723.
35. Hu, W.; Chen, L.; Huang, B.; Lin, H. A computer vision-based intelligent fish feeding system using deep learning techniques for aquaculture. IEEE Sensors J. 2022, 22, 7185–7194.
36. Choi, J.; Kim, H.; Moon, K. Geographical origin discriminant analysis of Chia seeds (Salvia hispanica L.) using hyperspectral imaging. J. Food Compos. Anal. 2021, 101, 103916.
37. Gadekar, H.; Bugalia, N. Automatic classification of construction safety reports using semi-supervised YAKE-Guided LDA approach. Adv. Eng. Inform. 2023, 56, 101929.
38. Gao, J.; Li, L. A robust geometric mean-based subspace discriminant analysis feature extraction approach for image set classification. Optik 2019, 199, 163368.
39. Liu, Y.; Huang, J.; Li, M.; Chen, Y.; Cui, Q.; Lu, C.; Wang, Y.; Li, L.; Xu, Z.; Zhong, Y.; et al. Rapid identification of the green tea geographical origin and processing month based on near-infrared hyperspectral imaging combined with chemometrics. Spectrochim. Acta A 2022, 267, 120537.
40. Sim, J.; Dixit, Y.; Mcgoverin, C.; Oey, I.; Frew, R.; Reis, M.; Kebede, B. Machine learning-driven hyperspectral imaging for non-destructive origin verification of green coffee beans across continents, countries, and regions. Food Control 2024, 156, 110159.
41. Xuan, G.; Gao, C.; Shao, Y. Spectral and image analysis of hyperspectral data for internal and external quality assessment of peach fruit. Spectrochim. Acta A 2022, 272, 121016.
42. Gu, K.; Lin, C.; Wu, J.; Zhao, Y. GinkgoDB: An ecological genome database for the living fossil, Ginkgo biloba. Database 2022, 2022, baac046.
43. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
44. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. Shufflenet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.
45. Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q. Learning transferable architectures for scalable image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8697–8710.
46. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.
47. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the AAAI Conference on Artificial Intelligence, AAAI 2017, San Francisco, CA, USA, 4–9 February 2017; pp. 4278–4284.
48. Belhumeur, P.; Hespanha, J.; Kriegman, D. Eigenfaces vs. fisherfaces: Recognition using class specific linear projection. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 711–720.
49. Platt, J. Sequential Minimal Optimization: A Fast Algorithm for Training Support Vector Machines; Technical Report MSR-TR-98-14; Microsoft Research: Redmond, WA, USA, 1998.
50. Flury, L.; Boukai, B.; Flury, B. The discrimination subspace model. J. Am. Stat. Assoc. 1997, 92, 758–766.
51. Mohanaiah, P.; Sathyanarayana, P.; Gurukumar, L. Image texture feature extraction using GLCM approach. Int. J. Sci. Res. Publ. 2013, 3, 1–5.
52. Ubul, K.; Yadikar, N.; Amat, A.; Aysa, A.; Yibulayin, T. Uyghur document image retrieval based on gray gradient co-occurrence matrix. In Proceedings of the Chinese Automation Congress, Wuhan, China, 27–29 November 2015; pp. 762–766.
53. Conners, R.; Harlow, C. A theoretical comparison of texture algorithms. IEEE Trans. Pattern Anal. Mach. Intell. 1980, 3, 204–222.
54. Tamura, H.; Mori, S.; Yamawaki, T. Textural features corresponding to visual perception. IEEE Trans. Syst. Man Cybern. 1978, 8, 460–473.
55. Hu, M. Visual pattern recognition by moment invariants. IEEE Trans. Inf. Theory 1962, 8, 179–187.
56. Penrose, L. The elementary statistics of majority voting. J. R. Stat. Soc. 1946, 109, 53–57.
57. Lee, J.; Woo, J.; Shin, W.; Park, Y.; Kim, H.; Ahn, J.; Kim, Y. Distinction of Male and Female Trees of Ginkgo biloba Using LAMP. Mol. Biotechnol. 2023, 65, 1693–1703.

| Feature Type | Specific Feature | Number of Features | Details |
|---|---|---|---|
| Color features | Color moment | 6 | The mean values and standard deviations of the red, green, and blue channels. |
| Texture features | GLCM | 16 | Contrast, correlation, energy, and homogeneity at 0°, 45°, 90°, and 135°. |
| Texture features | GGCM | 15 | Small-gradient dominance, large-gradient dominance, uniformity of gray distribution, uneven gradient distribution, energy, gray mean, gray mean square error of gradient, correlation, gray entropy, gradient entropy, mixing entropy, inertia, and deficit moment. |
| Texture features | GLDM | 4 | The mean value, contrast, directional second moment, and entropy. |
| Texture features | Tamura texture | 5 | Coarseness, contrast, directionality, linearity, and roughness. |
| Shape features | Image moment | 7 | Geometric moments proposed by Hu [55]. |
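As a rough illustration of two rows in the feature table above, the following sketch computes the 6 color moments (per-channel mean and standard deviation) and the 16 GLCM features (contrast, correlation, energy, and homogeneity at 0°, 45°, 90°, and 135°). It is not the authors' implementation: the function names, the unit pixel distance, the non-symmetric co-occurrence counts, and the specific formulas (the commonly used definitions of the four GLCM statistics) are all assumptions made for this example, and the gray image is assumed to be already quantized to integer levels.

```python
from statistics import fmean, pstdev

# Offsets (dx, dy) for 0°, 45°, 90°, and 135°, with image rows growing downward.
# A distance of one pixel is an assumption; the table does not state it.
OFFSETS = {0: (1, 0), 45: (1, -1), 90: (0, -1), 135: (-1, -1)}


def color_moments(pixels):
    """Return [mean_R, mean_G, mean_B, std_R, std_G, std_B] for (R, G, B) tuples."""
    channels = list(zip(*pixels))  # three tuples: all R, all G, all B values
    return [fmean(c) for c in channels] + [pstdev(c) for c in channels]


def glcm(gray, dx, dy, levels):
    """Normalized (non-symmetric) gray-level co-occurrence matrix for one offset."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(gray), len(gray[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[gray[r][c]][gray[r2][c2]] += 1
    total = sum(map(sum, counts))
    return [[n / total for n in row] for row in counts]


def glcm_features(p):
    """Return [contrast, correlation, energy, homogeneity] of a normalized GLCM."""
    idx = range(len(p))
    cells = [(i, j, p[i][j]) for i in idx for j in idx]
    mu_i = sum(i * v for i, _, v in cells)
    mu_j = sum(j * v for _, j, v in cells)
    var_i = sum((i - mu_i) ** 2 * v for i, _, v in cells)
    var_j = sum((j - mu_j) ** 2 * v for _, j, v in cells)
    contrast = sum((i - j) ** 2 * v for i, j, v in cells)
    energy = sum(v * v for _, _, v in cells)
    homogeneity = sum(v / (1 + abs(i - j)) for i, j, v in cells)
    cov = sum((i - mu_i) * (j - mu_j) * v for i, j, v in cells)
    denom = (var_i * var_j) ** 0.5
    # Correlation is undefined when either marginal has zero variance.
    correlation = cov / denom if denom > 0 else float("nan")
    return [contrast, correlation, energy, homogeneity]


def texture_vector(gray, levels):
    """Concatenate the four GLCM statistics over the four angles (16 values)."""
    feats = []
    for angle in (0, 45, 90, 135):
        dx, dy = OFFSETS[angle]
        feats += glcm_features(glcm(gray, dx, dy, levels))
    return feats
```

Calling `color_moments` on the leaf pixels and `texture_vector` on the quantized gray image yields 6 + 16 values per image, matching the counts in the table; the GGCM, GLDM, Tamura, and Hu moment features would be appended in the same way to build the full image feature vector.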

| Model | Calibration Set Accuracy (%) | Validation Set Accuracy (%) | Prediction Set Accuracy (%) | Inference Speed (Files/s) | Parameter Quantity (M) |
|---|---|---|---|---|---|
| ResNet101 | 93.60 | 87.96 | 87.74 | 35.18 | 44.6 |
| NASNet | 92.64 | 87.57 | 87.55 | 9.68 | 5.3 |
| Inception-ResNet-v2 | 93.22 | 86.21 | 87.16 | 55.29 | 55.9 |
| Inception-v3 | 90.57 | 83.69 | 86.58 | 24.19 | 23.9 |
| ShuffleNet | 90.89 | 84.66 | 85.41 | 65.50 | 1.4 |

| Size of Calibration Set (Images) | Initial Parameter | Calibration Set Accuracy (%) | Validation Set Accuracy (%) | Prediction Set Accuracy (%) |
|---|---|---|---|---|
| 800 | ImageNet | 88.50 | 86.00 | 84.60 |
| 800 | Ours | 95.25 | 89.00 | 88.20 |
| 600 | ImageNet | 88.17 | 82.00 | 80.80 |
| 600 | Ours | 93.83 | 90.00 | 87.20 |
| 400 | ImageNet | 88.75 | 82.00 | 79.00 |
| 400 | Ours | 92.00 | 88.00 | 86.20 |
| 200 | ImageNet | 91.50 | 78.00 | 77.10 |
| 200 | Ours | 91.50 | 82.00 | 85.80 |

| Period | Data | Method | Calibration Set Accuracy (%) | Validation Set Accuracy (%) | Prediction Set Accuracy (%) |
|---|---|---|---|---|---|
| G | d | LDA | 87.70 | 87.01 | 85.77 |
| G | d | SVM | 81.68 | 79.92 | 81.03 |
| G | d | SDA | 86.65 | 87.01 | 84.98 |
| G | s | LDA | 96.07 | 89.37 | 84.98 |
| G | s | SVM | 87.17 | 85.83 | 87.35 |
| G | s | SDA | 94.11 | 91.73 | 87.35 |
| Y | d | LDA | 98.60 | 95.40 | 93.49 |
| Y | d | SVM | 97.07 | 95.02 | 93.87 |
| Y | d | SDA | 98.60 | 98.08 | 96.17 |
| Y | s | LDA | 100.00 | 98.85 | 98.85 |
| Y | s | SVM | 96.05 | 91.57 | 90.80 |
| Y | s | SDA | 100.00 | 99.62 | 98.47 |

| Method | Calibration Set Accuracy (%) | Validation Set Accuracy (%) | Prediction Set Accuracy (%) |
|---|---|---|---|
| LDA | 84.04 | 83.07 | 80.58 |
| SVM | 93.22 | 84.24 | 83.69 |
| SDA | 91.28 | 82.65 | 81.52 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, M.; Lin, C.; Sun, Y.; Yang, R.; Lu, X.; Lou, W.; Deng, X.; Zhao, Y.; Liu, F. Ginkgo biloba Sex Identification Methods Using Hyperspectral Imaging and Machine Learning. Plants 2024, 13, 1501. https://doi.org/10.3390/plants13111501
