A Mini-Review of Recent Developments in Plenoptic Background-Oriented Schlieren Technology for Flow Dynamics Measurement

Abstract

1. Introduction

2. Fundamentals

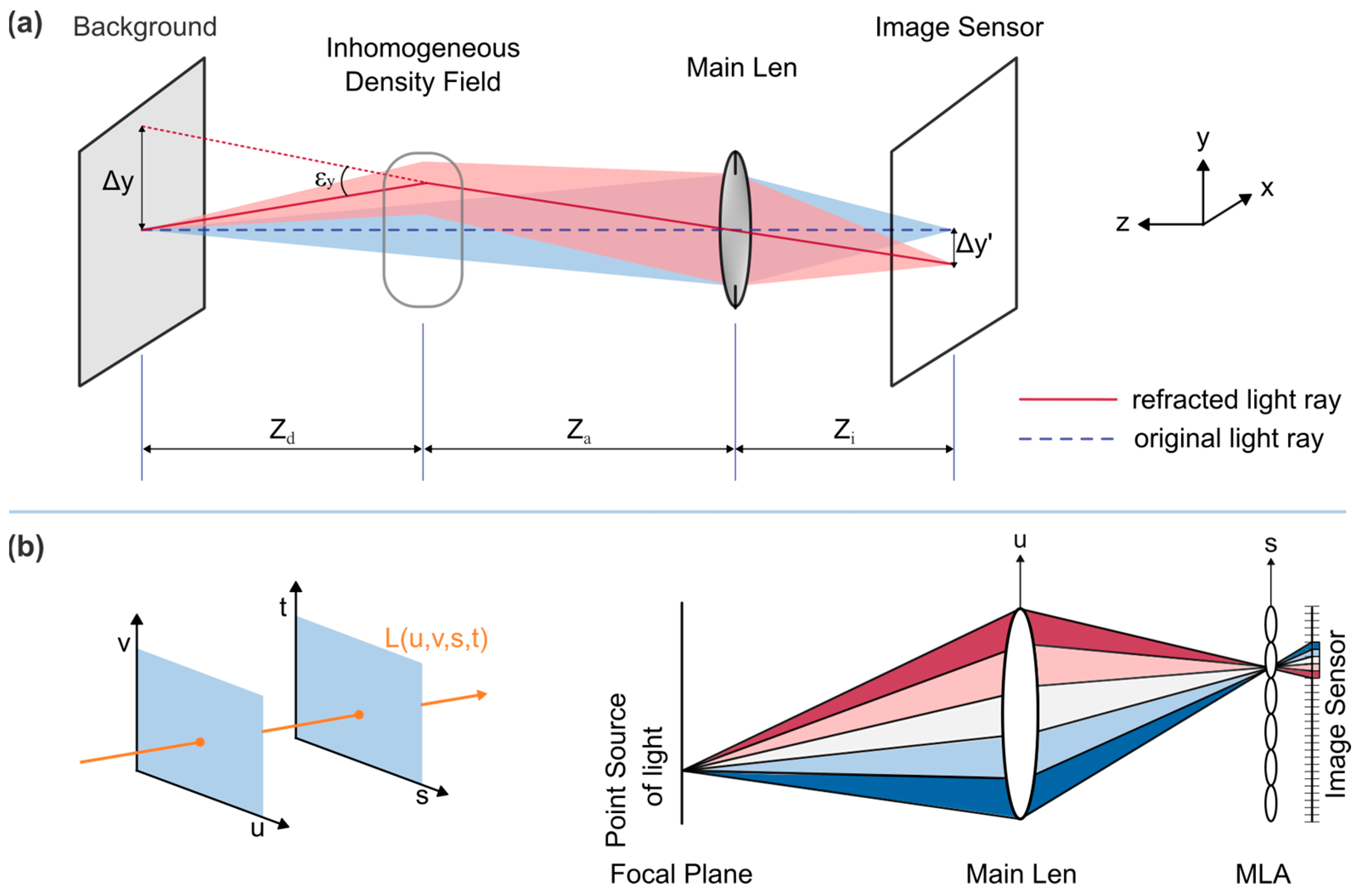

2.1. Background-Oriented Schlieren

2.2. Light-Field Imaging and the Plenoptic Camera

2.3. Plenoptic Background-Oriented Schlieren

3. Plenoptic BOS System

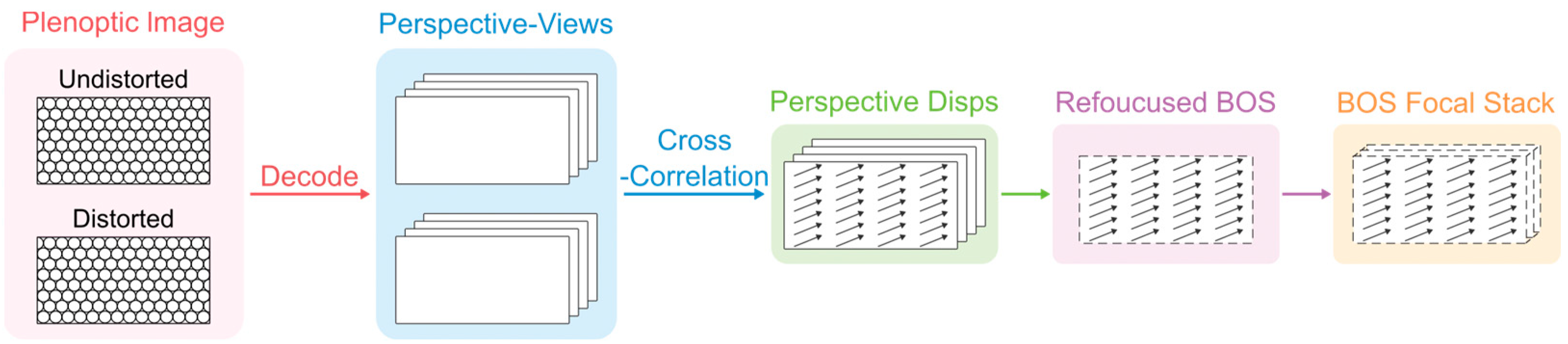

3.1. Single-View Plenoptic BOS Imaging

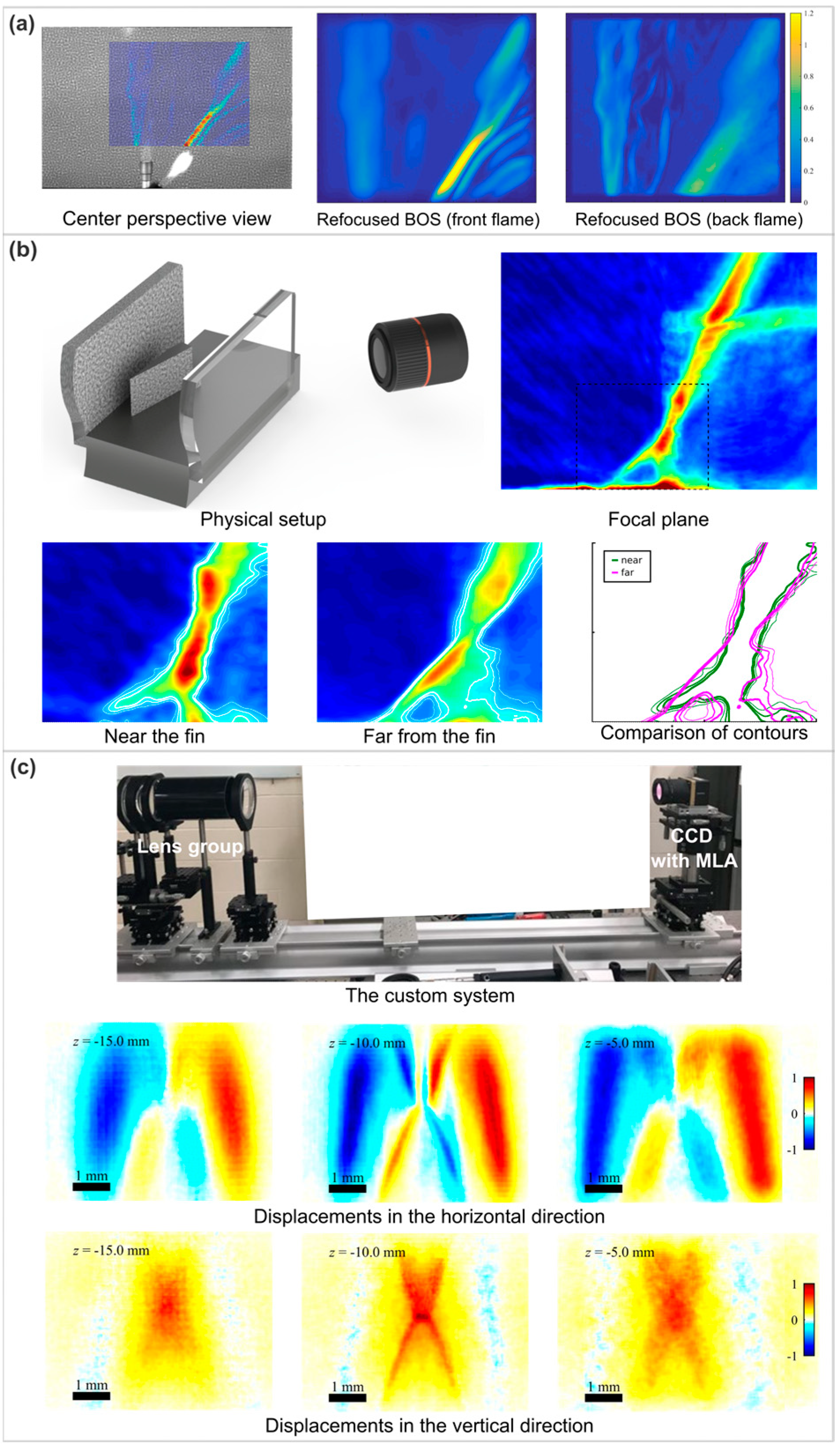

3.1.1. Imaging Process

3.1.2. Applications

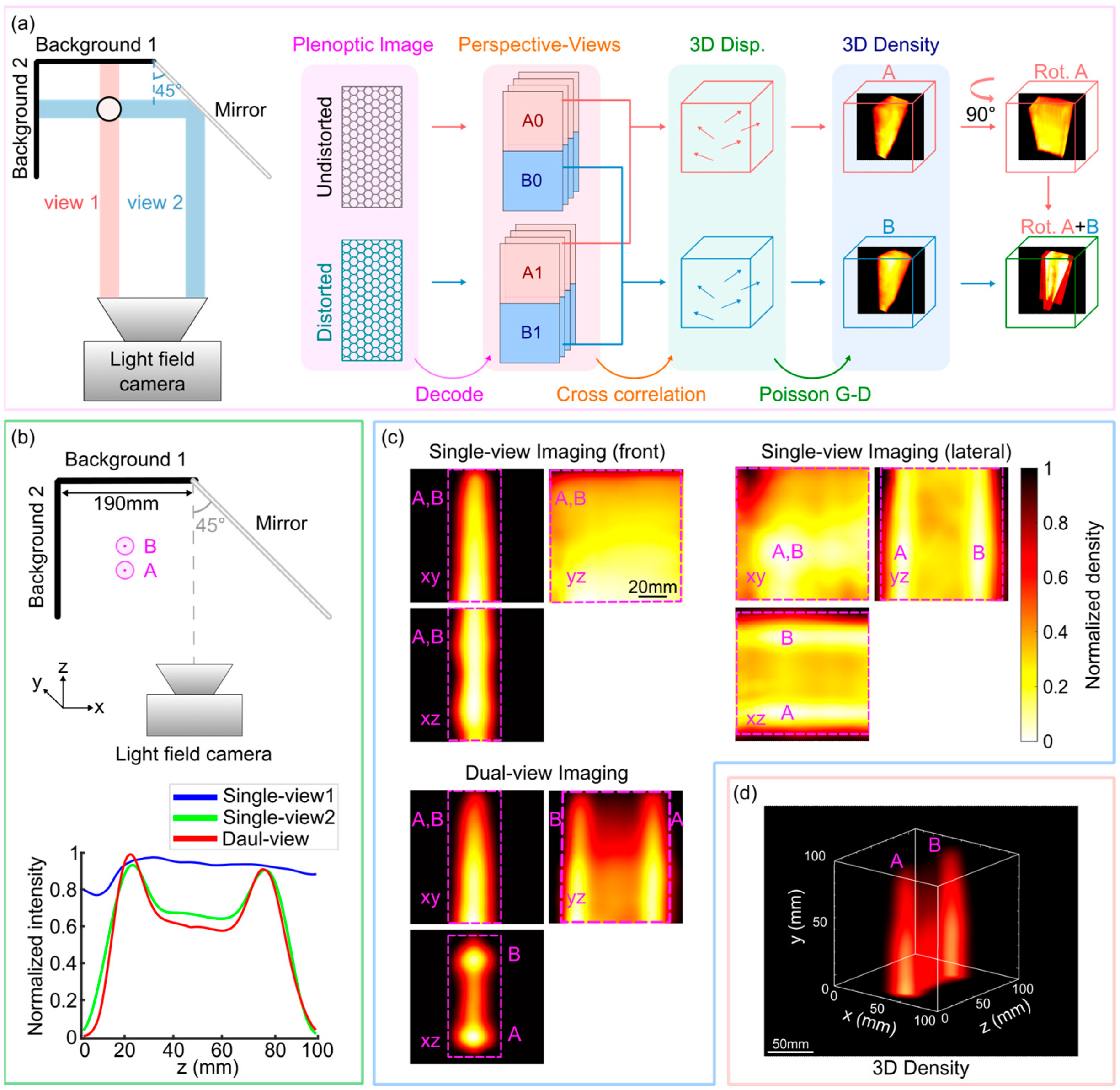

3.2. Multi-View Plenoptic BOS Imaging

3.2.1. Tomographic Plenoptic BOS

3.2.2. Mirror-Enhanced Isotropic-Resolution Dual-View Plenoptic BOS

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Nomenclature

| Symbol | Description |
|---|---|
| BOS | Background-oriented schlieren |
| DOF | Depth of field |
| MLA | Microlens array |
| PIV | Particle image velocimetry |
| LFIT | Light-field imaging toolkit |
| SBLI | Shock/boundary layer interaction |
| SART | Simultaneous algebraic reconstruction technique |
| FOV | Field of view |
| ISO | Isotropic |
| 2D | Two-dimensional |
| 3D | Three-dimensional |
| 4D | Four-dimensional |
| ε | Angular ray deflection |
| Za | Distance from schlieren object plane to the main lens |
| Zd | Distance from schlieren object plane to background plane |
| Δy’ | Magnitude of vector displacement in the imaging sensor plane |
| fm | Focal length of the main lens |
| fμ | Focal length of the microlenses |
| n | Refractive index |
| ρ | Density |
| K | Constant related to the experimental configuration |
| G | Gladstone–Dale constant |
| da | Diameter of the main lens aperture |
| lo | Object distance to nominal focal plane |
| li | Image distance to nominal film plane |
| co | Circle of confusion in object space |
| znear | Near depth-of-field limit |
| zfar | Far depth-of-field limit |
| deff | Effective aperture diameter used to render a perspective view |
| pp | Pixel pitch |
| psig | Pounds per square inch (gauge) |
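As a rough illustration of how several of the symbols above relate, the following sketch evaluates the Gladstone–Dale relation, n − 1 = Gρ, and the conventional thin-lens BOS estimate of the image shift, Δy’ ≈ M·Zd·ε with M = fm / (Za + Zd − fm). These are the standard relations for a conventional camera focused on the background, not formulas quoted from this section, and they ignore the microlens array of a plenoptic camera. The function names, the value of G for air and the example geometry are assumptions chosen only for illustration.

```python
import math

# Assumed typical Gladstone-Dale constant for air in visible light, in m^3/kg.
G_AIR = 2.26e-4


def refractive_index(rho, G=G_AIR):
    """Gladstone-Dale relation: n - 1 = G * rho (rho in kg/m^3)."""
    return 1.0 + G * rho


def sensor_displacement(eps, Z_a, Z_d, f_m):
    """Thin-lens estimate of the image shift (same length unit as the inputs).

    eps : angular ray deflection in radians (small-angle assumption)
    Z_a : distance from the schlieren object plane to the main lens
    Z_d : distance from the schlieren object plane to the background plane
    f_m : focal length of the main lens
    Assumes the camera is focused on the background plane.
    """
    # Magnification of the background plane, located at Z_a + Z_d from the lens.
    M = f_m / (Z_a + Z_d - f_m)
    return M * Z_d * eps


def displacement_in_pixels(dy, pixel_pitch):
    """Convert a sensor-plane displacement to pixels using the pixel pitch."""
    return dy / pixel_pitch


if __name__ == "__main__":
    # Hypothetical set-up: 0.5 m on each side of the flow, 100 mm lens, 5 um pixels.
    n_air = refractive_index(1.225)
    dy = sensor_displacement(eps=1e-4, Z_a=0.5, Z_d=0.5, f_m=0.1)
    print(f"n = {n_air:.6f}")
    print(f"shift = {dy * 1e6:.2f} um = {displacement_in_pixels(dy, 5e-6):.2f} px")
```

In this approximation the shift grows linearly with Zd, which is why moving the background farther from the flow increases sensitivity, at the cost of defocusing the flow itself when the camera stays focused on the background.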



© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Liu, Y.; Xing, F.; Su, L.; Tan, H.; Wang, D. A Mini-Review of Recent Developments in Plenoptic Background-Oriented Schlieren Technology for Flow Dynamics Measurement. Aerospace 2024, 11, 303. https://doi.org/10.3390/aerospace11040303
