1. Introduction

Research on subgradient methods for minimizing a convex, but not necessarily differentiable, function began with the works [1,2], the results of which can be found in [3]. There are several directions for constructing non-smooth optimization methods. One of them [4,5,6] is based on the construction and use of function approximations. A number of effective approaches in non-smooth optimization are associated with a change in the space metric as a result of space dilation operations [7,8]. Distance-to-extremum relaxation methods for minimization were first proposed in [9] and developed in [10]. The first relaxation-by-function methods were proposed in [11,12,13].

The need for methods for solving complex non-smooth high-dimensional minimization problems is constantly growing. In the case of smooth functions, the conjugate gradient method (CGM) [3] is one of the universal methods for solving ill-conditioned high-dimensional problems. The CGM is a multi-step method that is optimal in terms of the convergence rate on quadratic functions [3,14].

The CGM generates search directions that are more consistent with the geometry of the minimized function. In practice, the CGM shows faster convergence rates than gradient descent algorithms, so the CGM is widely used in machine learning. The original CGM, known as the Hestenes–Stiefel method [15], was introduced in 1952 for solving linear systems. There are several modifications of the Hestenes–Stiefel method, such as the Fletcher–Reeves method [16], the Polak–Ribiere method [17], and the Dai–Yuan method [18], which mainly differ in the way the conjugate gradient update parameter is calculated.

Fletcher and Reeves justified the convergence of the CGM for quadratic functions and generalized it to the case of non-quadratic functions. The Polak–Ribiere method is based on an exact line search and on a more general assumption about the approximation of the objective function. At each iteration of the Polak–Ribiere or Fletcher–Reeves methods, the function and its gradient are calculated once, and a one-dimensional optimization problem is solved. Thus, the complexity of one step of the CGM is of the same order as the complexity of a step of the steepest descent method. It was proven in [19] that the Polak–Ribiere method is also characterized by a linear convergence rate in the absence of restarts, but it has an advantage over the Fletcher–Reeves method in solving problems with general objective functions and is less sensitive to rounding errors in the one-dimensional search. The Dai–Yuan algorithm converges globally, provided that the line search satisfies the standard Wolfe conditions.
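The variants named above differ only in the formula for the update parameter. The sketch below collects the textbook forms of four of these formulas; the function names `cg_beta` and `next_direction` are illustrative and do not come from the cited works.

```python
import numpy as np

def cg_beta(g_new, g_old, d_old, rule="FR"):
    """Conjugate gradient update parameter (textbook formulas).

    g_new, g_old: gradients at the new and previous points.
    d_old: previous search direction.
    """
    y = g_new - g_old  # gradient difference
    if rule == "FR":   # Fletcher-Reeves
        return (g_new @ g_new) / (g_old @ g_old)
    if rule == "PR":   # Polak-Ribiere
        return (g_new @ y) / (g_old @ g_old)
    if rule == "HS":   # Hestenes-Stiefel
        return (g_new @ y) / (d_old @ y)
    if rule == "DY":   # Dai-Yuan
        return (g_new @ g_new) / (d_old @ y)
    raise ValueError(f"unknown rule: {rule}")

def next_direction(g_new, g_old, d_old, rule="FR"):
    """New search direction d = -g_new + beta * d_old."""
    return -g_new + cg_beta(g_new, g_old, d_old, rule) * d_old

# When the new gradient is orthogonal to the old one and d_old = -g_old
# (first step on a quadratic with exact line search), all four rules agree.
g_old = np.array([1.0, 0.0])
g_new = np.array([0.0, 2.0])
betas = {r: cg_beta(g_new, g_old, -g_old, r) for r in ("FR", "PR", "HS", "DY")}
```

On general non-quadratic functions or with inexact line searches, the four values differ, which is where the practical differences between the methods come from.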

Miele and Cantrell [20] generalized the approach of Fletcher and Reeves by proposing a gradient method with memory. The method is based on the use of two selectable minimization parameters in each of the search directions. This method is efficient in terms of the number of iterations required to solve the problem, but it requires more computations of the function values and gradient components than the Fletcher–Reeves method. The idea of the memory gradient method was further extended to the multi-dimensional search methods that are used mostly for unconstrained optimization in large-scale problems [21,22,23,24,25,26].

In [27], the improved Fletcher–Reeves (IFR) and Dai–Yuan methods were combined with the second inequality of the strong Wolfe line search to construct two new conjugate parameters. For the online CGM, Xue et al. [28] combined the IFR method with the variance reduction approach [29]. This algorithm achieves a linear convergence rate under the strong Wolfe line search for a smooth and strongly convex objective function.

Dai and Liao [30] introduced a CGM based on a modified conjugate gradient update parameter. Modifications of this method were later presented in [31,32,33,34].

In [35], an improved CG algorithm with a generalized Armijo search technique was proposed. A modified Fletcher–Reeves CGM for monotone nonlinear equations was described in [36]. In [37], the nonlinear CGM was treated as an adaptive momentum method combined with the steepest descent along the search direction. In [38], the author used an estimate of the Hessian to approximate the optimal step size. The paper in [39] proposed a CGM on Riemannian manifolds. CG algorithms for stochastic optimization were introduced in [40,41,42]. Algorithms of this type use a small part of the samples in large-scale learning problems.

Preconditioning is another technique to speed up the convergence of CG descent. The idea of preconditioning is to make a change of variables using an invertible matrix. The authors in [43] proposed a non-monotone scaled CG algorithm for solving large-scale unconstrained optimization problems, which combines the idea of a scaled memoryless Broyden–Fletcher–Goldfarb–Shanno (BFGS) method with the non-monotone technique. An inexact preconditioned CGM with an inner–outer iteration for a symmetric positive definite system was proposed in [44]. In [45], the authors developed an optimizer that uses CG with a diagonal preconditioner.
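As an illustration of the preconditioning idea, the following is a minimal sketch of the standard preconditioned CG for a symmetric positive definite linear system with a diagonal (Jacobi) preconditioner. It is not the optimizer of [45]; the function name `pcg_diag` and its parameters are assumptions made for this example.

```python
import numpy as np

def pcg_diag(A, b, tol=1e-10, max_iter=None):
    """Solve A x = b (A symmetric positive definite) by CG with the
    diagonal preconditioner M = diag(A)."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    n = len(b)
    max_iter = max_iter or 10 * n
    m_inv = 1.0 / np.diag(A)      # inverse of the diagonal preconditioner
    x = np.zeros(n)
    r = b - A @ x                 # residual
    z = m_inv * r                 # preconditioned residual
    p = z.copy()
    rz = r @ z
    for _ in range(max_iter):
        if np.linalg.norm(r) < tol:
            break
        Ap = A @ p
        step = rz / (p @ Ap)
        x = x + step * p
        r = r - step * Ap
        z = m_inv * r
        rz_new = r @ z
        p = z + (rz_new / rz) * p  # new conjugate direction
        rz = rz_new
    return x

A = np.array([[4.0, 1.0], [1.0, 3.0]])
b = np.array([1.0, 2.0])
x = pcg_diag(A, b)
```

The diagonal preconditioner only rescales the variables, so it costs one extra vector multiplication per iteration and helps most when the ill-conditioning comes from badly scaled coordinates.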

In [46], the authors combined the limited memory technique with a subspace minimization conjugate gradient method and presented a limited memory subspace minimization conjugate gradient algorithm that first determines the search direction and then applies a quasi-Newton method in the subspace to improve the orthogonality of the gradients.

The spectral CG method combines CG methods with spectral gradients. Li et al. [47] proposed a spectral three-term conjugate gradient method and proved the global convergence of this algorithm for uniformly convex functions. This work was further developed in [48].

The practical application of the conjugate gradient method is very wide and includes, for example, structured prediction problems and neural network learning [29], continuum mechanics [49], signal and image recovery problems [32,36], COVID-19 regression models [50], robot motion control problems [50], psychographic reconstruction [51], and molecular dynamics simulations [52].

For a more detailed review of conjugate gradient methods, see [40,53].

It seems relevant to create multi-step universal methods for solving non-smooth problems whose memory requirements make them applicable to high-dimensional minimization problems [54,55,56,57]. In this work, we propose a family of multi-step relaxation subgradient methods (RSMs) for solving large-scale problems. With a certain organization, the methods of the family, like the CGM, find the minimum of a quadratic function in a finite number of iterations.

The subgradient method is an algorithm that was originally developed by Shor [1] for minimizing a non-differentiable convex function. The main drawback of subgradient methods is their slow convergence, and several approaches can be used to speed them up.
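For orientation, a minimal sketch of the basic subgradient method with a normalized, diminishing step is given below. The step rule 1/k, the function name `subgradient_method`, and the test function are assumptions chosen for illustration; they are not taken from [1].

```python
import numpy as np

def subgradient_method(f, subgrad, x0, steps=2000):
    """Basic subgradient method with step 1/k along the normalized
    subgradient. The method is not monotone, so the best point is tracked."""
    x = np.asarray(x0, dtype=float)
    best_x, best_f = x.copy(), f(x)
    for k in range(1, steps + 1):
        g = subgrad(x)
        norm = np.linalg.norm(g)
        if norm == 0.0:            # zero subgradient: x is a minimizer
            break
        x = x - (1.0 / k) * g / norm
        fx = f(x)
        if fx < best_f:
            best_x, best_f = x.copy(), fx
    return best_x, best_f

# Example: f(x) = |x_1| + |x_2|, with sign(x) as a subgradient.
best_x, best_f = subgradient_method(lambda v: np.abs(v).sum(), np.sign, [3.0, -2.0])
```

The slowly decreasing step is what guarantees convergence here, and it is also the source of the slow convergence mentioned above.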

Incremental subgradient methods were studied in [58,59,60,61,62]. The main difference from the standard subgradient method is that, at each iteration, x is changed incrementally through a sequence of steps. In [60], a class of subgradient methods for minimizing a convex function that consists of the sum of many component functions was considered. In [63], the authors presented a family of subgradient methods that dynamically incorporate knowledge of the geometry of the data observed in earlier iterations to perform more informative gradient-based learning. An adaptive subgradient method for the split quasi-convex feasibility problems was developed in [64]. Proximal subgradient methods were presented in [65,66]. The authors in [65] proposed a model with a proximal conjugate subgradient (PCS-TT) method for solving the non-convex rank minimization problem by using properties of Moreau’s decomposition. A conjugate subgradient projection model as applied to continuous road network design problems was presented in [67]. The paper in [68] described a conjugate subgradient algorithm that minimizes a convex function containing a least squares fidelity term and an absolute value regularization term. This method can be applied to the inversion of ill-conditioned linear problems. A non-monotone conjugate subgradient type method without any line search was described in [69].

The principle of organization in a number of the RSMs [70] is that, in a particular RSM, there is an independent algorithm for finding the descent direction, which makes it possible to go beyond some neighborhood of the current minimum. In [70,71], the problem of finding the descent direction in RSM was formulated as the problem of solving systems of inequalities on separable sets. The use of a particular model of subgradient sets makes it possible to reduce the original problem to the problem of estimating the parameters of a linear function from information about subgradients obtained during the operation of the minimization algorithm, and mathematically formalize it as a problem of minimizing the quality functional. This makes it possible to use the ideas and methods of machine learning [72] to find the descent direction in RSM [70,71,73,74].

Thus, a specific new learning algorithm will be used as the basis of a new RSM. The properties of the minimization method are determined by the learning algorithm underlying it. The aim of this work is to develop a family of methods for solving systems of inequalities (MSSIs) and, on this basis, to create a family of multi-step RSMs (MRSMs) for solving large-scale smooth and non-smooth minimization problems. Known methods [73,74] are special cases of the MRSM family presented here.

It is proven that the algorithms of the MSSI family converge in a finite number of iterations on separable sets. On strictly convex functions, the convergence of the MRSM algorithms is theoretically substantiated. It is proven that MRSM algorithms on quadratic functions are equivalent to the CGM.

In the practical implementation of RSM, several problems arise in combining the use of information about the function, both for minimization and for the internal algorithm for finding the descent direction. If, in CGM, the goal of a one-dimensional search is high accuracy, then, in RSM, the goal is to keep the step of a one-dimensional search proportional to the distance to the extremum, which eliminates looping and enables the learning algorithm to find a way out of a wide neighborhood of the current minimum. In accordance with the noted principle, we use a one-dimensional minimization procedure in which the rate of step decrease is controlled.

The described algorithms are implemented. A numerical experiment was carried out to select efficient versions from a family of algorithms. For the selected versions, an extensive experiment was carried out to compare them on smooth functions with various versions of the CGM. It was found that, along with the CGM, the proposed algorithms can be used to minimize smooth functions. The proposed methods are studied numerically on large-scale tests for solving convex and non-convex non-smooth optimization problems.

The rest of this paper is organized as follows: In Section 2, we state the problem of our study. In Section 3, we describe the family of methods for solving systems of inequalities. In Section 4, we present the family of subgradient minimization methods. In Section 5, we establish the connection with the conjugate gradient method. In Section 6, we describe the implementation of the proposed minimization algorithm. We then perform a series of experiments with the implemented method, and in the last section, we provide a short conclusion of the work.

2. The Problem Formulation

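Let us solve a minimization problem for a convex function $f(x)$ in $\mathbb{R}^n$. In the RSM, the successive approximations are constructed according to the expressions [13]:

$$x_{k+1} = x_k - \gamma_k s_{k+1}, \quad \gamma_k = \arg\min_{\gamma} f(x_k - \gamma s_{k+1}), \tag{1}$$

where the descent direction $s_{k+1}$ is chosen as a solution for the system of inequalities [13]:

$$(s, g) > 0, \quad \forall g \in G. \tag{2}$$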

Here, $G$ is the ε-subgradient set at the point $x_i$. Denote by $S(G)$ the set of solutions to (2) and by $\partial f(x)$ the subgradient set at $x$. Iterative methods (learning algorithms) are used to solve systems of inequalities (2) in the RSM. Since elements of the ε-subgradient set are not explicitly specified, subgradients calculated on the descent trajectory of the minimization algorithm are used instead.

The solution vector $s^*$ of the system (2) forms an acute angle with each of the subgradients of the set $G$. If the subgradients of some neighborhood of the current minimum of (1) act as the set $G$, then iteration (1) for $s_{k+1} = s^*$ provides the possibility of going beyond this neighborhood with a simultaneous decrease in the function. This motivates the search for efficient methods for solving (2).

In [70,71,73,74], the authors proposed the following approach to reduce the system (2) to an equivalent system of equalities. Let the set $G$ belong to some hyperplane, and let its vector closest to the origin also be the vector of the hyperplane closest to the origin. In this case, the solution of the system $(s, g) = 1$, $\forall g \in G$, is also a solution for (2). It can be found as a solution to the system [70,71,73,74]:

$$(s, g_i) = 1, \quad i = 0, 1, \ldots, k, \quad g_i \in G. \tag{3}$$

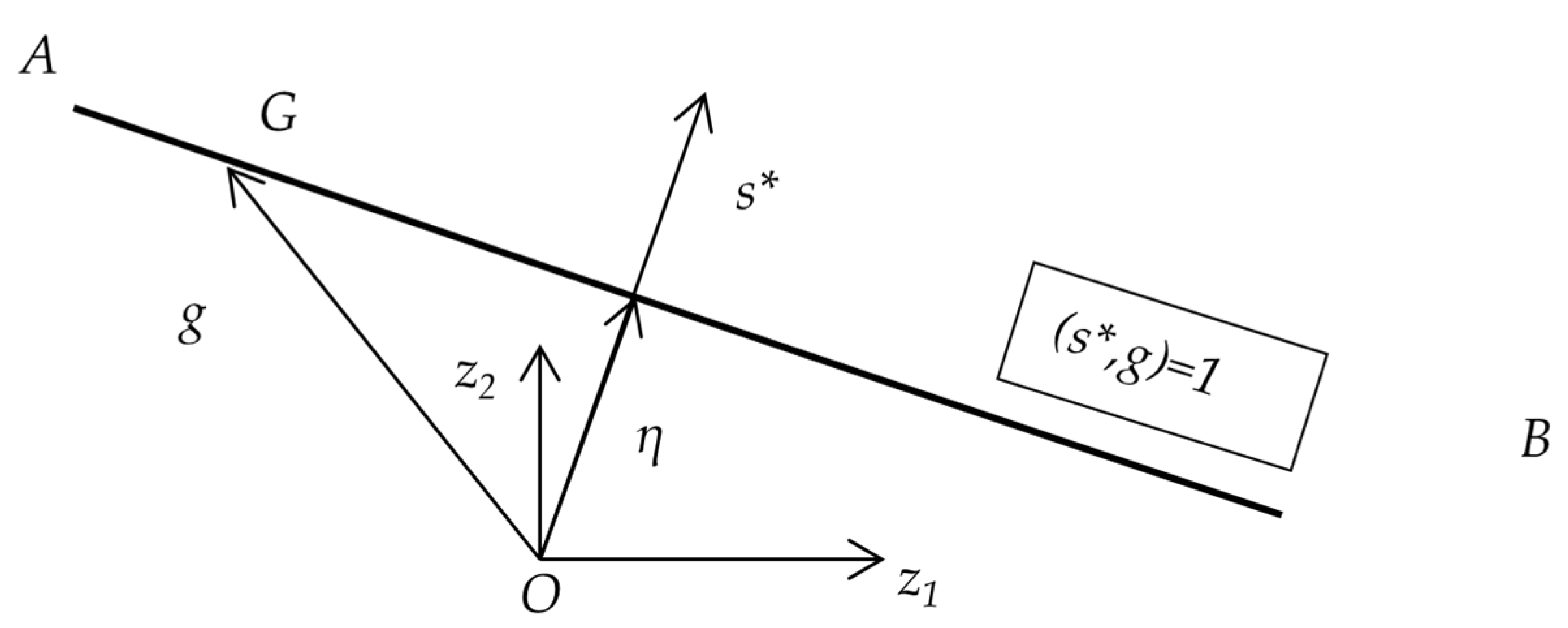

Figure 1 shows the projection of a subgradient set in the form of a segment $[A, B]$ lying on a straight line in the plane of vectors $z_1$ and $z_2$. The vector lies in this plane and is the normal of the hyperplane $(s^*, g) = 1$ formed by the vectors $g$ at .

The problem of solving the system (3) is one of the most common data analysis problems for which gradient minimization methods are used. The minimization function is formulated as:

To minimize it, various gradient-type methods are used. In a similar way, a solution is sought in the problems of constructing approximations by neural networks.

In [70], the Kaczmarz algorithm [75], a gradient-type minimization method, was proposed for solving system (3):

$$s_{k+1} = s_k + \frac{1 - (s_k, g_k)}{(g_k, g_k)}\, g_k. \tag{4}$$

The method (4) provides an approximation $s_{k+1}$ that satisfies the equation $(s_{k+1}, g_k) = 1$, i.e., the last-received training equation from (3).
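The Kaczmarz step can be sketched as a projection of the current approximation onto the hyperplane of the last equation. The code below assumes the standard form of the Kaczmarz update for an equation (s, g) = 1 and cycles over a small, fixed set of vectors; the names `kaczmarz_step` and `kaczmarz` and the data are illustrative only.

```python
import numpy as np

def kaczmarz_step(s, g):
    """Project s onto the hyperplane {s : (s, g) = 1}."""
    return s + (1.0 - s @ g) / (g @ g) * g

def kaczmarz(G, sweeps=200):
    """Cycle through the rows g_i of G, solving (s, g_i) = 1 one at a time."""
    s = np.zeros(G.shape[1])
    for _ in range(sweeps):
        for g in G:
            s = kaczmarz_step(s, g)
    return s

G = np.array([[1.0, 0.0], [-0.5, 1.0]])
s = kaczmarz(G)   # approaches the common solution of both equations
```

Each step satisfies only the most recent equation exactly, so earlier equations are generally violated again, and many sweeps may be needed when the vectors are far from orthogonal.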

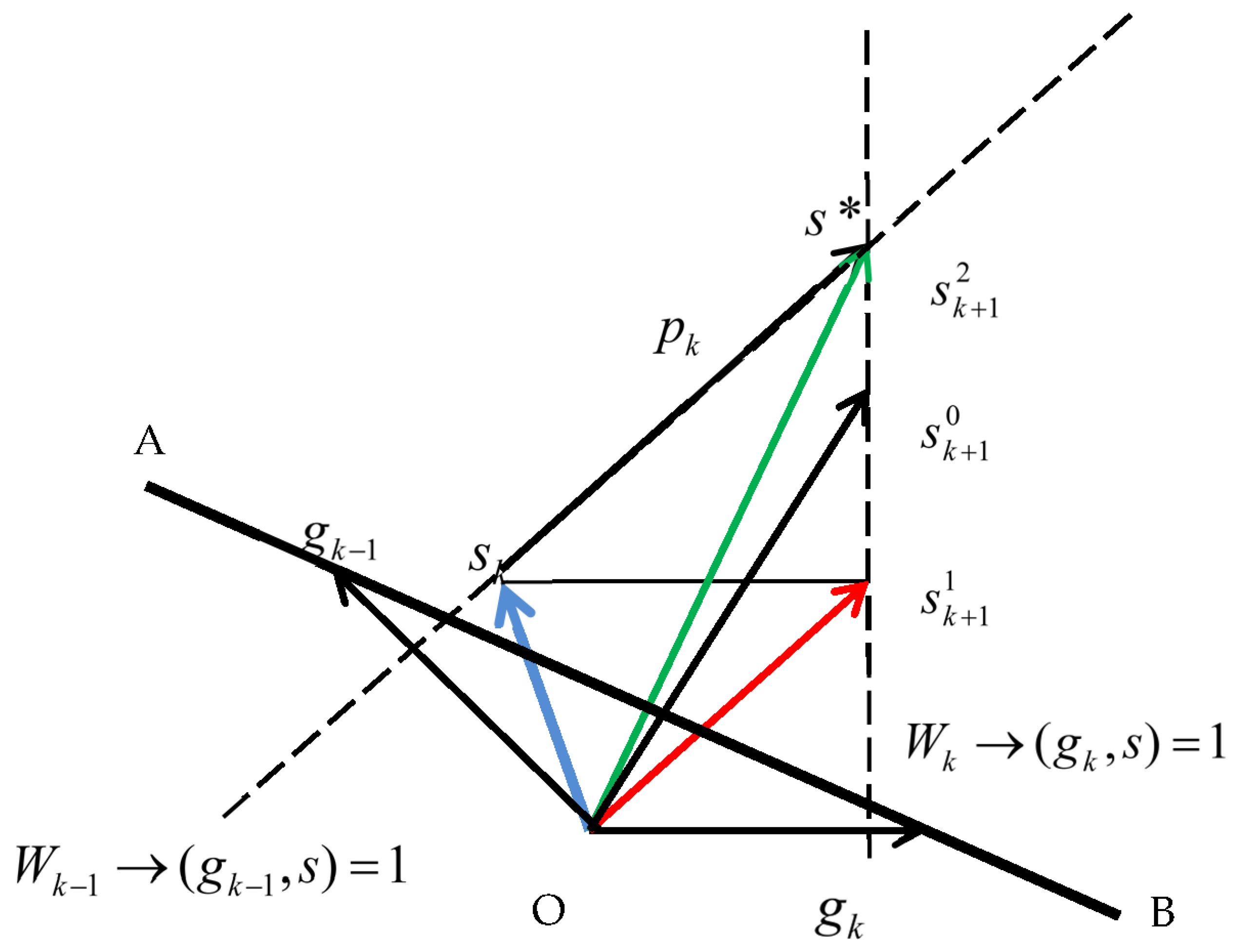

Figure 2 shows iterations (4) in the plane of vectors $g_k$, $s^*$, assuming that the set $G$ represented by the segment $[A, B]$ belongs to the hyperplane. The dashed line $W_k$ in Figure 2 is the projection of the hyperplane $(s, g_k) = 1$ for vectors $s$. In the case when the set $G$ belongs to the hyperplane, the hyperplane of vectors $s$ formed with some $g \in G$ contains the vector $s^*$.

In [71], to solve the system of inequalities (2), a descent direction correction scheme was used based on the exact solution of the last two equalities from (3) for the pair of indices $k-1$ and $k$, which can be realized by correction along the vector $p_k$ orthogonal to the vector $g_{k-1}$.

Here,

αk is the space dilation parameter. It is assumed here that before operations (5) and (6) are performed, the initial conditions

and

are satisfied, which is shown in

Figure 3.

Figure 3 shows iterations (6) and (5) in the plane of vectors $g_k$ and $g_{k-1}$. As a result of the operation, the vector $s^2_{k+1}$ will be found, which is the projection of the vector $s^*$ onto the plane of the vectors $g_k$ and $g_{k-1}$. The projections of the hyperplanes $(s, g_k) = 1$ and $(s, g_{k-1}) = 1$ are shown as dashed lines $W_k$ and $W_{k-1}$. The vector $s^1_{k+1}$ is the projection onto the plane of the result of iteration (4).

On separable sets, iterations (6) and (5) lead to an acceleration in the convergence of the method for solving systems of inequalities. In the minimization method, under conditions of a rapidly changing position of the current minimum, the subgradients used in (6) and (5) in many cases do not belong to separable sets, which leads to the need to update the process (6), (5) with the loss of accumulated information.

In this paper, we consider a linear combination of solutions $s^1_{k+1}$ and $s^2_{k+1}$ as a descent vector $s^0_{k+1}$. This enables us to form a family of methods for solving systems of inequalities. On this basis, a family of subgradient MRSMs is constructed. Practical implementations with a special choice of the solution $s^0_{k+1}$ turn out to be more efficient and are capable of covering wider neighborhoods of the current approximation using a rough one-dimensional search. The wider the neighborhood is, the greater the progress towards the extremum, the higher the stability of the method to roundoff errors and noise, and the better its ability to overcome small local extrema. In this regard, the minimization methods studied in this work are of particular importance because, unlike the method from [11] and its modification [13], their built-in algorithms for solving systems of inequalities enable us to use the subgradients of a fairly wide neighborhood of the current minimum approximation and do not require exact one-dimensional descent.

3. A Family of Methods for Solving Systems of Inequalities

In the family of algorithms presented below, successive approximations of the solution to the system of inequalities (2) are constructed by correcting the current approximation.

Let us denote the vector closest to the origin of the coordinates in the set G as: , , , , . Let us make an assumption concerning the set G.

Assumption 1. The set G is non-empty, convex, closed, bounded , satisfying the separability condition, i.e., .

Figure 4 shows the separable set and its elements.

Under the assumption made, since the vector

ηG is a vector of minimal length in

G, taking into account the convexity of the set, the inequalities

and

will hold. Under these conditions, the vectors

ηG, μG, and

s* are solutions to (2), and the vectors

satisfy the constraints:

The vector s* is one of the solutions to system (2). The following algorithm searches for an approximation of s* using linear combinations of iterations (4) and (6), (5).

Algorithm 1 with $\alpha_k = 0$ implements a scheme based on the Kaczmarz algorithm [73]; we denote it by A0. With $\alpha_k = 1$, it implements the algorithm for solving systems of inequalities from [74].

| Algorithm 1: A(αk). |

Input: initial approximation s0

Output: solution s*

1. Assume k = 0, gk−1 = 0.

2. Choose arbitrary so that

If such a vector does not exist, then s* = , stop the algorithm.

3. Estimate sk+1:

where the correction vector pk, taking into account the condition:

Which is given by:

if (10) does not hold, then

if (10) holds.

The value αk is limited by:

4. Assign k = k + 1. Go to step 2. |
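The overall structure of such a solver can be sketched as follows. This is an illustration under explicit assumptions, not a transcription of Algorithm 1: the correction vector is taken as g_k minus alpha times the projection of g_k onto g_{k-1}, the correction is applied only when (g_k, g_{k-1}) < 0 (an assumed stand-in for the condition on the correction vector), and the step length is chosen so that (s_{k+1}, g_k) = 1. The function name `solve_inequalities` and the data are hypothetical.

```python
import numpy as np

def solve_inequalities(G, alpha=1.0, max_iter=1000):
    """Search for s with (s, g) > 0 for every row g of G.

    alpha = 0 gives a Kaczmarz-type step along g_k; alpha = 1 makes the
    correction vector orthogonal to the previous vector g_{k-1}.
    """
    s = np.zeros(G.shape[1])
    g_prev = np.zeros(G.shape[1])
    for _ in range(max_iter):
        violated = [g for g in G if s @ g <= 0.0]
        if not violated:
            return s                      # all inequalities hold
        g = violated[0]                   # any violated vector may be used
        p = g
        dot = g @ g_prev
        if dot < 0.0:                     # ASSUMED form of the condition
            p = g - alpha * dot / (g_prev @ g_prev) * g_prev
        # Step length chosen so that the new s satisfies (s, g) = 1.
        s = s + (1.0 - s @ g) / (p @ g) * p
        g_prev = g
    raise RuntimeError("no solution found; the set may not be separable")

G = np.array([[1.0, 0.0], [-0.5, 1.0]])
s_orth = solve_inequalities(G, alpha=1.0)   # solves both equalities exactly
s_kacz = solve_inequalities(G, alpha=0.0)   # satisfies only the last one exactly
```

With alpha = 1, the second correction leaves the previously satisfied equality intact, which is the effect of solving the last two equalities exactly; with alpha = 0, the earlier equality is only kept as an inequality.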

Since the algorithm is designed to find a solution to system (2) in the form of a vector s*, we will study the behavior of the residual vector .

Lemma 1. Let the sequence {sk} be obtained as a result of the use of Algorithm 1. Then, for k = 0, 1, 2,…, we have the following estimates:

Proof of Lemma 1. Let us prove (14). Consider the cases of transformation (9) combined with (11) and (12). According to (9) and (11)

Thus, equality (14) always holds. In the case of transformation (12) with α

k = 1, the vectors

pk and

gk−1 are orthogonal:

Therefore, the equality is preserved. This case corresponds to the exact solution of the last two equalities in (3).

Let us prove (15). Inequalities (15) will hold in the case (11). In the case (12), we carry out transformations proving (15):

Hence, from (13) and (12) follows:

Let us prove (16). For $k = 0$, (16) is satisfied due to $g_{-1} = 0$. For $k > 0$, (16) follows from (7) and (14):

Let us prove (17). The first of the inequalities in (17) holds as an equality for (11), and in case (12), taking into account the sign under condition (10) and inequality (16), we obtain:

The second inequality in (17) follows from constraints (7). The last inequality in (17) follows from condition (8). □

The following theorem states that transformation (12) provides a direction pk to the solution point s* with a more acute angle compared to gk.

Theorem 1. Let the sequence {sk} be obtained as a result of the use of Algorithm 1. Then, for k = 0, 1, 2,…, we have the estimate:

Proof of Theorem 1. Successively using (17) and (15), we obtain (18):

□

Lemma 2. Let the set G satisfy Assumption 1. Then, if

Proof of Lemma 2. Using (19) and the scalar product property, we obtain an estimate in the form of a strict inequality for vectors from G:

Hence, taking into account the constraint (7), we obtain the proof. □

The following theorem substantiates the finite convergence of Algorithm 1.

Theorem 2. Let the set G satisfy Assumption 1. Then, to estimate the convergence rate of the sequence {sk}, k = 0, 1, 2,…, to the point s* generated by Algorithm 1 up to the moment of stopping, the following observations are true:

for ρG−1 we have the estimate:

and for some value k, satisfying the inequality:

we will obtain the vector .

Proof of Theorem 2. Using (9), we obtain an equality for the squared norm of the residual :

We transform the right side of the resulting expression, considering inequalities (17), replacing

with

:

In the resulting expression, we replace the factor

, according to (15), by a larger value

. As a result, we obtain:

Here, the last two inequalities are obtained considering (8) and the definition of

RG. With the indexing taken into account, we prove (20). Using recursively (20) and the inequality:

which follows from the properties of the norm, we obtain estimate (21). Estimate (22) is a consequence of (21).

According to (21) . Therefore, at some step k, inequality (19) will be satisfied for the vector sk, i.e., a vector will be obtained that is a solution to system (2). As an upper bound for the required number of steps, we can take k*, equal to the value k at which the right side of (21) vanishes, increased by 1. This provides an estimate for the required number of iterations k*. □

In the minimization algorithm, $s_0 = 0$ is set. In this case, (22) will take the form:

Inequalities (23) will hold as long as it is possible to find a vector , satisfying the condition (8). In the minimization algorithm, under the condition of exact one-dimensional descent, there will always be gk satisfying condition (8). Therefore, estimates (23) will be used in the rules for updating the algorithm for solving systems of inequalities in the minimization method under constraints on the parameters of subgradient sets.

4. A Family of Subgradient Minimization Methods

The idea of organizing a minimization algorithm is to construct a descent direction that provides a solution to a system of inequalities of type (2) for subgradients in the neighborhood of the current minimum. Such a solution will allow, by means of one-dimensional minimization (1), to go beyond this neighborhood, that is, to find a point with a smaller value of the function outside the neighborhood of the current minimum.

Let the function be convex. Denote as the length of the vector of the minimum length of the subgradient set at the point x, .

Note 1. For a function convex on Rn, if the set D(x0) is bounded, then for points satisfying the condition , the following estimate holds [13]:

where D is the diameter of the set D(x0), d0 is a given value, .
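An estimate of this kind rests on a standard convexity argument, sketched here under the assumptions that $D(x_0)$ is the level set $\{x : f(x) \le f(x_0)\}$, that $x$ and a minimum point $x^*$ both belong to it, and that $g$ is the minimum-length subgradient at $x$ with $\|g\| = d(x) \le d_0$. The exact form and constants of the estimate in [13] may differ.

```latex
f(x) - f(x^*) \;\le\; (g,\, x - x^*) \;\le\; \|g\|\,\|x - x^*\| \;\le\; D\, d_0,
\qquad g \in \partial f(x), \quad \|g\| = d(x) \le d_0 .
```

The first inequality is the subgradient inequality for a convex function, the second is the Cauchy–Schwarz inequality, and the third uses the fact that the distance between two points of a set does not exceed its diameter.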

The minimization algorithm must build a sequence of approximations of which the limit points x* satisfy the condition d(x*) < d0 for a given value of d0. This will provide, according to (24), the specified accuracy of minimization with respect to the function. For these purposes, the parameters are set in the algorithm in such a way as to ensure the search for points x* that satisfy the condition d(x*) < d0. The connection between d0 and the parameters of the algorithm will be established in more detail in Theorem 3.

When solving a minimization problem with a built-in algorithm for solving systems of inequalities in an exact one-dimensional search along a direction, according to the necessary condition for the minimum of a one-dimensional function, there is always a subgradient that satisfies condition (8). Therefore, criteria for updating the method for solving systems of inequalities are necessary, sufficient, but not excessive, for convergence to limit points x* satisfying the condition . For these purposes, relations (23) will be used, signaling the solution of a system of inequalities with given characteristics sufficient to exit the neighborhood of the current minimum.

Let us describe the minimization method with a built-in Algorithm 1 for finding points such that , where .

In Algorithm 2, steps 2, 4, and 5 contain a built-in algorithm for solving inequalities. Algorithm 2 with $\alpha_k = 0$ was obtained in [73] and uses the method for solving the inequalities with the Kaczmarz formula (4) (we denote it as M0). Algorithm 2 with $\alpha_k = 1$ was obtained in [74].

| Algorithm 2: MA(αk). |

Input: initial approximation point x0

Output: minimum point x*

1. Set the initial approximation , integer k = j = 0.

2. Assign , , , .

3. Set

4. Calculate the subgradient , which satisfies . If , then x* = xk, stop the algorithm.

5. Obtain a new approximation where

The value of αk is bounded similarly to (13).

6. Calculate a new approximation of the criterion .

7. Calculate a new approximation of the minimum point

8. Set k = k + 1.

9. If , then go to step 2.

10. If , then go to step 2; otherwise, go to step 4. |

The index qj, j = 0, 1, 2,… was introduced to denote the numbers of iterations k, at which, in step 2, when the criteria of steps 9 and 10 are met, the algorithm for solving inequalities is updated (sk = 0, gk−1 = 0). According to (21) and (22), the algorithm for solving the system of inequalities with s0 = 0 has the best convergence rate estimates. Therefore, when updating in step 2 of Algorithm 2, we set sk = 0. The need for updating arises due to the fact that as a result of the shifts in step 7, the subgradient sets in the neighborhood of the current point of the minimum are changed, which leads to the need to solve the system of inequalities based on new information.

By virtue of exact one-dimensional descent along the direction $(-s_{k+1})$ in step 7, at a new point $x_{k+1}$, a vector $g_{k+1} \in \partial f(x_{k+1})$ such that $(g_{k+1}, s_{k+1}) \le 0$ always exists according to the necessary condition for a minimum of a one-dimensional function (see [13]). Therefore, regardless of the number of iterations $k$, the condition $(g_k, s_k) \le 0$ of step 4 will always be satisfied.

The proof of the convergence of Algorithm 2 is based on the following lemma.

Lemma 3 ([13]). Let the function f(x) be strictly convex on Rn, the set D(x0) be bounded, and the sequence be such that . Then, .

Under the conditions of an exact one-dimensional search, the conditions of Lemma 3 will be satisfied in iterations of Algorithm 2.

Denote by the ε-neighborhood of the set G, by the δ-neighborhood of the point x, i.e., the points xk and the values of the , corresponding to the indices k at the time of updating in step 2 of Algorithm 2.

Theorem 3. Let the function f(x) be strictly convex on Rn, the set D(x0) be bounded, and the parameters εj and mj specified in step 2 of Algorithm 2 be fixed:

Then, if x* is a limit point of the sequence generated by Algorithm 2,

where .

In particular, if , then .

Proof of Theorem 3. Let conditions (25) be satisfied. The existence of limit points of the sequence {

zk} follows from the fact that the set

D(

x0) is bounded and

. Assume that the statement of the theorem is false: suppose that the subsequence

, but

Denote

. Choose

, so that

Such a choice is possible due to the upper semicontinuity of the point-set mapping

(see [

13]).

Choose a number

K, such that for

js > K, the following will hold:

i.e., such a number

K that the points

xk remain in the neighborhood

for at least

M0 steps of the algorithm. Such a choice is possible due to the assumption of convergence

and the result of Lemma 3, the conditions of which are satisfied under the conditions of Theorem 3 and an exact one-dimensional descent in step 7 of Algorithm 2.

According to assumption (27), the choice conditions of ε in (28), δ in (29), and

K, which ensures (30), for

js > K, the inequality will hold:

For

js >

K, due to the validity of relations (30), it follows from (29):

. Algorithm 2 includes Algorithm 1. Therefore, taking into account the estimates from (23), depending on the steps of Algorithm 2 (step 9 or step 10), the update occurs at some

k, and one of the inequalities will be satisfied:

The last transition in the inequalities follows from the definition of d0 in (26). However, (31) contradicts both (32) and (33). The resulting contradiction proves the theorem.

According to estimate (26), for any limit point of the sequence {zj} generated by Algorithm 2, will be satisfied, and therefore, estimate (24) will be valid. □

The following theorem defines the conditions under which Algorithm 2 generates a sequence {xk} converging to a minimum point.

Theorem 4. Let the function f(x) be strictly convex, the set D(x0) be bounded, and

Then, any accumulation point of the sequence generated by Algorithm 2 is a minimum point of the function f(x) on Rn.

Proof of Theorem 4. Assume that the statement of the theorem is false: suppose that the subsequence

, but in this case, there exists

d0 > 0, such that inequality (27) is satisfied. As before, we set

ε according to (28). We choose

δ > 0, such that (29) will be satisfied. By virtue of conditions (34), there is

K0, such that when

j > K0, the relation will hold:

Denote E0 = d0 and denote by M0 the minimum value mj with j > K0. This renaming allows us to use the proofs of Theorem 3. Let us choose an index K > K0, such that (30) holds for js > K, i.e., a number K such that the points xk remain in neighborhood Uδ(x*) for at least M0 steps of the algorithm. According to assumption (27), conditions for choosing ε in (28), δ in (29), and k in (30) for js > K inequality (31) will hold. For js > K, due to (30), from (29) follows . Algorithm 2 contains Algorithm 1. Therefore, taking into account the estimates from (23), depending on the step number of Algorithm 2 (step 9 or step 10) in which the update occurs at some k, one of the inequalities (32) and (33) will be satisfied, where the last transition in inequalities follows from the definition of E0 and M0. However, (31) contradicts both (32) and (33). The resulting contradiction, taking into account (35) and (34), proves that the limit point can only be the minimum point. □

5. Correlation with the Conjugate Gradient Method

Let us show that the presented Algorithm 2 has the properties of the conjugate gradient method and that the successive approximations of the minimum of both methods are the same on quadratic functions. Denote by $\nabla f(x)$ the gradient of a function, which, in the case of a differentiable convex function, coincides with the subgradient and is the only element of the subgradient set [13]. Denote by $m$ the number of iterations ($m \le n$) at which the minimum point is not reached. Iterations of Algorithm 2 for $k = 1, 2, \ldots, m$ can be written as follows:

The value of αk is limited .

Let us establish a connection between Algorithm 2 and the CGM, the iteration of which has the form:

Theorem 5. Let the function f(x), , be quadratic, and let its matrix of second derivatives be strictly positive definite. Then, provided that the initial points in the algorithms (36)–(38) and (39), (40) are equal , they generate identical sequences of approximations of the minimum, and their characteristics satisfy the relations:

In this case, the minimum will be found after no more than n steps.

Proof of Theorem 5. We will use induction. As a result of iterations (36)–(38), for k = 1, due to g0 = 0 and s1 = 0, we have and . As a result of iterations (39) and (40), for k = 1, we have . Consequently, equalities (41(a)) and (41(b)) are satisfied for k = 1. Due to the exact one-dimensional descent and the collinearity of the descent directions, equality (41(c)) will hold for k = 1.

Assume that equalities (41) are satisfied for k = 1, 2, …, l, where l > 1. Let us show that they are satisfied for k = l + 1. According to (41(a)), the gradients of the CGM algorithms (39), (40), and (36)–(38) coincide due to the identity of the points (41(c)) at which they are calculated, and the gradients used in the CGM and, hence, in (36)–(38), are mutually orthogonal [3]. Thus, in (38), for k = l + 1, as a result of the orthogonalization of vectors gl+1 and gl, we obtain

. This proves (41(a)) for k = l + 1.

According to the condition of exact one-dimensional descent, the equality

follows. Therefore, the transformation (37), taking into account (41(a)) for k = l + 1, (41(b)) for k = l, and (40), takes the form:

This implies (41(b)). Due to the exact one-dimensional descent and the collinearity of the descent directions, equality (41(c)) will hold for k = l + 1.

The equivalence of the sequences generated by the CGM and by (36)–(38), established above, together with the fact that the CGM minimization process terminates after no more than n steps [3], completes the proof of the theorem. □
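The finite-termination property used in the proof can be illustrated numerically. The following is a minimal sketch, not the authors' code: it runs the Fletcher–Reeves variant of the CGM with exact one-dimensional descent on a quadratic f(x) = ½xᵀAx − bᵀx. The function name, the test matrix, and the sign convention x ← x + γs are assumptions made for the illustration.

```python
import numpy as np

def fletcher_reeves_quadratic(A, b, x0, tol=1e-10):
    """Minimize f(x) = 0.5*x^T A x - b^T x by Fletcher-Reeves CG with exact line search."""
    x = np.asarray(x0, dtype=float).copy()
    g = A @ x - b                     # gradient of the quadratic
    s = -g                            # first direction: steepest descent
    steps = 0
    for _ in range(len(b)):           # at most n steps are needed in exact arithmetic
        if np.linalg.norm(g) <= tol:
            break
        As = A @ s
        gamma = -(g @ s) / (s @ As)   # exact minimizer of f along s
        x = x + gamma * s
        g_new = A @ x - b
        beta = (g_new @ g_new) / (g @ g)   # Fletcher-Reeves coefficient
        s = -g_new + beta * s
        g = g_new
        steps += 1
    return x, steps

# Illustrative ill-conditioned example (eigenvalues from 1 to 100); an assumption
n = 5
A = np.diag(np.linspace(1.0, 100.0, n))
b = np.ones(n)
x_min, steps = fletcher_reeves_quadratic(A, b, np.zeros(n))
```

On this example the loop stops after at most n iterations with A·x_min ≈ b, which is the behavior Theorem 5 transfers to iterations (36)–(38) on quadratic functions.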

6. Implementation of the Minimization Algorithm

Algorithm 2 is implemented according to the RSM implementation technique [70, 71, 73, 74]. Consider a version of Algorithm 2 that includes a one-dimensional minimization procedure along the direction s. This procedure: (a) constructs the current approximation of the minimum xm; (b) constructs a point y from a neighborhood of xm such that for

, the inequality

holds. The subgradient g1 is used to solve the system of inequalities. A call of the procedure is denoted as follows:

The input parameters are the point of the current approximation of the minimum x, descent direction s, , , and the initial step h0. It is assumed that the necessary condition for the possibility of descent in direction s is satisfied. The output parameters include γm, which is a step to the point of the obtained approximation of the minimum , , , γ1, which is a step along s, such that at the point for , the inequality holds and h1, which is an initial descent step calculated in the procedure for the next iteration. In the algorithm presented below, vectors are used to solve a set of inequalities, and points are used as points of approximations of a minimum.

Algorithm of one-dimensional descent (OM). Let it be required to find an approximation of the minimum of the one-dimensional function

, where x is some point, and s is the descent direction. Take an ascending sequence

and

for

. Denote

,

,

l as the minimum number

i at which the relation

is satisfied for the first time,

. Let us set the parameters of the segment

of localization of the one-dimensional minimum:

,

,

,

,

,

Let us find the point of minimum γ* of the one-dimensional cubic approximation of the function on the segment of localization. Calculate:

Calculate the initial descent step for the next iteration:

In (42), a rough search for the minimum on the interval is carried out, and when choosing γ0 or γ1 instead of γm, the calculation of the function and the gradient is not required. We use parameters qγ = 0.2 and qγ1 = 0.1 and coefficients qM > 1 and qm < 1.
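The general shape of such a procedure, localization on an ascending step sequence followed by cubic interpolation, can be sketched as follows. This is only an illustration under stated assumptions: the bracketing test (the slope along the ray becoming non-negative), the geometric step sequence h0, h0·qM, h0·qM², …, and the names `bracket_and_cubic`, `phi`, and `dphi` are hypothetical, and the safeguarding rule (42) and the step update (43) are not reproduced here.

```python
import math

def bracket_and_cubic(phi, dphi, h0, q_M=2.0, max_expand=60):
    """Localize a 1-D minimum on an ascending step grid, then refine by cubic interpolation.

    phi, dphi: one-dimensional function and its derivative along the ray
    (hypothetical callables). Returns (a, b, gamma_star).
    """
    if dphi(0.0) >= 0.0:
        raise ValueError("descent along this direction is not possible")
    a, b = 0.0, h0
    for _ in range(max_expand):
        if dphi(b) >= 0.0:            # assumed localization test: slope changes sign
            break
        a, b = b, b * q_M             # next element of the ascending sequence
    else:
        raise RuntimeError("localization segment not found")
    fa, fb, ga, gb = phi(a), phi(b), dphi(a), dphi(b)
    # Minimizer of the cubic Hermite interpolant built from values and slopes at a and b
    d1 = ga + gb - 3.0 * (fa - fb) / (a - b)
    d2 = math.sqrt(max(d1 * d1 - ga * gb, 0.0))
    gamma_star = b - (b - a) * (gb + d2 - d1) / (gb - ga + 2.0 * d2)
    return a, b, min(max(gamma_star, a), b)   # keep the result inside [a, b]

# Illustrative one-dimensional function with its minimum at gamma = 3
a, b, gamma_star = bracket_and_cubic(lambda t: (t - 3.0) ** 2,
                                     lambda t: 2.0 * (t - 3.0), h0=1.0)
```

For the quadratic in the usage line the localization segment is [2, 4], and the cubic interpolant recovers the minimizer γ* = 3.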

Minimization algorithm. In the implementation of Algorithm 2 proposed below, the method for solving inequalities is not updated, and the exact one-dimensional descent is replaced by an approximate one.

Let us explain the steps of the algorithm. The OM procedure returns two subgradients and . The first of them is used to solve the inequalities in step 2, and the second one is used in step 3 to correct the direction of descent using Equation (4) in order to provide the necessary condition for the possibility of descent in the direction (). Iteration (4) in (45) for is a correction (4) by the Kaczmarz algorithm. This transformation is carried out in order to direct the descent according to the subgradient of the current approximation of the minimum.
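For reference, a single step of the classical Kaczmarz algorithm for the equality (s, g) = 1 can be sketched as below. Equation (4) is not reproduced in this section, so the exact form used by the authors is an assumption here; the function name `kaczmarz_correction` and the sample vectors are hypothetical.

```python
import numpy as np

def kaczmarz_correction(s, g):
    """Project s onto the hyperplane {s : (s, g) = 1} (one classical Kaczmarz step)."""
    s = np.asarray(s, dtype=float)
    g = np.asarray(g, dtype=float)
    return s + g * (1.0 - s @ g) / (g @ g)

s = np.array([0.5, -1.0, 2.0])   # current direction, with (s, g) = -1.5 < 0
g = np.array([1.0, 2.0, 0.0])    # subgradient at the current approximation
s_new = kaczmarz_correction(s, g)
```

After the step, (s_new, g) = 1 > 0, so the corrected direction forms an acute angle with the subgradient at the current point, which is the condition the correction is meant to restore.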

Unlike the idealized case, Algorithm 3 does not perform updates. Although the convergence of the idealized versions of RSM is justified under the condition of exact one-dimensional descent, these algorithms are implemented with one-dimensional minimization procedures in which the initial step can increase or decrease depending on progress, as determined by the given coefficients qM > 1 and qm < 1. These coefficients should be chosen so that the decrease of the step length (43) in the one-dimensional minimization procedure corresponds to the rate of reduction in the distance to the minimum point. The minimum iteration step cannot be less than some fraction of the initial step; this fraction is set in (42) by the parameters qγ = 0.2 and qγ1 = 0.1. We used these values in our calculations.

| Algorithm 3: MOM(αk). |

Input: initial approximation x0, initial step of one-dimensional descent h0, maximum allowed number of iterations N, argument minimization precision εx, gradient minimization precision εg

Output: minimum point x*

1. Set the initial approximation , the initial step of one-dimensional descent h0. Set , , , , . Set the stop parameters: maximum allowed number of iterations N, argument minimization precision εx, gradient minimization precision εg.

2. Obtain an approximation

where

3. Obtain the descent direction

4. Perform a one-dimensional descent along the normalized direction :

5. Calculate the minimum point approximation .

6. If k > N or or , then x* = xk+1, stop the algorithm; otherwise, k = k + 1, and go to step 2. |

Consider ways to set the parameter αk. In a numerical implementation with αk = 1, the number of iterations may be either smaller or larger than with αk = 0. Unplanned stops often occur in (44) due to the proximity to zero of the

values. Denote by εp a value from the segment [0, 1]. In step 2 of the algorithm, we will use the following method for setting the parameter αk:

We also used a second choice of the parameter αk:

In the next section, we will select an appropriate parameter εp from the set , with which the main computational experiment will be carried out.

7. Numerical Experiment

In Algorithm 3, the coefficients of decrease qm < 1 and increase qM > 1 of the initial step of the one-dimensional descent at each iteration play a key role. Values of qm close to 1 provide a low rate of step decrease and, accordingly, a low rate of method convergence. A small rate of step decrease eliminates the looping of the method because the subgradients of the function involved in solving the inequalities are taken from a wider neighborhood. The choice of the parameter qm must be commensurate with the possible rate of convergence of the minimization method. The higher the speed capabilities of the algorithm, the smaller this parameter can be chosen. For example, in RSM with space dilation [71, 73], qm = 0.8 is chosen. For smooth functions, the choice of this parameter is not critical and can be taken from the interval [0.8, 0.98]. The convergence rate practically does not depend on the step increase parameter, so it can be taken as

.

The computational experiment is preceded by the choice of a parameter εp for the proposed Algorithm 3, which is used in Formulas (46) and (47). After that, we will conduct the main testing of the method with the selected parameter εp and its comparison with the known methods of conjugate gradients according to the following scheme:

Testing on smooth and non-smooth test functions with known characteristics of level surface elongation.

Testing on non-convex smooth and non-smooth test functions.

Testing on known smooth test functions.

We used the following methods:

AMMI: the distance-to-extremum relaxation method of minimization [10];

sub: Algorithm 3 with (46);

subm: Algorithm 3 with more precise one-dimensional descent and (46);

subg: Algorithm 3 with (47);

subgm: Algorithm 3 with (47) and exact one-dimensional descent;

sub0: Algorithm 3 with αk = 0;

sgrFR: the conjugate gradient method (Fletcher–Reeves method [3]) with exact one-dimensional descent;

sgr: method sgrFR with the one-dimensional minimization procedure OM;

sgrPOL: the Polak–Ribiere–Polyak method [17];

sgrHS: the Hestenes–Stiefel method [15];

sgrDY: the Dai–Yuan method [18].

We used the following test groups. Each group has its own stopping criterion.

The first group of tests includes smooth and non-smooth functions with a maximum ratio of level surfaces elongation along the coordinate axes equal to 100:

The stopping criterion is

The second group of tests includes the Extended White and Holst function, which is not convex:

The non-smooth non-convex function derived from it:

The Raydan1 function is biased to obtain a new function with a zero minimum value:

We transform this function into a non-smooth one as follows:

Here, the coefficients ai are bounded and not equal to . Criterion (48) is used as a stopping criterion for these functions.
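For orientation, the smooth versions of these two test functions can be sketched as follows. The definitions are the ones commonly given for the Extended White and Holst and Raydan 1 functions in unconstrained optimization test collections and are an assumption here, since the formulas are not reproduced in this section; the function names are hypothetical, and the non-smooth variants are not attempted.

```python
import numpy as np

def white_holst(x, c=100.0):
    """Extended White and Holst function (commonly cited form; n must be even)."""
    x = np.asarray(x, dtype=float)
    odd, even = x[0::2], x[1::2]          # x_{2i-1} and x_{2i} in 1-based indexing
    return float(np.sum(c * (even - odd ** 3) ** 2 + (1.0 - odd) ** 2))

def raydan1_shifted(x):
    """Raydan 1 function shifted so that its minimum value is zero."""
    x = np.asarray(x, dtype=float)
    w = np.arange(1, x.size + 1) / 10.0   # weights i/10
    # The unshifted minimum is attained at x = 0 and equals sum(w)
    return float(np.sum(w * (np.exp(x) - x)) - np.sum(w))

wh_at_min = white_holst(np.ones(4))       # minimizer x = (1, ..., 1)
rd_at_min = raydan1_shifted(np.zeros(5))  # minimizer x = (0, ..., 0)
```

Both values at the respective minimizers are zero, which matches the zero minimum value required of the shifted Raydan 1 function.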

The third group of tests is composed of functions from [76]. We chose the functions that were difficult to minimize by gradient methods, which was revealed by the study in [53]. The stopping criteria:

Several experiments were carried out for each function. The number of iterations and the number of function and gradient calculations were counted.

Denote:

S1 is a sum of resulting scores for dimensions 100, 200,…, and 1000;

S2 is a sum of resulting scores for dimensions 100, 500, 1000, 2000, 3000, 5000, 7000, 8000, 10,000, and 15,000.

The results for the dimensions Ti = 100,000 × i are given separately for changing i. We will use these notations for arbitrary functions.

For the functions from [76], the following notation is used: the Diagonal 9 function: (Diagonal9); the LIARWHD function (CUTE): (LIARWHD); the Quadratic QF2 function: (QF2); the DIXON3DQ function (CUTE): (DIXON3DQ); the TRIDIA function (CUTE): (TRIDIA); the Extended White and Holst function: (WHolst); and the Raydan 1 function: (Raydan1).

For each method, we report it, the number of iterations, and nfg, the number of function and gradient evaluations needed to solve the problem with a given stopping criterion for a specific function.

As a preliminary step, based on an experiment on some of the above functions, we study the dependence of Algorithm 3 (sub, subg, and sub0), using Formulas (46) or (47), on the parameter εp chosen from the set

. The results for the costs (nfg, the number of function and gradient evaluations) are given in Table 1.

When αk = 0, the methods spend significantly more computations of the function and gradient. When αk = 1, the method is not operational due to unplanned stops. Therefore, these variants are not considered during testing. According to the results of Table 1, starting from εp = 10⁻³, the results stabilize and are almost always the best. In further studies, we used εp = 10⁻⁸, which reflects some geometric mean of the effective interval for both methods (46) and (47). Given the equivalence of the sub and subg methods, we carried out subsequent studies with only one of them for a given objective function.

The results for the first group of tests are presented in Table 2. Each cell shows the number of iterations (upper number) and the number of function and gradient evaluations (lower number).

For example, part of the calculations on function f1 was carried out for the sgr method, which is the sgrFR method with the one-dimensional OM procedure. The results here are two times worse than for sgrFR, and for other functions, it was sometimes not possible to solve the problem. This result is presented in order to emphasize the effectiveness of choosing the descent direction in the new method, where, in contrast to the CGM, it is possible to obtain a rapidly converging method for inexact one-dimensional descent, which is important when solving non-smooth minimization problems. To solve smooth problems, there are many efficient variants of the CGM.

Here, we should note the quality of the descent direction of the new method. With inexact one-dimensional descent, the subg cost is less than that of the sgrFR method. The method proposed in the paper is stable with both minimization procedures, and its results are almost equivalent to the results of the sgrFR method, which is a finite method for minimizing quadratic functions. In this case, the sgrFR method acts as a reference method. Since the results for other CGMs on this function are completely identical, we do not present them here.

On a non-smooth function, the AMMI method [10] acts as a reference, in which only one calculation of the function and gradient is required at each iteration. As follows from the results of Table 2, the number of iterations on large-dimensional functions differs insignificantly. The ratio of the number of function and gradient evaluations on the non-smooth function to that on the smooth quadratic function at n = 500,000, with equal proportions of the level line elongation, is 119,063/1343 = 88.65 for the subg method and 41,528/753 = 55.15 for the AMMI method. Considering that the conditions here are ideal for the AMMI method, since the minimum value of the function and its degree of homogeneity are known, and that the calculations were carried out for functions of high dimensionality, such a result for the subg method can be considered excellent.

The minimization results for the second group of tests are given in Table 3.

On smooth variants of the functions, the subgm and subg methods are commensurate with sgrFR in terms of the number of function and gradient evaluations. Therefore, taking into account these and the previous tests, these methods can be used alongside the CGM for minimizing smooth functions.

The subg method also handles non-smooth variants of functions (function fNW is non-smooth and non-convex).

The minimization results for the third group of smooth test functions are given in Table 4. A dash means that no calculations were made. The sign NaN marks the problems that could not be solved by this method.

Based on the results for this group of tests, we can conclude that subm and sub methods are applicable for minimizing smooth large-scale functions.

In general, the following conclusions can be drawn from the results of the experiment:

The parameters of the method that ensure its stable operation were selected.

On tests with known parameters of level surface elongation, the behavior of the method was studied and compared with other methods, which confirmed its effectiveness.

The method was studied on non-smooth, including non-convex, functions.

On commonly accepted tests of smooth functions, the method was compared with variants of the CGM, which enables us to conclude that it is applicable along with the CGM for minimizing smooth functions.

8. Conclusions

In our work, we proposed a family of iterative methods for solving systems of inequalities, which are generalizations of the previously proposed algorithms. The developed methods were substantiated theoretically and the estimates of their convergence rate were obtained. On this basis, a family of relaxation subgradient minimization algorithms was formulated and justified, which is applicable to solving non-convex problems as well.

According to the properties of convergence on quadratic functions of high dimension, with large spreads of eigenvalues, the developed algorithm is equivalent to the conjugate gradient method. The new method enables us to solve non-smooth non-convex large-scale minimization problems with a high degree of elongation of level surfaces.