1. Introduction

Sentiment analysis, a text classification task, involves identifying and interpreting the emotional sentiment conveyed in a text, be it a sentence, a document, or a social media message. It is invaluable for businesses seeking insight into how customers perceive their brands, products, and services, as expressed in online interactions. Platforms such as Twitter receive a substantial volume of user-generated Arabic content every day, and this volume is expected to keep growing in the years ahead. Arabic is estimated to account for about five percent of the language content on the Internet, and in recent years it has become one of the most influential languages online. A Semitic language spoken by more than 500 million people, Arabic is the official and administrative language of over 21 countries, stretching from the Arabian Gulf to the Atlantic Ocean. Compared with English, Arabic has a rich and intricate linguistic structure, and it is distinguished by numerous regional varieties. The substantial differences between Modern Standard Arabic (MSA) and Arabic dialects (ADs) add to the complexity of the language. Arabic also exhibits diglossia: speakers use their local vernacular in casual settings and MSA in formal contexts. Residents of Syria, for example, switch between MSA and their local Syrian dialects depending on the situation. The Syrian dialect, in turn, reflects the nation's history, cultural identity, heritage, and shared life experiences.
Arabic dialects are regionally diverse. They include the Levantine dialects of Palestine, Jordan, Syria, and Lebanon; the Maghrebi dialects of Morocco, Algeria, Libya, and Tunisia; the Iraqi dialect; the Nile Basin dialects of Egypt and Sudan; and the Arabian Gulf dialects of the UAE, Saudi Arabia, Qatar, Kuwait, Yemen, Bahrain, and Oman. Analyzing the sentiment expressed in these varieties is distinctively challenging because of their complex morphology, inconsistent spelling, and overall linguistic intricacy. Each Arabic-speaking nation takes pride in its own vernacular, which adds further nuance. Arabic text on social platforms is written in both MSA and dialectal Arabic, so the same word can be interpreted differently. Within ADs, word order is a notable syntactic concern: analyzing it requires identifying the verb, subject, and object of a sentence. As noted in the literature, languages can be categorized by their canonical sentence structure, such as subject–object–verb (Korean), subject–verb–object (English), and verb–subject–object (Arabic), while others, including ADs, allow flexible word order [1]. In AD expressions, this flexible word order carries crucial information about subjects, objects, and other elements. Consequently, a single-task learning approach that relies solely on handcrafted features is inadequate for sentiment analysis in Arabic dialects [2].

These variations in ADs also pose a formidable challenge for conventional deep learning algorithms and word-embedding-based models. As AD phrases grow longer, they accumulate more information about objects, verbs, and subjects, along with intricate and potentially confounding contextual cues. A drawback of traditional deep learning methods is that information from the input sequence is lost as it is processed, so the performance of a sentiment analysis (SA) model degrades as the input sequence grows longer. Furthermore, depending on the context, the root and characters of an Arabic word can take many different forms. A major problem of ADs is the lack of standardized orthographies, compounded by morphological differences between the dialects that are visible in prefixes and suffixes not found in MSA.

Moreover, many Arabic words share the same written form and differ in meaning only through their diacritics. Training deep learning SA models also requires a large training corpus, which is particularly difficult to obtain for ADs. Because ADs lack structured linguistic elements and resources, they pose a significant hurdle for information extraction [3], and classification accuracy drops as the available training data shrinks. In addition, most tools designed for MSA do not account for the distinctive features of Arabic dialects [4]. Relying on lexical resources such as lexicons is also less effective for Arabic SA: words vary so widely across dialects that a lexicon is unlikely to cover them all [5]. Finally, building tools and resources tailored specifically to Arabic dialects is painstaking and time-consuming [6].

In recent years, research on sentiment analysis of Arabic vernaculars has grown notably. These efforts focus primarily on classifying reviews and tweets into binary and ternary polarities. Most of these methods [7,8,9,10,11,12] feed lexicons, handcrafted attributes, and tweet-specific features to machine learning (ML) algorithms. Other approaches are rule-based, exemplified by lexicalization, which formulates and prioritizes a set of heuristic rules to classify tweets as negative or positive [13]. Other work uses an Arabic sentiment ontology that encodes sentiments of varying intensity to identify user sentiment and classify tweets. Advanced deep learning techniques for sentiment classification [14,15,16,17,18], including recursive auto-encoders, have attracted considerable attention because of their adaptability and robustness in automatic feature extraction. Notably, the Transformer model [19] has outperformed other deep learning models [20] across a range of natural language processing (NLP) tasks, attracting wide interest from deep learning researchers. By using a self-attention (SE-A) mechanism to model the interactions among the words of a sentence, the Transformer has greatly improved performance on many NLP tasks. However, strategies for tackling the challenges of Arabic dialect sentiment analysis (AD SA) are still being explored. To the best of our knowledge, no prior study has built an AD text classification model based on SE-A within an Inductive Transfer (INT) framework; previous techniques for this problem were based on single-task learning (STL). By solving related tasks concurrently through a shared representation of text sequences, INT improves the model's comprehension, the quality of the encoder, and text classification performance relative to a traditional single-task classifier [21]. The primary advantage of INT is that it can exploit resources developed for similar tasks. Moreover, most AD text classification studies address binary and ternary classification; this study therefore focuses on the five-polarity AD SA problem. In summary, our contributions are as follows:

In this article, we present a Transformer model that incorporates Inductive Transfer (INT) for Arabic dialect text classification. We designed a dedicated INT model that uses the self-attention mechanism to exploit the relationships between the three-polarity and five-polarity tasks. To this end, we employ a Transformer encoder, which combines self-attention and feed-forward layers (FFLs), as a foundational layer shared across the tasks. We describe how the two tasks, ternary and five-class classification, are trained simultaneously and interchangeably within the INT framework. This strategy aims to enrich the representation of AD text for each task and to broaden the range of features captured.

We also investigated how the number of encoder layers and the number of attention heads (AHs) in the SE-A sub-layer of the encoder affect the performance of the proposed model. Training covered a range of word-embedding dimensions and used a vocabulary shared by both tasks.

The remainder of this paper is organized as follows. Section 2 presents the literature review. Section 3 discusses the proposed model in detail. Section 4 presents the results of the experiments. Finally, Section 5 presents the conclusions of this study.

2. Related Work

Five-level classification in Arabic text classification has received less attention than binary and ternary polarity classification, and most approaches to it rely on traditional machine learning algorithms. For instance, corpus- and lexicon-based methods were evaluated on the Arabic Book Review dataset using Bag of Words (BoW) features with a range of machine learning algorithms, including passive-aggressive (PA), Support Vector Machine (SVM), Logistic Regression (LR), Naïve Bayes (NB), perceptron, and stochastic gradient descent (SGD) classifiers [22]. Using the same dataset, the authors of [23] explored the effects of stemming and of balancing the BoW features with various machine learning algorithms, and found that stemming decreased performance. The authors of [24] proposed a divide-and-conquer approach to ordinal-scale classification: their model was a hierarchical classifier (HC) that divided the five labels into sub-problems, and it outperformed a single classifier. Building on this work, several hierarchical classifier configurations were proposed [25] and compared with machine learning classifiers such as SVM, KNN, NB, and DT. The experimental results indicated that hierarchical classification can improve performance, although many of the configurations performed worse. Another study [26] examined various machine learning classifiers, including LR, SVM, and PA, using n-gram features on the Book Reviews in Arabic Dataset (BRAD); SVM and LR achieved the best results. Similarly, [27] evaluated a range of sentiment classifiers, including AdaBoost, SVM, PA, random forest, and LR, on the Hotel Arabic-Reviews Dataset (HARD), and found that SVM and LR performed best with n-gram features. These methods do not apply deep learning to five-level polarity classification in Arabic SA. Moreover, most of them rely on traditional machine learning algorithms that depend on feature engineering, a time-consuming and laborious process. They are also based on single-task learning (STL), so they cannot capture the relationships between tasks (cross-task transfer) or model several polarity granularities, such as five and three polarities, simultaneously.
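The divide-and-conquer idea behind the hierarchical classifiers of [24] and [25] can be sketched as a two-stage prediction. The split of the five labels used in those works is not stated here, so the grouping below (negative 1-2, neutral 3, positive 4-5) is an assumption, and the keyword rules and function names are hypothetical stand-ins for trained classifiers such as SVM or KNN.

```python
from typing import Callable, Dict

# Hypothetical stage classifiers (stand-ins for trained SVM/KNN/NB models).
GroupClassifier = Callable[[str], str]   # text -> "neg" | "neu" | "pos"
RatingClassifier = Callable[[str], int]  # text -> exact rating inside one group


def hierarchical_predict(text: str,
                         root: GroupClassifier,
                         children: Dict[str, RatingClassifier]) -> int:
    """Two-level divide-and-conquer prediction of a 1-5 rating.

    Assumed split (illustrative, not necessarily the one used in [24]):
    the root picks a coarse group, neg = {1, 2}, neu = {3}, pos = {4, 5},
    and a group-specific child classifier resolves the exact rating.
    """
    group = root(text)
    if group == "neu":
        return 3  # single-rating group: no second stage needed
    return children[group](text)


# Toy keyword rules standing in for trained classifiers (hypothetical).
def toy_root(text: str) -> str:
    if "good" in text:
        return "pos"
    if "bad" in text:
        return "neg"
    return "neu"


toy_children = {
    "pos": lambda t: 5 if "very" in t else 4,
    "neg": lambda t: 1 if "very" in t else 2,
}

print(hierarchical_predict("very good book", toy_root, toy_children))  # 5
```

Each sub-problem is smaller than the full five-way decision, which is the motivation the hierarchical approaches give for splitting the ordinal scale.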

Other studies have employed Inductive Transfer (INT) for five-point sentiment analysis (SA) classification. For example, [28] proposed an INT model based on a recurrent neural network (RNN) that handled five-point and ternary classification tasks simultaneously; it comprised Bidirectional Long Short-Term Memory (Bi-LSTM) and multilayer perceptron (MLP) layers. Similarly, [29] exploited the relationship between five-polarity and binary sentiment classification by learning the two tasks jointly, using an LSTM encoder and a variational auto-encoder decoder as layers shared by both tasks; the results showed that the INT model improved performance on the five-polarity task. Adversarial multi-task learning (AINT) was introduced in [21]. That model used two LSTM layers as task-specific components and one LSTM layer shared across tasks. A Convolutional Neural Network (CNN) was also combined with the LSTM, and the outputs of both networks were merged with the output of the shared layer to form the final latent representation of the sentence. The authors found that their INT model improved five-polarity classification and the quality of the encoder. Although these INT strategies have been applied to English, INT and deep learning techniques have rarely been applied to five-polarity Arabic SA, and existing studies of this task rely mainly on single-task learning with machine learning algorithms. The effectiveness of current five-polarity Arabic SA approaches therefore remains relatively modest, leaving ample room for improvement.

Other studies have applied deep learning to SA in a diverse range of domains, including finance [30,31], movie reviews [32,33,34], weather-related Twitter posts [35], feedback from travel advisors [36], and cloud-service recommendation systems [37]. The authors of [35] automatically extracted text attributes from diverse data sources and converted weather-related knowledge and user information into word embeddings with the word2vec tool, an approach also adopted in [30,38]. Numerous papers have applied polarity-based SA with deep learning to Twitter posts [39,40,41,42], reporting that deep learning improved the precision of their sentiment analyses. While most of these deep learning models were developed for English text, a few handle tweets in other languages, such as Persian [38], Thai [41], and Spanish [43]. These studies analyzed tweets with various models for polarity-based SA, including Deep Neural Networks (DNNs), Convolutional Neural Networks (CNNs), hybrid models [42], and Support Vector Machines (SVMs) [44].

Various methods have been proposed to detect fake information, most of which focus solely on linguistic attributes and ignore the potential of dual emotional features. Luvembe et al. [45] present an efficient strategy for detecting false information by exploiting dual emotional features: a stacked Bi-GRU layer extracts emotional traits and represents them in a feature vector space, and a deep attention mechanism on top of the Bi-GRU helps the model capture vital dual-emotion details. Lei et al. [46] present MsEmoTTS, a multi-scale framework for emotional speech synthesis that models emotion at several levels. It follows a standard attention-based sequence-to-sequence model and integrates three components: a global-level emotion-representation module (GM), an utterance-level emotion-representation module (UM), and a local-level emotion-representation module (LM). MsEmoTTS outperforms existing reference-audio-based and text-based methods for emotional speech synthesis in both emotion transfer and emotion prediction. Li et al. [47] introduce Attributed Influence Maximization based on Crowd Emotion (AIME), which examines how multi-dimensional characteristics influence information dissemination by incorporating user emotions and group attributes. AIME outperforms heuristic approaches and yields results comparable to greedy methods at a much lower time cost, which makes it a promising tool for understanding the dynamics of information dissemination. Many studies have used Twitter datasets as a primary source for building and refining sentiment analysis models. For example, Vyas et al. [48] introduced a hybrid framework that combines a lexicon-based approach with deep learning to analyze and categorize the sentiments of tweets about COVID-19, with the aim of automatically identifying the emotions they convey. The authors used the VADER lexicon to identify positive, negative, and neutral sentiments and labeled COVID-19-related tweets accordingly, and then applied a range of machine learning (ML) and deep learning (DL) techniques for classification. Qureshi et al. [49] introduced a model for assessing the polarity of reviews written in Roman Urdu, using nine machine learning algorithms: Naïve Bayes, SVM, Logistic Regression, K-Nearest Neighbors, ANN, CNN, RNN, ID3, and Gradient Boost Tree. Logistic Regression performed best, with testing and cross-validation accuracies of 92.25% and 91.47%, respectively. Finally, Alali [2] introduced the Multitask learning Hierarchical Attention Network, a multitask approach designed to improve sentence representation and generalization, and reported strong experimental results.

Using the five-point BRAD, HARD, and LABR datasets of ADs, we compared the proposed T-TC-INT model with current state-of-the-art techniques. On BRAD, the baseline is the Logistic Regression (LR) model of [26], which uses unigram and bigram features weighted with TF-IDF. On HARD, the baseline is the LR model of [27], which uses the same features. On LABR, the T-TC-INT model was compared with the following established methods: first, a Support Vector Machine (SVM) classifier with n-gram features, as proposed in [23]; second, Multinomial Naïve Bayes (MNB) with bag-of-words features, as described in [22]; third, Hierarchical Classifiers (HCs), a model built on the divide-and-conquer technique introduced in [24]; and finally, Enhanced Hierarchical Classifiers (HC(KNN)), a refined version of the hierarchical classifier model that is still based on the divide-and-conquer strategy, as described in [25]. More recently, NLP tasks have reached remarkable performance with Bidirectional Encoder Representations from Transformers (BERT) [50]. AraBERT [51], a pre-trained Arabic BERT model, was trained on three corpora: OSIAN [52], Arabic Wikipedia, and the MSA corpus, which contains about 1.5 billion words. We also compared the proposed T-TC-INT model for ADs with AraBERT [51], which has 768 hidden dimensions, 12 attention heads, and 12 encoder layers.
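The LR baselines of [26] and [27] rely on unigram and bigram features weighted with TF-IDF. The exact TF and IDF variants those works used are not stated here, so this sketch assumes whitespace tokenization, relative term frequency, and a smoothed IDF, log((1 + N) / (1 + df)) + 1; the function names are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List


def ngrams(tokens: List[str], n_max: int = 2) -> List[str]:
    """All n-grams for n = 1..n_max, each joined with a single space."""
    grams = []
    for n in range(1, n_max + 1):
        for i in range(len(tokens) - n + 1):
            grams.append(" ".join(tokens[i:i + n]))
    return grams


def tfidf(docs: List[List[str]], n_max: int = 2) -> List[Dict[str, float]]:
    """Relative term frequency times smoothed IDF, log((1 + N) / (1 + df)) + 1."""
    counts = [Counter(ngrams(doc, n_max)) for doc in docs]
    df = Counter()
    for c in counts:
        df.update(c.keys())                    # document frequency of each n-gram
    n_docs = len(docs)
    vectors = []
    for c in counts:
        total = sum(c.values())
        vectors.append({g: (cnt / total) * (math.log((1 + n_docs) / (1 + df[g])) + 1)
                        for g, cnt in c.items()})
    return vectors


# Two whitespace-tokenized reviews: "the book is great" / "the book is boring".
docs = [["الكتاب", "رائع"], ["الكتاب", "ممل"]]
vecs = tfidf(docs)
print(round(vecs[0]["الكتاب"], 3), round(vecs[0]["رائع"], 3))  # 0.333 0.468
```

The word shared by both reviews receives a lower weight than the distinctive sentiment word, which is what makes these sparse features usable by a linear classifier such as LR. In practice, scikit-learn's `TfidfVectorizer` with `ngram_range=(1, 2)` covers this step, with its own default normalization.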

3. The Proposed Transformer-Based Text Classification Model for Arabic Dialects That Utilizes Inductive Transfer

We designed a text classification model employing self-attention (SE-A) and Inductive Transfer (INT) for classifying text in ADs. The goal of utilizing INT (Inductive Transfer) is to improve the performance of Arabic sentiment analysis, which involves classifying ADs into five-point scales, by leveraging the relationship between the AD sentiment analysis tasks (in both five and ternary polarities). Our approach, the Transformer text classification T-TC-INT model that utilizes Inductive Transfer (INT), is based on the Transformer model recently introduced by Vaswani et al. [

19]. Inductive Transfer (INT) proves more advantageous than single-task learning (STL), as it employs a shared representation of various loss functions while simultaneously addressing sentiment analysis tasks (with three and five polarities) to enhance the representation of semantic and syntactic features in ADs text. Knowledge gained from one task can benefit others in learning more effectively. Additionally, a significant advantage of INT is its ability to tap into resources developed for similar tasks, thereby enhancing the learning process and increasing the amount of useful information available. Sharing layers across associated tasks enhances the model’s ability to generalize, learn quickly, and comprehend what it has learned. Furthermore, by leveraging domain expertise observed in the training signals of interconnected tasks as an inductive bias, the INT method facilitates prompt transfers that improve generalization. This Inductive Transfer aids in increasing the accuracy of generalization, the speed of learning, and the clarity of the learned models. A learner engaged in multiple interconnected tasks simultaneously can use these tasks as an inductive bias for one another, resulting in a better grasp of the domain’s regularities. This approach allows for a more effective understanding of sentiment analysis tasks for ADs, even with a small amount of training data. Furthermore, Inductive Transfer can concurrently capture the significant interrelationships between the tasks being learned. As illustrated in

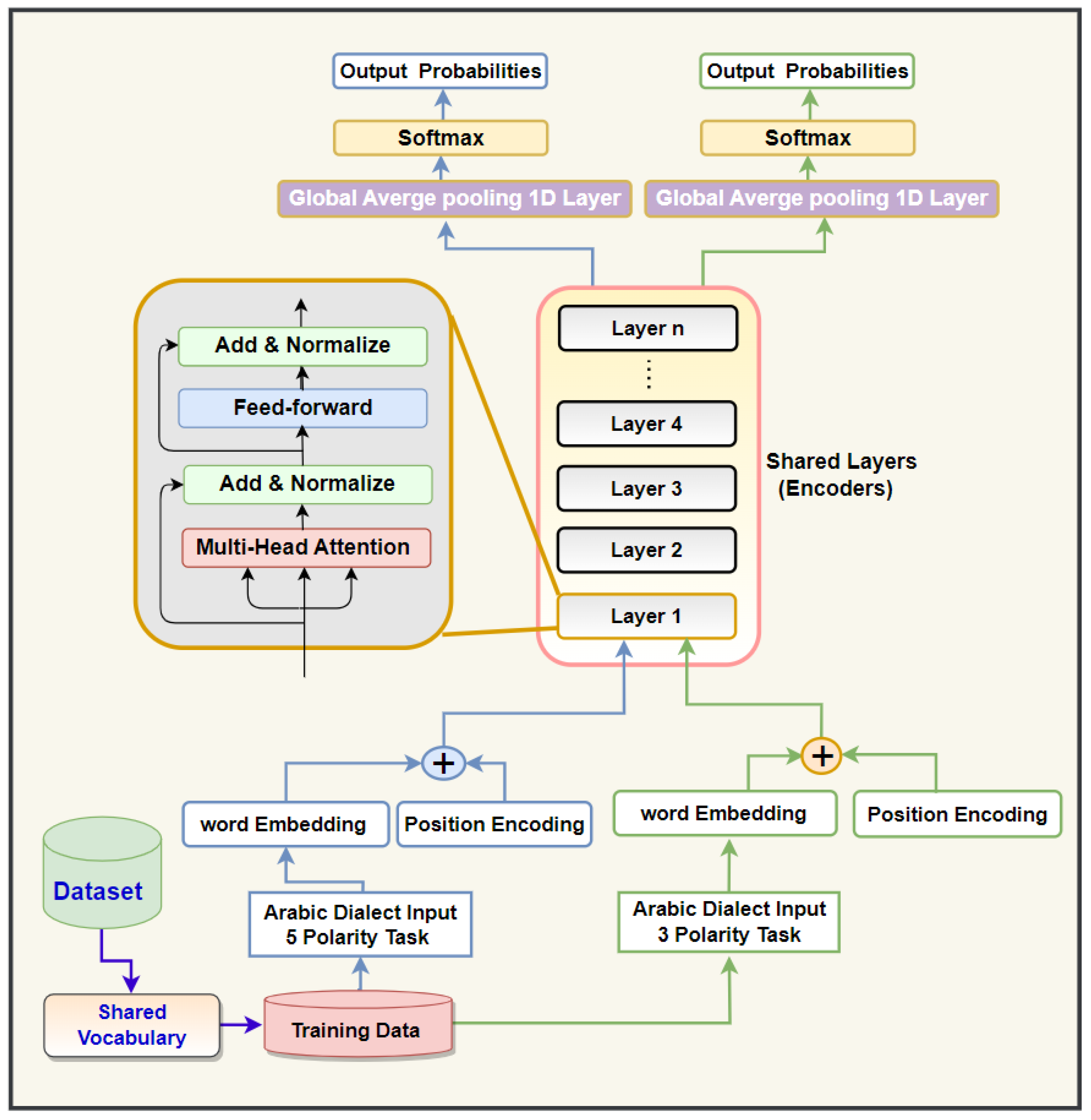

Figure 1, our proposed T-TC-INT approach features a unique architecture based on the SE-A, INT, shared vocabulary, and 1D_GlobalAveragepooling layer. The T-TC-INT model we propose is designed to handle diverse classification tasks, encompassing both ternary and five-polarity classification tasks, and it learns them simultaneously. By incorporating a shared Transformer block (encoding layer), we enable the transfer of knowledge from the ternary task to the five-point task as part of the learning process. This leads to an enhancement in the learning capabilities of the current task (the five-point task). The encoder within our proposed T-TC-INT model, as depicted in

Figure 1, consists of a range of distinct layers. Each layer is further divided into two sub-layers: self-attention (SE-A) and position-wise feed-forward (FNN).
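The shared-encoder idea can be sketched as a forward-pass loss. The text does not specify how the ternary and five-class losses are combined or where the ternary labels come from, so this sketch assumes: the shared encoder outputs are pooled with 1D global average pooling; each task has its own dense softmax head; the joint objective is a weighted sum with a hypothetical weight `lam`; and ternary labels are derived from five-point ratings (1-2 negative, 3 neutral, 4-5 positive). All function names are hypothetical, and no training step is shown.

```python
import math
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def global_average_pool(states: Sequence[Vector]) -> Vector:
    """1D global average pooling: mean of the encoder outputs over the time axis."""
    n = len(states)
    return [sum(col) / n for col in zip(*states)]


def dense(x: Vector, w: Matrix, b: Vector) -> Vector:
    """Task-specific dense layer: y_j = sum_i x_i * w[i][j] + b_j."""
    return [sum(xi * w[i][j] for i, xi in enumerate(x)) + b[j] for j in range(len(b))]


def cross_entropy(logits: Vector, label: int) -> float:
    """Softmax cross-entropy for one example, computed via log-sum-exp."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_z - logits[label]


def five_to_three(rating: int) -> int:
    """Hypothetical mapping: ratings 1-2 -> 0 (neg), 3 -> 1 (neutral), 4-5 -> 2 (pos)."""
    if rating <= 2:
        return 0
    return 1 if rating == 3 else 2


def joint_loss(states: Sequence[Vector], w5: Matrix, b5: Vector,
               w3: Matrix, b3: Vector, rating: int, lam: float = 1.0) -> float:
    """Five-class loss plus lam * ternary loss, both computed from one shared pooled vector."""
    shared = global_average_pool(states)              # shared by both task heads
    loss5 = cross_entropy(dense(shared, w5, b5), rating - 1)
    loss3 = cross_entropy(dense(shared, w3, b3), five_to_three(rating))
    return loss5 + lam * loss3


states = [[1.0, 2.0], [3.0, 4.0]]                      # 2 tokens, d_model = 2
w5, b5 = [[0.0] * 5 for _ in range(2)], [0.0] * 5      # zero weights: uniform softmax
w3, b3 = [[0.0] * 3 for _ in range(2)], [0.0] * 3
print(joint_loss(states, w5, b5, w3, b3, rating=4))    # log(5) + log(3), about 2.708
```

Because both heads read the same pooled vector, gradients from the ternary loss also update the shared encoder, which is how knowledge would flow to the five-point task. If the tasks are instead trained interchangeably (alternating batches), each step would use only one of the two loss terms.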

In the proposed structure, the encoder uses the SE-A and FNN sub-layers to classify AD sentences of varying lengths without resorting to RNN or CNN algorithms. These sub-layers encode the input AD phrases into an intermediate representation: the encoder maps the input sentence $X = (x_1, \ldots, x_n)$ into a sequence of vector representations $Z = (z_1, \ldots, z_n)$, and from $Z$ the T-TC-INT model predicts the sentiment of the AD sentence.

Word embeddings are word representations used in NLP to encode the semantic associations among words. They are numerical vectors that place words in a continuous, compact space in which words with similar meanings or contexts lie close together, and they have become a cornerstone of many NLP applications, including machine translation and sentiment analysis. Traditional representations such as one-hot encoding, by contrast, represent words as sparse binary vectors: each word receives a distinct index and is treated as a standalone entity. This representation cannot capture the semantic connections between words, which makes it hard for machine learning models to grasp word meanings in context. Word embeddings capture these connections by following the distributional hypothesis, which holds that words occurring in similar contexts tend to have similar meanings. By mapping words to dense vectors in a continuous space, embeddings can capture subtleties of meaning, synonymy, analogy, and more, giving machine learning models a deeper understanding of word semantics and their interrelations. An embedding layer assigns each token a single vector; in the Transformer encoder, the SE-A sub-layers then contextualize these vectors, so that a token's representation changes with its surrounding words.

The embedding layer of the encoder converts each input (source) token into a vector of dimension $d_{model}$. Because the SE-A sub-layer relates tokens to one another without regard to their order, the position of each token is injected through positional encoding (PE). The PE is a matrix that encodes the positions of the tokens in the input sentence, and the T-TC-INT system adds it to the embedding matrix of the input tokens. Each element of the PE is computed with sine and cosine functions of different frequencies:

$$PE_{(pos,\,2i)} = \sin\left(\frac{pos}{10000^{2i/d_{model}}}\right), \qquad PE_{(pos,\,2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{model}}}\right)$$

where $pos$ is the position of the input token, $i$ is the dimension index, and $d_{model}$ is the embedding dimension of the input tokens. The sum of the embedding matrix and the PE is fed to the first encoder layer. The encoder consists of $L$ identical layers, where $L$ is set to 2, 4, 8, or 12. Each encoder layer has two sub-layers, an SE-A sub-layer and an FNN sub-layer, and a residual connection followed by layer normalization (LayerNorm) is applied around each sub-layer to ease training and improve performance. The output $h^{l}$ of layer $l$ is computed as follows:

$$a^{l} = \mathrm{LayerNorm}\left(h^{l-1} + \mathrm{MHA}\left(h^{l-1}, h^{l-1}, h^{l-1}\right)\right), \qquad h^{l} = \mathrm{LayerNorm}\left(a^{l} + \mathrm{FNN}\left(a^{l}\right)\right)$$

where $a^{l}$ is the output of the SE-A sub-layer, computed from the sentence representation $h^{l-1}$ produced by the previous encoder layer.
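The sinusoidal positional encoding and the residual-plus-LayerNorm wrapping described in this section can be sketched in plain Python. This is a minimal illustration, not the authors' code: the function names are hypothetical, `eps = 1e-6` is an assumed value, and the learned LayerNorm gain and bias are omitted.

```python
import math
from typing import List

Matrix = List[List[float]]


def positional_encoding(seq_len: int, d_model: int) -> Matrix:
    """Sinusoidal PE: PE[pos][2i] = sin(pos / 10000^(2i/d_model)), PE[pos][2i+1] = cos(same)."""
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        for k in range(0, d_model, 2):                 # k plays the role of 2i
            angle = pos / (10000 ** (k / d_model))
            pe[pos][k] = math.sin(angle)
            if k + 1 < d_model:                        # guard for odd d_model
                pe[pos][k + 1] = math.cos(angle)
    return pe


def add_positions(embeddings: Matrix) -> Matrix:
    """Add the PE matrix element-wise to a (seq_len x d_model) embedding matrix."""
    pe = positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(row, pe_row)] for row, pe_row in zip(embeddings, pe)]


def layer_norm(x: List[float], eps: float = 1e-6) -> List[float]:
    """Normalize one vector to zero mean and unit variance (learned gain/bias omitted)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def add_and_norm(x: List[float], sublayer_out: List[float]) -> List[float]:
    """Residual connection followed by LayerNorm, i.e. LayerNorm(x + Sublayer(x))."""
    return layer_norm([a + b for a, b in zip(x, sublayer_out)])


print(positional_encoding(seq_len=3, d_model=4)[0])   # [0.0, 1.0, 0.0, 1.0]
```

At position 0 every sine is 0 and every cosine is 1; later positions rotate through frequencies that shrink with the dimension index, giving each position a distinct pattern.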

Self-Attention (SE-A)

The self-attention mechanism (SE-A) is a key component of sequence-to-sequence (seq2seq) architectures and is used to solve a range of sequence-generation problems, such as neural machine translation (NMT) and document summarization [53].

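Figure 2 shows how the T-TC-INT model for ADs computes the scaled dot-product attention function. This function takes three inputs: queries $Q$, keys $K$, and values $V$. It maps a query and a set of key–value pairs to an output computed as a weighted sum of the values, where each weight reflects how strongly the query relates to the corresponding key. The attention function is computed as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$

where $K \in \mathbb{R}^{m \times d_k}$ is the key matrix, $V \in \mathbb{R}^{m \times d_v}$ is the value matrix, and $Q \in \mathbb{R}^{n \times d_q}$ is the query matrix. $n$ and $m$ are the lengths of the sequences represented by $Q$ and $K$, respectively, and $d_v$ and $d_k$ are the dimensions of the value and key vectors, respectively. The query dimension is set to $d_q = d_k$ so that the dot product can be computed. Following Vaswani et al. [19], we divide $QK^{\top}$ by $\sqrt{d_k}$ to scale the output of the product operation. Applying the $\mathrm{softmax}$ operation to the attention scores $QK^{\top}/\sqrt{d_k}$ yields the attention weight distribution.

For better performance, the Transformer architecture uses multi-head SE-A, which consists of $h$ (the number of attention heads) scaled dot-product attention operations. Given $Q$, $K$, and $V$, the SE-A computation is as follows:

$$\mathrm{MHA}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\,W^{O}, \qquad \mathrm{head}_i = \mathrm{Attention}\left(QW_i^{Q}, KW_i^{K}, VW_i^{V}\right)$$

where $QW_i^{Q}$, $KW_i^{K}$, and $VW_i^{V}$ are the projections of the query, key, and value vectors, respectively, for the $i$-th head. These projections use the parameter matrices $W_i^{Q} \in \mathbb{R}^{d_{model} \times d_k}$, $W_i^{K} \in \mathbb{R}^{d_{model} \times d_k}$, and $W_i^{V} \in \mathbb{R}^{d_{model} \times d_v}$. The inputs to $\mathrm{MHA}(\cdot)$ are $Q$, $K$, and $V$, each with $d_{model}$ columns; in the encoder's SE-A sub-layer, all three are the output of the previous layer. $\mathrm{head}_i$ is the output of the scaled dot-product attention for the $i$-th head. The outputs of the $h$ scaled dot-product operations are combined with the concatenation function $\mathrm{Concat}(\cdot)$ to produce $\mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)$. Finally, the output $\mathrm{MHA}(Q, K, V)$ is obtained by projecting this concatenation with the weight matrix $W^{O} \in \mathbb{R}^{h d_v \times d_{model}}$. With $d_k = d_v = d_{model}/h$, the SE-A has the same number of projection parameters as single-head attention with full dimensionality.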
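The scaled dot-product and multi-head attention computations described in this section can be sketched in pure Python, written to mirror the math rather than for speed (a real model would use a tensor library). The function names are hypothetical, and the projection matrices below are hand-picked for illustration; in the model they are learned.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an (r x s) and an (s x t) matrix."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)                                    # subtract max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))                # n x m attention scores
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)                       # n x d_v weighted sum of values


def multi_head_attention(q: Matrix, k: Matrix, v: Matrix, w_q: List[Matrix],
                         w_k: List[Matrix], w_v: List[Matrix], w_o: Matrix) -> Matrix:
    """Concat(head_1, ..., head_h) W^O with head_i = Attention(Q W_i^Q, K W_i^K, V W_i^V)."""
    heads = [attention(matmul(q, wq), matmul(k, wk), matmul(v, wv))
             for wq, wk, wv in zip(w_q, w_k, w_v)]
    concat = [[x for head in heads for x in head[t]] for t in range(len(q))]
    return matmul(concat, w_o)


# Self-attention (Q = K = V = x) with h = 2 heads, d_model = 2, d_k = d_v = 1.
x = [[1.0, 0.0], [0.0, 1.0]]
w_heads = [[[1.0], [0.0]], [[0.0], [1.0]]]          # head 1 reads dim 0, head 2 reads dim 1
w_o = [[1.0, 0.0], [0.0, 1.0]]                      # identity output projection
print(multi_head_attention(x, x, x, w_heads, w_heads, w_heads, w_o))
# approximately [[0.731, 0.5], [0.5, 0.731]]
```

Each head attends within its own one-dimensional subspace, so the two heads produce different weightings of the same tokens; this is the sense in which multiple heads let the encoder capture several kinds of word interaction at once.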

The T-TC-INT model uses the SE-A mechanism to weigh the relative importance of each word in a sentence, which helps it capture subtle sentiments and their varying intensities. SE-A also contextualizes each word with respect to its neighbors, leading to more refined sentiment predictions. The model is trained on diverse data so that it applies across a range of Arabic text styles and domains. The proposed Transformer-based approach with INT for AD text classification therefore aims to provide a more precise representation of sentiment and to address the challenge of varying sentiment intensities in Arabic text classification.