Multi-View and Multimodal Graph Convolutional Neural Network for Autism Spectrum Disorder Diagnosis

Abstract

1. Introduction

- Multi-View Attention Fusion Module: We introduce an attention-based module that fuses multiple views of each subject’s fMRI data into a single node representation, so that the network can draw on complementary features across views for ASD diagnosis.

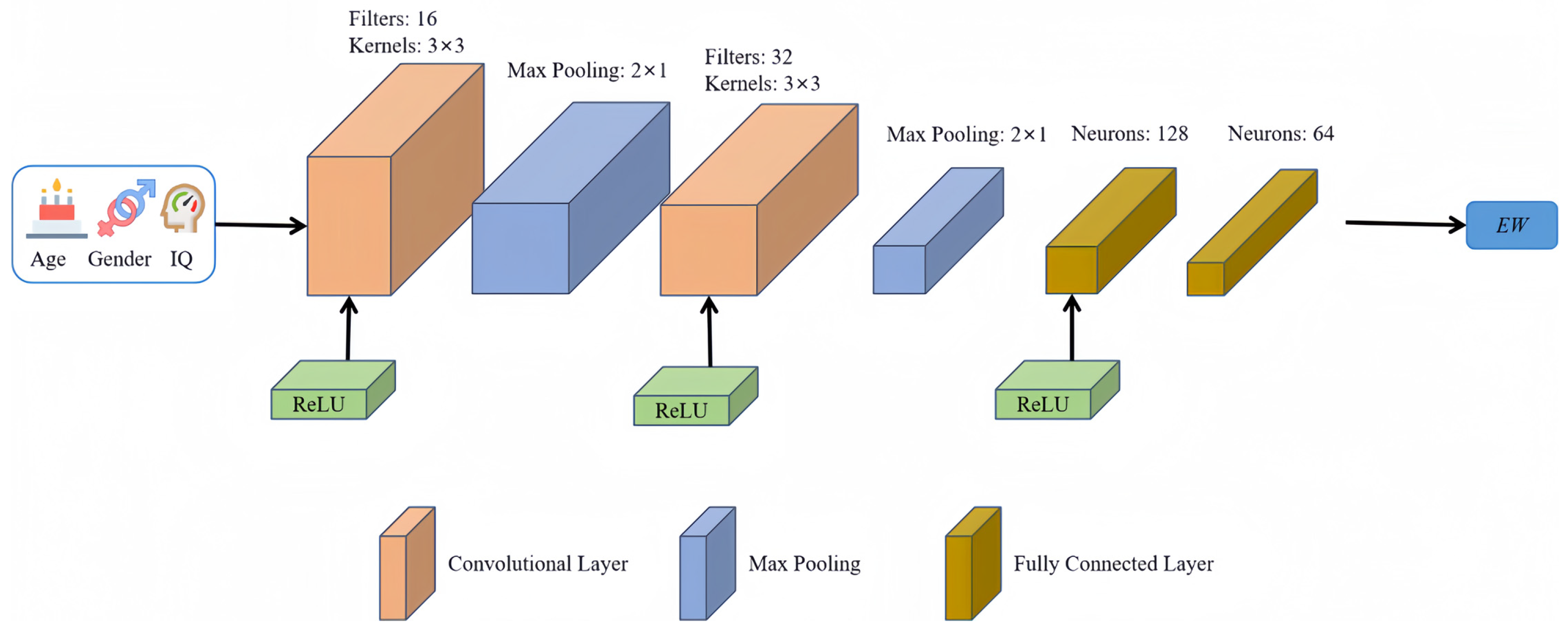

- Edge-Building Network Informed by Demographic Data: By incorporating demographic factors such as age and gender into edge construction, we obtain a graph structure in which inter-subject connections better reflect how similar the subjects are.

- Advanced Graph Structure Techniques: We combine DropEdge regularization with residual connections to counter two common problems of deep graph convolutional networks, oversmoothing and neighborhood explosion. DropEdge randomly removes edges during training, which improves robustness and reduces overfitting, while residual connections preserve feature diversity across layers and improve generalization.

- Validation and Evaluation on Public Datasets: The model was trained and evaluated on the ABIDE-I and ABIDE-II datasets. In our comparison with existing methods, MMGCN achieved 78.31% accuracy and an AUC of 0.84, the highest among the evaluated methods on both metrics.
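The DropEdge and residual-connection ideas listed above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses a first-order propagation rule with symmetric normalization as a stand-in for the spectral convolution, a plain ReLU, and hypothetical function names (`drop_edge`, `normalize_adj`, `residual_gcn_layer`); the drop rate and layer sizes are arbitrary.

```python
import numpy as np


def drop_edge(adj, drop_rate, rng):
    """Randomly remove a fraction of undirected edges from a symmetric adjacency matrix."""
    upper = np.triu(adj, k=1)                   # each undirected edge once
    rows, cols = np.nonzero(upper)
    keep = rng.random(rows.size) >= drop_rate   # keep each edge with prob. 1 - drop_rate
    out = np.zeros_like(adj)
    out[rows[keep], cols[keep]] = adj[rows[keep], cols[keep]]
    return out + out.T                          # restore symmetry


def normalize_adj(adj):
    """Symmetric normalization D^{-1/2} (A + I) D^{-1/2} (assumed stand-in filter)."""
    a_hat = adj + np.eye(adj.shape[0])          # self-loops keep every degree positive
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def residual_gcn_layer(h, adj_norm, weight):
    """One graph convolution with ReLU, plus an identity skip connection."""
    return np.maximum(adj_norm @ h @ weight, 0.0) + h


# Toy population graph: 6 subjects, 4 features each (all values hypothetical).
rng = np.random.default_rng(0)
adj = np.triu((rng.random((6, 6)) > 0.5).astype(float), k=1)
adj = adj + adj.T
h = rng.standard_normal((6, 4))
weight = rng.standard_normal((4, 4)) * 0.1

adj_train = drop_edge(adj, drop_rate=0.3, rng=rng)   # applied only during training
h_next = residual_gcn_layer(h, normalize_adj(adj_train), weight)
```

The skip connection requires the layer's input and output widths to match, which is why `weight` is square here; at evaluation time the full adjacency matrix would be used without dropping edges.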

2. Related Work

3. Materials and Methods

3.1. Datasets and Data Preprocessing

3.2. Multi-View Data Fusion to Build Nodes of the Graph

3.3. Multimodal Data Fusion to Build the Edges of the Graph

3.4. Improved Spectral Graph Convolutional Neural Network

4. Results

4.1. Experiment Settings

4.2. Parametric Analysis

4.3. Ablation Study

4.4. Performance Evaluation

4.5. Leave-One-Site-Out Cross-Validation

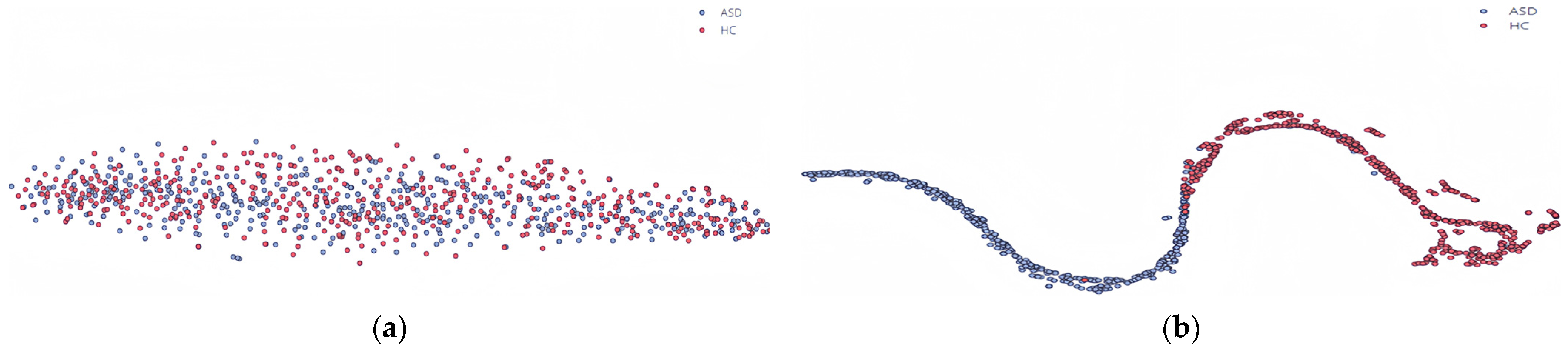

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| View | DAE-1 Input Layer | DAE-1 Hidden Layer | DAE-1 Output Layer | DAE-2 Input Layer | DAE-2 Hidden Layer | DAE-2 Output Layer |
|---|---|---|---|---|---|---|
| AAL | 6670 | 3500 | 6670 | 3500 | 2500 | 3500 |
| CC200 | 19,900 | 10,000 | 19,900 | 10,000 | 2500 | 10,000 |
| CC400 | 79,800 | 40,000 | 79,800 | 40,000 | 2500 | 40,000 |
| HO | 6105 | 3100 | 6105 | 3100 | 2500 | 3100 |
| EZ | 6770 | 3500 | 6770 | 3500 | 2500 | 3500 |
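The DAE-1 input sizes above are mostly of the form n(n − 1)/2, which is the number of entries in the strict upper triangle of an n × n matrix. The sketch below assumes, without confirmation from the text here, that each view is a vectorized upper triangle of a region-by-region functional connectivity matrix, and recovers the implied number of regions. The function names are hypothetical.

```python
import math


def fc_feature_count(n_rois):
    """Number of unique off-diagonal entries in a symmetric n x n matrix."""
    return n_rois * (n_rois - 1) // 2


def rois_from_feature_count(n_features):
    """Invert n(n-1)/2; return None when no whole number of regions fits."""
    n = (1 + math.isqrt(1 + 8 * n_features)) // 2
    return n if fc_feature_count(n) == n_features else None


# DAE-1 input layer sizes copied from the table above.
table_inputs = {"AAL": 6670, "CC200": 19900, "CC400": 79800, "HO": 6105, "EZ": 6770}
implied_rois = {view: rois_from_feature_count(k) for view, k in table_inputs.items()}

for view, n in implied_rois.items():
    print(view, n)
```

Under this assumption the AAL, CC200, CC400, and HO sizes correspond to 116, 200, 400, and 111 regions respectively, while the listed EZ size has no whole-number solution.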

| View | Accuracy (%) | Precision (%) | Recall (%) | AUC |
|---|---|---|---|---|
| AAL | 71.74 | 71.09 | 73.20 | 0.75 |
| CC200 | 72.37 | 71.92 | 74.36 | 0.76 |
| CC400 | 73.26 | 72.24 | 75.69 | 0.77 |
| HO | 70.42 | 69.78 | 72.10 | 0.74 |
| EZ | 69.53 | 70.11 | 71.35 | 0.73 |

| Method | Accuracy (%) | Precision (%) | Recall (%) | AUC |
|---|---|---|---|---|
| GCN | 71.14 | 69.30 | 74.96 | 0.74 |
| GCN + Fusion Module | 73.62 | 73.72 | 77.09 | 0.77 |
| GCN + Fusion Module + Edge-building network | 76.38 | 76.33 | 79.82 | 0.80 |
| GCN + Fusion Module + Edge-building network + DropEdge | 77.25 | 77.51 | 80.56 | 0.82 |
| MMGCN | 78.31 | 78.18 | 81.73 | 0.84 |

| Method | Accuracy (%) | Precision (%) | Recall (%) | AUC |
|---|---|---|---|---|
| DAE | 69.26 | 63.84 | 76.41 | 0.70 |
| ASD-DiagNet | 70.41 | 70.47 | 71.55 | 0.72 |
| GCN | 71.14 | 69.30 | 74.96 | 0.74 |
| MVS-GCN | 70.08 | 66.23 | 71.02 | 0.70 |
| Hi-GCN | 74.36 | 66.89 | 72.67 | 0.79 |
| EV-GCN | 76.21 | 77.35 | 84.40 | 0.82 |
| MMGCN | 78.31 | 78.18 | 81.73 | 0.84 |

| Site | Number | GCN | Hi-GCN | MMGCN |
|---|---|---|---|---|
| CALTECH | 32 | 56.84 | 62.72 | 73.23 |
| CMU | 26 | 71.03 | 73.62 | 81.53 |
| KKI | 46 | 72.91 | 78.61 | 78.71 |
| LEUVEN | 54 | 64.69 | 70.57 | 75.80 |
| MAX_MUN | 45 | 47.83 | 53.52 | 70.71 |
| NYU | 160 | 72.17 | 80.98 | 80.07 |
| OHSU | 23 | 72.53 | 75.01 | 79.11 |
| OLIN | 33 | 67.14 | 69.60 | 82.04 |
| PITT | 51 | 73.56 | 79.08 | 74.87 |
| SBL | 27 | 57.05 | 62.62 | 81.71 |
| SDSU | 31 | 64.40 | 67.25 | 68.71 |
| STANFORD | 37 | 53.59 | 62.19 | 69.27 |
| TRINITY | 44 | 57.84 | 60.52 | 69.41 |
| UCLA | 90 | 68.49 | 71.27 | 73.76 |
| UM | 132 | 67.91 | 73.80 | 82.72 |
| USM | 67 | 70.49 | 79.00 | 68.99 |
| YALE | 51 | 66.15 | 75.01 | 77.63 |
| Average | 56 | 64.98 | 70.32 | 75.78 |
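As a rough illustration of how the per-site table above could be produced and summarized, the following sketch builds leave-one-site-out folds from site labels and recomputes the "Average" row as an unweighted mean over sites. It assumes the "Number" column is a per-site subject count and that the average is not weighted by site size; `loso_splits` is a hypothetical helper, not a function from the paper.

```python
from statistics import mean

# (site, number, GCN, Hi-GCN, MMGCN) copied from the table above.
ROWS = [
    ("CALTECH", 32, 56.84, 62.72, 73.23),
    ("CMU", 26, 71.03, 73.62, 81.53),
    ("KKI", 46, 72.91, 78.61, 78.71),
    ("LEUVEN", 54, 64.69, 70.57, 75.80),
    ("MAX_MUN", 45, 47.83, 53.52, 70.71),
    ("NYU", 160, 72.17, 80.98, 80.07),
    ("OHSU", 23, 72.53, 75.01, 79.11),
    ("OLIN", 33, 67.14, 69.60, 82.04),
    ("PITT", 51, 73.56, 79.08, 74.87),
    ("SBL", 27, 57.05, 62.62, 81.71),
    ("SDSU", 31, 64.40, 67.25, 68.71),
    ("STANFORD", 37, 53.59, 62.19, 69.27),
    ("TRINITY", 44, 57.84, 60.52, 69.41),
    ("UCLA", 90, 68.49, 71.27, 73.76),
    ("UM", 132, 67.91, 73.80, 82.72),
    ("USM", 67, 70.49, 79.00, 68.99),
    ("YALE", 51, 66.15, 75.01, 77.63),
]


def loso_splits(site_labels):
    """Yield (held_out_site, train_indices, test_indices), one fold per site."""
    for site in sorted(set(site_labels)):
        test = [i for i, s in enumerate(site_labels) if s == site]
        train = [i for i, s in enumerate(site_labels) if s != site]
        yield site, train, test


# One site label per subject, expanded from the "Number" column (assumed subject counts).
site_labels = [site for site, n, *_ in ROWS for _ in range(n)]
folds = list(loso_splits(site_labels))

# Unweighted mean over sites for each method.
averages = {
    name: round(mean(row[col] for row in ROWS), 2)
    for col, name in ((2, "GCN"), (3, "Hi-GCN"), (4, "MMGCN"))
}

# Number of held-out sites on which MMGCN scores strictly highest.
mmgcn_best = sum(1 for _, _, g, h, m in ROWS if m > max(g, h))
```

Each fold trains on all sites except one and tests on the held-out site, so the per-site scores indicate how well a model transfers to a scanner and cohort it has not seen.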

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Song, T.; Ren, Z.; Zhang, J.; Wang, M. Multi-View and Multimodal Graph Convolutional Neural Network for Autism Spectrum Disorder Diagnosis. Mathematics 2024, 12, 1648. https://doi.org/10.3390/math12111648