Abstract

As a classical combinatorial optimization problem, the traveling salesman problem (TSP) has been extensively investigated in the fields of Artificial Intelligence and Operations Research. Because it is NP-hard, it remains challenging to solve both effectively and efficiently. Owing to its theoretical significance and wide practical applications, great effort has been devoted to solving it from the point of view of intelligent search. In this paper, we propose a two-stage probe-based search optimization algorithm for solving both symmetric and asymmetric TSPs through the stages of route development and a self-escape mechanism. Specifically, in the first stage, a proportion threshold filter of potential basis probes (partial routes) is set up at each step of the complete route development process, so that poor basis probes with longer routes are filtered out automatically. Moreover, four local augmentation operators are employed to improve these potential basis probes at each step. In the second stage, a self-escape mechanism is applied to the obtained complete routes to prevent the probe-based search from being trapped in a locally optimal solution. The experimental results on a collection of benchmark TSP datasets demonstrate that our proposed algorithm is more effective than other state-of-the-art optimization algorithms. In fact, it achieves the best-known solutions on many benchmark datasets and, in certain cases, even generates solutions that are better than the best-known ones.

Keywords:

traveling salesman problem (TSP); probe machine; filter; local augmentation operators; self-escape mechanism; route modification and development

MSC:

90B10; 90B20; 68P10

1. Introduction

The traveling salesman problem (TSP) is a well-known combinatorial optimization problem: given a set of cities, find the lowest-cost route that visits all of them. It is NP-hard (its decision version is NP-complete) [1,2], so no algorithm is known that solves it exactly in polynomial time. Because of its wide applicability and computational complexity, many researchers have investigated the TSP in search of effective and efficient solution methods, and a variety of optimization algorithms have been designed over the last few decades. According to [2,3], these algorithms can be categorized into two main streams: exact algorithms and heuristic algorithms. Each stream offers different tradeoffs in terms of solution quality, computational efficiency, and applicability to different problem instances. Exact algorithms guarantee optimal solutions, but their execution time grows exponentially with the problem size in the worst case. They are therefore suitable only for small TSPs and become computationally prohibitive for medium- to large-scale problems, even when supercomputers are used [4]. Heuristic algorithms, on the other hand, rely on efficient search rules to obtain good approximate solutions instead.

Heuristic optimization algorithms are more applicable in practice because they can deal with large-scale TSPs. They cannot guarantee an optimal solution, but they can provide a satisfactory one at an affordable computational cost. They are generally designed with the help of problem-specific knowledge and intuition to construct a reasonable route. During the search, they use greedy strategies to guide the search within a limited region of the solution space, so the solutions improve over successive iterations while the search remains local. Better heuristic algorithms require a deeper understanding of the solution domains and structures, from which high-quality solutions can be found effectively and efficiently. However, a heuristic algorithm may perform well on certain instances but poorly on others, and it is often expensive to adapt it to new instances and problems. In general, heuristic algorithms can serve as building blocks for more sophisticated meta-heuristic approaches.

Meta-heuristic approaches are more generic and flexible than heuristic algorithms and are often used when heuristic approaches are insufficient or impractical. They have been widely adopted for TSPs because they can efficiently explore large solution spaces and find good approximate solutions at relatively low computational cost. Most of these algorithms are inspired by natural and biological behaviors and employ certain top-level strategies to solve TSPs. In such an approach, the search scheme is generally designed to guide local improvement operators intelligently, so that a robust iterative process is produced through a proper balance between diversification and intensification in each search iteration. Diversification and intensification refer to exploration and exploitation of the solution search space, respectively. The effectiveness of a meta-heuristic depends on its intensification and diversification strategy, and its efficiency depends on how it balances global exploration against convergent search in promising regions. However, meta-heuristics often suffer from premature convergence, which traps the search process in a locally optimal solution. Moreover, they use many parameters that need to be tuned, and most of them yield non-deterministic solutions because of the randomness in the process. Their effectiveness may be enhanced by combining two or more algorithms into a hybrid form. A hybrid algorithm often performs better, but it requires a higher computational cost, and effective hybridization is difficult to achieve.

In 2016, a new conceptual computational model named Probe Machine (PM) was proposed by Xu [5]. It is a fully parallel computing model in which data are placed in a nonlinear mode. Motivated by the probe concept and data structure of the PM, we first designed a PM-based optimization algorithm for solving symmetric TSPs [6]. It is a route construction and search procedure coupled with a filtering mechanism. Specifically, it starts with all possible sub-routes consisting of three cities, and then each potential sub-route is extended from its two ends step by step until complete routes are formed. At the same time, the worst routes are filtered out according to a filtering proportion value. Its advantages are its conceptual clarity and easy implementation. However, it provides no means of modifying and developing new routes from the currently retained potential routes, so some promising routes may easily be missed.

To remedy this drawback, we further designed a dynamic route construction optimization algorithm for both symmetric and asymmetric TSPs by integrating the probe concept with a local search mechanism [7]. This PM-based dynamic algorithm modifies and develops the routes while they are being built: the embedded local search operators are applied consecutively to the retained potential routes to produce more potential routes before each subsequent expansion. In this paper, we extend our previous study of the PM-based search optimization framework methodologically and theoretically. Specifically, we design a two-stage search optimization algorithm for solving both symmetric and asymmetric TSPs through the stages of probe-based route development and a self-escape mechanism. In the first stage, the key idea is to design a potential route filter for each step of the probe-based route development process such that a sufficient number of potential (valuable) partial routes is retained at every step; hence, the probability of producing the best complete route is increased under limited computational resources. Moreover, local augmentation operators are employed to extend and improve the retained potential partial routes at each step. In the second stage, a self-escape mechanism is applied to the complete routes obtained in the first stage to prevent the probe-based search from being trapped in a locally optimal solution. In this way, the search can escape from local optima, and its global optimization capability is enhanced. In short, the first stage constructs and develops a set of good complete routes dynamically, step by step, and the second stage escapes from stagnant routes where possible. The main contributions of this work are summarized as follows:

- A two-stage probe-based search optimization algorithm is designed for solving both symmetric and asymmetric TSPs through the stages of probe-based route development and a self-escape mechanism. The experimental results demonstrate that our proposed algorithm performs better than the other state-of-the-art algorithms with respect to the quality of the solution over a wide range of TSP datasets.

- A proportion value threshold filter is designed and integrated into the probe-based search optimization framework to retain a sufficient number of potential routes in each step of the route development process. Specifically, we set an initial proportion value for filtering potential partial routes in the first step and then adjust it dynamically in the following steps.

- Four local augmentation operators are designed and employed on each of the developed potential routes in an efficient manner so that all the retained potential routes are further augmented and improved consecutively at each step.

- A self-escape mechanism is further implemented on the obtained complete routes from the first stage to prevent the probe-based search from being trapped in a locally optimal solution.

- A statistical analysis based on the Wilcoxon signed-rank test is conducted to validate the results of our proposed algorithm against those of other benchmark optimization algorithms.

The rest of this paper is organized as follows. The mathematical description of the TSP is provided in Section 2. Then, the concept of the probe is introduced in Section 3. We further describe our adopted proportion threshold filter in the probe-based search in Section 4 and our employed local augmentation operators in Section 5. Section 6 presents our proposed probe-based search optimization algorithm in detail. The experimental results and discussion are summarized in Section 7. Finally, we include a brief conclusion in Section 8.

2. TSP Mathematical Description

The TSP is a path planning optimization problem of finding the lowest-cost route through a given set of cities. The route must visit each city exactly once and finally return to the starting city. The problem is NP-hard; consequently, no known algorithm can solve large instances exactly in polynomial time. As the number of cities increases, the number of possible routes grows factorially, making it computationally infeasible to find the optimal solution of large instances by brute force. Despite this computational complexity, the TSP has attracted great attention from scientists and engineers because of its practical value and its connections to other optimization problems. To date, no general method has been able to tackle this problem effectively [8].
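To make the factorial growth concrete, the following minimal Python sketch counts distinct closed tours. Fixing the start city removes rotations and leaves (n-1)! orderings; when the costs are symmetric, a tour and its reverse have the same cost, giving (n-1)!/2. The function names are illustrative only, and the brute-force counter is included merely to cross-check the formula for small n.

```python
import itertools
import math


def distinct_tours(n: int, symmetric: bool = True) -> int:
    """Number of distinct closed tours through n >= 3 cities."""
    count = math.factorial(n - 1)              # fix city 0 as the start; rotations coincide
    return count // 2 if symmetric else count  # a tour and its reverse coincide


def brute_force_count(n: int, symmetric: bool = True) -> int:
    """Enumerate tours that start at city 0 and count the unique ones (small n only)."""
    seen = set()
    for perm in itertools.permutations(range(1, n)):
        tour = (0,) + perm
        if symmetric:
            # Traversing the same cycle backwards from city 0 gives (0,) + reversed perm.
            tour = min(tour, (0,) + perm[::-1])
        seen.add(tour)
    return len(seen)


for n in (5, 10, 20):
    print(n, distinct_tours(n))
```

For n = 20 the symmetric count already exceeds 6 × 10^16, which is why exhaustive enumeration is ruled out beyond very small instances.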

The TSP was first mathematically formulated by Karl Menger in 1930 [9], and since then it has been extensively investigated in diverse fields of application. Typical real-life applications of the TSP include transport routing, circuit design, X-ray crystallography, microchip production, scheduling, mission planning, aviation, logistics management, DNA sequencing, data association, image processing, pattern recognition, and many more [10,11,12]. Therefore, it is important and valuable to design and implement effective algorithms for solving the TSP.

The TSP can be represented as a graph-theoretic problem. Let $G=(V,E)$ be a directed graph, where $V=\{c_1,c_2,\ldots,c_n\}$ is the set of vertices (nodes) and $E=\{(c_i,c_j): c_i,c_j\in V,\ i\neq j\}$ is the set of edges. Each vertex $c_i$ denotes the position of a city, and each edge $(c_i,c_j)$ indicates the path from the $i$-th city to the $j$-th city. Moreover, a non-negative cost (distance) $d(c_i,c_j)$ is associated with each edge to represent its weight. If $d(c_i,c_j)=d(c_j,c_i)$ for all $i,j$, the problem is referred to as a symmetric TSP, whereas the asymmetric TSP corresponds to the case with $d(c_i,c_j)\neq d(c_j,c_i)$ for at least one pair of edges $(c_i,c_j)$ and $(c_j,c_i)$ of $G$. The aim is to construct a complete route $T$ with $n$ distinct cities such that the total traveling cost (distance) of the route is minimized, i.e., its fitness is maximized. Let $T=(t_1,t_2,\ldots,t_n)$, with all $t_i\in V$ distinct, be a complete route and $f(T)$ be its route cost (distance). Then, the objective function of the TSP can be formulated as follows [13]:

$$\min f(T)=\sum_{i=1}^{n-1} d(t_i,t_{i+1})+d(t_n,t_1) \tag{1}$$

where $d(t_i,t_{i+1})$ is the cost (distance) of the local path between the cities $t_i$ and $t_{i+1}$. If $(x_i,y_i)$ and $(x_j,y_j)$ are the coordinates of $t_i$ and $t_j$, then $d(t_i,t_j)$ is calculated by the Euclidean distance as follows:

$$d(t_i,t_j)=\sqrt{(x_i-x_j)^2+(y_i-y_j)^2} \tag{2}$$
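The route cost described in this section (the sum of consecutive Euclidean legs plus the closing leg back to the starting city) can be sketched in Python as follows. Taking the fitness as the reciprocal of the cost is an assumption consistent with the statement that minimizing cost means maximizing fitness; the helper names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def euclidean(a: Point, b: Point) -> float:
    """Straight-line distance between two city coordinates."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def route_cost(route: Sequence[int], coords: Sequence[Point]) -> float:
    """Consecutive legs of the route plus the closing leg back to the start city."""
    legs = zip(route, list(route[1:]) + [route[0]])
    return sum(euclidean(coords[a], coords[b]) for a, b in legs)


def fitness(route: Sequence[int], coords: Sequence[Point]) -> float:
    """Assumed fitness: reciprocal of the route cost (higher is better)."""
    return 1.0 / route_cost(route, coords)


square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
print(route_cost([0, 1, 2, 3], square))   # route along the perimeter
print(route_cost([0, 2, 1, 3], square))   # crossing route, which is longer
```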

3. Probe Concept

The probe is conceptually a detection device or related operator that accurately recognizes a piece of a particular structure or pattern from the whole description of an object and implements certain operations, such as connection or transmission, between the detected structures. It has been extensively used for various purposes in diverse fields like medical, engineering, biology, computer science, electronics, information security, archaeology, and so on [5,14]. In the medical field, ultrasonic probes are utilized to generate acoustic signals and detect return signals. These probes are an essential component of ultrasound systems and work by emitting high-frequency sound waves into the body or material being examined and then receiving the echoes that bounce back. On the other hand, a short single-stranded DNA or RNA fragment (approximately 20 to 500 bp) is designed as a biological probe to detect its complementary DNA sequence or locate a particular clone. In addition, the probe concept is adopted in electronics to perform various electronic tests, while archaeologists use it to interpret the soil’s nature and to decide where and how to excavate.

As a computational model, the Probe Machine (PM) [5] was developed in which each probe is an operator that connects two pieces of fiber-tailed data, or transmits information from one piece of fiber-tailed data to another, if their tails are consistent. The probe in the PM accomplishes three functions. First, it accurately finds any two target data pieces with perfectly matching adjacent edges (fiber tails). Then, it takes any pair of possible target data pieces from the database of available fiber-tailed data. Finally, it performs well-defined operations to extend the fiber-tailed data step by step to a problem solution. Motivated by the probe of the PM, we design a new kind of probe for our PM-based search optimization approach to solving TSPs. In our approach, a probe is a connection operator on consistent city sequences (possible sub-routes), so that a complete route can be produced step by step. A sub-route consisting of $m$ cities (and hence $m-1$ edges) is referred to as an $m$-city basis probe, and its two outer edges are called the wings of the probe. The probe performs two actions: it first finds the required adjacent edges and then generates the next possible basis probes based on the availability of these edges. Each basis probe uses its wings to detect and append two other adjacent edges to extend the current route. In this way, each basis probe is extended automatically at both ends in every step of the procedure, and the process continues until all cities are included, i.e., until complete $n$-city basis probes are formed. Mathematically, to arrive at a complete probe search of an $n$-city problem, the procedure needs to execute $K$ steps, where

$$K=\begin{cases}\dfrac{n-1}{2}, & \text{if } n \text{ is odd},\\[4pt] \dfrac{n}{2}, & \text{if } n \text{ is even}.\end{cases} \tag{3}$$

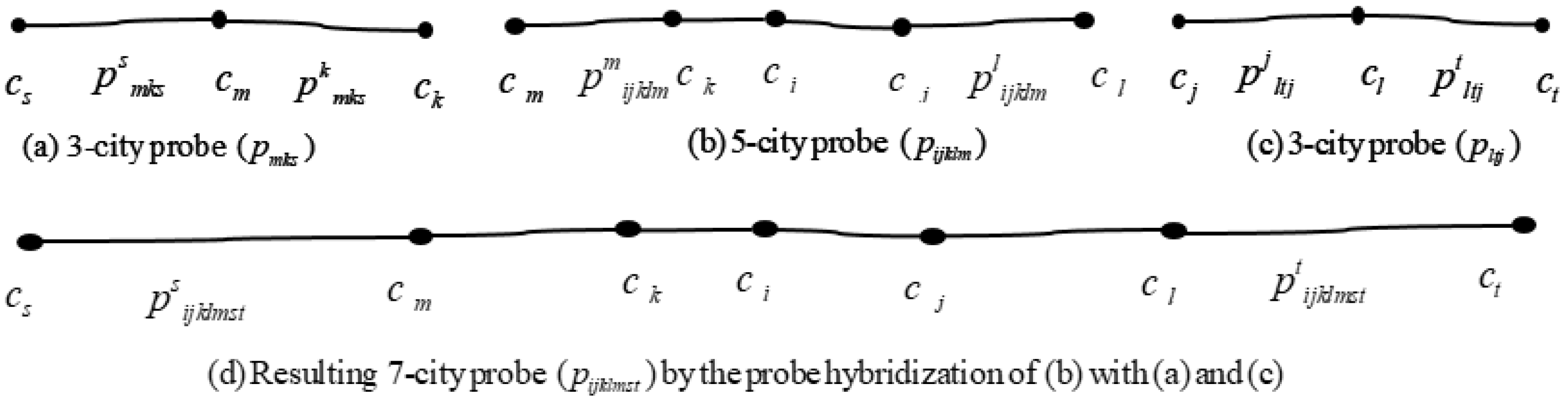

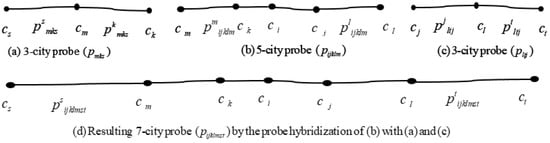

To facilitate understanding of the network expansion mechanism of the probe, it is illustrated graphically in Figure 1, where a 5-city basis probe is hybridized with two 3-city basis probes to generate a 7-city basis probe. In our model, the first step generates 3-city basis probes, the second step generates 5-city basis probes, the third step generates 7-city basis probes, and so on, until the final step generates complete n-city basis probes. The number of cities visited step-wise by the probe operation is provided in Table 1. In Figure 1, the basis probes in panels (a) and (c) are sub-routes consisting of three cities, each with two wings, while the basis probe in panel (b) is a sub-route consisting of five cities with two wings. To expand the network of the 5-city basis probe (b), the probe uses its two wings to search for two 3-city basis probes. One wing of basis probe (a) and one wing of basis probe (c) are consistent with (i.e., match) the two wings of basis probe (b), whereas the other wings of (a) and (c) are not shared with (b). After finding such basis probes, the 5-city basis probe hybridizes with the two 3-city basis probes through the matching wings. This hybridization expands the current network and generates the 7-city basis probe shown in panel (d), which again has two wings. In this way, the hybridized probes extend their network, and the redundant probes that are not hybridized are left out.
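A minimal Python sketch of one hybridization step as described for Figure 1 is given below. It assumes that "consistent" wings means identical city pairs: the left 3-city probe must end with the left wing of the probe being extended, and the right 3-city probe must start with its right wing. The city labels, the `symmetric` flag (which lets a 3-city probe be read in either direction), and the helper names are hypothetical.

```python
from typing import Optional, Tuple

Probe = Tuple[int, ...]


def _oriented(three: Probe, wing: Tuple[int, int], at_left: bool,
              symmetric: bool) -> Optional[Probe]:
    """Return `three` (or its reverse, if symmetric) so that it shares `wing`."""
    candidates = (three, three[::-1]) if symmetric else (three,)
    for t in candidates:
        if (t[1:] if at_left else t[:2]) == wing:
            return t
    return None


def hybridize(probe: Probe, left: Probe, right: Probe,
              symmetric: bool = True) -> Optional[Probe]:
    """Extend `probe` by one city at each end through two 3-city probes.

    Assumed consistency rule: `left` ends with the probe's left wing and
    `right` starts with the probe's right wing; the two new cities must be
    distinct and not already on the probe.
    """
    left_t = _oriented(left, probe[:2], at_left=True, symmetric=symmetric)
    right_t = _oriented(right, probe[-2:], at_left=False, symmetric=symmetric)
    if left_t is None or right_t is None:
        return None
    new_left, new_right = left_t[0], right_t[2]
    if new_left == new_right or new_left in probe or new_right in probe:
        return None
    return (new_left,) + probe + (new_right,)


# Figure 1-style example with hypothetical city labels.
b = (1, 2, 3, 4, 5)   # 5-city basis probe with wings (1, 2) and (4, 5)
a = (7, 1, 2)         # 3-city probe sharing the left wing
c = (4, 5, 6)         # 3-city probe sharing the right wing
d = hybridize(b, a, c)
print(d, "wings:", d[:2], d[-2:])
```

The resulting 7-city probe carries the new wings (7, 1) and (5, 6), which are the ones used in the next step.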

Figure 1.

Illustration of probe hybridization mechanism: (a) 3-city basis probe; (b) 5-city basis probe; (c) 3-city basis probe; (d) resulting 7-city basis probe by the probe hybridization of (b) with (a,c).

Table 1.

The number of cities visited step-wise by the probe operation.
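The step-wise city counts can be tabulated with the following Python sketch. It reflects our reading of the scheme behind Table 1: start from 3-city probes, add one city at each wing per step, and add a single city in the last step when n is even (as in the six-city example of Section 4). It is an illustration, not the authors' code.

```python
from typing import List


def num_steps(n: int) -> int:
    """Steps needed to grow 3-city probes into complete n-city routes (n >= 3)."""
    return (n - 1) // 2 if n % 2 else n // 2


def cities_per_step(n: int) -> List[int]:
    """Number of cities in the basis probes produced at steps 1..num_steps(n)."""
    return [min(2 * k + 1, n) for k in range(1, num_steps(n) + 1)]


for n in (6, 7, 10):
    print(n, num_steps(n), cities_per_step(n))
```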

4. Adopted Filtering Mechanism

In searching for TSP solutions, a “filter” typically refers to a technique or mechanism that reduces the search space or eliminates unpromising solutions during the optimization process. Such techniques can play a crucial role in improving the efficiency of algorithms that aim to find optimal or near-optimal TSP solutions. Key filtering concepts used for TSPs include bounding filters, dominance rules, and symmetry-breaking filters. These filters can significantly improve the efficiency of TSP algorithms, enabling larger search spaces to be explored and near-optimal solutions to be found within a reasonable time.

The filter adopted in our approach is a proportion threshold filter that is set up in each step of the probe-based search process. It helps the search operator retain the minimum necessary number of potential partial routes in each step of the complete route development process. The potential routes of the current step are used to generate the possible basis routes of the next step during route construction. Therefore, the performance of the probe-based search is highly influenced by the choice of the filtering proportion value, and setting an appropriate proportion value is a rather challenging problem for the effectiveness of our approach. An inappropriate proportion value can trap the whole process in a local optimum, which not only yields a worse solution but also consumes more computational time. A larger proportion value may provide more chances to produce a better solution. However, it also rapidly increases the number of possible basis routes generated in the next step, and hence the computational cost, and sometimes the model cannot even produce a feasible complete route. It is therefore important to set up a reasonable proportion threshold filter in each working step so that the worst generated routes are filtered out. Based on theoretical analysis and experiments, we set the proportion value function for the kth step as follows:

where k denotes the step number, n stands for the number of cities in the test dataset, and the remaining parameter is a constant. The value of this constant depends on n and can be fitted by trial and error. The experiments show that in some cases a smaller value is needed to provide a good solution, while in other cases a larger value is required to obtain a satisfactory one. The constant is therefore not fixed in advance; its choice offers different tradeoffs in terms of solution quality, computational efficiency, and applicability to different problem instances. The experiments and theoretical analysis indicate that restricting it to a bounded interval gives a good adjustment for computing a satisfactory solution in an acceptable time frame.

The design of the filter with the threshold value in Equation (4) is based on the idea that, initially, the partial routes are too short for their quality to be judged clearly. As the step number increases, the routes gradually become longer and their quality becomes clearer. Thus, it is reasonable to set a large proportion value in the first step and then reduce it in the following steps, i.e., to let the proportion value decrease as k increases. The experiments show that, as the number k of steps increases, the number of retained potential partial routes first increases for a few steps and then starts to decrease. From the step where the reduction begins, it decreases very rapidly in the remaining steps; in some cases, only one single route is retained. To mitigate this problem, the proportion value can be kept unchanged after a certain portion of the steps has been conducted. In addition, if the number of retained potential partial routes at any step is too small, the procedure is considered to have fallen into the trap of a locally optimal solution. Once trapped, it cannot escape, because the proportion value keeps decreasing and only one single route is retained in the remaining steps. To avoid this, the proportion value is increased at that step so that the number of retained potential routes falls within a prescribed interval. After that, the proportion value decreases as before in the remaining steps. In short, we set an initial filtering proportion value in the first step, and the algorithm then adjusts it dynamically in the remaining steps. Therefore, the search process avoids collapsing to one single route, and the probability of producing a better complete route is increased.
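The rescue rule above (raise the proportion at a step where too few routes would survive) can be sketched as follows. The parameters `too_small` and `rescue_low` are hypothetical stand-ins for the threshold and the lower end of the target interval mentioned in the text, and rounding down is an assumption; the scheduled proportion itself would come from Equation (4).

```python
import math
from typing import Tuple


def retained_count(n_generated: int, proportion: float,
                   too_small: int, rescue_low: int) -> Tuple[int, float]:
    """Return (number of probes to retain, effective proportion) for one step.

    If the scheduled proportion would keep fewer than `too_small` probes, the
    proportion is raised at this step only, so that `rescue_low` probes
    (capped by the number generated) are kept instead.
    """
    keep = max(1, math.floor(proportion * n_generated))
    if keep < too_small:
        keep = min(n_generated, rescue_low)
    return keep, keep / n_generated


print(retained_count(200, 0.5, too_small=5, rescue_low=20))   # scheduled value is kept
print(retained_count(200, 0.01, too_small=5, rescue_low=20))  # too few: proportion raised
```

Using the lower end of the target interval keeps the extra computation as small as possible; any count inside that interval would be equally consistent with the description.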

The filtering mechanism can be explained through a concrete example. Suppose that we would like to solve a six-city symmetric TSP. In the first step of our approach, 60 basis sub-routes of three cities are generated. If we set the proportion value to 1/2 in the first step, then 30 potential 3-city sub-routes are retained before leaving the first step, and the remaining 30 are filtered out based on their fitness values. These retained potential basis routes are then modified and improved through the local augmentation operators discussed in the next section. The improved sub-routes are referred to as the root basis probes (sub-routes). In the second step, 5-city basis sub-routes are generated from these 30 good basis routes. Suppose that 180 basis sub-routes are produced in the second step; if we set the proportion value to 1/3 in this step, then 60 basis sub-routes are retained for route extension. These 60 basis sub-routes are used to construct 6-city complete routes, from which the best complete route is finally selected.
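The ranking-and-truncation step of this example can be sketched in Python as follows. Fitness is assumed to be the reciprocal of cost, so sorting by ascending cost is the same as sorting by descending fitness; rounding down (with at least one survivor) is an assumption, and `fractions.Fraction` keeps proportions such as 1/3 exact. The placeholder probes simply use their index as their cost.

```python
import math
from fractions import Fraction
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def proportion_filter(probes: Sequence[T], cost: Callable[[T], float],
                      proportion) -> List[T]:
    """Keep the fittest floor(proportion * N) probes, but at least one."""
    ranked = sorted(probes, key=cost)          # ascending cost == descending fitness
    keep = max(1, math.floor(proportion * len(ranked)))
    return ranked[:keep]


# Six-city example from the text, with placeholder probes whose cost is their index.
step1 = proportion_filter(range(60), cost=float, proportion=Fraction(1, 2))
step2 = proportion_filter(range(180), cost=float, proportion=Fraction(1, 3))
print(len(step1), len(step2))
```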

5. Employed Local Augmentation Operators

In our solution augmentation process, four local augmentation operators are applied consecutively to each retained potential basis probe (sub-route) in each step of the route development procedure. These operators modify the existing sub-route iteratively and potentially improve its quality. The operators follow a first-improvement strategy: as soon as an improvement is found, it is accepted, and subsequent improvements are sought starting from the improved sub-route. In addition, each operator uses a well-defined decision function to avoid generating a worse route and wasting computational time. We briefly describe the operators in the following subsections. The pseudocode for improving potential basis probes (sub-routes) through the local augmentation operators is given in Algorithm 1.

Algorithm 1: Pseudocode of the potential basis probe improvement procedure via local augmentation operators

Input: a retained potential basis probe p; the fitness value of p; the distance matrix.
Output: the improved basis probe; the fitness value of the improved basis probe.
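A minimal Python sketch of the improvement procedure in Algorithm 1 is shown below, under stated assumptions: every operator is judged by the full path cost of the open basis probe (sum of consecutive edges), each operator is run to a first-improvement local optimum before the next one starts, the fitness is the reciprocal of the cost, and indices are 0-based. The function names are hypothetical; this is not the authors' implementation.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Route = List[int]
Matrix = Sequence[Sequence[float]]
Move = Callable[[Route, int, int], Optional[Route]]


def path_cost(route: Sequence[int], dist: Matrix) -> float:
    """Cost of an open basis probe: sum of its consecutive edges."""
    return sum(dist[a][b] for a, b in zip(route, route[1:]))


def two_opt(r: Route, i: int, j: int) -> Optional[Route]:
    """Drop edges (r[i], r[i+1]) and (r[j], r[j+1]); reverse r[i+1..j]."""
    if j < i + 2 or j + 1 >= len(r):
        return None                       # both edges must exist and be non-adjacent
    return r[:i + 1] + r[i + 1:j + 1][::-1] + r[j + 1:]


def reversion(r: Route, i: int, j: int) -> Optional[Route]:
    """Reverse the sub-path between cut points i and j (inclusive)."""
    return r[:i] + r[i:j + 1][::-1] + r[j + 1:]


def swap(r: Route, i: int, j: int) -> Optional[Route]:
    """Exchange the cities at positions i and j."""
    s = r[:]
    s[i], s[j] = s[j], s[i]
    return s


def insertion(r: Route, i: int, j: int) -> Optional[Route]:
    """Move the city at position i to just behind the city at position j (i < j)."""
    s = r[:i] + r[i + 1:]                 # after removal, r[j] sits at index j - 1
    return s[:j] + [r[i]] + s[j:]


def first_improvement(r: Route, dist: Matrix, move: Move) -> Route:
    """Accept the first cost-reducing move, then rescan from the improved route."""
    cost = path_cost(r, dist)
    improved = True
    while improved:                       # terminates: every acceptance lowers the cost
        improved = False
        for i in range(len(r) - 1):
            for j in range(i + 1, len(r)):
                cand = move(r, i, j)
                if cand is not None and path_cost(cand, dist) < cost:
                    r, cost, improved = cand, path_cost(cand, dist), True
                    break
            if improved:
                break
    return r


def augment(probe: Sequence[int], dist: Matrix) -> Tuple[Route, float]:
    """Apply the four operators consecutively; return (probe, fitness 1/cost)."""
    r = list(probe)
    for move in (two_opt, reversion, swap, insertion):
        r = first_improvement(r, dist, move)
    return r, 1.0 / path_cost(r, dist)


# Cities on a line at x = 0..4, so d(a, b) = |a - b|.
dist = [[abs(a - b) for b in range(5)] for a in range(5)]
print(augment([0, 2, 1, 3, 4], dist))
```

Because each operator accepts only strictly cheaper probes, the returned fitness is never lower than that of the input probe.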

5.1. The 2-Opt Operator

The 2-opt operator removes two edges from an existing potential basis probe and reconnects the resulting paths to create a new, better basis probe (possible route). Let $(t_i,t_{i+1})$ and $(t_j,t_{j+1})$, where $1\le i<j-1$ and $j\le m-1$, be two edges of a potential basis probe $p=(t_1,t_2,\ldots,t_m)$. The 2-opt operator reverses the local path between the cities $t_{i+1}$ and $t_j$ and generates a new basis probe $p'=(t_1,\ldots,t_i,t_j,t_{j-1},\ldots,t_{i+1},t_{j+1},\ldots,t_m)$. To avoid generating a worse basis probe, the move is accepted only if the following decision inequality holds:

$$d(t_i,t_j)+d(t_{i+1},t_{j+1}) < d(t_i,t_{i+1})+d(t_j,t_{j+1}) \tag{5}$$
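The decision test for 2-opt only needs four distances because, with symmetric distances, the reversed inner segment keeps its length. The Python sketch below (0-based indices, hypothetical helper names, Euclidean coordinates via `math.dist`) computes this edge-based gain and checks it against the full change in path cost. With asymmetric distances the inner segment's cost can change when it is reversed, so a full re-evaluation would be needed there.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def path_cost(route: Sequence[int], pts: Sequence[Point]) -> float:
    return sum(math.dist(pts[a], pts[b]) for a, b in zip(route, route[1:]))


def two_opt_gain(route: Sequence[int], pts: Sequence[Point], i: int, j: int) -> float:
    """Old minus new length of the exchanged edges (positive means improvement).

    Edges (t_i, t_{i+1}) and (t_j, t_{j+1}) are replaced by (t_i, t_j) and
    (t_{i+1}, t_{j+1}).
    """
    a, b, c, d = route[i], route[i + 1], route[j], route[j + 1]
    old = math.dist(pts[a], pts[b]) + math.dist(pts[c], pts[d])
    new = math.dist(pts[a], pts[c]) + math.dist(pts[b], pts[d])
    return old - new


def two_opt(route: Sequence[int], i: int, j: int) -> List[int]:
    """Reverse the local path t_{i+1} .. t_j."""
    route = list(route)
    return route[:i + 1] + route[i + 1:j + 1][::-1] + route[j + 1:]


pts = [(0.0, 0.0), (2.0, 0.0), (1.0, 0.0), (3.0, 0.0)]
p = [0, 1, 2, 3]
gain = two_opt_gain(p, pts, 0, 2)
if gain > 0:                          # decision inequality satisfied
    q = two_opt(p, 0, 2)
    print(q, gain, path_cost(p, pts) - path_cost(q, pts))
```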

5.2. Reversion Operator

The reversion operator first locates two different cities of a potential basis probe and then reverses the local path between them. Consider a potential basis probe $p=(t_1,t_2,\ldots,t_m)$ and two cut points $i$ and $j$ on $p$ with $1\le i<j\le m$. The reversion operator generates a new basis probe $p'=(t_1,\ldots,t_{i-1},t_j,t_{j-1},\ldots,t_i,t_{j+1},\ldots,t_m)$. To determine whether the reversion is beneficial, we check the following inequality, where $f(\cdot)$ denotes the route cost (distance):

$$f(p') < f(p) \tag{6}$$
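The following Python sketch illustrates the reversion test on a hypothetical three-city asymmetric cost matrix. Since the reversed segment's internal edges are traversed in the opposite direction, the sketch compares full path costs, $f(p')$ against $f(p)$, rather than a four-edge difference. Indices are 0-based and the helper names are illustrative.

```python
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def path_cost(route: Sequence[int], dist: Matrix) -> float:
    return sum(dist[a][b] for a, b in zip(route, route[1:]))


def reversion(route: Sequence[int], i: int, j: int) -> List[int]:
    """Reverse the local path between cut points i and j (0-based, inclusive)."""
    route = list(route)
    return route[:i] + route[i:j + 1][::-1] + route[j + 1:]


def try_reversion(route: Sequence[int], dist: Matrix, i: int, j: int) -> List[int]:
    """Keep the reversed probe only if its full cost is lower."""
    cand = reversion(route, i, j)
    return cand if path_cost(cand, dist) < path_cost(route, dist) else list(route)


# Asymmetric costs: travelling 2 -> 1 -> 0 is much cheaper than 0 -> 1 -> 2.
dist = [[0, 5, 9],
        [1, 0, 5],
        [9, 1, 0]]
print(try_reversion([0, 1, 2], dist, 0, 2))
```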

5.3. Swap Operator

The swap operator simply exchanges the positions of two cities of a potential basis probe to create a new basis probe. Let two positions $i$ and $j$, with $1\le i<j\le m$, be selected from a potential basis probe $p=(t_1,t_2,\ldots,t_m)$. The new basis probe obtained by applying the swap operator to $p$ is $p'=(t_1,\ldots,t_{i-1},t_j,t_{i+1},\ldots,t_{j-1},t_i,t_{j+1},\ldots,t_m)$. We accept the new basis probe if the following inequality holds, where $f(\cdot)$ denotes the route cost (distance):

$$f(p') < f(p) \tag{7}$$
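A short Python sketch of the swap move and its acceptance test is given below, using hypothetical cities placed on a line at x = 0, ..., 4 so that the costs are easy to check by hand. Indices are 0-based, so positions 1 and 3 correspond to $t_2$ and $t_4$ in the notation above.

```python
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def path_cost(route: Sequence[int], dist: Matrix) -> float:
    return sum(dist[a][b] for a, b in zip(route, route[1:]))


def swap(route: Sequence[int], i: int, j: int) -> List[int]:
    """Exchange the cities at positions i and j (0-based)."""
    s = list(route)
    s[i], s[j] = s[j], s[i]
    return s


# Cities on a line at x = 0..4, so d(a, b) = |a - b|.
dist = [[abs(a - b) for b in range(5)] for a in range(5)]
p = [0, 3, 2, 1, 4]
q = swap(p, 1, 3)
accepted = path_cost(q, dist) < path_cost(p, dist)   # decision inequality
print(q, path_cost(p, dist), path_cost(q, dist), accepted)
```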

5.4. Insertion Operator

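The insertion operator first picks two positions $i$ and $j$ with $i<j$ and then inserts the city $t_i$ immediately behind the city $t_j$. Consider a potential basis probe $p=(t_1,t_2,\ldots,t_m)$. The new basis probe generated by this operator is $p'=(t_1,\ldots,t_{i-1},t_{i+1},\ldots,t_j,t_i,t_{j+1},\ldots,t_m)$. The new basis probe is accepted if the following inequality holds, where $f(\cdot)$ denotes the route cost (distance):

$$f(p') < f(p) \tag{8}$$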
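The index bookkeeping of the insertion move is easy to get wrong, so the Python sketch below first applies it to symbolic labels (0-based positions 1 and 3, i.e., $t_2$ behind $t_4$) and then checks the acceptance test on hypothetical cities placed on a line. The helper names are illustrative.

```python
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def path_cost(route: Sequence[int], dist: Matrix) -> float:
    return sum(dist[a][b] for a, b in zip(route, route[1:]))


def insertion(route: Sequence, i: int, j: int) -> List:
    """Remove the city at position i and reinsert it right behind position j (i < j)."""
    s = list(route[:i]) + list(route[i + 1:])   # route[j] now sits at index j - 1
    return s[:j] + [route[i]] + s[j:]


print(insertion(["t1", "t2", "t3", "t4", "t5"], 1, 3))

# Cities on a line at x = 0..4, so d(a, b) = |a - b|.
dist = [[abs(a - b) for b in range(5)] for a in range(5)]
p = [0, 3, 1, 2, 4]
q = insertion(p, 1, 3)
print(q, path_cost(q, dist) < path_cost(p, dist))
```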

6. Two-Stage Search Optimization Algorithm

In order to maintain a good balance between the effectiveness and efficiency of probe-based route development, we present a two-stage search optimization algorithm for solving both symmetric and asymmetric TSPs. Functionally, the first stage is to construct and develop an appropriate set of good complete routes through the probe extension step by step dynamically, while the second stage is a self-escape loop to prevent the search from being trapped in a locally optimal solution if possible. We describe the two stages in detail in the following two subsections, respectively.

6.1. Stage 1—Good Complete Route Development

In the first stage, we treat partial routes as basis probes and extend them step by step to construct and develop a set of good complete routes. In each step, a proportion value threshold is set as a filter to remove the worst partial routes (basis probes). Moreover, four local augmentation operators are employed to modify and improve the retained partial routes. Depending on the number of cities in the given TSP, this stage consists of a certain number of steps (defined in Equation (3)) to complete the route development. Except for the first one, each step implements three operations: basis probe (partial route) generation, basis probe filtering, and basis probe improvement. The working steps of the first stage are described as follows:

Step-1: 3-city basis probes are generated by taking each city in turn as a center and forming all possible local two-path routes around it. A local two-path route consists of three cities, in which one city is central (internal) and the other two cities are adjacent to it; we simply refer to it as a 3-city basis probe. Considering an n-city TSP with city list $C=\{c_1,c_2,\ldots,c_n\}$, we let $c_i$ be the city located at position $i$ and $A_i$ be the set of cities adjacent to $c_i$, i.e., $A_i=C\setminus\{c_i\}$. Then, the set $\Phi_i$ of possible local two-path routes with internal city $c_i$ and adjacent cities $c_j$ and $c_k$ is defined as follows:

$$\Phi_i=\{(c_j,c_i,c_k) : c_j,c_k\in A_i,\ j\neq k\} \tag{9}$$

where $(c_j,c_i,c_k)$ represents a 3-city basis probe whose outer two wings are $(c_j,c_i)$ and $(c_i,c_k)$, respectively. In the symmetric case, $(c_j,c_i,c_k)$ and $(c_k,c_i,c_j)$ describe the same probe and are counted once. The probe uses these two wings to extend and develop the route in the next step. In total, the set $\Phi$ of possible 3-city basis probes is the union of all $\Phi_i$:

$$\Phi=\bigcup_{i=1}^{n}\Phi_i \tag{10}$$

After generating all possible basis probes, the quality (fitness) $F(p)$ of a basis probe $p$ is computed from its cost (distance) $f(p)$: the quality is inversely proportional to the cost, i.e., $F(p)=1/f(p)$, so a basis probe with a higher value of $F(p)$ is fitter, and vice versa. These basis probes are then ordered by fitness; e.g., the order $\mathrm{ord}(p)$ of the fittest basis probe is 1, that of the next fittest one is 2, etc. Finally, we retain certain potential basis probes from all generated ones with the help of the threshold value filter (defined in Equation (4)). These retained basis probes are considered the good and root probes of this step; root probes are 3-city potential basis probes that are kept for further route extension. Assuming that $\lambda_1$ is the threshold value of the filter (the proportion value) at this step, the set $\Gamma^{(1)}$ of retained good and root basis probes is constructed as follows:

$$\Gamma^{(1)}=\{p\in\Phi : \mathrm{ord}(p)\le\lambda_1 N_1\} \tag{11}$$

where $N_1=|\Phi|$ denotes the number of generated 3-city basis probes in $\Phi$. For clarity, we let $M_1=|\Gamma^{(1)}|$ be the number of retained good and root basis probes.
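The generation of 3-city basis probes in Step-1 can be sketched in Python as follows, with cities labelled 0, ..., n-1 and assuming, as in a complete graph, that every other city is adjacent to the central one. In the symmetric case each unordered pair of neighbours is taken once, which reproduces the 60 probes of the six-city example in Section 4; in the asymmetric case both orientations are kept. The function name is hypothetical.

```python
from itertools import combinations, permutations
from typing import List, Tuple

Probe = Tuple[int, int, int]


def three_city_probes(n: int, symmetric: bool = True) -> List[Probe]:
    """All local two-path routes (c_j, c_i, c_k) with internal city c_i."""
    probes = []
    for i in range(n):                                   # internal (central) city
        adjacent = [c for c in range(n) if c != i]       # every other city
        # Symmetric case: (c_j, c_i, c_k) and (c_k, c_i, c_j) are the same probe.
        pairs = combinations(adjacent, 2) if symmetric else permutations(adjacent, 2)
        probes.extend((j, i, k) for j, k in pairs)
    return probes


print(len(three_city_probes(6)), len(three_city_probes(6, symmetric=False)))
```

In general, this yields n(n-1)(n-2)/2 probes for a symmetric instance and n(n-1)(n-2) for an asymmetric one.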

Step-2: In this step, we generate 5-city basis probes with the retained good basis 3-city probes obtained from Step-1. Indeed, each retained good basis probe of Step-1 can be hybridized with other consistent 3-city basis probes and generate the next possible 5-city basis probes. Actually, the set of possible 5-city basis probes can be constructed through the connection operations as follows:

where indicates a 5-city basis probe as it is a route of 5 different cities. The outer two wings of this basis probe are and , respectively. Like in Step-1, the quality or fitness function of basis probe can be defined and computed in the same way to order the basis probes. Once the basis probes are constructed and their orders are obtained, the lower fitness basis probes are filtered out while the good potential basis probes are retained according to the threshold value of the filter. Actually, assuming that the filtering threshold or proportion value at this step is (defined in Equation (4)), the set of possible potential basis probes can be constructed as follows:

where the proportion is taken with respect to the number of 5-city basis probes generated in this step, and the number of retained potential basis probes is recorded separately. To possibly improve each potential basis probe, the four local search operators (explained in Section 5) are applied to it consecutively. If a better (fitter) basis probe results, it directly replaces the original one. In this way, the retained potential probes are developed and improved; we refer to the improved basis probes as the good basis probes. The set of good 5-city basis probes is therefore constructed as follows:

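where each good basis probe is obtained from a potential basis probe through the composite operation that applies the four local augmentation operators consecutively, and the fitness values of the developed and original basis probes are compared. Since a developed basis probe replaces the original one only when it is fitter, the fitness of every good basis probe is at least that of the potential basis probe from which it was developed.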
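The improvement rule (apply the operators consecutively and keep the result only if it is fitter) can be sketched as follows. The four local augmentation operators of Section 5 are not reproduced here; as a plainly named substitute, the sketch uses two simple stand-in operators, an interior segment reversal (a 2-opt-style move) and an interior city swap, both of which keep the two wings of the partial route fixed. All function names are hypothetical.

```python
def path_cost(route, dist):
    """Cost of an open route: sum of its consecutive (directed) edge lengths."""
    return sum(dist[a][b] for a, b in zip(route, route[1:]))

def reverse_interior(route, dist):
    """Stand-in operator 1: first-improvement reversal of an interior segment.

    The wings route[0] and route[-1] are never moved.
    """
    route = list(route)
    improved = True
    while improved:
        improved = False
        for i in range(1, len(route) - 2):
            for j in range(i + 1, len(route) - 1):
                cand = route[:i] + route[i:j + 1][::-1] + route[j + 1:]
                if path_cost(cand, dist) < path_cost(route, dist):
                    route, improved = cand, True
    return route

def swap_interior(route, dist):
    """Stand-in operator 2: first-improvement swap of two interior cities."""
    route = list(route)
    improved = True
    while improved:
        improved = False
        for i in range(1, len(route) - 2):
            for j in range(i + 1, len(route) - 1):
                cand = list(route)
                cand[i], cand[j] = cand[j], cand[i]
                if path_cost(cand, dist) < path_cost(route, dist):
                    route, improved = cand, True
    return route

def augment(route, dist, operators=(reverse_interior, swap_interior)):
    """Apply the operators one after another; keep the result only if it is fitter."""
    cand = list(route)
    for op in operators:
        cand = op(cand, dist)
    # Fitter means strictly shorter, since fitness is inversely proportional to cost.
    return cand if path_cost(cand, dist) < path_cost(route, dist) else list(route)

# Usage: four cities on a line at positions 0..3, dist[i][j] = |i - j|.
dist = [[abs(i - j) for j in range(4)] for i in range(4)]
print(augment((0, 2, 1, 3), dist))  # -> [0, 1, 2, 3]
```

Each stand-in operator only accepts strict improvements, so the final check in `augment` mirrors the rule that a developed probe replaces the original only when it is fitter.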

As in Step-2, the basis probe generation, filtering, and improvement operations are carried out in the remaining steps of the complete route development process. In general, after the filtering task of the k-th step is completed, we obtain the set of (2k+1)-city potential basis probes, constructed as follows:

In Equation (15), each element is a retained (2k+1)-city potential basis probe taken from the set of possible basis probes generated at the k-th step (expressed by Equation (17)); the filter is applied with the filtering proportion value of the k-th step, relative to the number of basis probes in that set. Each retained potential basis probe is further improved by the four local augmentation operators. Thus, the set of (2k+1)-city good basis probes obtained at the end of the k-th step is given by

In Equation (16), each good basis probe is the improved basis probe obtained by applying the four local search operators to a potential basis probe, and the fitness values of the improved and original basis probes are compared.

In Equation (17), each generated (2k+1)-city basis probe has two outer wings; it is formed from a (2k-1)-city good basis probe obtained in the (k-1)-th step by connecting a 3-city basis probe at each of its wings.

In this way, after the last step has been executed, the route development process produces a set of good basis probes, each consisting of n cities, i.e., a complete route for the TSP. Note that when n is even, one city remains unconnected in the last step, so the basis probe attaches the remaining city at either of its wings.
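Putting the steps together, the route development process of Stage 1 can be sketched as a compact Python driver. This is an illustrative reconstruction, not the authors' MATLAB implementation, and it makes several assumptions: a single proportion `lam` is used at every step instead of the step-wise thresholds of Equation (4); every unvisited city may be attached at a wing, which corresponds to allowing all 3-city basis probes in the connection operation; and `improve` is an optional, hypothetical hook for the local augmentation operators. The driver requires at least three cities.

```python
import itertools
import math

def path_cost(route, dist):
    """Length of an open route under (possibly asymmetric) distances."""
    return sum(dist[a][b] for a, b in zip(route, route[1:]))

def tour_cost(tour, dist):
    """Length of the closed tour, including the edge back to the first city."""
    return path_cost(tour, dist) + dist[tour[-1]][tour[0]]

def keep_top(probes, dist, lam):
    """Proportion filter: sort by cost (fittest first), keep the top ceil(lam * N)."""
    ranked = sorted(probes, key=lambda p: path_cost(p, dist))
    return ranked[:max(1, math.ceil(lam * len(ranked)))]

def develop_routes(dist, lam, improve=None):
    """Hypothetical Stage-1 driver: grow probes by two cities per step until complete."""
    n = len(dist)
    probes = keep_top(list(itertools.permutations(range(n), 3)), dist, lam)
    while len(probes[0]) + 2 <= n:
        grown = []
        for p in probes:
            free = [c for c in range(n) if c not in p]
            # Adding x at the left wing and y at the right wing corresponds to
            # connecting the 3-city probes (x, p[0], p[1]) and (p[-2], p[-1], y).
            grown += [(x,) + p + (y,) for x in free for y in free if x != y]
        probes = keep_top(grown, dist, lam)
        if improve is not None:
            probes = [tuple(improve(p, dist)) for p in probes]
    if len(probes[0]) == n - 1:
        # Even n: one city is left. Attaching it at either wing closes the
        # same cycle, so it is simply appended at the right wing here.
        probes = [p + tuple(c for c in range(n) if c not in p) for p in probes]
    return min(probes, key=lambda p: tour_cost(p, dist))

# Usage: five cities on a line; lam = 1.0 disables filtering (exhaustive search).
dist = [[abs(i - j) for j in range(5)] for i in range(5)]
best = develop_routes(dist, lam=1.0)
print(best, tour_cost(best, dist))  # the printed tour has length 8
```

The observation in the even-n branch follows from the ring structure: for an open route p0, ..., pm and a remaining city r, both r, p0, ..., pm and p0, ..., pm, r close into the cycle r → p0 → ... → pm → r, which is why either wing can be used.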

6.2. Stage 2—Self-Escape Mechanism

After the first stage, we obtain a set of good complete basis probes (routes) as the search result for the TSP. However, some of these complete basis probes may stagnate, i.e., be trapped in a locally optimal solution during the route development and search process. To alleviate this problem, we couple the route development and search process (the first stage) with a self-escape mechanism, which forms the second stage (Stage 2). The self-escape mechanism enhances the diversity of the complete basis probes and can further improve the search results. It was first introduced into a hybrid discrete PSO algorithm by Wang et al. [15] in 2007, and Wang et al. [16] later applied it to improve the performance of the DSOS algorithm. Here, we use it so that, whenever a complete basis probe is trapped in a local optimum, it is forced to escape from its current configuration and search for a better complete basis probe. Specifically, the self-escape mechanism performs two tasks: first, it identifies whether a complete basis probe is trapped in a local optimum; second, if so, it helps the probe escape and develop a new solution.

Consider the set of complete basis probes obtained from Stage 1, and compare each complete basis probe in it with the current best basis probe in the set. A complete basis probe is judged to be trapped in a local optimum if and only if the following inequality holds [15,16]:

where

where the quantities involved are the edge sets of the complete basis probe and of the current best basis probe, the set of their common edges, the number of these common edges, and the number of complete basis probes in the set. That is, if inequality (18) holds, the complete basis probe is considered to be trapped in a local optimum. In this situation, we apply the self-escape mechanism to it in the form of the following 3-opt operator, which transforms it into promising new basis probes.
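The common-edge quantities defined above can be computed as sketched below. The exact form of inequality (18) is not reproduced here, so the sketch stops at the number of edges a complete basis probe shares with the current best probe and the average of that count over all complete probes (an average that the "where" clause suggests, since it involves the number of complete basis probes). Treating edges as unordered pairs for the symmetric TSP and as ordered pairs for the asymmetric TSP is an assumption, as are the function names.

```python
def edge_set(tour, directed=False):
    """Edges of a closed tour; unordered pairs unless directed=True."""
    n = len(tour)
    pairs = ((tour[i], tour[(i + 1) % n]) for i in range(n))
    return {p if directed else frozenset(p) for p in pairs}

def common_edge_count(tour, best, directed=False):
    """Number of edges a complete probe shares with the current best probe."""
    return len(edge_set(tour, directed) & edge_set(best, directed))

def mean_common_edge_count(population, best, directed=False):
    """Average common-edge count over all complete probes in the population."""
    counts = [common_edge_count(t, best, directed) for t in population]
    return sum(counts) / len(counts)

# Usage: a 5-city best tour and a variant that differs in the last two cities.
best = [0, 1, 2, 3, 4]
tour = [0, 1, 2, 4, 3]
print(common_edge_count(tour, best))                 # -> 3
print(common_edge_count(tour, best, directed=True))  # -> 2
print(mean_common_edge_count([best, tour], best))    # -> 4.0
```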

We first remove 3 edges from the stagnant complete basis probe, which, viewed as a ring route, is thereby divided into three partial routes. We then reconnect these partial routes in different ways to generate new complete routes that may be better than the original one. For example, three edges of a stagnant complete basis probe are selected, and removing them yields three partial routes, indexed by q = 1, 2, 3, together with their reverses (q = 1, 2, 3). A new complete probe can then be generated by combining the partial routes and their reverses in the following seven different ways:

Cases 1 to 7: seven reconnections of the three partial routes and their reverses, each judged by its own inequality.

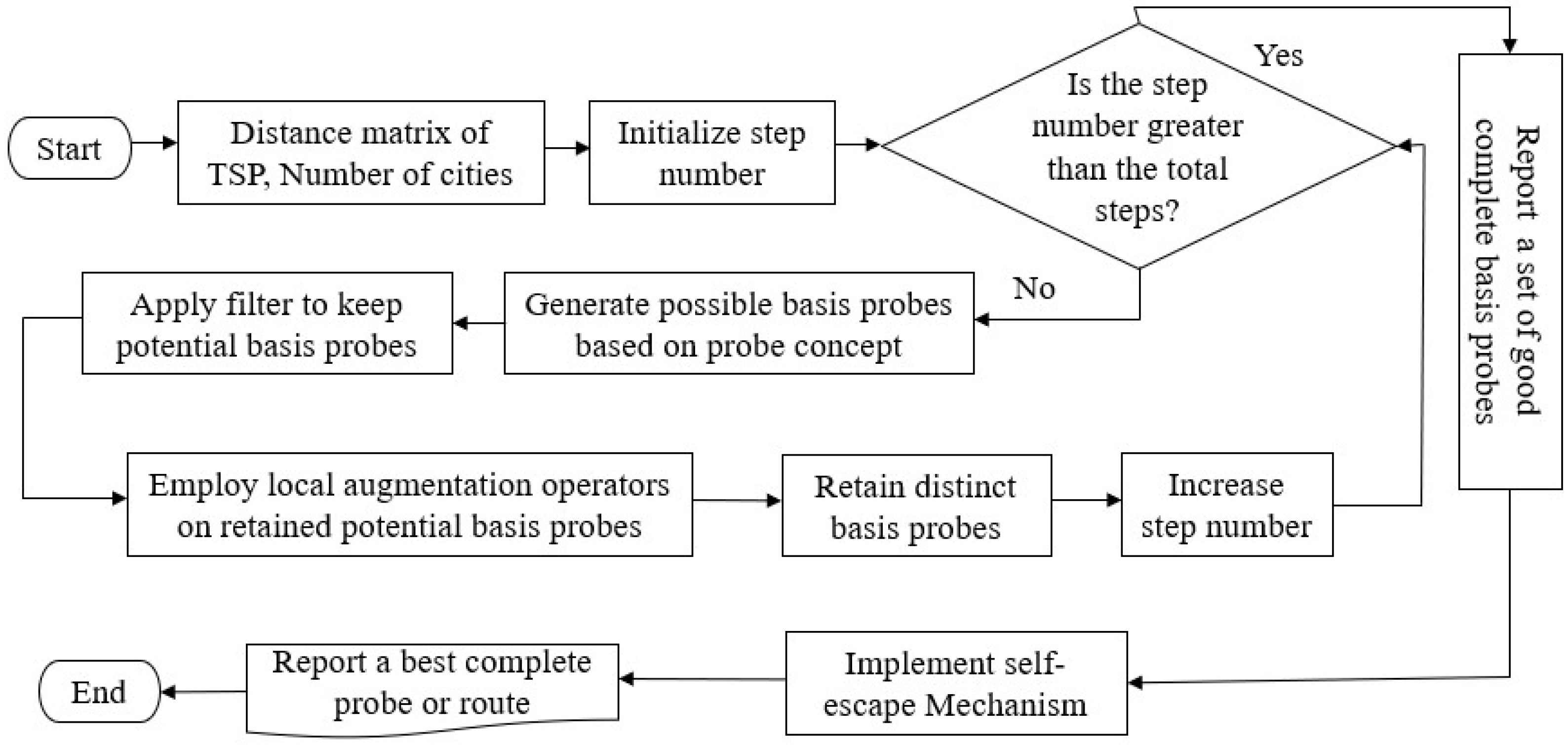

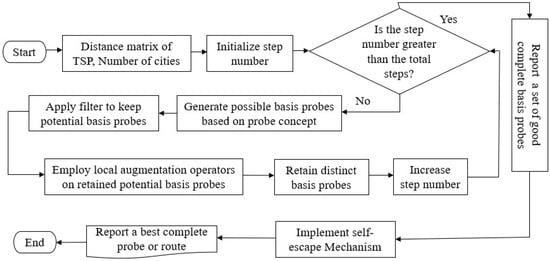
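The seven reconnections can be sketched in Python as follows. This is a hedged sketch, not the authors' implementation: the tour is cut into three non-empty segments at hypothetical cut positions `i` and `j`, the first segment is kept fixed, and the other two segments are placed in either order, each possibly reversed, which gives 2 × 2 × 2 − 1 = 7 new tours. The enumeration order in `three_opt_candidates` need not match the paper's case numbering, and the acceptance rule (keep a candidate only if its closed-tour length is strictly smaller) stands in for the case-specific inequalities. Tour length uses directed distances, so the same code covers the asymmetric case, where reversing a segment changes its internal cost.

```python
def tour_cost(tour, dist):
    """Length of a closed tour under (possibly asymmetric) distances."""
    n = len(tour)
    return sum(dist[tour[k]][tour[(k + 1) % n]] for k in range(n))

def three_opt_candidates(tour, i, j):
    """Reconnections after cutting the ring into S1 = tour[:i], S2 = tour[i:j], S3 = tour[j:].

    S1 stays fixed; S2 and S3 are placed in either order, each possibly
    reversed. When S2 and S3 both contain at least two cities, this yields
    exactly seven tours different from the original.
    """
    s1, s2, s3 = list(tour[:i]), list(tour[i:j]), list(tour[j:])
    candidates = []
    for first, second in ((s2, s3), (s3, s2)):
        for rev_first in (False, True):
            for rev_second in (False, True):
                a = first[::-1] if rev_first else first
                b = second[::-1] if rev_second else second
                cand = s1 + a + b
                if cand != list(tour):  # skip the unchanged tour
                    candidates.append(cand)
    return candidates

def self_escape_3opt(tour, dist):
    """Return the shortest strictly improving 3-opt reconnection, or the tour itself."""
    best, best_cost = list(tour), tour_cost(tour, dist)
    n = len(tour)
    for i in range(1, n - 1):
        for j in range(i + 1, n):
            for cand in three_opt_candidates(tour, i, j):
                cost = tour_cost(cand, dist)
                if cost < best_cost:
                    best, best_cost = cand, cost
    return best

# Usage: five cities on a line, starting from a tour that is not optimal.
dist = [[abs(i - j) for j in range(5)] for i in range(5)]
stuck = [0, 2, 1, 3, 4]
print(tour_cost(stuck, dist), tour_cost(self_escape_3opt(stuck, dist), dist))  # -> 10 8
```

To mimic the restriction to a subset of cases for the symmetric TSP, `three_opt_candidates` could be filtered to the chosen reconnections; which of the seven correspond to Cases 3, 6, and 7 is not determined by this sketch.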

We use Cases 3, 6, and 7 for the symmetric TSP and all seven cases for the asymmetric TSP to generate new complete basis probes. The flowchart and pseudocode of the proposed two-stage search optimization algorithm are shown in Figure 2 and Algorithm 2, respectively.

Algorithm 2: Pseudocode of the proposed two-stage probe-based search optimization algorithm

Input: distance matrix, problem size n, filter
Output: best complete probe, fitness of the best complete probe

Figure 2.

Flowchart of the proposed two-stage probe-based search optimization algorithm.

7. Experimental Results and Analysis

In this section, various experiments are carried out to evaluate the performance of our proposed algorithm on typical TSP benchmark datasets with different numbers of cities [17,18,19,20]. The experimental results are compared with the best-known TSP benchmark results reported by the data library, as well as with the results of state-of-the-art algorithms. Finally, a statistical analysis is conducted to assess the advantages of our proposed algorithm over the other state-of-the-art optimization algorithms.

7.1. Experimental Configurations and Evaluation Protocol

The experiments on the datasets with up to 561 cities are conducted on a desktop computer with an Intel Core i5-4590 processor (3.30 GHz), 8 GB RAM, and the 64-bit Windows 10 operating system. The other datasets require more computational resources, so they are run on a Linux system with a 2-core GPU. The proposed algorithm is implemented in MATLAB R2019a for all the simulations. Two performance indicators, error (in %) and computational time (in seconds), are used to evaluate the proposed algorithm. The deviation and the percentage deviation (i.e., error) of the obtained result from the best-known result are computed as follows:

$$\text{Difference} = L_{\text{obt}} - L_{\text{BKS}}, \qquad \text{Error}\,(\%) = \frac{L_{\text{obt}} - L_{\text{BKS}}}{L_{\text{BKS}}} \times 100,$$

where $L_{\text{obt}}$ and $L_{\text{BKS}}$ denote the obtained solution and the best-known solution on a particular TSP dataset, respectively. For the execution time, we run the algorithm 10 times independently on each TSP dataset and compute the average and standard deviation (SD) of the run times.
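The two evaluation formulas and the timing summary can be sketched in a few lines of Python. Using the sample standard deviation (`statistics.stdev`) for the run times is an assumption, since the text does not say which estimator was used; the numbers in the usage lines are made up for illustration and are not results from Table 2.

```python
import statistics

def difference(obtained, bks):
    """Deviation of the obtained route length from the best-known solution (BKS)."""
    return obtained - bks

def percentage_error(obtained, bks):
    """Error (%) relative to the BKS; negative when the BKS is improved upon."""
    return 100.0 * (obtained - bks) / bks

def time_summary(run_times):
    """Mean and sample standard deviation of the run times (seconds)."""
    return statistics.mean(run_times), statistics.stdev(run_times)

# Usage with made-up numbers:
print(percentage_error(1010, 1000))   # -> 1.0
print(percentage_error(990, 1000))    # -> -1.0
print(time_summary([1.0, 2.0, 3.0]))  # -> (2.0, 1.0)
```

Because the algorithm is deterministic, the 10 runs only vary in execution time, not in the obtained route length, so a single error value per dataset suffices.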

7.2. Performance Evaluation and Analysis

In this subsection, we evaluate our proposed algorithm on 83 symmetric and 18 asymmetric benchmark TSP test problems. The experimental results are summarized in Table 2, where the “BKS” column gives the best-known solution reported by the data library and the “Our Result” column gives the solution obtained by our proposed algorithm. The “Difference” and “Error” columns give the deviation and the percentage deviation of the obtained result from the best-known result, respectively. The “Computational time (s)” column gives the average execution time in seconds, together with its SD over 10 runs. Dataset names are in boldface when our proposed algorithm obtains the best-known solution or a new solution.

Table 2.

Computational results of our proposed algorithm for 83 symmetric and 18 asymmetric benchmark TSP datasets.

Table 2 shows that our proposed algorithm yields solutions very close to the best-known solutions on the considered symmetric and asymmetric TSP test problems, with very small errors. It finds exactly the best-known solution for every symmetric TSP dataset with up to 180 cities (except ch150), for some other symmetric TSP datasets such as tsp225, ts225, pr264, si535, and si1032, and for almost all the asymmetric TSP datasets (except ftv64, ft70, rbg323, and rbg358). In some cases (listed separately in Table 3), it exhibits strong global exploration capability and produces solutions better than the best-known ones, which appear as negative percentage errors in the table. Overall, our proposed algorithm obtains exactly the best-known solution in 65.06% of the symmetric datasets (54 out of 83) and in 77.78% of the asymmetric datasets (14 out of 18). For the remaining TSP test problems, the loss of accuracy is small in both the symmetric and asymmetric cases. In addition, the error falls within a narrow interval in 26.73% (27 out of 101) of the cases and is comparatively large in only 4.95% (5 out of 101) of the cases. Furthermore, the average error over all 101 datasets is low, with a small standard deviation, which demonstrates the strong performance of our proposed algorithm.

Table 3.

Best solutions found thus far by our proposed algorithm compared with the best-known solutions from the data library.

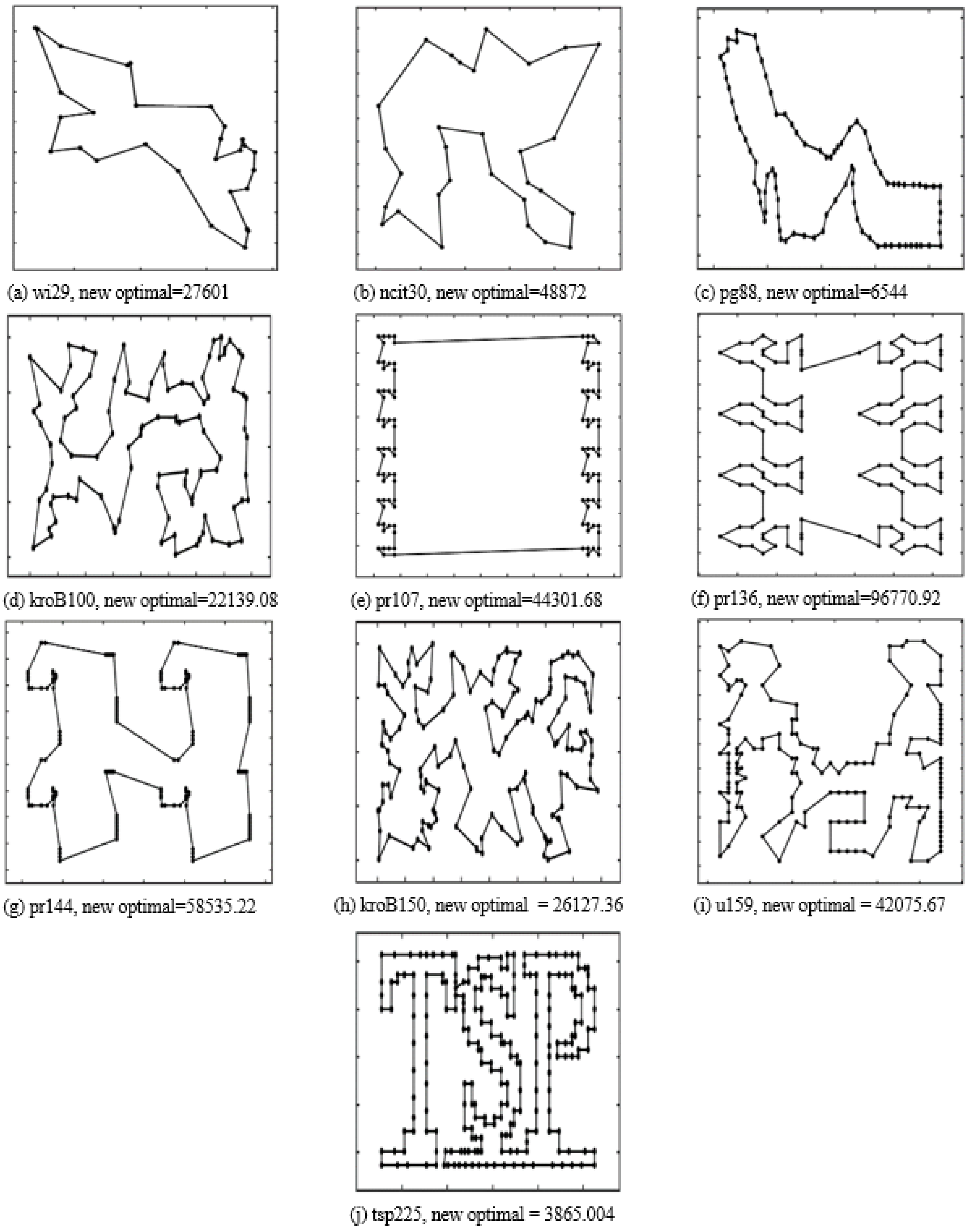

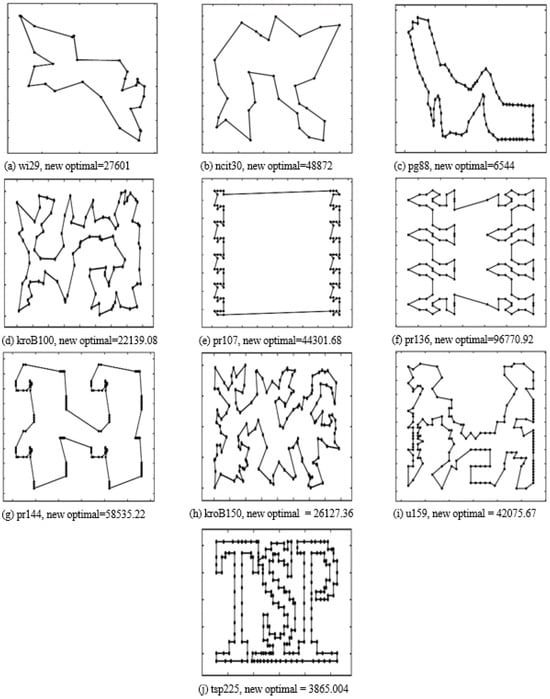

In terms of execution time, our proposed algorithm requires little time to solve small-scale TSPs. As the problem size grows beyond the small scale, the computational time does not necessarily increase with the number of cities; for example, solving gr96 takes longer than solving brg180 (see Table 2). For large-scale datasets, the computational time increases rapidly with the problem size. Although our proposed algorithm takes longer in the large-scale case, the solution quality remains satisfactory (the maximum error is given in Table 2), and on average its computational time is acceptable. Therefore, our proposed algorithm can be considered a reliable search optimization method that provides good-quality solutions for general TSPs within an acceptable time frame. Most importantly, it is deterministic; i.e., running it on the same TSP dataset always yields the same result. The new best routes and their route lengths found by our proposed algorithm are displayed in Figure 3.

Figure 3.

The new best route with route length found by our proposed algorithm for the datasets (a) wi29, (b) ncit30, (c) pg88, (d) kroB100, (e) pr107, (f) pr136, (g) pr144, (h) kroB150, (i) u159, and (j) tsp225.

7.3. Performance Comparison

In this subsection, we further compare our proposed algorithm with the state-of-the-art optimization algorithms listed in Table 4. Our proposed algorithm is first compared with the state-of-the-art algorithms that use a self-escape mechanism and then with the other state-of-the-art algorithms (without a self-escape mechanism). The results of our proposed approach are compared with those reported for the comparative algorithms in the literature, as displayed in Table 5, Table 6, Table 7 and Table 8. For each compared algorithm, the average solution route length over all the TSP datasets and the number of BKSs found are given at the bottom of the table. The number of BKSs found is the number of datasets on which the algorithm exactly finds the best-known solution.

Table 4.

List of state-of-the-art optimization algorithms of TSPs considered for comparison.

Table 5.

Performance comparison of our proposed algorithm with the state-of-the-art optimization algorithms containing a self-escape mechanism.

Table 6.

Performance comparison of our proposed algorithm with the other state-of-the-art optimization algorithms (ASA-GS, GSA-ACO-PSO, HGA+2local, IVNS, HSIHM+2local, ABCSS, DSMO, MMA, and DSCA+LS).

Table 7.

Performance comparison of our proposed algorithm with the other state-of-the-art optimization algorithms (IBA, DWCA, DSOS, MCF-ABC, GA-PSO-ACO, DIWO, PRGA, MPSO, SCGA, and VDWOA).

Table 8.

Performance comparison of our proposed algorithm with the other state-of-the-art optimization algorithms (DSSA and DA-GVNS).

7.3.1. Comparison with Self-Escape Mechanism-Based Algorithms

We begin by comparing our proposed algorithm with the state-of-the-art optimization algorithms that use a self-escape mechanism, a strategy previously adopted to solve symmetric TSPs [15,16]. In the self-escape hybrid DPSO (SEHDPSO) algorithm [15], the five nearest neighbors of each node were considered in order to escape the local optimum of the current best route. In the discrete symbiotic organism search algorithm with excellence coefficients and self-escape (ECSDSOS) [16], a swap-based randomized local search operator was used to find a satisfactory solution. However, these algorithms tend to produce longer routes, i.e., relatively worse solutions. To find better-quality solutions, we instead employ an efficient 3-opt operator in the self-escape stage of our proposed algorithm.

Table 5 displays the comparison results of our proposed algorithm with the self-escape mechanism-based algorithms SEHDPSO and ECSDSOS. Compared with SEHDPSO, Table 5 shows that our proposed algorithm finds better solutions than both the average and the best solutions of SEHDPSO on almost all the TSP test datasets. The average error of our proposed algorithm over 20 datasets is significantly lower than both the average-solution error and the best-solution error of SEHDPSO. Our proposed algorithm also finds the best-known solutions on more datasets (fourteen) than SEHDPSO (one and eight for its average and best solutions, respectively). Compared with ECSDSOS over 24 datasets, our proposed algorithm finds the best-known solution in 11 cases, whereas ECSDSOS finds it in 0 cases (average solutions) and 9 cases (best solutions); the corresponding average errors are listed in Table 5. In addition, our proposed algorithm yields new solutions on certain datasets (negative entries in the table), whereas neither of the other two algorithms finds such a solution. Table 5 further shows that all three algorithms find the best-known solution for certain small-scale datasets; however, as the scale grows, our proposed algorithm performs better than both SEHDPSO and ECSDSOS.

7.3.2. Comparison with the Other State-of-the-Art Optimization Algorithms

We further compare our proposed algorithm with other typical state-of-the-art optimization algorithms that do not use a self-escape mechanism. Because these algorithms were tested on different sets of datasets, we use a different set of datasets for each comparison. Table 6, Table 7 and Table 8 give side-by-side comparisons between our proposed algorithm and 21 state-of-the-art TSP optimization algorithms.

The performance comparison of our proposed algorithm with ASA-GS [21], GSA-ACO-PSO [22], HGA+2local [24], IVNS [29], HSIHM+2local [31], ABCSS [30], DSMO [35], MMA [2], and DSCA+LS [34] is presented in Table 6. According to Table 6, compared with ASA-GS, GSA-ACO-PSO, HGA+2local, IVNS, DSMO, and DSCA+LS on the symmetric TSP datasets, our proposed algorithm achieves better results than the average results of all six algorithms on almost all the datasets, the exceptions being one case for ASA-GS, four cases for GSA-ACO-PSO, and one case for IVNS. Compared with the best results of these algorithms, our proposed algorithm is inferior in only two of forty-five cases for ASA-GS, six of twenty-five for GSA-ACO-PSO, none of twenty-one for HGA+2local, one of fifty-seven for IVNS, none of forty for DSMO, and one of twenty-seven for DSCA+LS. On the remaining test datasets, our proposed algorithm achieves scores better than or equal to those of these six algorithms. In addition, our proposed algorithm finds the best-known solution in nineteen of forty-five cases, compared with five for ASA-GS; in eleven of twenty-five, compared with thirteen for GSA-ACO-PSO; in sixteen of twenty-one, compared with four for HGA+2local; in twenty-seven of fifty-seven, compared with twelve for IVNS; in twenty-one of forty, compared with five for DSMO; and in eighteen of twenty-seven, compared with seventeen for DSCA+LS. For some small-scale datasets, our proposed algorithm yields scores similar to those of the comparative algorithms; however, as the scale grows, it finds better results than all six.

As Table 6 shows, on most of the considered datasets, except the three small-scale datasets ftv64, eil51, and berlin52, our proposed algorithm performs better than HSIHM+2local and ABCSS on symmetric and asymmetric TSPs. On ftv64, its solution is not better than the best solution of HSIHM+2local, but it is better than the average one. Our proposed algorithm finds the best-known solution in 14 and 11 datasets in the comparisons with HSIHM+2local and ABCSS, respectively, whereas HSIHM+2local and ABCSS provide such a solution in eight and eleven datasets, respectively. For some small-scale datasets, all three algorithms find the best-known solutions. As the scale increases, our proposed algorithm shows greater global exploration capability than both HSIHM+2local and ABCSS. Table 6 also shows that our proposed algorithm outperforms the deterministic algorithm MMA on nearly all the considered symmetric TSP datasets. MMA performs better on dj38 and eil76 and achieves the best-known solution in one case, while our proposed algorithm is better on 22 datasets and finds the best-known solutions in 12 cases. In addition, MMA is suitable only for small TSP datasets, whereas our proposed algorithm can solve both small- and relatively large-scale datasets. Therefore, the performance of our proposed algorithm is better than that of MMA in 87.5% (21 out of 24) of the cases.

Table 7 and Table 8 present the comparison of our proposed algorithm with IBA [26], DWCA [28], DSOS [27], MCF-ABC [32], GA-PSO-ACO [23], DIWO [25], PRGA [33], MPSO [3], SCGA [37], VDWOA [36], DSSA [8], and DA-GVNS [38]. According to Table 7 and Table 8, compared with DSOS, GA-PSO-ACO, DIWO, MPSO, SCGA, and DSSA on the symmetric TSP datasets, our proposed algorithm provides better results than the average results of all six algorithms on almost all the datasets, the exceptions being two cases for DSOS, one case for GA-PSO-ACO, one case for DIWO, and four cases for DSSA. Compared with the best results of these algorithms, our algorithm is inferior in five of twenty-eight cases for DSOS, four of thirty for GA-PSO-ACO, three of nineteen for DIWO, three of thirty-five for MPSO, none of twelve for SCGA, and five of thirty-two for DSSA. On the remaining test datasets, our proposed algorithm achieves results better than or equal to those of these six algorithms. In addition, our proposed algorithm obtains the best-known solution in fourteen of twenty-eight cases, compared with eleven for DSOS; in eleven of thirty, compared with one for GA-PSO-ACO; in eight of nineteen, compared with zero for DIWO; in twenty-three of thirty-five, compared with twenty-five for MPSO; in six of twelve, compared with one for SCGA; and in nineteen of thirty-two, compared with twenty-two for DSSA. In short, the solutions obtained by our algorithm are more accurate overall than those obtained by the above six comparative algorithms.

According to Table 7 and Table 8, compared with IBA, DWCA, and DA-GVNS on symmetric and asymmetric test problems, our proposed algorithm produces solutions better than or equal to both the average and the best solutions of these three algorithms on every considered dataset except the two smaller datasets eil51 and berlin52. Moreover, it obtains the best-known solution in 23 of 29 cases, compared with 18 for IBA; in 23 of 27, compared with 14 for DWCA; and in 41 of 68, compared with 20 for DA-GVNS. Our proposed algorithm thus outperforms these three algorithms on almost all the datasets; on the large-scale datasets in particular, it obtains more accurate results. As Table 7 shows, compared with the best results of MCF-ABC, VDWOA, and PRGA on symmetric test problems, our proposed algorithm obtains worse solutions than VDWOA and PRGA on oliver30, berlin52, st70, rat99, and kroA200, and similar or better results than the best of these algorithms on the remaining test datasets. Our proposed algorithm obtains the best-known solution in twenty-eight cases, compared with twenty-seven of twenty-eight for MCF-ABC; in four cases, compared with two of twelve for VDWOA; and in five cases, compared with one of nine for PRGA. MCF-ABC clearly has a strong capability to escape local optima; nevertheless, it does not produce any solution better than the best-known one, whereas our algorithm does so in seven cases.

From the above discussion and analysis, we conclude that our proposed algorithm achieves better average and best solutions than all 21 algorithms on almost all the TSP test datasets. Overall, the average route length of our proposed algorithm over the test datasets is smaller than that of each comparative algorithm. Furthermore, it finds the best-known solutions in more datasets than most of the comparative algorithms. Therefore, our proposed algorithm is superior to these benchmark optimization algorithms in terms of solution quality. Meta-heuristic and hybrid optimization algorithms are generally believed to provide good-quality solutions for TSPs; our proposed algorithm performs better than such algorithms, including recently improved ones. Moreover, most benchmark algorithms (except IBA, DWCA, ABCSS, and DA-GVNS) rely on many fine-tuned parameters and address either the symmetric or the asymmetric TSP, while our proposed algorithm handles both cases by tuning only one parameter. Finally, our proposed algorithm involves no randomness, so running it multiple times on a dataset always produces the same result. It is thus deterministic, whereas most existing optimization algorithms are probabilistic and their solutions may vary from run to run, which makes our algorithm convenient for practical applications.

7.4. Statistical Analysis

To determine whether the performance differences between our proposed algorithm and the comparative optimization algorithms are significant, we finally carry out a statistical analysis in this subsection. Specifically, the popular Wilcoxon signed-rank test [32,39] is applied at the chosen confidence level to examine the performance of the proposed algorithm against each of the other optimization algorithms. In this non-parametric test, our proposed algorithm is compared with one comparative optimization algorithm at a time. Let $d_i$ be the difference between the errors of the comparative algorithm and of our proposed algorithm on the $i$-th dataset, so that $d_i > 0$ means that our algorithm performs better. The test statistic is calculated as follows:

$$R^{+} = \sum_{i=1,\; d_i > 0}^{N} \operatorname{rank}(d_i), \qquad R^{-} = \sum_{i=1,\; d_i < 0}^{N} \operatorname{rank}(d_i), \qquad T = \min\left(R^{+}, R^{-}\right),$$

where N is the number of datasets tested by both algorithms. If the two algorithms perform equally on a dataset, this dataset is ignored and N is reduced accordingly. $R^{+}$ denotes the sum of ranks of the datasets on which our proposed algorithm performs better than the comparative algorithm, while $R^{-}$ denotes the sum of ranks of the datasets on which the comparative algorithm outperforms ours. The ranks are assigned in ascending order of the absolute error difference $|d_i|$ between the two algorithms (rank 1 to the dataset with the smallest absolute difference, rank 2 to the next, and so on), and T is the minimum of $R^{+}$ and $R^{-}$.

The critical values $T_{\text{crit}}(N)$ for N effective datasets at different confidence levels can be found in [39]. If $T > T_{\text{crit}}(N)$, we fail to reject the null hypothesis $H_0$ that there is no significant difference between the performance of the two algorithms. Otherwise, if $T \le T_{\text{crit}}(N)$, the null hypothesis is rejected, and the test concludes that the performance of the two algorithms differs significantly. Since most of the comparative algorithms (except MMA) are probabilistic, we perform the test separately with the percentage deviation of the average results (PDavg.(%)) and the percentage deviation of the best results (PDbest.(%)). The test results are summarized in Table 9, where ‘*’ indicates that the test result is undetermined (a critical value at the chosen confidence level requires N ≥ 6) and ‘-’ means that the original reference did not provide any results.
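The test statistic and the decision rule can be sketched in Python as follows. Assumptions: lower error is better, `ours` and `theirs` hold per-dataset errors for the same datasets in the same order, and tied absolute differences receive average ranks (a common convention that the text does not specify). Critical values must be looked up in [39]; none are hard-coded here, and the function names are hypothetical.

```python
def wilcoxon_statistic(ours, theirs):
    """Wilcoxon signed-rank statistic on paired per-dataset errors.

    Returns (T, R_plus, R_minus, N). d_i = theirs_i - ours_i, so d_i > 0 means
    our algorithm has the lower (better) error on dataset i. Datasets with
    d_i == 0 are dropped, which reduces N.
    """
    d = [t - o for o, t in zip(ours, theirs) if t != o]
    order = sorted(range(len(d)), key=lambda i: abs(d[i]))
    ranks = [0.0] * len(d)
    start = 0
    while start < len(order):
        end = start
        # Group equal |d_i| values; they share the average of their ranks.
        while end + 1 < len(order) and abs(d[order[end + 1]]) == abs(d[order[start]]):
            end += 1
        shared = (start + end) / 2 + 1  # mean of the 1-based ranks start+1 .. end+1
        for q in range(start, end + 1):
            ranks[order[q]] = shared
        start = end + 1
    r_plus = sum(r for r, x in zip(ranks, d) if x > 0)
    r_minus = sum(r for r, x in zip(ranks, d) if x < 0)
    return min(r_plus, r_minus), r_plus, r_minus, len(d)

def rejects_h0(T, T_crit):
    """Reject H0 (no performance difference) when T <= T_crit for the effective N."""
    return T <= T_crit

# Usage with made-up errors (in %) on five datasets; the first one is a tie.
ours = [0, 1, 4, 0, 2]
theirs = [0, 3, 3, 2, 7]
print(wilcoxon_statistic(ours, theirs))  # -> (1.0, 9.0, 1.0, 4)
```

In this made-up example only N = 4 datasets remain after dropping the tie, which is below the N ≥ 6 required for a critical value, so it would correspond to a ‘*’ entry in Table 9.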

Table 9.

Statistical test results based on the performance of our proposed algorithm against each state-of-the-art optimization algorithm.

According to Table 9, for the test with PDavg.(%), the statistic satisfies $T \le T_{\text{crit}}(N)$ in all cases except ABCSS; that is, the test indicates that our proposed algorithm is significantly better than all the comparative algorithms except ABCSS. Against ABCSS, the difference is not significant, but our proposed algorithm remains comparable according to the rank sums in Table 9. Overall, these results support the advantage of our proposed algorithm over the state-of-the-art comparative algorithms; in particular, it significantly dominates eight recent optimization algorithms (see Table 9). For the test with PDbest(%), the results indicate that our proposed algorithm performs significantly better than all the comparative algorithms except SEHDPSO and GSA-ACO-PSO. Its advantage over SEHDPSO and GSA-ACO-PSO is not statistically significant; however, it is still comparable with them according to the rank sums in Table 9.

8. Conclusions

We have developed a reliable two-stage optimization algorithm that deterministically solves both symmetric and asymmetric TSPs. It uses the probe concept to design local augmentation operators that dynamically generate and develop TSP routes step by step, and it uses a proportion value to automatically filter out the worst routes at each step. Furthermore, a self-escape mechanism is applied to each stagnant complete route for further variation and possible improvement. Experiments on various benchmark TSP datasets demonstrate that our proposed algorithm outperforms the state-of-the-art optimization algorithms in terms of solution accuracy. In addition, it finds the best-known solution on a significant number of datasets and even finds new best solutions in certain cases (as shown in Table 3). Because it involves no randomness, it is a deterministic algorithm, which can be an advantage over existing algorithms in certain respects. Moreover, it handles both symmetric and asymmetric TSPs by tuning only one parameter, while most existing optimization algorithms require many fine-tuned parameters and address either symmetric or asymmetric problems.

The main drawback of our proposed optimization algorithm is the computational time required for large-scale datasets. As the number of cities grows, a general-purpose computer eventually runs out of memory. It would therefore be beneficial to extend this work by reducing the computational time required for large-scale datasets. In the future, we plan to reduce the computational complexity by integrating our algorithm with the Delaunay Triangulation (DT) geometric concept: instead of all the edges, DT provides a reduced set of candidate edges that are more likely to appear in an optimal TSP solution, even though the triangulation itself does not contain a TSP route [40,41]. We also plan to apply this framework to other NP-complete problems, such as the vehicle routing problem, the job-shop scheduling problem, and the flow-shop scheduling problem.

Author Contributions

Conceptualization, M.A.R. and J.M.; Methodology, M.A.R. and J.M.; Software, M.A.R.; Validation, M.A.R.; Formal analysis, M.A.R.; Investigation, M.A.R.; Resources, J.M.; Data curation, M.A.R.; Writing—original draft, M.A.R.; Writing—review & editing, J.M.; Visualization, M.A.R.; Supervision, J.M.; Project administration, J.M.; Funding acquisition, J.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Key Research and Development Program of China under grant 2019YFA0706401.

Data Availability Statement

Publicly available datasets were analyzed in this study. These data can be found in [17,18,19,20].

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Papadimitriou, C.H. The Euclidean travelling salesman problem is NP-complete. Theor. Comput. Sci. 1977, 4, 237–244.

- Naser, H.; Awad, W.S.; El-Alfy, E.S.M. A multi-matching approximation algorithm for Symmetric Traveling Salesman Problem. J. Intell. Fuzzy Syst. 2019, 36, 2285–2295.

- Yousefikhoshbakht, M. Solving the Traveling Salesman Problem: A Modified Metaheuristic Algorithm. Complexity 2021, 2021, 6668345.

- Applegate, D.L.; Bixby, R.E.; Chvátal, V.; Cook, W.; Espinoza, D.G.; Goycoolea, M.; Helsgaun, K. Certification of an optimal TSP tour through 85,900 cities. Oper. Res. Lett. 2009, 37, 11–15.

- Xu, J. Probe machine. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 1405–1416.

- Rahman, M.A.; Ma, J. Probe Machine Based Consecutive Route Filtering Approach to Symmetric Travelling Salesman Problem. In Proceedings of the Third International Conference on Intelligence Science (ICIS), Beijing, China, 2–5 November 2018; Springer: Cham, Switzerland, 2018; Volume 539, pp. 378–387.

- Rahman, M.A.; Ma, J. Solving Symmetric and Asymmetric Traveling Salesman Problems Through Probe Machine with Local Search. In Proceedings of the Fifteenth International Conference on Intelligent Computing (ICIC), Nanchang, China, 3–6 August 2019; Springer: Cham, Switzerland, 2019; Volume 11643, pp. 1–13. [Google Scholar]

- Zhang, Z.; Han, Y. Discrete sparrow search algorithm for symmetric traveling salesman problem. Appl. Soft Comput. 2022, 118, 108469. [Google Scholar] [CrossRef]

- Menger, K. Das botenproblem. Ergeb. Eines Math. Kolloquiums 1930, 2, 11–12. [Google Scholar]

- Banaszak, D.; Dale, G.; Watkins, A.; Jordan, J. An optical technique for detecting fatigue cracks in aerospace structures. In Proceedings of the ICIASF 99. 18th International Congress on Instrumentation in Aerospace Simulation Facilities. Record (Cat. No. 99CH37025), Toulouse, France, 14–17 June 1999; pp. 1–7. [Google Scholar]

- Matai, R.; Singh, S.P.; Mittal, M.L. Traveling salesman problem: An overview of applications, formulations, and solution approaches. Travel. Salesm. Probl. Theory Appl. 2010, 1, 1–24. [Google Scholar]

- Puchinger, J.; Raidl, G.R. Combining metaheuristics and exact algorithms in combinatorial optimization: A survey and classification. In International Work-Conference on the Interplay Between Natural and Artificial Computation; Springer: Berlin/Heidelberg, Germany, 2005; pp. 41–53. [Google Scholar]

- Khanra, A.; Maiti, M.K.; Maiti, M. Profit maximization of TSP through a hybrid algorithm. Comput. Ind. Eng. 2015, 88, 229–236. [Google Scholar] [CrossRef]

- Ultrasonic Probe. Available online: https://www.ndk.com/en/products/search/ultrasonic/ (accessed on 25 February 2024).

- Wang, W.F.; Liu, G.Y.; Wen, W.H. Study of a self-escape hybrid discrete particle swarm optimization for TSP. Comput. Sci. 2007, 34, 143–145. [Google Scholar]

- Wang, Y.; Wu, Y.; Xu, N. Discrete symbiotic organism search with excellence coefficients and self-escape for traveling salesman problem. Comput. Ind. Eng. 2019, 131, 269–281. [Google Scholar] [CrossRef]

- Kocer, H.E.; Akca, M.R. An improved artificial bee colony algorithm with local search for traveling salesman problem. Cybern. Syst. 2014, 45, 635–649. [Google Scholar] [CrossRef]

- Rajabi Bahaabadi, M.; Shariat Mohaymany, A.; Babaei, M. An efficient crossover operator for traveling salesman problem. Iran Univ. Sci. Technol. 2012, 2, 607–619. [Google Scholar]

- TSPLIB. Available online: http://comopt.ifi.uni-heidelberg.de/software/TSPLIB95/ (accessed on 25 February 2024).

- TSP Test Data. Available online: http://www.math.uwaterloo.ca/tsp/world/countries.html (accessed on 25 February 2024).

- Geng, X.; Chen, Z.; Yang, W.; Shi, D.; Zhao, K. Solving the traveling salesman problem based on an adaptive simulated annealing algorithm with greedy search. Appl. Soft Comput. 2011, 11, 3680–3689. [Google Scholar] [CrossRef]

- Chen, S.M.; Chien, C.Y. Solving the traveling salesman problem based on the genetic simulated annealing ant colony system with particle swarm optimization techniques. Expert Syst. Appl. 2011, 38, 14439–14450. [Google Scholar] [CrossRef]

- Deng, W.; Chen, R.; He, B.; Liu, Y.; Yin, L.; Guo, J. A novel two-stage hybrid swarm intelligence optimization algorithm and application. Soft Comput. 2012, 16, 1707–1722. [Google Scholar] [CrossRef]

- Wang, Y. The hybrid genetic algorithm with two local optimization strategies for traveling salesman problem. Comput. Ind. Eng. 2014, 70, 124–133. [Google Scholar] [CrossRef]

- Zhou, Y.; Luo, Q.; Chen, H.; He, A.; Wu, J. A discrete invasive weed optimization algorithm for solving traveling salesman problem. Neurocomputing 2015, 151, 1227–1236. [Google Scholar] [CrossRef]

- Osaba, E.; Yang, X.S.; Diaz, F.; Lopez-Garcia, P.; Carballedo, R. An improved discrete bat algorithm for symmetric and asymmetric traveling salesman problems. Eng. Appl. Artif. Intell. 2016, 48, 59–71. [Google Scholar] [CrossRef]

- Ezugwu, A.E.S.; Adewumi, A.O. Discrete symbiotic organisms search algorithm for travelling salesman problem. Expert Syst. Appl. 2017, 87, 70–78. [Google Scholar] [CrossRef]

- Osaba, E.; Del Ser, J.; Sadollah, A.; Bilbao, M.N.; Camacho, D. A discrete water cycle algorithm for solving the symmetric and asymmetric traveling salesman problem. Appl. Soft Comput. 2018, 71, 277–290. [Google Scholar] [CrossRef]

- Hore, S.; Chatterjee, A.; Dewanji, A. Improving variable neighborhood search to solve the traveling salesman problem. Appl. Soft Comput. 2018, 68, 83–91. [Google Scholar] [CrossRef]

- Khan, I.; Maiti, M.K. A swap sequence based Artificial Bee Colony algorithm for Traveling Salesman Problem. Swarm Evol. Comput. 2019, 44, 428–438. [Google Scholar] [CrossRef]

- Boryczka, U.; Szwarc, K. The Harmony Search algorithm with additional improvement of harmony memory for Asymmetric Traveling Salesman Problem. Expert Syst. Appl. 2019, 122, 43–53. [Google Scholar] [CrossRef]

- Choong, S.S.; Wong, L.P.; Lim, C.P. An artificial bee colony algorithm with a modified choice function for the Traveling Salesman Problem. Swarm Evol. Comput. 2019, 44, 622–635. [Google Scholar] [CrossRef]

- Kaabi, J.; Harrath, Y. Permutation rules and genetic algorithm to solve the traveling salesman problem. Arab J. Basic Appl. Sci. 2019, 26, 283–291. [Google Scholar] [CrossRef]

- Tawhid, M.A.; Savsani, P. Discrete sine-cosine algorithm DSCA with local search for solving traveling salesman problem. Arab. J. Sci. Eng. 2019, 44, 3669–3679. [Google Scholar] [CrossRef]

- Akhand, M.; Ayon, S.I.; Shahriyar, S.; Siddique, N.; Adeli, H. Discrete Spider Monkey Optimization for Travelling Salesman Problem. Appl. Soft Comput. 2020, 86, 105887. [Google Scholar] [CrossRef]

- Zhang, J.; Hong, L.; Liu, Q. An Improved Whale Optimization Algorithm for the Traveling Salesman Problem. Symmetry 2021, 13, 48. [Google Scholar] [CrossRef]

- Deng, Y.; Xiong, J.; Wang, Q. A Hybrid Cellular Genetic Algorithm for the Traveling Salesman Problem. Math. Probl. Eng. 2021, 2021, 6697598. [Google Scholar] [CrossRef]

- Karakostas, P.; Sifaleras, A. A double-adaptive general variable neighborhood search algorithm for the solution of the traveling salesman problem. Appl. Soft Comput. 2022, 121, 108746. [Google Scholar] [CrossRef]

- Wilcoxon, F.; Katti, S.; Wilcox, R.A. Critical values and probability levels for the Wilcoxon rank sum test and the Wilcoxon signed rank test. Sel. Tables Math. Stat. 1970, 1, 171–259. [Google Scholar]

- Lau, S.K.; Shue, L.Y. Solving travelling salesman problems with an intelligent search approach. Asia Pac. J. Oper. Res. 2001, 18, 77–88. [Google Scholar]

- Lau, S.K. Solving Travelling Salesman Problems with Heuristic Learning Approach. Ph.D. Thesis, Department of Information System, University of Wollongong, Wollongong, Australia, 2002. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).