Abstract

This study addresses the disruptive impact of incidents on road networks, which often lead to traffic congestion. If not promptly managed, congestion can propagate and intensify over time, significantly delaying the recovery of free-flow conditions. We propose an enhanced model based on an exponential decay of the time required for free flow recovery between incident occurrences. Our approach integrates a shot noise process, assuming that incidents follow a non-homogeneous Poisson process. The increases in recovery time following incidents are modeled using exponential and gamma distributions. We derive key performance metrics, providing insights into congestion risk and the unlocking phenomenon, including the probability of the first passage time for our process to exceed a predefined congestion threshold. This probability is analyzed using two methods: (1) an exact simulation approach and (2) an analytical approximation technique. Utilizing the analytical approximation, we estimate critical extreme quantities, such as the minimum incident clearance rate, the minimum intensity of recovery time increases, and the maximum intensity of incident occurrences required to avoid exceeding a specified congestion threshold with a given probability. These findings offer valuable tools for managing and mitigating congestion risks in road networks.

Keywords: shot noise process; performance characteristics; congestion risk; incident occurrences; incident clearance

MSC: 60G99; 60J25; 37N99

1. Introduction

The traffic flow of road networks is subject to a delicate balance between travel demand and network capacity. However, this equilibrium can be disturbed by various incidents such as accidents, road work, breakdowns, etc. These incidents lead to traffic congestion, which degrades the capacity of networks and amplifies disturbances. Disruptions can even appear when incidents are not treated and solved in time. In this work, we focus on modeling the occurrence and duration of such incidents, specifically the time needed for free flow recovery. Our research is based on an existing stochastic risk model for incident occurrences and duration in road networks [], which assumes a linear decay in the time between consecutive incident occurrences. In this work, we propose a reformulation of the model with an exponential decay of the time between consecutive incident occurrences. This modification allows us to better capture the temporal dynamics of incident occurrences and their impact on traffic flow.

One of our aims is to capture the dynamics of the system, for which we make certain assumptions. We consider an initial traffic state before incident occurrences. Then, we assume that the increases in the time needed for free flow recovery after incident occurrences follow probability distributions such as gamma and exponential distributions. Lastly, we model the incident occurrences using a non-homogeneous Poisson process. We then develop our model and derive some interesting performance characteristics. These derivations are performed using exact methods whenever possible, and approximate methods are employed when exact solutions are not feasible. The derived characteristics include the expected and variance values of the time needed for the free flow recovery process, the expected value of the first time when the process exceeds a given congestion threshold, and the probability for the process to exceed a specified congestion threshold for the first time. To compute the latter probability, we employ two distinct methods: (1) an exact simulation method and (2) an analytic approximation method. The latter method relies on computing the expected value of the first time when the process exceeds a given congestion threshold. The derivation of these performance characteristics allows us to gain valuable insights into the behavior of the free flow recovery process and its associated congestion risks. These results contribute to a deeper understanding of the dynamics of road networks and aid in the development of effective strategies to manage and mitigate congestion.

We illustrate our model and results with some numerical approximations for several extreme quantities, including the following: the approximate minimum incident clearance rate, the approximate maximum intensity of the incident occurrence process, and the approximate maximum average value of the increment in the time needed for free flow recovery following incident occurrences, all of which are requisite to limit congestion level with respect to a specified probability. We also illustrate our findings with examples, figures, and thorough interpretations and discussions. These results highlight the applicability and effectiveness of capturing incident-induced congestion dynamics in road networks.

This paper is organized as follows. Section 2 reviews existing works in the literature relevant to our contribution. Section 3 introduces our new model, where we assume an exponential decay for the time needed for the free flow recovery process in road networks. We provide a comprehensive performance analysis of the time needed for free flow recovery, including an examination of the probability of the first passage time for our process to exceed a given congestion threshold. In Section 4, we present the results of numerical simulations conducted for our proposed model. Section 5 focuses on the practical applications of our model under two specific cases and various traffic conditions. Finally, in Section 6, we summarize our findings, draw conclusions from the study, and outline potential avenues for future research.

2. Existing Works

The existing literature includes numerous studies on modeling traffic incidents, non-recurrent congestion, and incident duration and frequency. These studies have advanced various techniques and methods in the field. Notably, three prominent research areas have garnered significant attention:

Incident duration and frequency prediction models: Significant research focuses on models that predict traffic incident duration and frequency to improve traffic management and control. These models provide insights into incident dynamics and help assess their impact on road networks. Previous studies employed various distributions to fit incident duration based on observed datasets in road networks, such as the log-normal distribution []. The authors of [] introduced a parametric log-logistic accelerated failure time model for predicting incident duration. In [], a mixture risk competing approach to predict the duration of different types of incidents was employed. In [], a Weibull accelerated failure time model to fit the clearance accident duration was used. The authors of [] proposed a non-parametric regression model to estimate incident duration. Moreover, in [], tree-structured quantile regression was incorporated to model incident duration. Bayesian networks were used in [] to fit the detected incident duration. Machine learning techniques were also employed for incident duration prediction, as demonstrated in []. The authors of [] applied artificial neural networks to model the sequential prediction of incident duration. Additionally, in [], a comparative analysis of existing methods was conducted for predicting incident duration in road networks.

Stochastic approach modeling the impact of incident occurrences on road traffic flow: Stochastic modeling approaches have been widely used to understand the complex behavior of traffic flow in the presence of incidents. Incidents, being stochastic events themselves, have the potential to disrupt traffic flow within road networks. In [], the authors addressed this by modeling the occurrence process and clearance time of incidents using a queuing system. This approach provided insights into the queuing behavior of incidents and their impact on traffic flow. To capture the resulting traffic congestion from incidents, the authors of [] introduced a discrete-time non-linear stochastic model. This model captured the dynamic effects of incidents on traffic flow, enabling a comprehensive analysis of congestion patterns. In response to incidents, a stochastic optimal control framework to manage real-time control of highway ramps was proposed in []. The approach aimed to optimize traffic operations by considering the stochastic nature of incidents and their impact on the ramp control strategies.

Traffic safety and risk assessment: Another crucial research area related to our work is assessing risks associated with road traffic incidents. Studies aim to quantify risks and develop methods for evaluating the safety implications of incidents. These assessments help to create proactive strategies and policies for incident management and road safety improvement. Extensive research has explored traffic safety risks, including incident and congestion risks, using various methodologies. There are studies that focus on incident risk as in [] where the authors employed a K-means clustering analysis method to create clusters representing different traffic flow states. They investigated the correlation between accident risk and these traffic states by constructing conditional logistic regression models. In another study [], the focus was on predicting real-time accident precursor risk across various types of freeway sections. There are other studies that examine congestion risk, such as the statistical analysis implemented in [] to measure both recurrent and non-recurrent traffic congestion across different freeway sections. With another approach, a dynamic Bayesian graph convolution network was developed to model the propagation of both recurrent and non-recurrent traffic congestion [].

Our contributions: Our modeling approach differs from the existing ones in the literature. We incorporated here a new class of continuous temporal processes, based on piece-wise deterministic Markov processes (PDMPs). Specifically, in our modeling, we capture the dynamics of the evolution of the time needed for free flow recovery after incident occurrences. Our first contribution consists in the derivation of congestion risk, which also differs from the literature. Our congestion risk is calculated as the probability that the time needed for free flow recovery exceeds a certain threshold. We notice that some existing models are limited to estimating incident duration, whereas our model combines the effect on time due to incident occurrences and free flow recovery after cleared incidents. The model proposed in this article presents a reformulation of the existing model in []. Our model assumes an exponential decay of the time needed for free flow recovery, instead of a linear decay as assumed in []. From this extension, we give some explicit formulas and analytic approximations for certain indicators and measures of interest, interpreted in terms of traffic resulting from incidents.

3. The Model

In this section, we present our stochastic model for the evolution of the time needed for free flow recovery following incident occurrences in a road network. The key feature of our model is the assumption of an exponential decay, in contrast to the linear decay considered in the model presented in []. The exponential decay makes the model applicable across all values of the time needed for free flow recovery. Unlike the existing model [], which necessitates re-initialization when the required time for free flow recovery approaches zero, our extended model handles all values of the time needed for free flow recovery without any constraints. Assuming that the time needed for free flow recovery decays exponentially amounts to assuming that the non-recurrent congestion clearance speed is linear and depends on the incident clearance rate. We note that this rate can be given by its average over the free flow recovery time, which permits us to calibrate this rate in practice.

In our model, we maintain two important assumptions, traffic homogeneity and stability, as in the case of a linear decay in the required time for free flow recovery []. The first assumption (traffic homogeneity) is critical, as it enables the modeling of the traffic state using a stochastic process. It states that traffic conditions remain consistent and do not vary significantly across the road network or the specific part of the network under consideration. The second assumption (stability) is necessary to ensure the reliability of all the parameters that govern the model throughout the specified time period. It guarantees that the model remains consistent and applicable over the entire duration of interest, and that the underlying factors influencing the time needed for free flow recovery do not undergo significant changes that could affect the validity of the model.

In our modeling approach, we focus exclusively on primary incidents occurring on a specific road section or part of a road network. Secondary incidents, the spread of incidents, and correlations among flows on adjacent roads are not considered. This simplification allows us to focus on analyzing the direct impacts of primary incidents on traffic flow.

The model we present here is based on piece-wise deterministic Markov processes (PDMPs), as elaborated by Davis [] and also used to model food risk in []. Our main variable is the stochastic process X, whose value at time s denotes the time needed for free flow recovery in a road network after incident occurrences. We characterize the exponential decay of the time needed for free flow recovery between two consecutive incident occurrences by assuming a linear stochastic differential equation, which is given as follows:

where

- is the time needed for free flow recovery, at time s.

- is a function modeling the non-recurrent congestion speed clearance assumed to be linear.

- c is a parameter modeling the incident clearance rate. This rate c can be constant or stochastic.

From Equation (1), is given as follows (see, for example, []):

where

- are the increases in the time needed for flow recovery after incident occurrences, for .

- with represent the incident occurrence times, for .

- gives the counting number of incident occurrences before time t. The incident occurrence process is denoted by .

Then, by rearranging (2), we obtain a shot noise process as follows []:

A discrete-time expression of (3) is an auto-regressive process (see, for example, []):

We denote as the initial state of the process .
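As a sketch under assumed notation (the symbols $X_t$ for the recovery time, $Y_i$ for the increases, $T_i$ for the incident occurrence times, and $N_t$ for the counting process are assumptions made here for illustration, and may differ from the authors' notation), the decay between incidents, the resulting shot noise representation, and its discrete-time auto-regressive form can be written as:

```latex
% Assumed notation: X_t (recovery time), Y_i (increases),
% T_i (incident occurrence times), N_t (number of incidents up to t).
\begin{align*}
  \frac{dX_s}{ds} &= -c\,X_s, \qquad T_i \le s < T_{i+1}, \\
  X_t &= X_0\,e^{-ct} + \sum_{i=1}^{N_t} Y_i\,e^{-c\,(t - T_i)}, \\
  X_{T_{n+1}} &= X_{T_n}\,e^{-c\,(T_{n+1} - T_n)} + Y_{n+1}.
\end{align*}
```

The first line gives $X_s = X_{T_i}\,e^{-c(s - T_i)}$ between two incidents; adding the increase $Y_{n+1}$ at time $T_{n+1}$ yields the third line, and unrolling that recursion from $X_0$ yields the second.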

For the model we propose here, we assume the following:

- The random variables are assumed to be mutually independent and identically distributed. We denote by the cumulative distribution probability of .

- The random variables with are assumed to be independent.

- The random variables (which represent here the incident inter-occurrence times) are assumed to be mutually independent and identically distributed with a cumulative distribution probability .

- The two random variables and the process are independent.

Let us fix the following additional notation:

- denotes the cumulative increase in the time needed for free flow recovery after incident occurrences.

- b is a constant denoting the threshold on the time needed for free flow recovery, i.e., the congestion threshold.

We are interested in this paper in the derivation of some performance characteristics of the process having interpretations in terms of traffic flow recovery and traffic incidents in road networks. These performance characteristics are defined as follows:

- The expected value and the variance of the process over a finite time horizon and over an infinite time horizon. These quantities represent the expectation of the time needed for free flow recovery and its variability for the finite and infinite time horizons, respectively.

- Let denote the time needed for the free flow recovery to exceed a given congestion threshold b, defined as follows:

  We are interested in approximating the expected value .

- The cumulative distribution probability of is as follows:

  We can link this probability distribution to as follows:

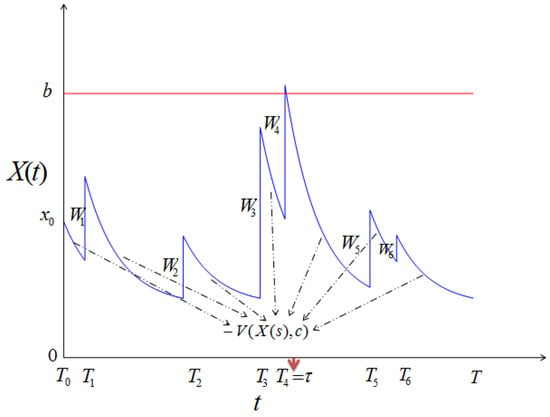

Figure 1 illustrates the evolution of the time needed for free flow recovery, with a linear non-recurrent congestion speed clearance.

Figure 1. Illustration of process (blue curve), showing the first passage time (red arrow) and the congestion threshold b (red line).

3.1. Performance Characteristics of Time Needed for Free Flow Recovery Process

We derive in this subsection some performance characteristics defined above for the process as given in (3). Specifically, these performance characteristics are determined for the two specific cases where the incident occurrence process follows a non-homogeneous Poisson distribution with intensity , and the increases in the time needed for free flow recovery after incident occurrences are independent and identically distributed, with exponential distribution of intensity and gamma distribution with parameters and .

3.1.1. The Probability Distribution of the Time Needed for Free Flow Recovery Process

We give as follows the cumulative distribution function (cdf) and the probability density function (pdf) denoted, respectively, by and for the time needed for free flow recovery process :

and

For the calculated and , we use and assume the following:

- and denote, respectively, the probability density and the cumulative distribution functions of the random variables , which are assumed to be independent and identically distributed.

- is a non-homogeneous Poisson process; hence, , with .

- The conditional probability density function of the random variables is .

- The joint cumulative distribution function (for ) is the n-fold convolution of the joint cumulative distribution function . Then, is as follows:

  We obtain the last Formula (10) by considering a change of variable defined as follows:

  Under this transformation:

- When , ;

- When , .

- The joint probability density function (for ) is the n-fold convolution of the joint probability density function . Then, is as follows:

For detailed derivations of the cumulative distribution function (cdf) and the probability density function (pdf) (given in (8)) and (given in (9)) for the time needed for free flow recovery process in our two specific cases where the incident occurrences process follows a homogeneous Poisson with intensity , (i.e., ), and the increases in the time needed for free flow recovery after incident occurrences are independent and identically distributed, with an exponential distribution of intensity and a gamma distribution with parameters and , refer to Appendix A.

3.1.2. The Expected and Variance Values of the Time Needed for the Free Flow Recovery Process

We give in this subsection the expected and the variance values of the time needed for free flow recovery process :

and

In the following, we use items 1, 2, and 3 from Section 3.1.1. According to [], the moment generating function (mgf) of the process defined by is written as follows:

where , and . Finally, the expected and the variance values for the time needed for the free flow recovery process are as follows:

and

Refer to Appendix B for detailed derivations of the expected and variance values of the time needed for the free flow recovery process in the two particular cases considered in Section 3.1.1.
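As a hedged illustration of these moments in the homogeneous Poisson case, the sketch below evaluates the standard shot noise mean and variance obtained from Campbell's theorem, $E[X_t] = X_0 e^{-ct} + \lambda E[Y](1 - e^{-ct})/c$ and $\mathrm{Var}[X_t] = \lambda E[Y^2](1 - e^{-2ct})/(2c)$. It assumes a deterministic initial state, a constant clearance rate c, and a gamma law parametrized by shape and rate; the function names are hypothetical and the formulas are not copied from Appendix B.

```python
import math


def shot_noise_mean(t, x0, c, lam, mean_jump):
    """E[X_t] for exponential decay at rate c and Poisson incidents of rate lam."""
    return x0 * math.exp(-c * t) + lam * mean_jump * (1.0 - math.exp(-c * t)) / c


def shot_noise_variance(t, c, lam, second_moment_jump):
    """Var[X_t] by Campbell's theorem, assuming a deterministic initial state."""
    return lam * second_moment_jump * (1.0 - math.exp(-2.0 * c * t)) / (2.0 * c)


def gamma_moments(alpha, beta):
    """E[Y] and E[Y^2] for a gamma law with shape alpha and rate beta (assumed)."""
    return alpha / beta, alpha * (alpha + 1.0) / beta ** 2


def exponential_moments(mu):
    """E[Y] and E[Y^2] for an exponential law with intensity mu."""
    return 1.0 / mu, 2.0 / mu ** 2


# Illustrative parameter values only (not taken from the data sets).
m1, m2 = gamma_moments(2.0, 4.0)
mean_finite = shot_noise_mean(1.0, 0.5, 1.0, 3.0, m1)
var_finite = shot_noise_variance(1.0, 1.0, 3.0, m2)
```

Letting t grow large recovers the stationary values $\lambda E[Y]/c$ and $\lambda E[Y^2]/(2c)$, which shows directly how a larger clearance rate c lowers both the mean and the variability of the recovery time.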

3.1.3. The Probability Distribution of the First Passage Time for Process to Exceed a Given Congestion Threshold

In this part, we propose a method that approximates the probability distribution of the first passage time for our process to exceed a given congestion threshold, defined in (6) and (7). According to [], this method is based on a martingale families technique. Let us first give some definitions.

Definition 1.

We define several functions and quantities as follows (see []):

- Let , where is the moment generating function of . Here, represents the cumulative increase in the time needed for free flow recovery after incident occurrences evaluated at , and is the moment generating function of the random variables of the increases in the time needed for free flow recovery after incident occurrences.

- For , we let , and .

- Let generally be with an unknown distribution and assumed to be independent of τ. We can see that corresponds to the value of the process at , which is also influenced by the rate c; see (3). Then, under these conditions (, ), we have as and as .

Proposition 1.

The probability distribution of the first passage time for our process to exceed a given congestion threshold is approximated as follows []:

where is the expected value of the first passage time for our process to exceed a given congestion threshold , which is given as follows:

We can also write as follows:

where Definition 1 covers all necessary parameters and functions used in this proposition.

Proof.

We refer the reader to Section 3 of [] (and the references cited therein), which can be viewed as a technical proof of Proposition 1. □

Remark 1.

Let us consider the case where the incident occurrences process is Poisson with intensity λ, and the increases in the time needed for free flow recovery after incident occurrences are gamma distributed with two parameters α and β. In this specific case, we denote the function g by , the expected value of the first passage time for our process to exceed a given congestion threshold by , and the exponential approximation probability of the first passage time for our process to exceed a given congestion threshold by . Then, we have the following.

- The function is given as follows:

  where is the specific function of the generalized hypergeometric function, and , where is the Pochhammer symbol, and is the gamma function.

- The expected value of the first passage time for our process to exceed a given congestion threshold is given as follows:

  where .

  Specifically for this case, we have

  By putting , and , we obtain

- The exponential approximation probability of the first passage time for our process to exceed a given congestion threshold is given as follows:

Remark 2.

Let us consider the case where the incident occurrences process is Poisson with intensity λ, and the increases in the time needed for free flow recovery after incident occurrences are exponentially distributed with intensity μ (and hence and ). In this specific case, we denote the function g by , the expected value of the first passage time for our process to exceed a given congestion threshold by , and the exponential approximation probability of the first passage time for our process to exceed a given congestion threshold by . Then, we have the following:

- The function is given as follows (by taking from (15) and ; see []):

- The expected value of the first passage time for our process to exceed a given congestion threshold is given as follows:

  where .

  Then, by putting , we obtain a similar formula to (12), from reference [], as follows:

- The exponential approximation probability of the first passage time of our process to exceed a given congestion threshold is given as follows:

  where is given by the equivalent Formulas (20), (21), and (A1) from Appendix C.
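The comparison between an exact simulation and an exponential approximation can be sketched as follows. This is a minimal illustration under stated assumptions: incidents form a homogeneous Poisson process, the approximation is taken to have the form $1 - e^{-t/E[\tau]}$, and the mean first passage time is estimated by Monte Carlo rather than by the analytic formulas of Remarks 1 and 2. Because the process only decays between incidents, an initial state below the threshold b can only be exceeded at an incident time, so checking the value right after each jump is exact. All function names are hypothetical.

```python
import math
import random


def first_passage_time(x0, b, c, lam, draw_jump, rng, max_time=math.inf):
    """First time the process exceeds b, or math.inf if none before max_time."""
    if x0 > b:
        return 0.0
    t, x = 0.0, x0
    while True:
        gap = rng.expovariate(lam)  # exponential inter-incident time
        t += gap
        if t > max_time:
            return math.inf
        # decay since the previous incident, then add the new increase
        x = x * math.exp(-c * gap) + draw_jump(rng)
        if x > b:
            return t


def empirical_passage_probability(samples, t):
    """Fraction of simulated first passage times that are at most t."""
    return sum(1 for s in samples if s <= t) / len(samples)


def exponential_approximation(t, mean_passage_time):
    """Assumed exponential form 1 - exp(-t / E[tau]) of the approximation."""
    return 1.0 - math.exp(-t / mean_passage_time)


# Illustrative parameters only: exponential increases with intensity 1.
rng = random.Random(1)
jump = lambda r: r.expovariate(1.0)
samples = [first_passage_time(0.0, 1.0, 1.0, 1.0, jump, rng) for _ in range(2000)]
mean_tau = sum(samples) / len(samples)
deviation = abs(empirical_passage_probability(samples, 2.0)
                - exponential_approximation(2.0, mean_tau))
```

The quantity `deviation` plays the role of the gap between the two methods at a single pair (c, t); repeating the computation over a grid of c and t values gives the kind of surface compared in the numerical section.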

4. Numerical Simulation

We first give in this section an algorithm (Algorithm 1) that allows us to perform the steps of a numerical simulation of the time needed for free flow recovery process , for the two specific cases where the incident occurrences process follows a non-homogeneous Poisson with intensity , and the increases in the time needed for free flow recovery after incident occurrences are independent and identically distributed, with an exponential distribution of intensity and a gamma distribution with parameters and .

Algorithm 1. Steps of numerical simulation of the time needed for free flow recovery process.
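One way to carry out such a simulation is sketched below. It is not a transcription of Algorithm 1: it assumes a homogeneous Poisson incident process (the non-homogeneous case would need, for example, a thinning step), a constant clearance rate c, and hypothetical function names. The process is stored at incident times and evaluated elsewhere by exponential decay from the last incident.

```python
import bisect
import math
import random


def simulate_recovery_time(x0, c, lam, draw_jump, horizon, rng):
    """Simulate the process at incident times up to `horizon`.

    Returns (times, values) with times[0] = 0 and values[0] = x0, followed by
    each incident time and the process value just after that incident.
    """
    times, values = [0.0], [x0]
    t, x = 0.0, x0
    while True:
        gap = rng.expovariate(lam)  # exponential inter-incident time
        if t + gap > horizon:
            break
        t += gap
        # exponential decay since the previous incident, then the new increase
        x = x * math.exp(-c * gap) + draw_jump(rng)
        times.append(t)
        values.append(x)
    return times, values


def value_at(times, values, c, s):
    """Process value at time s >= 0: decay from the last incident at or before s."""
    k = bisect.bisect_right(times, s) - 1
    return values[k] * math.exp(-c * (s - times[k]))


# Illustrative parameters only. Gamma increases with shape 2 and rate 4:
# random.gammavariate takes a scale parameter, hence 1/4.
rng = random.Random(42)
gamma_jump = lambda r: r.gammavariate(2.0, 1.0 / 4.0)
times, values = simulate_recovery_time(0.5, 1.0, 3.0, gamma_jump, 10.0, rng)
path = [value_at(times, values, 1.0, 0.1 * k) for k in range(101)]
```

Replacing `gamma_jump` with `lambda r: r.expovariate(mu)` gives the exponential case; plotting `path` against the congestion threshold reproduces the kind of trajectory described for the simulated process.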

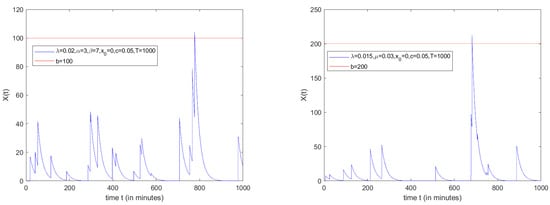

Figure 2 illustrates the simulation of the time needed for the free flow recovery process, where we consider two cases: (1) the incident occurrences process is homogeneous Poisson with intensity (i.e., ), and the increases in the time needed for free flow recovery after incident occurrences are independent and identically distributed with a gamma distribution with two parameters and , and (2) the incident occurrences process is homogeneous Poisson with intensity (i.e., ), and the increases in the time needed for free flow recovery after incident occurrences are independent and identically distributed with an exponential distribution with intensity .

Figure 2. Left side: Numerical simulation of the process , where , (blue color), and congestion threshold (red color). Right side: Numerical simulation of the process , where (blue color), and congestion threshold (red color).

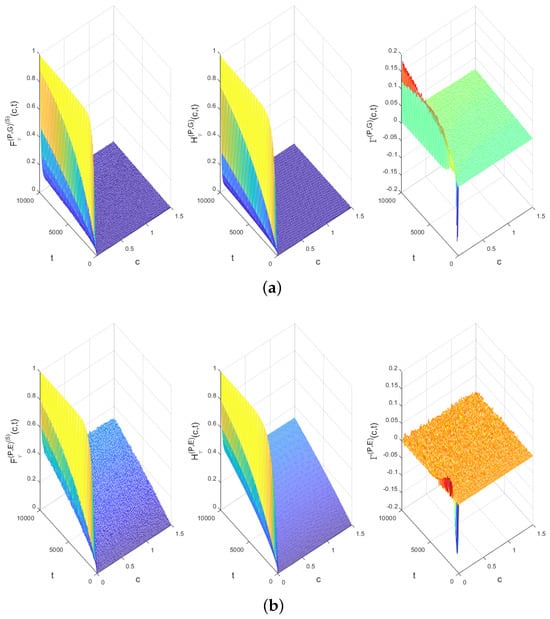

Figure 3 gives a 3D illustration of the probability of the first passage time for our process to exceed a given congestion threshold with an exact simulation method, and a 3D illustration of the exponential approximation probability of the first passage time for our process to exceed a given congestion threshold. The simulations involved varying the incident clearance rate c and the time t. For the two specific cases given above, we have the following:

Figure 3. 3D illustration of the probability of the first passage time for our process to exceed a given congestion threshold using two methods (exact simulation and exponential approximation), with their deviations. (a) Numerical computation of , and , where . (b) Numerical computation of , and , where .

- Instead of and , we denote, respectively, and .

We then present the deviation between the calculation of the probabilities with the 3D illustrations, and those presented by the calculation of and .

5. Applications

In this part, we give some interesting applications for the time needed for the free flow recovery process on a part of a road network. We consider that , where is the expected value of time needed for free flow recovery on infinite time-horizon (stationary expected value), and hence, and ; see Definition 1.

5.1. Determination of Approximate Minimum Value of Incident Clearance Rate Needed to Limit Congestion Level with Respect to Given Probability

We do not provide in this work the exact expression of the probability of the first passage time for our process to exceed a given congestion threshold . Instead, we propose an analytic approximation, denoted as ; see Proposition 1. We determine in this subsection the approximate minimum value of the incident clearance rate needed to limit a given congestion level with respect to a given probability. To obtain this approximate minimum value, we rely on historical data sets where the incident occurrence process follows a homogeneous Poisson process with intensity , and the increases in the time needed for free flow recovery after incident occurrences are gamma distributed with two parameters and , or exponentially distributed with intensity . To emphasize the central role of the incident clearance rate c in the following analysis, we make the dependence on c explicit in the notation. Instead of the two probabilities (see (6) or (7)) and (see (12) in Proposition 1), we denote, respectively, and . We also denote instead of . Instead of the two specific approximate probabilities (see (18) in Remark 1) and (see (22) in Remark 2), we denote, respectively, and . Also, instead of the two specific approximate expected values (see (16) in Remark 1) and (see, for example, (21) in Remark 2), we denote, respectively, and . Therefore, we have the following proposition:

Proposition 2.

The probabilities and are decreasing with respect to c.

Proof.

For , the result follows from the same direct proof as that of Proposition 2 in [], the only difference being that the process considered here is the one given by (3) or (4).

For , we have

where with the fact that , and as ; see Definition 1.

Specifically, for the approximate probabilities and , we have the following:

- where with particular regard to the fact that , and as ; see Definition 1.

- where , with particular regard to the fact that , and as ; see Definition 1.

□

5.1.1. Analysis over Finite Time Horizon

The purpose of the analysis in this subsection is to determine the approximate minimum value of the incident clearance rate needed to avoid a given congestion threshold b with a given probability p. This minimum value is approximated as and is given as follows:

where is the pseudo-inverse of .

Two specific cases are presented as follows, where the following hold:

- The incident occurrences process is Poisson with intensity and the increases in the time needed for free flow recovery after incident occurrences are gamma distributed with two parameters and . In this specific case, the approximate value of the minimum incident clearance rate is denoted by and is given as follows:

  where is the pseudo-inverse of .

- The incident occurrences process is Poisson with intensity and the increases in the time needed for free flow recovery after incident occurrences are exponentially distributed with intensity . In this specific case, the approximate value of the minimum incident clearance rate is denoted by , and is as follows:

  where is the pseudo-inverse of .

No closed-form expression is derived for the approximate minimum value , nor for the specific approximate minimum values and . However, is decreasing with respect to c; . This also applies to the two special cases: (1) is decreasing with respect to c (), and (2) is decreasing with respect to c (); see Proposition 2. We therefore propose to compute the two approximate minimum values and numerically, using one of the following one-dimensional search algorithms: bisection (dichotomy), Fibonacci search, or golden-section search.
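Because the approximate probability is monotone in c, the pseudo-inverse can be located by a simple bracketing search. The sketch below uses bisection and assumes that the target is an upper bound p on the exceedance probability; the function `minimal_clearance_rate` and the toy probability $e^{-c}$ are hypothetical stand-ins, not the paper's approximation.

```python
import math


def minimal_clearance_rate(prob_exceed, p, c_low, c_high, tol=1e-8, max_iter=200):
    """Smallest c in [c_low, c_high] with prob_exceed(c) <= p.

    Assumes prob_exceed is non-increasing in c, as stated in Proposition 2.
    """
    if prob_exceed(c_high) > p:
        raise ValueError("c_high does not satisfy the probability target")
    if prob_exceed(c_low) <= p:
        return c_low
    lo, hi = c_low, c_high  # invariant: prob_exceed(lo) > p >= prob_exceed(hi)
    for _ in range(max_iter):
        if hi - lo <= tol:
            break
        mid = 0.5 * (lo + hi)
        if prob_exceed(mid) <= p:
            hi = mid
        else:
            lo = mid
    return hi


# Toy decreasing function standing in for the approximate probability.
toy_probability = lambda c: math.exp(-c)
c_min = minimal_clearance_rate(toy_probability, 0.1, 0.0, 10.0)
```

Bisection needs only monotonicity and one evaluation per iteration, which suits a probability that is itself computed numerically; Fibonacci and golden-section searches follow the same bracketing idea with different split points.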

5.1.2. Description of Data Sets

We present in this subsection two data sets:

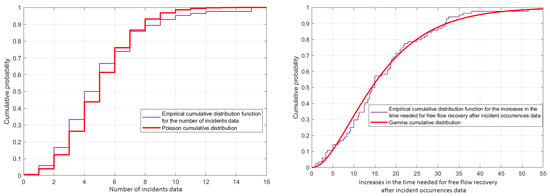

- For the first data set, we describe the incident data collected in [] for the morning and evening peak periods (7:00–10:00 a.m. and 4:00–7:00 p.m.), from 1 January 2003 to 30 April 2003, on the southbound I-405, Seattle, Washington. We present some data metrics in Table 1 concerning the number of incidents and the increases in the time needed for free flow recovery after incident occurrences. Various continuous and discrete distributions were tested to fit the increases in the time needed for free flow recovery after incident occurrences and the number of incidents, respectively. Parameters were obtained using Maximum Likelihood Estimation (MLE). Goodness-of-fit tests (Kolmogorov-Smirnov, Anderson-Darling, and Chi-squared) indicate that a gamma distribution with parameters and best fits the increases in the time needed for free flow recovery after incident occurrences, while a Poisson distribution with parameter per 6 h of time period best fits the number of incident occurrences. These results are shown in Figure 4.

Table 1. Summary of metrics for the number of incidents and the increases in the time needed for free flow recovery after incident occurrences data.

Figure 4. Left side: Empirical cumulative distribution function for the number of incidents data fitted by a Poisson cumulative distribution function with intensity per 6 h of the time period. Right side: Empirical cumulative distribution function for the increases in the time needed for free flow recovery after incident occurrences data fitted by a gamma cumulative distribution function with the two parameters and .

- The second data set is described in [] and covers a 1 km freeway segment of I-880 located in Hayward, Alameda County, California, USA, during the spring of 1993. The incident occurrences follow a homogeneous Poisson process with an intensity of , and the increases in the time needed for free flow recovery after incident occurrences are modeled by an exponential distribution with an intensity of .

5.1.3. Numerical Results

In this subsection, we give some significant numerical results from Example 1 and graphical illustrations. Two specific cases are discussed.

Example 1.

From the two data sets described above, the following is considered:

- For the first case :

- We take (the intensity of incident occurrences process) and and (the two parameters estimated for the gamma distribution of the increases in the time needed for free flow recovery after incident occurrences). By using Formula (24), we calculate the approximate values for the minimum clearance rate needed to avoid the congestion threshold b with respect to the probability p for the finite time horizons and . For instance, Figure 5a shows . Then, we have, for example, and .

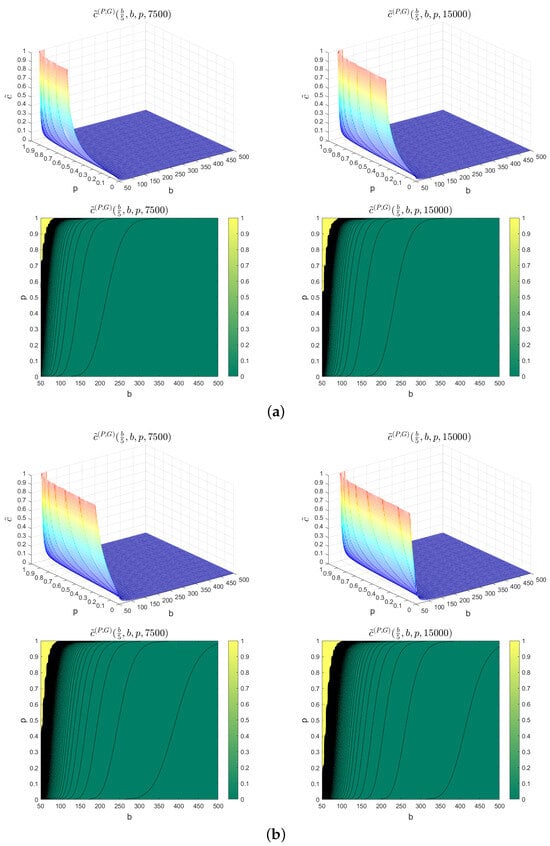

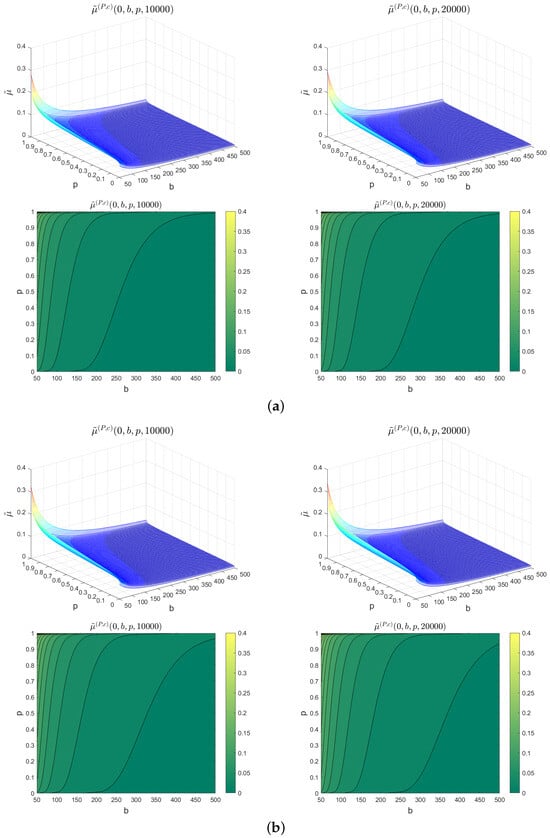

Figure 5. 3D illustration and contour lines of . (a) 3D illustration of (top left) with its contour lines (bottom left), and 3D illustration of (top right) with its contour lines (bottom right), for , and . (b) 3D illustration of (top left) with its contour lines (bottom left), and 3D illustration of (top right) with its contour lines (bottom right), for , and .

- Instead of (the intensity of incident occurrences process), we take . Similarly, we calculate the approximate values for the minimum clearance rate needed to avoid the congestion threshold b with respect to the probability p, for the finite time horizons and . Figure 5b shows . Then we have, for example, , and .

- For the second case :

- We take (the intensity of incident occurrences process) and (the intensity of the increases in the time needed for free flow recovery after incident occurrences). Using Formula (25), we calculate the approximate values for the minimum clearance rate needed to avoid the congestion threshold b with respect to the probability p for the finite time horizons and . In Figure 6a, we present and . Then, for example, we have and .

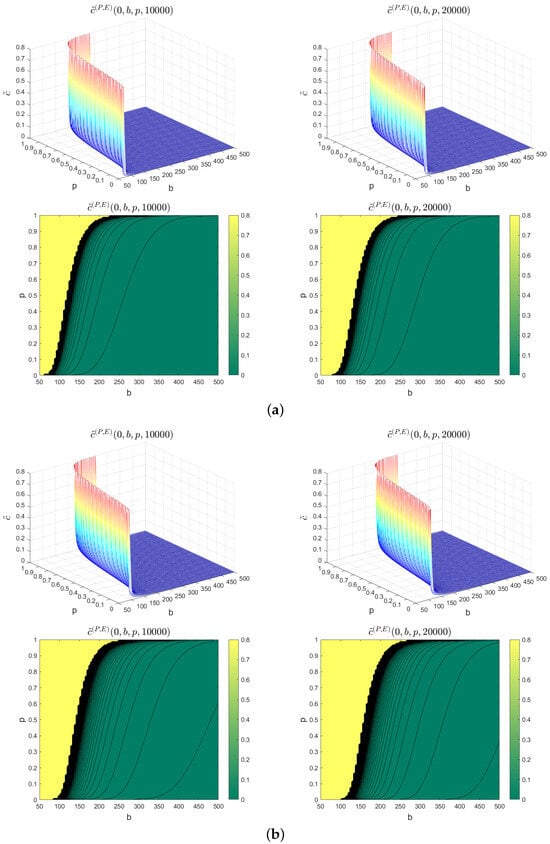

Figure 6. 3D illustration and contour lines of . (a) 3D illustration of (top left) with its contour lines (bottom left), and 3D illustration of (top right) with its contour lines (bottom right), for , . (b) 3D illustration of (top left) with its contour lines (bottom left), and 3D illustration of (top right) with its contour lines (bottom right), for , .

- Instead of (the intensity of incident occurrences process), we take . In the same way as the above instance, we calculate the approximate values for the minimum clearance rate needed to avoid the congestion threshold b with respect to the probability p for the finite time horizons and . In Figure 6b, we show and . Then, for example, we have and .

5.1.4. Interpretation of Results

Figure 5 and Figure 6 show 3D illustrations and contour lines of the approximate minimum values of the incident clearance rate for the two specific cases, denoted, respectively, by and . These values are given as functions of the traffic congestion threshold b and the probability p, for two different finite time horizons and initial states. We make the following observations:

- First, the approximate minimum values and increase with respect to the probability p. Road operators who want a high level of assurance (a high value of p) that the congestion threshold b is not surpassed must therefore resolve incidents more quickly, which requires allocating additional resources such as equipment and personnel.

- Second, the approximate minimum values and decrease with respect to the congestion threshold b. The lower the congestion threshold that road operators do not want to exceed, the faster incidents must be cleared, again by deploying additional equipment, increasing staff numbers, and so on.

- Third, the approximate minimum values and increase with respect to t. Preventing the congestion threshold b from being exceeded over extended periods of time t likewise demands faster incident clearance, and hence intensified efforts.

- Fourth, the approximate minimum values and increase when the intensity of the incident occurrences process increases while the parameters of the distribution of the increases in the time needed for free flow recovery (gamma or exponential) are held fixed. When incidents occur more often, road operators must clear them significantly faster to keep the same level of assurance that the congestion threshold b will not be reached, for example by multiplying the equipment and staff dedicated to managing and resolving incidents.

5.2. Determination of Approximate Maximum Intensity of Incident Occurrences Process Needed to Limit Congestion Level with Respect to Given Probability

As in Section 5.1, we do not provide the exact expression of the probability of the first passage time for our process to exceed a given congestion threshold . Instead, we propose an analytic approximation, denoted as ; see Proposition 1. We determine in this subsection the approximate maximum intensity of the incident occurrences process needed to limit a given congestion level with a given probability. In order to determine the approximate maximum value of this intensity, our approximations will be based on historical data sets and the average values of the incident clearance rate. We estimate the parameters of the distributions of the increases in the time needed for free flow recovery after incident occurrences (gamma distributed with two parameters and , and exponential distribution with intensity ). As in Section 5.1, and in order to highlight the intensity of the incident occurrences process, denoted by , we consider the following: (1) Instead of the two probabilities (see (6) or (7)) and (see (12) in Proposition 1), we denote, respectively, and . Instead of the two specific approximation probabilities and (see (18) in Remark 1 and (22) in Remark 2, respectively), we denote instead of and instead of . We also denote instead of . We denote the expected values and instead of and . Therefore, we have the following proposition:

Proposition 3.

The two probabilities and are increasing with respect to λ.

Proof.

For , we adopt the same proof of Proposition 4 from [] (with consideration of the process (given (3) or (4)) here).

For , we have the following:

where , with the fact that , and as ; see Definition 1.

Specifically, for the approximate probabilities and , we have the following:

- where with the fact that , and as ; see Definition 1.

- where with the fact that , and as ; see Definition 1.

□
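The monotonicity in λ stated in Proposition 3 can be checked empirically with a small Monte Carlo sketch. The model form used here is an assumption made for illustration (exponential decay at rate c between incidents, homogeneous Poisson arrivals with rate λ, exponential jumps with intensity μ, initial state below b); the function name `first_passage_prob` and all numerical values are hypothetical and do not come from the article.

```python
import math
import numpy as np

def first_passage_prob(lam, mu, c, b, t, x0=0.0, n_paths=5000, seed=0):
    """Monte Carlo estimate of P(the process exceeds b before time t).

    Assumed model (a sketch, not the article's exact construction):
    between incidents the state decays as exp(-c * elapsed); incidents
    arrive at rate lam; each incident adds an Exp(mu) jump. With x0 < b,
    the level b can only be crossed upward at an incident time.
    """
    rng = np.random.default_rng(seed)
    hits = 0
    for _ in range(n_paths):
        x, now = x0, 0.0
        while True:
            gap = rng.exponential(1.0 / lam)   # time to the next incident
            now += gap
            if now > t:                        # horizon reached without crossing
                break
            x = x * math.exp(-c * gap) + rng.exponential(1.0 / mu)
            if x > b:                          # threshold exceeded at this incident
                hits += 1
                break
    return hits / n_paths

# Estimated exceedance probability for increasing incident intensities.
probs = [first_passage_prob(lam, mu=1.0, c=0.5, b=3.0, t=10.0) for lam in (0.2, 0.5, 1.0)]
print(probs)
```

Under these assumptions the estimates should grow with the incident intensity, in line with the proposition; a simulation of this kind is a sanity check, not a substitute for the proof.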

5.2.1. Analysis over Finite Time Horizon

The aim of the analysis in this subsection is to find the approximate maximum intensity of the incident occurrences process needed to avoid a given congestion threshold b with a given probability p. This approximate maximum intensity is denoted by and is given as follows:

where is the pseudo-inverse of .

Two cases are distinguished:

- The increases in the time needed for free flow recovery after incident occurrences are gamma distributed with two parameters and , and the incident clearance rate is modeled with the constant c. In this specific case, the approximate maximum value is denoted by , and is as follows: where is the pseudo-inverse of .

- The increases in the time needed for free flow recovery after incident occurrences are exponentially distributed with intensity , and the incident clearance rate is modeled with the constant c. In this specific case, the approximate maximum value is denoted by , and is as follows: where is the pseudo-inverse of .

No analytic formula is derived for the approximate maximum value , nor for the two specific approximate maximum values and . However, is increasing with respect to ; (). This also applies to the two special cases: (1) is increasing with respect to ; and (2) is increasing with respect to ; () (see Proposition 3). We therefore propose to compute the two approximate maximum values and numerically, using algorithms such as bisection (dichotomy), Fibonacci search, or golden-section search.
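Because the approximate probability is monotone in the intensity, its pseudo-inverse can be evaluated by bisection. The sketch below illustrates the idea with a hypothetical increasing function `toy_exceed_prob`, used purely as a stand-in; it is not the approximation of Proposition 1, and the helper name `max_arg_below`, the search interval, and the target value are all assumptions.

```python
import math

def max_arg_below(g, target, lo, hi, tol=1e-8):
    """Largest x in [lo, hi] with g(x) <= target, for a nondecreasing g."""
    if g(lo) > target:
        raise ValueError("no feasible point: g(lo) already exceeds the target")
    if g(hi) <= target:
        return hi
    # Invariant: g(lo) <= target < g(hi).
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid) <= target:
            lo = mid
        else:
            hi = mid
    return lo

# Hypothetical stand-in for an exceedance probability that increases with lambda.
def toy_exceed_prob(lam, t=6.0, rate=0.05):
    return 1.0 - math.exp(-rate * lam * t)

lam_max = max_arg_below(toy_exceed_prob, target=0.10, lo=0.0, hi=50.0)
print(f"largest intensity keeping the toy probability below 0.10: {lam_max:.6f}")
```

In an actual computation, `toy_exceed_prob` would be replaced by the approximate probability for the gamma or exponential case, evaluated at fixed b, t, c and distribution parameters.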

5.2.2. Numerical Results

For this second application, we present Example 2 together with graphical illustrations. Two special cases are considered.

Example 2.

- For the first specific case :

- 1.

- We take (the incident clearance rate), and and (the two parameters for the gamma distribution of the increases in the time needed for free flow recovery after incident occurrences). By using Formula (27), we calculate the approximate values for the maximum intensity of the incident occurrences process needed to avoid the congestion threshold b with respect to the probability p, for the finite time horizons and . Figure 7a presents and . We also have and .

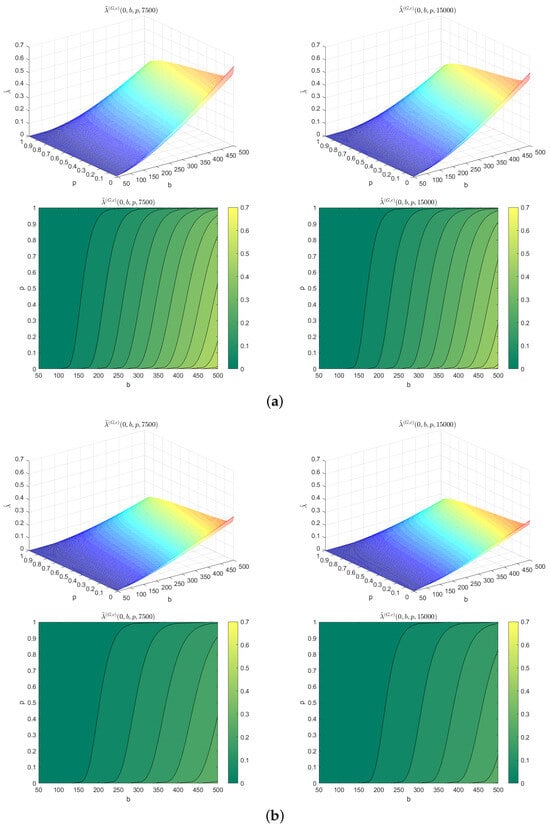

Figure 7. 3D illustration and contour lines of . (a) 3D illustration of (top left) with its contour lines (bottom left), and 3D illustration of (top right) with its contour lines (bottom right), for , , and . (b) 3D illustration of (top left) with its contour lines (bottom left), and 3D illustration of (top right) with its contour lines (bottom right), for , , and .

- 2.

- Instead of (the incident clearance rate), we take . In the same way as in the first instance, we calculate the approximate values for the maximum intensity of the incident occurrences process needed to avoid the congestion threshold b with respect to the probability p, for the finite time horizons and . Figure 7b illustrates and . We also have and .

- For the second specific case :

- 1.

- We take (the incident clearance rate) and (the intensity of the increases in the time needed for free flow recovery after incident occurrences). By using Formula (28), we calculate the approximate values for the maximum intensity of the incident occurrences process needed to avoid the congestion threshold b with respect to the probability p, for the finite time horizons and . In Figure 8a, we present and . Then, for example, we have , and .

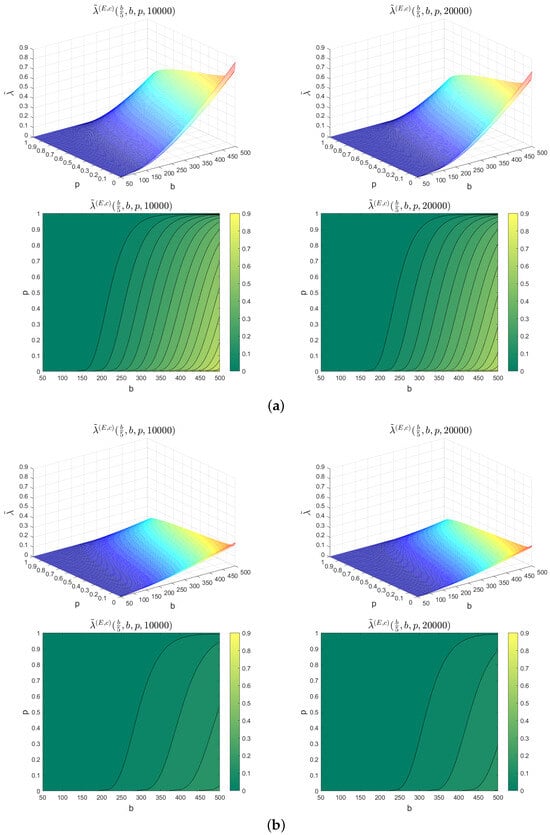

Figure 8. 3D illustration and contour lines of . (a) 3D illustration of (top left) with its contour lines (bottom left), and 3D illustration of (top right) with its contour lines (bottom right), for , . (b) 3D illustration of (top left) with its contour lines (bottom left), and 3D illustration of (top right) with its contour lines (bottom right), for , .

- 2.

- Instead of (the incident clearance rate), we take . In the same way as in the second instance, we calculate the approximate values for the maximum intensity of incident occurrences process needed to avoid the congestion threshold b with respect to the probability p, for the finite time horizons and . In Figure 8b, we also present for this instance and . Then, for example, we have and .

5.2.3. Interpretation of Results

Figure 7 and Figure 8 show 3D illustrations and contour lines of the numerical approximations of the maximum intensity of the incident occurrences process for the two specific cases, denoted, respectively, by and . These approximate maximum values are given as functions of the traffic congestion threshold b and the probability p, for two different finite time horizons and initial states. We make the following observations:

- First, the approximate maximum values and decrease with respect to the probability p. A high level of assurance (a high value of p) that the congestion threshold b will not be surpassed is therefore attainable only when the intensity of the incident occurrences process, and hence the average incident count, is low.

- Second, the approximate maximum values and increase with respect to the congestion threshold b. A higher threshold tolerates a higher intensity of incident occurrences; conversely, to remain below a low threshold with the same level of assurance, the average incident count must be reduced.

- Third, the approximate maximum values and decrease with respect to time t. To guarantee that the congestion threshold b is not surpassed over extended periods of time t, road operators should likewise strive for a low intensity of the incident occurrences process, that is, a lower average incident count.

- Fourth, the approximate maximum values and increase when the incident clearance rate c increases while the intensity of the increases in the time needed for free flow recovery after incident occurrences is held fixed. By increasing their incident clearance efforts, road operators can therefore tolerate a higher intensity of incident occurrences, and hence a higher average incident count, while keeping a high level of assurance that the congestion threshold b will not be exceeded.

5.3. Determination of Approximate Minimum Value of Intensity of Increases in Time Needed for Free Flow Recovery After Incident Occurrences Needed to Limit Given Congestion Level with Respect to Given Probability

As in Section 5.1 and Section 5.2, we use in this subsection the analytic approximation probability of the first passage time for our process to exceed a given congestion threshold (which we denoted by ; see Proposition 1). We determine the approximate minimum intensity of the increases in the time needed for free flow recovery after incident occurrences while avoiding a given congestion threshold with respect to a given probability. In order to find this minimum value, we base our approximation on historical data sets. The incident occurrences process is homogeneous Poisson with intensity , while the average value of the incident clearance rate is modeled with a constant c. In order to place greater emphasis on the intensity of the increases in the time needed for free flow recovery after incident occurrences, noted , we emphasize its central role in the following analysis: Instead of denoting the two probabilities (see (6) or (7)) and (see (12) in Proposition 1), we denote, respectively, and . In addition, instead of denoting the probability (see (22) in Remark 2), we denote . Instead of denoting (see, for example, (21) in Remark 2) we denote . Therefore, we have the following proposition:

Proposition 4.

The probabilities and (i.e., ) are decreasing with respect to μ.

Proof.

For , we adopt the proof of Proposition 6 from [] (with consideration of the process (given (3) or (4)) here).

For , we have

where , by bearing in mind that , and as ; see Definition 1. □

5.3.1. Analysis over Finite Time Horizon

The aim of the analysis in this subsection is to determine the approximate minimum intensity of the increases in the time needed for free flow recovery after incident occurrences, denoted , necessary to avoid a given congestion threshold b with a given probability p. We consider the case where the incident occurrences process is Poisson, and the incident clearance rate is modeled with a constant c. In this specific case, the approximate value of the minimum intensity of the increases in the time needed for free flow recovery after incident occurrences is denoted by , and is given as follows:

where is the pseudo-inverse of .

The approximate minimum is not given analytically. However, as is decreasing with respect to ; () (see Proposition 4), we can compute numerically using one of the following algorithms: bisection (dichotomy), Fibonacci search, or golden-section search.
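As an illustration of the golden-section option, the pseudo-inverse of a strictly decreasing probability can be obtained by minimizing the absolute gap to the target level, which is a unimodal function of μ. The function `toy_exceed_prob` below is a hypothetical stand-in (the probability that at least one exponential jump exceeds b over the horizon), not the approximation used in the article, and the parameter values and search interval are assumptions.

```python
import math

INV_PHI = (math.sqrt(5.0) - 1.0) / 2.0  # inverse golden ratio, about 0.618

def golden_section_min(h, lo, hi, tol=1e-9):
    """Minimizer of a unimodal function h on [lo, hi] by golden-section search."""
    x1 = hi - INV_PHI * (hi - lo)
    x2 = lo + INV_PHI * (hi - lo)
    h1, h2 = h(x1), h(x2)
    while hi - lo > tol:
        if h1 <= h2:                      # minimum lies in [lo, x2]
            hi, x2, h2 = x2, x1, h1
            x1 = hi - INV_PHI * (hi - lo)
            h1 = h(x1)
        else:                             # minimum lies in [x1, hi]
            lo, x1, h1 = x1, x2, h2
            x2 = lo + INV_PHI * (hi - lo)
            h2 = h(x2)
    return 0.5 * (lo + hi)

# Hypothetical stand-in for an exceedance probability that decreases with mu.
def toy_exceed_prob(mu, lam=0.5, t=6.0, b=2.0):
    return 1.0 - math.exp(-lam * t * math.exp(-mu * b))

target = 0.10
mu_min = golden_section_min(lambda mu: abs(toy_exceed_prob(mu) - target), 0.01, 10.0)
print(f"smallest intensity keeping the toy probability at or below {target}: {mu_min:.6f}")
```

For a monotone function, bisection is usually the simpler choice; golden-section search is shown here only because the text lists it among the candidate algorithms.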

5.3.2. Numerical Results

For this third application, we present Example 3 together with graphical illustrations, using the same values given in Table 3 (the two values f and r from []). Only one special case is covered.

Example 3.

For :

- We take (the incident clearance rate) and (the intensity of the incident occurrences process). By using Formula (29), we calculate the approximate values for the minimum intensity of the increases in the time needed for free flow recovery after incident occurrences needed to avoid the congestion threshold b with respect to probability p for the finite time horizons , and . Figure 9a presents and . We also have and .

Figure 9. 3D illustration and contour lines of . (a) 3D illustration of (top left) with its contour lines (bottom left), and 3D illustration of (top right) with its contour lines (bottom right), for , . (b) 3D illustration of (top left) with its contour lines (bottom left), and 3D illustration of (top right) with its contour lines (bottom right), for , .

- Let us take instead of (the incident clearance rate). Figure 9b illustrates and . We also have and .

5.3.3. Interpretation of Results

Figure 9 shows 3D illustrations and contour lines of the approximate minimum value of the intensity of the increases in the time needed for free flow recovery after incident occurrences, denoted by . This approximate minimum value is given as a function of the traffic congestion threshold b and the probability p, for two different finite time horizons and initial states. Since an exponential distribution with intensity μ has mean 1/μ, a higher minimum intensity corresponds to shorter average increases. We make the following observations:

- First, the approximate minimum value increases with respect to the probability p. For road operators to have a high level of assurance that the congestion threshold b will not be surpassed, the intensity of the increases in the time needed for free flow recovery after incident occurrences must be high.

- Second, the approximate minimum value decreases with respect to the congestion threshold b. The lower the congestion threshold that must not be exceeded, the higher the required minimum intensity of the increases in the time needed for free flow recovery after incident occurrences.

- Third, the approximate minimum value increases with respect to the time t. Ensuring that the congestion threshold b is not exceeded over longer times requires a higher minimum intensity of the increases in the time needed for free flow recovery after incident occurrences.

- Fourth, for a fixed intensity of the incident occurrences process, the approximate minimum value increases as the incident clearance rate c decreases. Conversely, a higher incident clearance rate lowers the minimum intensity required to guarantee, with a high level of assurance, that the congestion threshold b is not exceeded.

6. Conclusions

Within road networks, a multitude of incidents, including accidents, roadwork, and vehicle breakdowns, exert a significant toll on road capacity, inevitably leading to subsequent congestion. These incidents stand as prominent contributors to extensions of the free flow recovery period, thereby compounding challenges. In this article, we presented a mathematical model for the progression of incident frequency and duration. This model built upon an established stochastic risk framework [], thereby expanding its applicability and effectiveness. Using a shot noise process, we introduced a novel reconfiguration of the prevailing linear model governing the temporal requirements for free flow recovery. Through this extension, we established an exponential decay pattern between successive incident occurrences.

Our article covered several essential performance metrics, including the probability of the first passage time for the process to exceed a given congestion threshold. Two methods were used to determine this probability: an analytical approximation approach and a numerical simulation approach. The probability was computed for two cases: first, when incident occurrences follow a Poisson process and the increases in the time needed for free flow recovery after incident occurrences follow a gamma distribution; and second, when incident occurrences follow a Poisson process and these increases follow an exponential distribution.

Based on the exponential approximation probability for the first time that the process exceeds a given congestion threshold, we presented a range of applications spanning diverse traffic conditions and scenarios. Within this context, we established the approximate minimum value of the incident clearance rate and the approximate minimum intensity of the increases in the time needed for free flow recovery after incident occurrences, and identified the approximate maximum intensity of the incident occurrences process; all of these steps were necessary to preempt a designated congestion threshold at a specified probability.

In practice, our findings give road operators tools to manage congestion levels while upholding desired guarantees. This means ensuring that sufficient personnel and equipment are available to clear incidents quickly and recover normal traffic flow, which is the focus of the first application and its two cases concerning incident frequency and duration. Furthermore, to keep congestion within predetermined assurances, the approximate maximum average incident count must be kept low. This concern is addressed in the second application, whose two cases depend on the mean incident clearance rate and on the distribution of the increases in the time needed for free flow recovery. The third application also concerns congestion prevention with a designated degree of certainty. Here, the key is to keep the approximate maximum average increase in the time needed for free flow recovery after incidents low, given the mean incident clearance rate and a constant incident occurrence frequency.

In future work, the model should be extended to include secondary incidents, broadening the focus beyond primary occurrences. Future work should also consider the spread of incidents and correlations among traffic flows on adjacent roads, providing a more comprehensive understanding of the dynamics within transportation networks. Additionally, it would be interesting to develop a traffic flow model that offers both behavioral interpretation and empirical validation. This would involve a detailed analysis of driver behaviors during incidents and clearance operations.

Author Contributions

Methodology, F.M. and N.F.; Software, F.M.; Formal analysis, F.M.; Investigation, F.M.; Data curation, F.M.; Writing—original draft, F.M.; Writing—review & editing, N.F.; Supervision, D.A. and N.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Particular Cases for the Cumulative Distribution Function (cdf) and the Probability Density Function (pdf) FX and fX for Process {X(t)}t≥0

Firstly, we derive the joint probability density function, denoted by , and the joint cumulative distribution function, denoted by , in our two particular cases:

- If the incident occurrences process is Poisson with intensity for all , and the increases in the time needed for free flow recovery after incident occurrences are independent and identically distributed with gamma distribution with two parameters and , then we denote in this case instead of (see (10)) and instead of (see (11)), and for , we have and where is the lower incomplete gamma function, and is the gamma function.

- If are independent and identically distributed with an exponential distribution with intensity (i.e., two parameters and ), instead of a gamma distribution, then, in this case, we denote instead of (see (10)) and instead of (see (11)), and for , we have the following: and where is the exponential integral function.

Let us continue with the two particular cases above, and return to the formulas of and given in (9) and (8), respectively. We have the following:

- If the incident occurrences process is Poisson with intensity , (i.e., ) and the increases in the time needed for free flow recovery after incident occurrences are independent and identically distributed with a gamma distribution with two parameters , and , then we denote in this case instead of (see (8)) and instead of (see (9)), and we have the following: and where is the indicator function.

Appendix B. Particular Cases for Expected and Variance Values of Time Needed for Free Flow Recovery Process

In the following, we present particular cases for the expected and variance values of the time needed for the free flow recovery process:

- In the specific case where the incident occurrences process is homogeneous Poisson with intensity , (i.e., ), and where the increases in the time needed for free flow recovery after incident occurrences are independent and identically distributed with gamma distribution (so , and ), we denote instead of , and instead of . Then, we have and By letting , we denote instead of , and instead of . Then, we have and

- If the increases in the time needed for the free flow recovery after incident occurrences follow an exponential distribution instead of a gamma distribution, then , and . In this case, we denote instead of and instead of . Then, we have and By letting , we also denote instead of and instead of . Then, we have and
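For orientation, the standard first two moments of a Poisson shot noise process with exponential decay can be written out as below. This is a sketch under stated assumptions, not a restatement of the article's formulas: it assumes the process has the form given in the first line, with a deterministic initial state $x_0$, a homogeneous Poisson process of intensity $\lambda$, and a constant clearance rate $c$. The symbols $x_0$, $\alpha$ and $\beta$ (gamma shape and rate) are illustrative labels that may differ from the article's notation. The expressions follow from Campbell's theorem and should be checked against the formulas in the main text.

```latex
\begin{align*}
X(t) &= x_0\, e^{-ct} + \sum_{i=1}^{N(t)} Y_i\, e^{-c\,(t-T_i)}, \\
\mathbb{E}[X(t)] &= x_0\, e^{-ct} + \frac{\lambda\,\mathbb{E}[Y]}{c}\left(1 - e^{-ct}\right),
  & \lim_{t\to\infty}\mathbb{E}[X(t)] &= \frac{\lambda\,\mathbb{E}[Y]}{c}, \\
\operatorname{Var}[X(t)] &= \frac{\lambda\,\mathbb{E}[Y^2]}{2c}\left(1 - e^{-2ct}\right),
  & \lim_{t\to\infty}\operatorname{Var}[X(t)] &= \frac{\lambda\,\mathbb{E}[Y^2]}{2c}, \\
\text{gamma}(\alpha,\beta):\quad \mathbb{E}[Y] &= \frac{\alpha}{\beta},
  & \mathbb{E}[Y^2] &= \frac{\alpha(\alpha+1)}{\beta^2}, \\
\text{exponential}(\mu):\quad \mathbb{E}[Y] &= \frac{1}{\mu},
  & \mathbb{E}[Y^2] &= \frac{2}{\mu^2}.
\end{align*}
```

Under these assumptions, the exponential case gives the stationary mean $\lambda/(c\mu)$ and the stationary variance $\lambda/(c\mu^2)$.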

Appendix C. Expansion Series of Expected Value of First Passage Time for Our Process {X(t)}t≥0 to Exceed Given Congestion Threshold (τ)

Returning to (21) or to (12) from reference [], we derive expansion series of the expected value of the first passage time for our process to exceed a given congestion threshold . In this derivation, we use the following components:

- The expansion series, described as

- The integral function, defined as for , , where is the gamma function.

The above components are essential in order to derive easily the following formula:

where:

- ,

- .

The above formulas are two specific instances of the generalized hypergeometric function, where is the Pochhammer symbol and is the gamma function. We note that Formula (A1) is similar to (12) from reference [].

References

- Mouhous, F.; Aissani, D.; Farhi, N. A stochastic risk model for incident occurrences and duration in road networks. Transp. A Transp. Sci. 2023, 19, 2077469.

- Golob, T.F.; Recker, W.W.; Leonard, J.D. An analysis of the severity and incident duration of truck-involved freeway accidents. Accid. Anal. Prev. 1987, 19, 375–395.

- Chung, Y. Development of an accident duration prediction model on the Korean Freeway Systems. Accid. Anal. Prev. 2010, 42, 282–289.

- Li, R.; Guo, M. Competing risks analysis on traffic accident duration time. J. Adv. Transp. 2015, 49, 402–415.

- Alkaabi, A.M.S.; Dissanayake, D.; Bird, R. Analyzing clearance time of urban traffic accidents in Abu Dhabi, United Arab Emirates, with hazard-based duration modeling method. Transp. Res. Rec. 2011, 2229, 46–54.

- Wang, S.; Li, R.; Guo, M. Application of nonparametric regression in predicting traffic incident duration. Transport 2018, 33, 22–31.

- He, Q.; Kamarianakis, Y.; Jintanakul, K.; Wynter, L. Incident duration prediction with hybrid tree-based quantile regression. In Advances in Dynamic Network Modeling in Complex Transportation Systems; Springer: Berlin/Heidelberg, Germany, 2013; pp. 287–305.

- Park, H.; Haghani, A.; Zhang, X. Interpretation of Bayesian neural networks for predicting the duration of detected incidents. J. Intell. Transp. Syst. 2016, 20, 385–400.

- Hamad, K.; Khalil, M.A.; Alozi, A.R. Predicting freeway incident duration using machine learning. Int. J. Intell. Transp. Syst. Res. 2020, 18, 367–380.

- Wei, C.H.; Lee, Y. Sequential forecast of incident duration using Artificial Neural Network models. Accid. Anal. Prev. 2007, 39, 944–954.

- Saracoglu, A.; Ozen, H. Estimation of traffic incident duration: A comparative study of decision tree models. Arab. J. Sci. Eng. 2020, 45, 8099–8110.

- Baykal-Gürsoy, M.; Xiao, W.; Ozbay, K. Modeling traffic flow interrupted by incidents. Eur. J. Oper. Res. 2009, 195, 127–138.

- Sheu, J.B.; Chou, Y.H.; Chen, A. Stochastic modeling and real-time prediction of incident effects on surface street traffic congestion. Appl. Math. Model. 2004, 28, 445–468.

- Sheu, J.B. Stochastic modeling of the dynamics of incident-induced lane traffic states for incident-responsive local ramp control. Phys. A Stat. Mech. Its Appl. 2007, 386, 365–380.

- Xu, C.; Liu, P.; Wang, W.; Li, Z. Evaluation of the impacts of traffic states on crash risks on freeways. Accid. Anal. Prev. 2012, 47, 162–171.

- Zheng, Q.; Xu, C.; Liu, P.; Wang, Y. Investigating the predictability of crashes on different freeway segments using the real-time crash risk models. Accid. Anal. Prev. 2021, 159, 106213.

- Skabardonis, A.; Varaiya, P.; Petty, K.F. Measuring Recurrent and Nonrecurrent Traffic Congestion. Transp. Res. Rec. 2003, 1856, 118–124.

- Luan, S.; Ke, R.; Huang, Z.; Ma, X. Traffic congestion propagation inference using dynamic Bayesian graph convolution network. Transp. Res. Part C Emerg. Technol. 2022, 135, 103526.

- Davis, M.H. Piecewise-deterministic Markov processes: A general class of non-diffusion stochastic models. J. R. Stat. Soc. Ser. B (Methodol.) 1984, 46, 353–376.

- Bertail, P.; Clémençon, S.; Tressou, J. Statistical analysis of a dynamic model for dietary contaminant exposure. J. Biol. Dyn. 2010, 4, 212–234.

- Cox, D.R.; Isham, V. Point Processes; CRC Press: Boca Raton, FL, USA, 1980; Volume 12.

- Smith, P.L. Corrigendum to “From Poisson shot noise to the integrated Ornstein–Uhlenbeck process: Neurally principled models of information accumulation in decision-making and response time” [J. Math. Psychol. 54 (2010) 266–283]. J. Math. Psychol. 2010, 54, 464–465.

- Novikov, A.; Melchers, R.; Shinjikashvili, E.; Kordzakhia, N. First passage time of filtered Poisson process with exponential shape function. Probabilistic Eng. Mech. 2005, 20, 57–65.

- Systematics, C. Traffic Congestion and Reliability: Trends and Advanced Strategies for Congestion Mitigation; Technical Report; Federal Highway Administration: Washington, DC, USA, 2005.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).