Abstract

We address the problem of finding a natural continuous time Markov type process, in open populations, that best captures the information provided by an open Markov chain in discrete time, which is usually the only possible observation from data. Given the open discrete time Markov chain, we single out two main approaches. In the first one, we consider a calibration procedure for a continuous time Markov process using the transition matrix of a discrete time Markov chain, and we show that, when the discrete time transition matrix is embeddable in a continuous time one, the calibration problem has optimal solutions. In the second approach, we consider semi-Markov processes and open Markov schemes, and we propose a direct extension from the discrete time theory to the continuous time one by using a known structural representation result for semi-Markov processes; this result decomposes the process as a sum of terms given by the products of the random variables of a discrete time Markov chain with time functions built from an adequate increasing sequence of stopping times.

1. Introduction

After the first works introducing homogeneous open Markov population models in [1], followed by those in [2] and then in [3], further expanded by several authors and exposed in [4] and then in [5], the study of open populations in a finite state space in discrete time with a Markov chain structure became well established.

Following the pioneering work of Gani, who introduced in [6] what is now known as Cyclic Open Markov population models, there were further extensions in [7], for non-homogeneous Markov chains, and then, by the authors of [8,9], for cyclic non-homogeneous Markov systems or, equivalently, for non-homogeneous open Markov population processes. Let us stress that continuous time non-homogeneous Markov systems have been studied recently in [10]. Furthermore, the recent work in [11] develops an approach to open Markov chains in discrete time, allowing a particle physics interpretation, in which there are a state space of the Markov chain, where distributions are studied by means of moment generating functions; an exit reservoir, which is tantamount to a cemetery state; and an incoming flow of particles, defined as a stochastic process in discrete time whose properties (e.g., stationarity) condition the distribution law of the particles in the state space.

Discrete time non-homogeneous semi-Markov systems, or equivalently open semi-Markov population models, were introduced and studied in [12,13]. The study of open populations in a finite state space in continuous time governed by Markov laws has already been carried out in [14] and the references therein, and extensions to a general state space have been given in [15,16,17]. The continuous time framework has also been addressed, for instance, in [18,19,20] for the case of semi-Markov processes, and in [21] for non-homogeneous semi-Markov systems. We may also refer to a framework of open Markov chains with finite state space (see [22] and references therein) that has already seen applications in actuarial or financial problems, as, for instance, in [23,24], but also in population dynamics (see [25]). The weaker formalism of open Markov schemes in discrete time, developed in [26], allows for influxes of new elements in the population to be given as general time series models.

Another example, in [27], was motivated by the study of a continuous time non-homogeneous Markov chain model for Long Term Care based on an estimated Markov chain transition matrix with a finite state space; there, the intensities of the continuous time Markov chain were calibrated using the discrete time transition matrix, in the context of the usual existence theorems for ordinary differential equations (ODE). This method will be considered in Section 3.2 in the more general context of Caratheodory existence theorems for ODE.

The main contribution of the present work is to extend results on open Markov chains in discrete time to some continuous time processes of Markov type, using different methods of associating a continuous time process with an observed process in discrete time. One of these methods, presented in Sections 3.2 and 3.3, is the calibration of the transition intensities. Another method, considered for open Markov schemes in Section 4.2 and also, briefly, for some particular cases, in Section 4.3, is to exploit a natural representation of the continuous time Markov type process, given in Formula (2) of Section 2.

2. From Discrete Time to Continuous Time via a Structural Approach

We present the main ideas on a structural representation for continuous time processes of Markov type that are crucial to our approach. The structure of continuous time processes (for instance, Markov, semi-Markov, and Markov type scheme processes) allows us to consider a fairly general representation formula, Formula (2), decomposing the continuous time process into a discrete time process and a sequence of time functions depending on the sequence of jump stopping times.

Consider a complete probability space $(\Omega, \mathcal{F}, \mathbb{P})$, a continuous time stochastic process $(Z_t)_{t \geq 0}$ defined on this probability space, and the natural filtration $(\mathcal{F}_t)_{t \geq 0}$ associated with this process, that is, such that $\mathcal{F}_t$ is the σ-algebra generated by the variables of the process up to time t. Consider also a sequence of random variables $(Y_n)_{n \geq 0}$ taking values in a finite state space $\Theta$, the sequence being adapted to the filtration, and an increasing sequence of $(\mathcal{F}_t)$-stopping times, denoted by $(\tau_n)_{n \geq 0}$, satisfying the following hypothesis:

Hypothesis 1.

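Almost surely, $\tau_0 = 0$ and, for any $T > 0$ and almost all $\omega \in \Omega$, the increasing sequence of stopping times $(\tau_n)_{n \geq 0}$ satisfies

$$\#\{n \geq 0 : \tau_n(\omega) \leq T\} < +\infty. \quad (1)$$

This hypothesis means that in every compact time interval $[0,T]$, for almost all $\omega$, there is only a finite number of realizations of the stopping times in this interval.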

Hypothesis 2.

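The continuous time process $(Z_t)_{t \geq 0}$ admits a representation given, for $t \geq 0$, by

$$Z_t = \sum_{n=0}^{+\infty} Y_n \, \mathbb{1}_{[\tau_n, \tau_{n+1}[}(t), \quad (2)$$

where $(Y_n)_{n \geq 0}$ is the sequence of random variables and $(\tau_n)_{n \geq 0}$ the sequence of stopping times considered above; this is a hypothesis on the structure of the continuous time process.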

It is well known (see [28] (pp. 367–379) and [29] (pp. 317–320)) that if the discrete time sequence is a Markov chain and the time intervals between consecutive stopping times are exponentially distributed, then the continuous time process can be taken to be a continuous time homogeneous Markov chain. If the discrete time sequence is a Markov chain and the time intervals have a distribution that can depend on the present state as well as on the one visited next, then the continuous time process can be taken to be a semi-Markov process (see [30] (pp. 261–262) and [31] (pp. 295–299) for brief references). In the case of semi-Markov processes, a result of Ronald Pyke (see [32] (p. 1236)), reproduced below as Theorem A7, guarantees that, when the state space is finite, the process is regular; this implies that almost all paths of such a semi-Markov process are step functions over $[0, +\infty[$, and so the paths satisfy Formula (1). In another important case (see Theorems A5 and A6 below, or [30] (pp. 262–266) and [31] (pp. 195–244)), adequate hypotheses on the distribution of the stopping times and on the discrete time sequence imply that the continuous time process is a non-homogeneous Markov chain, whose trajectories are step functions also satisfying Formula (1). The representation in Formula (2) thus covers the cases of homogeneous and non-homogeneous Markov processes in continuous time, as well as semi-Markov processes, providing the desired connection between a continuous time process and a discrete time one that is a component of the former. There is also a practical justification for Hypothesis 1, namely, the identifiability of the process; as stated in [33] (p. 3): “…Actually, in real systems the transition from one observable state into another takes some time.” Consequently, the existence of accumulation points of the stopping times in a compact interval would preclude estimation procedures, for instance, of the distribution of the discrete time sequence.
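The homogeneous Markov case just recalled can be illustrated numerically. The following Python sketch, assuming NumPy, builds a path from an embedded jump chain and exponentially distributed holding times, and then evaluates the step function of Formula (2), which takes the value of the n-th visited state between the n-th and the (n+1)-th jump times. The helper names `simulate_ctmc` and `evaluate_representation` and the two-state intensity matrix are hypothetical illustrations, not part of the original text.

```python
import numpy as np


def simulate_ctmc(Q, y0, horizon, rng):
    """Simulate a homogeneous continuous time Markov chain on [0, horizon]
    from an intensity matrix Q, via its embedded jump chain and
    exponentially distributed holding times."""
    Q = np.asarray(Q, dtype=float)
    states, times = [y0], [0.0]            # Y_0 and tau_0 = 0
    t, y = 0.0, y0
    while True:
        rate = -Q[y, y]                    # total exit intensity of state y
        if rate <= 0.0:                    # absorbing state: no more jumps
            break
        t += rng.exponential(1.0 / rate)   # exponential holding time in y
        if t > horizon:
            break
        probs = Q[y].copy()
        probs[y] = 0.0                     # jump chain: off-diagonal rates / rate
        y = int(rng.choice(len(probs), p=probs / rate))
        states.append(y)
        times.append(t)
    return np.array(states), np.array(times)


def evaluate_representation(states, times, t):
    """Value at time t >= 0 of the step path sum_n Y_n 1_[tau_n, tau_{n+1})(t)."""
    n = np.searchsorted(times, t, side="right") - 1   # last jump time <= t
    return states[n]


rng = np.random.default_rng(0)
Q = [[-1.0, 1.0], [2.0, -2.0]]             # hypothetical two-state intensities
states, times = simulate_ctmc(Q, 0, 10.0, rng)
print(evaluate_representation(states, times, 5.0))
```

Only finitely many jumps occur before the horizon, so such a path satisfies Hypothesis 1 by construction; for a semi-Markov process one would replace the exponential draw by a holding time distribution depending on the current and next states.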

3. From Discrete to Continuous Time Markov Chains: A Calibration Approach

In this section, we consider a calibration approach to determine a set of probability densities that best approximates a sequence of discrete time transition matrices with respect to a quadratic loss function. We then show that embeddable stochastic matrices, according to Definition 1, are solutions of the calibration problem. For the reader’s convenience, we recall in Appendix A the most important results on continuous time Markov chains with finite state space that are relevant for our study, with emphasis on the crucial non-accumulation property of the jump times of a continuous time Markov chain (see Theorem A6 below). We start by recalling the main facts on embeddable chains. We then present one of the main contributions of this work, that is, a general result on the optimization problem of calibration and its relations with the embeddability properties of discrete time Markov chains.

3.1. The Embedding of a Discrete Time Markov Chain in a Continuous One

The embedding of a discrete time Markov chain in a continuous time one, following the guidelines in, for instance, [34,35,36,37,38,39,40], can be considered as a method to connect a discrete time process with a continuous time one. For notation on non-homogeneous continuous time Markov chains, see Section 3.2.

Definition 1

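(Embeddable stochastic matrix (see [38])). A stochastic matrix $P$ is said to be embeddable if there exist a time $t_0 > 0$ and a family of stochastic matrices $(P(s,t))_{0 \leq s \leq t \leq t_0}$, continuously defined in the set of times $\{(s,t) : 0 \leq s \leq t \leq t_0\}$, such that

$$P(0,t_0) = P, \qquad P(s,s) = I, \qquad P(s,t) = P(s,u)\,P(u,t) \quad \text{for } 0 \leq s \leq u \leq t \leq t_0. \quad (3)$$

We observe that, by Theorem A2 below, the conditions in Formulas (3) are tantamount to the definition of a continuous time Markov chain with transition probabilities given by $P(s,t)$.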

Remark 1

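(Intrinsic time for embeddable chains). Goodman in [41], aiming at a more general result for the Kolmogorov differential equations, showed that with the change of time given by $t \mapsto -\log \det P(0,t)$, where $(P(s,t))$ is the family in Definition 1 (a change which amounts to a change in the coefficients of the intensity matrix), the embedding time becomes

$$t_0 = -\log \det P. \quad (4)$$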

This remarkable representation for the embedding time will be useful for a result in Section 3.2 devoted to the calibration approach. It has also been used for estimation in [42] (p. 330).
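The embedding time of Remark 1, $-\log\det P$, can be checked numerically in the homogeneous case. If $P = e^{t_0 Q}$ for a constant intensity matrix $Q$, Jacobi's formula gives $\det P = e^{t_0\,\mathrm{tr}\,Q}$, so that $-\log\det P = -t_0\,\mathrm{tr}\,Q$. The sketch below assumes NumPy and SciPy; the function name `intrinsic_embedding_time` and the example matrix are hypothetical.

```python
import numpy as np
from scipy.linalg import expm


def intrinsic_embedding_time(P):
    """Embedding time -log det P (Goodman's intrinsic time).
    An embeddable matrix has det P = exp(int tr Q) > 0."""
    sign, logabsdet = np.linalg.slogdet(np.asarray(P, dtype=float))
    if sign <= 0:
        raise ValueError("det P <= 0, so P cannot be embeddable")
    return -logabsdet


# Homogeneous check: P = expm(t0 * Q) gives det P = exp(t0 * tr Q)
Q = np.array([[-0.3, 0.2, 0.1],
              [0.1, -0.4, 0.3],
              [0.0, 0.0, 0.0]])            # hypothetical intensity matrix
t0 = 2.0
P = expm(t0 * Q)
print(intrinsic_embedding_time(P), -t0 * np.trace(Q))
```

The sign test also gives a cheap necessary condition: a stochastic matrix with non-positive determinant cannot be embeddable.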

See [35] for a definition similar to Definition 1 and for a summary of many important results on this subject. A characterization of embeddable stochastic matrices in a form useful for practical purposes was recently achieved in [43], and further useful results were obtained in [44]. The connections between this kind of embedding and the other approaches to associating a discrete time Markov chain with a continuous time process deserve further study.
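In the homogeneous case, a simple sufficient check is whether the principal matrix logarithm of P is a valid intensity matrix (non-negative off-diagonal entries and zero row sums), in which case $P = e^{L}$. This is not the characterization of [43]: a negative answer does not exclude embeddability through another branch of the logarithm or, in the sense of Definition 1, through a non-homogeneous family. The sketch assumes NumPy and SciPy (`scipy.linalg.logm`); the function name and the tolerance are hypothetical choices.

```python
import numpy as np
from scipy.linalg import expm, logm


def principal_log_generator(P, tol=1e-9):
    """Return the principal logarithm L of P when L is a valid intensity
    matrix (off-diagonal entries >= 0, zero row sums), else None.
    A non-None result means P = expm(L) is embeddable in a homogeneous
    chain at time 1; None does NOT prove that P is not embeddable."""
    L = logm(np.asarray(P, dtype=float))
    if np.max(np.abs(np.imag(L))) > tol:      # complex log: not a real generator
        return None
    L = np.real(L)
    off_diag = L - np.diag(np.diag(L))
    if off_diag.min() < -tol or np.max(np.abs(L.sum(axis=1))) > tol:
        return None
    return L


Q = np.array([[-0.3, 0.2, 0.1],
              [0.1, -0.4, 0.3],
              [0.0, 0.0, 0.0]])               # hypothetical intensity matrix
print(principal_log_generator(expm(Q)))       # should recover Q up to rounding
print(principal_log_generator([[0.0, 1.0], [1.0, 0.0]]))
```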

3.2. Continuous Time Markov Chains Calibration with a Discrete Time Markov Transition Matrix

The calibration of the transition intensities of a non-homogeneous Markov chain with a discrete time Markov chain transition matrix estimated from data was proposed in [27]. In this section, we establish an existence and unicity result, in a general formulation that subsumes that approach, and we establish a connection with the embedding approach of Section 3.1. The notation and the essential results on non-homogeneous Markov processes in continuous time that are needed are recalled in Appendix A.

The procedure for calibrating intensities consists in finding the intensities of a non-homogeneous continuous time Markov chain, using a probability transition matrix of a discrete time Markov chain and a given loss function (having as arguments the transition probabilities of the continuous time Markov chain and some function of the transition matrix of the discrete time Markov chain), in such a way that the loss function is minimized.

Before stating the theorem on the calibration of intensities, we discuss some motivation for this approach. It may happen that a phenomenon which, due to its characteristics, could be modeled by a continuous time Markov chain can only be observed at regularly spaced time intervals. This is the case of periodic assessments of the healthcare status of patients, which can change at any time but is only comprehensively evaluated on, say, a weekly basis. With the data originating from these observations, we can only determine transition probabilities for a defined period, say, a week, and, most importantly, we cannot determine the time stamps of the changes in patient status. The question naturally arises: is it possible to associate, in some canonical way, with an estimated discrete time Markov chain transition matrix a process in continuous time that encompasses the discrete time process? First steps in this direction are provided by Theorem 1, which we now present, and by Theorems 2 and 3 below.

We formulate Theorem 1 in the context of Caratheodory’s general existence theory of solutions of ordinary differential equations, which we briefly recall. One reason for this choice is that, as stated in [41] (p. 169): “…This fact gives further evidence in support of the view that Caratheodory equations occupy a natural place in the theory of non-stationary Markov chains.” Another reason is that Caratheodory existence theory is particularly suited to regime switching models, and these models are the object of Theorem 3 below. Following [45] (pp. 41–44), we consider the definition of an extended solution of a Cauchy problem for a differential equation,

$$x'(t) = f(t, x(t)), \qquad x(t_0) = x_0, \quad (5)$$

or, in an equivalent integral form,

$$x(t) = x_0 + \int_{t_0}^{t} f(u, x(u))\, du, \quad (6)$$

for a not necessarily continuous function $f$ defined on a domain $D \subseteq \mathbb{R} \times \mathbb{R}^d$, with $(t_0, x_0) \in D$, to be an absolutely continuous function $x$ on an interval $I$ containing $t_0$ (see [46], pp. 144–150) such that $(t, x(t)) \in D$ for $t \in I$ and Formula (5) is verified for all $t \in I$, possibly with the exception of a set of null Lebesgue measure. The well-known Caratheodory existence theorem (see [45], p. 43) ensures the existence of an extended solution with a given initial condition, in a neighborhood of the initial time, under the conditions that $f$ is measurable in the variable t, for fixed x, and continuous in the variable x, for fixed t, and moreover that there exists a Lebesgue integrable function $m$, defined on a neighborhood $I$ of the initial time, such that $|f(t,x)| \leq m(t)$ for $(t,x) \in D$ with $t \in I$. The question of unicity of the solution is usually dealt with either directly, using Theorem 18.4.13 in [47] (p. 337), or using Osgood’s uniqueness theorem, as exposed, for instance, in [48] (p. 58) or in [49] (pp. 149–151), to conclude that the extended solution, which exists by Caratheodory’s theorem, is unique in the sense that two solutions may only differ on a set of Lebesgue measure zero. For our purposes, we need an existence and unicity theorem for ordinary differential equations with solutions depending continuously on a parameter, such as the general result of Theorem 4.2 in [45] (p. 53), whose proof is omitted there since it follows from a lengthy previous exposition of related matters. For completeness, we now establish a result suited to our purposes, as it deals with the particular type of Kolmogorov equations for continuous time Markov chains.

Theorem 1

(Calibration of intensities with Caratheodory type ODE existence theorem hypotheses). Let, for , be the generic element of a sequence of numerical transition matrices taken at a sequence of increasing dates . Consider a set of intensities, with λ being a parameter in Λ and Λ being a compact set, satisfying the following conditions:

- 1.

- For every fixed λ the functions are measurable as functions of u.

- 2.

- For every fixed u the functions are continuous as functions of λ.

- 3.

- There exists a locally integrable function , such that for all , , and , the following conditions are verified:

Then, we have

- 1.

- There exists a probability transition matrix, with entries absolutely continuous in s and t, such that the conditions in Definition A2, the Chapman–Kolmogorov equations in Theorem A1, and the Kolmogorov equations in Theorem A3 are verified.

- 2.

- For each fixed , consider the loss function given by Formula (8). Then, for the corresponding optimization problem, there exists a minimizer in Λ, the unique minimum value being attained at possibly several points .

Proof.

We will prove, simultaneously, the existence of the probability transition matrix, its unicity in the extended solution sense, and its continuous dependence on the parameter, following the lines of the proof of the result known as Hostinsky’s representation (see [29], pp. 348–349). As we suppose that Λ is compact, the continuity of the transition probabilities as functions of λ, for every fixed t, will be enough to establish the second thesis, since the loss function is then continuous on a compact set and therefore attains its minimum.

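We want to determine an extended solution of the Kolmogorov forward equation given in Formula (A11), that is, an extended solution of

$$\frac{\partial}{\partial t} P^{\lambda}(s,t) = P^{\lambda}(s,t)\, Q^{\lambda}(t), \qquad P^{\lambda}(s,s) = I, \quad (10)$$

where $Q^{\lambda}(t)$ denotes the matrix of the intensities in the statement, an equation which, as seen in Formula (A12), can be read in integral form as

$$P^{\lambda}(s,t) = I + \int_s^t P^{\lambda}(s,u)\, Q^{\lambda}(u)\, du. \quad (11)$$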

As previously said, we now apply to the forward Kolmogorov equation the idea of successive approximations used in the proof of the Picard–Lindelöf theorem on the existence and unicity of solutions of ordinary differential equations. By replacing the transition matrix in the right-hand member of Equation (11) by this right-hand member itself, we get,

and, by induction, we obtain

Now, considering the function in the third hypothesis stated above about the intensity matrix, which is integrable over any compact set, we have, by Lemma A1 (see also Lemma 8.4.1 in [29], p. 348), for each component of the matrix, that

Finally, as

we have that the series whose sum represents the transition matrix, that is,

is a normally convergent series of absolutely continuous functions of the variable t, which are also continuous as functions of the parameter, and so the sum is an absolutely continuous function of the variable t and a continuous function of the parameter. With a similar reasoning applied to the backward Kolmogorov equation, we also have that the sum is absolutely continuous in the variable s and, obviously, continuous as a function of the parameter. We observe that it was stated in [41], pp. 166–167 (with a reference to a proof in [50], and proved also in [51]), that the separate absolute continuity of the transition matrix in the variables s and t ensures the uniqueness of the solution. □
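The successive approximations used in the proof can be reproduced numerically. The sketch below, assuming NumPy and SciPy, iterates $P_{k+1}(s,t) = I + \int_s^t P_k(s,u)\,Q(u)\,du$ from $P_0 = I$ on a uniform grid, approximating the integrals with the trapezoidal rule; this discretization assumes a reasonably regular (here continuous) intensity matrix, whereas Theorem 1 only requires measurability and local integrability. The function name, grid size, number of iterations and the example intensities $Q(u) = (1+u)Q_0$ are hypothetical. Since these intensities commute with each other, the exact solution from $s = 0$ is $e^{(t + t^2/2)Q_0}$, which gives a check.

```python
import numpy as np
from scipy.integrate import cumulative_trapezoid
from scipy.linalg import expm


def picard_forward(Q_of_u, s, t, n_grid=2001, n_iter=30):
    """Successive approximations for the integral forward Kolmogorov equation
        P(s, u) = I + int_s^u P(s, v) Q(v) dv,   s <= u <= t,
    starting from P_0 = I, with integrals approximated by the trapezoidal
    rule on a uniform grid (this assumes a reasonably regular Q)."""
    grid = np.linspace(s, t, n_grid)
    Qs = np.array([Q_of_u(u) for u in grid])          # shape (n_grid, r, r)
    r = Qs.shape[1]
    P = np.tile(np.eye(r), (n_grid, 1, 1))            # P_0(s, u) = I
    for _ in range(n_iter):
        integrand = P @ Qs                            # P_k(s, v) Q(v), batched
        P = np.eye(r) + cumulative_trapezoid(integrand, grid, axis=0, initial=0)
    return grid, P


# Hypothetical time-dependent intensities Q(u) = (1 + u) Q0
Q0 = np.array([[-0.3, 0.2, 0.1],
               [0.1, -0.4, 0.3],
               [0.0, 0.0, 0.0]])
grid, P = picard_forward(lambda u: (1.0 + u) * Q0, 0.0, 2.0)
print(np.max(np.abs(P[-1] - expm(4.0 * Q0))))        # small discretization error
```

Because the rows of each intensity matrix sum to zero, every iterate keeps rows summing to one, mirroring the stochasticity of the limit.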

Remark 2

(An alternative path for the existence result). We observe that, for every fixed value of the parameter λ, a direct application of Caratheodory’s existence theorem to the forward and backward Kolmogorov equations in Theorem A3 yields a probability transition matrix with entries absolutely continuous in s and t, satisfying the conditions in Definition A2 and the Chapman–Kolmogorov equations in Theorem A1, and such that Kolmogorov’s equations are satisfied almost everywhere. With this approach, however, the continuous dependence of the probability transition matrix on the parameter λ requires further proof.

Remark 3

(On the parametrized intensities and transition probabilities). In a first application of a simpler version of Theorem 1 to Long-Term Care, presented in [27], we chose as intensities a parametrized family of Gompertz–Makeham type (see, for instance, [52], p. 62) with a three-dimensional parameter. We observe that, in its present formulation, Theorem 1 covers the case of a set of intensities, and of associated transition probabilities, not necessarily sharing the same functional form with varying parameters, but possibly having a finite set of different functional forms indexed by the parameters.

Remark 4

(Only one transition matrix observation). In the case where we only have one estimated transition matrix, we can consider the sequence of n-step transition matrices given by the n-fold product of the matrix by itself. This situation is addressed in Theorem 2, for homogeneous Markov chains, and in Theorem 3, for the non-homogeneous case.

We also observe that, for a multidimensional parameter set Λ, and even for a moderately sized state space of the discrete time Markov chain, the optimization problem of Formula (8) may require adequate algorithms to be solved, as the number of variables involved can be large. In [27], we opted for a modified grid search coupled with the numerical solution of the Kolmogorov equations in order to recover the transition probabilities of the continuous time Markov chain.
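A plain grid search can be sketched for a hypothetical two-state homogeneous model with intensity matrix $Q(a,b) = \begin{bmatrix}-a & a\\ b & -b\end{bmatrix}$, parameter $(a,b)$ ranging over a compact grid, and an assumed quadratic loss $\sum_{n=1}^{N}\|e^{nQ(a,b)} - P^n\|_F^2$ comparing the continuous time transition matrices at integer dates with the powers of an observed matrix P. This is a stand-in, not the modified grid search of [27]. When P is embeddable in this family, the loss vanishes at the embedding parameter, as Theorem 2 leads one to expect. The sketch assumes NumPy and SciPy; all names are hypothetical.

```python
import itertools

import numpy as np
from scipy.linalg import expm


def generator(a, b):
    """Hypothetical two-state intensity matrix indexed by lambda = (a, b)."""
    return np.array([[-a, a], [b, -b]])


def quadratic_loss(lam, P, N):
    """Assumed loss: sum_{n=1}^N ||expm(n Q(lam)) - P^n||_F^2, comparing the
    continuous time transition matrices at dates n with the n-step powers of P."""
    Q = generator(*lam)
    return sum(np.sum((expm(n * Q) - np.linalg.matrix_power(P, n)) ** 2)
               for n in range(1, N + 1))


def grid_search(P, N, grid):
    """Plain exhaustive search over the compact parameter set grid x grid."""
    return min(itertools.product(grid, grid),
               key=lambda lam: quadratic_loss(lam, P, N))


P = expm(generator(0.5, 0.8))        # an embeddable "observed" one-step matrix
grid = np.linspace(0.05, 2.0, 40)    # step 0.05, contains 0.5 and 0.8
lam_hat = grid_search(P, N=5, grid=grid)
print(lam_hat, quadratic_loss(lam_hat, P, 5))
```

The cost grows with the number of grid points, which in turn grows exponentially with the dimension of Λ; this is why adequate algorithms become necessary beyond toy examples.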

Remark 5

(On the unicity of the solution of the calibration problem). The unicity in law of the solution of the calibration problem deserves discussion. If there are several minimizers of the calibration problem, to each of these minimizers corresponds an intensity, and to each intensity a possibly different law for the stopping times of the continuous time Markov chain, as these laws are determined by the intensities (see Remark A2). The existence of criteria allowing one to identify a distribution of inter-arrival times that stochastically dominates all other solutions is an open problem.

We can establish a connection between the approach in Section 3.1 and Theorem 1 on calibration, showing first, in Theorem 2, that if a matrix is embeddable in a homogeneous continuous time Markov chain, with intensities depending continuously on a parameter, for a fixed value of the parameter, then this continuous time Markov chain solves the calibration problem optimally. We recall that a continuous time Markov chain is homogeneous if, for all $0 \leq s \leq t$, the transition probabilities satisfy

$$P(s,t) = P(0, t-s),$$

and that the intensity matrix is then constant as a function of time (see [41] (pp. 165–166) for definitions in this context).

Theorem 2

(Discrete chains embeddable in homogeneous continuous chains can be optimally calibrated). Suppose that the matrix is embeddable and let and the transition probabilities satisfy Definition 1 in the case of a homogeneous continuous time Markov chain for some family of intensities where is a given parameter. Then, with for and (the n-fold product of the matrix by itself), we have that the optimization problem with respect to the loss function given by Formula (8) has an optimal solution such that

Proof.

Remark 6

(On the skeletons of a homogeneous continuous time Markov chain). Another possible way to extend results from discrete time to continuous time is the approach of skeletons of Kingman and other authors (see [53,54], for instance). As we are more interested in non-homogeneous continuous time Markov chains we do not pursue this approach in the present work.

We now address the non-homogeneous case. In Theorem 3, we show that if every element of a sequence of consecutive matrix powers of a discrete time Markov chain transition matrix is embeddable, then there is a regime switching process of Markov type that optimally solves the calibration problem.

Theorem 3

(Power-embeddable discrete chains can be optimally calibrated). Suppose that all the powers , for , of a discrete time Markov chain transition matrix are embeddable, and let be the transition probabilities of the embedding continuous time Markov chain for given in their intrinsic time (defined in Remark 1), in such a way that the respective embedding times verify (according to Formula (4)). We suppose that the intensities for each of the transition probabilities depend on parameters , possibly different but all in a common parameter set Λ. With the convention , and

let be defined by

and thus satisfying . Then, we have that the optimization problem, with respect to the loss function given by

has an optimal solution such that

Proof.

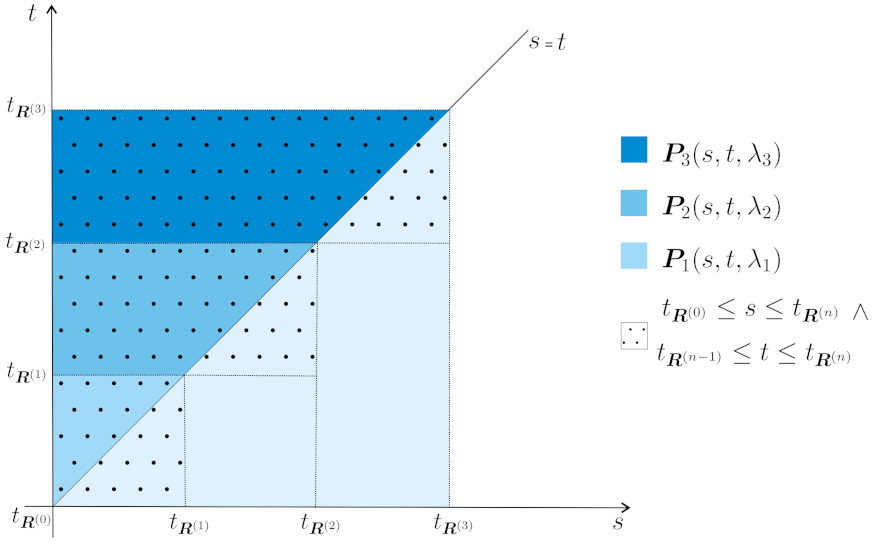

We observe that the definition in Formula (12) is coherent (see Figure 1); the result then follows by a simple verification using the definitions proposed. □

Figure 1. A representation of the function defined in Formula (12) for the first three initial times.

Remark 7

(An associated regime switching process). The function defined in Formula (12) was obtained by superimposing different transition probabilities for different Markov chains in continuous time. A natural question is whether there is, based on these different transition probabilities, a regime switching Markov chain in continuous time that bears some connection with this function. From a brief analysis of Figure 1, we can guess the natural definition of a regime switching Markov chain based on these probabilities. Let

Formula (14) has the following interpretation. For each , consider continuous time Markov chain processes with transition probabilities defined in the domains with the convention . The regime switching process is such that (compare with Formula (2)):

that is, the process is obtained by gluing together the paths of the processes, which are bona fide continuous time Markov processes in each of their (non-random) time intervals. It is clear that this function can be interpreted as a transition probability only when restricted to some domain and that, in general, it will not be a transition probability on the whole interval.
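One simple way to glue regimes can be sketched under assumptions of our own: each regime k has a constant intensity matrix $Q_k$ on a deterministic interval $[t_{k-1}, t_k[$, and at each switching date the process continues from its current state. From the forward equation $\partial_t P = PQ$, the transition matrix over $[s,t]$ is then the ordered product of the matrix exponentials of the regimes met along the way. This is an illustrative special case, not a reconstruction of Formula (14); the helper name and the matrices are hypothetical, and the sketch assumes NumPy and SciPy.

```python
import numpy as np
from scipy.linalg import expm


def piecewise_transition(generators, breakpoints, s, t):
    """P(s, t) for a chain whose intensity matrix equals generators[k] on
    [breakpoints[k], breakpoints[k + 1]); from the forward equation
    dP/dt = P Q, later regimes multiply on the right."""
    P = np.eye(generators[0].shape[0])
    for k, Qk in enumerate(generators):
        lo = max(s, breakpoints[k])
        hi = min(t, breakpoints[k + 1])
        if hi > lo:
            P = P @ expm((hi - lo) * Qk)
    return P


# Hypothetical regimes on [0, 1), [1, 2) and [2, 3)
gens = [np.array([[-1.0, 1.0], [0.5, -0.5]]),
        np.array([[-0.2, 0.2], [1.5, -1.5]]),
        np.array([[-0.7, 0.7], [0.3, -0.3]])]
bps = [0.0, 1.0, 2.0, 3.0]
P03 = piecewise_transition(gens, bps, 0.0, 3.0)
P_split = piecewise_transition(gens, bps, 0.0, 1.5) @ piecewise_transition(gens, bps, 1.5, 3.0)
print(np.allclose(P03, P_split))   # Chapman-Kolmogorov across a switching date
```

With this particular gluing the result is a genuine transition function on the whole interval, which is precisely what need not hold for the superimposed function discussed above.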

Remark 8

The regime switching process defined in Remark 7 deserves further study. We may, nevertheless, define transition probabilities for —with properties to be thoroughly investigated—by considering

3.3. Conclusions on the Relations between Embeddable Matrices, Calibration, and Open Markov Chain Models

From Theorems 1–3, the following conclusions can be drawn. Given a discrete time Markov transition matrix,

- if the matrix is embeddable (according to Definition 1 of Section 3.1), there is a unique in law homogeneous Markov chain in continuous time that solves the calibration problem optimally; the unicity is a consequence of Remark A2, which shows that the laws of the stopping times in the representation of Formula (A13) only depend on the intensities, and these are uniquely determined whenever the discrete time Markov chain is embeddable.

- if the matrix is power-embeddable, that is, if all the matrices of a finite sequence of consecutive powers of the matrix are embeddable, then there is a unique regime switching continuous time non-homogeneous Markov chain (in the sense of Remark 7) that solves the calibration problem optimally. In this case, the unicity has a justification similar to that of the previous case, that is, the laws of the stopping times only depend on the intensities, and these are determined by the fact that the matrix is power-embeddable.

As a consequence, for our purposes, it appears to be of fundamental importance to determine whether a discrete time Markov chain transition matrix is embeddable and to determine, if possible explicitly, the embedding continuous time Markov chain. Regarding this problem, the results in [43,55] deserve further consideration.

Remark 9

(Applying Theorems 1–3). Suppose that the transition matrix of a discrete time Markov chain process is embeddable in a continuous time Markov chain. We have, for this continuous time process and for a determined sequence of stopping times, the representation given in Formula (A13) of Theorem A5, that is,

Now, as the Theorems referred to may consider that the process is suitably approximated by , we can also consider that the continuous time process defined by

is an approximation of in continuous time. For processes with a structural representation similar to that of this process, we propose in Section 4.3 a method to extend the open populations methodology from discrete to continuous time.

4. More on Open Continuous Time Processes from Discrete Ones

In this section, we discuss an extension of the formalism of open Markov chains to the case of semi-Markov processes (sMp) and other continuous time processes, namely, the open Markov chain schemes introduced in [26]. For the reader’s convenience, we present in Appendix B a short summary on sMp and, in Section 4.1, a review of the main results of the open Markov chain formalism in discrete time. Finally, we propose the second main contribution of this work, that is, an extension of the open Markov chain formalism from discrete time to continuous time in the case of sMp. We also briefly address the case of open Markov schemes which, in some particular instances, can be dealt with as in the sMp case.

4.1. Open Markov Chain Modeling in Discrete Time: A Short Review

We now detail and comment on the results on discrete time open Markov chains that will be used in this paper. The study of open Markov chain models presented next relies on results and notation introduced in [56] and further developed in [22], which we reproduce for the readers’ convenience. We suppose that, in general, the transition matrix of the Markov chain model may be written in the following form:

$$P = \begin{bmatrix} Q & R \\ 0 & S \end{bmatrix}, \quad (16)$$

where $Q$ is a transition matrix between transient states, $R$ a matrix of transitions between the transient and the recurrent states, and $S$ a matrix of transitions between the recurrent states. A straightforward computation then shows that

$$P^n = \begin{bmatrix} Q^n & R_n \\ 0 & S^n \end{bmatrix}, \quad (17)$$

with $R_n = \sum_{k=0}^{n-1} Q^{k} R\, S^{n-1-k}$. We write the vector of the initial classification, for a time period i, as

with the vector of the initial allocation probabilities for the transient states and the vector of the initial allocation probabilities for the recurrent states. We suppose that at each epoch there is an influx of new elements into the classes of the population (a population whose evolution is governed by the Markov chain transition matrix), the number of new elements being Poisson distributed with a given parameter. It is a consequence of the randomized sampling principle (see [57], pp. 216–217) that, if the incoming populations are distributed among the classes according to the multinomial distribution, then the sub-populations in the transient classes have independent Poisson distributions, with parameters given by the product of the Poisson parameter and the probability of an incoming new member being assigned to the given class. With Formulas (16) and (17), we now notice that the vector of the Poisson parameters, for the population sizes in each state at an integer time N, may be written as

We observe that the first block corresponds to the transient states and the second block, on the right-hand side, corresponds to the recurrent states. From now on, as a first restrictive hypothesis, we also suppose that the transition matrix between the transient states is diagonalizable, and so

with the eigenvalues, the left eigenvectors and the right eigenvectors of matrix . We observe that corresponds to a transient state if and only if . We may write the powers of as

and so, as a consequence of (18), for the vector of the Poisson parameters corresponding only to the transient states, , we have

The main result describing the asymptotic behavior, established in [22], is the following.

Theorem 4

(Asymptotic behavior of Poisson parameters of an open Markov chain with Poisson distributed influxes). Let a Markov chain driven system have a diagonalizable transition matrix between the transient states , written in its spectral decomposition form. Suppose that the system is fed by Poisson inputs with intensities , and that the vector of initial classification of the inputs in the transient states converges to a fixed value, that is, . Then, with the vector of Poisson parameters of the transient sub-populations at date , we have the following:

- 1.

- If , then

- 2.

- If and there exists a constant such that , then

Remark 10

We observe that the proportions in the Markov chain transient classes, in both statements of Theorem 4, only depend on the eigenvalues . In fact, when Formula (21) is used to compute proportions, these proportions do not depend on the value of λ, since

and the term on the right-hand side multiplying λ is a vector with dimension equal to the number k of transient classes, which is equal to the dimension of the square matrix . Therefore, when computing proportions by normalizing this vector by the sum of its components, the factor λ disappears.
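The cancellation of λ can be seen in the simplest setting, under assumptions of our own: a constant Poisson parameter λ at every epoch and a constant allocation row vector q over the transient states. With the convention used below, the vector of Poisson parameters of the transient sub-populations at date N is $\lambda\, q \sum_{k=0}^{N-1} Q^k$, where Q is the transient block, and it converges to $\lambda\, q (I - Q)^{-1}$ when the spectral radius of Q is smaller than one; normalized proportions do not depend on λ. The sketch assumes NumPy; the function name and all numbers are hypothetical.

```python
import numpy as np


def transient_poisson_params(lam, q, Q, N):
    """lam * q (I + Q + ... + Q^(N-1)): Poisson parameters of the transient
    sub-populations at date N for a constant influx parameter lam and a
    constant allocation row vector q over the transient states."""
    mu = np.zeros(len(q))
    term = np.asarray(q, dtype=float)      # q Q^0
    for _ in range(N):
        mu += lam * term
        term = term @ Q                    # q Q^(k+1)
    return mu


Q = np.array([[0.6, 0.2], [0.1, 0.7]])     # hypothetical transient block
q = np.array([0.7, 0.3])                   # hypothetical allocation vector
limit = 3.0 * q @ np.linalg.inv(np.eye(2) - Q)
mu3 = transient_poisson_params(3.0, q, Q, 500)
mu10 = transient_poisson_params(10.0, q, Q, 500)
print(mu3 / mu3.sum(), mu10 / mu10.sum())  # same proportions for both lam
```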

4.2. Open sMp from Discrete Time Open Markov Chains

Let us suppose that the successive Poisson distributions of the influx of new members in the population are independent of the random times at which the influx of new members occurs. For the notation used, see Appendix B. Consider an sMp given by the representation in Formula (A17), that is,

in which the discrete time sequence is the embedded Markov chain and the stopping times are the jump times of the process. We now propose a way to extend the known approach to open Markov chains in discrete time to sMps.

- (1)

- In applications, we usually model the influx of new members into the population by Poisson random variables which, at each time t, have a parameter . Thus, Formula (20) may be rewritten as Formula (23), where usually we can take , since, as in a discrete time Markov chain, the actual time stamp is irrelevant and we only consider the sequence of epochs .

- (2)

- In a sMp, the only difference with respect to a discrete time Markov chain is that the dates corresponding to each epoch i are random; nevertheless, the structure of the changes in the sub-populations in the transient states is governed by the transition matrix of the embedded Markov chain. In a sMp, the only observable changes are those that occur at the random jump times; therefore, we suppose that the influxes of new members of the population occur only at these random times. As a consequence, the vector of Poisson parameters of the transient classes is random, since it depends on the random times at which influxes are considered, and Formula (23) becomes

- (3)

- The parameters of interest are the expected values of the random variables , together with the asymptotic behavior of these expected values as N grows indefinitely; these expected values can be computed whenever the joint laws of are known, for . In fact, by Formula (24) we have
  This formula has two consequences. The first is that, given an arbitrary strictly increasing sequence of dates , we have
  thus justifying the assumption that, given the strictly increasing sequence of non-accumulating stopping times , we can proceed as with the usual open Markov chain model in discrete time. The second consequence worth mentioning is that, to compute the expected value of the vector of parameters of the transient class sub-populations while preserving the Poisson distribution of the influx of new members, we compute
  using the joint laws of for , which we suppose to be given.
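The expected value discussed in item (3) can be approximated by Monte Carlo. The sketch below makes simplifying assumptions that are not taken from the text: a constant classification vector q, hypothetical jump times given by partial sums of independent Exp(1) sojourns, and a hypothetical intensity λ(t) = 3 + 2e^{−t}. Under these assumptions Λ_N = Σ_{n=1}^{N} λ(τ_n) q Q^{N−n}, and since τ_n is Gamma(n, 1) distributed, E[e^{−τ_n}] = 2^{−n}, which yields a closed form to compare against. Because this Λ_N is linear in the values λ(τ_n), only the marginal laws of the jump times enter here; the joint laws matter in the general setting of the text.

```python
import numpy as np

def expected_parameters_mc(Q, q, lam, N, reps, rng):
    """Monte Carlo estimate of E[Lambda_N], Lambda_N = sum_n lam(tau_n) q Q^(N-n)."""
    # Row n-1 of W is q Q^(N-n), for n = 1, ..., N.
    W = np.array([q @ np.linalg.matrix_power(Q, N - n) for n in range(1, N + 1)])
    # Hypothetical jump times: tau_n is a sum of n independent Exp(1) sojourns.
    taus = np.cumsum(rng.exponential(1.0, size=(reps, N)), axis=1)
    return (lam(taus) @ W).mean(axis=0)

def expected_parameters_exact(Q, q, N):
    """Closed form for lam(t) = 3 + 2 exp(-t), using E[exp(-tau_n)] = 2**(-n)."""
    return sum((3.0 + 2.0 * 0.5 ** n) * (q @ np.linalg.matrix_power(Q, N - n))
               for n in range(1, N + 1))

rng = np.random.default_rng(0)
Q = np.array([[0.5, 0.3], [0.1, 0.6]])   # hypothetical transient block
q = np.array([0.7, 0.3])                  # hypothetical classification vector
lam = lambda t: 3.0 + 2.0 * np.exp(-t)    # hypothetical continuous intensity

mc = expected_parameters_mc(Q, q, lam, N=8, reps=50_000, rng=rng)
print(mc, expected_parameters_exact(Q, q, N=8))
```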

Theorem 6 below is one possible extension of the open Markov chain formalism to the sMp case, taking a discrete time Markov chain as the starting point. Its proof requires Theorem 5, a generalization of the Lebesgue dominated convergence theorem to varying measures, which we quote from Theorem 3.5 in [58] (p. 390).

Theorem 5

(Lebesgue dominated convergence theorem with varying measures). Consider a locally compact, separable topological space endowed with its Borel σ-algebra. Suppose that the sequence of probability measures , each defined on , converges weakly to μ on , and that the sequence of measurable functions converges continuously to f. Suppose, additionally, that for some sequence of measurable functions defined on :

- 1.

- For all and , we have that .

- 2.

- With the function g defined on by
  we have that

Then, we have

As stated, we suppose that the influx of new members of the population into the sMp classes is observed only at the random jump times (accounting, of course, for the state before and the state after each jump), a hypothesis that makes sense since we usually observe trajectories of the process. We then have the following extension of Theorem 4 to the case of sMp.

Theorem 6

(On the stability of open sMp transient states). Let a sMp be given by the representation in Formula (A17), that is,

in which is the embedded Markov chain and are the jump times of the process. For the embedded Markov chain , consider the notations of this subsection (Section 4.2) and of Theorem 4. Suppose that the influx of new members into the population is modeled by Poisson random variables that, at each time , have a parameter , with λ a continuous function. Suppose, furthermore, that the following hypotheses are satisfied.

- 1.

- The stopping times are integrable, that is, for all .

- 2.

- There exists such that, for every sequence of positive real numbers such that we have

Then, the asymptotic behavior of the expected vector of parameters of the Poisson distributed sub-populations in the transient classes of an open sMp, subject to a Poisson influx of new members at the jump times of the sMp, is given by

Proof.

For each , let be the joint distribution function of . We want to compute the following limit of expectations:

and we observe that by Theorem 4 and by the first hypothesis, for every sequence of positive real numbers such that and , we have that

Computing the limit in the last term of Formula (27) requires a Lebesgue convergence type result with varying measures. To apply Theorem 5, we first introduce the appropriate setting and notation. Consider the space defined as the space of infinite sequences of numbers in , that is,

Recall that with the metric d given by

is a metric space, locally compact, separable and complete (see, for instance, [59], pp. 9–10). We consider endowed with the Borel σ-algebra generated by the family given by

with the Borel σ-algebra of . We now take the sequence of the jump times of the process represented in Formula (A17). First, we define the sequence of measures where, for each , is defined on the measurable space by considering, for with , that

Thus, is the joint probability law of , and the last integral in the last term of Formula (27) is exactly an integral with respect to the measure . As a consequence of Formula (29), the sequence satisfies the compatibility conditions of the Kolmogorov extension theorem (see [60], p. 46), so there exists a probability measure , defined on , whose finite dimensional distributions are the measures of the sequence .

Now, for each , we can consider the extension of to the measurable space in the following way:

In fact, with this definition the restriction of to is exactly . An important observation is the following. Consider . Then, for we have that

thus showing that, for every , the sequence converges to . Now, by Theorem 2.2 in [59] (p. 17), since is a π-system and every open set in the metric space is a countable union of elements of , the sequence converges weakly to . To apply Theorem 5 to compute the limit, we must deal with the fact that is a vector of finite dimension k; we may either proceed componentwise or work with norms. We follow the second path. Define, for integer N and some constant M,

and also,

in such a way that ; such a choice of M is possible as a consequence of Formula (28). We can verify that the sequence converges continuously to a function f by using Theorem 4.1.1 in [22] (p. 373). In fact, consider a sequence converging to some in the metric space . With , we surely have that for all . As a consequence of the continuity of and of Theorem 4.1.1 in [22] (p. 373), we have that

It is now clear that the sequences , and , together with , satisfy the hypotheses of Theorem 5, and so the result announced in Formula (25) follows. □
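The conclusion of Theorem 5 can be seen in a toy case, unrelated to the sMp setting and chosen only because the limit is known. On the compact space [0, 1], the uniform measures μ_n on the atoms {1/n, 2/n, ..., 1} converge weakly to the uniform (Lebesgue) measure μ, the functions f_n(x) = (x + 1/n)^2 converge continuously to f(x) = x^2, and the constant functions g_n = 4 dominate them with ∫ g_n dμ_n = ∫ 4 dμ. The theorem then gives ∫ f_n dμ_n → ∫ f dμ = 1/3, which the sketch checks numerically.

```python
import numpy as np

def integral_fn_mun(n):
    """Integral of f_n(x) = (x + 1/n)**2 against mu_n, uniform on {1/n, ..., n/n}."""
    atoms = np.arange(1, n + 1) / n
    return np.mean((atoms + 1.0 / n) ** 2)

for n in (10, 100, 10_000):
    print(n, integral_fn_mun(n))   # approaches 1/3 as n grows
```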

Remark 11

(Alternative proof of the weak convergence of the sequence ). We now present another proof of the weak convergence of the sequence to μ. We show that the sequence is relatively compact, as a consequence of Prokhorov's theorem (see [59], pp. 59–63), because, as shown next, it is tight. Let an arbitrary be given and consider a sequence of positive numbers such that, by the Chebyshev inequality and since the stopping times are integrable,

in such a way that

Now consider the Borel set , which is compact by the Tychonov theorem. We then have that

thus showing that the sequence of probability measures is tight in the measurable space . As said, by Prokhorov's theorem, this implies that the sequence is relatively compact, that is, every subsequence of has a further subsequence converging weakly to some probability measure. Now, since by construction the probability measure μ has the probability measures as finite dimensional distributions, for the finite dimensional distributions of converge weakly to the finite dimensional distributions of μ. As a consequence, following the observation in [59] (p. 58), the sequence converges weakly to μ.
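One explicit choice of the sequence of positive numbers in Remark 11 is the following. It is a sketch, writing τ_k for the k-th jump time and assuming, as in the first hypothesis of Theorem 6, that the jump times are nonnegative with finite means; the inequality used is Markov's inequality, the first-moment form of the Chebyshev inequality.

```latex
a_k := \frac{2^k\,\mathbb{E}[\tau_k]}{\varepsilon}
\quad\Longrightarrow\quad
\mathbb{P}[\tau_k > a_k] \le \frac{\mathbb{E}[\tau_k]}{a_k} = \frac{\varepsilon}{2^k},
\qquad
\mathbb{P}\Big[\bigcup_{k \ge 1}\{\tau_k > a_k\}\Big]
\le \sum_{k \ge 1} \frac{\varepsilon}{2^k} = \varepsilon .
```

Hence the product set ∏_k [0, a_k], compact by the Tychonov theorem, has probability at least 1 − ε under the joint law of the jump times.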

Remark 12

(Applying Theorem 6). If we can estimate a discrete time Markov chain transition matrix and fit some function f, such that , to the number of new incoming members in the population at a set of non-accumulating, unevenly spaced dates (as done with a statistical procedure in [22] or with a simple fit in [25]), then Theorem 6 gives the asymptotic expected number of elements in the transient classes of a sMp having the estimated chain as its embedded Markov chain.
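The two estimation steps mentioned in Remark 12 can be sketched as follows. The transition matrix is estimated with the usual maximum likelihood estimator for a homogeneous discrete time Markov chain (transition counts divided by row totals), and the influx function f is fitted by least squares with a first degree polynomial. The polynomial form, the simulated data and all function names are assumptions for illustration, not the procedures of [22] or [25].

```python
import numpy as np

def estimate_transition_matrix(paths, n_states):
    """MLE of a homogeneous transition matrix: counts n_ij divided by n_i."""
    counts = np.zeros((n_states, n_states))
    for path in paths:
        for i, j in zip(path[:-1], path[1:]):
            counts[i, j] += 1
    totals = counts.sum(axis=1, keepdims=True)
    # Rows of states that are never left stay zero instead of dividing by zero.
    return np.divide(counts, totals, out=np.zeros_like(counts), where=totals > 0)

def simulate_path(P, x0, length, rng):
    """Sample a path of the discrete time chain with transition matrix P."""
    cum = np.cumsum(P, axis=1)
    cum[:, -1] = 1.0               # guard against round-off in the last cumulative sum
    path = [x0]
    for u in rng.random(length - 1):
        path.append(int(np.searchsorted(cum[path[-1]], u, side="right")))
    return path

rng = np.random.default_rng(1)
P = np.array([[0.6, 0.3, 0.1],     # hypothetical chain: states 0 and 1 transient,
              [0.2, 0.5, 0.3],     # state 2 absorbing (exit)
              [0.0, 0.0, 1.0]])
paths = [simulate_path(P, 0, 30, rng) for _ in range(4000)]
P_hat = estimate_transition_matrix(paths, 3)

# Hypothetical influx counts at unevenly spaced dates, fitted by a line.
dates = np.array([0.0, 0.7, 1.5, 2.1, 3.4, 4.0])
arrivals = np.array([10, 13, 16, 18, 24, 26])
f = np.poly1d(np.polyfit(dates, arrivals, deg=1))
print(P_hat.round(3), f(5.0))
```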

4.3. Open Continuous Time Processes from Open Markov Schemes

We may follow the approach of open Markov schemes in [26] and define a process in continuous time after obtaining a process in random discrete times that describes, at least on average, the evolution of the elements in each transient class. Let us briefly recall the main idea. A population model is driven by a Markov chain defined by a sequence of initial distributions given, for , by , and a transition matrix . The proportions in all states after one transition can be recovered from and, after n transitions, from . We want to account for the evolution of the expected number of elements in each class, supposing that, at each random date , a random number of new elements enters the population. Just after the second cohort enters the population, a first transition occurs in the first cohort, driven by the Markov chain law, and so on. Table 1 summarizes this accounting process in which, at each step k, the new random arrivals are distributed multinomially according to the probability vector and the elements in each class are redistributed according to the Markov chain transition matrix .

Table 1.

Accounting of n Markov cohorts each with an initial distribution.

At date , if we suppose that each new set of individuals in the population, a cohort, evolves independently of the already existing cohorts but according to the same Markov chain model, we may recover the total expected number of elements in each class at date by computing the sum:

Each component of this vector is precisely the expected number of elements in the corresponding class. To further study the properties of , given the properties of a stochastic process , we randomize Formula (32) by considering instead, for :

and we observe that, in any case, . It is known that if the vector of classification probabilities is constant and if is an ARMA, ARIMA, or SARIMA process, then the populations in each of the transient classes can be described as the sum of a deterministic trend, an ARMA process and an evanescent process, that is, a centered process such that (see Theorems 3.1 and 3.2 in [26]).
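The cohort accounting of Table 1 can be checked by simulation. The sketch assumes a hypothetical full transition matrix P (transient states plus an exit state), a constant classification vector q, and Poisson arrivals with hypothetical means m_k. Under the timing described above, cohort k has undergone n − k transitions at epoch n, so the expected number of elements in each class is Σ_{k=1}^{n} m_k q P^{n−k}; this is compared with the average of simulated multinomial allocations and moves.

```python
import numpy as np

def expected_counts(P, q, means):
    """Sum over cohorts k = 1..n of m_k q P^(n-k) (row-vector convention)."""
    n = len(means)
    return sum(m * q @ np.linalg.matrix_power(P, n - k)
               for k, m in enumerate(means, start=1))

def simulate_counts(P, q, means, rng):
    """One realization: multinomial allocation of arrivals, then Markov moves."""
    counts = np.zeros(len(q), dtype=np.int64)
    for k, m in enumerate(means):
        if k > 0:   # existing members make one transition when a new cohort arrives
            counts = sum(rng.multinomial(c, P[i]) for i, c in enumerate(counts))
        counts = counts + rng.multinomial(rng.poisson(m), q)
    return counts

rng = np.random.default_rng(2)
P = np.array([[0.6, 0.3, 0.1],     # hypothetical: states 0, 1 transient,
              [0.2, 0.5, 0.3],     # state 2 is the exit (absorbing) state
              [0.0, 0.0, 1.0]])
q = np.array([0.8, 0.2, 0.0])      # hypothetical classification of new arrivals
means = [50, 40, 60, 55, 45]       # hypothetical Poisson means of the cohorts
sims = np.array([simulate_counts(P, q, means, rng) for _ in range(3000)])
print(sims.mean(axis=0), expected_counts(P, q, means))
```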

The step process in continuous time naturally associated with the discrete time one would then be defined, for , by

To study this process we have to take advantage of the properties of and of the family of stopping times . It should be noticed that if the process is Poisson distributed and the laws of the sequence are known, then it is possible to determine the expected value of for with a result similar to Theorem 6.
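Evaluating such a step process only requires locating, for each t, the last jump time not exceeding t. In the sketch below the jump times and the vectors attached to each epoch are hypothetical inputs, and the convention that the process equals the n-th vector on [τ_n, τ_{n+1}) is an assumption in line with the representation of Formula (A17).

```python
import numpy as np

def step_process(t, taus, values):
    """Return values[n] for tau_n <= t < tau_{n+1}; taus must be increasing."""
    idx = np.searchsorted(taus, t, side="right") - 1
    if np.any(idx < 0):
        raise ValueError("t precedes the first jump time")
    return np.asarray(values)[idx]

taus = np.array([0.0, 0.8, 1.1, 2.5])                  # hypothetical jump times
values = np.array([[10, 0], [7, 4], [9, 5], [6, 8]])   # hypothetical class sizes
print(step_process(np.array([0.0, 0.9, 2.5, 3.0]), taus, values))
```

Using `side="right"` makes each path right-continuous: at a jump time the process already takes the new value.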

5. Conclusions

In this work, we studied several ways of associating, to an open Markov chain process in discrete time (which is often the only observable output), a continuous time Markov or semi-Markov process that bears some natural relation to the discrete time process. Furthermore, we expect this association to allow the extension of the study of open populations from the discrete to the continuous time model. For that purpose, we considered three approaches: the first, for continuous time Markov chains; the second, for the semi-Markov case; and the third, for open Markov schemes (see [26]). For the semi-Markov case, under the hypothesis that the influx of new individuals into the population is observed only at the times of the random jumps, the main result determines the expected value of the vector of parameters of the conditional Poisson distributions in the transient classes when the influx of new members is Poisson distributed. The third approach, dealing with open Markov schemes, is similar to the second one under similar context hypotheses, that is, Poisson distributed incoming new members of the population with known distributions and observation of this influx of new individuals at the times of the random jumps. For the first approach, that is, for Markov chains in continuous time, we proposed a calibration procedure for which the embeddable Markov chains provide optimal solutions. In this case too, the study of open population models relies on the main result proved for the semi-Markov approach. Future work encompasses applications to real data and the determination of criteria to assess the quality of the association of the continuous time model to the observed discrete time model.

Author Contributions

All authors contributed equally to this work. All authors have read and agreed to the published version of the manuscript.

Funding

For the second author, this work was done with partial financial support from RFBR (Grant n. 19-01-00451). For the first and third authors, this work was partially supported through the project of the Centro de Matemática e Aplicações, UID/MAT/00297/2020, financed by the Fundação para a Ciência e a Tecnologia (Portuguese Foundation for Science and Technology). The APC was funded by the insurance company Fidelidade.

Acknowledgments

This work was published with financial support from the insurance company Fidelidade. The authors thank Fidelidade for this generous support and for their interest in the development of models for insurance problems in Portugal. The authors are grateful to Professor Panagiotis C.G. Vassiliou for his enlightening comments on a previous version of this work, and to the referees for their comments, corrections and questions, in particular the question that motivated the inclusion of Remark 5.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Some Essential Results on Continuous Time Markov Chains

In this exposition of the most relevant results pertinent to our purposes, we follow mainly the references [29,30,31]. As this exposition is a mere reminder of needed notions and results, the proofs are omitted unless the result is essential for our purposes.

Definition A1

(Continuous time Markov chain). Let be some finite set; for instance, of Section 2. A stochastic process is a continuous time Markov chain with state space if and only if the following Markov property is verified, namely, for all and we have that

By the Markov property in Definition A1, the law of a continuous time Markov chain depends only on its initial distribution and on the following transition probabilities. Let be the identity matrix of dimension , and let the Kronecker delta be given by

Definition A2

(Transition probabilities). Let be the state space of a continuous time Markov chain. The transition probabilities are defined by

Let be the space of square matrices with coefficients in . The transition probability matrix function is defined by

Transition probabilities of Markov processes in general satisfy a very important functional equation that results from the Markov property.

Theorem A1

(Chapman–Kolmogorov equations). Consider an NH-CT-MC as given in Definition A1, and let be its transition probability matrix function as given in Definition A2. We then have

As an application of the celebrated existence theorem of Kolmogorov (in the form presented in [61], pp. 8–10), under a set of natural hypotheses there exists an NH-CT-MC such as the one in Definition A1.

Theorem A2

(On the existence of NH-CT-MC). Let be an initial probability over . Consider a matrix valued function denoted by and satisfying Formulas (A3) and (A4) below, that is,

- 1.

- For all and for all

- 2.

- Formula (A2) in Theorem A1, namely,

Define, for all and , the function

and extend this definition to all possible by considering, with the adequate ordering permutation σ of such that we have ,

Then, is a family of probability measures satisfying the compatibility conditions of the Kolmogorov existence theorem, and so there exists a probability measure over the canonical probability space , with and , such that if the stochastic process is denoted by

then,

that is, has —together with —as its transition probabilities.

A natural and useful way of defining transition probabilities is by means of the transition intensities that act like differential coefficients of transition probability functions.

Definition A3

(Transition intensities). Let be the space of square matrices with coefficients in . A function denoted by

is a transition intensity if and only if, for almost all , it satisfies

- (i)

- ;

- (ii)

- ;

- (iii)

- .

The Kolmogorov backward and forward differential equations are useful for recovering the transition probability matrix from the intensity matrix and for studying important properties of these transition probabilities.

Theorem A3

(Backward and Forward Kolmogorov equations). Suppose that is continuous at s, that is,

If there exists such that

then we have the backward Kolmogorov (matrix) equation:

and the forward Kolmogorov (matrix) equation:

Remark A1.

The general theory of Markov processes shows that the continuity of in both s and t is sufficient to ensure the existence of the intensity matrices given in Formulas (A9) (see [31], p. 232). By means of a change of time, Goodman (see [41]) proved that the existence of solutions of the Kolmogorov equations is amenable to an application of Carathéodory's existence theorem for differential equations.
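For a given intensity matrix function, the forward equation of Theorem A3 can be integrated numerically. The sketch below assumes the usual convention ∂P(s, t)/∂t = P(s, t) Q(t) with P(s, s) = I, uses a hypothetical continuous intensity function whose rows sum to zero, and integrates with the classical fourth-order Runge–Kutta scheme. It then checks that the result is a stochastic matrix and satisfies the Chapman–Kolmogorov equations of Theorem A1 up to discretization error.

```python
import numpy as np

def intensity(t):
    """Hypothetical continuous intensity matrix Q(t); each row sums to zero."""
    a = 0.5 + 0.25 * np.sin(t)
    c = 0.1 * (1.0 + t)
    return np.array([[-(a + 0.3), a, 0.3],
                     [0.2, -(0.2 + c), c],
                     [0.0, 0.0, 0.0]])      # state 2 is absorbing

def transition_matrix(s, t, q=intensity, n_steps=2000):
    """Solve dP(s,u)/du = P(s,u) q(u), P(s,s) = I, on [s, t] with classical RK4."""
    h = (t - s) / n_steps
    P = np.eye(q(s).shape[0])
    u = s
    for _ in range(n_steps):
        k1 = P @ q(u)
        k2 = (P + 0.5 * h * k1) @ q(u + 0.5 * h)
        k3 = (P + 0.5 * h * k2) @ q(u + 0.5 * h)
        k4 = (P + h * k3) @ q(u + h)
        P = P + (h / 6.0) * (k1 + 2 * k2 + 2 * k3 + k4)
        u += h
    return P

P01, P12, P02 = (transition_matrix(a, b) for a, b in [(0, 1), (1, 2), (0, 2)])
print(P02.sum(axis=1))                 # rows of a stochastic matrix sum to one
print(np.abs(P02 - P01 @ P12).max())   # Chapman-Kolmogorov residual
```

Because the rows of Q(t) sum to zero, the Runge–Kutta steps preserve the row sums of P exactly up to round-off.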

Transition intensities satisfying an integrability condition uniquely determine the associated transition probabilities.

Theorem A4

(Transition probabilities from intensities). Let be a transition intensity as in Definition A3 such that Theorem A3 holds. Then, we have that

The existence of an NH-CT-MC can also be guaranteed by the following constructive procedure, which is particularly useful for simulation.

Remark A2

(Constructive definition). Given a transition intensity define

- 1.

- Let , according to some initial distribution on ; the sequence is defined by induction as follows; .

- 2.

- time of the first jump, with exponential distribution function:
  and
  and so for . This distribution of the stopping time is mandatory, as a consequence of a general result on the distribution of sojourn times of a continuous time Markov chain (see Theorem 2.3.15 in [31], p. 221).

- 3.

- Given that and , time of the second jump, with exponential distribution function
  and
  and so for .
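For time independent intensities, the construction above reduces to a familiar simulation: in state i the process waits an exponential time with rate q_i = −q_ii and then jumps to j ≠ i with probability q_ij / q_i. This sketch covers only that homogeneous case (the non-homogeneous case requires sampling the jump times from the time-varying distributions of steps 2 and 3), and the intensity matrix is hypothetical.

```python
import numpy as np

def simulate_ctmc(Q, x0, t_max, rng):
    """Homogeneous CTMC with intensity matrix Q, simulated on [0, t_max].

    Returns the jump times (starting with 0) and the states entered at those times.
    """
    Q = np.asarray(Q, dtype=float)
    times, states = [0.0], [x0]
    t, x = 0.0, x0
    while True:
        rate = -Q[x, x]                      # q_x, total exit rate from x
        if rate <= 0.0:                      # absorbing state: no further jumps
            break
        t += rng.exponential(1.0 / rate)     # holding time ~ Exp(q_x)
        if t > t_max:
            break
        probs = Q[x].copy()
        probs[x] = 0.0
        probs /= rate                        # jump to j != x w.p. q_xj / q_x
        x = int(rng.choice(len(probs), p=probs))
        times.append(t)
        states.append(x)
    return np.array(times), np.array(states)

rng = np.random.default_rng(3)
Q = np.array([[-1.0, 0.6, 0.4],             # hypothetical intensities
              [0.5, -1.5, 1.0],
              [0.2, 0.3, -0.5]])
times, states = simulate_ctmc(Q, 0, 20_000.0, rng)
print(len(times), np.diff(times)[states[:-1] == 1].mean())   # mean sojourn in state 1
```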

The following result ensures that the preceding construction yields the desired result.

Theorem A5

(The continuous time Markov chain). Let the intensities satisfy the condition given by Formula (A12) in Theorem A4. Then, given the times , and with the sequence defined by , the process defined by:

is a continuous time Markov chain with transition probabilities given by Definition A2 and transition intensities given by Definition A3 and Theorem A3.

Proof.

This theorem is stated and proved, in the general case of continuous time Markov processes, in [31] (p. 229). □

Lemma A1.

Let be a measurable function integrable over every bounded interval of . Then, we have that

for all , .

Proof.

Let us observe that, for , we have that

By induction we have for all , and for every permutation

as all the integrals in the sum are equal by the symmetry of the integrand function, and then, by Fubini's theorem. □
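Assuming that the identity displayed in Lemma A1 is the usual one, namely that the integral of q(t_1)⋯q(t_n) over the ordered simplex s < t_1 < ⋯ < t_n < t equals (∫_s^t q(u) du)^n / n!, it can be checked numerically. The sketch computes the iterated integrals F_k(u) = ∫_s^u q(v) F_{k−1}(v) dv, with F_0 = 1, by cumulative trapezoidal quadrature; the function q is a hypothetical example.

```python
import math
import numpy as np

def cumulative_trapezoid(y, x):
    """Running trapezoidal integral of y over the grid x, starting at 0."""
    out = np.zeros_like(y)
    out[1:] = np.cumsum(0.5 * (y[1:] + y[:-1]) * np.diff(x))
    return out

def simplex_integral(q, s, t, n, grid=20_001):
    """Iterated integral of q(t_1)...q(t_n) over s < t_1 < ... < t_n < t."""
    x = np.linspace(s, t, grid)
    F = np.ones_like(x)                          # F_0 = 1
    for _ in range(n):
        F = cumulative_trapezoid(q(x) * F, x)    # F_k(u) = int_s^u q F_{k-1}
    return F[-1]

q = lambda u: 1.0 + np.sin(u) ** 2               # hypothetical locally integrable q
s, t, n = 0.0, 2.0, 4
lhs = simplex_integral(q, s, t, n)
rhs = simplex_integral(q, s, t, 1) ** n / math.factorial(n)
print(lhs, rhs)
```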

Remark A3

(On a fundamental condition). The condition on q stated in Lemma A1 and reformulated in Formula (7) is the key to the proof of important results. In fact, this condition is sufficient to ensure that the associated Markov process has no discontinuities of the second type (see [31], p. 227) and, most importantly for the goals of this work, that the trajectories of the associated Markov process are step functions, that is, any trajectory has only a finite number of jumps in any compact subinterval of ; this last point is detailed in Theorem A6.

In view of our main motivation, the following result is crucial.

Theorem A6

(The non-accumulation property of the jump times of a Markov chain). Let the intensities satisfy the condition in the statement of Lemma A1. Then, given the times , we have that:

and so the trajectories of the process are step functions.

Proof.

The property in Formula (A14) does not have an immediate proof. We present a proof based on a result in [62] (p. 160), stating that the condition given by:

guarantees that the process has a stochastically equivalent version that is a step process, meaning that, for any trajectory, the set of jumps of this trajectory has no limit points in the interval , with being the end date of the trajectory. This result is based on a thorough analysis (see [62], pp. 149–159) of the conditions for a Markov process not to have discontinuities of the second type, meaning that the right-hand and left-hand limits exist at every date and for every trajectory. Now, with,

by virtue of the condition on q in Lemma A1 (reformulated more precisely in Formula (7) in the statement of Theorem 1), we have that:

Therefore, for almost all ,

as the series is uniformly convergent and for almost all ,

by Lebesgue’s differentiation theorem. □

Remark A4

(Negative properties). The following negative properties suggest the alternative calibration approach that we propose in Section 3.2. Given, the successive states occupied by the process, we observe that

- the times are not independent;

- the sequence defined by is not a Markov chain.

Appendix B. Semi-Markov Processes: A Short Review

For the reader's convenience, we present a short summary of the most important results on semi-Markov processes (sMp) needed in this work, following [63] (pp. 189–200). The main foundational references for the theory of sMp are [32,64,65]. Important developments can be found in [33,66,67]. Among the many works relevant for applications we refer, for instance, to [68,69,70,71,72,73]. Let us consider a complete probability space . The approach to Markov and semi-Markov processes via kernels is fruitful, and so we are led to the following definitions and results, for which we mainly follow the works in [67] (pp. 7–15) and [33]. Consider a general measurable state space . The σ-algebra may be seen as the observable sets of the state space of the process .

Definition A4

(Semi-Markov transition kernel). A map such that is a semi-Markov transition kernel if it satisfies the following properties.

- (i)

- is measurable with respect to with the Borel σ-algebra of .

- (ii)

- For fixed , is a semistochastic kernel, that is,

- (ii.1)

- For fixed and , the map is a measure and we have ; if we have that is a stochastic kernel.

- (ii.2)

- For a fixed we have that is measurable with respect to .

- (iii)

- For fixed we have that the function is a nondecreasing function, continuous from the right and such that .

- (iv)

- defined to be: is a stochastic kernel.

- (v)

- For any we have that the function defined for by is a probability distribution function.

Now, consider a semi-Markov transition kernel, a continuous time stochastic process defined on this probability space and the natural filtration associated with this process, i.e., is the σ-algebra generated by the variables of the process up to time t. We now consider a sequence of random variables , taking values in a state space (which for our purposes will, in general, be a finite state space and sometimes an infinite one ), the sequence being adapted to the filtration . We also consider an increasing sequence of -stopping times, denoted by and for .

Definition A5

(Markov renewal process). A two dimensional discrete time process with state space verifying,

for all , and almost surely, that is, a homogeneous two dimensional Markov chain, is a Markov renewal process if its transition probabilities are given by:

Remark A5

(Markov chains and Markov renewal processes). The transition probabilities of a Markov renewal process do not depend on the second component; consequently, a Markov renewal process is a particular type of two dimensional Markov chain. The first component of a Markov renewal process is a Markov chain, called the embedded Markov chain, with transition probabilities given by:

Definition A6

(Markov renewal times). The Markov renewal times of the Markov renewal process are defined by

and the probability distribution functions of the Markov renewal times depend on the states of the embedded Markov chain since, by definition, we have

Proposition A1.

Consider a general measurable state space . Let be a semi-Markov transition kernel and the associated stochastic kernel according to Definition A4. Then, there exists a function such that:

Proof.

As, for and , we have , we may conclude that ; hence, the measure is absolutely continuous with respect to the probability measure on and so, by the Radon–Nikodym theorem, there exists a density satisfying Formula (A16). □

Remark A6

(Semi-Markov kernel for a discrete state space). In the case of a discrete state space, say , we may consider the maximal σ-algebra of all the subsets of and, with this choice, a semi-Markov kernel is defined by a matrix function such that

- (i)

- For fixed the function is nondecreasing.

- (ii)

- For fixed the function is a probability distribution function.

- (iii)

- The matrix with is a stochastic matrix.

Definition A7

(Semi-Markov process). The process is a semi-Markov process if:

- (i)

- The process admits a representation given, for , by

- (ii)

- For we have that .

- (iii)

- The process is a Markov renewal process (Mrp), that is, it satisfies
  for all , and almost surely (as it is a conditional expectation).

Proposition A2

(The sMp as a Markov chain). The process is a Markov chain with state space and with semi-Markov transition kernel given by:

Proposition A3

(The embedded Markov chain of the Mrp). The process is a Markov chain with state space Θ with transition probabilities given by:

and is called the embedded Markov chain of the Mrp.

Proposition A4

(The conditional distribution function of the time between two successive jumps). Let be the semi-Markov kernel as in Proposition A2. Let the times between successive jumps be , and let the conditional distribution function of the time between two successive jumps be given by

Then, the semi-Markov kernel verifies,

with as defined in Proposition A3.

Proof.

It is a consequence of Proposition A1. □
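Propositions A3 and A4 suggest how to simulate a Markov renewal process, assuming the kernel of Proposition A4 factors as Q_ij(t) = p_ij F_ij(t): draw the next state from the embedded chain, then draw the sojourn time from F_ij, which may depend on both the current and the next state. The embedded transition matrix and the Gamma sojourn laws with state-pair dependent shapes below are hypothetical.

```python
import numpy as np

def simulate_mrp(P, shape, x0, n_jumps, rng):
    """Simulate (X_n, tau_n), n = 0..n_jumps, of a Markov renewal process.

    P[i, j]     : transition matrix of the embedded Markov chain.
    shape[i, j] : Gamma shape of the sojourn before the jump i -> j
                  (scale 1, so the mean sojourn is shape[i, j]).
    """
    states = np.empty(n_jumps + 1, dtype=int)
    times = np.empty(n_jumps + 1)
    states[0], times[0] = x0, 0.0
    for n in range(n_jumps):
        i = states[n]
        j = rng.choice(P.shape[1], p=P[i])                     # next embedded state
        states[n + 1] = j
        times[n + 1] = times[n] + rng.gamma(shape[i, j], 1.0)  # sojourn ~ F_ij
    return times, states

rng = np.random.default_rng(4)
P = np.array([[0.0, 0.7, 0.3],       # hypothetical embedded chain
              [0.4, 0.0, 0.6],
              [0.5, 0.5, 0.0]])
shape = np.array([[1.0, 2.0, 1.2],   # hypothetical sojourn shapes per pair (i, j)
                  [1.5, 1.0, 3.0],
                  [0.8, 2.5, 1.0]])
times, states = simulate_mrp(P, shape, 0, 20_000, rng)
```

Setting all shapes in a row equal to one and using scale 1/q_i would recover the homogeneous Markov chain case, where the sojourn law depends only on the current state.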

Remark A7

(Homogeneous Markov chains as semi-Markov processes). Let be a homogeneous Markov chain in continuous time with state space and with time-independent transition intensities given by (see Definition A3). Then, by the well-known results on homogeneous Markov chains (see [29], pp. 317–318) and by the representation given by Formula (A22), we have that

is the semi-Markov kernel of a sMp. Thus, comparing Formula (A23) with Formulas (A21) and (A22), we see that the main difference between a sMp and a continuous time Markov process is that, in the sMp case, the conditional distribution function of the time between two successive jumps depends not only on the initial state of the jump but also on its final state, while in the homogeneous Markov chain case it depends only on the initial state.
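For reference, the classical form of this kernel for a homogeneous chain, writing q_i = −q_ii = Σ_{j≠i} q_ij > 0 for a non-absorbing state i, is the following; we assume it agrees with Formula (A23) up to notation.

```latex
Q_{ij}(t) = \frac{q_{ij}}{q_i}\left(1 - e^{-q_i t}\right), \quad j \neq i,\ t \ge 0,
\qquad\text{so that}\qquad
p_{ij} = \frac{q_{ij}}{q_i}, \qquad F_{ij}(t) = 1 - e^{-q_i t}.
```

Here F_ij depends on i only, which is exactly the distinction drawn in the remark.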

Definition A8

(The sojourn time distribution in a state). The sojourn time distribution in the state is defined by:

Its mean value represents the mean sojourn time in state of the sMp .

Definition A9

(Regular sMp). A sMp is regular if the number of jumps of the process in the time interval , given by:

defined for , satisfies, for all ,

Proposition A5

(Jump times of a regular sMp have no accumulation points). Let the sMp be regular. Then, almost surely, and, for any and almost all :

This means that in every compact time interval , for almost all there is only a finite number of times in this interval.

The following fundamental theorem ensures that, for a sMp with a finite state space, the sequence of stopping times does not accumulate in a compact interval.

Theorem A7

(A sufficient condition for regularity of a sMp). Let and be constants such that, for every state , the sojourn time distribution in this state defined in Definition A8 satisfies:

Then, the sMp is regular. In particular, any sMp with a finite state space is regular.

Proof.

See in [74] (p. 88). □

Remark A8

(On the estimation of sMp). The estimation of sMp is dealt with, for instance, in [75,76].

References

- Vajda, S. The stratified semi-stationary population. Biometrika 1947, 34, 243–254.

- Young, A.; Almond, G. Predicting Distributions of Staff. Comput. J. 1961, 3, 246–250.

- Bartholomew, D.J. A multi-stage renewal process. J. R. Statist. Soc. Ser. B 1963, 25, 150–168.

- Bartholomew, D.J. Stochastic Models for Social Processes, 2nd ed.; Wiley Series in Probability and Mathematical Statistics; John Wiley & Sons: London, UK; New York, NY, USA; Sydney, Australia, 1973.

- Bartholomew, D.J. Stochastic Models for Social Processes, 3rd ed.; Wiley Series in Probability and Mathematical Statistics; John Wiley & Sons, Ltd.: Chichester, UK, 1982.

- Gani, J. Formulae for Projecting Enrolments and Degrees Awarded in Universities. J. R. Stat. Soc. Ser. A 1963, 126, 400–409.

- Bowerman, B.; David, H.T.; Isaacson, D. The convergence of Cesaro averages for certain nonstationary Markov chains. Stoch. Process. Appl. 1977, 5, 221–230.

- Vassiliou, P.C.G. Cyclic behaviour and asymptotic stability of nonhomogeneous Markov systems. J. Appl. Probab. 1984, 21, 315–325.

- Vassiliou, P.C.G. Asymptotic variability of nonhomogeneous Markov systems under cyclic behaviour. Eur. J. Oper. Res. 1986, 27, 215–228.

- Dimitriou, V.A.; Georgiou, A.C. Introduction, analysis and asymptotic behavior of a multi-level manpower planning model in a continuous time setting under potential department contraction. Commun. Statist. Theory Methods 2021, 50, 1173–1199.

- Salgado-García, R. Open Markov Chains: Cumulant Dynamics, Fluctuations and Correlations. Entropy 2021, 23, 256.

- Vassiliou, P.C.G.; Papadopoulou, A.A. Nonhomogeneous semi-Markov systems and maintainability of the state sizes. J. Appl. Probab. 1992, 29, 519–534.

- Papadopoulou, A.A.; Vassiliou, P.C.G. Asymptotic behavior of nonhomogeneous semi-Markov systems. Linear Algebra Appl. 1994, 210, 153–198.

- Vassiliou, P.C.G. Asymptotic Behavior of Markov Systems. J. Appl. Probab. 1982, 19, 851–857.

- Vassiliou, P.C.G. Markov Systems in a General State Space. Commun. Stat. Theory Methods 2014, 43, 1322–1339.

- Vassiliou, P.-C.G. Rate of Convergence and Periodicity of the Expected Population Structure of Markov Systems that Live in a General State Space. Mathematics 2020, 8, 1021.

- Vassiliou, P.-C.G. Non-Homogeneous Markov Set Systems. Mathematics 2021, 9, 471.

- McClean, S.I. A continuous-time population model with Poisson recruitment. J. Appl. Probab. 1976, 13, 348–354.

- McClean, S.I. Continuous-time stochastic models of a multigrade population. J. Appl. Probab. 1978, 15, 26–37.

- McClean, S.I. A Semi-Markov Model for a Multigrade Population with Poisson Recruitment. J. Appl. Probab. 1980, 17, 846–852.

- Papadopoulou, A.A.; Vassiliou, P.C.G. Continuous time nonhomogeneous semi-Markov systems. In Semi-Markov Models and Applications (Compiègne, 1998); Kluwer Academic Publishers: Dordrecht, The Netherlands, 1999; pp. 241–251.

- Esquível, M.L.; Fernandes, J.M.; Guerreiro, G.R. On the evolution and asymptotic analysis of open Markov populations: Application to consumption credit. Stoch. Models 2014, 30, 365–389.

- Guerreiro, G.R.; Mexia, J.A.T.; de Fátima Miguens, M. Statistical approach for open bonus malus. Astin Bull. 2014, 44, 63–83.

- Afonso, L.B.; Cardoso, R.M.R.; Egídio dos Reis, A.D.; Guerreiro, G.R. Ruin Probabilities And Capital Requirement for Open Automobile Portfolios With a Bonus-Malus System Based on Claim Counts. J. Risk Insur. 2020, 87, 501–522.

- Esquível, M.L.; Patrício, P.; Guerreiro, G.R. From ODE to Open Markov Chains, via SDE: An application to models for infections in individuals and populations. Comput. Math. Biophys. 2020, 8, 180–197.

- Esquível, M.; Guerreiro, G.; Fernandes, J. Open Markov chain scheme models. REVSTAT 2017, 15, 277–297.

- Esquível, M.L.; Guerreiro, G.R.; Oliveira, M.C.; Corte Real, P. Calibration of Transition Intensities for a Multistate Model: Application to Long-Term Care. Risks 2021, 9, 37.

- Resnick, S.I. Adventures in Stochastic Processes; Birkhäuser: Boston, MA, USA, 1992.

- Rolski, T.; Schmidli, H.; Schmidt, V.; Teugels, J. Stochastic Processes for Insurance and Finance; Wiley Series in Probability and Statistics; John Wiley & Sons Ltd.: Chichester, UK, 1999.

- Iosifescu, M. Finite Markov Processes and Their Applications; Wiley Series in Probability and Mathematical Statistics; John Wiley & Sons, Ltd.: Chichester, UK; Editura Tehnică: Bucharest, Romania, 1980; p. 295.

- Iosifescu, M.; Tăutu, P. Stochastic Processes and Applications in Biology and Medicine. I: Theory; Biomathematics; Editura Academiei RSR: Bucharest, Romania; Springer: Berlin, Germany; New York, NY, USA, 1973; Volume 3, p. 331.

- Pyke, R. Markov renewal processes: Definitions and preliminary properties. Ann. Math. Statist. 1961, 32, 1231–1242.

- Korolyuk, V.S.; Korolyuk, V.V. Stochastic Models of Systems. In Mathematics and its Applications; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1999; Volume 469.

- Kingman, J.F.C. The imbedding problem for finite Markov chains. Probab. Theory Relat. Fields 1962, 1, 14–24.

- Johansen, S. The Imbedding Problem for Finite Markov Chains. In Geometric Methods in System Theory, 1st ed.; Mayne, D.Q., Brockett, R.W., Eds.; D. Reidel Publishing Company: Dordrecht, The Netherlands; Boston, MA, USA, 1973; Volume 1, Chapter 13; pp. 227–237.

- Johansen, S. A central limit theorem for finite semigroups and its application to the imbedding problem for finite state Markov chains. Z. Wahrscheinlichkeitstheorie Verw. Gebiete 1973, 26, 171–190.

- Johansen, S. Some Results on the Imbedding Problem for Finite Markov Chains. J. Lond. Math. Soc. 1974, 2, 345–351.

- Fuglede, B. On the imbedding problem for stochastic and doubly stochastic matrices. Probab. Theory Relat. Fields 1988, 80, 241–260.

- Guerry, M.A. On the Embedding Problem for Discrete-Time Markov Chains. J. Appl. Probab. 2013, 50, 918–930.

- Jia, C. A solution to the reversible embedding problem for finite Markov chains. Stat. Probab. Lett. 2016, 116, 122–130.

- Goodman, G.S. An intrinsic time for non-stationary finite Markov chains. Probab. Theory Relat. Fields 1970, 16, 165–180.

- Singer, B. Estimation of Nonstationary Markov Chains from Panel Data. Sociol. Methodol. 1981, 12, 319–337.

- Lencastre, P.; Raischel, F.; Rogers, T.; Lind, P.G. From empirical data to time-inhomogeneous continuous Markov processes. Phys. Rev. E 2016, 93, 032135.

- Ekhosuehi, V.U. On the use of Cauchy integral formula for the embedding problem of discrete-time Markov chains. Commun. Stat. Theory Methods 2021, 1–15.

- Coddington, E.A.; Levinson, N. Theory of Ordinary Differential Equations; McGraw-Hill Book Company, Inc.: New York, NY, USA; Toronto, ON, Canada; London, UK, 1955.

- Rudin, W. Real and Complex Analysis, 3rd ed.; McGraw-Hill Book Co.: New York, NY, USA, 1987.

- Kurzweil, J. Ordinary differential equations. In Studies in Applied Mechanics; Introduction to the theory of ordinary differential equations in the real domain, Translated from the Czech by Michal Basch; Elsevier Scientific Publishing Co.: Amsterdam, The Netherlands, 1986; Volume 13, p. 440.

- Teschl, G. Ordinary differential equations and dynamical systems. In Graduate Studies in Mathematics; American Mathematical Society: Providence, RI, USA, 2012; Volume 140.

- Nevanlinna, F.; Nevanlinna, R. Absolute Analysis; Translated from the German by Phillip Emig, Die Grundlehren der mathematischen Wissenschaften, Band 102; Springer: New York, NY, USA; Heidelberg, Germany, 1973.

- Severi, F.; Scorza Dragoni, G. Lezioni di analisi. Vol. 3. Equazioni Differenziali Ordinarie e Loro Sistemi, Problemi al Contorno Relativi, Serie Trigonometriche, Applicazioni Geometriche; Cesare Zuffi: Bologna, Italy, 1951.

- Dobrušin, R.L. Generalization of Kolmogorov’s equations for Markov processes with a finite number of possible states. Matematicheskii Sbornik 1953, 33, 567–596.

- Pritchard, D.J. Modeling Disability in Long-Term Care Insurance. N. Am. Actuar. J. 2006, 10, 48–75.

- Kingman, J.F.C. Ergodic properties of continuous-time Markov processes and their discrete skeletons. Proc. Lond. Math. Soc. 1963, 13, 593–604.

- Conner, H. A note on limit theorems for Markov branching processes. Proc. Am. Math. Soc. 1967, 18, 76–86.

- Israel, R.B.; Rosenthal, J.S.; Wei, J.Z. Finding generators for Markov chains via empirical transition matrices, with applications to credit ratings. Math. Financ. 2001, 11, 245–265.

- Guerreiro, G.R.; Mexia, J.A.T. Stochastic vortices in periodically reclassified populations. Discuss. Math. Probab. Stat. 2008, 28, 209–227.

- Feller, W. An Introduction to Probability Theory and Its Applications. Vol. I, 3rd ed.; John Wiley & Sons, Inc.: New York, NY, USA; London, UK; Sydney, Australia, 1968.

- Serfozo, R. Convergence of Lebesgue integrals with varying measures. Sankhyā Ser. A 1982, 44, 380–402.

- Billingsley, P. Convergence of Probability Measures, 2nd ed.; Wiley Series in Probability and Statistics: Probability and Statistics; John Wiley & Sons, Inc.: New York, NY, USA, 1999.

- Durrett, R. Probability: Theory and Examples. In Cambridge Series in Statistical and Probabilistic Mathematics; Cambridge University Press: Cambridge, UK, 2019; Volume 49.

- Skorokhod, A.V. Lectures on the Theory of Stochastic Processes; VSP: Utrecht, The Netherlands; TBiMC Scientific Publishers: Kiev, Ukraine, 1996.

- Dynkin, E.B. Theory of Markov Processes; Translated from the Russian by D. E. Brown and edited by T. Köváry, Reprint of the 1961 English translation; Dover Publications, Inc.: Mineola, NY, USA, 2006.

- Iosifescu, M.; Limnios, N.; Oprişan, G. Introduction to Stochastic Models; Applied Stochastic Methods Series; Translated from the 2007 French original by Vlad Barbu; ISTE: London, UK; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2010.

- Pyke, R. Markov renewal processes with finitely many states. Ann. Math. Statist. 1961, 32, 1243–1259.

- Feller, W. On semi-Markov processes. Proc. Nat. Acad. Sci. USA 1964, 51, 653–659.

- Kurtz, T.G. Comparison of semi-Markov and Markov processes. Ann. Math. Statist. 1971, 42, 991–1002.

- Korolyuk, V.; Swishchuk, A. Semi-Markov random evolutions. In Mathematics and its Applications; Translated from the 1992 Russian original by V. Zayats and revised by the authors; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1995; Volume 308.

- Janssen, J.; de Dominicis, R. Finite non-homogeneous semi-Markov processes: Theoretical and computational aspects. Insur. Math. Econ. 1984, 3, 157–165.

- Janssen, J.; Limnios, N. (Eds.) Semi-Markov Models and Applications; Selected papers from the 2nd International Symposium on Semi-Markov Models: Theory and Applications held in Compiègne, December 1998; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1999.

- Janssen, J.; Manca, R. Applied Semi-Markov Processes; Springer: New York, NY, USA, 2006.

- Janssen, J.; Manca, R. Semi-Markov Risk Models for Finance, Insurance and Reliability; Springer: New York, NY, USA, 2007.

- Barbu, V.S.; Limnios, N. Semi-Markov chains and hidden semi-Markov models toward applications. In Lecture Notes in Statistics; Springer: New York, NY, USA, 2008; Volume 191.

- Grabski, F. Semi-Markov Processes: Applications in System Reliability and Maintenance; Elsevier: Amsterdam, The Netherlands, 2015.

- Ross, S.M. Applied Probability Models with Optimization Applications; Reprint of the 1970 original; Dover Publications, Inc.: New York, NY, USA, 1992.

- Moore, E.H.; Pyke, R. Estimation of the transition distributions of a Markov renewal process. Ann. Inst. Stat. Math. 1968, 20, 411.

- Ouhbi, B.; Limnios, N. Nonparametric Estimation for Semi-Markov Processes Based on its Hazard Rate Functions. Stat. Inference Stoch. Process. 1999, 2, 151–173.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).