The Implications of Artificial Intelligence in Pedodontics: A Scoping Review of Evidence-Based Literature

Abstract

1. Introduction

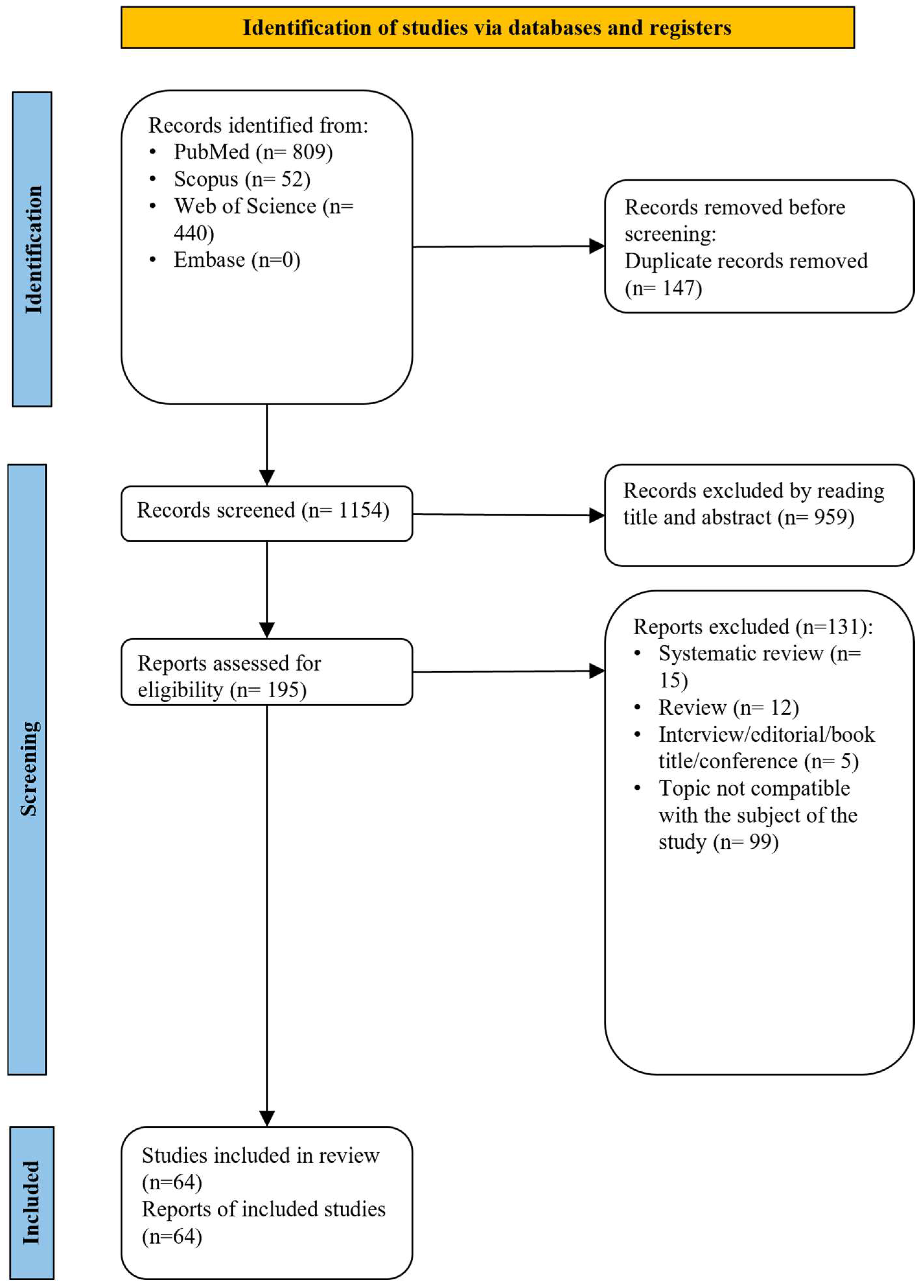

2. Materials and Methods

2.1. Research Question

2.2. Eligibility Criteria

2.3. Literature Sources and Search Parameters

2.4. Data Cleaning

2.4.1. Study Selection

2.4.2. Data Extraction

2.5. Information Synthesis

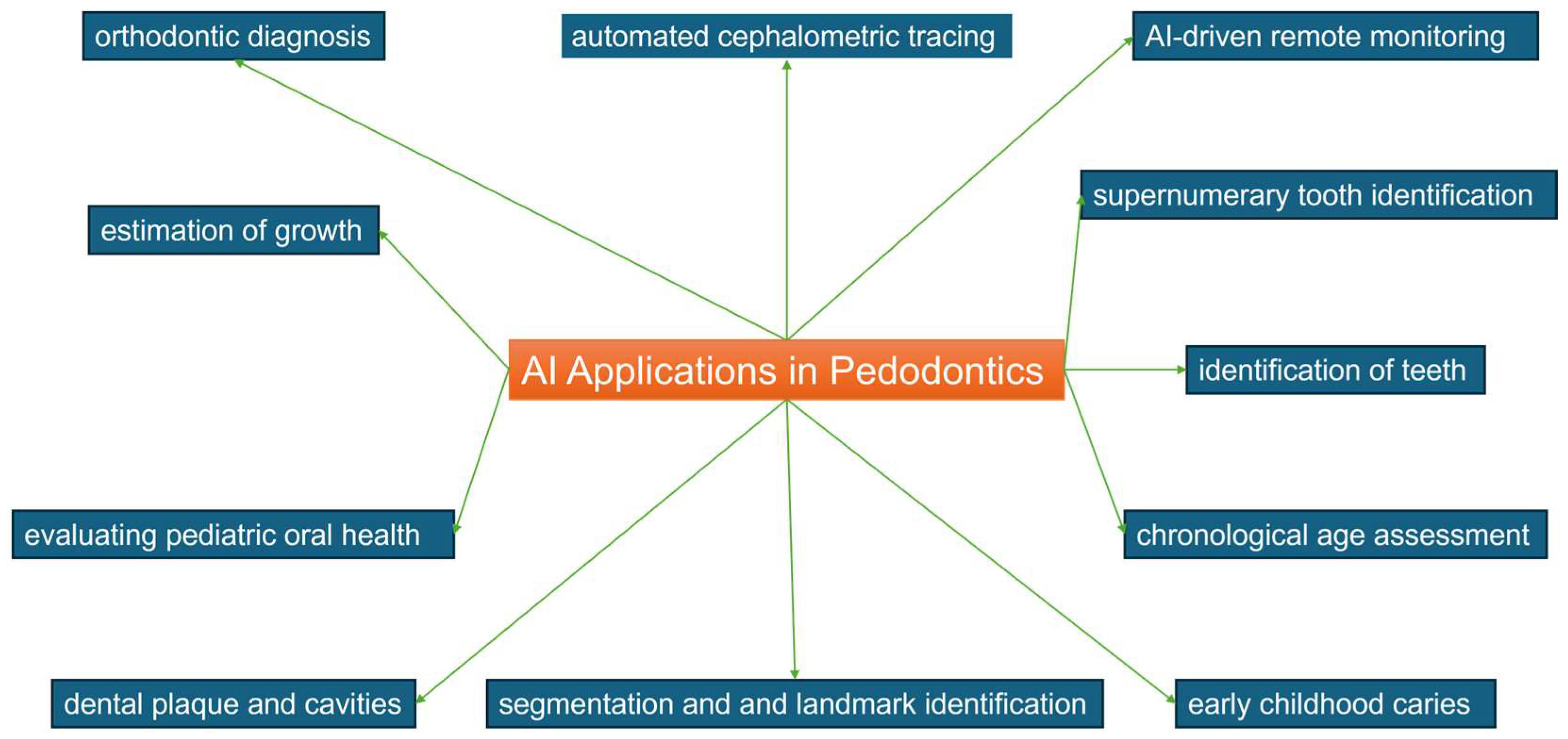

3. Results

3.1. Study Inclusion

3.2. Study Characteristics

4. Discussion

4.1. Orthodontic Diagnosis

4.2. Automated Cephalometric Tracing

4.3. Segmentation and Landmark Identification

4.4. AI-Driven Remote Monitoring

4.5. Estimation of Growth and Development

4.6. Dental Plaque and Cavities

4.7. Evaluating Pediatric Oral Health through Toolkits Developed by Machine Learning

4.8. Supernumerary Tooth Identification

4.9. Early Childhood Caries

4.10. Chronological Age Assessment

4.11. Detecting Deciduous and Young Permanent Teeth

4.12. Application of AI in Adult and Pediatric Dentistry

4.13. Strengths and Limitations

4.14. Future Applications of AI

- AI-assisted diagnosis systems: Sophisticated AI algorithms could help pediatric dentists diagnose caries, dental anomalies, and other oral pathologies in children at an early stage. To detect signs of dental problems quickly and accurately, these systems might draw on natural language processing, radiographic image analysis, and other clinical data.

- Personalization of treatment: AI might be used to tailor treatment regimens to the unique needs of each child. This could involve identifying the most suitable treatment plan for a given dental condition while taking the patient’s age, general health, and preferences into account.

- Virtual assistance and tele-dentistry: AI-driven systems could be developed to facilitate remote dental consultations and telemonitoring of pediatric patients. These options may be especially helpful for families who lack easy access to dental care or who live in remote areas.

- Automated learning and patient education: AI technologies could be used to create engaging, customized educational programs that teach children good oral hygiene practices, explain dental treatments in terms they can understand, and motivate them to take an active role in their own oral healthcare.

- Patient behavior management: AI could be used to develop algorithms that help guide young patients’ behavior during dental visits. By drawing on interactive technologies and approaches grounded in child psychology, such systems could provide children with a calm, reassuring environment throughout treatment.

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Tandon, D.; Rajawat, J. Present and future of artificial intelligence in dentistry. J. Oral Biol. Craniofacial Res. 2020, 10, 391–396. [Google Scholar] [CrossRef] [PubMed]

- Mine, Y.; Iwamoto, Y.; Okazaki, S.; Nakamura, K.; Takeda, S.; Peng, T.Y.; Mitsuhata, C.; Kakimoto, N.; Kozai, K.; Murayama, T. Detecting the presence of supernumerary teeth during the early mixed dentition stage using deep learning algorithms: A pilot study. Int. J. Paediatr. Dent. 2022, 32, 678–685. [Google Scholar] [CrossRef] [PubMed]

- Hutson, M. AI Glossary: Artificial intelligence, in so many words. Science 2017, 357, 19. [Google Scholar] [CrossRef]

- Kunz, F.; Stellzig-Eisenhauer, A.; Boldt, J. Applications of Artificial Intelligence in Orthodontics: An Overview and Perspective Based on the Current State of the Art. Appl. Sci. 2023, 13, 3850. [Google Scholar] [CrossRef]

- Soegiantho, P.; Suryawinata, P.G.; Tran, W.; Kujan, O.; Koyi, B.; Khzam, N.; Algarves Miranda, L. Survival of Single Immediate Implants and Reasons for Loss: A Systematic Review. Prosthesis 2023, 5, 378–424. [Google Scholar] [CrossRef]

- Janiesch, C.; Zschech, P.; Heinrich, K. Machine learning and deep learning. Electron. Mark. 2021, 31, 685–695. [Google Scholar] [CrossRef]

- Dave, V.S.; Dutta, K. Neural network based models for software effort estimation: A review. Artif. Intell. Rev. 2014, 42, 295–307. [Google Scholar] [CrossRef]

- Gajic, M.; Vojinovic, J.; Kalevski, K.; Pavlovic, M.; Kolak, V.; Vukovic, B.; Mladenovic, R.; Aleksic, E. Analysis of the Impact of Oral Health on Adolescent Quality of Life Using Standard Statistical Methods and Artificial Intelligence Algorithms. Children 2021, 8, 1156. [Google Scholar] [CrossRef]

- Albayrak, B.; Özdemir, G.; Us, Y.Ö.; Yüzbasioglu, E. Artificial intelligence technologies in dentistry. J. Exp. Clin. Med. 2021, 38, 188–194. [Google Scholar] [CrossRef]

- Nguyen, T.T.; Larrivée, N.; Lee, A.; Bilaniuk, O.; Durand, R. Use of Artificial Intelligence in Dentistry: Current Clinical Trends and Research Advances. J. Can. Dent. Assoc. 2021, 87, l7. [Google Scholar] [CrossRef]

- Kaya, E.; Gunec, H.G.; Aydin, K.C.; Urkmez, E.S.; Duranay, R.; Ates, H.F. A deep learning approach to permanent tooth germ detection on pediatric panoramic radiographs. Imaging Sci. Dent. 2022, 52, 275–281. [Google Scholar] [CrossRef] [PubMed]

- Allareddy, V.; Rengasamy Venugopalan, S.; Nalliah, R.P.; Caplin, J.L.; Lee, M.K.; Allareddy, V. Orthodontics in the era of big data analytics. Orthod. Craniofacial Res. 2019, 22 (Suppl. S1), 8–13. [Google Scholar] [CrossRef] [PubMed]

- Agrawal, P.; Nikhade, P. Artificial Intelligence in Dentistry: Past, Present, and Future. Cureus 2022, 14, e27405. [Google Scholar] [CrossRef] [PubMed]

- Kumar, Y.; Koul, A.; Singla, R.; Ijaz, M.F. Artificial intelligence in disease diagnosis: A systematic literature review, synthesizing framework and future research agenda. J. Ambient Intell. Humaniz. Comput. 2023, 14, 8459–8486. [Google Scholar] [CrossRef] [PubMed]

- Bichu, Y.M.; Hansa, I.; Bichu, A.Y.; Premjani, P.; Flores-Mir, C.; Vaid, N.R. Applications of artificial intelligence and machine learning in orthodontics: A scoping review. Prog. Orthod. 2021, 22, 18. [Google Scholar] [CrossRef]

- Bouletreau, P.; Makaremi, M.; Ibrahim, B.; Louvrier, A.; Sigaux, N. Artificial Intelligence: Applications in orthognathic surgery. J. Stomatol. Oral Maxillofac. Surg. 2019, 120, 347–354. [Google Scholar] [CrossRef] [PubMed]

- Peters, M.D.J.; Marnie, C.; Tricco, A.C.; Pollock, D.; Munn, Z.; Alexander, L.; McInerney, P.; Godfrey, C.M.; Khalil, H. Updated methodological guidance for the conduct of scoping reviews. JBI Evid. Synth. 2020, 18, 2119–2126. [Google Scholar] [CrossRef]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. Br. Med. J. 2021, 372, n71. [Google Scholar] [CrossRef]

- Vishwanathaiah, S.; Fageeh, H.N.; Khanagar, S.B.; Maganur, P.C. Artificial Intelligence Its Uses and Application in Pediatric Dentistry: A Review. Biomedicines 2023, 11, 788. [Google Scholar] [CrossRef]

- Ahn, Y.; Hwang, J.J.; Jung, Y.H.; Jeong, T.; Shin, J. Automated Mesiodens Classification System Using Deep Learning on Panoramic Radiographs of Children. Diagnostics 2021, 11, 1477. [Google Scholar] [CrossRef]

- Amasya, H.; Cesur, E.; Yıldırım, D.; Orhan, K. Validation of cervical vertebral maturation stages: Artificial intelligence vs human observer visual analysis. Am. J. Orthod. Dentofac. Orthop. 2020, 158, e173–e179. [Google Scholar] [CrossRef] [PubMed]

- Arık, S.; Ibragimov, B.; Xing, L. Fully automated quantitative cephalometry using convolutional neural networks. J. Med. Imaging 2017, 4, 014501. [Google Scholar] [CrossRef] [PubMed]

- Bağ, İ.; Bilgir, E.; Bayrakdar, İ.; Baydar, O.; Atak, F.M.; Çelik, Ö.; Orhan, K. An artificial intelligence study: Automatic description of anatomic landmarks on panoramic radiographs in the pediatric population. BMC Oral Health 2023, 23, 764. [Google Scholar] [CrossRef]

- Bumann, E.E.; Al-Qarni, S.; Chandrashekar, G.; Sabzian, R.; Bohaty, B.; Lee, Y. A novel collaborative learning model for mixed dentition and fillings segmentation in panoramic radiographs. J. Dent. 2023, 140, 104779. [Google Scholar] [CrossRef]

- Bunyarit, S.S.; Nambiar, P.; Naidu, M.K.; Ying, R.P.Y.; Asif, M.K. Dental age estimation of Malay children and adolescents: Chaillet and Demirjian’s data improved using artificial multilayer perceptron neural network. Pediatr. Dent. J. 2021, 31, 176–185. [Google Scholar] [CrossRef]

- Caliskan, S.; Tuloglu, N.; Celik, O.; Ozdemir, C.; Kizilaslan, S.; Bayrak, S. A pilot study of a deep learning approach to submerged primary tooth classification and detection. Int. J. Comput. Dent. 2021, 24, 1–9. [Google Scholar] [CrossRef] [PubMed]

- Chen, S.; Wang, L.; Li, G.; Wu, T.H.; Diachina, S.; Tejera, B.; Kwon, J.J.; Lin, F.C.; Lee, Y.T.; Xu, T.; et al. Machine learning in orthodontics: Introducing a 3D auto-segmentation and auto-landmark finder of CBCT images to assess maxillary constriction in unilateral impacted canine patients. Angle Orthod. 2020, 90, 77–84. [Google Scholar] [CrossRef]

- Dong, W.; You, M.; He, T.; Dai, J.; Tang, Y.; Shi, Y.; Guo, J. An automatic methodology for full dentition maturity staging from OPG images using deep learning. Appl. Intell. 2023, 53, 29514–29536. [Google Scholar] [CrossRef]

- Duman, S.; Yilmaz, E.F.; Eser, G.; Çelik, Ö.; Bayrakdar, I.S.; Bilgir, E.; Costa, A.L.F.; Jagtap, R.; Orhan, K. Detecting the presence of taurodont teeth on panoramic radiographs using a deep learning-based convolutional neural network algorithm. Oral Radiol. 2023, 39, 207–214. [Google Scholar] [CrossRef]

- Felsch, M.; Meyer, O.; Schlickenrieder, A.; Engels, P.; Schönewolf, J.; Zöllner, F.; Heinrich-Weltzien, R.; Hesenius, M.; Hickel, R.; Gruhn, V.; et al. Detection and localization of caries and hypomineralization on dental photographs with a vision transformer model. NPJ Digit. Med. 2023, 6, 198. [Google Scholar] [CrossRef]

- Gomez-Rios, I.; Egea-Lopez, E.; Ortiz Ruiz, A.J. ORIENTATE: Automated machine learning classifiers for oral health prediction and research. BMC Oral Health 2023, 23, 408. [Google Scholar] [CrossRef] [PubMed]

- Ha, E.-G.; Jeon, K.J.; Kim, Y.H.; Kim, J.-Y.; Han, S.-S. Automatic detection of mesiodens on panoramic radiographs using artificial intelligence. Sci. Rep. 2021, 11, 23061. [Google Scholar] [CrossRef] [PubMed]

- Hansa, I.; Katyal, V.; Ferguson, D.J.; Vaid, N. Outcomes of clear aligner treatment with and without Dental Monitoring: A retrospective cohort study. Am. J. Orthod. Dentofac. Orthop. 2021, 159, 453–459. [Google Scholar] [CrossRef]

- Hansa, I.; Katyal, V.; Semaan, S.; Coyne, R.; Vaid, N. Artificial Intelligence Driven Remote Monitoring of orthodontic patients: Clinical Applicability and Rationale. Semin. Orthod. 2021, 27, 138–156. [Google Scholar] [CrossRef]

- Hwang, H.W.; Park, J.H.; Moon, J.H.; Yu, Y.; Kim, H.; Her, S.B.; Srinivasan, G.; Aljanabi, M.N.A.; Donatelli, R.E.; Lee, S.J. Automated identification of cephalometric landmarks: Part 2-Might it be better than human? Angle Orthod. 2020, 90, 69–76. [Google Scholar] [CrossRef] [PubMed]

- Iglovikov, V.; Rakhlin, A.; Kalinin, A.A.; Shvets, A. Pediatric Bone Age Assessment Using Deep Convolutional Neural Networks. bioRxiv 2018, 234120. [Google Scholar] [CrossRef]

- Karhade, D.S.; Roach, J.; Shrestha, P.; Simancas-Pallares, M.A.; Ginnis, J.; Burk, Z.J.S.; Ribeiro, A.A.; Cho, H.; Wu, D.; Divaris, K. An Automated Machine Learning Classifier for Early Childhood Caries. Pediatr. Dent. 2021, 43, 191–197. [Google Scholar] [PubMed]

- Kaya, E.; Gunec, H.G.; Gokyay, S.S.; Kutal, S.; Gulum, S.; Ates, H.F. Proposing a CNN Method for Primary and Permanent Tooth Detection and Enumeration on Pediatric Dental Radiographs. J. Clin. Pediatr. Dent. 2022, 46, 293–298. [Google Scholar] [CrossRef] [PubMed]

- Kaya, E.; Güneç, H.G.; Ürkmez, E.; Cesur Aydın, K.; Ateş, H.F. Deep Learning for Diagnostic Charting on Pediatric Panoramic Radiographs. Int. J. Comput. Dent. 2023, 52, 275. [Google Scholar] [CrossRef]

- Kilic, M.C.; Bayrakdar, I.S.; Çelik, Ö.; Bilgir, E.; Orhan, K.; Aydin, O.B.; Kaplan, F.A.; Saglam, H.; Odabas, A.; Aslan, A.F.; et al. Artificial intelligence system for automatic deciduous tooth detection and numbering in panoramic radiographs. Dentomaxillofacial Radiol. 2021, 50, 20200172. [Google Scholar] [CrossRef]

- Kim, D.W.; Kim, J.; Kim, T.; Kim, T.; Kim, Y.J.; Song, I.S.; Ahn, B.; Choo, J.; Lee, D.Y. Prediction of hand-wrist maturation stages based on cervical vertebrae images using artificial intelligence. Orthod. Craniofacial Res. 2021, 24 (Suppl. S2), 68–75. [Google Scholar] [CrossRef] [PubMed]

- Kim, J.; Hwang, J.J.; Jeong, T.; Cho, B.H.; Shin, J. Deep learning-based identification of mesiodens using automatic maxillary anterior region estimation in panoramic radiography of children. Dentomaxillofacial Radiol. 2022, 51, 20210528. [Google Scholar] [CrossRef] [PubMed]

- Kök, H.; Acilar, A.M.; İzgi, M.S. Usage and comparison of artificial intelligence algorithms for determination of growth and development by cervical vertebrae stages in orthodontics. Prog. Orthod. 2019, 20, 41. [Google Scholar] [CrossRef] [PubMed]

- Koopaie, M.; Salamati, M.; Montazeri, R.; Davoudi, M.; Kolahdooz, S. Salivary cystatin S levels in children with early childhood caries in comparison with caries-free children; statistical analysis and machine learning. BMC Oral Health 2021, 21, 650. [Google Scholar] [CrossRef] [PubMed]

- Kunz, F.; Stellzig-Eisenhauer, A.; Zeman, F.; Boldt, J. Artificial intelligence in orthodontics: Evaluation of a fully automated cephalometric analysis using a customized convolutional neural network. J. Orofac. Orthop. 2020, 81, 52–68. [Google Scholar] [CrossRef] [PubMed]

- Kuwada, C.; Ariji, Y.; Fukuda, M.; Kise, Y.; Fujita, H.; Katsumata, A.; Ariji, E. Deep learning systems for detecting and classifying the presence of impacted supernumerary teeth in the maxillary incisor region on panoramic radiographs. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2020, 130, 464–469. [Google Scholar] [CrossRef] [PubMed]

- Larson, D.B.; Chen, M.C.; Lungren, M.P.; Halabi, S.S.; Stence, N.V.; Langlotz, C.P. Performance of a Deep-Learning Neural Network Model in Assessing Skeletal Maturity on Pediatric Hand Radiographs. Radiology 2018, 287, 313–322. [Google Scholar] [CrossRef] [PubMed]

- Lee, H.; Tajmir, S.; Lee, J.; Zissen, M.; Yeshiwas, B.A.; Alkasab, T.K.; Choy, G.; Do, S. Fully Automated Deep Learning System for Bone Age Assessment. J. Digit. Imaging 2017, 30, 427–441. [Google Scholar] [CrossRef] [PubMed]

- Lee, J.-H.; Yu, H.-J.; Kim, M.-J.; Kim, J.-W.; Choi, J. Automated cephalometric landmark detection with confidence regions using Bayesian convolutional neural networks. BMC Oral Health 2020, 20, 270. [Google Scholar] [CrossRef]

- Lee, J.H.; Han, S.S.; Kim, Y.H.; Lee, C.; Kim, I. Application of a fully deep convolutional neural network to the automation of tooth segmentation on panoramic radiographs. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2020, 129, 635–642. [Google Scholar] [CrossRef]

- Lee, Y.-H.; Won, J.H.; Auh, Q.S.; Noh, Y.-K. Age group prediction with panoramic radiomorphometric parameters using machine learning algorithms. Sci. Rep. 2022, 12, 11703. [Google Scholar] [CrossRef] [PubMed]

- Lo Giudice, A.; Ronsivalle, V.; Spampinato, C.; Leonardi, R. Fully automatic segmentation of the mandible based on convolutional neural networks (CNNs). Orthod. Craniofacial Res. 2021, 24 (Suppl. S2), 100–107. [Google Scholar] [CrossRef] [PubMed]

- Mahto, R.K.; Kafle, D.; Giri, A.; Luintel, S.; Karki, A. Evaluation of fully automated cephalometric measurements obtained from web-based artificial intelligence driven platform. BMC Oral Health 2022, 22, 132. [Google Scholar] [CrossRef] [PubMed]

- Mladenovic, R.; Arsic, Z.; Velickovic, S.; Paunovic, M. Assessing the Efficacy of AI Segmentation in Diagnostics of Nine Supernumerary Teeth in a Pediatric Patient. Diagnostics 2023, 13, 3563. [Google Scholar] [CrossRef]

- Mohammad-Rahimi, H.; Motamedian, S.R.; Nadimi, M.; Hassanzadeh-Samani, S.; Minabi, M.A.S.; Mahmoudinia, E.; Lee, V.Y.; Rohban, M.H. Deep learning for the classification of cervical maturation degree and pubertal growth spurts: A pilot study. Korean J. Orthod. 2022, 52, 112–122. [Google Scholar] [CrossRef] [PubMed]

- Montúfar, J.; Romero, M.; Scougall-Vilchis, R.J. Automatic 3-dimensional cephalometric landmarking based on active shape models in related projections. Am. J. Orthod. Dentofac. Orthop. 2018, 153, 449–458. [Google Scholar] [CrossRef] [PubMed]

- Niño-Sandoval, T.C.; Guevara Perez, S.V.; González, F.A.; Jaque, R.A.; Infante-Contreras, C. An automatic method for skeletal patterns classification using craniomaxillary variables on a Colombian population. Forensic Sci. Int. 2016, 261, e151–e156. [Google Scholar] [CrossRef]

- Nishimoto, S.; Sotsuka, Y.; Kawai, K.; Ishise, H.; Kakibuchi, M. Personal Computer-Based Cephalometric Landmark Detection With Deep Learning, Using Cephalograms on the Internet. J. Craniofacial Surg. 2019, 30, 91–95. [Google Scholar] [CrossRef]

- Pang, L.; Wang, K.; Tao, Y.; Zhi, Q.; Zhang, J.; Lin, H. A New Model for Caries Risk Prediction in Teenagers Using a Machine Learning Algorithm Based on Environmental and Genetic Factors. Front. Genet. 2021, 12, 636867. [Google Scholar] [CrossRef]

- Park, J.H.; Hwang, H.W.; Moon, J.H.; Yu, Y.; Kim, H.; Her, S.B.; Srinivasan, G.; Aljanabi, M.N.A.; Donatelli, R.E.; Lee, S.J. Automated identification of cephalometric landmarks: Part 1-Comparisons between the latest deep-learning methods YOLOV3 and SSD. Angle Orthod. 2019, 89, 903–909. [Google Scholar] [CrossRef]

- Park, Y.-H.; Kim, S.-H.; Choi, Y.-Y. Prediction Models of Early Childhood Caries Based on Machine Learning Algorithms. Int. J. Environ. Res. Public Health 2021, 18, 8613. [Google Scholar] [CrossRef] [PubMed]

- Portella, P.D.; de Oliveira, L.F.; Ferreira, M.F.C.; Dias, B.C.; de Souza, J.F.; Assunção, L. Improving accuracy of early dental carious lesions detection using deep learning-based automated method. Clin. Oral Investig. 2023, 27, 7663–7670. [Google Scholar] [CrossRef] [PubMed]

- Ramos-Gomez, F.; Marcus, M.; Maida, C.A.; Wang, Y.; Kinsler, J.J.; Xiong, D.; Lee, S.Y.; Hays, R.D.; Shen, J.; Crall, J.J.; et al. Using a Machine Learning Algorithm to Predict the Likelihood of Presence of Dental Caries among Children Aged 2 to 7. Dent. J. 2021, 9, 141. [Google Scholar] [CrossRef]

- Rauf, A.M.; Mahmood, T.M.A.; Mohammed, M.H.; Omer, Z.Q.; Kareem, F.A. Orthodontic Implementation of Machine Learning Algorithms for Predicting Some Linear Dental Arch Measurements and Preventing Anterior Segment Malocclusion: A Prospective Study. Medicina 2023, 59, 1973. [Google Scholar] [CrossRef] [PubMed]

- Seo, H.; Hwang, J.; Jeong, T.; Shin, J. Comparison of Deep Learning Models for Cervical Vertebral Maturation Stage Classification on Lateral Cephalometric Radiographs. J. Clin. Med. 2021, 10, 3591. [Google Scholar] [CrossRef]

- Spampinato, C.; Palazzo, S.; Giordano, D.; Aldinucci, M.; Leonardi, R. Deep learning for automated skeletal bone age assessment in X-ray images. Med. Image Anal. 2017, 36, 41–51. [Google Scholar] [CrossRef]

- Tajmir, S.H.; Lee, H.; Shailam, R.; Gale, H.I.; Nguyen, J.C.; Westra, S.J.; Lim, R.; Yune, S.; Gee, M.S.; Do, S. Artificial intelligence-assisted interpretation of bone age radiographs improves accuracy and decreases variability. Skelet. Radiol. 2019, 48, 275–283. [Google Scholar] [CrossRef] [PubMed]

- Tanikawa, C.; Yagi, M.; Takada, K. Automated cephalometry: System performance reliability using landmark-dependent criteria. Angle Orthod. 2009, 79, 1037–1046. [Google Scholar] [CrossRef] [PubMed]

- Toledo Reyes, L.; Knorst, J.K.; Ortiz, F.R.; Brondani, B.; Emmanuelli, B.; Saraiva Guedes, R.; Mendes, F.M.; Ardenghi, T.M. Early Childhood Predictors for Dental Caries: A Machine Learning Approach. J. Dent. Res. 2023, 102, 999–1006. [Google Scholar] [CrossRef]

- Vila-Blanco, N.; Carreira, M.J.; Varas-Quintana, P.; Balsa-Castro, C.; Tomás, I. Deep Neural Networks for Chronological Age Estimation From OPG Images. IEEE Trans. Med. Imaging 2020, 39, 2374–2384. [Google Scholar] [CrossRef]

- Wang, L.; Gao, Y.; Shi, F.; Li, G.; Chen, K.C.; Tang, Z.; Xia, J.J.; Shen, D. Automated segmentation of dental CBCT image with prior-guided sequential random forests. Med. Phys. 2016, 43, 336. [Google Scholar] [CrossRef]

- Wang, X.; Cai, B.; Cao, Y.; Zhou, C.; Yang, L.; Liu, R.; Long, X.; Wang, W.; Gao, D.; Bao, B. Objective method for evaluating orthodontic treatment from the lay perspective: An eye-tracking study. Am. J. Orthod. Dentofac. Orthop. 2016, 150, 601–610. [Google Scholar] [CrossRef]

- Wang, Y.; Hays, R.D.; Marcus, M.; Maida, C.A.; Shen, J.; Xiong, D.; Coulter, I.D.; Lee, S.Y.; Spolsky, V.W.; Crall, J.J.; et al. Developing Children’s Oral Health Assessment Toolkits Using Machine Learning Algorithm. JDR Clin. Transl. Res. 2020, 5, 233–243. [Google Scholar] [CrossRef]

- You, W.; Hao, A.; Li, S.; Wang, Y.; Xia, B. Deep learning-based dental plaque detection on primary teeth: A comparison with clinical assessments. BMC Oral Health 2020, 20, 141. [Google Scholar] [CrossRef] [PubMed]

- You, W.Z.; Hao, A.M.; Li, S.; Zhang, Z.Y.; Li, R.Z.; Sun, R.Q.; Wang, Y.; Xia, B. Deep learning-based dental plaque detection on permanent teeth and the influenced factors. Zhonghua Kou Qiang Yi Xue Za Zhi 2021, 56, 665–671. [Google Scholar] [CrossRef]

- Zaborowicz, K.; Biedziak, B.; Olszewska, A.; Zaborowicz, M. Tooth and Bone Parameters in the Assessment of the Chronological Age of Children and Adolescents Using Neural Modelling Methods. Sensors 2021, 21, 6008. [Google Scholar] [CrossRef] [PubMed]

- Zaborowicz, M.; Zaborowicz, K.; Biedziak, B.; Garbowski, T. Deep Learning Neural Modelling as a Precise Method in the Assessment of the Chronological Age of Children and Adolescents Using Tooth and Bone Parameters. Sensors 2022, 22, 637. [Google Scholar] [CrossRef]

- Zaorska, K.; Szczapa, T.; Borysewicz-Lewicka, M.; Nowicki, M.; Gerreth, K. Prediction of Early Childhood Caries Based on Single Nucleotide Polymorphisms Using Neural Networks. Genes 2021, 12, 462. [Google Scholar] [CrossRef] [PubMed]

- Zhou, J.; Zhou, H.; Pu, L.; Gao, Y.; Tang, Z.; Yang, Y.; You, M.; Yang, Z.; Lai, W.; Long, H. Development of an Artificial Intelligence System for the Automatic Evaluation of Cervical Vertebral Maturation Status. Diagnostics 2021, 11, 2200. [Google Scholar] [CrossRef]

- Gillot, M.; Miranda, F.; Baquero, B.; Ruellas, A.; Gurgel, M.; Al Turkestani, N.; Anchling, L.; Hutin, N.; Biggs, E.; Yatabe, M.; et al. Automatic landmark identification in cone-beam computed tomography. Orthod. Craniofacial Res. 2023, 26, 560–567. [Google Scholar] [CrossRef]

- Lo Giudice, A.; Ronsivalle, V.; Santonocito, S.; Lucchese, A.; Venezia, P.; Marzo, G.; Leonardi, R.; Quinzi, V. Digital analysis of the occlusal changes and palatal morphology using elastodontic devices. A prospective clinical study including Class II subjects in mixed dentition. Eur. J. Paediatr. Dent. 2022, 23, 275–280. [Google Scholar] [CrossRef] [PubMed]

- Fichera, G.; Martina, S.; Palazzo, G.; Musumeci, R.; Leonardi, R.; Isola, G.; Lo Giudice, A. New Materials for Orthodontic Interceptive Treatment in Primary to Late Mixed Dentition. A Retrospective Study Using Elastodontic Devices. Materials 2021, 14, 1695. [Google Scholar] [CrossRef] [PubMed]

- Lo Giudice, A.; Ronsivalle, V.; Conforte, C.; Marzo, G.; Lucchese, A.; Leonardi, R.; Isola, G. Palatal changes after treatment of functional posterior cross-bite using elastodontic appliances: A 3D imaging study using deviation analysis and surface-to-surface matching technique. BMC Oral Health 2023, 23, 68. [Google Scholar] [CrossRef] [PubMed]

- Proffit, W.R.; Fields, H.; Larson, B.; Sarver, D. Contemporary Orthodontics-E-Book; Elsevier Health Sciences: Amsterdam, The Netherlands, 2018. [Google Scholar]

- Murata, S.; Lee, C.; Tanikawa, C.; Date, S. Towards a Fully Automated Diagnostic System for Orthodontic Treatment in Dentistry. In Proceedings of the 2017 IEEE 13th International Conference on e-Science (e-Science), Auckland, New Zealand, 24–27 October 2017; pp. 1–8. [Google Scholar]

- Yagi, M.; Ohno, H.; Takada, K. Decision-making system for orthodontic treatment planning based on direct implementation of expertise knowledge. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2010, 2010, 2894–2897. [Google Scholar] [CrossRef] [PubMed]

- Ronsivalle, V.; Isola, G.; Lo Re, G.; Boato, M.; Leonardi, R.; Lo Giudice, A. Analysis of maxillary asymmetry before and after treatment of functional posterior cross-bite: A retrospective study using 3D imaging system and deviation analysis. Prog. Orthod. 2023, 24, 41. [Google Scholar] [CrossRef] [PubMed]

- Kim, B.M.; Kang, B.Y.; Kim, H.G.; Baek, S.H. Prognosis prediction for Class III malocclusion treatment by feature wrapping method. Angle Orthod. 2009, 79, 683–691. [Google Scholar] [CrossRef] [PubMed]

- Auconi, P.; Caldarelli, G.; Scala, A.; Ierardo, G.; Polimeni, A. A network approach to orthodontic diagnosis. Orthod. Craniofacial Res. 2011, 14, 189–197. [Google Scholar] [CrossRef] [PubMed]

- Hutton, T.J.; Cunningham, S.; Hammond, P. An evaluation of active shape models for the automatic identification of cephalometric landmarks. Eur. J. Orthod. 2000, 22, 499–508. [Google Scholar] [CrossRef]

- Hesamian, M.H.; Jia, W.; He, X.; Kennedy, P. Deep Learning Techniques for Medical Image Segmentation: Achievements and Challenges. J. Digit. Imaging 2019, 32, 582–596. [Google Scholar] [CrossRef]

- Broadbent, B.H. A new X-ray technique and its application to orthodontia. Angle Orthod. 1931, 1, 45–66. [Google Scholar]

- Leonardi, R.; Ronsivalle, V.; Barbato, E.; Lagravère, M.; Flores-Mir, C.; Lo Giudice, A. External root resorption (ERR) and rapid maxillary expansion (RME) at post-retention stage: A comparison between tooth-borne and bone-borne RME. Prog. Orthod. 2022, 23, 45. [Google Scholar] [CrossRef]

- Buhmann, M.; Melville, P.; Sindhwani, V.; Quadrianto, N.; Buntine, W.; Torgo, L.; Zhang, X.; Stone, P.; Struyf, J.; Blockeel, H.; et al. Random Decision Forests. In Encyclopedia of Machine Learning; Springer: New York, NY, USA, 2010. [Google Scholar]

- Ronsivalle, V.; Venezia, P.; Bennici, O.; D’Antò, V.; Leonardi, R.; Lo Giudice, A. Accuracy of digital workflow for placing orthodontic miniscrews using generic and licensed open systems. A 3D imaging analysis of non-native .stl files for guided protocols. BMC Oral Health 2023, 23, 494. [Google Scholar] [CrossRef]

- Lo Giudice, A.; Ronsivalle, V.; Gastaldi, G.; Leonardi, R. Assessment of the accuracy of imaging software for 3D rendering of the upper airway, usable in orthodontic and craniofacial clinical settings. Prog. Orthod. 2022, 23, 22. [Google Scholar] [CrossRef]

- Leonardi, R.; Lo Giudice, A.; Farronato, M.; Ronsivalle, V.; Allegrini, S.; Musumeci, G.; Spampinato, C. Fully automatic segmentation of sinonasal cavity and pharyngeal airway based on convolutional neural networks. Am. J. Orthod. Dentofac. Orthop. 2021, 159, 824–835. [Google Scholar] [CrossRef]

- Chhikara, K.; Gupta, S.; Bose, D.; Kataria, C.; Chanda, A. Development and Trial of a Multipurpose Customized Orthosis for Activities of Daily Living in Patients with Spinal Cord Injury. Prosthesis 2023, 5, 467–479. [Google Scholar] [CrossRef]

- Reddy, L.K.V.; Madithati, P.; Narapureddy, B.R.; Ravula, S.R.; Vaddamanu, S.K.; Alhamoudi, F.H.; Minervini, G.; Chaturvedi, S. Perception about Health Applications (Apps) in Smartphones towards Telemedicine during COVID-19: A Cross-Sectional Study. J. Pers. Med. 2022, 12, 1920. [Google Scholar] [CrossRef]

- Ceraulo, S.; Lauritano, D.; Caccianiga, G.; Baldoni, M. Reducing the spread of COVID-19 within the dental practice: The era of single use. Minerva Dent. Oral Sci. 2020, 72, 206–209. [Google Scholar] [CrossRef]

- Qazi, N.; Pawar, M.; Padhly, P.P.; Pawar, V.; D’Amico, C.; Nicita, F.; Fiorillo, L.; Alushi, A.; Minervini, G.; Meto, A. Teledentistry: Evaluation of Instagram posts related to bruxism. Technol. Health Care Off. J. Eur. Soc. Eng. Med. 2023, 31, 1923–1934. [Google Scholar] [CrossRef]

- Hägg, U.; Taranger, J. Maturation indicators and the pubertal growth spurt. Am. J. Orthod. 1982, 82, 299–309. [Google Scholar] [CrossRef]

- Leonardi, R.; Ronsivalle, V.; Lagravere, M.O.; Barbato, E.; Isola, G.; Lo Giudice, A. Three-dimensional assessment of the spheno-occipital synchondrosis and clivus after tooth-borne and bone-borne rapid maxillary expansion. Angle Orthod. 2021, 91, 822–829. [Google Scholar] [CrossRef]

- Khanagar, S.B.; Al-Ehaideb, A.; Vishwanathaiah, S.; Maganur, P.C.; Patil, S.; Naik, S.; Baeshen, H.A.; Sarode, S.S. Scope and performance of artificial intelligence technology in orthodontic diagnosis, treatment planning, and clinical decision-making—A systematic review. J. Dent. Sci. 2021, 16, 482–492. [Google Scholar] [CrossRef]

- Fishman, L.S. Chronological versus skeletal age, an evaluation of craniofacial growth. Angle Orthod. 1979, 49, 181–189. [Google Scholar] [CrossRef]

- Morris, J.M.; Park, J.H. Correlation of dental maturity with skeletal maturity from radiographic assessment: A review. J. Clin. Pediatr. Dent. 2012, 36, 309–314. [Google Scholar] [CrossRef]

- Demirjian, A.; Buschang, P.H.; Tanguay, R.; Patterson, D.K. Interrelationships among measures of somatic, skeletal, dental, and sexual maturity. Am. J. Orthod. 1985, 88, 433–438. [Google Scholar] [CrossRef]

- Korde, S.J.; Daigavane, P.S.; Shrivastav, S.S. Skeletal Maturity Indicators-Review. Int. J. Sci. Res. 2017, 6, 361–370. [Google Scholar]

- Hägg, U.; Taranger, J. Menarche and voice change as indicators of the pubertal growth spurt. Acta Odontol. Scand. 1980, 38, 179–186. [Google Scholar] [CrossRef]

- Fishman, L.S. Radiographic evaluation of skeletal maturation. A clinically oriented method based on hand-wrist films. Angle Orthod. 1982, 52, 88–112. [Google Scholar] [CrossRef]

- Baccetti, T.; Franchi, L.; McNamara, J.A. The Cervical Vertebral Maturation (CVM) Method for the Assessment of Optimal Treatment Timing in Dentofacial Orthopedics. Semin. Orthod. 2005, 11, 119–129. [Google Scholar] [CrossRef]

- Alkhal, H.A.; Wong, R.W.; Rabie, A.B. Correlation between chronological age, cervical vertebral maturation and Fishman’s skeletal maturity indicators in southern Chinese. Angle Orthod. 2008, 78, 591–596. [Google Scholar] [CrossRef]

- Szemraj, A.; Wojtaszek-Słomińska, A.; Racka-Pilszak, B. Is the cervical vertebral maturation (CVM) method effective enough to replace the hand-wrist maturation (HWM) method in determining skeletal maturation? A systematic review. Eur. J. Radiol. 2018, 102, 125–128. [Google Scholar] [CrossRef]

- Mito, T.; Sato, K.; Mitani, H. Cervical vertebral bone age in girls. Am. J. Orthod. Dentofac. Orthop. 2002, 122, 380–385. [Google Scholar] [CrossRef]

- Gandini, P.; Mancini, M.; Andreani, F. A comparison of hand-wrist bone and cervical vertebral analyses in measuring skeletal maturation. Angle Orthod. 2006, 76, 984–989. [Google Scholar] [CrossRef]

- Navlani, M.; Makhija, P.G. Evaluation of skeletal and dental maturity indicators and assessment of cervical vertebral maturation stages by height/width ratio of third cervical vertebra. J. Pierre Fauchard Acad. (India Sect.) 2013, 27, 73–80. [Google Scholar] [CrossRef]

- McNamara, J.A., Jr.; Franchi, L. The cervical vertebral maturation method: A user’s guide. Angle Orthod. 2018, 88, 133–143. [Google Scholar] [CrossRef]

- Baccetti, T.; Franchi, L.; McNamara, J.A., Jr. An improved version of the cervical vertebral maturation (CVM) method for the assessment of mandibular growth. Angle Orthod. 2002, 72, 316–323. [Google Scholar] [CrossRef] [PubMed]

- Chen, L.; Liu, J.; Xu, T.; Long, X.; Lin, J. Quantitative skeletal evaluation based on cervical vertebral maturation: A longitudinal study of adolescents with normal occlusion. Int. J. Oral Maxillofac. Surg. 2010, 39, 653–659. [Google Scholar] [CrossRef] [PubMed]

- Gabriel, D.B.; Southard, K.A.; Qian, F.; Marshall, S.D.; Franciscus, R.G.; Southard, T.E. Cervical vertebrae maturation method: Poor reproducibility. Am. J. Orthod. Dentofac. Orthop. 2009, 136, e471–e477, discussion 478–480. [Google Scholar] [CrossRef]

- Zhao, X.G.; Lin, J.; Jiang, J.H.; Wang, Q.; Ng, S.H. Validity and reliability of a method for assessment of cervical vertebral maturation. Angle Orthod. 2012, 82, 229–234. [Google Scholar] [CrossRef]

- Joseph, B.; Prasanth, C.S.; Jayanthi, J.L.; Presanthila, J.; Subhash, N. Detection and quantification of dental plaque based on laser-induced autofluorescence intensity ratio values. J. Biomed. Opt. 2015, 20, 048001. [Google Scholar] [CrossRef]

- Volgenant, C.M.C.; Fernandez, Y.M.M.; Rosema, N.A.M.; van der Weijden, F.A.; Ten Cate, J.M.; van der Veen, M.H. Comparison of red autofluorescing plaque and disclosed plaque-a cross-sectional study. Clin. Oral Investig. 2016, 20, 2551–2558. [Google Scholar] [CrossRef]

- Carter, K.; Landini, G.; Walmsley, A.D. Automated quantification of dental plaque accumulation using digital imaging. J. Dent. 2004, 32, 623–628. [Google Scholar] [CrossRef]

- Sagel, P.A.; Lapujade, P.G.; Miller, J.M.; Sunberg, R.J. Objective quantification of plaque using digital image analysis. Monogr. Oral Sci. 2000, 17, 130–143. [Google Scholar] [CrossRef] [PubMed]

- Liu, Z.; Gomez, J.; Khan, S.; Peru, D.; Ellwood, R. Red fluorescence imaging for dental plaque detection and quantification: Pilot study. J. Biomed. Opt. 2017, 22, 1–10. [Google Scholar] [CrossRef] [PubMed]

- Liu, H.; Hays, R.; Wang, Y.; Marcus, M.; Maida, C.; Shen, J.; Xiong, D.; Lee, S.; Spolsky, V.; Coulter, I.; et al. Short form development for oral health patient-reported outcome evaluation in children and adolescents. Qual. Life Res. Int. J. Qual. Life Asp. Treat. Care Rehabil. 2018, 27, 1599–1611. [Google Scholar] [CrossRef]

- Anthonappa, R.P.; King, N.M.; Rabie, A.B.; Mallineni, S.K. Reliability of panoramic radiographs for identifying supernumerary teeth in children. Int. J. Paediatr. Dent. 2012, 22, 37–43. [Google Scholar] [CrossRef] [PubMed]

- Anil, S.; Anand, P.S. Early Childhood Caries: Prevalence, Risk Factors, and Prevention. Front. Pediatr. 2017, 5, 157. [Google Scholar] [CrossRef] [PubMed]

- Leonardi, R.; Ronsivalle, V.; Isola, G.; Cicciù, M.; Lagravère, M.; Flores-Mir, C.; Lo Giudice, A. External root resorption and rapid maxillary expansion in the short-term: A CBCT comparative study between tooth-borne and bone-borne appliances, using 3D imaging digital technology. BMC Oral Health 2023, 23, 558. [Google Scholar] [CrossRef]

- Olszowski, T.; Adler, G.; Janiszewska-Olszowska, J.; Safranow, K.; Kaczmarczyk, M. MBL2, MASP2, AMELX, and ENAM gene polymorphisms and dental caries in Polish children. Oral Dis. 2012, 18, 389–395. [Google Scholar] [CrossRef]

- Han, M.; Du, S.; Ge, Y.; Zhang, D.; Chi, Y.; Long, H.; Yang, J.; Yang, Y.; Xin, J.; Chen, T.; et al. With or without human interference for precise age estimation based on machine learning? Int. J. Leg. Med. 2022, 136, 821–831. [Google Scholar] [CrossRef]

| AI Term | Explanation |
|---|---|
| Artificial intelligence (AI) | The primary objective of artificial intelligence (AI) is to build intelligent computers that can learn from data and find solutions on their own. When given new information, machines can use statistical and probabilistic approaches to learn from past examples and make better decisions. Learning is the process by which behavior or performance improves through practice and experience, and it is a necessary component of intelligent systems [5]. In medicine, AI is being used to replace previously manual standards, largely through machine learning (ML) and deep learning (DL) [6]. |
| Machine learning (ML) | Machine learning (ML) uses computers to build statistical models and algorithms that improve understanding and reasoning [7]. It involves training algorithms on large datasets to identify patterns, which are then applied to make predictions or decisions about new data [8]. |
| Deep learning (DL) | Deep learning (DL) is a subfield of machine learning that uses artificial neural networks to mimic how the human brain learns [9]. Because DL models are trained on large amounts of data, they can achieve higher accuracy [6]. An artificial neural network (ANN) consists of layers of small interconnected processing units called neurons, and deep learning essentially uses ANNs with several hidden layers. Convolutional neural networks (CNNs), a subclass of ANNs, are the architecture most often used in general medicine and dentistry [7,10]. Loosely modeled on the cells of the visual cortex, CNNs analyze an image through convolutional and subsampling layers followed by fully connected layers that resemble a multilayer perceptron [11]. |
| Big data | The term “big data” refers to large datasets and/or the compilation of all accessible data from many sources. It can be used to identify patterns that lead to individualized experiences for different people [12]. |
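
The layer types named in the deep learning definition above can be illustrated with a toy forward pass: convolution, subsampling, and fully connected layers. The following is a minimal sketch in plain Python, not a model from any of the reviewed studies. The function names, the 5 × 5 input, the 2 × 2 filter, and all weights are hypothetical, and nothing is trained. Real CNNs stack many such layers and learn their weights from large labeled datasets.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D convolution as used in CNNs (technically a cross-correlation)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(x: Matrix) -> Matrix:
    """Element-wise non-linearity applied after the convolution."""
    return [[max(0.0, v) for v in row] for row in x]


def max_pool2x2(x: Matrix) -> Matrix:
    """Subsampling layer: keep the maximum of each non-overlapping 2x2 window."""
    return [
        [max(x[i][j], x[i][j + 1], x[i + 1][j], x[i + 1][j + 1])
         for j in range(0, len(x[0]) - 1, 2)]
        for i in range(0, len(x) - 1, 2)
    ]


def dense(features: List[float], weights: Matrix, bias: List[float]) -> List[float]:
    """Fully connected layer: every output is a weighted sum of all inputs plus a bias."""
    return [sum(w * f for w, f in zip(row, features)) + b for row, b in zip(weights, bias)]


def tiny_cnn_forward(image: Matrix, kernel: Matrix, weights: Matrix, bias: List[float]) -> List[float]:
    feature_map = max_pool2x2(relu(conv2d(image, kernel)))
    flat = [v for row in feature_map for v in row]  # flatten before the dense layer
    return dense(flat, weights, bias)


if __name__ == "__main__":
    image = [
        [0, 0, 0, 0, 0],
        [0, 1, 1, 0, 0],
        [0, 1, 1, 0, 0],
        [0, 0, 0, 0, 0],
        [0, 0, 0, 0, 1],
    ]
    kernel = [[1, 0], [0, 1]]                       # hypothetical 2x2 diagonal filter
    weights = [[1, 0, 0, 0], [0.5, 0.5, 0.5, 0.5]]  # hypothetical 2-output dense layer
    bias = [0.0, -1.0]
    print(tiny_cnn_forward(image, kernel, weights, bias))  # [2.0, 1.5]
```

The convolution reduces the 5 × 5 input to 4 × 4, and pooling reduces it to 2 × 2. The four remaining features then reach the fully connected layer, which is why pooling is described as subsampling.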
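
Many of the included detection studies report sensitivity (recall), precision, and F1 score. As a worked check, the counts reported by Duman et al. [29] for taurodont tooth segmentation can be plugged into the standard definitions: 109 true positives, 29 false positives, and 17 false negatives. The short Python sketch below is illustrative only, and the function name is hypothetical.

```python
def detection_metrics(tp: int, fp: int, fn: int) -> dict:
    """Sensitivity (recall), precision, and F1 score from detection counts."""
    sensitivity = tp / (tp + fn)  # share of true findings that were detected
    precision = tp / (tp + fp)    # share of detections that were correct
    denom = precision + sensitivity
    f1 = 2 * precision * sensitivity / denom if denom else 0.0  # harmonic mean
    return {"sensitivity": sensitivity, "precision": precision, "f1": f1}


# Counts reported by Duman et al. [29]: 109 TP, 29 FP, 17 FN (126 labels in total).
metrics = detection_metrics(tp=109, fp=29, fn=17)
print({name: round(value, 4) for name, value in metrics.items()})
# {'sensitivity': 0.8651, 'precision': 0.7899, 'f1': 0.8258}
```

The computed values agree with the reported 0.8650, 0.7898, and 0.8257 up to the last digit. This suggests the published figures were truncated rather than rounded.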
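
Segmentation studies such as Chen et al. [27], Lee et al. [50], and Lo Giudice et al. [52] report the overlap between automatic and reference segmentations. They express it as a Dice ratio (DSC) or as intersection over union (IoU). The minimal sketch below assumes each mask is a set of pixel coordinates, a simplification chosen here for readability; the studies themselves worked on image or voxel arrays. The example masks are invented.

```python
from typing import Set, Tuple

Pixel = Tuple[int, int]


def dice_and_iou(pred: Set[Pixel], truth: Set[Pixel]) -> Tuple[float, float]:
    """Dice coefficient and IoU between a predicted and a reference mask."""
    if not pred and not truth:
        return 1.0, 1.0  # convention assumed here: two empty masks agree perfectly
    overlap = len(pred & truth)
    dice = 2 * overlap / (len(pred) + len(truth))
    iou = overlap / len(pred | truth)
    return dice, iou


predicted = {(0, 0), (0, 1), (1, 0), (1, 1)}
reference = {(0, 1), (1, 1), (0, 2), (1, 2)}
dice, iou = dice_and_iou(predicted, reference)
print(dice, round(iou, 3))  # 0.5 0.333
```

For a single pair of masks, Dice = 2·IoU/(1 + IoU), so the two measures rank segmentations identically. This identity does not hold exactly for values averaged across many images.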
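
Cephalometric landmark studies (e.g., Arik et al. [22], Hwang et al. [35], and Lee et al. [49]) summarize accuracy in two ways. The first is the mean error between predicted and reference landmarks. The second is the successful detection rate (SDR): the share of landmarks that fall within a given distance, such as 2, 3, and 4 mm. The sketch below makes three assumptions. Coordinates are already in millimetres, an error exactly equal to the threshold counts as a success, and the landmark positions are invented. Individual studies may define these details differently. `math.dist` requires Python 3.8 or later.

```python
import math
from statistics import mean
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def radial_errors(predicted: Sequence[Point], reference: Sequence[Point]) -> List[float]:
    """Euclidean distance between each predicted landmark and its reference position."""
    return [math.dist(p, r) for p, r in zip(predicted, reference)]


def successful_detection_rate(errors: Sequence[float], threshold_mm: float) -> float:
    """Percentage of landmarks whose error is within the threshold (inclusive here)."""
    return 100.0 * sum(e <= threshold_mm for e in errors) / len(errors)


# Hypothetical landmark coordinates, assumed to be in millimetres already.
predicted = [(0.0, 1.0), (2.5, 0.0), (0.0, 3.5), (3.0, 4.0)]
reference = [(0.0, 0.0), (0.0, 0.0), (0.0, 0.0), (0.0, 0.0)]
errors = radial_errors(predicted, reference)  # 1.0, 2.5, 3.5, 5.0 mm
print("mean radial error:", mean(errors))  # 3.0
for threshold in (2.0, 3.0, 4.0):
    print(f"SDR within {threshold:g} mm: {successful_detection_rate(errors, threshold):.1f}%")
# SDR within 2 mm: 25.0%, within 3 mm: 50.0%, within 4 mm: 75.0%
```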
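
Agreement on CVM stages, as reported by Amasya et al. [21], is expressed with kappa statistics. A weighted kappa (wκ) penalizes each disagreement according to how many stages apart the two ratings are. The sketch below computes unweighted, linearly weighted, and quadratically weighted kappa from two raters' labels. Which weighting the cited studies used is not stated in the table, so both weighted options are shown. The function name and the ratings in the example are invented for illustration.

```python
from typing import Hashable, Optional, Sequence


def cohen_kappa(rater1: Sequence[Hashable], rater2: Sequence[Hashable],
                categories: Sequence[Hashable], weights: Optional[str] = None) -> float:
    """Cohen's kappa for two raters; weights may be None, "linear", or "quadratic".

    Written with disagreement weights: kappa = 1 - sum(w * observed) / sum(w * expected).
    `categories` must be listed in their natural order (e.g., CVM stages 1 to 6).
    """
    if weights not in (None, "linear", "quadratic"):
        raise ValueError("weights must be None, 'linear', or 'quadratic'")
    k, n = len(categories), len(rater1)
    if k < 2 or n == 0 or n != len(rater2):
        raise ValueError("need at least two categories and paired, non-empty ratings")
    index = {c: i for i, c in enumerate(categories)}

    # Joint distribution of the two raters' labels, as proportions.
    observed = [[0.0] * k for _ in range(k)]
    for a, b in zip(rater1, rater2):
        observed[index[a]][index[b]] += 1.0 / n
    row = [sum(observed[i]) for i in range(k)]                       # rater 1 marginals
    col = [sum(observed[i][j] for i in range(k)) for j in range(k)]  # rater 2 marginals

    def w(i: int, j: int) -> float:
        """Disagreement weight: 0 on the diagonal, growing with stage distance."""
        if weights is None:
            return 0.0 if i == j else 1.0
        d = abs(i - j) / (k - 1)
        return d if weights == "linear" else d ** 2

    obs_dis = sum(w(i, j) * observed[i][j] for i in range(k) for j in range(k))
    exp_dis = sum(w(i, j) * row[i] * col[j] for i in range(k) for j in range(k))
    if exp_dis == 0:
        raise ValueError("kappa is undefined when expected disagreement is zero")
    return 1.0 - obs_dis / exp_dis


stages = [1, 2, 3]
rater_a = [1, 1, 2, 2, 3, 3]
rater_b = [1, 2, 2, 2, 3, 1]
for scheme in (None, "linear", "quadratic"):
    print(scheme, round(cohen_kappa(rater_a, rater_b, stages, scheme), 3))
# None 0.5
# linear 0.4
# quadratic 0.286
```

In this invented example, the quadratic kappa is the lowest of the three because one disagreement spans two stages, and squared weights penalize it heavily.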
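
Age and maturation studies (e.g., Iglovikov et al. [36], Kim et al. [41], Larson et al. [47], and Lee et al. [48]) report several error measures. These include mean absolute error (MAE), root mean square error (RMSE), and the share of estimates within a fixed tolerance. Some studies also combine several models into an ensemble. The sketch below uses invented ages and a plain unweighted average as the ensemble. This is an illustrative assumption, not the combination scheme used in those studies. Averaging helps here because the two hypothetical models err in different directions, but it is not guaranteed to reduce error.

```python
import math
from typing import List, Sequence


def mae(pred: Sequence[float], true: Sequence[float]) -> float:
    """Mean absolute error."""
    return sum(abs(p - t) for p, t in zip(pred, true)) / len(true)


def rmse(pred: Sequence[float], true: Sequence[float]) -> float:
    """Root mean square error; penalizes large misses more than MAE does."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true))


def share_within(pred: Sequence[float], true: Sequence[float], tolerance: float) -> float:
    """Fraction of estimates within +/- tolerance of the reference age."""
    return sum(abs(p - t) <= tolerance for p, t in zip(pred, true)) / len(true)


def average_ensemble(*model_preds: Sequence[float]) -> List[float]:
    """Unweighted mean of several models' predictions for each case."""
    return [sum(case) / len(case) for case in zip(*model_preds)]


true_age = [10.0, 12.0, 14.0, 16.0]  # invented reference ages (years)
model_a = [11.0, 13.0, 14.0, 17.0]   # hypothetical model A
model_b = [10.0, 11.0, 15.0, 16.0]   # hypothetical model B
combined = average_ensemble(model_a, model_b)  # [10.5, 12.0, 14.5, 16.5]
for name, pred in (("A", model_a), ("B", model_b), ("ensemble", combined)):
    print(name, mae(pred, true_age), round(rmse(pred, true_age), 3),
          share_within(pred, true_age, 0.5))
# A 0.75 0.866 0.25
# B 0.5 0.707 0.5
# ensemble 0.375 0.433 1.0
```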
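
Several classification studies (e.g., Gomez-Rios et al. [31], Karhade et al. [37], Koopaie et al. [44], and Lee et al. [51]) report the area under the ROC curve (AUC). AUC equals the probability that a randomly chosen positive case scores higher than a randomly chosen negative case, with ties counted as one half. It can therefore be computed by pairwise comparison, as in the minimal sketch below. The function name, scores, and labels are invented for illustration.

```python
from typing import Sequence


def roc_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUC as the probability that a random positive outscores a random negative."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5  # ties count as half
    return wins / (len(pos) * len(neg))


# Invented risk scores (e.g., predicted probability of caries) and true labels.
scores = [0.9, 0.8, 0.4, 0.35, 0.1]
labels = [1, 0, 1, 0, 0]
print(round(roc_auc(scores, labels), 3))  # 0.833
```

The pairwise loop is quadratic in the number of cases, which is fine for illustration. Larger datasets are usually handled with a rank-based formulation that gives the same value.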

| Author/Year/Country/Journal | Sample | AI Method | Type of Study | Results | Objective |
|---|---|---|---|---|---|
| Ahn 2021 [20], Korea, Diagnostics | 1100 patients | SqueezeNet, ResNet-18, ResNet-101, and Inception-ResNet-v2 | Retrospective study | ResNet-101 and Inception-ResNet-v2 both achieved accuracy, precision, recall, and F1 scores greater than 90%. SqueezeNet produced comparatively worse results. | The objective of this research was to develop and evaluate deep learning models that automatically identify mesiodens on panoramic radiographs of patients with primary or mixed dentition. |
| Amasya 2020 [21], Turkey, American Journal of Orthodontics and Dentofacial Orthopedics | 647 patients | ANN model | Retrospective study | Intraobserver agreement ranged from wκ = 0.92–0.98, cκ = 0.65–0.85, and 70.8–87.5%, and interobserver agreement from wκ = 0.76–0.92, cκ = 0.45–0.65, and 50–72.2%. Agreement between the ANN model and observers 1, 2, 3, and 4 was wκ = 0.85 (cκ = 0.52, 59.7%), wκ = 0.8 (cκ = 0.4, 50%), wκ = 0.87 (cκ = 0.55, 62.5%), and wκ = 0.91 (cκ = 0.53, 61.1%), respectively (p < 0.001). The average agreement between the ANN model and the human observers was 58.3%. | The objective of this study was to develop an artificial neural network (ANN) model for analyzing cervical vertebral maturation (CVM) and to compare its output with the assessments of human observers. |
| Arik 2017 [22], USA, Journal of Medical Imaging (Bellingham) | 250 patients | CNN | Retrospective study | The findings showed good anatomical type classification accuracy (~76% average classification accuracy on the test set) and excellent anatomical landmark detection accuracy (a successful detection rate within 2 mm about 1% to 2% higher than the best benchmarks in the literature). | The aim of this study was to use deep convolutional neural networks (CNNs) for fully automated quantitative cephalometry. |
| Bag 2023 [23], Turkey, BMC Oral Health | 981 patients | YOLOv5 | Retrospective study | A total of 14,804 labels were created: mandibular canal (1879), maxillary sinus (1922), orbit (1944), mental foramen (884), mandibular foramen (1885), mandibular notch (1922), articular eminence (1645), and the condylar (1733) and coronoid (990) processes. The highest F1 scores were obtained for the orbit (1), mandibular notch (0.99), maxillary sinus (0.98), and mandibular canal (0.97). The orbit, maxillary sinus, mandibular canal, mandibular notch, and condylar process showed the highest sensitivity, whereas the articular eminence (0.92) and mental foramen (0.92) showed the lowest. | The aim of the research was to examine the efficacy and reliability of artificial intelligence in identifying maxillary and mandibular anatomical structures on panoramic radiographs of children. |
| Bumann 2023 [24], USA, Journal of Dentistry | 448 patients | CNN | Retrospective study | High-performance classifiers were developed that distinguish between primary and permanent teeth (mean average precision [mAP] 95.32%; F1 score 92.50%) and detect the associated dental fillings (mAP 91.53%; F1 score 91.00%). In addition, a novel collaborative learning approach that combines these two classifiers was developed to improve recognition performance (mAP 94.09%; F1 score 93.41%). | The objective of this work was to develop a novel collaborative learning model that simultaneously identifies and distinguishes between primary and permanent teeth and detects fillings, in order to support the assessment of panoramic radiographs. |
| Bunyarit 2021 [25], Malaysia, Pediatric Dental Journal | 1569 patients | ANN model | Retrospective study | An initial comparison of dental age (DA), estimated from the Chaillet and Demirjian dental maturity scores, with known chronological age (CA) showed that DA was consistently overestimated in all age groups: by 2.09 ± 0.90 years for Malay boys and 2.79 ± 0.99 years for Malay girls (paired t-test, p < 0.05). To improve age estimation, artificial neural networks were used to derive new dental maturity scores (NDA) better suited to Malay children. With these scores, dental age was estimated more accurately (0.035 ± 0.84 years for boys and 0.048 ± 0.928 years for girls; paired t-test, p > 0.05). | The purpose of this study was to evaluate the applicability of the eight-tooth method introduced by Chaillet and Demirjian to Malaysian children aged 5.00–17.99 years and to derive more accurate dental maturity scores for age estimation using artificial neural networks (ANNs). |
| Caliskan 2021 [26], Turkey, International Journal of Computerized Dentistry | – | CNN | Retrospective study | Compared with the reference standard, the system identified and classified submerged teeth with a high success rate, and it was highly accurate relative to the human observers. | The main goal of the study was to evaluate the effectiveness and reliability of an artificial intelligence (AI) program for identifying and classifying submerged teeth on panoramic radiographs. |
| Chen 2020 [27], China, Angle Orthodontist | 60 patients | LINKS | Prospective study | The maxillary structure was successfully auto-segmented, with an average Dice ratio of 0.80 for the three-dimensional segmentations. Landmarks digitized manually and with the machine learning-based (LINKS) method differed by a mean of only two voxels on the midsagittal plane. In the study group (SG), bone volume did not differ noticeably between the impaction ([2.37 ± 0.34] × 10⁻⁴ mm³) and nonimpaction ([2.36 ± 0.35] × 10⁻⁴ mm³) sides. Compared with the control group (CG) maxillae, the SG maxillae had significantly lower volumes, widths, heights, and depths (p < 0.05). | To advance clinically useful knowledge, the aim of the research was to (1) present a novel machine learning technique and (2) evaluate maxillary structural variation in unilateral canine impaction. |
| Dong 2023 [28], China, Applied Intelligence | – | YOLOv3 and SOS-Net | Prospective study | Ablation experiments and visual analysis of SOS-Net confirmed the efficacy and interpretability of the SFC block and ASA loss, which improved the F1 score by 3.77%. Comparative experiments further showed that the proposed method outperforms state-of-the-art techniques in maturity staging and age estimation. | In this study, an automatic system was developed for determining the tooth maturity stages of the whole permanent dentition. |
| Duman 2023 [29], Turkey, Oral Radiology | 434 patients | CNN | Retrospective study | The 43 test images contained 126 labels, yielding 109 true positives, 29 false positives, and 17 false negatives. For taurodont tooth segmentation, sensitivity, precision, and F1 score were 0.8650, 0.7898, and 0.8257, respectively. | The goal of the work was to develop and evaluate a CNN-based AI model for diagnosing taurodontism on panoramic radiographs. |
| Felsch 2023 [30], Germany, NPJ Digital Medicine | 18,179 images | SegFormer-B5 | Retrospective study | For the fine-tuned model, overall diagnostic performance was 0.959, 0.977, and 0.978 in terms of intersection over union (IoU), average precision (AP), and accuracy (ACC), respectively. High agreement was also found for the most relevant caries classes: non-cavitations (0.630, 0.813, and 0.990) and dentin cavities (0.692, 0.830, and 0.997). Similar results were obtained for delimited opacities associated with MIH (0.672, 0.827, and 0.993) and for atypical restorations (0.829, 0.902, and 0.999). | The goal of this work was to develop an AI-based algorithm that can detect, classify, and localize molar-incisor hypomineralization (MIH) and caries. |
| Gajic 2021 [8], Serbia, Children | 374 patients | CNN | Retrospective study | Human intuition and the computer algorithms reached the same result regarding the optimal division of respondents. This indicated that the technique is of high quality and that such analyses are needed in dental research. | This study aimed to assess the effects of dental health on adolescents’ quality of life using both artificial intelligence algorithms and conventional statistical approaches. |
| Gomez-Rios 2023 [31], Spain, BMC Oral Health | 274 patients | ORIENTATE | Case study | As a case study, the software was applied to a dataset of healthy children and children with special healthcare needs (SHCN) who received deep sedation. Despite the small sample, feature selection identified a set of characteristics that could predict the need for a second sedation, with an F1 score of 0.83 and an area under the ROC curve (AUC) of 0.92. Eight predictive factors were identified and ranked by their importance in the model for each of the two groups. A comparison with a traditional study and a discussion of the conclusions drawn from the relevance and interaction plots were also presented. | The aim of the work was to present and explain ORIENTATE, software that enables clinicians without specialized technical knowledge to apply machine learning classification methods automatically. |
| Ha 2021 [32], Korea, Scientific Reports | 612 patients | YOLOv3 | Retrospective study | On the original images, accuracy was 96.2% for the internal test dataset and 89.8% for the external test dataset. For the primary, mixed, and permanent dentition, accuracies were 96.7%, 97.5%, and 93.3% on the internal test dataset and 86.7%, 95.3%, and 86.7% on the external test dataset. In both test datasets, images processed with contrast-limited adaptive histogram equalization (CLAHE) yielded less accurate results than the original images. | The goal of this work was to develop an AI model that can detect mesiodens on panoramic radiographs across different dentition groups. |
| Hansa 2021 [33], South Africa, American Journal of Orthodontics and Dentofacial Orthopedics | 90 patients | Dental Monitoring | Prospective study | The two groups were homogeneous in sample size, age, gender, Angle classification, maxillary and mandibular irregularity index, and number of initial aligners (p > 0.05). The DM group had significantly fewer appointments (3.5 fewer visits, 33.1%; p = 0.001) and a significantly shorter time to the first refinement (by 1.7 months; p = 0.001). Compared with the positions predicted by Invisalign, final tooth positions were significantly more accurate (p < 0.05) in the DM group for two movement types: rotations of the maxillary anterior teeth and buccal–lingual linear movements of the mandibular anterior teeth. For the maxillary posterior teeth, Invisalign therapy without DM more closely matched the predicted tip. None of these differences exceeded the clinically relevant thresholds (>0.5 mm or >2°), yet the DM group achieved its results 1.7 months sooner. | Outcomes of Invisalign clear aligner therapy with and without Dental Monitoring (DM) were compared on four measures: treatment duration, number of appointments, refinements and refinement aligners, and the accuracy with which Invisalign achieved the predicted tooth positions (aligner tracking). |
| Hansa 2021 [34], South Africa, Seminars in Orthodontics | – | Artificial intelligence-driven remote monitoring | Case series | This clinical presentation graphically illustrates several advantages of AI-driven remote monitoring (AIRM) for patient care before, during, and after treatment with aligners and fixed appliances. Counterintuitively, remote monitoring improves patient–clinician communication, allowing the clinician to follow the patient more closely and to contact them directly as needed. | The goal of this case series was to review the clinical applicability and rationale of orthodontic remote monitoring. |
| Hwang 2020 [35], Korea, Angle Orthodontist | 1028 cephalograms | YOLOv3 | Retrospective study | Repeated manual detections by the same human examiner varied, with a detection error of 0.97 ± 1.03 mm, whereas the AI consistently detected similar positions for each landmark across multiple trials. The mean detection error between humans and AI was 1.46 ± 2.97 mm, and the mean difference between human examiners was 1.50 ± 1.48 mm. On average, the detection errors of AI and human examiners differed by less than 0.9 mm, a difference that did not appear to be clinically significant. | The aim of the study was to compare the detection patterns of 80 cephalometric landmarks identified by an automated identification system (AI) with those identified by human examiners. The AI was based on a recently proposed deep learning method, You-Only-Look-Once version 3 (YOLOv3). |
| Iglovikov 2018 [36], USA, bioRxiv | 12,600 images | CNN | Retrospective study | Region C, composed of the proximal phalanges and metacarpals, was more accurate than region B, with an MAE of 8.42 months. The MAE for region A (the entire image) was 8.08 months. The linear ensemble of the three regional models performed best among the models in the study, with an MAE of 7.52 months. With very few exceptions, the regional trend MAE(B) > MAE(C) > MAE(A) > MAE(ensemble) held across model types and patient cohorts. | In this research, data from the 2017 Pediatric Bone Age Challenge, organized by the Radiological Society of North America (RSNA), were used to demonstrate that a fully automated deep learning approach can be applied to bone age assessment. |
| Karhade 2021 [37], USA, Pediatric Dentistry | 6404 patients | AutoML | Retrospective study | A parsimonious model with two terms achieved the highest area under the curve (AUC; 0.74), sensitivity (Se; 0.67), and positive predictive value (PPV; 0.64). The two terms were children’s age and parent-reported child oral health status (excellent/very good/good/fair/poor). This model performed comparably on an external National Health and Nutrition Examination Survey (NHANES) dataset (AUC = 0.80, Se = 0.73, PPV = 0.49). In contrast, a comprehensive 12-factor model performed worse (AUC = 0.66, Se = 0.54, PPV = 0.61); its factors included dental home, fluoride exposure, oral health behaviors, parental education, and race/ethnicity. | The aim of the research was to design and evaluate an automated machine learning (AutoML) algorithm for classifying children by early childhood caries (ECC) status. |
| Kaya 2022 [11], Turkey, Imaging Science in Dentistry | 4518 patients | YOLOv4 | Retrospective study | The YOLOv4 model identified permanent tooth germs on pediatric panoramic radiographs with high performance (average precision 94.16%; F1 score 0.90). The average YOLOv4 inference time was 90 ms. | This study evaluated the efficacy of a deep learning system in detecting permanent tooth germs on pediatric panoramic radiographs. |
| Kaya 2022 [38], USA, Journal of Clinical and Pediatric Dentistry | 4545 patients | YOLOv4 | Retrospective study | On pediatric panoramic radiographs, the model successfully identified and numbered both primary and permanent teeth, with a weighted F1 score of 0.91, a mean average precision (mAP) of 92.22%, and a mean average recall (mAR) of 94.44%. The proposed CNN approach performed well and quickly in automated tooth detection and numbering on pediatric panoramic radiographs. | The goal of this paper was to assess the performance of a deep learning system for automated tooth detection and numbering on pediatric panoramic radiographs. |
| Kaya 2023 [39], USA, International Journal of Computerized Dentistry | 4821 patients | YOLOv4 | Retrospective study | The YOLOv4 model detected immature teeth, permanent tooth germs, and brackets accurately, with F1 scores of 0.95, 0.90, and 0.76, respectively. Despite these encouraging results, the model has limitations for some dental procedures and structures, such as fillings, root canals, and supernumerary teeth. | This study set out to assess how well a deep learning software system identifies and classifies dental procedures and structures on panoramic radiographs of pediatric patients. |
| Kilic 2021 [40], Turkey, Dentomaxillofacial Radiology | 421 images | CNN | Retrospective study | The artificial intelligence system identified and classified children’s deciduous teeth on panoramic radiographs with high precision and sensitivity. The F1 score, precision, and sensitivity were 0.9686, 0.9571, and 0.9804, respectively. | This study assessed the automated identification and labeling of children’s deciduous teeth on panoramic radiographs using a deep learning technique. |
| Kim 2021 [41], Korea, Orthodontics and Craniofacial Research | 455 patients | CNN | Retrospective study | The final ensemble model consisted of eight machine learning models and achieved an RMSE of 1.20, a rounded MAE of 0.87, and an MAE of 0.90. | The aim of this study was to predict hand–wrist maturation stages from cervical vertebra (CV) images and to evaluate the accuracy of the proposed methods. |
| Kim 2022 [42], Korea, Dentomaxillofacial Radiology | 988 radiographs | DeepLabV3plus and Inception-ResNet-v2 | Comparative study | For segmentation using the posterior molar space on panoramic radiographs, the mean BF score was 0.907, the IoU was 0.762, and the segmentation performance was 0.839. For mesiodens diagnosis based on automatic segmentation, the mean values of accuracy, precision, recall, F1 score, and area under the curve were 0.971, 0.971, 0.971, and 0.971, respectively. | The goal of this research was to develop and evaluate a deep learning model that automatically sets a region of interest (ROI) and diagnoses mesiodens on panoramic radiographs of growing children. |
| Kok 2019 [43], Turkey, Progress in Orthodontics | 300 patients | k-nearest neighbors (k-NN), naive Bayes (NB), decision tree (Tree), artificial neural network (ANN), support vector machine (SVM), random forest (RF), and logistic regression (Log.Regr.) algorithms | Comparative study | According to the confusion matrices, the highest accuracies in detecting cervical vertebrae stages were achieved by k-NN for CVS 5 (60.9%) and CVS 6 (78.7%), by SVM for CVS 3 (73.2%) and CVS 4 (58.5%), and by the decision tree for CVS 1 (97.1%) and CVS 2 (90.5%). The ANN algorithm had the second-highest accuracy for every stage except CVS 5 (93%, 89.7%, 68.8%, 55.6%, and 78%, respectively), where it ranked third (47.4%). Based on how well the algorithms predicted the CVS classes, the ANN was the most stable algorithm, with an average rank of 2.17. | The goal of this research was to identify the cervical vertebrae stages (CVSs) for growth and development periods using the seven most popular artificial intelligence classifiers and to compare the performance of these algorithms. |
| Koopaie 2021 [44], Iran, BMC Oral Health | 40 patients | Human cystatin S ELISA kit | Comparative study | The mean salivary cystatin S concentration was 191.55 ± 81.90 ng/mL in the early childhood caries group and 370.06 ± 128.87 ng/mL in the caries-free group, a statistically significant difference (t-test, p = 0.032). Analysis of the area under the ROC curve (AUC) and accuracy showed which model best separated the groups: a logistic regression model based on salivary cystatin S levels and birth weight had the highest, and an acceptable, ability to distinguish early childhood caries from caries-free controls. Moreover, including salivary cystatin S levels improved the ability of the machine learning techniques to distinguish early childhood caries from caries-free controls. | This work used statistical analysis and machine learning techniques to evaluate salivary cystatin S levels and demographic information in early childhood caries cases compared with caries-free cases. |
| Kunz 2020 [45], Germany, Journal of Orofacial Orthopedics | 50 patients | CNN | Comparative study | The AI’s predictions almost never differed statistically from the human gold standard, and there appear to be no clinically significant differences between the two analyses. | The purpose of this study was to develop an artificial intelligence (AI) program specifically designed to perform automated cephalometric X-ray analysis. |
| Kuwada 2020 [46], Japan, Oral Surgery, Oral Medicine, Oral Pathology and Oral Radiology | 550 patients | AlexNet, VGG16, and DetectNet | Comparative study | Overall, DetectNet achieved the highest diagnostic performance, whereas VGG16 yielded substantially lower values than both DetectNet and AlexNet. In an evaluation of DetectNet’s detection capability, recall, precision, and F-measure in the incisor region were all 1.0, indicating perfect detection. | The purpose of this study was to evaluate and compare the efficacy of three deep learning algorithms for classifying individuals with fully erupted incisors who have impacted maxillary supernumerary teeth (ISTs). |
| Larson 2018 [47], USA, Radiology | 200 images | CNN | Comparative study | The mean difference between the bone age estimates of the reviewers and those of the model was 0 years, with a mean root mean square (RMS) difference of 0.63 years and a mean absolute difference (MAD) of 0.50 years. The estimates of the three reviewers, the clinical report, and the model all fell within a 95% confidence interval. On the Digital Hand Atlas dataset, the model’s RMS was 0.73 years, compared with 0.61 years for a previously published model. | The aim of this research was to compare the performance of an automated deep learning bone age assessment model based on hand radiographs with that of existing automated models and experienced radiologists. |
| Lee 2017 [48], USA, Journal of Digital Imaging | 1200 images | ImageNet | Retrospective study | On held-out test images, an ImageNet-pretrained, fine-tuned convolutional neural network (CNN) achieved accuracies of 57.32% for the female cohort and 61.40% for the male cohort. Bone age assessments (BAAs) fell within 1 year for 90.39% and within 2 years for 98.11% of female test radiographs. For male test radiographs, the corresponding figures were 94.18% and 99.00%. Attention maps showing the features the trained model used for BAA were generated with the input occlusion method. | This paper presented a fully automated deep learning pipeline designed to segment a region of interest, normalize and preprocess input radiographs, and perform BAA. |
| Lee 2020 [49], Korea, BMC Oral Health | 400 patients | Bayesian Convolutional Neural Networks (BCNNs) | Retrospective study | The framework achieved a mean landmark error (LE) of 1.53 ± 1.74 mm and successful detection rates (SDRs) of 82.11%, 92.28%, and 95.95% within 2, 3, and 4 mm, respectively. In particular, the error for the gonion, the least accurately located landmark in earlier research, was almost halved. The findings also showed a noticeably improved ability to recognize anatomical anomalies. By providing 95% confidence regions that account for uncertainty, the framework can support clinical convenience and decision-making. | In this work, the authors sought to create a novel framework that uses Bayesian Convolutional Neural Networks (BCNNs) to locate cephalometric landmarks with confidence regions. |
| Lee 2020 [50], Korea, Oral Surgery, Oral Medicine, Oral Pathology and Oral Radiology | 50 radiographs | CNN | Prospective study | The proposed approach achieved a mean IoU of 0.877 and an F1 score of 0.875 (precision 0.858; recall 0.893). Visual inspection showed that the segmentation results closely resembled the ground truth. | The aim of this paper was to evaluate a fully deep learning method based on a mask region-based convolutional neural network (Mask R-CNN) for automatic tooth segmentation using individual tooth annotations on panoramic radiographs. |
| Lee 2022 [51], Korea, Scientific Reports | 471 patients | CNN | Prospective study | In the three-age-group classification, the five machine learning models achieved AUCs of 0.85 to 0.88 for young individuals (10–19 years old). AUCs were roughly 0.73 for adults (20–49 years old) and 0.82 to 0.88 for older individuals (50–69 years old). Among the six age-group classifications, age groups 1 (10–19 years old) and 6 (60–69 years old) had the best scores, with mean AUCs of 0.85 to 0.87 and 0.80 to 0.90, respectively. A feature analysis based on LDA weights showed that the L-Pulp Area was important for identifying younger individuals (10–49 years old). In contrast, L-Crown, U-Crown, L-Implant, U-Implant, and periodontitis were predictors for older individuals (50–69 years old). | The objective of this research was to examine the correlation between 18 radiomorphometric features of panoramic radiographs and age, and to use five machine learning algorithms to predict, accurately and non-invasively, the age group of individuals with permanent teeth. |
| Lo Giudice 2021 [52], Italy, Orthodontics and Craniofacial Research | 40 patients | CNN | Retrospective study | An ICC of 0.937 indicated a strong correlation between the measurements, with a technique error of 0.24 mm³. The two methods differed by 0.71 (±0.49) cm³, but this difference was not statistically significant (p > 0.05). The matching percentages were 90.35% (±1.88%) at a 0.5 mm tolerance and 96.32% (±1.97%) at a 1.0 mm tolerance. The Dice similarity coefficient (DSC) percentage discrepancies between assessments performed with the two methods were 2.8% and 3.1%, respectively. | The aim of this paper was to assess the efficacy of a fully automated deep learning-based technique for mandibular segmentation from CBCT images. |
| Mahto 2022 [53], Nepal, BMC Oral Health | 30 patients | WebCeph | Comparative study | The ICC value for every measurement was greater than 0.75. Seven parameters (ANB, FMA, IMPA/L1 to MP (°), LL to E-line, L1 to NB (mm), L1 to NB (°), and S-N to Go-Gn) had ICC values greater than 0.9, while five parameters (UL to E-line, U1 to NA (mm), SNA, SNB, and U1 to NA (°)) had ICC values between 0.75 and 0.90. | The aim of this study was to assess the validity and reliability of automated cephalometric measurements obtained from WebCeph™. The linear and angular measurements from this web-based, fully automated, artificial intelligence (AI)-driven platform were compared with those obtained by manual tracing. |
| Mine 2022 [2], Japan, International Journal of Paediatric Dentistry | 220 radiographs | AlexNet, VGG16-TL, and Inceptionv3-TL | Comparative study | The VGG16-TL model performed best in terms of accuracy, sensitivity, specificity, and area under the ROC curve, although the other models showed comparable performance. | The purpose of this study was to use deep learning based on convolutional neural networks (CNNs) to identify children in the early mixed dentition stage who had supernumerary teeth. |

| Mladenovic 2023 [54], Serbia, Diagnostics | 1 patient | CNN | Case study | Because no single tool can currently meet all clinical needs regarding supernumerary teeth and their segmentation, multiple tools must be used in practice. | The aim of this study was to examine the possibilities, advantages, and drawbacks of artificial intelligence; the authors presented a very rare case of a child with nine supernumerary teeth, analyzed with two commercial tooth segmentation tools. |

| Mohammad-Rahimi 2022 [55], Iran, Korean Journal of Orthodontics | 890 radiographs | CNN | Retrospective study | For the six-class CVM classification, the model's validation and test accuracies were 62.63% and 61.62%, respectively, while for the three-class classification they were 75.76% and 82.83%, respectively. Significant agreement was also observed between the AI model and one of the two orthodontists. | This work presented and evaluated a novel deep learning model for assessing growth spurts and the degree of cervical vertebral maturation (CVM) on lateral cephalometric radiographs. |

| Montufar 2018 [56], Mexico, American Journal of Orthodontics and Dentofacial Orthopedics | 24 patients | CNN | Retrospective study | The method produced a mean error of 3.64 mm in cephalometric landmark localization relative to the 3D gold standard. The porion and sella regions had the largest localization errors because of their limited volumetric definition. | This paper proposed a novel method for automatic cephalometric landmark localization on three-dimensional (3D) cone beam computed tomography (CBCT) volumes, using an active shape model to search for landmarks in related projections. |

| Niño-Sandoval 2016 [57], Colombia, Forensic Science International | 229 radiographs | Support vector machine | Retrospective study | Using the angles Pr-A-N, N-Pr-A, A-N-Pr, A-Te-Pr, A-Pr-Rhi, Rhi-A-Pr, Pr-A-Te, Te-Pr-A, Zm-A-Pr, and PNS-A-Pr, the classification accuracy was 74.51%. The class precision and class recall showed that Class II and Class III were correctly distinguished from each other. | The purpose of this work was to reconstruct the normal mandibular position in a contemporary Colombian sample by using support vector machines, an automatic non-parametric technique, to classify skeletal patterns from craniomaxillary variables. |

| Nishimoto 2019 [58], Japan, Journal of Craniofacial Surgery | 219 images | CNN | Prospective study | The mean and median prediction errors were 17.02 and 16.22 pixels, respectively. In cephalometric analysis, the angles and lengths derived from the neural network's predicted points did not differ statistically from those derived from manually plotted points. | The authors developed an automatic cephalometric landmark prediction system based on a deep learning neural network. |

| Pang 2021 [59], China, Frontiers in Genetics | 1055 patients | CNN | Prospective study | In cohort 2 (the testing cohort), the caries risk prediction model achieved an AUC of 0.73, demonstrating good discriminative ability. Risk stratification showed that the model correctly identified individuals at high and very high caries risk but underestimated the risk for those at low and very low caries risk. | The purpose of this work was to use a machine learning algorithm to build a new model for predicting caries risk in teenagers based on genetic and environmental factors. |

| Park 2019 [60], Korea, Angle Orthodontist | 283 images | You Only Look Once version 3 (YOLOv3) and Single Shot MultiBox Detector (SSD) | Retrospective study | YOLOv3 was more accurate than SSD for 38 of the 80 landmarks; for the remaining 42 landmarks, there was no statistically significant difference between the two algorithms. YOLOv3 error plots showed a smaller error range as well as a more isotropic tendency. The mean computational times per image were 0.05 s for YOLOv3 and 2.89 s for SSD. YOLOv3 achieved approximately 5% higher accuracy than the top benchmarks reported in the literature. | The aim of this paper was to evaluate two of the most recent deep learning algorithms for the automatic identification of cephalometric landmarks in terms of accuracy and computational efficiency. |

| Park 2021 [61], Korea, International Journal of Environmental Research and Public Health | 4195 patients | XGBoost (version 1.3.1), random forest, and LightGBM (version 3.1.1) | Comparative study | A comparison of misclassification rates and area under the receiver operating characteristic curve (AUROC) values showed that the AUROC values of the four prediction models ranged from 0.774 to 0.785, with no significant difference among them. | The objective of this study was to develop machine learning-based prediction models for early childhood caries and to compare their performance with that of a conventional regression model. |

| Portella 2023 [62], Brazil, Clinical Oral Investigations | 2481 teeth | VGG19 | Retrospective study | The training dataset contained 8749 images and the test dataset 140 images. VGG19 performed well, with an F1 score of 0.887, accuracy of 0.879, precision of 0.949, positive agreement of 0.827, and negative agreement of 0.800. In Phase I, examiners US, ND, and SP had accuracy rates of 0.543, 0.771, and 0.807, respectively; in Phase II, these increased to 0.679, 0.886, and 0.857. For every examiner, the proportion of correct responses was significantly higher in Phase II than in Phase I (McNemar test; p < 0.05). | The aim of this paper was to investigate how well a convolutional neural network (CNN) can identify healthy teeth and early carious lesions on occlusal surfaces, and to evaluate the suitability of this deep learning method as a supplemental tool. |

| Ramos-Gomez 2021 [63], USA, Dentistry Journal | 182 patients | Random forest | Retrospective study | Among the survey factors, the parent's age (MDG = 0.84; MDA = 1.97), unmet needs (MDG = 0.71; MDA = 2.06), and the child's race (MDG = 0.38; MDA = 1.92) were highly predictive of active caries (MDG, mean decrease in Gini; MDA, mean decrease in accuracy). Strong predictors of caries experience were the parent's age (MDG = 2.97; MDA = 4.74), whether the child had experienced dental pain in the previous year (MDG = 2.20; MDA = 4.04), and whether the child had experienced caries in the preceding year (MDG = 1.65; MDA = 3.84). | Using a machine learning algorithm applied to parents' survey assessments of their child's oral health, this study explored the potential of screening for dental caries in children. |

| Rauf 2023 [64], Iraq, Medicina | 450 images | k-NN | Prospective study | After two ML algorithms, linear regression (LR) and k-nearest neighbors (k-NN), were applied to the dataset, the evaluation metrics showed that k-NN outperformed LR in prediction accuracy, reaching an accuracy close to 99%. | This study attempted to use artificial intelligence to predict arch width as an orthodontic diagnostic tool, with the aim of preventing future crowding in growing patients and in adults seeking orthodontic treatment. |

| Seo 2021 [65], Korea, Journal of Clinical Medicine | 600 radiographs | ResNet-18, MobileNet-v2, ResNet-50, ResNet-101, Inceptionv3, and Inception-ResNet-v2 | Retrospective study | All deep learning models achieved an accuracy above 90%, with Inception-ResNet-v2 performing best. | This work aimed to assess and compare the performance of six state-of-the-art deep learning models based on convolutional neural networks (CNNs) for cervical vertebral maturation (CVM) classification on lateral cephalometric radiographs. Additionally, it visualized each model's CVM classification using gradient-weighted class activation mapping (Grad-CAM). |

| Spampinato 2017 [66], Italy, Medical Image Analysis | 1391 radiographs | CNN | Retrospective study | The findings demonstrated state-of-the-art performance, with a mean difference of about 0.8 years between manual and automatic assessment. | The objective of this work was to present and evaluate multiple deep learning methods for automatic skeletal bone age assessment. |

| Tajmir 2019 [67], USA, Skeletal Radiology | 280 radiographs | CNN | Comparative study | The mean accuracy of the six-reader cohort was 63.6% overall and 97.4% within 1 year, whereas the AI bone age assessment (BAA) accuracy was 68.2% overall and 98.6% within 1 year. The mean single-reader RMSE was 0.661 years, whereas the AI RMSE was 0.601 years. With AI support, the pooled RMSE decreased from 0.661 to 0.508 years, and the RMSE of each reader also decreased individually. The ICC was 0.9951 with AI and 0.9914 without. | The aim of this paper was to examine the bone age assessment (BAA) performance of a cohort of pediatric radiologists with and without AI support. |

| Tanikawa 2009 [68], Japan, Angle Orthodontist | 65 radiographs | CNN | Retrospective study | The algorithm had a mean success rate of 88% (range, 77–100%) in correctly identifying the specified anatomic structures in all images and determining the landmark positions. | This work set out to assess the reliability of a system that automatically recognizes anatomic landmarks and surrounding structures on lateral cephalograms using criteria specific to each landmark. |

| Toledo-Reyes 2023 [69], Brazil, Journal of Dental Research | 639 patients | ML algorithms: decision tree, random forest, and extreme gradient boosting (XGBoost) | Prospective study | For predicting caries in primary teeth after a 2-year follow-up, all models had areas under the receiver operating characteristic curve (AUCs) greater than 0.70 in both training and testing, with baseline caries severity being the best predictor. For the 10-year follow-up, the XGBoost model achieved an AUC greater than 0.70 in the testing set, and SHapley Additive exPlanations (SHAP) analysis indicated that the top predictors of caries in permanent teeth were caries experience, nonuse of fluoridated toothpaste, parental education, higher frequency of sugar consumption, low frequency of visits to relatives, and parents' poor perception of their children's oral health. | The aim of this research was to use early childhood factors to develop and evaluate machine learning (ML) caries prognosis models for primary and permanent teeth after 2 and 10 years of follow-up. |

| Vila-Blanco 2020 [70], Spain, IEEE Transactions on Medical Imaging | 2289 radiographs | DANet and DASNet | Comparative study | DASNet reduced the median absolute error (AE) and median error (E) for the entire database by around 4 months, outperforming DANet on every metric. When DASNet was evaluated on the reduced datasets, the AE decreased as the subjects' true age decreased, reaching a median of roughly 8 months in patients younger than 15 years. Compared with the most advanced manual age estimation techniques, DASNet showed notably fewer overestimation and underestimation problems. | This paper proposed two fully automatic approaches to determine a subject's chronological age from an orthopantomogram (OPG). |

| Wang 2016 [71], USA, Medical Physics | 30 patients | Random forest | Retrospective study | Segmentation results on CBCT images of 30 participants were validated qualitatively and quantitatively against manually labeled ground truth. The authors' method yielded average Dice ratios of 0.94 and 0.91 for the mandible and maxilla, respectively, significantly higher than those of the state-of-the-art sparse representation-based method (p < 0.001). | The aim of this paper was to incorporate prior spatial information into classification-based segmentation, an approach that has outperformed methods relying on image appearance alone, and to apply it to the challenging task of maxilla and mandible segmentation on CBCT, whereas most previous methods of this kind have focused on brain images. |

| Wang 2016 [72], China, American Journal of Orthodontics and Dentofacial Orthopedics | 88 patients | Eye-tracking device | Comparative study | Laypersons' scanpaths on pretreatment smiling faces differed significantly from those on normal smiling subjects: there was less fixation time (p < 0.05) and later attention capture (p < 0.05) on the eyes, and more fixation time (p < 0.05) and earlier attention capture (p < 0.05) on the mouth. Similar findings were observed for post-treatment smiling subjects: there was a decrease in fixation duration (p < 0.05) and earlier attention capture on the lips (p < 0.05), as well as a decrease in fixation time (p < 0.05) and later attention capture on the eyes (p < 0.05). Compared with normal subjects and post-treatment patients, pretreatment faces at rest showed earlier attention capture on the mouth (p < 0.05). A linear support vector machine classified pretreatment patients versus normal subjects (treatment need) and pretreatment versus post-treatment patients (treatment outcome) with accuracies of 97.2% and 93.4%, respectively. | The aim of this research was to investigate the potential of eye tracking as a new, objective means of assessing orthodontic treatment need and outcome from the viewpoint of the general public, in contrast to more conventional evaluation methods. |