Abstract

Calibration is a highly challenging task, in particular in multiple yield curve markets. This paper is a first attempt to study the opportunities and challenges of applying machine learning techniques to this task. We employ Gaussian process regression, a machine learning methodology that has many similarities with extended Kalman filtering, a technique which has been applied many times to interest rate markets and term structure models. We find very good results for the single-curve markets and many challenges for the multi-curve markets in a Vasiček framework. The Gaussian process regression is implemented with the Adam optimizer and the non-linear conjugate gradient method, where the latter performs best. We also point towards future research.

1. Introduction

The aim of this paper is to apply machine learning techniques to the calibration of bond prices in multi-curve markets in order to predict the term structure of basic instruments. The challenges are two-fold. On the one hand, interest rate markets are characterized by having not only single instruments, such as stocks, but also full term structures, i.e., the curve of yields for different investment periods. On the other hand, in multi-curve markets, not one term structure but multiple yield curves for different lengths of the future investment period are given in the market and have to be calibrated. This is a very challenging task (see Eberlein et al. (2019) for an example using Lévy processes).

The co-existence of different yield curves associated with different tenors is a phenomenon in interest rate markets which originates with the 2007–2009 financial crisis. During this period, spreads between different yield curves reached their peak beyond 200 basis points. Since then, the spreads have remained on a non-negligible level. The most important curves to be considered in the current economic environment are the overnight indexed swap (OIS) rates and the interbank offered rates (abbreviated as Ibor, such as Libor rates from the London interbank market) of various tenors. In the European market, these are, respectively, the Eonia-based OIS rates and the Euribor rates. The literature on multiple yield curves is manifold and we refer to the works of Grbac and Runggaldier (2015) and Henrard (2014) for an overview. The general theory and affine models have been developed and applied, among others, in Grbac et al. (2016, 2019); Fontana et al. (2020); Grbac et al. (2015); Mercurio (2010).

Recent years have seen many machine learning techniques, in particular deep learning, become very popular. While deep learning typically needs big data, here we are confronted with small data together with a high-dimensional prediction problem, since a full curve (the term structure), and in the multi-curve market even multiple curves, have to be calibrated and predicted. To be able to deal with this efficiently, one would like to incorporate information from the past, and a Bayesian approach seems best suited to this. We choose Gaussian process regression (GPR) as our machine learning approach, which ensures fast calibration (see De Spiegeleer et al. (2018)). This is a non-parametric Bayesian approach to regression and is able to capture non-linear relationships between variables. The task of learning in Gaussian processes simplifies to determining suitable properties for the covariance and mean function, which determine the calibration of our model. We place ourselves in the context of the Vasiček model, which is a famous affine model (see the works of Filipović (2009) and Keller-Ressel et al. (2018) for a guide to the literature and details).

It is generally accepted that machine learning performs better with higher-dimensional data. In a calibration framework such as the one we are studying here with GPR, it turns out that this seems not to be the case. While we find very good results for the single-curve markets, we find many challenges for the multi-curve markets in a Vasiček framework. A possible reason for this is that, in the small data setting considered here, the GPR is not able to exploit sparseness in the data.

Related Literature

Calibration of log-bond prices in the simple Vasiček model framework via Gaussian processes for machine learning has already been carried out in Beleza Sousa et al. (2012) for a single maturity and in Beleza Sousa et al. (2014) for several maturities. Both rely on the theory of Gaussian processes presented in Rasmussen and Williams (2006). While in Section 3 we extend Beleza Sousa et al. (2012) by presenting an additional optimization method, Section 4 takes a different approach from Beleza Sousa et al. (2014) to the calibration of interest rate markets with several maturities. While Beleza Sousa et al. (2014) calibrated solely zero-coupon log-bond prices, from which one is not able to construct a post-crisis multi-curve interest rate market, we additionally calibrate log-$\delta$-bond prices in order to encompass forward rate agreements and to conform with the multi-curve framework (cf. Fontana et al. (2020) for the notion of $\delta$-bonds). The modeling of log-$\delta$-bond prices allows building forward rate agreements (FRAs), so that we have the basic building blocks for multi-curve interest rate markets.

2. Gaussian Process Regression

Following Rasmussen and Williams (2006, chps. 2 and 5), we provide a brief introduction to Gaussian process regression (GPR). For a moment, consider a regression problem with additive Gaussian noise: we observe $y$ together with covariates $x$ and assume that

$$y = f(x; \Theta) + \epsilon,$$

where $f(\cdot\,; \Theta)$ is a regression function depending on the unknown parameter $\Theta$ and $\epsilon$ is a d-dimensional noise vector which we assume to consist of i.i.d. mean-zero Gaussian errors with a common standard deviation.

In the Bayesian approach, we are not left without any knowledge of $\Theta$ but may start from a prior distribution; sometimes this distribution can be deduced from previous experience in similar experiments, while otherwise one chooses an uninformative prior. Assuming continuity of the prior, we denote the prior density of $\Theta$ by $p(\Theta)$. Inference is now performed by computing the a posteriori distribution of $\Theta$ conditional on the observation $(x, y)$. This can be achieved by Bayes’ rule, i.e.,

$$p(\Theta \mid x, y) = \frac{p(y \mid x, \Theta)\, p(\Theta)}{p(y \mid x)},$$

where we assume only that the distribution of $\Theta$ does not depend on x. Similarly, we can compute $p(y \mid x)$ from $p(y \mid x, \Theta)$ by integrating with respect to $p(\Theta)$.

For a moment, we drop the dependence on x in the notation. Assuming only that the observation is normally distributed, we are already able to state the marginal likelihood : it is given up to a normalizing constant c by

If we assume moreover that is jointly normally distributed with y, we arrive at the multivariate Gaussian case. Hence, the conditional distribution is again Gaussian and can be computed explicitly. Starting from , where we split and

we can compute through some straightforward calculations. First, observe that . We obtain . Hence, the marginal likelihood is given by

Second, we compute

This formula is the basis for the calibration in the Vasiček model, as we show now.
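For reference, the explicit conditional distribution alluded to above is the standard Gaussian conditioning identity. The block notation used here ($\mu_y$, $\mu_\xi$, $\Sigma_{yy}$, and so on, with $n$ the dimension of $y$) is an assumption introduced for this sketch and need not match the symbols of Equations (1) to (3).

```latex
\begin{aligned}
\begin{pmatrix} y \\ \xi \end{pmatrix}
 &\sim \mathcal{N}\!\left(
 \begin{pmatrix} \mu_y \\ \mu_\xi \end{pmatrix},
 \begin{pmatrix} \Sigma_{yy} & \Sigma_{y\xi} \\ \Sigma_{\xi y} & \Sigma_{\xi\xi} \end{pmatrix}
 \right), \\
\xi \mid y &\sim \mathcal{N}\!\left(
 \mu_\xi + \Sigma_{\xi y}\Sigma_{yy}^{-1}(y-\mu_y),\;
 \Sigma_{\xi\xi} - \Sigma_{\xi y}\Sigma_{yy}^{-1}\Sigma_{y\xi}
 \right), \\
-\log p(y) &= \tfrac12 (y-\mu_y)^\top \Sigma_{yy}^{-1}(y-\mu_y)
 + \tfrac12 \log\det\Sigma_{yy} + \tfrac{n}{2}\log 2\pi .
\end{aligned}
```

The second line shows why conditioning never increases the variance: the subtracted term $\Sigma_{\xi y}\Sigma_{yy}^{-1}\Sigma_{y\xi}$ is positive semi-definite.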

3. The Single-Curve Vasiček Interest Rate Model

As a first step, we calibrate the single-curve Vasiček interest rate model following Beleza Sousa et al. (2012). To begin with, we want to mention the important difference in mathematical finance between the objective measure $\mathbb{P}$ and the risk-neutral measure $\mathbb{Q}$. The statistical propagation of all stochastic processes takes place under $\mathbb{P}$. Arbitrage-free pricing means computing prices for options; of course, the prices depend on the driving stochastic factors. The fundamental theorem of asset pricing now yields that arbitrage-free prices of traded assets can be computed by taking expectations of the discounted pay-offs under a risk-neutral measure $\mathbb{Q}$. For a calibration, the risk-neutral measure has to be fitted to observed option prices, which is our main target.

In this sense, we consider zero-coupon bond prices under the risk-neutral measure $\mathbb{Q}$. The Vasiček model is a single-factor model driven by the short rate $r = (r_t)_{t \ge 0}$, which is given by the solution of the stochastic differential equation

$$dr_t = \kappa(\theta - r_t)\,dt + \sigma\,dW_t, \qquad t \ge 0, \tag{4}$$

with initial value $r_0$. Here, W is a $\mathbb{Q}$-Brownian motion and $\kappa, \theta, \sigma$ are positive constants. For a positive $\kappa$, the process r converges to the long-term mean $\theta$.
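The mean-reverting behaviour described above can be illustrated with a short simulation. The following is a minimal sketch that uses the exact Gaussian transition of the Ornstein–Uhlenbeck process on an equidistant grid; the function name `simulate_vasicek`, the argument names, and all numerical values are hypothetical choices for illustration and are not the parameter set used in this paper.

```python
import numpy as np


def simulate_vasicek(r0, kappa, theta, sigma, n_steps, dt, rng):
    """Simulate one short-rate path using the exact one-step Gaussian transition."""
    decay = np.exp(-kappa * dt)
    # Standard deviation of r_{t+dt} given r_t.
    step_std = sigma * np.sqrt((1.0 - decay**2) / (2.0 * kappa))
    r = np.empty(n_steps + 1)
    r[0] = r0
    for i in range(n_steps):
        r[i + 1] = theta + (r[i] - theta) * decay + step_std * rng.standard_normal()
    return r


rng = np.random.default_rng(0)
# Hypothetical parameter values, chosen only for illustration.
path = simulate_vasicek(r0=0.5, kappa=2.0, theta=0.1, sigma=0.02,
                        n_steps=250, dt=1 / 250, rng=rng)
```

Setting `sigma=0.0` in this sketch reduces the path to the deterministic pull towards `theta`, which is a convenient sanity check.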

The price process of the zero-coupon bond price with maturity T is denoted by with . The Vasiček model is an affine bond price model, which implies that bond prices take an exponential affine form, i.e.,

Here, the functions are given by

We recall that the solution of the stochastic differential Equation (4) is given by (cf. Brigo and Mercurio (2001, chp. 4.2)),

Together with Equation (5), this implies that the zero coupon bond prices are log-normally distributed. To apply the Gaussian process regression, we consider in the sequel log-bond prices for ,

The corresponding mean function is given by

and the covariance function is given by

An observation now consists of a vector of log-bond prices with additional Gaussian i.i.d. noise with variance . Consequently, with

We denote the vector of hyper-parameters by $\Theta$ and aim at finding the most reasonable values of the hyper-parameters by minimizing the negative log marginal likelihood defined in Equation (1) with the underlying mean and covariance functions from Equation (9).
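For orientation, the objects entering the likelihood can be written out under the standard textbook parametrization of the Vasiček model (cf. Brigo and Mercurio (2001)). This is a sketch under stated assumptions: the symbols $\kappa$, $\theta$, $\sigma$, $A$, $B$ and the sign convention of the exponent are assumed here and may differ from those in Equations (5) to (8).

```latex
\begin{aligned}
P(t,T) &= \exp\bigl(-A(T-t) - B(T-t)\, r_t\bigr), \qquad
B(\tau) = \frac{1-e^{-\kappa\tau}}{\kappa}, \\
A(\tau) &= \Bigl(\theta - \frac{\sigma^2}{2\kappa^2}\Bigr)\bigl(\tau - B(\tau)\bigr)
 + \frac{\sigma^2}{4\kappa}\, B(\tau)^2, \\
\mathbb{E}[r_t] &= r_0 e^{-\kappa t} + \theta\,(1-e^{-\kappa t}), \qquad
\operatorname{Cov}(r_s, r_t) = \frac{\sigma^2}{2\kappa}\, e^{-\kappa(s+t)}
 \bigl(e^{2\kappa (s\wedge t)} - 1\bigr), \\
\mu(t) &= -A(T-t) - B(T-t)\,\mathbb{E}[r_t], \qquad
c(s,t) = B(T-s)\,B(T-t)\,\operatorname{Cov}(r_s, r_t).
\end{aligned}
```

Since the log-bond price is an affine function of the Gaussian short rate, its mean $\mu$ and covariance $c$ follow directly from the moments of $r$.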

Remark 1.

[Extending the observation] Note that, in the set-up considered here, the driving single factor process r is considered not observable at ; compare Equation (9) which depends on only (and on Θ of course). Such an approach may be appropriate for short time intervals. The propagation of to the future times is solely based on the risk-neutral evolution under the risk-neutral measure via μ and c.

If, on the contrary, updating of r should be incorporated, the situation gets more complicated since the evolution of r (taking place under the statistical measure ) needs to be taken into account. One can pragmatically proceed as follows: assume that at each time point r is observable. We neglect the information contained in this observation about the hyper-parameters Θ. Then, the formulas need to be modified only slightly by using conditional expressions. For example, conditional on for equals

and, if , this simplifies even further.

In a similar spirit, one may extend the observation by adding bond prices with different maturities or, additionally, derivatives. A classical calibration example would be the fitting of the hyper-parameters to the observation of bond prices and derivatives, both with different maturities, at a fixed time . The Gaussian process regression hence also provides a suitable approach for this task. We refer to De Spiegeleer et al. (2018) for an extensive calibration example in this regard.

We refer to Rasmussen and Williams (2006) for other approaches to model selection such as cross validation or alignment. For our application purposes, maximizing the log-marginal likelihood is a good choice since we already have information about the choice of covariance structure, and it only remains to optimize the hyper-parameters (cf. Fischer et al. (2016)).

3.1. Prediction with Gaussian Process Regression

The prediction of new log-bond prices given some training data is of interest in the context of the risk management of portfolios and options on zero-coupon bonds and other interest rate derivatives. The application to pricing can be done along the lines of Dümbgen and Rogers (2014), which we do not pursue further here. Once the calibrated parameters have been found, we can use them to make predictions for new input data.

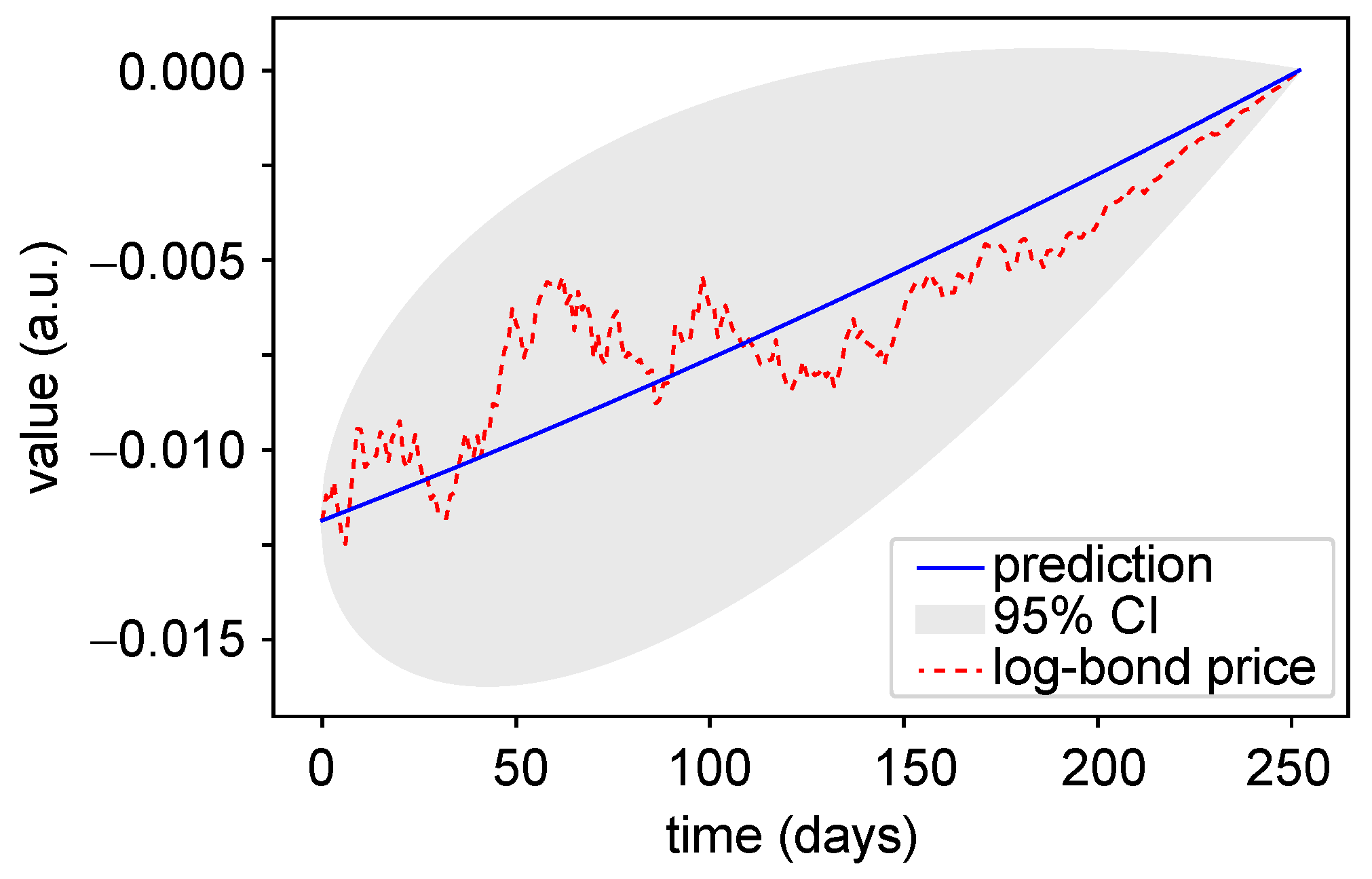

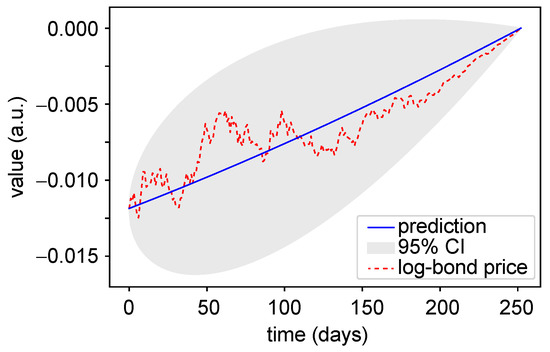

For the dynamic prediction of log-bond prices, we seek a function that is able to predict new output values given some new input values, initially based on a set of training data. For this purpose, we specify in a first step the prior distribution, expressing our prior beliefs over the function. In Figure 1, we plot for the sake of illustration one simulated log-bond price and an arbitrarily chosen prior, expressing our beliefs. This could correspond to the log-prices of a one-year maturity bond, plotted over 252 days. The blue line constitutes the prior mean and the shaded area spans roughly two prior standard deviations on either side of it, corresponding to the 95% confidence interval (CI). Without observing any training data, we can neither narrow the CI of our prior nor enhance our prediction.

Figure 1.

Illustration of a Gaussian process prior. Our task is to predict the unobserved log-bond-prices (red dotted line) with maturity . The blue line represents the prior mean and the shaded area represents the 95% confidence interval. Note that the initial value at time 0 is known, as well as the final value , implying .

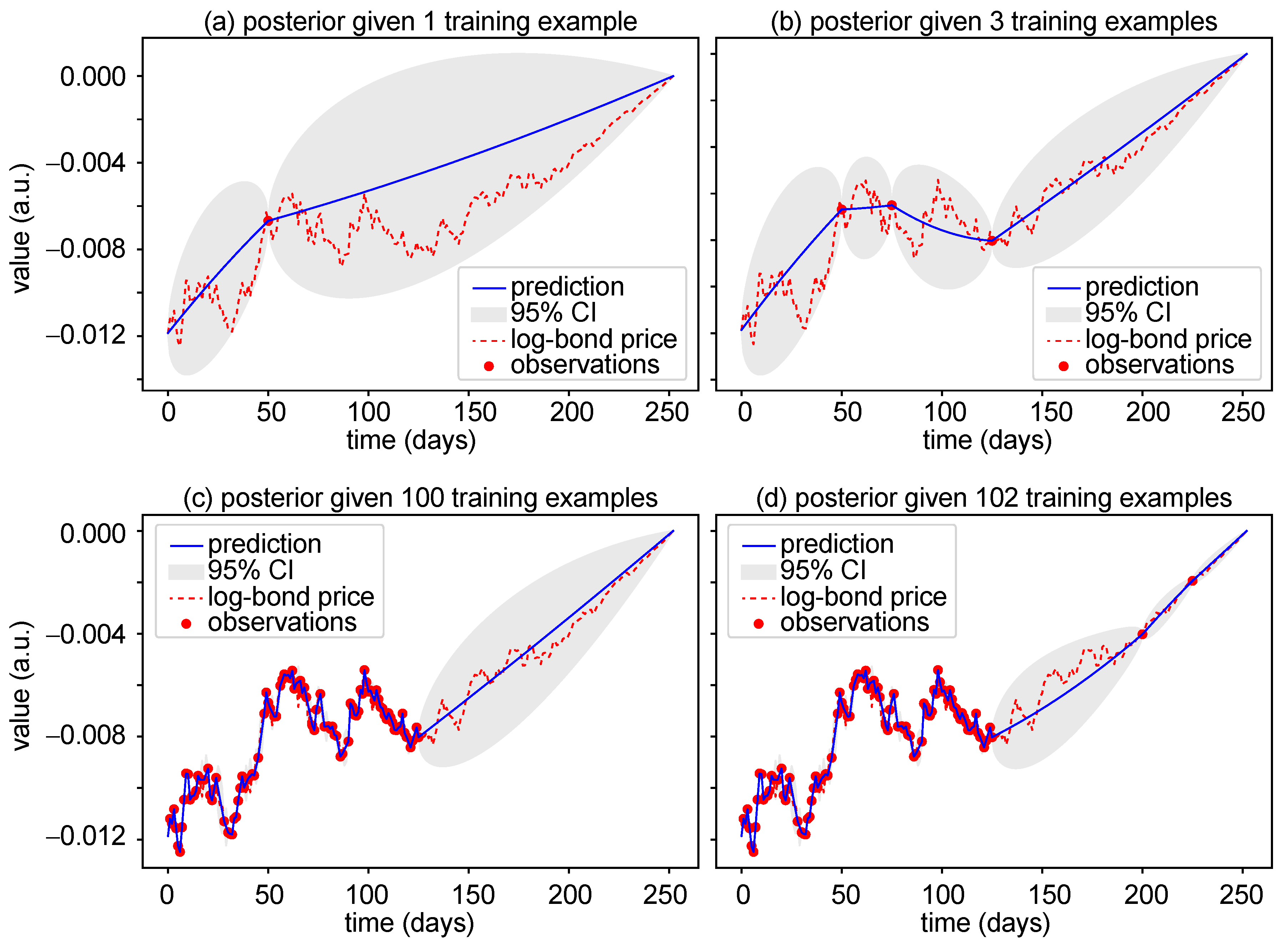

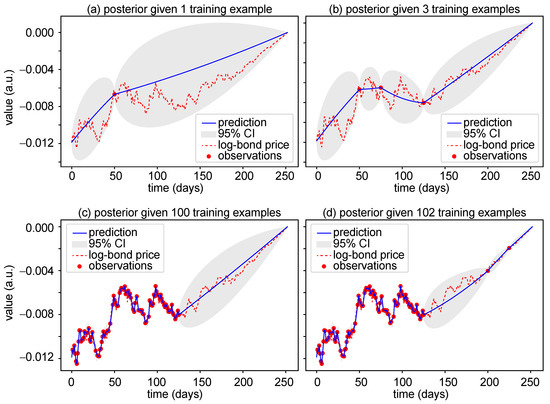

The next step is to incorporate a set of training data, to enhance our prediction. The Gaussian process regression has a quite fascinating property in this regard: consider an observation at the time point t, . The prediction for is of course itself—and hence perfect. The prediction of , be it for (which is often called smoothing) or the prediction for (called filtering) is normally distributed, but, according to Equation (3), has a smaller variance compared to our initial prediction without the observation of . Increasing the number of observations to, e.g., improves the prediction dramatically. We illustrate this property in Figure 2. Depending on the location of s relative to , the prediction can become very exact (bottom right-for ) and may still have a significant error (e.g., for s around 180).

Figure 2.

Illustration of Gaussian process posterior distributions, i.e., the prior conditioned on 1, 3, 100, and 102 observed training examples in (a–d), respectively. We want to predict log-bond-prices (red dotted line). The red dots constitute observations of the log-bond prices. The blue line denotes the posterior mean, which we take as prediction. The shaded areas represent the 95% CIs.

We now formalize the question precisely: given some training data we aim at predicting the vector .

The joint distribution of y and can directly be obtained from the mean and covariance functions in Equations (7) and (8): since they are jointly normally distributed, we obtain that

Recall that and are already specified in Equation (9). Analogously, we obtain that

The posterior distribution is obtained by using Equation (3): conditional on the training data y, we obtain that

where

and

Given some training data, Equation (10) now allows us to calculate the posterior distribution, i.e., the conditional distribution of the predictions given the observation y, and to make predictions, derive confidence intervals, etc.
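The prediction step can be sketched in a few lines of NumPy. The function `gp_posterior` and its argument names are hypothetical; the toy check uses a zero mean and a Brownian-motion kernel min(s, t) purely as a stand-in, not the Vasiček mean and covariance functions of this section.

```python
import numpy as np


def gp_posterior(mu_train, mu_test, K_train, K_cross, K_test, y, noise_var=0.0):
    """Condition a Gaussian prior on observations y.

    K_cross has shape (n_train, n_test) and holds Cov(y_train, y_test).
    """
    n = len(y)
    # Cholesky factor of the (noisy) training covariance; tiny jitter for stability.
    L = np.linalg.cholesky(K_train + (noise_var + 1e-12) * np.eye(n))
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y - mu_train))
    V = np.linalg.solve(L, K_cross)
    post_mean = mu_test + K_cross.T @ alpha
    post_cov = K_test - V.T @ V
    return post_mean, post_cov


# Toy check with a Brownian-motion kernel (assumption for illustration only).
def kernel(s, t):
    return np.minimum.outer(s, t)


t_train, t_test = np.array([0.25, 0.5]), np.array([0.5, 0.75])
y_train = np.array([0.1, -0.2])
post_mean, post_cov = gp_posterior(
    np.zeros(2), np.zeros(2),
    kernel(t_train, t_train), kernel(t_train, t_test), kernel(t_test, t_test),
    y_train,
)
# Half-width of the pointwise 95% interval around the posterior mean.
half_width = 1.96 * np.sqrt(np.clip(np.diag(post_cov), 0.0, None))
```

In the noise-free toy case the prediction at an observed time reproduces the observation with vanishing variance, while the interval widens for times further away from the data.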

The posterior distributions, i.e., the prior conditioned on training data consisting of 1, 3, 100, and 102 observations, respectively, are shown in Figure 2. The red dotted line represents a simulated trajectory of the log-bond price, while the red dots constitute the observed training data. The blue line is the posterior mean, which we take as our prediction, and the shaded areas denote the 95% confidence interval (CI). We observe that the more training data we have, the more accurate our prediction becomes. While in Figure 2a the CI is quite large, two additional training points narrow the CI down significantly (see Figure 2b). Of course, the observation times play an important role in this: if the additional observations were close to the first one, the additional gain in knowledge would most likely be small, while, in Figure 2b, the observation times are nicely spread. Consequently, if one is able to choose the observation times, one can apply results from optimal experimental design to achieve a highly efficient reduction in the prediction variance.

In most practical cases, the observation times cannot be chosen freely and the observations simply arrive sequentially, as we illustrate in Figure 2c,d: here, we aim at predicting log-bond prices of a one-year maturity bond which we have observed daily until day . Figure 2c describes the situation in which we do not have any information about future prices of the one-year maturity bond, i.e., the second half of the one year. Figure 2d depicts the situation in which we assume to know two log-bond prices or option strikes on zero-coupon bonds in the future, which enhances the prediction and narrows the CI down.

3.2. Performance Measures

To monitor the performance of the calibration or the prediction, one typically splits the observed dataset into two disjoint sets. The first set is called training set and is used to fit the model. The second is the validation set and used as a proxy for the generalization error. Given the number of observed data points and number of parameters to optimize for our simulation task, we recommend splitting the training and validation test set in a ratio. The quality of the predictions can be assessed with the standardized mean squared error (SMSE) loss and the mean standardized log loss (MSLL).

The SMSE considers the average of the squared residual between the mean prediction and the target of the test set and then standardizes it by the variance of the targets of the test cases.

where denotes the variance of the targets of the test cases. The SMSE is a simple approach and the reader should bear in mind that this assessment of the quality does not incorporate information about the predictive variance. However, as it represents a standard measure of the quality of an estimator, it is useful to consider it for the sake of comparability with other literature.

The MSLL as defined in Rasmussen and Williams (2006) is obtained by averaging the negative log probability of the target under the model over the test set and then standardizing it by subtracting the loss that would be obtained under the trivial model with mean and variance of the training data as in Equation (1),

The MSLL will be around zero for simple methods and negative for better methods. Note that in Equation (11) we omit in the notation the dependence on the parameter set , since now the parameter set is fixed. Moreover, we notice that the MSLL incorporates the predictive mean and the predictive variances unlike the SMSE.
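Both performance measures are straightforward to compute once predictive means and variances are available. The sketch below follows the verbal definitions given above; the function names `smse`, `gaussian_nlp`, and `msll` are hypothetical, and the use of the population variance (`np.var`) is an assumption.

```python
import numpy as np


def smse(y_test, pred_mean):
    """Mean squared residual, standardized by the variance of the test targets."""
    return np.mean((y_test - pred_mean) ** 2) / np.var(y_test)


def gaussian_nlp(y, mean, var):
    """Pointwise negative log density of N(mean, var) evaluated at y."""
    return 0.5 * np.log(2.0 * np.pi * var) + (y - mean) ** 2 / (2.0 * var)


def msll(y_test, pred_mean, pred_var, y_train):
    """Average log loss of the model minus that of the trivial Gaussian model."""
    model_loss = gaussian_nlp(y_test, pred_mean, pred_var)
    trivial_loss = gaussian_nlp(y_test, np.mean(y_train), np.var(y_train))
    return np.mean(model_loss - trivial_loss)


# Hypothetical toy data for illustration.
y_train = np.array([0.0, 1.0, 2.0, 3.0])
y_test = np.array([0.5, 1.5, 2.5])
smse_of_test_mean = smse(y_test, np.full(3, y_test.mean()))
msll_of_trivial_model = msll(y_test, y_train.mean(), y_train.var(), y_train)
```

By construction, predicting the mean of the test targets gives an SMSE of one and the trivial model has an MSLL of exactly zero, which is the baseline against which better methods show negative values.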

In the following section, we simulate log-bond prices in a Vasiček single-curve model and calibrate the underlying parameters of the model. This is performed for one maturity. Since we deal with simulated prices, we consider the noise-free setting and set in Equation (2).

3.3. Calibration Results for the Vasiček Single-Curve Model

We generate 1000 samples of log-bond price time series, each sample consisting of 250 consecutive prices (approximately one year of trading time) via Equations (4) and (5). As parameter set, we fix

For each of the 1000 samples, we seek the optimal choice of hyper-parameters based on the simulated trajectory of 250 log-bond prices. For this purpose, we minimize the negative log marginal likelihood in Equation (1) with the underlying mean and covariance functions in Equations (7) and (8), respectively, by means of two optimization methods: the non-linear conjugate gradient (CG) algorithm and the adaptive moment estimation (Adam) optimization algorithm. The CG algorithm uses the non-linear conjugate gradient method of Polak and Ribiere (1969). For details on the CG algorithm, we refer to Nocedal and Wright (2006). The Adam optimization algorithm is based on adaptive estimates of lower-order moments and is an extension of stochastic gradient descent. For details on the Adam optimization algorithm, we refer to Kingma and Ba (2014).

After a random initialization of the parameters to optimize, the CG optimization and the Adam optimization were performed using the Python libraries SciPy and TensorFlow, respectively. Parallelization of the 1000 independent runs was achieved with the Python library multiprocessing. The outcomes can be summarized as follows.
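A single calibration run with the CG method can be sketched with `scipy.optimize.minimize`. This is not the code used for the experiments: the textbook Vasiček formulas, the log-parametrization that keeps all parameters positive, the fixed starting point (instead of a random initialization), the shorter series of 50 prices, and every numerical value are assumptions made for illustration.

```python
import numpy as np
from scipy.optimize import minimize


def B(tau, kappa):
    return (1.0 - np.exp(-kappa * tau)) / kappa


def A(tau, kappa, theta, sigma):
    b = B(tau, kappa)
    return (theta - sigma**2 / (2 * kappa**2)) * (tau - b) + sigma**2 * b**2 / (4 * kappa)


def mean_fn(t, T, r0, kappa, theta, sigma):
    expected_r = r0 * np.exp(-kappa * t) + theta * (1 - np.exp(-kappa * t))
    return -A(T - t, kappa, theta, sigma) - B(T - t, kappa) * expected_r


def cov_fn(t, T, kappa, sigma):
    s, u = np.meshgrid(t, t, indexing="ij")
    cov_r = sigma**2 / (2 * kappa) * (np.exp(-kappa * np.abs(s - u)) - np.exp(-kappa * (s + u)))
    return B(T - s, kappa) * B(T - u, kappa) * cov_r


def neg_log_marginal_likelihood(log_params, t, y, T):
    with np.errstate(all="ignore"):
        r0, kappa, theta, sigma = np.exp(log_params)
        m = mean_fn(t, T, r0, kappa, theta, sigma)
        K = cov_fn(t, T, kappa, sigma) + 1e-12 * np.eye(len(t))  # small jitter
    if not (np.all(np.isfinite(K)) and np.all(np.isfinite(m))):
        return 1e10  # penalty outside the numerically safe region
    try:
        L = np.linalg.cholesky(K)
    except np.linalg.LinAlgError:
        return 1e10
    z = np.linalg.solve(L, y - m)
    value = 0.5 * z @ z + np.log(np.diag(L)).sum() + 0.5 * len(t) * np.log(2 * np.pi)
    return value if np.isfinite(value) else 1e10


# Simulate one noise-free series of log-bond prices (hypothetical true parameters).
rng = np.random.default_rng(1)
true = dict(r0=0.05, kappa=1.0, theta=0.03, sigma=0.02)
T = 1.0
t = np.linspace(0.02, 0.9, 50)
r, path = true["r0"], []
for h in np.diff(np.concatenate(([0.0], t))):
    decay = np.exp(-true["kappa"] * h)
    std = true["sigma"] * np.sqrt((1 - decay**2) / (2 * true["kappa"]))
    r = true["theta"] + (r - true["theta"]) * decay + std * rng.standard_normal()
    path.append(r)
y = -A(T - t, true["kappa"], true["theta"], true["sigma"]) - B(T - t, true["kappa"]) * np.array(path)

x0 = np.log([0.04, 0.8, 0.04, 0.03])  # fixed start for reproducibility
result = minimize(neg_log_marginal_likelihood, x0, args=(t, y, T), method="CG")
estimate = dict(zip(["r0", "kappa", "theta", "sigma"], np.exp(result.x)))
```

Because no analytic gradient is passed, SciPy approximates it by finite differences; supplying the gradient would likely reduce the number of likelihood evaluations per run.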

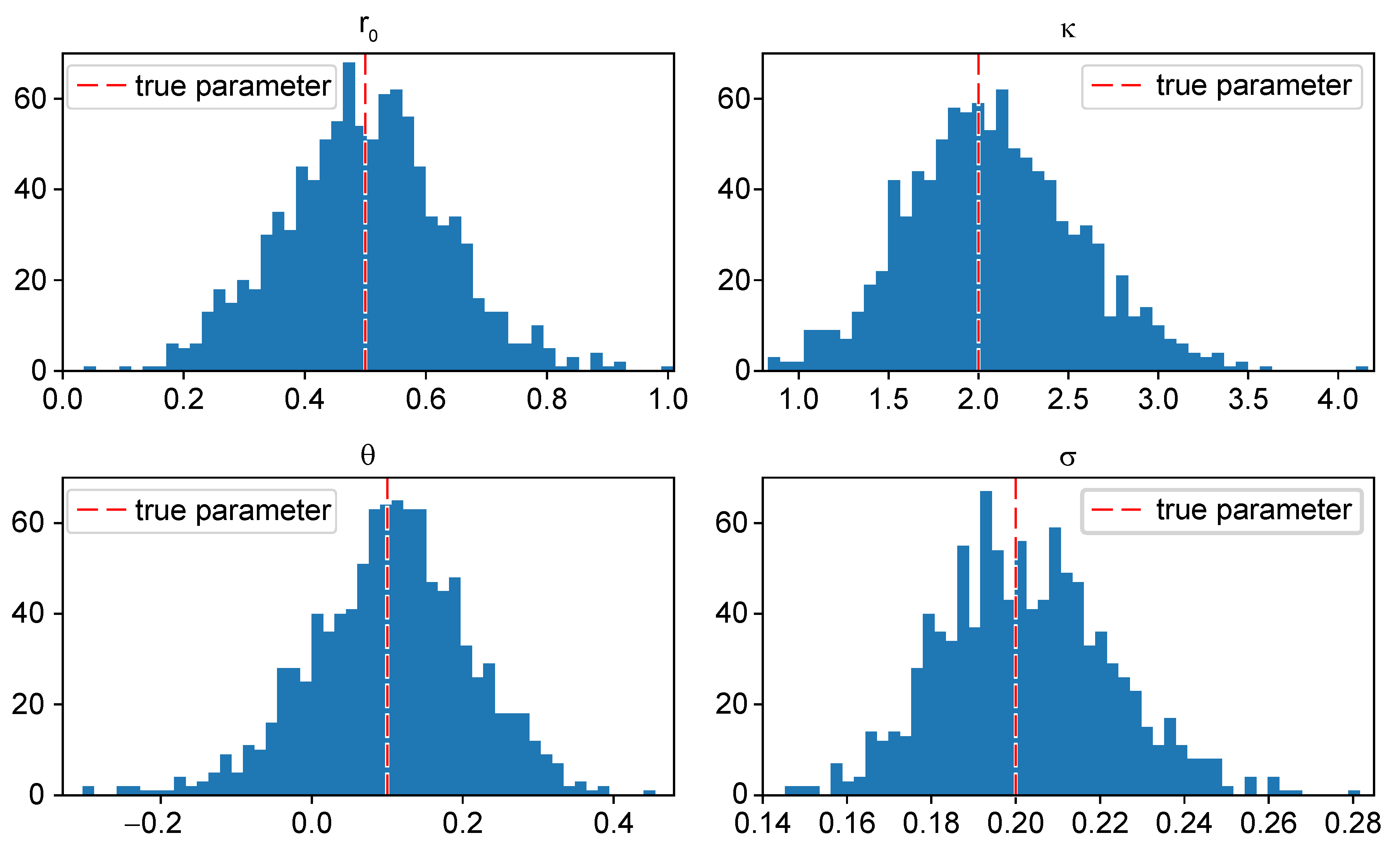

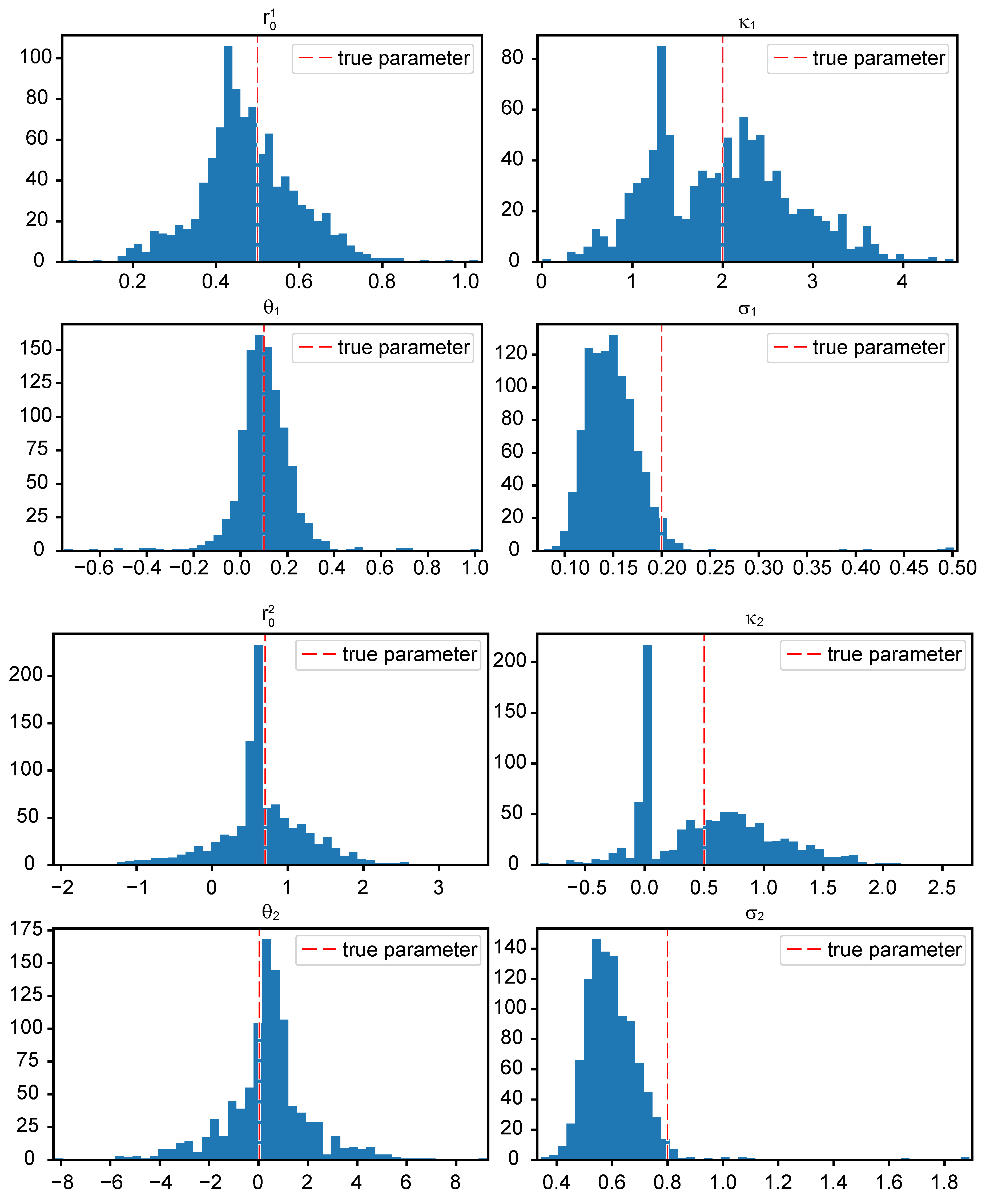

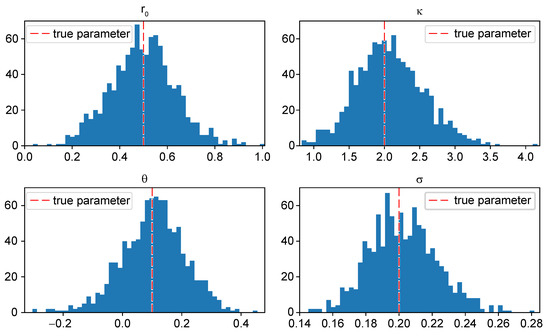

- The results of the calibration via the conjugate gradient optimization algorithm are very satisfying. In Figure 3, the four learned parameters from 1000 simulated log-bond price series are plotted in 50-bin histograms. The red dashed lines in each sub-plot indicate the true model parameters.

Figure 3. Histogram of the learned parameters obtained with the CG optimizer. Total simulations: 1000.

The mean and standard deviation of the learned parameters are summarized in Table 1. We observe that the mean of the learned parameters comes very close to the true parameters, while the standard deviation is reasonable.


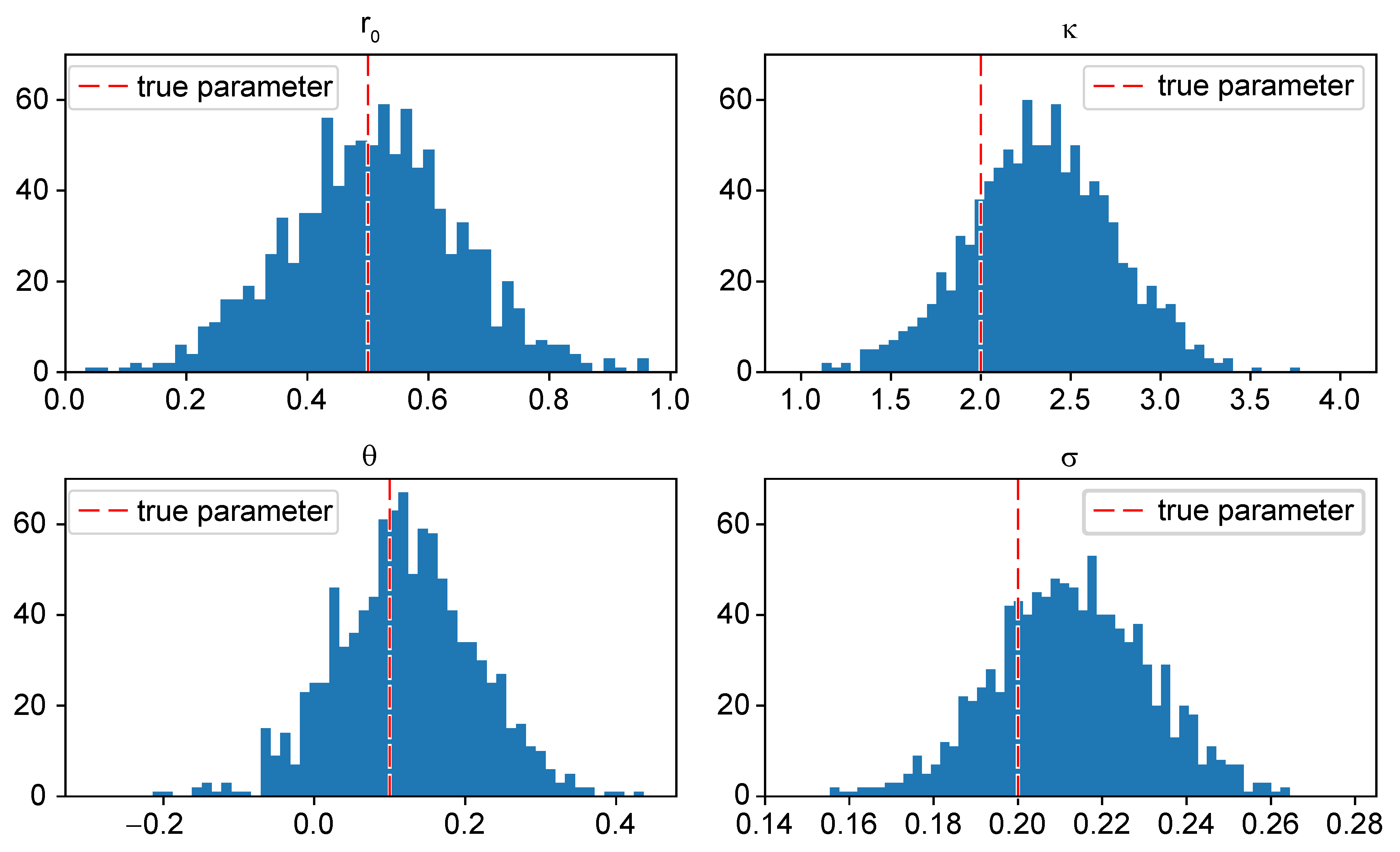

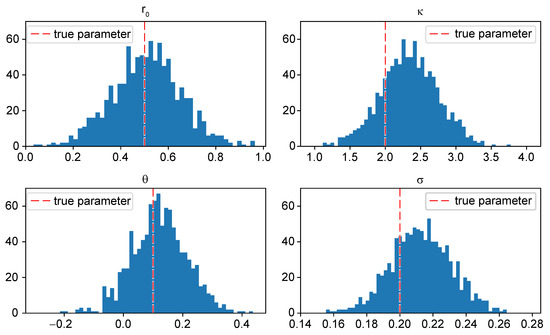

Table 1. Single curve calibration results with mean and standard deviations (StDev) of the learned parameters (params.) of 1000 simulated log-bond prices as well as the true Vasiček parameters.

- The results of the calibration via the Adam algorithm are satisfying, even though we note a shift in the mean reversion parameter and in the volatility parameter. The four learned parameters from 1000 simulated log-bond price series are plotted in 50-bin histograms in Figure 4 and summarized with their mean and standard deviation in Table 1. The Adam algorithm adapts the effective step size of each parameter during training, but one still needs to specify a suitable base learning rate. If the learning rate is small, training is more reliable, but the optimization time to find a minimum can increase rapidly. If the learning rate is too large, the optimizer can overshoot a minimum. We tried learning rates of 0.0001, 0.001, 0.01, 0.05, and 0.1. Finally, we decided in favor of a learning rate of 0.05 and performed the training over 700 epochs to achieve a suitable trade-off between accuracy and computation time. We expect the results to improve slightly with more epochs, at the expense of a longer training time.

Figure 4. Histogram of the learned parameters obtained with the Adam optimizer. The learning rate is 0.05 and training is performed over 700 epochs. Total simulations: 1000.


We conclude that, in the single-curve Vasiček specification, both optimization algorithms provide reliable results. The optimization by means of the CG algorithm outperforms the Adam algorithm (see Table 1). However, there is hope that the results obtained by the Adam algorithm can be improved by increasing the number of training epochs or by choosing a smaller learning rate in addition to more training epochs.
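To make the role of the learning rate and the number of epochs concrete, the Adam update rule of Kingma and Ba (2014) can be written out by hand. The sketch below is a plain NumPy illustration and not the TensorFlow optimizer used for the experiments; the function `adam_minimize`, the toy quadratic objective, and the target vector are hypothetical, while the learning rate 0.05 and the 700 steps mirror the values quoted above.

```python
import numpy as np


def adam_minimize(grad, x0, learning_rate=0.05, n_steps=700,
                  beta1=0.9, beta2=0.999, eps=1e-8):
    """Plain Adam iteration for a deterministic gradient function."""
    x = np.asarray(x0, dtype=float).copy()
    m = np.zeros_like(x)  # running first-moment estimate of the gradient
    v = np.zeros_like(x)  # running second-moment estimate of the gradient
    for step in range(1, n_steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g**2
        m_hat = m / (1 - beta1**step)  # bias corrections for the zero start
        v_hat = v / (1 - beta2**step)
        x -= learning_rate * m_hat / (np.sqrt(v_hat) + eps)
    return x


# Toy objective f(x) = ||x - target||^2 with a hypothetical target vector.
target = np.array([0.5, 0.1, 0.2, 0.3])
x_opt = adam_minimize(lambda x: 2.0 * (x - target), x0=np.zeros(4))
```

In the calibration setting, `grad` would be the gradient of the negative log marginal likelihood with respect to the hyper-parameters, which TensorFlow obtains by automatic differentiation.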

4. Multi-Curve Vasiček Interest Rate Model

The financial crisis of 2007–2009 triggered many changes in financial markets. In the post-crisis interest rate markets, multiple yield curves are standard: due to credit and liquidity issues, we obtain a different curve for each tenor (see, for example, Grbac and Runggaldier (2015) and the rich literature referenced therein).

The detailed mechanism in the multi-curve markets is quite involved, and we refer to Fontana et al. (2020) for a precise description. Intuitively, traded instruments are forward-rate agreements which exchange a fixed premium against a floating rate over a future time interval $[T, T+\delta]$. Most notably, in addition to the parameter maturity, a second parameter appears: the tenor $\delta$. While before the crisis the curves were independent of $\delta$, after the crisis the tenor can no longer be neglected. The authors of Fontana et al. (2020) showed that forward-rate agreements can be decomposed into $\delta$-bonds, which we denote by $P(t,T,\delta)$. If $\delta = 0$, we write $P(t,T) = P(t,T,0)$, which is the single-curve case considered previously.

In the multi-curve Vasiček interest rate model, we consider two maturity time points , hence . We calibrate zero-coupon bond prices $P(t,T)$ and tenor-$\delta$-bond prices $P(t,T,\delta)$ under the risk-neutral measure $\mathbb{Q}$ at time t for the maturity T.

The calibration in the multi-curve framework corresponds to the situation in which we are given several datasets of different bond prices, all of which share the same hyper-parameters. We call this situation multi-task learning and note that its meaning here differs from that in Rasmussen and Williams (2006, sct. 5.4.3), where the corresponding log-marginal likelihoods of the individual problems are summed up and the result is then optimized with respect to the hyper-parameters. As our multi-curve example exhibits correlation, the latter approach cannot be applied.

We utilize a two-dimensional driving factor process , generalizing Equation (4). Modeling r as a two-dimensional Ornstein–Uhlenbeck process can be done as follows: let r be the unique solution of the SDE

where are two standard -Brownian motions with correlation . The zero-coupon bond prices only depend on and we utilize the single-curve affine framework:

The functions satisfy again the Riccati equations. They are obtained from Equation (5) by replacing by , etc. As in Equations (7) and (8), we obtain that zero-coupon log-bond prices are normal with mean function

For two given time points , the covariance function is

The next step is to develop the prices for the tenor- bonds. We assume that, while for the tenor 0 the interest rate is , the interest rate for tenor is . This implies that Since r is an affine process, this expectation can be computed explicitly. Using the affine machinery, we obtain that

where the function satisfies the Riccati equations. This implies that

Proposition 2.

Under the above assumptions, , is a Gaussian process with mean function

and with covariance function

Note that and c in the above proposition do not depend on . We rather use as index to distinguish mean and covariance functions for the zero-coupon bonds and the -tenor bonds.

The proof is relegated to Appendix A. The next step is to phrase observation and prediction in the Gaussian setting explicitly. We denote where and —of course, at each time point we observe two bond prices now: and . The vector y is normally distributed, with

We calculate these parameters explicitly and, analogously to the single-curve set-up, the calibration methodology with Gaussian process regression follows.
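The block structure of the joint covariance can be illustrated at the level of the driving factors. The sketch below assumes that each component of r follows a Vasiček-type dynamics with its own mean-reversion speed and volatility and that the two Brownian motions have correlation `rho`; the function names and all numerical values are hypothetical. It returns the covariance of the stacked factor values only. The covariance of the stacked log-bond prices would follow by applying the deterministic affine loadings of the model, which are not reproduced here.

```python
import numpy as np


def ou_cross_cov(s, t, kappa_a, kappa_b, sigma_a, sigma_b, rho):
    """Cov(r^a_s, r^b_t) for two Ornstein-Uhlenbeck factors started at fixed values.

    rho is the correlation of the driving Brownian motions
    (use rho = 1 for the covariance of a factor with itself).
    """
    S, T_ = np.meshgrid(s, t, indexing="ij")
    k = kappa_a + kappa_b
    overlap = np.expm1(k * np.minimum(S, T_)) / k  # integral over the common past
    return rho * sigma_a * sigma_b * np.exp(-kappa_a * S - kappa_b * T_) * overlap


def joint_factor_cov(times, kappa, sigma, rho):
    """Covariance matrix of the stacked vector (r^1 at all times, r^2 at all times)."""
    K11 = ou_cross_cov(times, times, kappa[0], kappa[0], sigma[0], sigma[0], 1.0)
    K22 = ou_cross_cov(times, times, kappa[1], kappa[1], sigma[1], sigma[1], 1.0)
    K12 = ou_cross_cov(times, times, kappa[0], kappa[1], sigma[0], sigma[1], rho)
    return np.block([[K11, K12], [K12.T, K22]])


# Hypothetical values; 125 time points per curve give a 250 x 250 matrix.
times = np.linspace(0.008, 1.0, 125)
K = joint_factor_cov(times, kappa=(1.0, 0.5), sigma=(0.02, 0.03), rho=0.5)
```

The off-diagonal block is what prevents the likelihood from factorizing into a sum over the two curves, in line with the remark on multi-task learning above.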

To begin with, note that coincides with from Equation (9), when we replace the parameters by , respectively. The next step for computing the covariance matrix is to compute

and, analogously,

in a similar way to , we obtain .

In the multi-curve calibration, we aim at minimizing the negative log marginal likelihood in Equation (1) with the corresponding mean function and covariance matrix in Equation (18).

Calibration Results

For the calibration in the multi-curve setting, we have to consider log-bond prices and the logarithm of tenor-$\delta$ bond prices. To be specific, we generate 1000 sequences of log-bond prices and 1000 sequences of log tenor-$\delta$ bond prices with maturity 1 by means of Equations (13), (16), and (17). We choose as parameters

For each sequence, we generate 125 training data points of log-bond prices and 125 training data points of log tenor-$\delta$ bond prices. This corresponds to a computational effort similar to the single-curve specification, since the underlying covariance matrix will be $(250 \times 250)$-dimensional. Based on the simulated prices, we aim at finding the optimal choice of hyper-parameters

For this purpose, we apply the CG optimization algorithm and the Adam optimization algorithm. After a random initialization of the parameters to optimize, we perform the calibration procedure using SciPy and TensorFlow for the CG and Adam optimizer, respectively. Parallelization of the 1000 independent runs is achieved with the library multiprocessing. The outcomes are as follows.

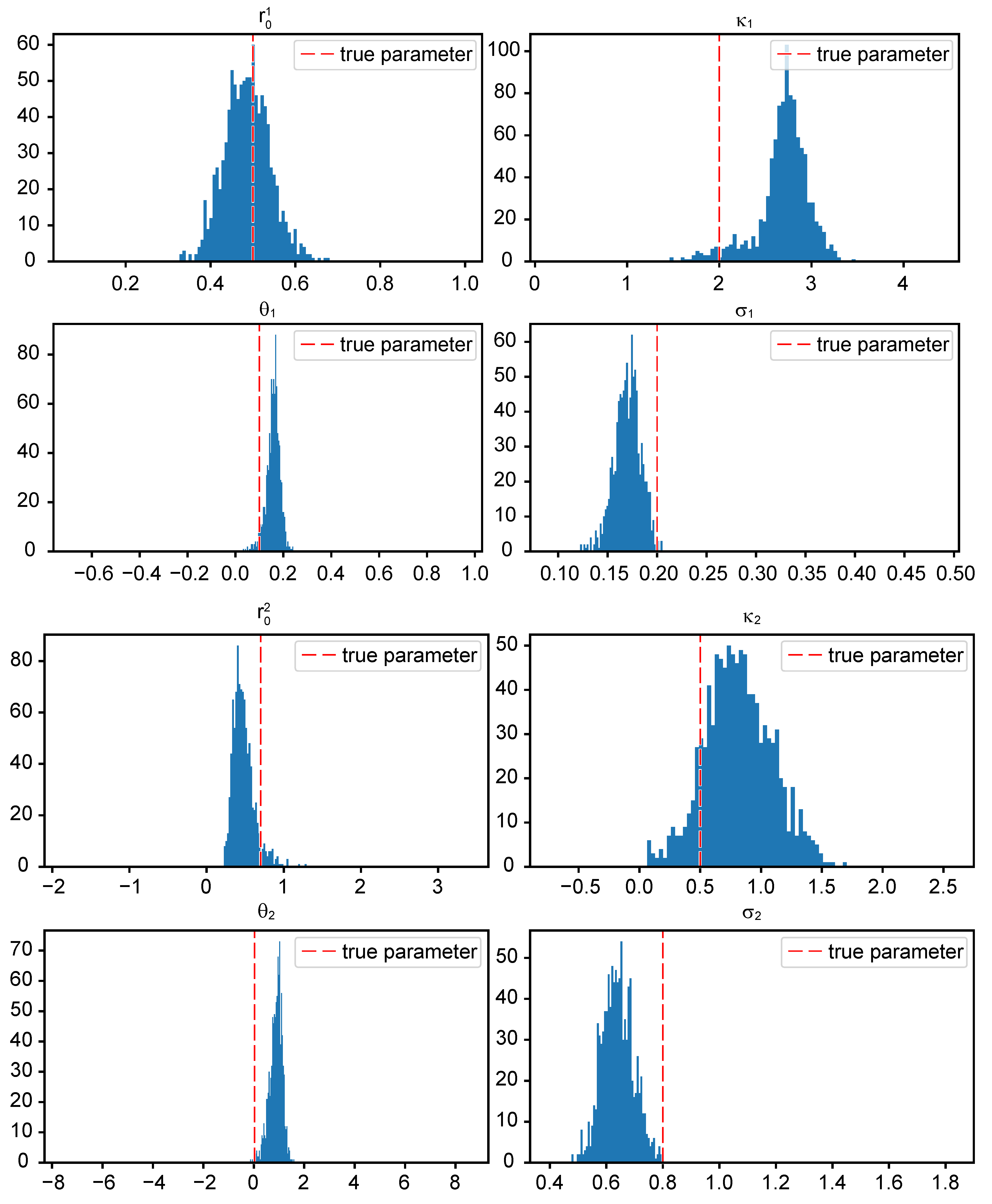

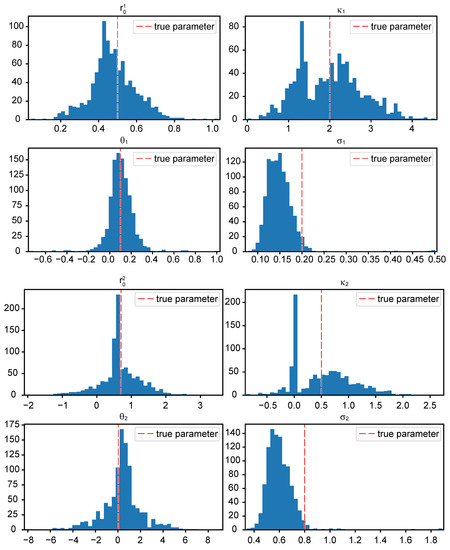

- Considering the results of the calibration by means of the CG algorithm, we note several facts. In this regard, the mean and the standard deviation of the calibrated parameters can be found in Table 2. Figure 5 shows the learned parameters of the processes and in 50-bin histograms for 1000 simulations. The red dashed line in each of the sub-plots indicates the true model parameter value. First, except for the long term mean, the volatility for is higher than the one for . This implies more difficulties in the estimation of the parameters for , which is clearly visible in the results. For , we are able to estimate the parameters well (in the mean), with the most difficulty in the estimation of which shows a high standard deviation. For estimating the parameters of , we face more difficulties, as expected. The standard deviation of is very high—it is known from filtering theory and statistics that the mean is difficult to estimate, which is reflected here. Similarly, it seems difficult to estimate the speed of reversion parameter and we observe a peak around 0.02 in . This might be due to a local minimum, where the optimizer gets stuck.

Table 2. Calibration results with mean and standard deviations (StDev) of the learned parameters (params.) of 1000 simulated log-bond prices and log tenor-$\delta$ bond prices, as well as the true Vasiček parameters. We note that the CG optimizer yields for every parameter a higher standard deviation than the Adam algorithm. This can in particular be observed for the parameter .


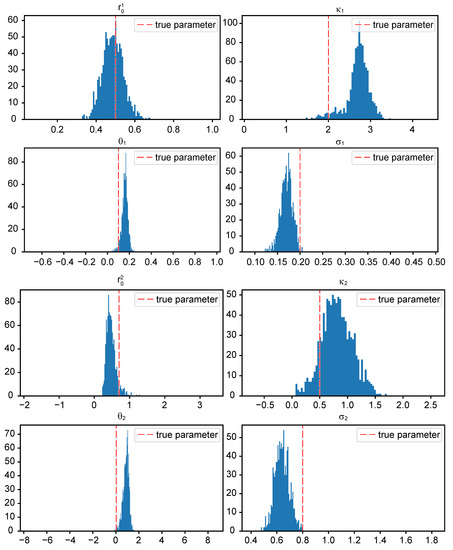

Figure 5. Histogram of the learned parameters with the conjugate gradient (CG) optimizer. Total simulations: 1000. We note that the learned parameters are centered around the true parameter values except for the two volatility parameters, which exhibit a shift. In statistics, the task of estimating the volatility parameters is well known. However, our observations reveal that finding the volatility parameter in the multi-curve Vasiček interest rate model is challenging. Moreover, the range of the parameters, especially for , is very high.

- For the calibration results by means of the Adam algorithm, we note the following. The learned parameters are illustrated in a 50-bin histogram (see Figure 6), and the mean and standard deviation of each parameter are stated in Table 2. After trying learning rates of 0.0001, 0.001, 0.01, 0.05, and 0.1, we decided in favor of the learning rate 0.05 and chose 750 training epochs. While most mean values of the learned parameters are not as close to the true values as those obtained with the CG algorithm, the standard deviation of the learned parameters is smaller than for the CG algorithm. In particular, comparing the values of in Figure 5 and Figure 6, we observe the different range of calibrated values.

Figure 6. Histogram of the learned parameters obtained with the adaptive moment estimation (Adam) optimizer. The learning rate is 0.05 and training is performed over 750 epochs. Total simulations: 1000. We note that the range of learned parameters is narrower compared to the CG optimizer, resulting in a lower standard deviation (cf. Table 2).

5. Conclusions

Based on the simulation results, we can state that the calibration in the multi-curve framework constitutes a more challenging task compared to the calibration in the single-curve framework, since we need to find eight parameters instead of four parameters. In particular, to keep the computational complexity similar to the single-curve framework, we chose 125 training data time points, which results in a covariance matrix of the same dimension as in the single-curve setting. We are confident that doubling the training input data points would improve the results at the expense of computation time.

It would also be interesting to analyze how other estimators perform in comparison to the shown results, for example classical maximum-likelihood estimators. Since it is already known that it is difficult to estimate the variance with ML-techniques, it could also be very interesting to mix classical approaches with ML approaches, which we leave for future work.

A first step to extend the presented approach could consist in studying further optimization techniques such as the adaptive gradient algorithm (AdaGrad) or its extension Adadelta, the root mean square propagation (RMSProp), and the Nesterov accelerated gradient (NAG). One could further investigate the prediction of log-bond prices with the learned parameters in order to obtain decision-making support for the purchase of options on zero-coupon bonds and use the underlying strike prices as worst-case scenarios. A next step could be the development of further short rate models such as the Cox–Ingersoll–Ross or the Hull–White extended Vasiček framework. An interesting application is the calibration of interest rate models including jumps. Beyond that, the study of the calibration of interest rate markets by means of Bayesian neural networks seems very promising and remains to be addressed in future work.

The Gaussian process regression approach naturally comes with a posterior distribution, which contains much more information than the simple prediction (which contains only the mean). It seems highly interesting to utilize this for assessing the model risk of the calibration and to compare it to the non-linear approaches recently developed in Fadina et al. (2019) and in Hölzermann (2020).
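The difference between the point prediction and the full posterior can be illustrated with a short sketch of standard Gaussian process regression (cf. Rasmussen and Williams 2006). The squared-exponential kernel, the toy data, and all function and variable names below are assumptions chosen for illustration; they are not the model-specific covariance functions used in this paper.

```python
import numpy as np


def squared_exponential(x1, x2, length_scale=1.0, signal_var=1.0):
    """Generic kernel, used here only as a placeholder covariance function."""
    diff = x1[:, None] - x2[None, :]
    return signal_var * np.exp(-0.5 * (diff / length_scale) ** 2)


def gp_posterior(x_train, y_train, x_test, kernel, noise_var=1e-6):
    """Posterior mean and covariance of a zero-mean GP at the points x_test."""
    k_tt = kernel(x_train, x_train) + noise_var * np.eye(len(x_train))
    k_ts = kernel(x_train, x_test)
    k_ss = kernel(x_test, x_test)
    chol = np.linalg.cholesky(k_tt)
    # alpha = K^{-1} y, computed through the Cholesky factor for stability.
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))
    mean = k_ts.T @ alpha
    v = np.linalg.solve(chol, k_ts)
    cov = k_ss - v.T @ v
    return mean, cov


x_train = np.array([0.0, 1.0, 2.0, 3.0])
y_train = np.sin(x_train)
x_test = np.array([1.0, 1.5, 6.0])  # at, between, and far from training points

mean, cov = gp_posterior(x_train, y_train, x_test, squared_exponential)
std = np.sqrt(np.clip(np.diag(cov), 0.0, None))
print(mean, std)
```

The posterior standard deviation is close to zero at a training point and grows with the distance to the data; it is this additional information that could be exploited when assessing model risk.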

Summarizing, the calibration of multiple yield curves is a difficult task and we hope to stimulate future research with this initial study showing promising results on the one side and many future challenges on the other side.

Author Contributions

Conceptualization and methodology, S.G. and T.S.; computation and data examples, S.G.; writing—original draft preparation, S.G.; and writing—review and editing, T.S. All authors have read and agreed to the published version of the manuscript.

Funding

Financial support from the German Research Foundation (DFG) within project No. SCHM 2160/9-1 is gratefully acknowledged.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of Proposition 2

First, we calculate the mixed covariance function: for two time points , we obtain that

For the computation of , we applied Jacod and Shiryaev (2003), Theorem I-4.2. Furthermore, note that, for two Brownian motions with correlation , we find a Brownian motion , independent of , such that and that a Brownian motion has independent increments.
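The decomposition of correlated Brownian motions used in this argument can be checked numerically. In the sketch below, all notation is an assumption introduced for illustration: `rho` denotes the correlation, `dw1` the increments of the first Brownian motion, and `z_perp` an independent standard normal driver, so that the increments of the second Brownian motion are built as `rho * dw1 + sqrt(1 - rho**2) * dw_perp`.

```python
import math
import random


def correlated_increments(rho, n_steps, dt, seed=0):
    """Increments of two Brownian motions with instantaneous correlation rho."""
    rng = random.Random(seed)
    scale = math.sqrt(dt)
    dw1, dw2 = [], []
    for _ in range(n_steps):
        z1 = rng.gauss(0.0, 1.0)
        z_perp = rng.gauss(0.0, 1.0)  # independent of z1
        dw1.append(scale * z1)
        dw2.append(scale * (rho * z1 + math.sqrt(1.0 - rho ** 2) * z_perp))
    return dw1, dw2


def sample_correlation(a, b):
    """Empirical Pearson correlation of two equally long sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


rho = 0.7
dt = 1.0 / 250.0
dw1, dw2 = correlated_increments(rho, n_steps=20000, dt=dt)
rho_hat = sample_correlation(dw1, dw2)
var2_hat = sum(x * x for x in dw2) / len(dw2)
print(rho_hat, var2_hat / dt)
```

The empirical correlation should be close to `rho`, and the second sequence of increments should again have variance close to `dt`, as required for a Brownian motion.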

Hence, we obtain that the tenor--bond prices are log-normally distributed with mean function

and with covariance function for

Appendix B. Coding Notes and Concluding Remarks

We add some remarks regarding the coding framework and offer insight into the technical difficulties that arose during the calibration exercise.

In our application, we needed customized covariance matrices. Therefore, we decided against applying one of the Python libraries for the implementation of Gaussian process regression (amongst others, the packages pyGP, pyGPs, scikit-learn, GPy, GPyTorch, and GPflow) due to their limited choice of covariance functions. We aimed at achieving a trade-off between computational speed and accuracy. We performed all simulations in two environments. The first comprises an AMD® Ryzen 2700x CPU equipped with 16 cores and one GeForce GTX 1070 GPU. The second comprises 4 Intel(R) Xeon(R) Gold 6134 CPUs, resulting in 32 cores, and 4 GeForce GTX 1080 GPUs. Since the calibration of the different settings was performed in two environments with different hardware specifications, we do not compare the optimizers regarding time consumption.
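As an illustration of what a customized covariance matrix involves, the following sketch builds a covariance matrix from a model-specific function and evaluates the Gaussian negative log marginal likelihood that a calibration would minimize. It is a simplified stand-in under stated assumptions: it uses the well-known covariance of the Vasiček (Ornstein–Uhlenbeck) short rate with a deterministic starting value, not the covariance functions of the log-bond prices derived in this paper, and the names `kappa`, `theta`, `sigma`, `r0`, and all function names are hypothetical.

```python
import numpy as np


def ou_mean(t, kappa, theta, r0):
    """Mean of the Vasicek short rate started at the deterministic value r0."""
    return r0 * np.exp(-kappa * t) + theta * (1.0 - np.exp(-kappa * t))


def ou_covariance(s, t, kappa, sigma):
    """Cov(r_s, r_t) = sigma^2 / (2 kappa) * (e^{-kappa|t-s|} - e^{-kappa(t+s)})."""
    s = s[:, None]
    t = t[None, :]
    return sigma ** 2 / (2.0 * kappa) * (
        np.exp(-kappa * np.abs(t - s)) - np.exp(-kappa * (t + s))
    )


def negative_log_marginal_likelihood(y, mean, cov, jitter=1e-12):
    """Gaussian negative log-likelihood, evaluated via a Cholesky factorization."""
    n = len(y)
    chol = np.linalg.cholesky(cov + jitter * np.eye(n))
    w = np.linalg.solve(chol, y - mean)  # whitened residuals
    return 0.5 * w @ w + np.sum(np.log(np.diag(chol))) + 0.5 * n * np.log(2.0 * np.pi)


kappa, theta, sigma, r0 = 0.5, 0.03, 0.02, 0.01
times = np.linspace(0.1, 12.5, 125)  # 125 time points, excluding t = 0

mean = ou_mean(times, kappa, theta, r0)
cov = ou_covariance(times, times, kappa, sigma)

# Draw one path from the Gaussian process to serve as synthetic training data.
rng = np.random.default_rng(0)
y = mean + np.linalg.cholesky(cov) @ rng.standard_normal(len(times))

nll_true = negative_log_marginal_likelihood(y, mean, cov)
nll_wrong = negative_log_marginal_likelihood(
    y, mean, ou_covariance(times, times, kappa, 5.0 * sigma)
)
print(nll_true, nll_wrong)
```

The Cholesky factorization dominates the cost and scales cubically in the number of time points, which is one reason why the size of the covariance matrix matters for the overall computation time.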

Utilizing all available resources, comprising CPUs and GPUs, for parallel operations also turned out to be a delicate task. For example, the TensorFlow default distribution is built without extensions such as AVX2 and FMA. Our CPU supports the advanced vector extensions AVX2 and FMA, so we had to build the TensorFlow library from source. The reader should bear in mind that even minor improvements to the most frequently called functions improve the overall performance considerably.

References

- Brigo, D., and F. Mercurio. 2001. Interest Rate Models–Theory and Practice. Berlin: Springer Finance.

- Cuchiero, C., C. Fontana, and A. Gnoatto. 2016. A general HJM framework for multiple yield curve modelling. Finance and Stochastics 20: 267–320.

- Cuchiero, C., C. Fontana, and A. Gnoatto. 2019. Affine multiple yield curve models. Mathematical Finance 29: 1–34.

- De Spiegeleer, J., D. B. Madan, S. Reyners, and W. Schoutens. 2018. Machine learning for quantitative finance: Fast derivative pricing, hedging and fitting. Quantitative Finance 18: 1635–43.

- Dümbgen, M., and C. Rogers. 2014. Estimate nothing. Quantitative Finance 14: 2065–72.

- Eberlein, E., C. Gerhart, and Z. Grbac. 2019. Multiple curve Lévy forward price model allowing for negative interest rates. Mathematical Finance 30: 1–26.

- Fadina, T., A. Neufeld, and T. Schmidt. 2019. Affine processes under parameter uncertainty. Probability Uncertainty and Quantitative Risk 4: 1.

- Filipović, D. 2009. Term Structure Models: A Graduate Course. Berlin: Springer Finance.

- Fischer, B., N. Gorbach, S. Bauer, Y. Bian, and J. M. Buhmann. 2016. Model Selection for Gaussian Process Regression by Approximation Set Coding. arXiv, arXiv:1610.00907.

- Fontana, C., Z. Grbac, S. Gümbel, and T. Schmidt. 2020. Term structure modelling for multiple curves with stochastic discontinuities. Finance and Stochastics 24: 465–511.

- Grbac, Z., A. Papapantoleon, J. Schoenmakers, and D. Skovmand. 2015. Affine libor models with multiple curves: Theory, examples and calibration. SIAM Journal on Financial Mathematics 6: 984–1025.

- Grbac, Z., and W. Runggaldier. 2015. Interest Rate Modeling: Post-Crisis Challenges and Approaches. New York: Springer.

- Henrard, M. 2014. Interest Rate Modelling in the Multi-Curve Framework: Foundations, Evolution and Implementation. London: Palgrave Macmillan UK.

- Hölzermann, J. 2020. Pricing interest rate derivatives under volatility uncertainty. arXiv, arXiv:2003.04606.

- Jacod, J., and A. Shiryaev. 2003. Limit Theorems for Stochastic Processes. Berlin: Springer, vol. 288.

- Keller-Ressel, M., T. Schmidt, and R. Wardenga. 2018. Affine processes beyond stochastic continuity. Annals of Applied Probability 29: 3387–37.

- Kingma, D.P., and J. Ba. 2014. Adam: A method for stochastic optimization. arXiv, arXiv:1412.6980.

- Mercurio, F. 2010. A LIBOR Market Model with Stochastic Basis. In Bloomberg Education and Quantitative Research Paper. Amsterdam: Elsevier, pp. 1–16.

- Nocedal, J., and S.J. Wright. 2006. Conjugate gradient methods. In Numerical Optimization. Berlin: Springer, pp. 101–34.

- Polak, E., and G. Ribiere. 1969. Note sur la convergence de méthodes de directions conjuguées. ESAIM: Mathematical Modelling and Numerical Analysis-Modélisation Mathématique et Analyse Numérique 3: 35–43.

- Rasmussen, C.E., and C.K.I. Williams. 2006. Gaussian Processes for Machine Learning. Cambridge: MIT Press.

- Sousa, J. Beleza, Manuel L. Esquível, and Raquel M. Gaspar. 2012. Machine learning Vasicek model calibration with Gaussian processes. Communications in Statistics: Simulation and Computation 41: 776–86.

- Sousa, J. Beleza, Manuel L. Esquível, and Raquel M. Gaspar. 2014. One factor machine learning Gaussian short rate. Paper presented at the Portuguese Finance Network 2014, Lisbon, Portugal, November 13–14; pp. 2750–70.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).