1. Introduction

The rapid development of society has been accompanied by an increasing demand for energy, and the problem of energy consumption has become increasingly serious. According to the World Energy Outlook 2022 published by the International Energy Agency (IEA), the industrial sector accounts for about 38% of total global energy consumption and 45% of total global CO₂ emissions, so improving energy efficiency in the industrial sector is of great significance for the low-carbon, sustainable development of industry [1]. In China, industrial energy consumption accounts for 67% of total energy consumption, and metal smelting accounts for more than 27% of the energy consumption of the entire manufacturing industry [2]. Non-ferrous metal smelting is a high-emission, high-energy-consumption industry; its decarbonization is key to its sustainable development, and it is one of the key industries in China’s efforts to achieve the 2030 carbon peak target. Non-ferrous metal smelting consumes large amounts of coal, ore, and other natural mineral resources, and energy is one of an enterprise’s most important costs, so energy consumption prediction plays a very important role in tapping the potential for energy saving [3]. In recent years, interest in energy consumption prediction has grown steadily [4]. The research methods in this field can be broadly classified into mechanistic modelling, data-driven modelling, and hybrid modelling (combining mechanistic analysis and data-driven modelling).

Mechanism-based modelling methods, mostly used by domain experts with a wealth of domain knowledge, build a process model from the reaction and operating mechanisms within the target process, using the governing laws involved, such as the laws of chemical reaction, thermodynamics, hydrodynamics, and the conservation of energy and mass [5]. This method is generally complex, but the accuracy of the model is high once it is built correctly. In addition, the calculation process of a mechanistic model is clear, the results have a clear physical meaning, and the model is highly interpretable. K. Liddell et al. [6] conducted simulation experiments on a metal smelting process using IDEAS simulation software. The chemical reactions were modelled through thermodynamic and chemical analyses, and the consumption of water, steam, fuel, and electricity throughout the metallurgical process was estimated on the basis of energy and mass balances. Umit Unver et al. [7] used AMPL software to simulate the overall production of a high steel forging plant and to calculate its minimum production energy consumption. Peng Jin et al. [8] analyzed the energy consumption and carbon emissions of a steel mill with a top gas recycling oxygen blast furnace based on material and energy flows. Hongming Na et al. [9] analyzed the energy consumption and carbon emissions of a typical steel production process, subject to constraints on material parameters, process parameters, and reaction conditions, with maximum energy efficiency as the optimization objective. Wenjing Wei et al. [10] analyzed the primary energy consumption and greenhouse gas emissions of nickel smelting products using a process model based on mass and energy balances. P. Coursol et al. [11] calculated the energy consumption of the smelting process of copper sulfide concentrates using thermochemical modelling and industrial data. Lei Zhang et al. [12] obtained the minimum exergy loss, as well as the corresponding production cost and exergy efficiency, by developing an optimization model based on the material balance, thermodynamic laws, and reaction mechanisms of a steel manufacturing process. However, most of the modelling work carried out by domain experts has addressed only parts of the system and established local relationships between variables. Such models help to a certain extent with qualitative judgements, whereas quantitative analysis is difficult to achieve. The tin smelting process operates at high temperatures and dust levels and involves complex physicochemical reactions and energy–mass conversion of multiphase flows, so its key state parameters cannot be accurately sensed. Establishing a global model of the whole process that provides more valuable information for production therefore remains difficult with a mechanism-based modelling approach.

Data-driven approaches do not rely heavily on process mechanisms and knowledge; they require only an understanding of the system and data characteristics in order to use the high-value data accumulated in the process for modelling. In recent years, with the development of sensor and computer technologies, data-driven energy consumption prediction models have been widely used in power grids, buildings, metal smelting, and other fields. For example, in the field of power grids, A. Di Piazza et al. [13] proposed an artificial-neural-network-based energy prediction model for grid management to predict hourly wind speed, solar radiation, and power demand. Their simulation analysis showed that the method had good prediction performance over short time horizons. Nada Mounir et al. [14] combined a modal decomposition algorithm with a bidirectional long short-term memory network to achieve short-term power load forecasting for smart grid energy management systems, and the superiority of the model’s prediction performance was verified experimentally. Wang Yi et al. [15] used an ensemble learning approach to achieve short-term nodal voltage prediction for the grid; a case study on a real distribution network verified the effectiveness of the proposed method. In the field of buildings, Zengxi Feng et al. [16] proposed a combined prediction model for energy consumption in office buildings and verified the superiority of the model with building data. Lucia Cascone et al. [17] combined long short-term memory with convolutional neural networks to predict household electricity consumption using data read from smart meters. Aseel Hussien et al. [18] used the random forest algorithm to predict the thermal energy consumption of building envelope materials; a large number of simulation results showed that it outperformed other traditional methods. In the field of metal smelting, Yang Hongtao et al. [19] proposed a dual-wavelet neural-network-based energy consumption prediction model for manganese-silicon alloy smelting and used real data to predict the electricity consumption of the smelting process. Experiments showed that the model had a higher accuracy in electricity consumption prediction. Zhaoke Huang et al. [20] proposed a hybrid support vector regression model with an adaptive state transition algorithm for predicting energy consumption in the non-ferrous metal industry. Experiments showed that this method outperformed other energy consumption prediction models. Zhen Cheng et al. [21] proposed an oxygen demand prediction model for iron and steel enterprises based on a back propagation neural network optimized with a genetic algorithm, and showed experimentally that its prediction accuracy was better than that of the ARIMA model. Shenglong Jiang et al. [22] proposed a hybrid model integrating multivariate linear regression and Gaussian process regression for predicting oxygen consumption in the converter steelmaking process and verified the accuracy of the model with real data. Experiments showed that the model not only achieved point prediction but could also accurately estimate the probability interval. Zhang Qi et al. [23] proposed an artificial-neural-network-based prediction model for the supply and demand of blast furnace gas in iron and steel mills. The results showed that the established prediction model had high accuracy and small error and could effectively solve the prediction problem of blast furnace gas in actual production. Xiao Xiong et al. [24] proposed a random forest prediction model based on principal component dimensionality reduction and artificial bee colony dynamic search fusion for predicting the power loss of multi-size rolled pieces in the control section of a strip steel hot finishing mill. The feasibility of the method was verified using real-time data at the mill level, and the experimental results showed that the method could accurately predict the power loss of multi-size rolled pieces, with a short calculation time and high prediction accuracy. Angelika Morgoeva et al. [25] proposed a machine-learning-based energy consumption prediction model. Experiments on electricity consumption prediction in metallurgical companies showed that the gradient boosting model based on the CatBoost library gave the best results. These data-driven modelling approaches do not rely excessively on mechanistic knowledge of the reaction process; the energy consumption prediction model is built by analyzing process characteristics and data features, which gives high precision and fast response, but the method suffers from poor interpretability, and model performance also depends on the quality of the collected data [26]. The parameters of a data-driven model have a significant impact on its predictive performance; hence, optimization algorithms are often combined with the model during modelling to improve its predictions. Xu Yuanjin et al. [27] explored the effectiveness of various optimization algorithms for tuning the parameters of a multilayer perceptron model that predicts the cooling and heating loads of a building. The experimental results showed that a biogeography-based optimization (BBO) algorithm was the most applicable. In addition, multi-kernel learning is often applied to data-driven models in order to better describe complex patterns in the data. Xian Huafeng et al. [28] proposed a multi-kernel support vector machine integration model based on unified optimization and whale optimization algorithms and confirmed the superiority of the model with real data. Zhang Yingda et al. [29] proposed a multi-kernel extreme learning machine model integrating radial basis kernels and polynomial kernels, combined it with an optimization algorithm to tune the model parameters, and successfully applied the model to the life prediction of batteries.

By introducing a priori knowledge into the modelling and analysis process, hybrid mechanism and data-driven models can not only greatly improve the efficiency of modelling and optimization but also solve the problem of poor model generalization. Chengzhu Wang et al. [30] proposed a digital twin for a zinc roaster based on knowledge-guided variable-mass thermodynamics. Based on a mechanistic analysis of mass and energy balances, a particle swarm optimization algorithm was introduced to optimize the parameters, from which the digital twin of the roaster was constructed and the control strategy of the roaster was then optimized. Pourya Azadi et al. [31] developed a hybrid dynamic model for predicting the hot metal silicon content and slag basicity in the blast furnace process by analyzing the principles of blast furnace operation. Wu Zhiwei et al. [32] proposed an energy consumption prediction model for electrofused magnesia products, consisting of a mechanistic master model of energy consumption per tonne and a neural-network-based compensation model. Jie Yang et al. [33] combined a mechanistic model with a data-driven approach to achieve power demand forecasting for the electrofused magnesia smelting process. Simulation and industrial application results validated the effectiveness of the proposed intelligent demand forecasting method.

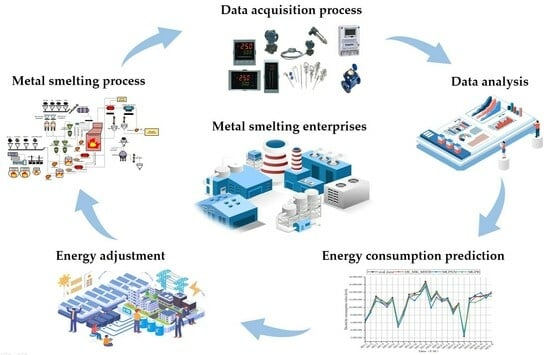

The tin smelting process operates at high temperatures and dust levels and involves complex physicochemical reactions and energy–mass conversion of multiphase flows. Because the key state parameters of the smelting process cannot be accurately sensed, mechanistic modelling is difficult to apply, and data-driven modelling is more suitable for analyzing the energy consumption of the whole tin smelting process. Despite their limitations, data-driven models have been heavily researched in recent years and can achieve satisfactory accuracy. Many current energy forecasting models analyze only one production process or a single energy source, and such single-output models cannot meet the demand for multi-output forecasting. The non-ferrous metal smelting process consumes multiple types of energy and comprises many sub-processes whose energy consumption is coupled; if a separate single-output model is used for each prediction, potential cross-correlations between the outputs are ignored. On this basis, this paper proposes a multi-kernel multi-output support vector regression prediction model, optimized with a differential evolution algorithm, to predict multiple types of energy consumption for multi-process production using a small sample dataset from the tin smelting process. Because the model has limited capacity to handle redundant inputs, redundant variable information degrades its performance, so a distance correlation coefficient matrix is introduced to remove redundant feature variables. Because the collected data are multidimensional and highly nonlinear, multi-kernel learning is combined with multi-output support vector regression to improve the model fit, and a differential evolution algorithm is used to find the optimal model hyperparameters.
Finally, a grey correlation analysis model is applied to analyze the contribution of each influencing factor to the comprehensive energy consumption of the tin smelting process, and corresponding energy-saving suggestions are put forward. The innovations of this study are as follows: (1) Metal smelting, as a high-energy-consumption industry, consumes different types of energy with strong coupling between them, and production data are hard to collect in the high-temperature environment, so the available data are scarce and highly nonlinear. This paper proposes a multi-output support vector regression model for energy consumption prediction based on multi-kernel learning and optimized with a differential evolution algorithm, which overcomes the shortcoming of traditional models that predict only a single type of energy consumption. (2) By introducing multi-kernel learning into a multi-output support vector regression model, the improved model maintains satisfactory prediction performance even with a small data volume. The model was validated with the production data of a tin smelting enterprise in Southwest China, and the experimental results showed that the proposed energy consumption prediction model achieved high prediction accuracy and satisfactory stability. Based on the research conclusions, this study also provides targeted guidance on energy planning and adjustment for enterprises.
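The redundancy screening described above can be illustrated with a minimal sketch. It assumes the standard sample distance correlation (pairwise distances, double centering, normalized covariance) and a simple greedy filter; the function names, the threshold of 0.9, and the toy feature columns are hypothetical and are not taken from the paper.

```python
import math


def _centered_distances(values):
    """Pairwise absolute distances, double-centered by row, column and grand mean."""
    n = len(values)
    dist = [[abs(a - b) for b in values] for a in values]
    row_mean = [sum(row) / n for row in dist]
    grand_mean = sum(row_mean) / n
    # The distance matrix is symmetric, so column means equal row means.
    return [[dist[i][j] - row_mean[i] - row_mean[j] + grand_mean
             for j in range(n)] for i in range(n)]


def distance_correlation(x, y):
    """Sample distance correlation of two equal-length 1-D sequences (in [0, 1])."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    n = len(x)
    a = _centered_distances(x)
    b = _centered_distances(y)
    dcov2 = sum(a[i][j] * b[i][j] for i in range(n) for j in range(n)) / n ** 2
    dvar_x = sum(v * v for row in a for v in row) / n ** 2
    dvar_y = sum(v * v for row in b for v in row) / n ** 2
    denom = math.sqrt(dvar_x * dvar_y)
    if denom == 0.0:
        return 0.0  # a constant sequence carries no dependence information
    return math.sqrt(max(dcov2, 0.0) / denom)


def drop_redundant(features, threshold=0.9):
    """Greedy filter (an assumption): keep a feature only if its distance
    correlation with every already-kept feature is below `threshold`."""
    kept = []
    for name, column in features.items():
        if all(distance_correlation(column, features[k]) < threshold for k in kept):
            kept.append(name)
    return kept
```

With toy columns `a = [1, 2, 3, 4, 5]`, `b = [2, 4, 6, 8, 10]` and `c = [3, 1, 4, 1, 5]`, the filter keeps `a` and `c` and drops `b`, because `b` is an exact linear copy of `a`. The selection rule actually used in the study may differ.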

5. Energy Saving Advice

Industry associations should strengthen their guidance and assessment of energy efficiency benchmarking activities for non-ferrous metal enterprises and further improve energy-efficiency benchmarking management mechanisms. They should actively implement an energy manager system for the whole industry, standardize and establish energy management accounts, diagnose and analyze the energy application status of enterprises, study and propose energy-saving measures, explore the energy-saving potential of various production links between enterprises, measure and verify the energy-saving capacity, and establish an all-round energy management system covering all production links.

The level of production scheduling optimization in the tin smelting process greatly affects the material and energy consumption of enterprises. Production planning and scheduling ability determines whether an enterprise’s resources are reasonably utilized and thus affects the efficiency of its production, operation, and management. Enterprises should pay attention to the optimization of the smelting process in order to save energy, reduce consumption, and increase efficiency.

The recycling of energy and materials from the smelting process is a practice worth promoting. As some enterprises already do, the soot produced by each process can be collected and reused as an auxiliary material, and the waste heat from the waste heat boiler can be recovered for power generation, making full use of existing resources. Recycling within the smelting process improves the resource utilization rate and is of great significance for reducing energy consumption. However, the parameter sensitivity analyses showed that the flue gas temperature in waste heat recovery had a very important impact on energy consumption. To achieve energy-efficient production, the energy consumption of the waste heat recovery process itself should not be neglected.

6. Conclusions

The non-ferrous metal smelting process involves multiple types of energy use, and the different forms of energy consumption are often coupled with each other. Traditional prediction models for a single type of energy consumption cannot be applied to the prediction of multiple types. To address the complex production processes, multiple types of energy consumption, and small available data samples in the tin smelting process, this paper proposed DE_MK_MSVR, a multi-kernel multi-output support vector regression prediction model optimized with a differential evolution algorithm, for multi-process and whole-process multi-energy consumption prediction in metal smelting. A grey correlation analysis model was used to analyze the contribution of the influencing factors to the comprehensive energy consumption of the tin smelting process, and corresponding energy-saving recommendations were put forward based on the results. The main conclusions are as follows:

Given the multi-energy use in the smelting process, the small number of effective data samples, and the strong nonlinearity of the data, the multi-output support vector regression (MSVR) model was adopted as a benchmark. The concept of multi-kernel learning was introduced, and the kernel function of MSVR was improved by linearly combining several kernels. Compared with the MSVR model with a single kernel function, the MSVR model based on multiple kernel functions had better prediction ability, and the combined kernel could better discover the relationships hidden in the data.
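As an illustration of the linear kernel combination mentioned above, the following minimal sketch mixes a radial basis function kernel and a polynomial kernel with a single weight, K = w·K_rbf + (1 − w)·K_poly. The choice of these two base kernels, the weight `weight`, and all default parameter values are assumptions made for the example; the section does not state which kernels the model combines.

```python
import math


def rbf_kernel(x, z, gamma=0.5):
    """Radial basis function kernel exp(-gamma * ||x - z||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)


def poly_kernel(x, z, degree=2, coef0=1.0):
    """Polynomial kernel (x . z + coef0) ** degree."""
    return (sum(a * b for a, b in zip(x, z)) + coef0) ** degree


def combined_kernel(x, z, weight=0.7, gamma=0.5, degree=2, coef0=1.0):
    """Convex combination of the two base kernels (hypothetical weighting)."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return (weight * rbf_kernel(x, z, gamma)
            + (1.0 - weight) * poly_kernel(x, z, degree, coef0))


def gram_matrix(samples, **kernel_params):
    """Kernel (Gram) matrix of the combined kernel over a list of samples."""
    return [[combined_kernel(xi, xj, **kernel_params) for xj in samples]
            for xi in samples]
```

A non-negative weighted sum of valid kernels is itself a valid kernel, which is why such a combination can be passed to a kernel method in place of a single kernel. Here the weight would be tuned together with the other hyperparameters.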

The hyperparameters of the prediction model were obtained using an optimization algorithm. Among the optimization algorithms compared, DE had the best tuning ability for the MK_MSVR model, and the DE_MK_MSVR prediction model had the highest accuracy. To demonstrate its prediction performance, DE_MK_MSVR was also compared with other multi-output prediction models. The experiments showed that the DE_MK_MSVR model achieved the best evaluation metrics, which demonstrated the superiority of this model in multi-energy prediction.
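The hyperparameter search can be sketched with a basic differential evolution loop. This is the classic DE/rand/1/bin variant with clipping to the bounds, written under assumed settings (`pop_size`, `f`, `cr`, `generations`); the variant and settings used in the study are not given in this section. The objective `toy_validation_error` is a hypothetical stand-in for the validation error of a trained model and has a known minimum at (1.0, 0.3).

```python
import random


def differential_evolution(objective, bounds, pop_size=20, f=0.8, cr=0.9,
                           generations=200, seed=0):
    """Minimize `objective` over box `bounds` with DE/rand/1/bin."""
    rng = random.Random(seed)
    dim = len(bounds)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    scores = [objective(ind) for ind in pop]
    for _ in range(generations):
        for i in range(pop_size):
            # Three distinct individuals, all different from the target i.
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            forced = rng.randrange(dim)  # at least one gene comes from the mutant
            trial = []
            for d, (lo, hi) in enumerate(bounds):
                if d == forced or rng.random() < cr:
                    value = pop[a][d] + f * (pop[b][d] - pop[c][d])
                    value = min(max(value, lo), hi)  # clip to the search box
                else:
                    value = pop[i][d]
                trial.append(value)
            trial_score = objective(trial)
            if trial_score <= scores[i]:  # greedy one-to-one selection
                pop[i], scores[i] = trial, trial_score
    best = min(range(pop_size), key=scores.__getitem__)
    return pop[best], scores[best]


def toy_validation_error(params):
    """Hypothetical stand-in for a model's validation error."""
    log_c, gamma = params
    return (log_c - 1.0) ** 2 + (gamma - 0.3) ** 2
```

Calling `differential_evolution(toy_validation_error, [(-2.0, 3.0), (0.0, 1.0)])` should return a point close to (1.0, 0.3). In a real setting the objective would train the regression model with the candidate hyperparameters and return a cross-validated error, which makes each evaluation far more expensive than in this toy case.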

A grey correlation analysis model was used to explore how strongly the influencing factors in each process affect the comprehensive energy consumption. The sensitivity of the input parameters was discussed using the Sobol sensitivity analysis method, and corresponding energy-saving suggestions were given for the tin smelting process. The use of clean energy for smelting, such as natural gas, wind energy, solar energy, and other resources, is conducive to achieving energy savings and efficiency. In addition, recycling and processing the soot and dust from the various stages of smelting and generating electricity from waste heat are practices worth advocating, and technological investment in the recycling process should be increased to improve energy efficiency.
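The factor ranking can be illustrated with a minimal sketch of grey relational analysis in its common textbook form (Deng's grey relational grade), which is assumed here to be what the grey correlation analysis refers to. The mean normalization, the distinguishing coefficient `rho = 0.5`, and the factor names in the usage note are assumptions for the example, not values from the study.

```python
def _mean_normalize(sequence):
    """Scale a sequence by its mean so that series with different units are comparable."""
    mean = sum(sequence) / len(sequence)
    if mean == 0:
        raise ValueError("cannot mean-normalize a sequence with zero mean")
    return [value / mean for value in sequence]


def grey_relational_grades(reference, factors, rho=0.5):
    """Grey relational grade of each factor sequence against the reference.

    reference: sequence of the target quantity (e.g. comprehensive energy use).
    factors:   dict mapping a factor name to a sequence of the same length.
    rho:       distinguishing coefficient, conventionally 0.5.
    """
    ref = _mean_normalize(reference)
    deltas = {
        name: [abs(r - v) for r, v in zip(ref, _mean_normalize(seq))]
        for name, seq in factors.items()
    }
    all_deltas = [d for row in deltas.values() for d in row]
    d_min, d_max = min(all_deltas), max(all_deltas)
    if d_max == 0:
        # Every factor matches the reference exactly after normalization.
        return {name: 1.0 for name in deltas}
    return {
        name: sum((d_min + rho * d_max) / (d + rho * d_max) for d in row) / len(row)
        for name, row in deltas.items()
    }
```

For a reference series `[10, 12, 14, 16]`, a factor that is exactly proportional to it, such as `[5, 6, 7, 8]`, receives a grade of 1.0, while an erratic factor such as `[8, 3, 9, 2]` receives a lower grade. Sorting the factors by grade gives the kind of contribution ranking discussed above.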

In future studies, we will work on the following problems, which may be of interest for industrial applications and scientific research: (1) Energy-intensive processes in the process industries are developing towards larger scale, greater integration, and scalability. A hybrid approach combining mechanism analysis and data-driven modelling will be introduced into the modelling and analysis process, which may not only significantly improve modelling efficiency but also solve the problem of poor model generalization ability. (2) Appropriate virtual samples will be generated by incorporating prior domain knowledge and added to the training samples to achieve data expansion and feature enhancement, which in turn may improve the generalization ability of the model.