Deep Learning Based Egg Fertility Detection

Simple Summary

Abstract

1. Introduction

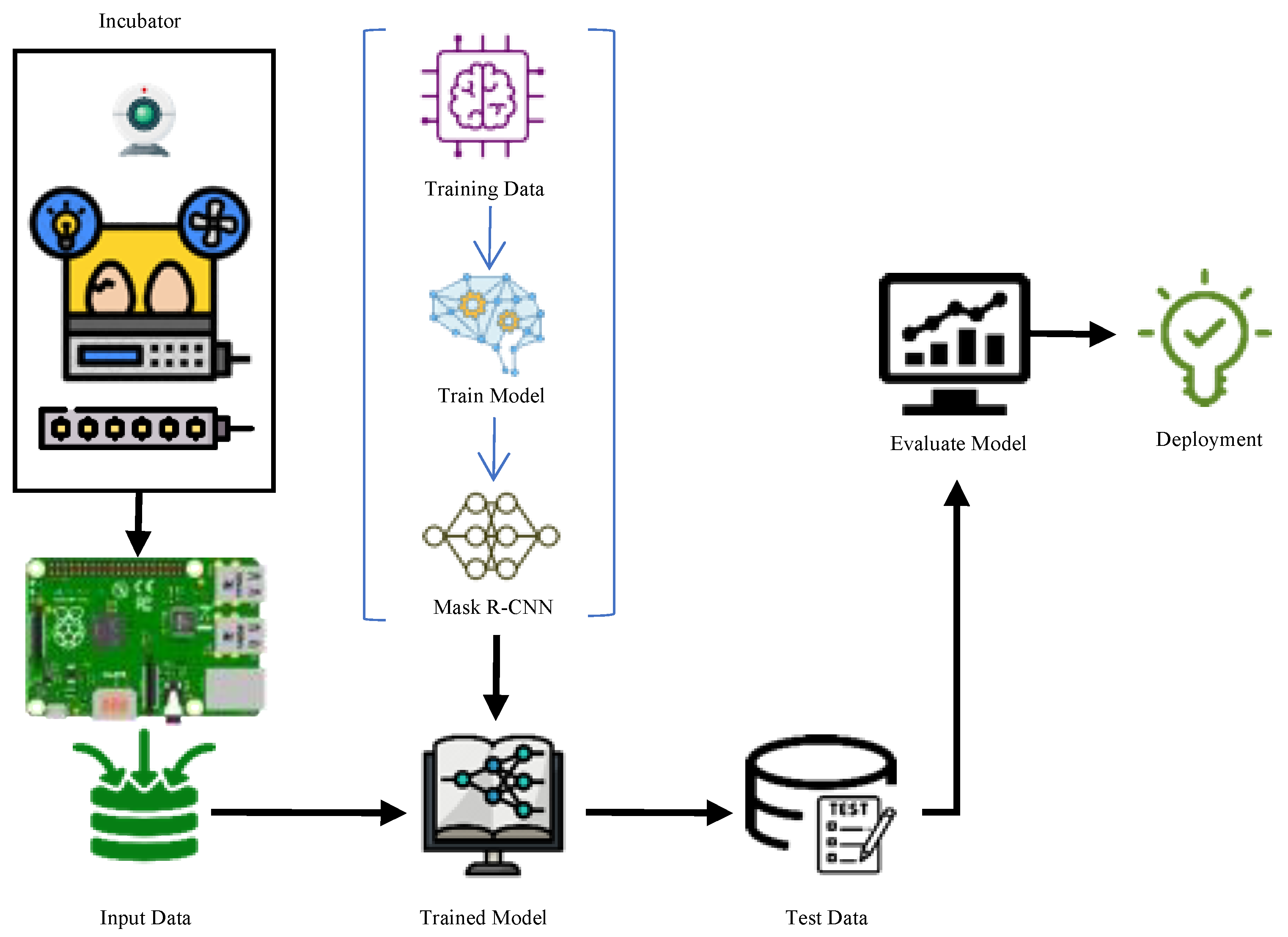

2. Materials and Methods

2.1. Evaluation Methods

2.2. Implementation

3. Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Rancapan, J.G.C.; Arboleda, E.R.; Dioses, J.; Dellosa, R.M. Egg fertility detection using image processing and fuzzy logic. Int. J. Sci. Technol. Res. 2019, 8, 3228–3230.

- Geng, L.; Hu, Y.; Xiao, Z.; Xi, J. Fertility detection of hatching eggs based on a convolutional neural network. Appl. Sci. 2019, 9, 1408.

- Waranusast, R.; Intayod, P.; Makhod, D. Egg size classification on Android mobile devices using image processing and machine learning. In Proceedings of the 2016 Fifth ICT International Student Project Conference (ICT-ISPC), Nakhonpathom, Thailand, 27–28 May 2016; pp. 170–173.

- Fadchar, N.A.; Cruz, J.C.D. Prediction Model for Chicken Egg Fertility Using Artificial Neural Network. In Proceedings of the 2020 IEEE 7th International Conference on Industrial Engineering and Applications (ICIEA), Bangkok, Thailand, 16–21 April 2020; pp. 916–920.

- Adegbenjo, A.O.; Liu, L.; Ngadi, M.O. Non-Destructive Assessment of Chicken Egg Fertility. Sensors 2020, 20, 5546.

- Das, K.; Evans, M. Detecting fertility of hatching eggs using machine vision I. Histogram characterization method. Trans. ASAE 1992, 35, 1335–1341.

- Das, K.; Evans, M. Detecting fertility of hatching eggs using machine vision II: Neural network classifiers. Trans. ASAE 1992, 35, 2035–2041.

- Bamelis, F.; Tona, K.; De Baerdemaeker, J.; Decuypere, E. Detection of early embryonic development in chicken eggs using visible light transmission. Br. Poult. Sci. 2002, 43, 204–212.

- Usui, Y.; Nakano, K.; Motonaga, Y. A Study of the Development of Non-Destructive Detection System for Abnormal Eggs; European Federation for Information Technology in Agriculture, Food and the Environment (EFITA): Debrecen, Hungary, 2003.

- Lawrence, K.C.; Smith, D.P.; Windham, W.R.; Heitschmidt, G.W.; Park, B. Egg embryo development detection with hyperspectral imaging. In Optics for Natural Resources, Agriculture, and Foods; International Society for Optics and Photonics: Bellingham, WA, USA, 2006; p. 63810T.

- Smith, D.; Lawrence, K.; Heitschmidt, G. Fertility and embryo development of broiler hatching eggs evaluated with a hyperspectral imaging and predictive modeling system. Int. J. Poult. Sci. 2008, 7, 1001–1004.

- Smith, D.; Lawrence, K.; Heitschmidt, G. Detection of hatching and table egg defects using hyperspectral imaging. In Proceedings of the European Poultry Conference Proceedings (EPSA), Verona, Italy, 10–14 September 2006.

- Lin, C.-S.; Yeh, P.T.; Chen, D.-C.; Chiou, Y.-C.; Lee, C.-H. The identification and filtering of fertilized eggs with a thermal imaging system. Comput. Electron. Agric. 2013, 91, 94–105.

- Liu, L.; Ngadi, M. Detecting fertility and early embryo development of chicken eggs using near-infrared hyperspectral imaging. Food Bioprocess Technol. 2013, 6, 2503–2513.

- Boğa, M.; Çevik, K.K.; Koçer, H.E.; Burgut, A. Computer-Assisted Automatic Egg Fertility Control. J. Kafkas Univ. Fac. Vet. Med. 2019, 25, 567–574.

- Huang, L.; He, A.; Zhai, M.; Wang, Y.; Bai, R.; Nie, X. A multi-feature fusion based on transfer learning for chicken embryo eggs classification. Symmetry 2019, 11, 606.

- Geng, L.; Xu, Y.; Xiao, Z.; Tong, J. DPSA: Dense pixelwise spatial attention network for hatching egg fertility detection. J. Electron. Imaging 2020, 29, 023011.

- Çevik, K.K.; Koçer, H.E.; Boğa, M.; Taş, S.M. Mask R-CNN Approach for Egg Segmentation and Fertility Egg Classification. In Proceedings of the International Conference on Artificial Intelligence and Applied Mathematics in Engineering (ICAIAME 2021), Baku, Azerbaijan, 20–22 May 2021; p. 98.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems 25 (NIPS 2012), Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, inception-resnet and the impact of residual connections on learning. arXiv 2016, arXiv:1602.07261.

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60(6), 84–90.

- Weiss, K.; Khoshgoftaar, T.M.; Wang, D. A survey of transfer learning. J. Big Data 2016, 3, 9.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Dai, J.; He, K.; Sun, J. Instance-aware semantic segmentation via multi-task network cascades. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3150–3158.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

- Mahdianpari, M.; Salehi, B.; Rezaee, M.; Mohammadimanesh, F.; Zhang, Y. Very deep convolutional neural networks for complex land cover mapping using multispectral remote sensing imagery. Remote Sens. 2018, 10, 1119.

- Rahman, M.A.; Wang, Y. Optimizing intersection-over-union in deep neural networks for image segmentation. In International Symposium on Visual Computing; Springer: Cham, Switzerland, 2016; pp. 234–244.

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes (voc) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

- Henderson, P.; Ferrari, V. End-to-end training of object class detectors for mean average precision. In Asian Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 198–213.

- Dutta, A.; Gupta, A.; Zisserman, A. VGG Image Annotator (VIA). 2016. Available online: https://www.robots.ox.ac.uk/~vgg/software/via/ (accessed on 1 September 2022).

| Author(s), Date | Method(s) | Success Rate | Day Achieved |
|---|---|---|---|
| K. Das and M. Evans, 1992 [6,7] | Histogram Characterization and Neural Network Classifier | 93%; 88–90% | At the end of the 3rd day; at the end of the 3rd day |
| F. Bamelis, K. Tona, J. De Baerdemaeker, and E. Decuypere, 2002 [8] | Spectrophotometric Method | - | 4.5th day |
| Y. Usui, K. Nakano, and Y. Motonaga, 2003 [9] | Halogen Light Source and NIR Detection System | 83–96.8% | - |
| K. C. Lawrence, D. P. Smith, W. R. Windham, G. W. Heitschmidt, and B. Park, 2006 [10,11] | Hyperspectral Imaging Technique | 91% | At the end of the 3rd day |
| D. Smith, K. Lawrence, and G. Heitschmidt, 2006 [12] | Mahalanobis Distance (MD) Classification and Partial Least Squares Regression (PLSR) | 96% (MD), 100% (PLSR); 92% (MD), 100% (PLSR); 100% (MD), 100% (PLSR) | At the end of the 0th day; at the end of the 1st day; at the end of the 2nd day |
| Chern-Sheng Lin, Po Ting Yeh, Der-Chin Chen, Yih-Chih Chiou, and Chi-Hung Lee, 2013 [13] | Thermal Images and Fuzzy System | 96% | - |
| L. Liu and M. O. Ngadi, 2013 [14] | Near-Infrared Hyperspectral Images, PCA, K-Means | 100%; 78.8%; 74.1%; 81.8% | At the end of the 0th day; at the end of the 1st day; at the end of the 2nd day; at the end of the 4th day |
| Waranusast et al., 2017 [3] | Image processing and machine learning (SVM) | 80.4% | - |
| Boğa et al., 2019 [15] | Image processing with thresholding | 73.34% (1st dataset); 100% (1st dataset); 93.34% (2nd dataset); 93.34% (2nd dataset); 93.34% (3rd dataset); 100% (3rd dataset) | At the end of the 3rd day; at the end of the 4th day; at the end of the 3rd day; at the end of the 4th day; at the end of the 3rd day; at the end of the 4th day |
| Huang et al. [16] | Deep convolutional neural network | 98.4% | Five- to seven-day embryos |
| Geng et al. [17] | Deep convolutional neural networks | 98.3%; 99.1% | At the end of the 5th day; at the end of the 9th day |
| Lei et al., 2019 [2] | Photoplethysmography (PPG), convolutional neural network (CNN) | 99.50% | - |
| Glenn et al., 2019 [1] | Fuzzy logic and k-nearest neighbors (k-NN) | N/A | - |
| Fadchar and Cruz, 2020 [4] | Color segmentation and artificial neural network (ANN) | 97% | - |

| Hyperparameter | Value |
|---|---|
| Optimizer | ADAM |
| Epochs | 50 |
| Steps per epoch | 100 |
| Batch size | 1 |
| Learning rate | 0.001 |
| Coefficient of determination | 0.9 |
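
The hyperparameters in the table above can be collected into a single configuration object. The following is a minimal sketch, not the authors' implementation: the class name `TrainingConfig`, its field names, and the helper methods are hypothetical and chosen only for illustration. The values are copied from the table. The 0.9 entry is stored under the label the table gives it, since its exact role in training is not stated there.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    """Hyperparameter values from the table; all names here are illustrative."""

    optimizer: str = "ADAM"
    epochs: int = 50
    steps_per_epoch: int = 100
    batch_size: int = 1
    learning_rate: float = 0.001
    # Labeled "Coefficient of determination" in the table; kept verbatim
    # because the table does not say how this value enters training.
    coefficient_of_determination: float = 0.9

    def total_steps(self) -> int:
        """Number of optimizer updates over the whole run."""
        return self.epochs * self.steps_per_epoch

    def samples_seen(self) -> int:
        """Number of training samples processed (with repetition)."""
        return self.total_steps() * self.batch_size


config = TrainingConfig()
print(config.total_steps())   # 5000
print(config.samples_seen())  # 5000
```

With 50 epochs of 100 steps each and a batch size of 1, the run performs 5000 weight updates and processes 5000 samples, counting repeats.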

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Çevik, K.K.; Koçer, H.E.; Boğa, M. Deep Learning Based Egg Fertility Detection. Vet. Sci. 2022, 9, 574. https://doi.org/10.3390/vetsci9100574
