Help-Seeking Situations Related to Visual Interactions on Mobile Platforms and Recommended Designs for Blind and Visually Impaired Users

Abstract

1. Introduction

2. Literature Review

2.1. BVI Users’ Help-Seeking Situations Related to Visual Interactions

2.2. Design Issues and Solutions

3. Materials and Methods

3.1. Sampling

3.2. Data Collection

3.3. Data Analysis

4. Results

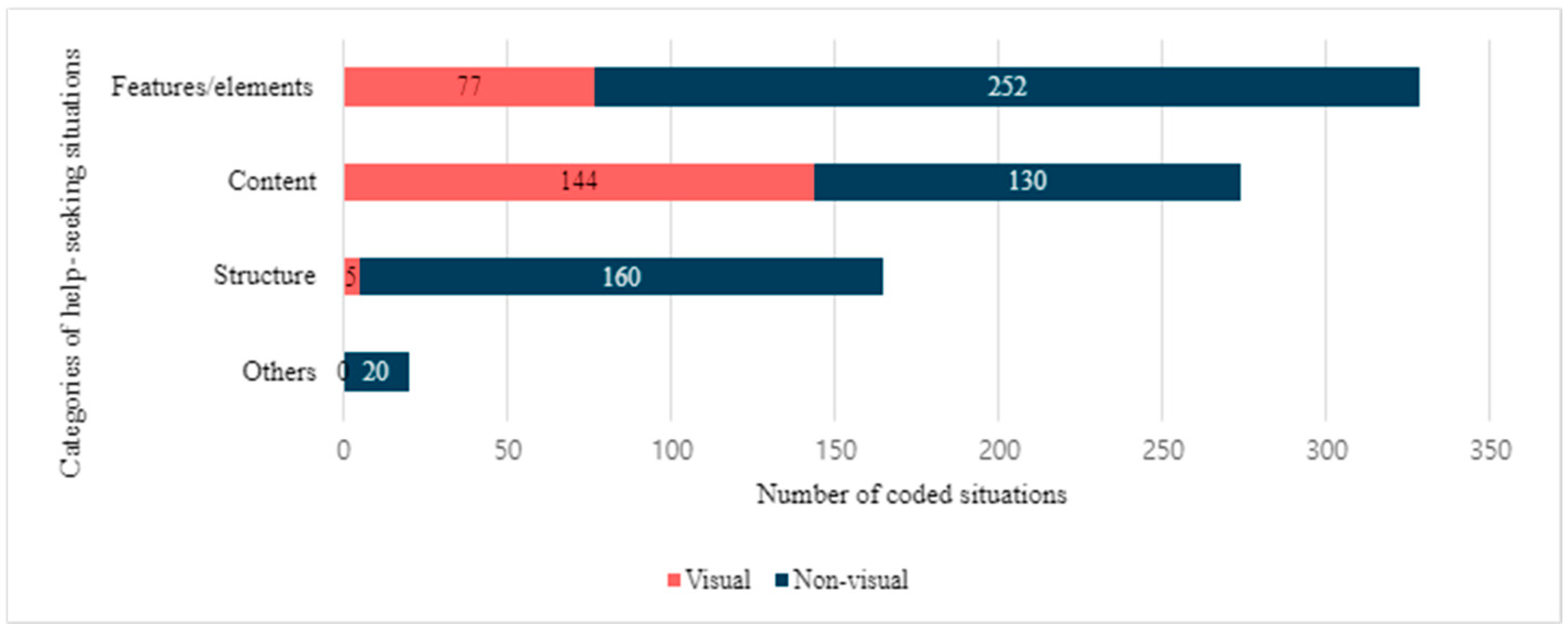

4.1. Categories of Help-Seeking Situations

4.2. Typical Help-Seeking Situations Related to Visual Interactions in Mobile Environments

4.2.1. Features/Elements: Difficulty Finding a Toggle-Based Search Feature

“I don’t know if that’s like a search. Ohh, I guess it’s a search, but it’s not really a button. Let’s see a search toggle. I don’t really understand what that means. Ohh so I guess the search toggle brings up the search bar and then then you have a text field that wasn’t … really sure what search toggle meant. I’ve never heard that before, so now I’m in the search field. Umm, OK cool. So let me just try. Uh. I don’t know. I just wanna see how it works when you search for something.”(IP5-LD)

“I wish that the search field was not hidden behind the button because if I’m learning of this page… But if I’m loading up this page and I want to jump straight to the search field. … See, I can’t find the text field.”(IP29-LH)

4.2.2. Features/Elements: Difficulty Understanding a Video Feature

“I’m noticing is it’s a little bit verbose, so it’s not properly tagged. So, it’s telling me and, you know, a bunch of numbers and what have you. It doesn’t really make sense from a screen reader point of view. So, OK, it’s telling me that there is a video thumbnail. OK, there is a button for play clip. Alright, so there is a slider. I’m not sure if that works or not, but I’ll just flick past that….”(AP14-OL)

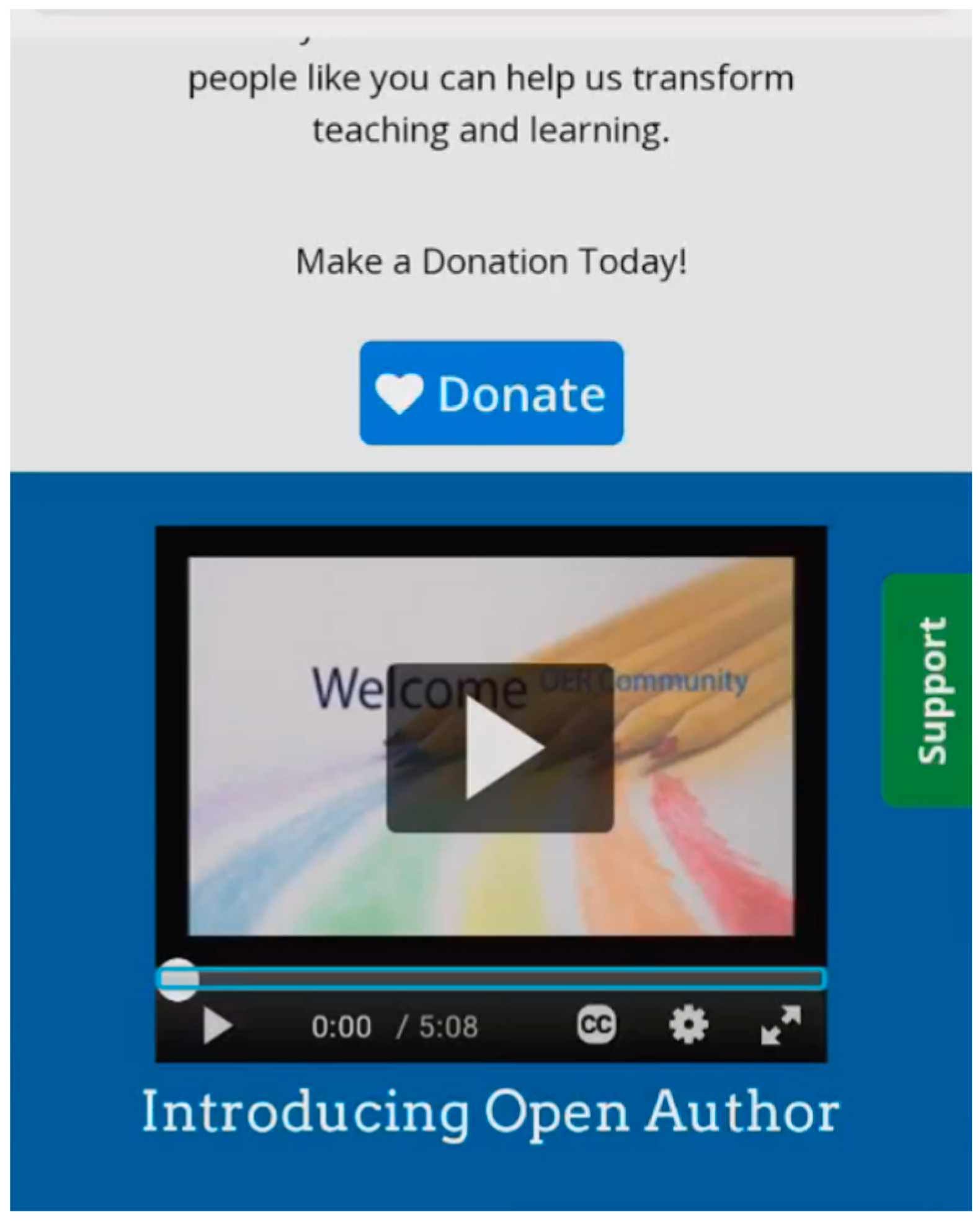

4.2.3. Features/Elements: Difficulty Navigating Items on Paginated Sections

“The tabs inside of the collection are also not accessible, so there are tabs over here. There’s one that’s selected, and it’s an unlabeled tab. The second one is not selected, third one, fourth one. At least I know that there are tabs here, but it doesn’t tell me what those tabs are.”(ID17-LO)

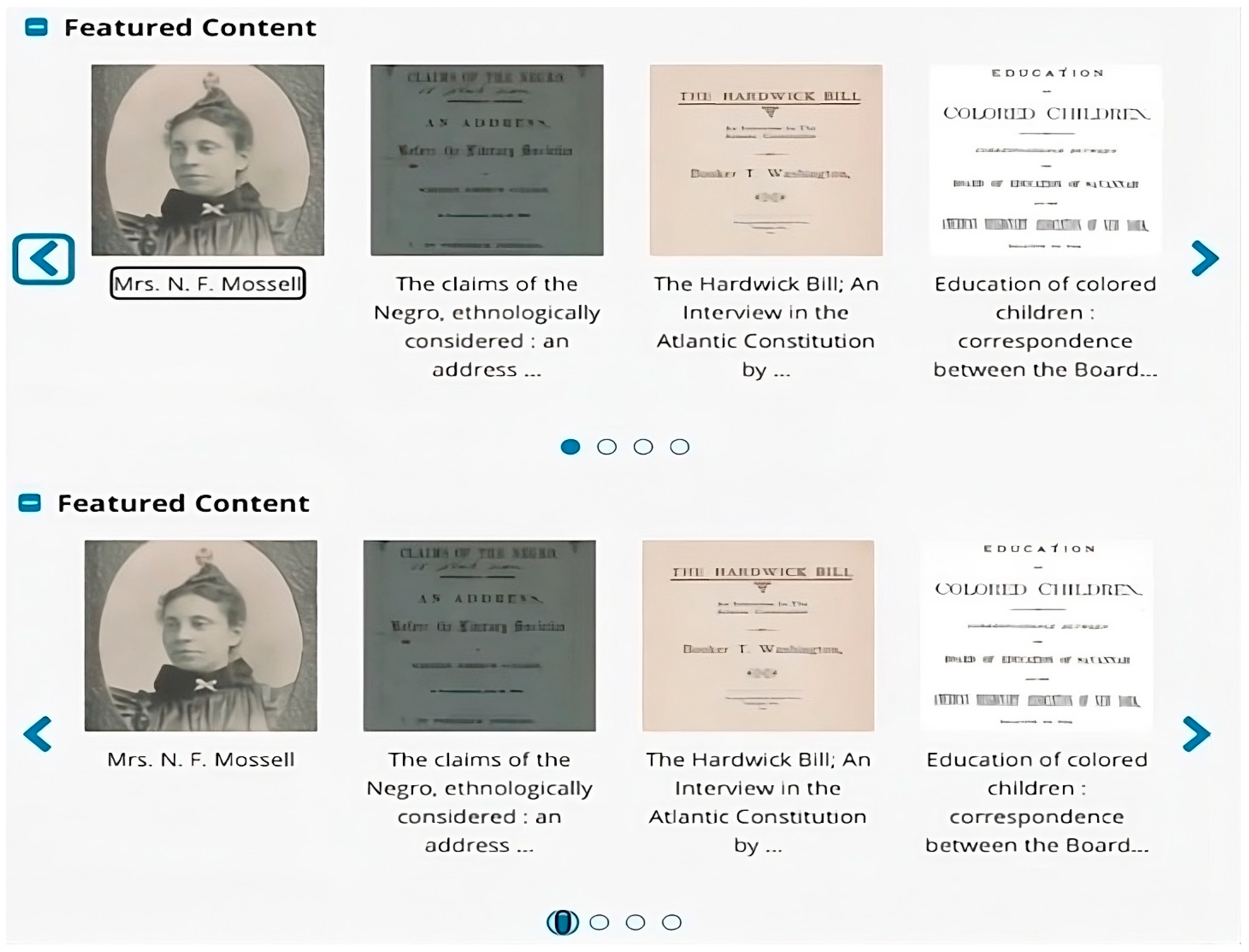
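The unlabeled-tab problem in the quote above can be made concrete with a small audit sketch. It is only an illustration: the `Tab` record, the function names, and the announcement wording are hypothetical and do not reproduce the digital library's code or any particular screen reader's output.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Tab:
    """Hypothetical snapshot of one tab as exposed to a screen reader."""
    label: Optional[str]  # accessible name, if the developer supplied one
    selected: bool


def find_unlabeled_tabs(tabs: List[Tab]) -> List[int]:
    """Return the 1-based positions of tabs whose label is missing or blank."""
    return [
        position
        for position, tab in enumerate(tabs, start=1)
        if tab.label is None or not tab.label.strip()
    ]


def announce(tabs: List[Tab]) -> List[str]:
    """Build an illustrative spoken phrase for each tab (wording is assumed)."""
    total = len(tabs)
    phrases = []
    for position, tab in enumerate(tabs, start=1):
        has_label = tab.label is not None and tab.label.strip() != ""
        name = tab.label.strip() if has_label else "unlabeled"
        state = "selected" if tab.selected else "not selected"
        phrases.append(f"{name}, tab, {state}, {position} of {total}")
    return phrases


# Four tabs as described in the quote: the first is selected, none is labeled.
tabs = [Tab(None, True), Tab(None, False), Tab("", False), Tab(None, False)]
unlabeled_positions = find_unlabeled_tabs(tabs)
```

Here every position is flagged, which matches the participant's experience of hearing that tabs exist without learning what they are. A check of this kind could run during development so that each tab receives a meaningful label before release.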

4.2.4. Features/Elements: Difficulty Distinguishing Collection Labels from Thumbnail Descriptions

“It doesn’t act like there’s a break between the description and what the category is…this one says white button with blue and red lettering politics explore so politics. But it’s not pausing to tell me it’s politics. It just runs it all through like it’s one word or like it’s one sentence. It’s so it’s some of these, it’s actually hard to tell what the category is because the category name fits into the sentence.”(AT24-ML)

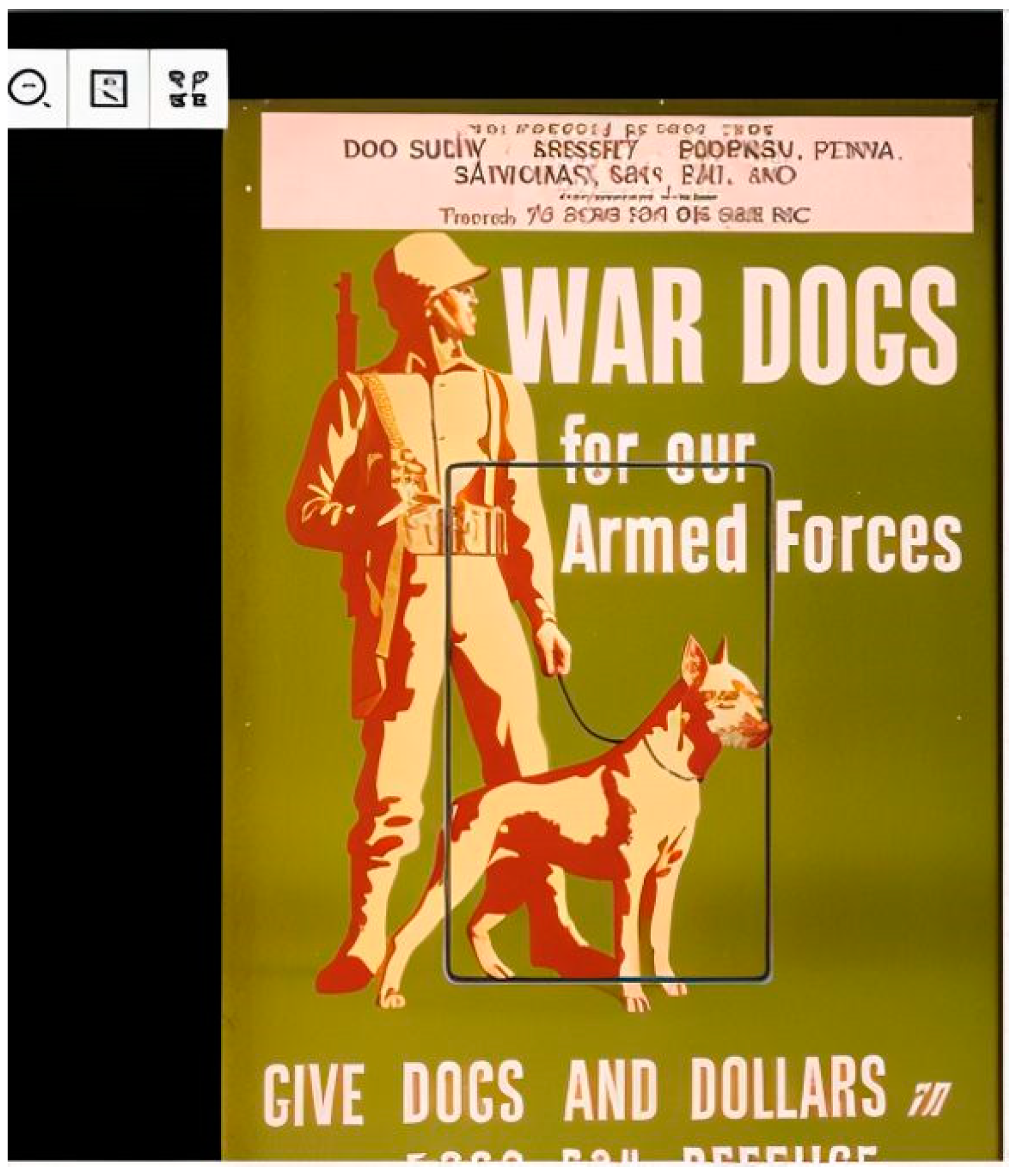

4.2.5. Content: Difficulty Recognizing the Content of Images

“Umm, oh, that’s the image there, but the only thing my VoiceOver said was the word armed. It just said like war dogs for armed forces image. And then it said armed. That’s all it says.”(ID10-AL)

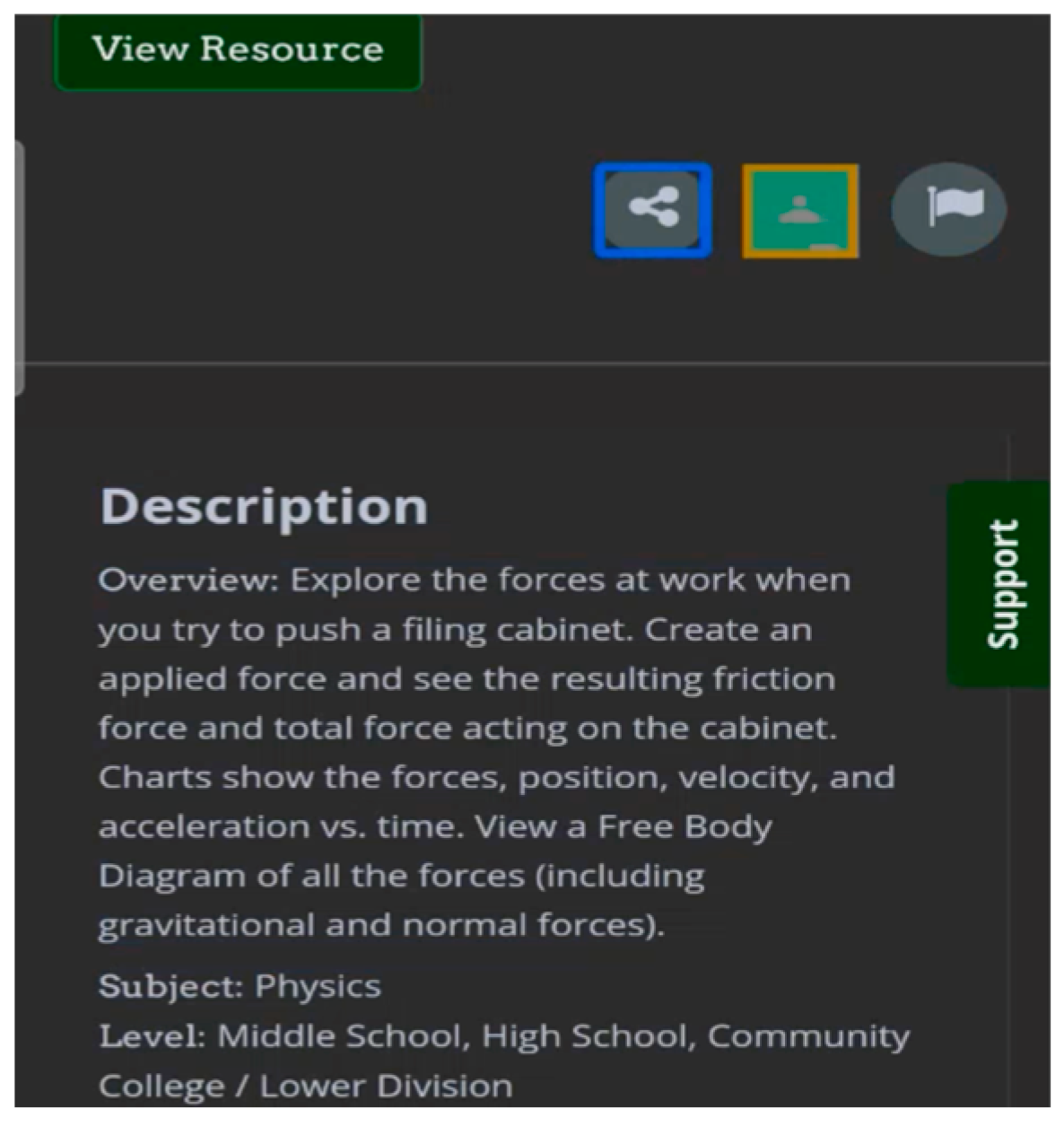

4.2.6. Content: Difficulty Recognizing the Content of Graphs

“So, the first item that I think of will be a graph. However, this graph lacks a description. I can’t fully interpret because they’re just saying that these are graphs.”(AT18-OL)

“I can’t understand it. So, the description of it says that it’s a chart that shows forces, position, and velocity, but when I go into it, it’s not understandable.”(AP15-LO)

4.2.7. Structure: Difficulty Interacting with Multilayered Windows

“I’m doing filtering…I wanted my filter…I wanna go to the other search…I went down to the bottom to touch on search. … I’m going back to my advance and I want to I wanna see. OK, I want to see. Umm. No, I took it out. I went back to my filters and it took my words.”(AP7-LA)

5. Discussion

5.1. Theoretical Implications

5.2. Practical Implications

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Category | Group | Number of Participants |
|---|---|---|
| Age | 18–29 | 29 |
| | 30–39 | 41 |
| | 40–49 | 27 |
| | 50–59 | 13 |
| | >59 | 10 |
| Gender | Female | 60 |
| | Male | 59 |
| | Non-binary | 1 |
| Race | White | 54 |
| | Black | 24 |
| | Asian or Pacific Islander | 19 |
| | Hispanic or Latino | 12 |
| | Other | 11 |
| Education | High school and Associate | 30 |
| | Bachelor | 46 |
| | Master | 33 |
| | Doctoral or professional degree | 8 |
| | Prefer not to answer | 3 |
| Vision condition | Blind | 80 |
| | Visually impaired | 40 |
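As a quick consistency check on the participant table above, the counts in each category can be summed. The sketch below only transcribes the table's numbers; the variable names are illustrative.

```python
# Participant counts transcribed from the demographics table above.
demographics = {
    "Age": {"18–29": 29, "30–39": 41, "40–49": 27, "50–59": 13, ">59": 10},
    "Gender": {"Female": 60, "Male": 59, "Non-binary": 1},
    "Race": {
        "White": 54,
        "Black": 24,
        "Asian or Pacific Islander": 19,
        "Hispanic or Latino": 12,
        "Other": 11,
    },
    "Education": {
        "High school and Associate": 30,
        "Bachelor": 46,
        "Master": 33,
        "Doctoral or professional degree": 8,
        "Prefer not to answer": 3,
    },
    "Vision condition": {"Blind": 80, "Visually impaired": 40},
}

# Sum the counts within each category.
totals = {category: sum(groups.values()) for category, groups in demographics.items()}

# The table is internally consistent if every category covers the same sample.
consistent = len(set(totals.values())) == 1
```

Every category sums to 120, so the table describes the same 120 participants along each dimension.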

| Task Type | Task Duration | Example (DL: Task Information) |
|---|---|---|
| Orientation task | 10 min | LoC, DPLA, HathiTrust, ArtStor, OER Commons, and the National Museum of African American History and Culture: You will have 10 min to explore the features and functions of this digital library. Please talk continuously about your thoughts and actions in relation to your interactions with the digital library during this task including its structure, features, content, format, search results, etc. Please specify your intentions for each action, the problems you encountered, and your solutions. |
| Specific search | 15 min | ArtStor: Find a World War II poster on war dogs in 1943. Find out the name of the repository that houses it. What were the two figures portrayed in the poster? |
| Exploratory search | 15 min | OER Commons: Find interactive items or graphics related to relationships between position, velocity, and acceleration. Each should represent different formats (e.g., interactive item, image, and text) or content (e.g., the relationship between velocity and acceleration, the relationship between position and velocity) of this search topic. |

| Category | Type of Situation | Definition |
|---|---|---|
| Features/elements | Difficulty finding a toggle-based search feature | A situation that arises when BVI users have difficulty finding a search box due to the unrecognizable toggle-based search feature. |
| | Difficulty understanding a video feature | A situation that arises from difficulty interpreting the functionality of or executing a video-related feature due to inappropriate labeling. |
| | Difficulty navigating items on paginated sections | A situation that arises from difficulty selecting an item on paginated sections due to inappropriate labels of active elements. |
| | Difficulty distinguishing collection labels from thumbnail descriptions | A situation that arises from difficulty recognizing and comprehending collection titles due to the unseparated collection titles and ALT text for thumbnails. |
| Content | Difficulty recognizing the content of images | A situation that arises from difficulty obtaining details of images due to a lack of support for providing specifics of images. |
| | Difficulty recognizing the content of graphs | A situation that arises from difficulty obtaining details of graphs due to a lack of support for providing specifics of graphs. |
| Structure | Difficulty interacting with multilayered windows | A situation that arises from difficulty focusing on an active window due to the poor design of pop-up windows. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xie, I.; Choi, W.; Wang, S.; Lee, H.S.; Hong, B.H.; Wang, N.-C.; Cudjoe, E.K. Help-Seeking Situations Related to Visual Interactions on Mobile Platforms and Recommended Designs for Blind and Visually Impaired Users. J. Imaging 2024, 10, 205. https://doi.org/10.3390/jimaging10080205
