Abstract

This paper presents a hybrid study of convolutional neural networks (CNNs), machine learning (ML), and transfer learning (TL) applied to brain magnetic resonance imaging (MRI). The anatomy of the brain is very complex, and a tumour can form in any part of it inside the skull. MRI generates cross-sectional images in which radiologists can detect abnormalities. However, a very small tumour may be undetectable to the human visual system, which calls for complementary analysis using AI tools. CNNs explore the structure of an image and provide features to the SoftMax fully connected (SFC) layer, where the input items are assigned to their classes. Two comparison studies for the classification of meningioma tumours and healthy brains are presented in this paper: (i) classifying MRI images using an original CNN and two pre-trained CNNs, DenseNet169 and EfficientNetV2B0; and (ii) determining which CNN and ML combination yields the most accurate classification when SoftMax is replaced with one of three ML models: Random Forest (RF), K-Nearest Neighbors (KNN), or Support Vector Machine (SVM). In the binary classification of tumours and healthy brains, the EfficientNetV2B0-SVM combination achieves an accuracy of 99.5% on the test dataset. The results were generalised, and overfitting was prevented, by using the bagging ensemble method.

1. Introduction

According to Cancer Research UK, over 100 different types of brain tumours (BTs), both malignant and benign, can develop in the human brain. Statistics provided by the WHO show that the average survival rate is only 35% and that, in 2019, more than 700,000 people were diagnosed with a brain tumour [1]. In 2020, over 87,000 patients were diagnosed with brain tumours, and in 2021, over 84,170 cases were reported [2]. A WHO report indicates that BTs represent less than 2% of cancers in humans [3].

In order to detect the BTs, computed tomography (CT) and MRI (magnetic resonance imaging) techniques are used to scan the brain and display cerebral matter as texture pixels.

Machine and deep learning are two subfields of artificial intelligence. These tools can be used individually or together to classify the different types of diseases. Moreover, the detection process can be integrated into an automatic or semi-automatic model in order to achieve early detection of BTs, where the main advantage is an increase in survival rates [4]. Several efforts have been made to develop highly accurate and robust methods for the classification of BTs from CT images. The methods can be divided into the following categories: various pre-trained CNNs [4,5,6,7,8,9,10,11,12,13,14,15,16] and hybrid methods [8,9,10,17].

In our proposed framework, three deep learning and ML tools were used, and an evaluation of classification in the context of accuracy and area under the curve (AUC) was performed. This combination of CNNs and ML tools improves upon the low accuracy obtained for the base model, and it leads to the best classification accuracy when the ML tools are trained on features generated by CNNs.

The main objective of this paper is to compare different CNNs and ML models to identify the best combination for distinguishing between meningioma tumour and healthy brain tissue, using information extracted from brain MRI images. The secondary objective was to optimize a classical CNN in an ablation process and to obtain efficient results when ML models are trained with the features that pertain to an SFC layer.

The following contributions have been made in order to fulfil the paper’s objectives:

- Selection of an adequate brain MRI dataset that contains meningioma tumours and healthy brains.

- Development of an original CNN.

- Selection of two pre-trained models (i.e., EfficientNetV2B0 and DenseNet169) according to their top-5 accuracy on the ImageNet dataset.

- Use of the features of the three CNN models to train RF, KNN, and SVM machine learning classifiers.

- Measurement of classification accuracy and ROC-AUC for the base CNN and the transfer learning models.

- Use of a bagging ensemble approach with 5-fold cross-validation to prevent overfitting and aggregate the classifiers' results.

- Identification, in the last part of the study, of the best hybrid model that can differentiate between the studied classes.

The primary motivation is the worrying statistics on the global incidence of brain tumours. Integrating AI tools for classification into medical practice, and corroborating their results to obtain better classification, are significant steps toward advanced, integrated AI that enhances patient quality of life.

The limitations of this paper are the high computational power required and the dependence on a large set of images; in addition, this type of data occupies significant storage space.

The current paper is organized as follows in order to achieve the suggested goal and contributions. Section 2 contains a detailed selection of relevant studies that deal with the classification of different tumour types. The methodology is described in Section 3, where a step-by-step breakdown of this paper is given and a detailed flowchart is provided. The study elements, such as dataset, augmentation, feature extraction and evaluation, CNNs, ML models, and performance metrics, are also detailed. Section 4 is assigned to results and discussions. Section 5 contains a comparison with state-of-the-art models, and finally, the conclusions and some future prospects in this field of study are presented in Section 6.

2. Related Work

This section reviews prior studies on brain tumour classification that used CNNs with classic architectures, pre-trained CNNs, and ML models. Numerous papers have used CNNs to classify brain MRI images because of their superior accuracy.

The studies were carried out on the public databases Kaggle MRI [4,5,6,7,8,9,10,11,12,13,14], SARTAJ, and Br35H [6]. The MRI images were classified by specialists into healthy brains and different tumour types. These databases fed CNNs with normal architectures, pre-trained CNNs, or hybrid models of CNNs and ML models.

In our study, the original CNN, pre-trained CNNs, and hybrid models were applied. From the scientific literature, only the papers that dealt with this subject were selected. Furthermore, for a thorough overview, Table 1 specifies the references, datasets (with the number of MRI images), models, performance accuracy, and limitations.

Table 1. Comparative analysis with state-of-the-art works.

This paper proposes a hybrid model because most of the studied references pursued only one research direction, such as original CNN [6,13,18], original CNN and pre-trained CNN [4], pre-trained CNN [5,7,11,14], pre-trained CNN and ML [9,10,19,20], and proposed CNN and ML [8,12].

3. Proposed Method

This section first explains our proposed method in general terms. The following subsections then delve deeper into the details of each step.

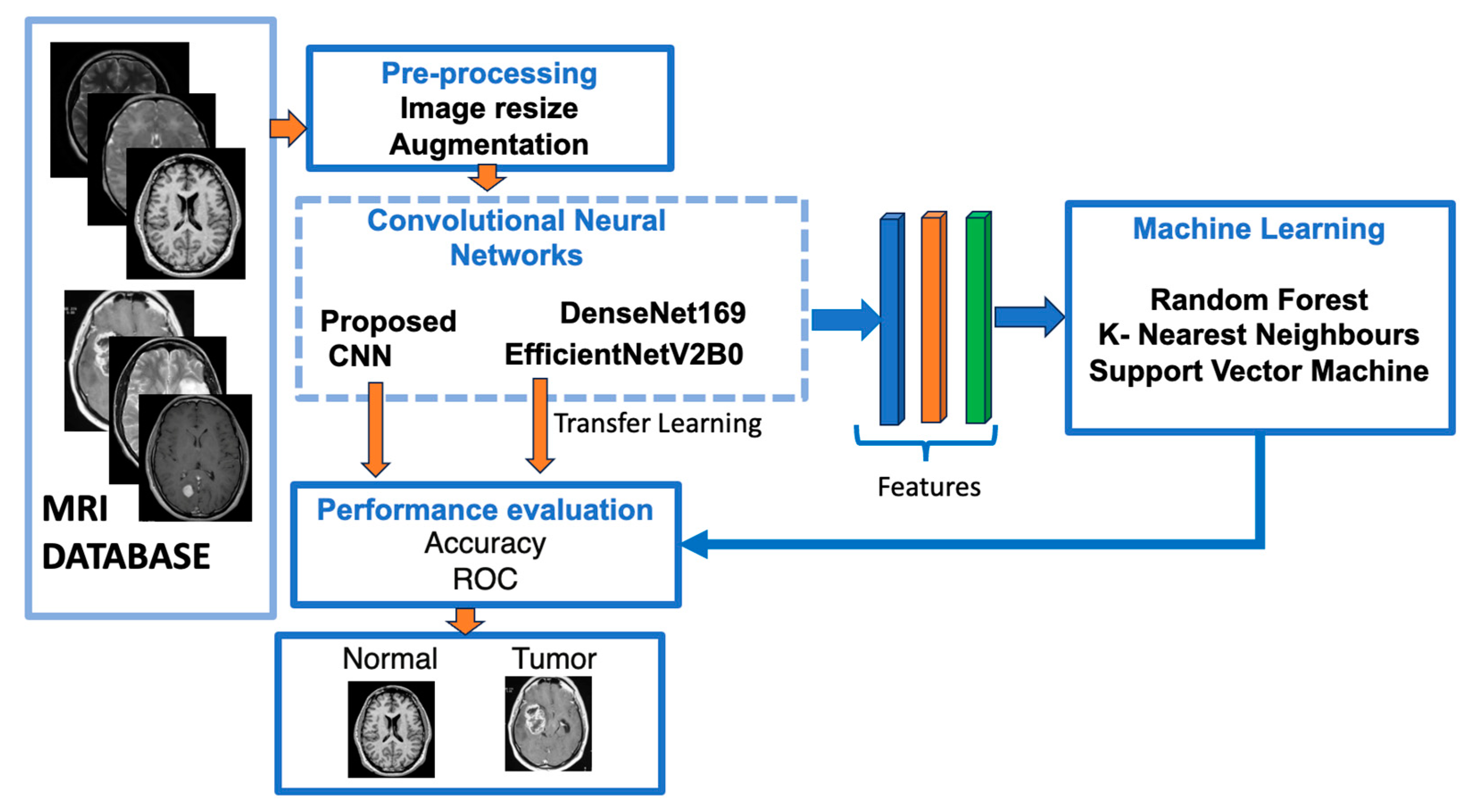

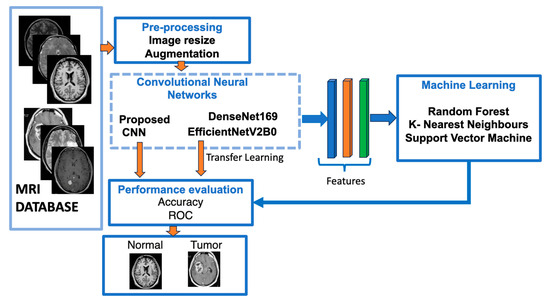

Figure 1 shows a flowchart of our suggested approach for classifying brain meningioma tumours versus normal brains.

Figure 1. The structure of our suggested models utilising deep learning approaches.

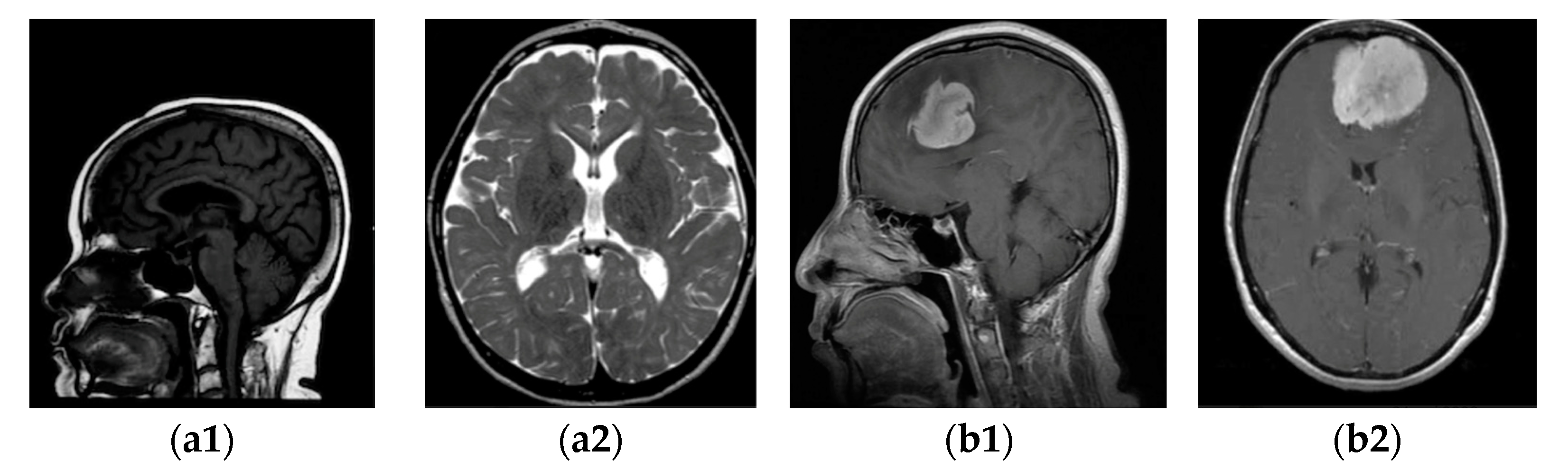

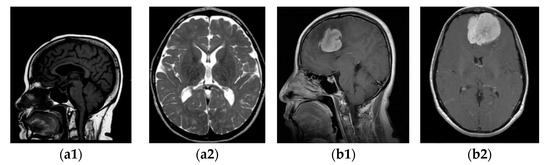

Firstly, we collected the meningioma and normal brain MRI images. The dataset is described in Section 3.1, and some samples are shown in Figure 2.

Figure 2. MRI images of two categories: (a1,a2) without tumour; (b1,b2) with tumour.

Before feeding the models, the input MRI images underwent pre-processing, namely resizing and augmentation; an example of image augmentation is shown in Figure 3. Then, the pre-processed images are fed into two pre-trained CNN models used as feature extractors, and the proposed CNN is built step by step. The three machine learning classifiers that were trained using features extracted from the trained CNN models are discussed in the same section.

The best mixture was chosen based on the best accuracy, F1-score, the Matthews correlation coefficient and the AUC computed from confusion matrices provided by classifiers.

3.1. Brain MRI Kaggle Dataset

The investigations in this research used a publicly available dataset acquired from the Kaggle platform [21,22]. It contains 3264 brain MRI images with different tumour types and without tumours. From this database, the following subset was selected for our study: for training, 822 images of meningioma tumours and 395 of healthy patients, and for testing, 115 images of meningioma tumours and 105 of healthy patients. Images with and without tumours were labelled “yes” and “no”, respectively. The acquisition method is T1-weighted MRI, in which image contrast is enhanced and fat tissue is highlighted, in all three planes: axial, sagittal, and coronal. Some samples with (b) and without (a) meningioma tumour in the sagittal (a1, b1) and horizontal (a2, b2) planes are displayed in Figure 2.

The experiments were performed on a MacBook Pro (Apple, California, US; sourced from Romania) with an Apple M1 Pro chip, 16 GB of memory, and 10 cores (8 performance and 2 efficiency).

Google Colab was chosen as the software environment because it is a powerful tool for Python 3.10 development. The image database was stored in Google Drive and connected to Google Colab. The main libraries, i.e., NumPy 1.26.4, Pandas 2.2.1, TensorFlow 2.17.0, Seaborn 0.13.2, and scikit-learn 1.4.1, were imported for displaying, processing, and classifying the features and images.

3.2. Data Augmentation and Pre-Processing

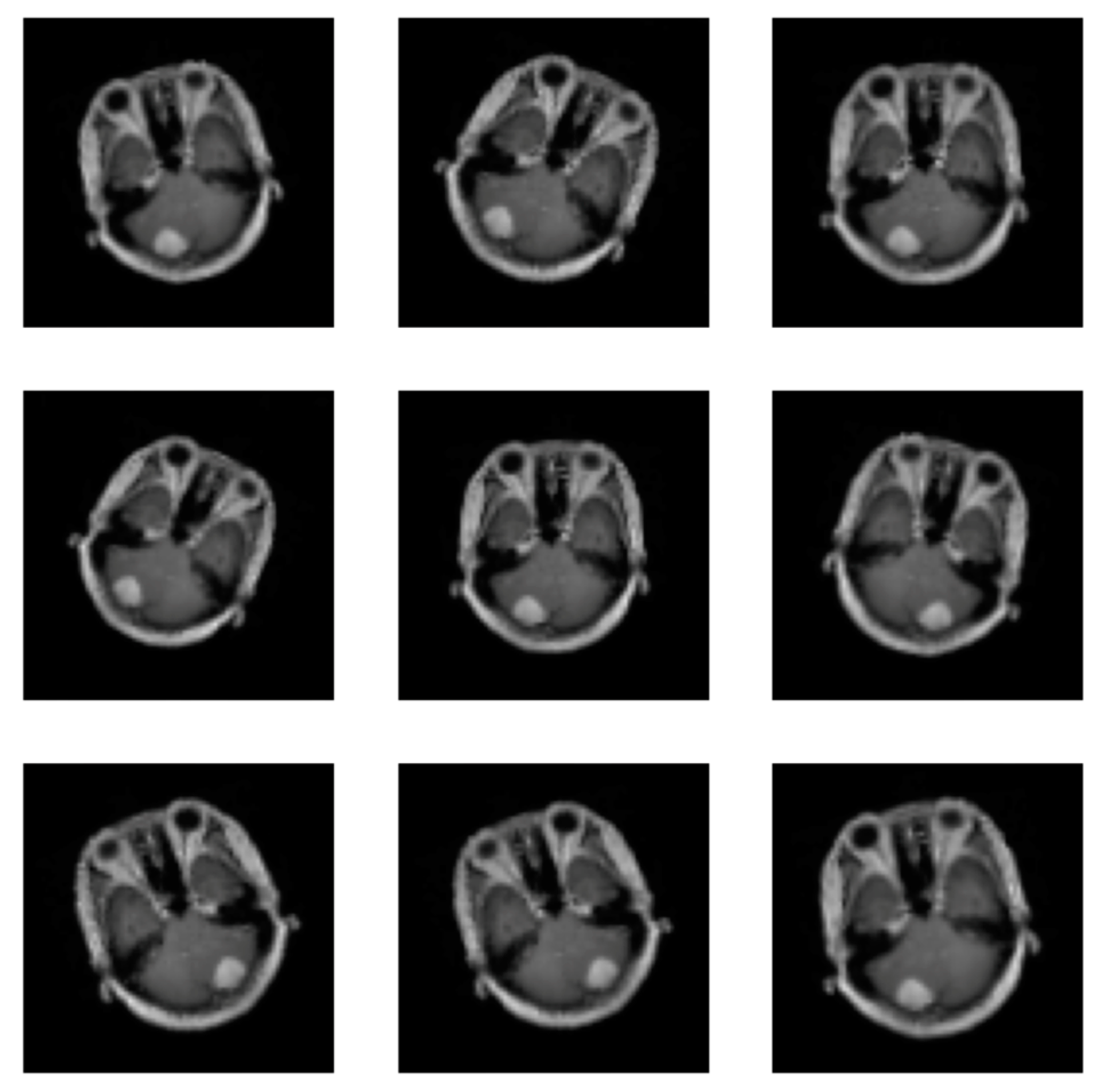

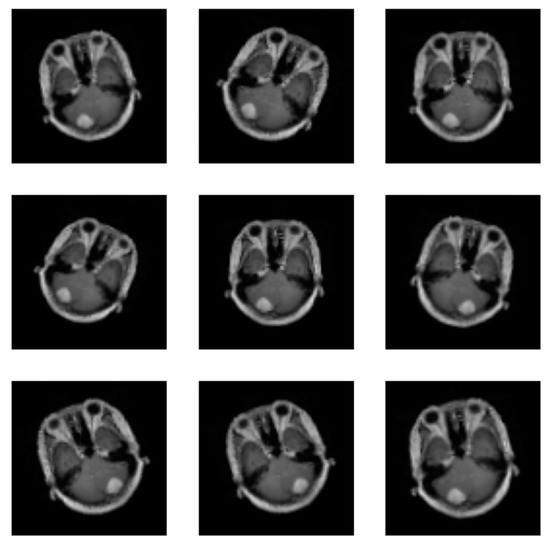

Deep learning needs a large amount of data to learn from. Therefore, data augmentation is employed to increase the quantity of available data by altering the original images, and the supplementary data can improve the classification results. Images can undergo operations such as rotation, scaling, translation, and filtering [23]. This paper uses random horizontal flip (the argument is the probability of the image being flipped), random rotation (the argument is the range of degrees by which the image is rotated), and random zoom (the argument is the range that sets the zoom percentage), as shown in Figure 3. All augmentation operations are applied in the training stage for the proposed CNN and for the pre-trained EfficientNetV2B0 and DenseNet169 CNNs, so all CNNs were trained with 2466 meningioma tumour images and 1185 healthy patient images.

Figure 3. Image augmentation with flip, rotation, and zoom methods.

All images were resized to a resolution of 80 × 80 for the proposed CNN and 224 × 224 for EfficientNetV2B0 and DenseNet169.
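As a rough illustration of the flip step and of how the training-set size scales, the following sketch uses only the Python standard library. The function names, the nested-list image representation, and the factor of three variants per original image are assumptions made for illustration; the text reports only the resulting totals (2466 and 1185), which happen to be three times the original training counts.

```python
import random

def random_horizontal_flip(image, p=0.5, rng=random):
    """Mirror a 2-D image (list of rows) left-to-right with probability p."""
    if rng.random() < p:
        return [row[::-1] for row in image]
    return [row[:] for row in image]

def augmented_size(n_original, variants_per_image):
    """Training images obtained when each original yields a fixed number of variants."""
    return n_original * variants_per_image

# Training counts quoted in Section 3.1; the factor 3 is an assumption
# that reproduces the totals quoted in Section 3.2.
train = {"meningioma": 822, "healthy": 395}
augmented = {label: augmented_size(n, 3) for label, n in train.items()}
print(augmented)  # {'meningioma': 2466, 'healthy': 1185}

# Hypothetical 2 x 3 "image" used only to show the flip.
tiny = [[1, 2, 3],
        [4, 5, 6]]
print(random_horizontal_flip(tiny, p=1.0))  # [[3, 2, 1], [6, 5, 4]]
```

In a Keras pipeline, the same operations would normally be delegated to the library's own augmentation utilities rather than written by hand.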

3.3. State-of-the-Art CNN and ML Models

To improve classification, the output of the fully connected layer of the CNN is used as the input for the ML models. A combination of CNNs and ML models was created to facilitate feature extraction and improve classification accuracy, and its empirical implementation was monitored. ML models are fast and efficient because their hyperparameters and built-in methods allow them to classify a large number of features; thus, combining them with CNNs can yield the best outcomes for brain tumour classification. In this study, three CNN models were used, and the numbers of total, trainable, and non-trainable parameters are shown in Table 2.

Table 2. The parameters used for all CNNs.

Table 3 presents the proposed CNN model, which includes several layers and parameters; the best architecture was chosen through an ablation process. The selection was based on obtaining a low number of parameters and a high accuracy.

Table 3. Ablation process for proposed CNN.

The pre-trained CNNs were chosen in accordance with Keras applications https://keras.io/api/applications/ (accessed on 10 June 2024). The top-5 accuracy was 93.2% for DenseNet169 (14.3 million parameters) and 94.3% for EfficientNetV2B0 (7.2 million parameters).

The empirically optimal architecture of the proposed CNN is highlighted in bold in Table 3. The batch size was 20, the image size was 80 × 80, and there were 15 epochs and 3 convolutional layers. A total of 15 layers define the depth of the proposed CNN, including one input layer, three max-pooling layers, three dropout layers, one flattening layer, two dense layers, and one output layer. The original architecture and the parameters of each layer are shown in Table 4.

Table 4. Parameter values at each layer of the proposed CNN model.

The roles of the layers and functions in the proposed CNN are as follows. The rectified linear unit (ReLU) activation function is applied in the first dense layer; it performs a threshold operation on each element of the input, which allows the layer to capture more complex relationships between the input and output layers. In the second dense layer, the SoftMax function is used for the binary classification task [24]. In addition to what is displayed in Table 4, each convolutional layer uses the ReLU function in order to detect the features within the image. The model also has a pooling layer between consecutive convolutional layers to reduce the spatial dimensions of the feature maps and a dropout layer to prevent overfitting. A flattening layer reshapes the output into a one-dimensional vector, and the SoftMax function attached to the final dense layer performs the classification. Thus, an adequate feature extractor is set up for image classification.
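To make the effect of the convolution and pooling layers on the spatial dimensions concrete, the sketch below traces an 80 × 80 input through three convolution and max-pooling blocks. The 3 × 3 kernels, unit stride, absence of padding, and 2 × 2 pooling windows are assumptions chosen for illustration; the actual values are those listed in Table 4.

```python
def conv_out(size, kernel=3, stride=1, padding=0):
    """Spatial size after a convolution (standard output-size formula)."""
    return (size + 2 * padding - kernel) // stride + 1

def pool_out(size, window=2):
    """Spatial size after non-overlapping max pooling."""
    return size // window

def trace_sizes(input_size=80, n_blocks=3):
    """Feature-map side length after each convolution and each pooling layer."""
    sizes = [input_size]
    size = input_size
    for _ in range(n_blocks):
        size = conv_out(size)   # convolution shrinks the map by kernel - 1
        sizes.append(size)
        size = pool_out(size)   # pooling halves the map (rounding down)
        sizes.append(size)
    return sizes

print(trace_sizes())  # [80, 78, 39, 37, 18, 16, 8]
```

Under these assumptions, the flattening layer would receive 8 × 8 feature maps, with a vector length of 64 times the number of filters in the last convolutional layer.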

EfficientNetV2B0 belongs to the EfficientNet family of CNN models proposed by Tan et al. [25]. The authors introduced the idea of uniformly scaling all dimensions of depth, width, and resolution using a compound coefficient. The baseline version, EfficientNet-B0, builds on the inverted bottleneck residual blocks of MobileNetV2. The architecture of EfficientNet consists of mobile inverted bottleneck layers, which combine depth-wise separable convolutions and inverted residual blocks. In addition, the model uses squeeze-and-excitation optimisation to further enhance its performance. This type of CNN is pre-trained on CIFAR-100 and ImageNet.

DenseNet169 (Densely Connected Convolutional Networks) belongs to the DenseNet group proposed by Huang et al. [26] and is pre-trained on the CIFAR-10, CIFAR-100, SVHN, and ImageNet datasets. The main difference between the DenseNet versions is the size and accuracy of the model. The DenseNet169 architecture consists of convolutional, max-pooling, dense, and transition layers connected in a feed-forward fashion. Moreover, like the proposed CNN, DenseNet169 uses two main activation functions: ReLU and SoftMax.

3.4. Training of CNNs and ML Models

After the ablation process for the proposed CNN, the following hyperparameter values were established: a batch size of 20, an image size of 80 × 80, and 15 epochs. The same batch size and number of epochs were kept for EfficientNetV2B0 and DenseNet169; only the input resolution differed, being 224 × 224 for the pre-trained CNNs, as stated in Section 3.2. The learning rate for all CNNs was set to 0.001.

For the ML models, the hyperparameters were tuned, and the combination of values that yielded the highest accuracy was selected. The values that meet this criterion for each ML classifier are shown in Table 5. The tuning was performed in accordance with the type and number of hyperparameters of each ML model. Two key hyperparameters influence the performance of RF: the number of trees (NT) and the maximum depth of those trees (MD). We set the tree depth to 10, 15, and 20 and the number of trees to 50, 100, and 150, and used the Gini index as the split criterion instead of entropy or log loss. The SVM classifier has two main hyperparameters, C and Gamma, whose values were taken from [0.00001, 0.0001, 0.001, 0.01, 0.1, 1, 10, 100, 1000, 10,000]; linear, sigmoid, and radial basis function kernels were considered. The number of neighbours of the KNN classifier was varied from 1 to 10, and the KDTree or BallTree classes can be used to find the nearest neighbours.

Table 5. The established hyperparameters for MLs.
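The size of the search spaces described above can be checked with a short enumeration. This is a minimal sketch that assumes an exhaustive (Cartesian) search over the listed values; the text does not state the search strategy, and the dictionary keys simply follow scikit-learn naming conventions for readability.

```python
from itertools import product

# Candidate values quoted in Section 3.4.
rf_grid = {"max_depth": [10, 15, 20], "n_estimators": [50, 100, 150]}
svm_values = [10.0 ** e for e in range(-5, 5)]  # 0.00001 ... 10,000
svm_grid = {
    "C": svm_values,
    "gamma": svm_values,
    "kernel": ["linear", "sigmoid", "rbf"],
}
knn_grid = {"n_neighbors": list(range(1, 11)), "algorithm": ["kd_tree", "ball_tree"]}

def expand(grid):
    """List every combination of hyperparameter values in a grid."""
    keys = list(grid)
    return [dict(zip(keys, combo)) for combo in product(*(grid[k] for k in keys))]

for name, grid in [("RF", rf_grid), ("SVM", svm_grid), ("KNN", knn_grid)]:
    print(name, len(expand(grid)))
# RF 9
# SVM 300
# KNN 20
```

Each combination would then be scored on the validation data, and the one with the highest accuracy retained.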

3.5. Deep Feature Extraction and Classification

Key elements that can influence the outcome include feature extraction and the choice of an adequate classifier. In a previous study, fractal dimension features were extracted from breast ultrasound (US) images and classified with RF, Extra Trees (ET), and XGBoost classifiers [27]. The combination of geometric features with AdaBoost, KNN, DT, and Gaussian Naïve Bayes classifiers produced interesting results on skin lesion images [28]. In [29], PyCaret AutoML performs complex tasks on features extracted from fundus eye images for classification.

In all of these studies, the features were extracted with image processing methods from the region of interest or from the whole image. The present study extends this line of work to the classification of features produced by CNNs.

The CNN produces features automatically, and the classifiers are applied to the penultimate layer of each CNN. For each CNN, training, validation, and test sets are selected. The original brain MRI images are fed to the CNNs, and the output is the set of features extracted from the fully connected layer. Subsequently, predictions of healthy versus tumour brains are made using the SVM, RF, and KNN classifiers with the new training, validation, and test data.

An SVM is an ML tool often used for the classification of features extracted from brain MRI [29]. This tool uses the maximum separating hyperplane [20] to distinguish between the healthy and tumour brains. RF is an ensemble learning method for classification based on decision trees, and together with pre-trained CNNs, it provides a comprehensive performance analysis of the classification problem [27,29,30]. The combination of CNN and KNN effectively detects various forms of brain tumours with promising accuracy [31]. KNN is a non-parametric supervised learning method that has been successfully used to solve classification problems [32].
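The idea of replacing the SoftMax layer with a classical classifier can be illustrated with a small nearest-neighbour example. This is a minimal sketch and not the authors' implementation: the two-dimensional feature vectors are hypothetical stand-ins for penultimate-layer features, which in practice are much longer, and only the "yes"/"no" labels come from the dataset description.

```python
from collections import Counter
from math import dist

def knn_predict(train_features, train_labels, query, k=3):
    """Label a query feature vector by majority vote among its k nearest neighbours."""
    ranked = sorted(
        zip(train_features, train_labels),
        key=lambda pair: dist(pair[0], query),  # Euclidean distance to the query
    )
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]

# Hypothetical 2-D "deep features" for six training images.
features = [(0.1, 0.2), (0.2, 0.1), (0.15, 0.25),
            (0.9, 0.8), (0.8, 0.9), (0.85, 0.75)]
labels = ["no", "no", "no", "yes", "yes", "yes"]

print(knn_predict(features, labels, (0.2, 0.2)))  # no
print(knn_predict(features, labels, (0.8, 0.8)))  # yes
```

The SVM and RF classifiers are used in the same way: they receive the extracted feature vectors and the labels, and the CNN itself is no longer involved in the final decision.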

3.6. Feature Evaluation

The main performance metrics considered in this work are accuracy (ACC) and AUC. The accuracy score is determined using the true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN) extracted from the confusion matrix. The ROC curve is obtained by plotting sensitivity on the y-axis versus 1 − specificity on the x-axis.

The F1-score is computed as the harmonic mean of precision and recall. The closer the F1-score is to one, the more reliable the proposed model is.

The selected dataset is unbalanced; for this reason, the Matthews correlation coefficient (MCC) is also used, because it remains informative under class imbalance [28].

The proposed metrics are expressed by Equations (1)–(5):

The ROC curve is used to validate the objective of this paper, i.e., classifying meningioma tumours and healthy brains, as the ROC curve is independent of the decision threshold and invariant to a priori class probability distributions [33].
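For reference, the metrics discussed in this section can be computed from the four confusion-matrix counts using their standard textbook definitions, as in the sketch below. The counts in the example are hypothetical and are not taken from Table 6; the function name is likewise illustrative.

```python
from math import sqrt

def classification_metrics(tp, tn, fp, fn):
    """Standard accuracy, precision, recall, F1-score, and MCC from confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0  # also called sensitivity
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    denom = sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1, "mcc": mcc}

# Hypothetical counts for a test set of 115 tumour and 105 healthy images.
metrics = classification_metrics(tp=110, tn=100, fp=5, fn=5)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Unlike accuracy, the MCC uses all four counts symmetrically, which is why it is preferred when one class is much larger than the other.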

4. Results and Discussions

In this section, we discuss the success of the three hybrid approaches: proposed CNN-ML model, pre-trained EfficientNetV2B0-ML model, and DenseNet169-ML model.

In the first combination, an original CNN with fifteen layers is proposed and trained with the brain MRI images described in Section 3.1. Transfer learning with the pre-trained CNNs was performed on data from the same database.

Each ML classifier was connected to the penultimate layer of the corresponding CNN, so that the features from this layer fed the SVM, RF, and KNN classifiers.

The aim of our experiments is to find the hybrid combination that provides the most accurate approach for brain meningioma tumour diagnosis.

In the first experiment, we tested our own CNN in the ablation process. The best combination is highlighted in Table 3, and its architecture is detailed in Table 4. With the obtained structure, the best accuracy on the test dataset was 0.945.

The second experiment involved the transfer learning of two pre-trained CNNs and verifying their efficiency in terms of accuracy. The initial accuracy on the test dataset without ML tools was 0.985 for EfficientNetV2B0 and 0.981 for DenseNet169, respectively. Next, we found that the accuracy could be improved if the ML classifiers were applied to the features stored on the penultimate layer of each CNN. Hence, the following empirical step was performed.

The third experiment started with the combinations of CNN and ML models, pre-trained CNN EfficientNetV2B0 and ML models, and DenseNet169 and ML models to verify if the accuracy was improved.

Table 6 below shows the train and validation sets, the combinations of CNNs and ML models, the confusion matrices, and the accuracy for the validation and test datasets. A bagging ensemble method for each classifier was applied to the features generated on the penultimate layer [27]. The 5-fold cross-validation was established for optimally selecting the classifier.

Table 6. Experimental results for combination CNNs-ML models.
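The two resampling ideas mentioned above, 5-fold cross-validation and bagging, can be sketched with the standard library alone. This is an illustration under stated assumptions rather than the procedure actually used: the helper names are hypothetical, the sample count of 1217 (822 + 395 training images) is used only as an example, and the classifiers themselves are omitted.

```python
import random
from collections import Counter

def k_fold_indices(n_samples, k=5, seed=0):
    """Shuffle sample indices and split them into k nearly equal validation folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]

def bootstrap_sample(indices, rng):
    """Draw len(indices) indices with replacement (one bagging resample)."""
    return [rng.choice(indices) for _ in indices]

def majority_vote(predictions):
    """Aggregate the labels predicted by several base models for one sample."""
    return Counter(predictions).most_common(1)[0][0]

folds = k_fold_indices(1217, k=5)
print([len(fold) for fold in folds])  # [244, 244, 243, 243, 243]

# Each base model would be trained on its own bootstrap resample of the
# training indices; their predictions are then combined by voting.
rng = random.Random(42)
train_idx = [i for fold in folds[1:] for i in fold]
resample = bootstrap_sample(train_idx, rng)
print(len(resample) == len(train_idx))          # True
print(majority_vote(["yes", "no", "yes"]))      # yes
```

Because each base model sees a different resample, averaging their votes reduces variance, which is the mechanism by which bagging limits overfitting.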

Compared with the initial accuracy obtained on the test dataset by the proposed CNN, an improvement was obtained for SVM and RF, while KNN remained the same. In this case, the performance was improved, and the two classifiers, SVM and RF, learned better from the features provided by our CNN.

Combining the pre-trained EfficientNetV2B0 and DenseNet169 CNNs with the ML classifiers brought improvements on the test dataset. SVM increased the accuracy for DenseNet169 from 0.891 to 0.986, and for EfficientNetV2B0, from 0.985 to 0.995. Furthermore, Table 6 shows that all classifiers exceeded the initial accuracy.

Other reliable metrics are the F1-score and MCC. The former assesses the predictive performance of a model, and the latter is more informative than the F1-score and accuracy when classes are imbalanced. The processed dataset has 937 meningioma tumour images and 500 healthy patient images. For the same combination of EfficientNetV2B0 and SVM, the F1-score and MCC reach the highest values of 0.997 and 0.990, respectively. The results provided by the proposed CNN are comparable with those of the pre-trained CNNs; the difference is that the proposed CNN works best with RF, with an accuracy of 0.986 obtained on the test dataset.

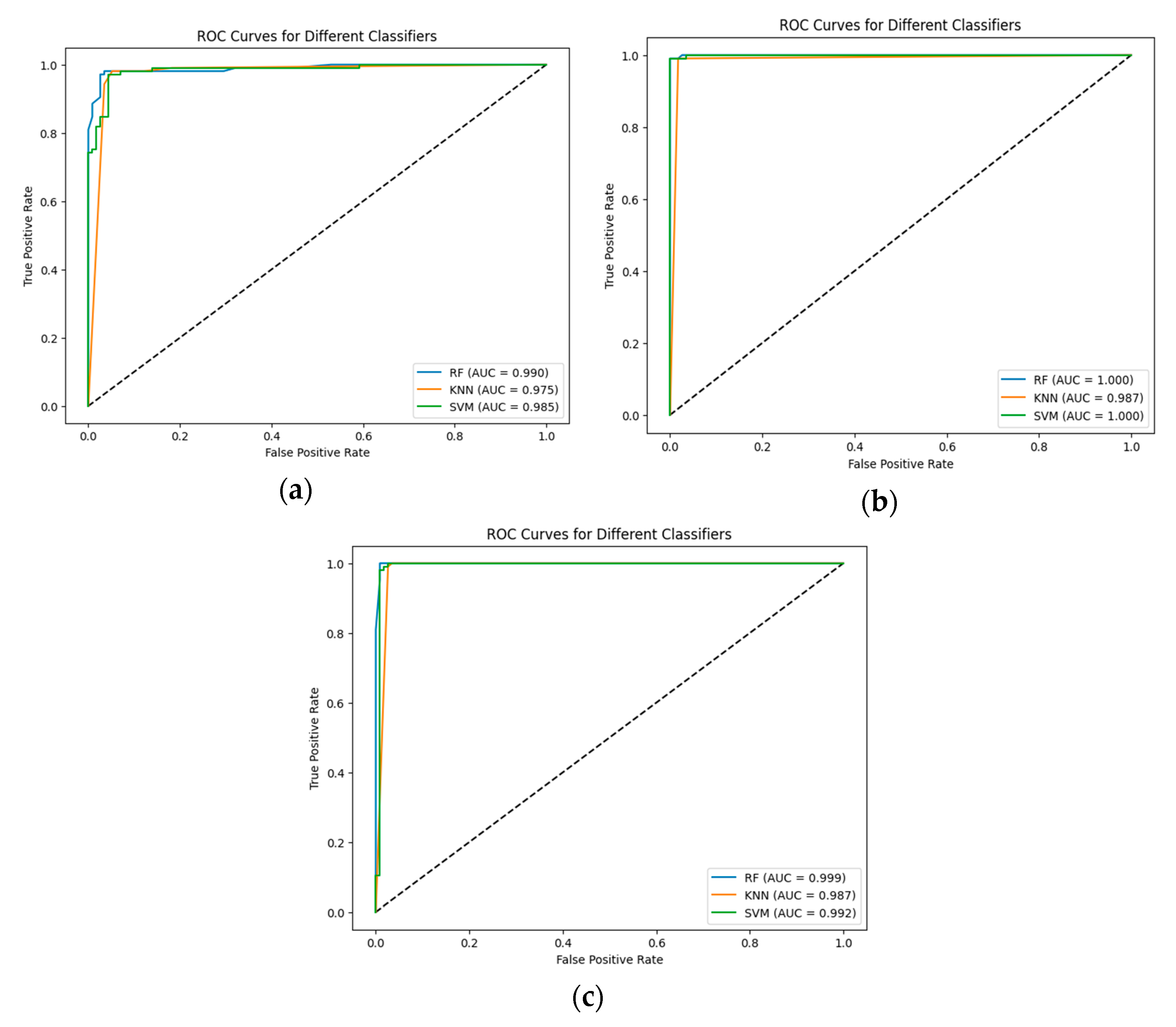

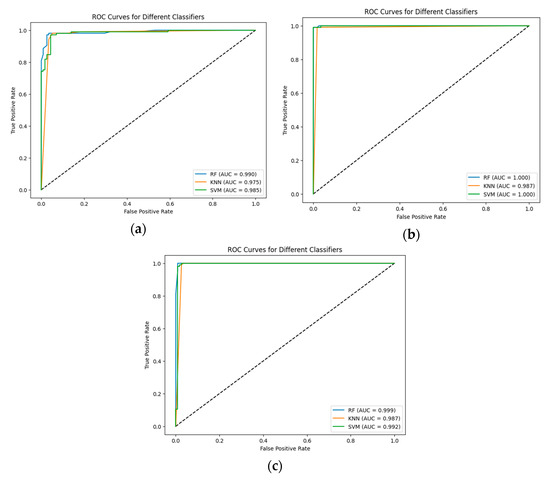

The results were validated using the ROC curve. The ROC curves and area under the curve values for each CNN and classifier are shown in Figure 4.

Figure 4. The ROC curves and area under the curve for each classifier: (a) Proposed CNN; (b) EfficientNetV2B0; and (c) DenseNet169.

A higher ROC-AUC value indicates better performance of the proposed hybrid models. In the present study, we observe that the pre-trained EfficientNetV2B0 has the best performance, both in initial accuracy and in combination with all the proposed ML models, as confirmed by the ROC-AUC, which is 1.00 for RF and SVM. The proposed CNN combined with RF is also validated by an ROC-AUC of 0.99.
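The ROC-AUC has a simple interpretation: it is the probability that a randomly chosen tumour image receives a higher score than a randomly chosen healthy image. The sketch below computes it directly from that definition; the labels and scores are hypothetical, and the quadratic pairwise loop is chosen for clarity rather than efficiency.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes are required to compute the AUC")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))

# Hypothetical classifier scores: 1 = tumour, 0 = healthy.
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
print(roc_auc([0, 0, 1, 1], [0.1, 0.2, 0.7, 0.9]))   # 1.0
```

An AUC of 1.0 therefore means that every tumour image is scored above every healthy image, regardless of the decision threshold.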

5. Comparison with the State-of-the-Art Models

As previously discussed, the suggested hybrid models, which use three distinct classifiers (RF, SVM, and KNN), have demonstrated the highest results in accuracy and ROC-AUC on the brain MRI dataset. These promising findings answer our original question about the utility of the proposed CNN model, whose classification performance was contrasted with that of the pre-trained CNNs available in the literature. To complete the evaluation, we compare our models against the approaches discussed in our literature review. The methods in the related work section were tested on the brain MRI dataset that is accessible on the Kaggle platform. We compared all three of our approaches (proposed CNN-ML, EfficientNetV2B0-ML, and DenseNet169-ML) with the results reported on that dataset. Table 7 shows the results of our proposal and the state-of-the-art models.

Table 7. Comparison results with state-of-the-art CNN models.

As can be seen in Table 7, our proposals yield results that are similar to those of the state-of-the-art models, and our approach produces the best accuracy among the hybrid combinations. The proposed models are DenseNet169-SVM (98.6%), CNN-RF (97.2%), and EfficientNetV2B0-SVM (99.5%). The SVM classifier outperforms the other classifiers in the suggested models and in the cutting-edge techniques, producing the best results when it replaces the SoftMax activation function. The EfficientNetV2B0-SVM combination outperforms the other pertinent experiments that employed ResNet50-SVM, AlexNet-SGD, and AlexNet-SSMO.

6. Conclusions

This study demonstrates the potential of hybrid CNN-ML models and a novel CNN-ML model for accurately classifying brain meningioma tumours from brain MRI images. The ML classifiers SVM, KNN, and RF were efficiently used to classify features extracted from fully connected layers. Among the three models tested, the EfficientNetV2B0-SVM model achieved the highest classification accuracy of 99.5%. In addition, the proposed CNN model, together with the RF classifier, gives an accuracy of 97.2%. The proposed CNN-RF is an efficient model from the point of view of time and resources. Overall, we succeeded in accurately classifying meningioma brain tumours in this study, which points to the importance of using a hybrid model.

Future work can be extended to other types of images and pathologies and to automated machine learning tools for feature classification in a tuning process.

Author Contributions

Conceptualization, S.M. and M.B.; formal analysis, S.M. and G.T.; investigation, G.T.; methodology, S.M. and M.B.; software, S.M. and G.T.; validation, S.M. and M.B.; writing—original draft preparation, S.M. and G.T.; writing—review and editing, S.M. and M.B. All authors have read and agreed to the published version of the manuscript.

Funding

This study received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

This study does not contain any studies with human participants or animals performed by any of the authors.

Data Availability Statement

The data that support the findings of this study are openly available at https://data.mendeley.com/datasets/w4sw3s9f59/1 (accessed on 10 June 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Rehman, A.; Khan, M.A.; Saba, T.; Mehmood, Z.; Tariq, U.; Ayesha, N. Microscopic brain tumour detection and classification using 3D CNN and feature selection architecture. Microsc. Res. Tech. 2021, 84, 133–149. [Google Scholar] [CrossRef] [PubMed]

- Sharif, M.I.; Li, J.P.; Khan, M.A.; Saleem, M.A. Active deep neural network features selection for segmentation and recognition of brain tumours using MRI images. Pattern Recogn. Lett. 2020, 129, 181–189. [Google Scholar] [CrossRef]

- Mehrotra, R.; Ansari, M.A.; Agrawal, R.; Anand, R.S. A Transfer Learning approach for AI-based classification of brain tumours. Mach. Learn. Appl. 2020, 2, 10–19. [Google Scholar]

- Mahmud, M.I.; Mamun, M.; Abdelgawad, A. A Deep Analysis of Brain Tumour Detection from MR Images Using Deep Learning. Netw. Algorithms 2023, 16, 176. [Google Scholar] [CrossRef]

- Ahmmed, S.; Podder, P.; Mondal, M.R.H.; Rahman, S.M.A.; Kannan, S.; Hasan, M.J.; Rohan, A.; Prosvirin, A.E. Enhancing Brain Tumour Classification with Transfer Learning across Multiple Classes: An In-Depth Analysis. BioMedInformatics 2023, 3, 1124–1144. [Google Scholar] [CrossRef]

- Albalawi, E.; Thakur, A.; Dorai, D.R.; Bhatia Khan, S.; Mahesh, T.R.; Almusharraf, A.; Aurangzeb, K. Enhancing brain tumor classification in MRI scans with a multi-layer customized convolutional neural network approach. Front. Comput. Neurosci. 2024, 18, 1418546. [Google Scholar] [CrossRef]

- Abdusalomov, A.B.; Mukhiddinov, M.; Whangbo, T.K. Brain tumour detection based on deep learning approaches and magnetic resonance imaging. Cancers 2023, 15, 4172. [Google Scholar] [CrossRef]

- Saeedi, S.; Rezayi, S.; Keshavarz, H.; Niakan-Kalhori, S.R. MRI-based brain tumour detection using convolutional deep learning methods and chosen machine learning techniques. BMC Med. Inform. Decis. Mak. 2023, 23, 16. [Google Scholar] [CrossRef]

- Celik, M.; Inik, O. Development of hybrid models based on deep learning and optimized machine learning algorithms for brain tumour Multi-Classification. Expert Syst. Appl. 2024, 238, 122159. [Google Scholar] [CrossRef]

- Sarkar, A.; Maniruzzaman, M.; Alahe, M.A.; Ahmad, M. An Effective and Novel Approach for Brain Tumour Classification Using AlexNet CNN Feature Extractor and Multiple Eminent Machine Learning Classifiers in MRIs. J. Sens. 2023, 2023, 1224619. [Google Scholar] [CrossRef]

- Mahmoud, A.; Awad, N.A.; Alsubaie, N.; Ansarullah, S.I.; Alqahtani, M.S.; Abbas, M.; Usman, M.; Soufiene, B.O.; Saber, A. Advanced Deep Learning Approaches for Accurate Brain Tumour Classification in Medical Imaging. Symmetry 2023, 15, 571. [Google Scholar] [CrossRef]

- Bansal, S.; Jadon, R.S.; Gupta, S.K. A Robust Hybrid Convolutional Network for Tumour Classification Using Brain MRI Image Datasets. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 576. [Google Scholar]

- Woźniak, M.; Siłka, J.; Wieczorek, M. Neural Computing and Applications Deep neural network correlation learning mechanism for CT brain tumour detection. Neural Comput. Appl. 2021, 35, 14611–14626. [Google Scholar] [CrossRef]

- Zebari, N.A.; Alkurdi, A.A.H.; Marqas, R.B.; Salih, M.S. Enhancing Brain Tumour Classification with Data Augmentation and DenseNet121. Acad. J. Nawroz Univ. 2023, 12, 323–334. [Google Scholar] [CrossRef]

- Haque, R.; Hassan, M.M.; Bairagi, A.K.; Shariful Islam, S.M. NeuroNet19: An explainable deep neural network model for the classification of brain tumors using magnetic resonance imaging data. Sci. Rep. 2024, 14, 1524. [Google Scholar] [CrossRef]

- Thakur, M.; Kuresan, H.; Dhanalakshmi, S.; Lai, K.W.; Wu, X. Soft Attention Based DenseNet Model for Parkinson’s Disease Classification Using SPECT Images. Front. Aging Neurosci. 2022, 14, 908143. [Google Scholar] [CrossRef]

- Hassan, M.M.; Haque, R.; Shariful Islam, S.M.; Meshref, H.; Alroobaea, R.; Masud, M.; Bairagi, A.K. NeuroWave-Net: Enhancing epileptic seizure detection from EEG brain signals via advanced convolutional and long short-term memory networks. AIMS Bioeng. 2024, 11, 85–109. [Google Scholar] [CrossRef]

- Wu, X.; Zhang, Y.T.; Lai, K.W.; Yang, M.Z.; Yang, G.L.; Wang, H.H. A Novel Centralized Federated Deep Fuzzy Neural Network with Multi-objectives Neural Architecture Search for Epistatic Detection. IEEE Trans. Fuzzy Syst. 2024. [Google Scholar] [CrossRef]

- Casapu, C.I.; Moldovanu, S. Classification of Microorganism Using Convolutional Neural Network and H2O AutoML. Syst. Theory Control. Comput. J. 2024, 4, 15–21. [Google Scholar] [CrossRef]

- Wu, X.; Wei, Y.; Jiang, T.; Wang, Y.; Jiang, S. A micro-aggregation algorithm based on density partition method for anonymizingbiomedical data. Curr. Bioinform. 2019, 7, 667–675. [Google Scholar] [CrossRef]

- Bhuvaji, S.; Kadam, A.; Bhumkar, P.; Dedge, S.; Kanchan, S. Brain tumour classification (MRI). Kaggle 2020.

- Shahid, H.; Khalid, A.; Liu, X.; Irfan, M.; Ta, D. A deep learning approach for the photoacoustic tomography recovery from undersampled measurements. Front. Neurosci. 2021, 15, 598693.

- Li, Q.; Yu, Z.; Wang, Y.; Zheng, H. TumorGAN: A Multi-Modal Data Augmentation Framework for Brain Tumor Segmentation. Sensors 2020, 20, 4203.

- Boob, D.; Dey, S.S.; Lan, G.H. Complexity of training ReLU neural network. Discret. Optim. 2022, 44, 100620.

- Tan, M.; Le, Q.V. EfficientNetV2: Smaller Models and Faster Training. arXiv 2021, arXiv:2104.00298.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Tăbăcaru, G.; Moldovanu, S.; Barbu, M. Texture Analysis of Breast US Images Using Morphological Transforms, Hausdorff Dimension and Bagging Ensemble Method. In Proceedings of the 2024 32nd Mediterranean Conference on Control and Automation (MED), Chania, Greece, 11–14 June 2024.

- Moldovanu, S.; Miron, M.; Rusu, C.-G.; Biswas, K.C.; Moraru, L. Refining skin lesions classification performance using geometric features of superpixels. Sci. Rep. 2023, 13, 11463.

- Tăbăcaru, G.; Moldovanu, S.; Răducan, E.; Barbu, M. A Robust Machine Learning Model for Diabetic Retinopathy Classification. J. Imaging 2023, 10, 8.

- Uyar, K.; Taşdemir, S.; Ülker, E.; Öztürk, M.; Kasap, H. Multi-class brain normality and abnormality diagnosis using modified Faster R-CNN. Int. J. Med. Inform. 2021, 155, 104576.

- Shanjida, S.; Islam, S.; Mohiuddin, M. MRI-Image based Brain Tumour Detection and Classification using CNN-KNN. In Proceedings of the 2022 IEEE IAS Global Conference on Emerging Technologies (GlobConET), Arad, Romania, 20–22 May 2022; pp. 900–905.

- Deepak, S.; Ameer, P.M. Automated categorization of brain tumour from MRI using CNN features and SVM. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 8357–8369.

- Bradley, A.P. The use of the area under the ROC curve in the evaluation of machine learning algorithms. Pattern Recognit. 1997, 30, 1145–1159.

- Siar, M.; Teshnehlab, M. Brain Tumour Detection Using Deep Neural Network and Machine Learning Algorithm. In Proceedings of the 2019 9th International Conference on Computer and Knowledge Engineering (ICCKE), Mashhad, Iran, 24–25 October 2019; IEEE: Piscataway, NJ, USA; pp. 363–368.

- AlSaeed, D.; Omar, S.F. Brain MRI analysis for Alzheimer’s disease diagnosis using CNN-based feature extraction and machine learning. Sensors 2022, 22, 2911.

- Bohra, M.; Gupta, S. Pre-trained CNN Models and Machine Learning Techniques for Brain Tumour Analysis. In Proceedings of the 2nd International Conference on Emerging Frontiers in Electrical and Electronic Technologies (ICEFEET), Patna, India, 24–25 June 2022; pp. 1–6.

- Khushi, H.M.T.; Masood, T.; Jaffar, A.; Akram, S.; Bhatti, S.M. Performance Analysis of state-of-the-art CNN Architectures for Brain Tumour Detection. Int. J. Imaging Syst. Technol. 2024, 34, e22949.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).