Vision-Based Flying Obstacle Detection for Avoiding Midair Collisions: A Systematic Review

Abstract

1. Introduction

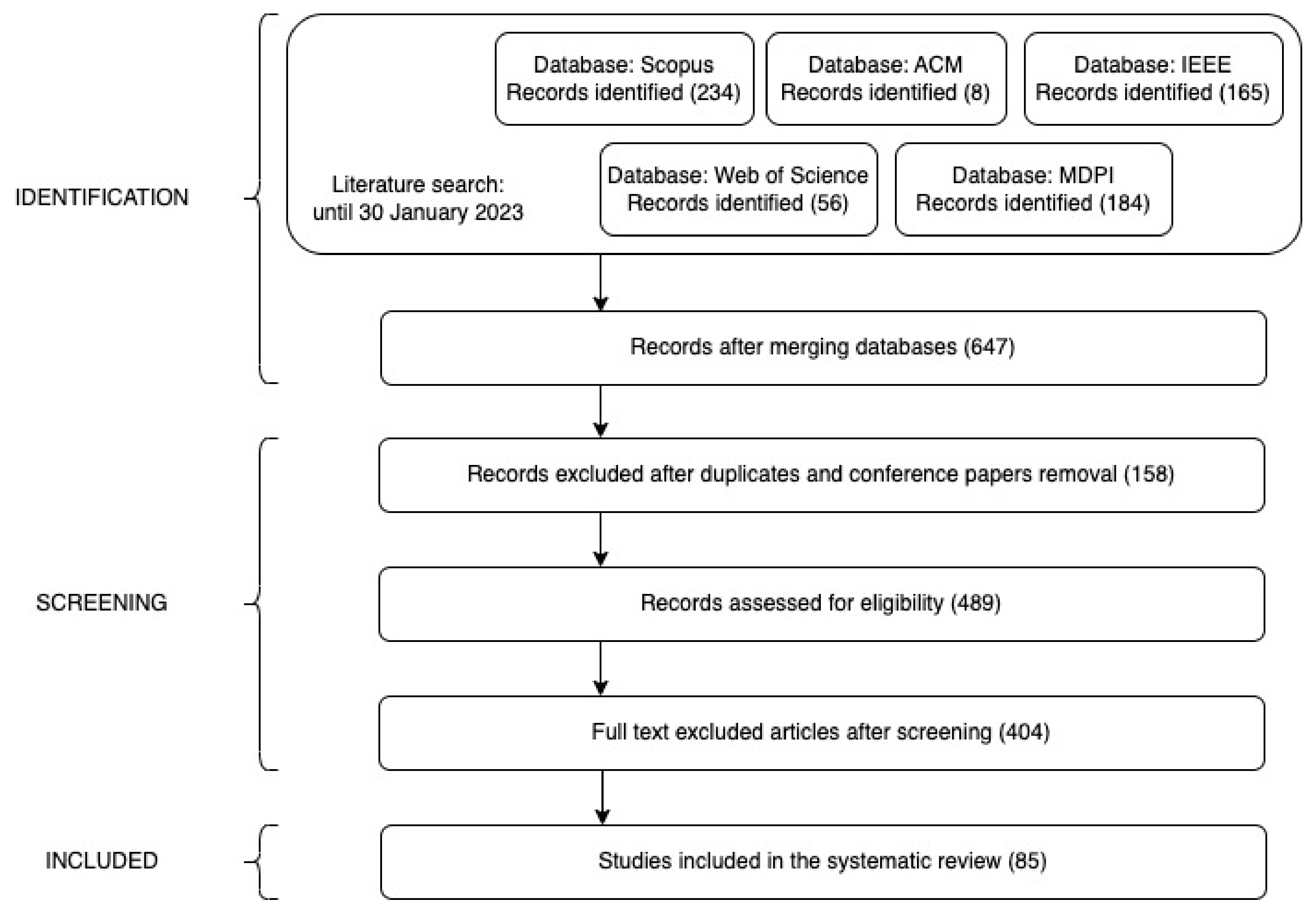

2. Research Methodology

2.1. Search Criteria

Inclusion criteria:

- The papers used computer vision as the only means of detecting moving obstacles or threats.

- Object detection was applied to avoid midair collisions involving manned or unmanned aircraft.

- The papers were written in English.

Exclusion criteria:

- Abstracts without full text.

- Systematic reviews, meta-analyses, and survey publications.

2.2. Search Process

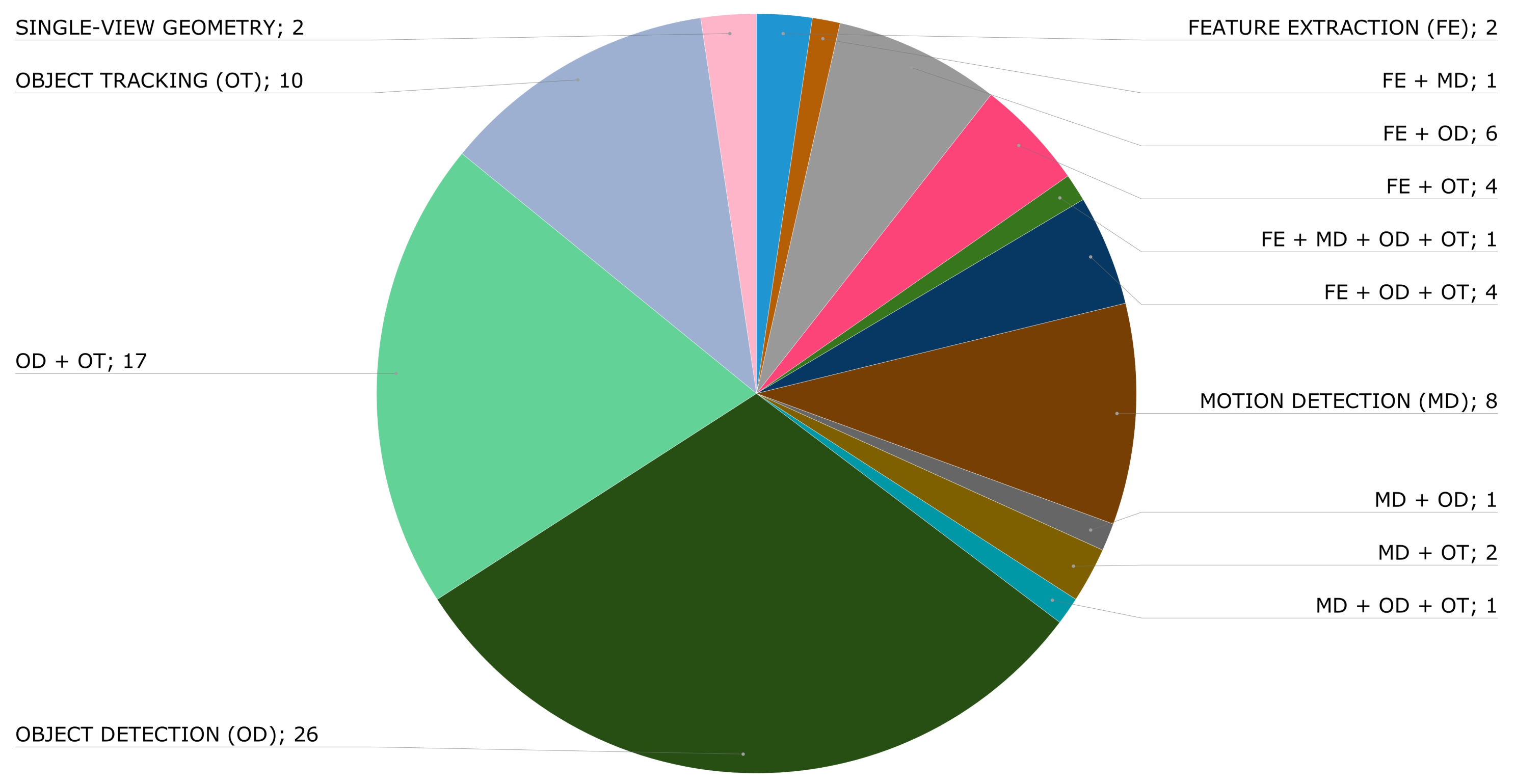

2.3. Research Directives

- Feature extraction is the identification of distinctive information in an image. Lines (edges) and corners often make good features because they exhibit large intensity contrasts. Feature extraction algorithms are the basis of object detection and tracking [33].

- Motion detection is the detection of changes in the physical position of an object. For static cameras, background subtraction algorithms can be used to detect motion. For moving cameras, in contrast, optical flow can be used to estimate the apparent motion of pixels between consecutive images [34].

- Object detection comprises the computer vision tasks involved in identifying and locating objects in images. It requires a data set of labeled features against which an input image is compared; feature extraction algorithms are used to create these data sets [35].

- Object tracking. Given the initial state of a target object in one frame (its position and size), object tracking estimates the state of the target in subsequent frames. Tracking therefore depends on object detection to provide that initial state. It is usually much faster than detection because the appearance of the object is already known [36].

- Single-view geometry is the calculation of the geometry of an object using images from a single camera.
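
The feature extraction directive notes that corners make good features because of their large intensity contrasts. As an illustration only, the following minimal Python/NumPy sketch computes the Harris corner response, the detector listed for [P57] in the table of methods. It assumes a grayscale image stored as a 2-D array and NumPy 1.20 or later (for `sliding_window_view`); the window radius and the constant `k = 0.04` are conventional choices rather than values taken from the reviewed papers, and the helper names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def box_sum(values: np.ndarray, radius: int) -> np.ndarray:
    """Sum each pixel's (2*radius+1) x (2*radius+1) neighborhood (zero padding)."""
    size = 2 * radius + 1
    windows = sliding_window_view(np.pad(values, radius), (size, size))
    return windows.sum(axis=(-2, -1))


def harris_response(image: np.ndarray, k: float = 0.04, radius: int = 1) -> np.ndarray:
    """Harris corner response R = det(M) - k * trace(M)^2 for every pixel.

    Large positive R marks corners, negative R marks edges, R near 0 marks flat areas.
    """
    image = image.astype(float)
    # np.gradient returns the derivative along rows (y) first, then columns (x)
    iy, ix = np.gradient(image)
    # Entries of the structure tensor M, accumulated over a local window
    sxx = box_sum(ix * ix, radius)
    syy = box_sum(iy * iy, radius)
    sxy = box_sum(ix * iy, radius)
    return (sxx * syy - sxy ** 2) - k * (sxx + syy) ** 2


# Usage: a bright square on a dark background has four corners
img = np.zeros((20, 20))
img[5:15, 5:15] = 1.0
response = harris_response(img)
row, col = np.unravel_index(np.argmax(response), response.shape)
print(row, col)
```

In practice, corners are then selected by thresholding and non-maximum suppression of `response`; OpenCV offers the same measure as `cv2.cornerHarris`.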
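
For the static-camera case described under motion detection, background subtraction can be sketched in a few lines. The following Python/NumPy example is a minimal, assumed implementation (an exponential running-average background model with a fixed threshold), not the method of any specific reviewed paper; the class name and the `alpha` and `threshold` values are hypothetical.

```python
import numpy as np


class RunningAverageBackground:
    """Static-camera motion detector based on an exponential running-average background."""

    def __init__(self, alpha: float = 0.05, threshold: float = 25.0):
        self.alpha = alpha          # learning rate of the background model
        self.threshold = threshold  # absolute intensity change treated as motion
        self.background = None

    def apply(self, frame: np.ndarray) -> np.ndarray:
        """Return a boolean mask of moving pixels and update the background model."""
        frame = frame.astype(float)
        if self.background is None:
            # The first frame initializes the background; nothing is flagged yet
            self.background = frame.copy()
            return np.zeros(frame.shape, dtype=bool)
        moving = np.abs(frame - self.background) > self.threshold
        still = ~moving
        # Blend the new frame into the model only where no motion was detected
        self.background[still] = ((1.0 - self.alpha) * self.background[still]
                                  + self.alpha * frame[still])
        return moving


# Usage: a uniform sky, then a small dark object appears
detector = RunningAverageBackground()
sky = np.full((10, 10), 100.0)
detector.apply(sky)
frame = sky.copy()
frame[2:4, 6:8] = 20.0
mask = detector.apply(frame)
print(np.argwhere(mask))
```

Such a model only works while the camera is stationary; on a moving platform the whole scene appears to move, which is why optical flow is preferred there. More robust ready-made models exist, for example OpenCV's `cv2.createBackgroundSubtractorMOG2`.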
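
The object-tracking directive can be made concrete with a linear constant-velocity Kalman filter, the simplest member of the Kalman filter family that appears among the reviewed methods (extended and unscented variants are listed for [P15] and [P33]). The following Python/NumPy sketch assumes that a detector supplies the target's image position in each frame; the state layout, the noise levels `q` and `r`, and the class name are illustrative assumptions rather than values from any reviewed paper.

```python
import numpy as np


class ConstantVelocityKalman:
    """Linear Kalman filter with state [x, y, vx, vy] and position-only measurements."""

    def __init__(self, x0: float, y0: float, dt: float = 1.0,
                 q: float = 1e-2, r: float = 1.0):
        self.x = np.array([x0, y0, 0.0, 0.0])  # position (px) and velocity (px/frame)
        self.P = np.eye(4) * 10.0               # initial state uncertainty
        self.F = np.array([[1.0, 0.0, dt, 0.0],
                           [0.0, 1.0, 0.0, dt],
                           [0.0, 0.0, 1.0, 0.0],
                           [0.0, 0.0, 0.0, 1.0]])  # constant-velocity motion model
        self.H = np.array([[1.0, 0.0, 0.0, 0.0],
                           [0.0, 1.0, 0.0, 0.0]])  # the detector measures position only
        self.Q = np.eye(4) * q                  # simplified isotropic process noise
        self.R = np.eye(2) * r                  # measurement noise of the detector

    def predict(self) -> np.ndarray:
        """Propagate the state one frame ahead and return the predicted position."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:2]

    def update(self, z) -> np.ndarray:
        """Correct the state with a detected position z = (x, y)."""
        innovation = np.asarray(z, dtype=float) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)  # Kalman gain
        self.x = self.x + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.x[:2]


# Usage: the target moves 2 px along x and 1 px along y per frame
kf = ConstantVelocityKalman(0.0, 0.0)
for t in range(1, 31):
    kf.predict()
    kf.update((2.0 * t, 1.0 * t))
next_position = kf.predict()  # where to search for the target in the next frame
print(next_position)
```

The prediction step is what makes tracking cheaper than repeated detection: the detector, or a template matcher, only needs to search a small region around `next_position`.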
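
Single-view geometry can recover range only under extra assumptions. One common textbook assumption, used here purely for illustration, is a pinhole camera and an intruder of known physical size W: an object spanning w pixels under a focal length of f pixels lies at a range of roughly Z = fW/w. Because w = fW/Z, the time to collision also follows from the growth rate of the image size, τ ≈ w/(dw/dt), which does not require W. Both Python functions below are hypothetical helpers; lens distortion and measurement noise are ignored.

```python
def range_from_size(focal_px: float, real_size_m: float, size_px: float) -> float:
    """Pinhole-model range Z = f * W / w (f and w in pixels, W in meters)."""
    if size_px <= 0:
        raise ValueError("image size must be positive")
    return focal_px * real_size_m / size_px


def time_to_collision(size_prev_px: float, size_curr_px: float, dt_s: float) -> float:
    """Time to collision tau = w / (dw/dt), using a backward difference for dw/dt."""
    growth = (size_curr_px - size_prev_px) / dt_s
    if growth <= 0:
        return float("inf")  # image not expanding: no closing motion detected
    return size_curr_px / growth


# Usage: 1000 px focal length, 10 m wingspan, intruder spans 20 px, then 22 px 0.1 s later
print(range_from_size(1000.0, 10.0, 20.0))
print(time_to_collision(20.0, 22.0, 0.1))
```

The known-size assumption rarely holds for non-cooperative intruders, which is one reason expansion-based cues such as time to collision are attractive for monocular systems.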

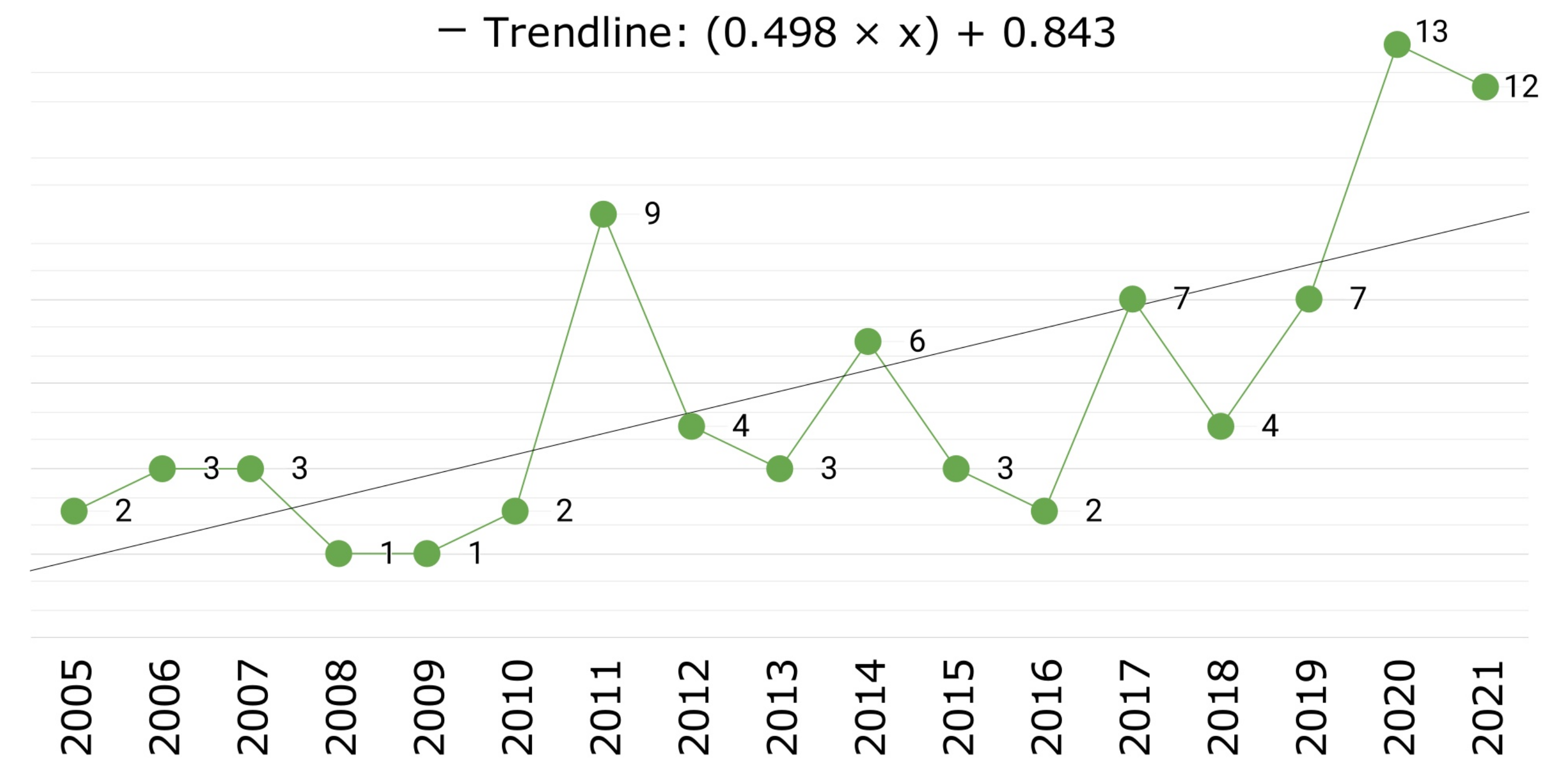

3. Results

4. Discussion

4.1. Computer Vision

4.2. Testing Tools

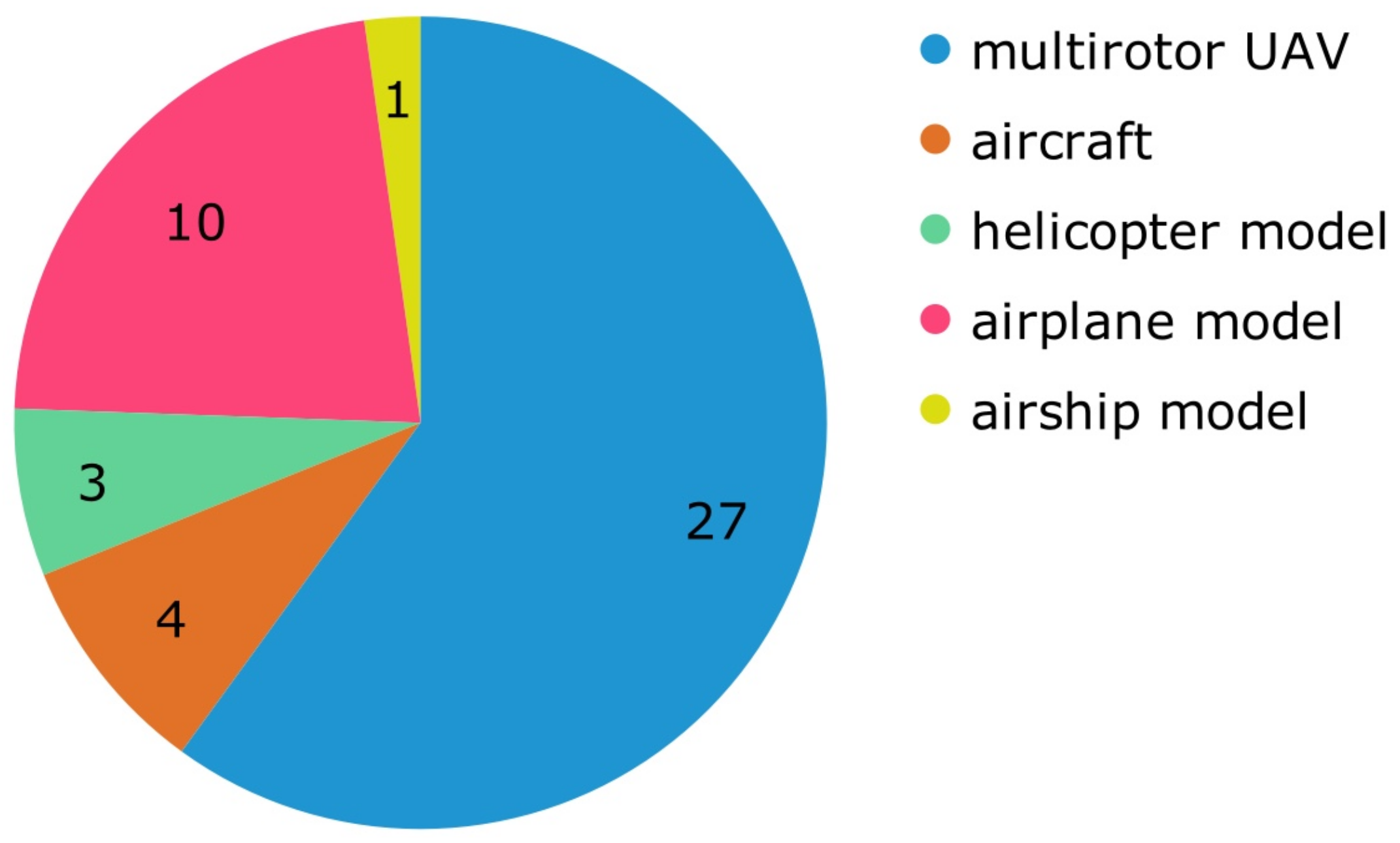

4.3. Obstacles and Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADS-B | automatic dependent surveillance-broadcast |
| FLARM | FLight alARM |
| ROS | Robot Operating System |
| SSR | secondary surveillance radar |
| TCAS | traffic collision avoidance system |
| UAV | unmanned aerial vehicle |

Appendix A

- [P1]

- S. Fürst, E-D. Dickmanns, “A vision based navigation system for autonomous aircraft,” Robotics and Autonomous Systems, vol. 28, no. 2–3, pp. 173–184, 1999.

- [P2]

- A. Roderick, J. Kehoe, R. Lind, “Vision-Based Navigation Using Multi-Rate Feedback from Optic Flow and Scene Reconstruction,” AIAA Guidance, Navigation, and Control Conference and Exhibit, 2005.

- [P3]

- R-K. Mehra, J. Byrne, J. Boskovic, “Flight testing of a fault-tolerant control and vision-based obstacle avoidance system for UAVs,” In Proceedings of the 2005 Association for Unmanned Vehicle Systems International (AUVSI) Conference, North America, 2005.

- [P4]

- Y. Watanabe, A. Calise, E. Johnson, J. Evers, “Minimum-Effort Guidance for Vision-Based Collision Avoidance,” AIAA Atmospheric Flight Mechanics Conference and Exhibit, 2006.

- [P5]

- R. Prazenica, A. Kurdila, R. Sharpley, J. Evers, “Vision-based geometry estimation and receding horizon path planning for UAVs operating in urban environments,” 2006 American Control Conference, 2006.

- [P6]

- J-C. Zufferey, D. Floreano, “Fly-inspired visual steering of an ultralight indoor aircraft,” IEEE Transactions on Robotics, vol. 22, no. 1, pp. 137–146, 2006.

- [P7]

- F. Kendoul, I. Fantoni, G. Dherbomez, “Three Nested Kalman Filters-Based Algorithm for Real-Time Estimation of Optical Flow, UAV Motion and Obstacles Detection,” Proceedings 2007 IEEE International Conference on Robotics and Automation, 2007.

- [P8]

- Y. Watanabe, A. Calise, E. Johnson, “Vision-Based Obstacle Avoidance for UAVs,” AIAA Guidance, Navigation and Control Conference and Exhibit, 2007.

- [P9]

- S. Bermudez i Badia, P. Pyk, P-F-M-J. Verschure, “A fly-locust based neuronal control system applied to an unmanned aerial vehicle: the invertebrate neuronal principles for course stabilization, altitude control and collision avoidance,” The International Journal of Robotics Research, vol. 26, no. 7, pp. 759–772, 2007.

- [P10]

- E. Hanna, P. Straznicky, R. Goubran, “Obstacle Detection for Low Flying Unmanned Aerial Vehicles Using Stereoscopic Imaging,” 2008 IEEE Instrumentation and Measurement Technology Conference, 2008.

- [P11]

- S. Shah, E. Johnson, “3-D Obstacle Detection Using a Single Camera,” AIAA Guidance, Navigation, and Control Conference, 2009.

- [P12]

- J-C. Zufferey, A. Beyeler, D. Floreano, “Autonomous flight at low altitude with vision-based collision avoidance and GPS-based path following,” 2010 IEEE International Conference on Robotics and Automation, 2010.

- [P13]

- L. Mejias, S. McNamara, J. Lai, J. Ford, “Vision-based detection and tracking of aerial targets for UAV collision avoidance,” 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, 2010.

- [P14]

- A-R. Arvai, J-J. Kehoe, R. Lind, “Vision-based navigation using multi-rate feedback from optic flow and scene reconstruction,” The Aeronautical Journal, vol. 115, no. 1169, pp. 411–420, 2011.

- [P15]

- B. Vanek, T. Peni, J. Bokor, T. Zsedrovits, A. Zarandy, T. Roska, “Performance analysis of a vision only Sense and Avoid system for small UAVs,” AIAA Guidance, Navigation, and Control Conference, 2011.

- [P16]

- A. Wainwright, J. Ford, J. Lai, “Fat and thin adaptive HMM filters for vision based detection of moving targets,” In Proceedings of the 2011 Australasian Conference on Robotics and Automation (ACRA 2011), pp. 1–10, 2011.

- [P17]

- H. Choi, Y. Kim, I. Hwang, “Vision-Based Reactive Collision Avoidance Algorithm for Unmanned Aerial Vehicle,” AIAA Guidance, Navigation, and Control Conference, 2011.

- [P18]

- D-W. Yoo, D-Y. Won, M-J. Tahk, “Optical Flow Based Collision Avoidance of Multi-Rotor UAVs in Urban Environments,” International Journal of Aeronautical and Space Sciences, vol. 12, no. 3, pp. 252–259, 2011.

- [P19]

- D. Lee, H. Lim, H-J. Kim, “Obstacle avoidance using image-based visual servoing integrated with nonlinear model predictive control,” IEEE Conference on Decision and Control and European Control Conference, 2011.

- [P20]

- Y. Chen, A. Abushakra, J. Lee, “Vision-Based Horizon Detection and Target Tracking for UAVs,” Advances in Visual Computing, pp. 310–319, 2011.

- [P21]

- T. Zsedrovits, A. Zarandy, B. Vanek, T. Peni, J. Bokor, T. Roska, “Collision avoidance for UAV using visual detection,” 2011 IEEE International Symposium of Circuits and Systems (ISCAS), 2011.

- [P22]

- J. Lai, L. Mejias, J-J. Ford, “Airborne vision-based collision-detection system,” Journal of Field Robotics, vol. 28, no. 2, pp. 137–157, 2011.

- [P23]

- M-A. Olivares-Mendez, P. Campoy, I. Mellado-Bataller, L. Mejias, “See-and-avoid quadcopter using fuzzy control optimized by cross-entropy,” 2012 IEEE International Conference on Fuzzy Systems, 2012.

- [P24]

- A. Eresen, N. İmamoğlu, M. Önder Efe, “Autonomous quadrotor flight with vision-based obstacle avoidance in virtual environment,” Expert Systems with Applications, vol. 39, no. 1, pp. 894–905, 2012.

- [P25]

- B. Vanek, T. Péni, A. Zarándy, J. Bokor, T. Zsedrovits, T. Roska, “Performance Characteristics of a Complete Vision Only Sense and Avoid System,” AIAA Guidance, Navigation, and Control Conference, 2012.

- [P26]

- A. McFadyen, P. Corke, L. Mejias, “Rotorcraft collision avoidance using spherical image-based visual servoing and single point features,” 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, 2012.

- [P27]

- J. Park, Y. Kim, “Obstacle Detection and Collision Avoidance of Quadrotor UAV Using Depth Map of Stereo Vision,” AIAA Guidance, Navigation, and Control (GNC) Conference, 2013.

- [P28]

- C-M. Huang, M-L. Chiang, L-C. Fu, “Adaptive Visual Servoing of Micro Aerial Vehicle with Switched System Model for Obstacle Avoidance,” 2013 IEEE International Conference on Systems, Man, and Cybernetics, 2013.

- [P29]

- A. McFadyen, L. Mejias, P. Corke, C. Pradalier, “Aircraft collision avoidance using spherical visual predictive control and single point features,” 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, 2013.

- [P30]

- L-K. Kong, J. Sheng, A. Teredesai, “Basic Micro-Aerial Vehicles (MAVs) obstacles avoidance using monocular computer vision,” 2014 13th International Conference on Control Automation Robotics & Vision (ICARCV), 2014.

- [P31]

- B. Vanek, T. Peni, P. Bauer, J. Bokor, “Vision only sense and avoid: A probabilistic approach,” 2014 American Control Conference, 2014.

- [P32]

- A. Carrio, C. Fu, J. Pestana, P. Campoy, “A ground-truth video dataset for the development and evaluation of vision-based Sense-and-Avoid systems,” 2014 International Conference on Unmanned Aircraft Systems (ICUAS), 2014.

- [P33]

- A-K. Tripathi, R-G. Raja, R. Padhi, “Reactive Collision Avoidance of UAVs with Stereovision Camera Sensors using UKF,” IFAC Proceedings Volumes, vol. 47, no. 1, pp. 1119–1125, 2014.

- [P34]

- R. Brockers, Y. Kuwata, S. Weiss, L. Matthies, “Micro air vehicle autonomous obstacle avoidance from stereo-vision,” SPIE Proceedings, 2014.

- [P35]

- L. Matthies, R. Brockers, Y. Kuwata, S. Weiss, “Stereo vision-based obstacle avoidance for micro air vehicles using disparity space,” 2014 IEEE International Conference on Robotics and Automation (ICRA), 2014.

- [P36]

- P. Agrawal, A. Ratnoo, D. Ghose, “Vision Based Obstacle Detection and Avoidance for UAVs Using Image Segmentation,” AIAA Guidance, Navigation, and Control Conference, 2015.

- [P37]

- M. Clark, Z. Kern, R-J. Prazenica, “A Vision-Based Proportional Navigation Guidance Law for UAS Sense and Avoid,” AIAA Guidance, Navigation, and Control Conference, 2015.

- [P38]

- S. Huh, S. Cho, Y. Jung, D-H. Shim, “Vision-based sense-and-avoid framework for unmanned aerial vehicles,” IEEE Transactions on Aerospace and Electronic Systems, vol. 51, no. 4, pp. 3427–3439, 2015.

- [P39]

- Y. Lyu, Q. Pan, C. Zhao, Y. Zhang, J. Hu, “Vision-based UAV collision avoidance with 2D dynamic safety envelope,” IEEE Aerospace and Electronic Systems Magazine, vol. 31, no. 7, pp. 16–26, 2016.

- [P40]

- G. Fasano, D. Accardo, A-E. Tirri, A. Moccia, E-D. Lellis, “Sky Region Obstacle Detection and Tracking for Vision-Based UAS Sense and Avoid,” Journal of Intelligent & Robotic Systems, vol. 84, no. 1–4, pp. 121–144, 2015.

- [P41]

- A. Morgan, Z. Jones, R. Chapman, S. Biaz, “An unmanned aircraft ‘see and avoid’ algorithm development platform using OpenGL and OpenCV,” Journal of Computing Sciences in Colleges, vol. 33, no. 2, pp. 229–236, 2017.

- [P42]

- P. Bauer, A. Hiba, J. Bokor, “Monocular image-based intruder direction estimation at closest point of approach,” 2017 International Conference on Unmanned Aircraft Systems (ICUAS), 2017.

- [P43]

- H. Sedaghat-Pisheh, A-R. Rivera, S. Biaz, R. Chapman, “Collision avoidance algorithms for unmanned aerial vehicles using computer vision,” Journal of Computing Sciences in Colleges, vol. 33, no. 2, pp. 191–197, 2017.

- [P44]

- Y. Lyu, Q. Pan, C. Zhao, J. Hu, “Autonomous Stereo Vision Based Collision Avoid System for Small UAV,” AIAA Information Systems-AIAA Infotech @ Aerospace, 2017.

- [P45]

- D. Bratanov, L. Mejias, J-J. Ford, “A vision-based sense-and-avoid system tested on a ScanEagle UAV,” 2017 International Conference on Unmanned Aircraft Systems (ICUAS), 2017.

- [P46]

- A. Ramani, H-E. Sevil, A. Dogan, “Determining intruder aircraft position using series of stereoscopic 2-D images,” 2017 International Conference on Unmanned Aircraft Systems (ICUAS), 2017.

- [P47]

- T-L. Molloy, J-J. Ford, L. Mejias, “Detection of aircraft below the horizon for vision-based detect and avoid in unmanned aircraft systems,” Journal of Field Robotics, vol. 34, no. 7, pp. 1378–1391, 2017.

- [P48]

- B. Ruf, S. Monka, M. Kollmann, M. Grinberg, “Real-Time On-Board Obstacle Avoidance for UAVs Based on Embedded Stereo Vision,” The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, vol. XLII-1, pp. 363–370, 2018.

- [P49]

- A-J. Barry, P-R. Florence, R. Tedrake, “High-speed autonomous obstacle avoidance with pushbroom stereo,” Journal of Field Robotics, vol. 35, no. 1, pp. 52–68, 2017.

- [P50]

- D. Mercado, P. Castillo, R. Lozano, “Sliding mode collision-free navigation for quadrotors using monocular vision,” Robotica, vol. 36, no. 10, pp. 1493–1509, 2018.

- [P51]

- J. James, J-J. Ford, T-L. Molloy, “Learning to Detect Aircraft for Long-Range Vision-Based Sense-and-Avoid Systems,” IEEE Robotics and Automation Letters, vol. 3, no. 4, pp. 4383–4390, 2018.

- [P52]

- V. Varatharasan, A-S-S. Rao, E. Toutounji, J-H. Hong, H-S. Shin, “Target Detection, Tracking and Avoidance System for Low-cost UAVs using AI-Based Approaches,” 2019 Workshop on Research, Education and Development of Unmanned Aerial Systems (RED UAS), 2019.

- [P53]

- Y. Zhang, W. Wang, P. Huang, Z. Jiang, “Monocular Vision-Based Sense and Avoid of UAV Using Nonlinear Model Predictive Control,” Robotica, vol. 37, no. 9, pp. 1582–1594, 2019.

- [P54]

- C. Li, X. Xie, F. Luo, “Obstacle Detection and Path Planning Based on Monocular Vision for Unmanned Aerial Vehicles,” 2019 Chinese Automation Congress (CAC), 2019.

- [P55]

- J. James, J-J. Ford, T-L. Molloy, “Below Horizon Aircraft Detection Using Deep Learning for Vision-Based Sense and Avoid,” 2019 International Conference on Unmanned Aircraft Systems (ICUAS), 2019.

- [P56]

- D-S. Levkovits-Scherer, I. Cruz-Vega, J. Martinez-Carranza, “Real-Time Monocular Vision-Based UAV Obstacle Detection and Collision Avoidance in GPS-Denied Outdoor Environments Using CNN MobileNet-SSD,” Advances in Soft Computing, pp. 613–621, 2019.

- [P57]

- D. Zuehlke, N. Prabhakar, M. Clark, T. Henderson, R-J. Prazenica, “Vision-Based Object Detection and Proportional Navigation for UAS Collision Avoidance,” AIAA Scitech 2019 Forum, 2019.

- [P58]

- R. Opromolla, G. Fasano, D. Accardo, “Experimental assessment of vision-based sensing for small UAS sense and avoid,” 2019 IEEE/AIAA 38th Digital Avionics Systems Conference (DASC), 2019.

- [P59]

- P. Rzucidło, T. Rogalski, G. Jaromi, D. Kordos, P. Szczerba, A. Paw, “Simulation studies of a vision intruder detection system,” Aircraft Engineering and Aerospace Technology, vol. 92, no. 4, pp. 621–631, 2020.

- [P60]

- F. Gomes, T. Hormigo, R. Ventura, “Vision based real-time obstacle avoidance for drones using a time-to-collision estimation approach,” 2020 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), 2020.

- [P61]

- N. Urieva, J. McDonald, T. Uryeva, A-S. Rose Ramos, S. Bhandari, “Collision Detection and Avoidance using Optical Flow for Multicopter UAVs,” 2020 International Conference on Unmanned Aircraft Systems (ICUAS), 2020.

- [P62]

- J. Park, N. Cho, S. Lee, “Reactive Collision Avoidance Algorithm for UAV Using Bounding Tube against Multiple Moving Obstacles,” IEEE Access, vol. 8, pp. 218131–218144, 2020.

- [P63]

- W-K. Ang, W-S. Teo, O. Yakimenko, “Enabling an EO-Sensor-Based Capability to Detect and Track Multiple Moving Threats Onboard sUAS Operating in Cluttered Environments,” Proceedings of the 2019 2nd International Conference on Control and Robot Technology, 2019.

- [P64]

- S. Hussaini, J. Martin, J. Ford, “Vision based aircraft detection using deep learning with synthetic data,” In Proceedings of the Australasian Conference on Robotics and Automation (ACRA), 2020.

- [P65]

- J. James, J-J. Ford, T-L. Molloy, “A Novel Technique for Rejecting Non-Aircraft Artefacts in Above Horizon Vision-Based Aircraft Detection,” 2020 International Conference on Unmanned Aircraft Systems (ICUAS), 2020.

- [P66]

- R. Raheem Nhair, T-A. Al-Assadi, “Vision-Based Obstacle Avoidance for Small Drone Using Monocular Camera,” IOP Conference Series: Materials Science and Engineering, vol. 928, no. 3, pp. 032048, 2020.

- [P67]

- M. Petho, A. Nagy, T. Zsedrovits, “A bio-motivated vision system and artificial neural network for autonomous UAV obstacle avoidance,” 2020 3rd International Seminar on Research of Information Technology and Intelligent Systems (ISRITI), 2020.

- [P68]

- T-K. Hao, O. Yakimenko, “Assessment of an Effective Range of Detecting Intruder Aerial Drone Using Onboard EO-Sensor,” 2020 6th International Conference on Control, Automation and Robotics (ICCAR), 2020.

- [P69]

- Y-C. Lai, Z-Y. Huang, “Detection of a Moving UAV Based on Deep Learning-Based Distance Estimation,” Remote Sensing, vol. 12, no. 18, pp. 3035, 2020.

- [P70]

- C. Kang, H. Chaudhry, C-A. Woolsey, K. Kochersberger, “Development of a Peripheral–Central Vision System for Small Unmanned Aircraft Tracking,” Journal of Aerospace Information Systems, vol. 18, no. 9, pp. 645–658, 2021.

- [P71]

- E. Cetin, C. Barrado, E. Pastor, “Counter a Drone and the Performance Analysis of Deep Reinforcement Learning Method and Human Pilot,” 2021 IEEE/AIAA 40th Digital Avionics Systems Conference (DASC), 2021.

- [P72]

- S. Karlsson, C. Kanellakis, S-S. Mansouri, G. Nikolakopoulos, “Monocular Vision-Based Obstacle Avoidance Scheme for Micro Aerial Vehicle Navigation,” 2021 International Conference on Unmanned Aircraft Systems (ICUAS), 2021.

- [P73]

- G. Chen, W. Dong, X. Sheng, X. Zhu, H. Ding, “An Active Sense and Avoid System for Flying Robots in Dynamic Environments,” IEEE/ASME Transactions on Mechatronics, vol. 26, no. 2, pp. 668–678, 2021.

- [P74]

- K-S. Karreddula, A-K. Deb, “Center of View Based Guidance Angles for Collision-Free Autonomous Flight of UAV,” 2021 International Symposium of Asian Control Association on Intelligent Robotics and Industrial Automation (IRIA), 2021.

- [P75]

- W-L. Leong, P. Wang, S. Huang, Z. Ma, H. Yang, J. Sun, Y. Zhou, M-R. Abdul Hamid, S. Srigrarom, R. Teo, “Vision-Based Sense and Avoid with Monocular Vision and Real-Time Object Detection for UAVs,” 2021 International Conference on Unmanned Aircraft Systems (ICUAS), 2021.

- [P76]

- M. Petho, T. Zsedrovits, “UAV obstacle detection with bio-motivated computer vision,” 2021 17th International Workshop on Cellular Nanoscale Networks and their Applications (CNNA), 2021.

- [P77]

- H-Y. Lin, X-Z. Peng, “Autonomous Quadrotor Navigation with Vision Based Obstacle Avoidance and Path Planning,” IEEE Access, vol. 9, pp. 102450–102459, 2021.

- [P78]

- R. Opromolla, G. Fasano, “Visual-based obstacle detection and tracking, and conflict detection for small UAS sense and avoid,” Aerospace Science and Technology, vol. 119, pp. 107167, 2021.

- [P79]

- R-P. Padhy, P-K. Sa, F. Narducci, C. Bisogni, S. Bakshi, “Monocular Vision Aided Depth Measurement from RGB Images for Autonomous UAV Navigation,” ACM Transactions on Multimedia Computing, Communications, and Applications, 2022.

- [P80]

- T. Zhang, X. Hu, J. Xiao, G. Zhang, “A Machine Learning Method for Vision-Based Unmanned Aerial Vehicle Systems to Understand Unknown Environments,” Sensors, vol. 20, no. 11, pp. 3245, 2020.

- [P81]

- D. Pedro, J-P. Matos-Carvalho, F. Azevedo, R. Sacoto-Martins, L. Bernardo, L. Campos, J-M. Fonseca, A. Mora, “FFAU-Framework for Fully Autonomous UAVs,” Remote Sensing, vol. 12, no. 21, pp. 3533, 2020.

- [P82]

- L-O. Rojas-Perez, J. Martinez-Carranza, “Towards Autonomous Drone Racing without GPU Using an OAK-D Smart Camera,” Sensors, vol. 21, no. 22, pp. 7436, 2021.

- [P83]

- T. Shimada, H. Nishikawa, X. Kong, H. Tomiyama, “Pix2Pix-Based Monocular Depth Estimation for Drones with Optical Flow on AirSim,” Sensors, vol. 22, no. 6, pp. 2097, 2022.

- [P84]

- H. Alqaysi, I. Fedorov, F-Z. Qureshi, M. O’Nils, “A Temporal Boosted YOLO-Based Model for Birds Detection around Wind Farms,” Journal of Imaging, vol. 7, no. 11, pp. 227, 2021.

- [P85]

- P. Rzucidło, G. Jaromi, T. Kapuściński, D. Kordos, T. Rogalski, P. Szczerba, “In-Flight Tests of Intruder Detection Vision System,” Sensors, vol. 21, no. 21, pp. 7360, 2021.

References

- Federal Aviation Administration. How to Avoid a Mid Air Collision—P-8740-51. 2021. Available online: https://www.faasafety.gov/gslac/ALC/libview_normal.aspx?id=6851 (accessed on 11 September 2023).

- Federal Aviation Administration. Airplane Flying Handbook, FAA-H-8083-3B; Federal Aviation Administration, United States Department of Transportation: Oklahoma, OK, USA, 2016.

- UK Airprox Board. When every second counts. Airprox Saf. Mag. 2017, 2017, 2–3.

- Akbari, Y.; Almaadeed, N.; Al-maadeed, S.; Elharrouss, O. Applications, databases and open computer vision research from drone videos and images: A survey. Artif. Intell. Rev. 2021, 54, 3887–3938.

- Yang, X.; Wei, P. Autonomous Free Flight Operations in Urban Air Mobility with Computational Guidance and Collision Avoidance. IEEE Trans. Intell. Transp. Syst. 2021, 22, 5962–5975.

- Jiang, Y.; Wu, Q.; Zhang, G.; Zhu, S.; Xing, W. A diversified group teaching optimization algorithm with segment-based fitness strategy for unmanned aerial vehicle route planning. Expert Syst. Appl. 2021, 185, 115690.

- Shin, S.Y.; Kang, Y.W.; Kim, Y.G. Reward-driven U-Net training for obstacle avoidance drone. Expert Syst. Appl. 2020, 143, 113064.

- Ghasri, M.; Maghrebi, M. Factors affecting unmanned aerial vehicles’ safety: A post-occurrence exploratory data analysis of drones’ accidents and incidents in Australia. Saf. Sci. 2021, 139, 105273.

- Bertram, J.; Wei, P.; Zambreno, J. A Fast Markov Decision Process-Based Algorithm for Collision Avoidance in Urban Air Mobility. IEEE Trans. Intell. Transp. Syst. 2022, 23, 15420–15433.

- Srivastava, A.; Prakash, J. Internet of Low-Altitude UAVs (IoLoUA): A methodical modeling on integration of Internet of “Things” with “UAV” possibilities and tests. Artif. Intell. Rev. 2023, 56, 2279–2324.

- Jenie, Y.I.; van Kampen, E.J.; Ellerbroek, J.; Hoekstra, J.M. Safety Assessment of a UAV CD&R System in High Density Airspace Using Monte Carlo Simulations. IEEE Trans. Intell. Transp. Syst. 2018, 19, 2686–2695.

- Uzochukwu, S. I can see clearly now. Microlight Fly. Mag. 2019, 2019, 22–24.

- Šimák, V.; Škultéty, F. Real time light-sport aircraft tracking using SRD860 band. Transp. Res. Procedia 2020, 51, 271–282.

- Vabre, P. Air Traffic Services Surveillance Systems, Including an Explanation of Primary and Secondary Radar. Victoria, Australia: The Airways Museum & Civil Aviation Historical Society. 2009. Available online: http://www.airwaysmuseum.com/Surveillance.htm (accessed on 12 July 2009).

- Vitiello, F.; Causa, F.; Opromolla, R.; Fasano, G. Detection and tracking of non-cooperative flying obstacles using low SWaP radar and optical sensors: An experimental analysis. In Proceedings of the 2022 International Conference on Unmanned Aircraft Systems (ICUAS), Dubrovnik, Croatia, 21–24 June 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 157–166.

- Huang, T. Computer vision: Evolution and promise. In Proceedings of the 1996 CERN School of Computing, Egmond aan Zee, The Netherlands, 8–21 September 1996; pp. 21–25.

- Belmonte, L.M.; Morales, R.; Fernández-Caballero, A. Computer vision in autonomous unmanned aerial vehicles—A systematic mapping study. Appl. Sci. 2019, 9, 3196.

- Górriz, J.M.; Ramírez, J.; Ortíz, A.; Martínez-Murcia, F.J.; Segovia, F.; Suckling, J.; Leming, M.; Zhang, Y.D.; Álvarez Sánchez, J.R.; Bologna, G.; et al. Artificial intelligence within the interplay between natural and artificial computation: Advances in data science, trends and applications. Neurocomputing 2020, 410, 237–270.

- Ángel Madridano, A.; Al-Kaff, A.; Martín, D.; de la Escalera, A. Trajectory planning for multi-robot systems: Methods and applications. Expert Syst. Appl. 2021, 173, 114660.

- Horn, B.K.; Schunck, B.G. Determining optical flow. Artif. Intell. 1981, 17, 185–203.

- Delgado, A.E.; López, M.T.; Fernández-Caballero, A. Real-time motion detection by lateral inhibition in accumulative computation. Eng. Appl. Artif. Intell. 2010, 23, 129–139.

- López-Valles, J.M.; Fernández, M.A.; Fernández-Caballero, A. Stereovision depth analysis by two-dimensional motion charge memories. Pattern Recognit. Lett. 2007, 28, 20–30.

- Liu, S. Object Trajectory Estimation Using Optical Flow. Master’s Thesis, Utah State University, Logan, UT, USA, 2009.

- Almansa-Valverde, S.; Castillo, J.C.; Fernández-Caballero, A. Mobile robot map building from time-of-flight camera. Expert Syst. Appl. 2012, 39, 8835–8843.

- Chen, S.Y. Kalman Filter for Robot Vision: A Survey. IEEE Trans. Ind. Electron. 2012, 59, 4409–4420.

- Tang, J.; Duan, H.; Lao, S. Swarm intelligence algorithms for multiple unmanned aerial vehicles collaboration: A comprehensive review. Artif. Intell. Rev. 2023, 56, 4295–4327.

- Al-Kaff, A.; Martín, D.; García, F.; de la Escalera, A.; María Armingol, J. Survey of computer vision algorithms and applications for unmanned aerial vehicles. Expert Syst. Appl. 2018, 92, 447–463.

- Cebollada, S.; Payá, L.; Flores, M.; Peidró, A.; Reinoso, O. A state-of-the-art review on mobile robotics tasks using artificial intelligence and visual data. Expert Syst. Appl. 2021, 167, 114195.

- Llamazares, A.; Molinos, E.J.; Ocaña, M. Detection and Tracking of Moving Obstacles (DATMO): A Review. Robotica 2020, 38, 761–774.

- Arksey, H.; O’Malley, L. Scoping studies: Towards a methodological framework. Int. J. Soc. Res. Methodol. 2005, 8, 19–32.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Stanoev, A.; Audinet, N.; Tancock, S.; Dahnoun, N. Real-time stereo vision for collision detection on autonomous UAVs. In Proceedings of the 2017 IEEE International Conference on Imaging Systems and Techniques (IST), Beijing, China, 18–20 October 2017; pp. 1–6.

- Jiang, X. Feature extraction for image recognition and computer vision. In Proceedings of the 2009 2nd IEEE International Conference on Computer Science and Information Technology, Beijing, China, 8–11 August 2009; pp. 1–15.

- Manchanda, S.; Sharma, S. Analysis of computer vision based techniques for motion detection. In Proceedings of the 2016 6th International Conference-Cloud System and Big Data Engineering (Confluence), Noida, India, 14–15 January 2016; pp. 445–450.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Wu, Y.; Lim, J.; Yang, M.H. Online Object Tracking: A Benchmark. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2411–2418.

- Kellermann, R.; Biehle, T.; Fischer, L. Drones for parcel and passenger transportation: A literature review. Transp. Res. Interdiscip. Perspect. 2020, 4, 100088.

- Kindervater, K.H. The emergence of lethal surveillance: Watching and killing in the history of drone technology. Secur. Dialogue 2016, 47, 223–238.

- Hussein, M.; Nouacer, R.; Corradi, F.; Ouhammou, Y.; Villar, E.; Tieri, C.; Castiñeira, R. Key technologies for safe and autonomous drones. Microprocess. Microsyst. 2021, 87, 104348.

- Szeliski, R. Computer Vision: Algorithms and Applications; Springer: London, UK, 2011.

- Feng, X.; Jiang, Y.; Yang, X.; Du, M.; Li, X. Computer vision algorithms and hardware implementations: A survey. Integration 2019, 69, 309–320.

- Chamola, V.; Kotesh, P.; Agarwal, A.; Gupta, N.; Guizani, M. A Comprehensive Review of Unmanned Aerial Vehicle Attacks and Neutralization Techniques. Ad Hoc Netw. 2021, 111, 102324.

- Ortmeyer, C. Then and now: A brief history of single board computers. Electron. Des. Uncovered 2014, 6, 1–11.

- Fernández-Caballero, A.; López, M.T.; Saiz-Valverde, S. Dynamic stereoscopic selective visual attention (DSSVA): Integrating motion and shape with depth in video segmentation. Expert Syst. Appl. 2008, 34, 1394–1402.

- Joshi, P.; Escrivá, D.; Godoy, V. OpenCV by Example: Enhance Your Understanding of Computer Vision and Image Processing by Developing Real-World Projects in OpenCV 3; Packt Publishing: Birmingham, UK, 2016.

- Moler, C.; Little, J. A History of MATLAB. Proc. ACM Program. Lang. 2020, 4, 81.

- Yu, L.; He, G.; Zhao, S.; Wang, X.; Shen, L. Design and implementation of a hardware-in-the-loop simulation system for a tilt trirotor UAV. J. Adv. Transp. 2020, 2020, 4305742.

- Kumar, A.; Yoon, S.; Kumar, V.R.S. Mixed reality simulation of high-endurance unmanned aerial vehicle with dual-head electromagnetic propulsion devices for earth and other planetary explorations. Appl. Sci. 2020, 10, 3736.

- Dronethusiast. The History of Drones (Drone History Timeline from 1849 to 2019). 2019. Available online: https://www.dronethusiast.com/history-of-drones/ (accessed on 11 September 2023).

- Dormehl, L. The History of Drones in 10 Milestones. 2018. Available online: https://www.digitaltrends.com/cool-tech/history-of-drones/ (accessed on 11 September 2023).

- Pollicino, J. Parrot Unveils AR.Drone 2.0 with 720p HD Camera, Autonomous Video-Recording, We Go Hands-On. 2012. Available online: https://www.engadget.com/2012-01-08-parrot-unveils-ar-drone-2-0-with-720p-hd-camera-autonomous-vide.html (accessed on 11 September 2023).

- DJI. Phantom. 2021. Available online: https://www.dji.com/es/phantom (accessed on 11 September 2023).

- DrDrone.ca. Timeline of DJI Drones: From the Phantom 1 to the Mavic Air. 2018. Available online: https://www.drdrone.ca/blogs/drone-news-drone-help-blog/timeline-of-dji-drones (accessed on 11 September 2023).

- Grand View Research. Augmented Reality Market Size, Share & Trends Analysis Report By Component, By Display (HMD & Smart Glass, HUD, Handheld Devices), By Application, By Region, And Segment Forecasts, 2021–2028. 2021. Available online: https://www.grandviewresearch.com/industry-analysis/augmented-reality-market (accessed on 11 September 2023).

- Grand View Research. Virtual Reality Market Size, Share & Trends Analysis Report by Technology (Semi & Fully Immersive, Non-immersive), By Device (HMD, GTD), by Component (Hardware, Software), by Application, and Segment Forecasts, 2021–2028. 2021. Available online: https://www.grandviewresearch.com/industry-analysis/virtual-reality-vr-market (accessed on 11 September 2023).

- Bustamante, A.; Belmonte, L.M.; Morales, R.; Pereira, A.; Fernández-Caballero, A. Video Processing from a Virtual Unmanned Aerial Vehicle: Comparing Two Approaches to Using OpenCV in Unity. Appl. Sci. 2022, 12, 5958.

| Method | Algorithms | Papers |
|---|---|---|
| Feature extraction | Speeded-up robust features (SURF) | [P5], [P79] |
|  | Sobel, Prewitt, Roberts edge detection | [P10] |
|  | Thresholding, blurring, Canny edge detection | [P30] |
|  | Good features to track | [P32] |
|  | Canny edge detection, Shi-Tomasi feature detector | [P38] |
|  | Grayscale conversion, Canny edge detection | [P41] |
|  | SIFT, SURF, homography | [P47] |
|  | Harris corner detection | [P57] |
|  | ORB | [P60] |
|  | Shi-Tomasi corner detection | [P63] |
|  | Canny edge detection | [P66], [P70] |
|  | Difference of Gaussians | [P67], [P76] |
|  | Morphological processing, Sobel edge detection | [P68] |
|  | ResNet-50 CNN | [P78] |
|  | Convolutional neural network (CNN) | [P81] |
| Motion detection | Optical flow and scene reconstruction | [P2], [P14] |
|  | Optical flow and inertial data | [P7] |
|  | Optical flow | [P12], [P18], [P24], [P36], [P59], [P61], [P70], [P85] |
|  | Background subtraction | [P13] |
|  | Grayscale and binary foveal processors | [P42] |
|  | Feature reprojection and matching | [P60] |
| Object detection | Disparity map | [P3], [P48] |
|  | LGMD-based neural network | [P9] |
|  | Extended and unscented Kalman filters | [P15] |
|  | Hidden Markov model (HMM) | [P16] |
|  | Shi-Tomasi corner detector | [P20] |
|  | Edge detection, color segmentation | [P21] |
|  | Close-minus-open (CMO) combined with HMM, CMO combined with Viterbi-based filtering | [P22] |
|  | Camshift algorithm | [P23] |
|  | Single-point feature | [P6], [P29] |
|  | Depth map | [P27] |
|  | Hough transform and contour detection | [P30] |
|  | Unscented Kalman filter | [P33] |
|  | Disparity space | [P34], [P35] |
|  | Viola–Jones algorithm, morphological detection algorithm | [P37] |
|  | Erosion and dilation morphological operators | [P38] |
|  | CMO combined with HMM | [P39] |
|  | CMO, bottom-hat filtering, top-hat filtering, standard deviation | [P40] |
|  | Contour detection | [P41], [P54] |
|  | Grayscale and binary foveal processors | [P42] |
|  | Haar cascade | [P43] |
|  | Triangulation, depth map | [P44] |
|  | CMO, bottom-hat filtering, adaptive contour-based morphology, Viterbi-based filtering, HMM | [P45] |
|  | Epipolar geometry | [P46] |
|  | Background subtraction | [P47] |
|  | Stereo block matching | [P49] |
|  | CNN with SegNet architecture | [P51], [P55] |
|  | Single-shot detector (SSD) | [P52] |
|  | MobileNet-SSD CNN | [P56] |
|  | YOLOv2 | [P58], [P78] |
|  | Semiglobal matching (SGM), DBSCAN | [P62] |
|  | Lucas–Kanade optical flow | [P63] |
|  | ConvLSTM network | [P64] |
|  | Bottom-hat filtering, HMM | [P65] |
|  | U-Net CNN | [P67] |
|  | MSER blob detector | [P68] |
|  | YOLOv3 | [P69], [P70], [P71], [P72], [P73], [P75], [P80], [P82] |
|  | Horn–Schunck optical flow | [P74] |
|  | Custom artificial neural network | [P76] |
|  | Gaussian filter, Farnebäck optical flow | [P77] |
|  | Recursive neural network (RNN) | [P81] |
|  | Pix2Pix (optical flow) | [P83] |
|  | YOLOv4 | [P84] |
|  | Dynamic object contour extraction | [P85] |
| Object tracking | Extended Kalman filter | [P1], [P4], [P5], [P8], [P11] |
|  | Kalman filter | [P3], [P42], [P44], [P53], [P58], [P70], [P72], [P75], [P78], [P79] |
|  | Imagination-augmented agents (I2A) | [P6] |
|  | Three nested Kalman filters | [P7] |
|  | SIFT, Kalman filter | [P13] |
|  | Hidden Markov model | [P16] |
|  | Lucas–Kanade optical flow | [P20], [P28], [P32] |
|  | Camshift algorithm | [P23] |
|  | Extended and unscented Kalman filters | [P25] |
|  | Single-point feature | [P26] |
|  | Visual predictive control | [P29] |
|  | Unscented Kalman filter | [P31], [P33] |
|  | Kanade–Lucas–Tomasi | [P37] |
|  | Lucas–Kanade optical tracker | [P38] |
|  | Close-minus-open and hidden Markov model | [P39] |
|  | Template matching, Kalman filtering | [P40] |
|  | Distance-based and distance-agnostic | [P41] |
|  | Camshift | [P43] |
|  | HMM, ad hoc Viterbi temporal filtering | [P47] |
|  | Parallel tracking and mapping, extended Kalman filter | [P50] |
|  | MAVSDK (collision avoidance) | [P52] |
|  | Kanade–Lucas–Tomasi (KLT) | [P57] |
|  | SORT (Kalman filter, Hungarian algorithm) | [P73] |
| Single-view geometry | Single-view geometry and closest point of approach | [P17] |
|  | Visual servoing and camera geometry | [P19] |
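Kalman filtering is the tracking approach listed most often in the table above, either on its own or in extended, unscented, and nested variants. The following is a minimal sketch under stated assumptions, not the implementation used in any reviewed paper: a linear constant-velocity Kalman filter that smooths the image-plane centroid reported by an obstacle detector and predicts it through a missed detection. The class name `ConstantVelocityKalman`, the state layout [x, y, vx, vy], the frame interval `dt`, and the noise levels `q` and `r` are hypothetical choices made for illustration, and the detections are synthetic.

```python
import numpy as np


class ConstantVelocityKalman:
    """Hypothetical linear Kalman filter over the state [x, y, vx, vy] in pixels."""

    def __init__(self, dt=1.0, q=1e-2, r=1.0):
        # Constant-velocity motion model: position advances by velocity * dt.
        self.F = np.array([[1.0, 0.0, dt, 0.0],
                           [0.0, 1.0, 0.0, dt],
                           [0.0, 0.0, 1.0, 0.0],
                           [0.0, 0.0, 0.0, 1.0]])
        # The detector is assumed to report only the (x, y) centroid.
        self.H = np.array([[1.0, 0.0, 0.0, 0.0],
                           [0.0, 1.0, 0.0, 0.0]])
        self.Q = q * np.eye(4)    # assumed process noise covariance
        self.R = r * np.eye(2)    # assumed measurement noise covariance
        self.x = np.zeros(4)      # state estimate
        self.P = 1e3 * np.eye(4)  # large initial uncertainty

    def predict(self):
        """Propagate the state one frame ahead; returns the predicted centroid."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:2].copy()

    def update(self, z):
        """Correct the prediction with a measured centroid z = (x, y)."""
        innovation = np.asarray(z, dtype=float) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R   # innovation covariance
        K = self.P @ self.H.T @ np.linalg.inv(S)  # Kalman gain
        self.x = self.x + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.x[:2].copy()


# Synthetic, noise-free detections of an obstacle moving 5 px/frame in x
# and 2 px/frame in y (illustrative data, not taken from any reviewed paper).
kf = ConstantVelocityKalman(dt=1.0)
for t in range(10):
    kf.predict()
    kf.update([100 + 5 * t, 50 + 2 * t])

# Coast one frame with no detection, e.g. when the target is lost in clutter.
predicted = kf.predict()
print(predicted)  # approximately [150, 70]
```

The prediction step is what lets a tracker coast through frames in which the detector loses a small, low-contrast aircraft; when the motion or camera model is nonlinear, the extended or unscented variants listed in the table would replace the fixed matrices `F` and `H`.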

| Year | Feature Extraction | Single-View Geometry | Object Detection | Motion Detection | Object Tracking |
|---|---|---|---|---|---|
| 1999 | – | – | – | – | [P1] |
| 2005 | – | – | [P3] | [P2] | [P3] |
| 2006 | [P5] | – | – | – | [P4], [P5], [P6] |
| 2007 | – | – | [P9] | [P7] | [P7], [P8] |
| 2008 | [P10] | – | – | – | – |
| 2009 | – | – | – | – | [P11] |
| 2010 | – | [P17], [P19] | – | [P12], [P13] | [P13] |
| 2011 | – | – | [P15], [P16], [P20], [P21], [P22] | [P14], [P18] | [P16], [P20] |
| 2012 | – | – | [P23], [P26] | [P24] | [P23], [P25], [P26] |
| 2013 | – | – | [P27], [P29] | – | [P28], [P29] |
| 2014 | [P30], [P32] | – | [P30], [P33], [P34], [P35] | – | [P31], [P32], [P33] |
| 2015 | [P38] | – | [P37], [P38] | [P36] | [P37], [P38] |
| 2016 | – | – | [P39], [P40] | – | [P39], [P40] |
| 2017 | [P41], [P47] | – | [P41], [P42], [P43], [P44], [P45], [P46], [P47] | [P42] | [P41], [P42], [P43], [P44], [P47] |
| 2018 | – | – | [P48], [P49], [P51] | – | [P50] |
| 2019 | [P57] | – | [P52], [P54], [P55], [P56], [P58] | – | [P52], [P53], [P57], [P58] |
| 2020 | [P60], [P63], [P66], [P67], [P68], [P81] | – | [P62], [P63], [P64], [P65], [P67], [P68], [P69], [P80], [P81] | [P59], [P60], [P61] | – |
| 2021 | [P70], [P76], [P78] | – | [P70], [P71], [P72], [P73], [P74], [P75], [P76], [P77], [P78], [P82], [P84], [P85] | [P70], [P85] | [P70], [P72], [P73], [P75], [P78] |
| 2022 | [P79] | – | [P83] | – | [P79] |

| Year | Flight Simulator | Gazebo | MATLAB | Simulink | Robot Operating System (ROS) | Google Earth | Blender |
|---|---|---|---|---|---|---|---|
| 2010 | – | – | [P13] | – | – | – | – |
| 2011 | [P15], [P16] | – | [P15], [P18], [P21] | [P15] | – | – | – |
| 2012 | [P25] | [P23] | [P24], [P25], [P26] | [P24], [P25] | [P23] | [P24] | – |
| 2013 | – | – | [P29] | – | – | – | – |
| 2014 | – | – | [P31] | – | – | – | – |
| 2015 | – | – | [P36] | [P36] | – | – | – |
| 2017 | – | – | [P46] | [P46] | – | – | – |
| 2018 | [P48] | – | – | – | – | – | – |
| 2019 | [P52], [P57] | [P56] | – | – | – | – | – |
| 2020 | [P59] | [P60] | [P68] | – | – | – | [P69] |
| 2021 | [P71] | [P72], [P73] | [P70], [P74] | – | [P82] | – | – |
| 2022 | [P83] | – | – | – | – | – | – |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Vera-Yanez, D.; Pereira, A.; Rodrigues, N.; Molina, J.P.; García, A.S.; Fernández-Caballero, A. Vision-Based Flying Obstacle Detection for Avoiding Midair Collisions: A Systematic Review. J. Imaging 2023, 9, 194. https://doi.org/10.3390/jimaging9100194
