A Model of Adaptive Error Management Practices Addressing the Higher-Order Factors of the Dirty Dozen Error Classification—Implications for Organizational Resilience in Sociotechnical Systems

Abstract

1. Introduction

1.1. Adaptive Error Management

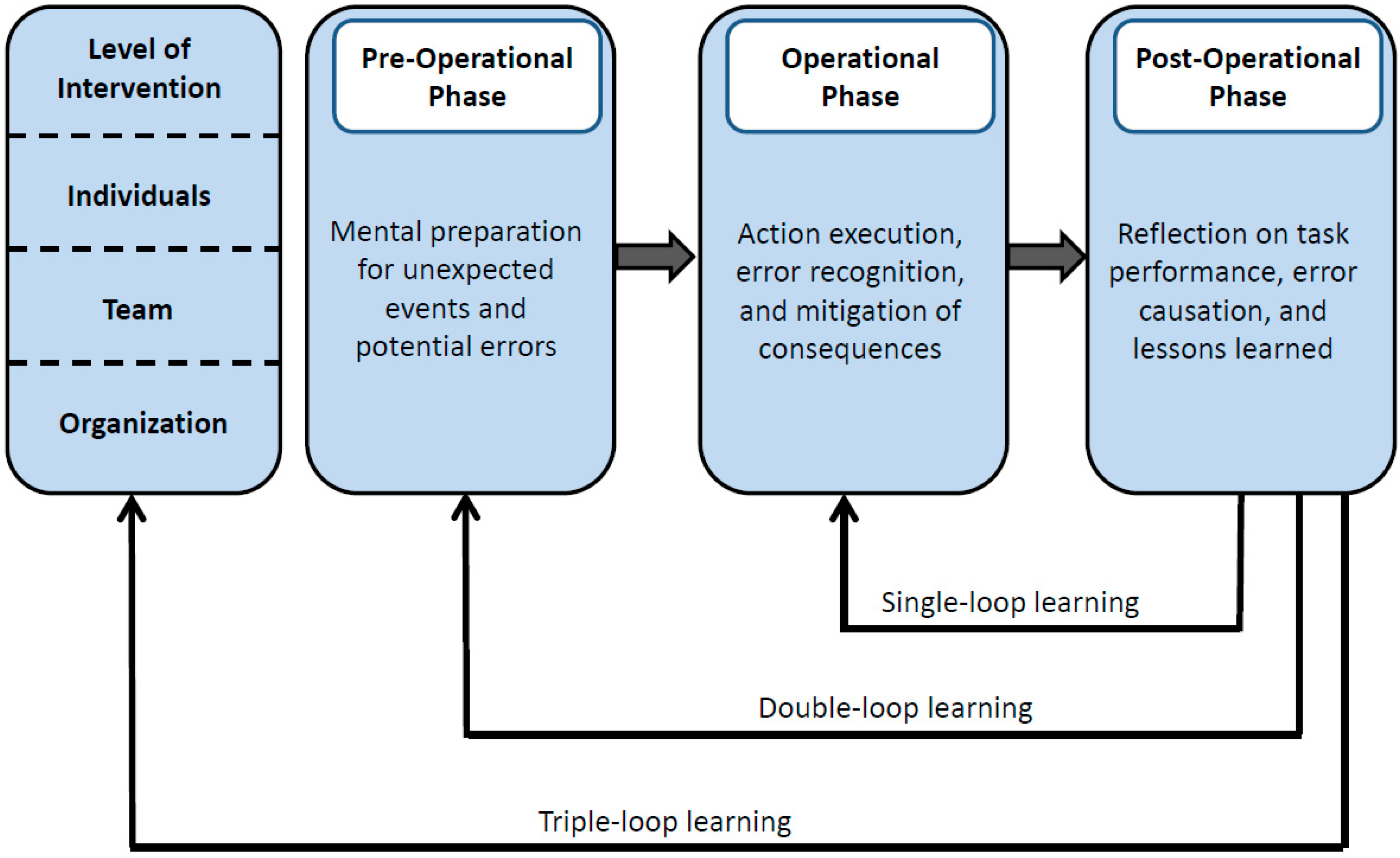

- Pre-Operational Phase: This phase occurs before members of an organization perform a task (e.g., maintaining an engine). It involves activities such as anticipating potential errors and vulnerabilities before they occur. Thus, it emphasizes preparedness for the unexpected by equipping individuals and teams with the skills and knowledge needed to deal with potential errors and accidents.

- Operational Phase: This is the phase in which actions are executed. It involves activities such as error recognition (e.g., real-time detection of errors through monitoring systems and human observation) and mitigation of the consequences of errors. Thus, it emphasizes flexible decision-making by empowering individuals to adapt to evolving situations (e.g., through effective communication channels that allow errors to be addressed promptly and collaboratively).

- Post-Operational Phase: This final phase focuses on learning and reflection. It involves activities such as conducting after-action reviews, analyzing the root causes of errors, and identifying opportunities for continuous improvement. Thus, it emphasizes analyzing and planning changes to processes, structures, and the organizational culture based on the lessons learned from errors, so that the organization becomes more resilient over time.
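The three phases can be read as a repeating cycle around each task. The Python sketch below is a hypothetical illustration, not part of the authors' model: the names `Phase`, `next_phase`, and `walk` are invented here, and treating the post-operational phase as handing its lessons to the next pre-operational phase is an assumption based on the emphasis on becoming more resilient over time.

```python
from enum import Enum


class Phase(Enum):
    """Phases of adaptive error management and their main focus."""
    PRE_OPERATIONAL = "anticipate potential errors and vulnerabilities"
    OPERATIONAL = "recognize errors in real time and mitigate their consequences"
    POST_OPERATIONAL = "review, analyze root causes, and plan improvements"


# Assumption: the phases repeat for every task, so the post-operational
# phase feeds its lessons learned into the next pre-operational phase.
_CYCLE = list(Phase)  # members in definition order


def next_phase(current: Phase) -> Phase:
    """Return the phase that follows `current` in the assumed cycle."""
    return _CYCLE[(_CYCLE.index(current) + 1) % len(_CYCLE)]


def walk(task: str, start: Phase = Phase.PRE_OPERATIONAL, steps: int = 3) -> list[str]:
    """List the phases a hypothetical task passes through, beginning at `start`."""
    phase, trace = start, []
    for _ in range(steps):
        trace.append(f"{task} | {phase.name}: {phase.value}")
        phase = next_phase(phase)
    return trace


for line in walk("engine maintenance"):
    print(line)
```

Modelling the phases as an ordered enum keeps the sequence explicit while leaving the activities inside each phase open.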

1.2. Organizational Learning from Errors and Resilience

2. Method

2.1. Sample

2.2. Materials

2.3. Design and Procedure

3. Results

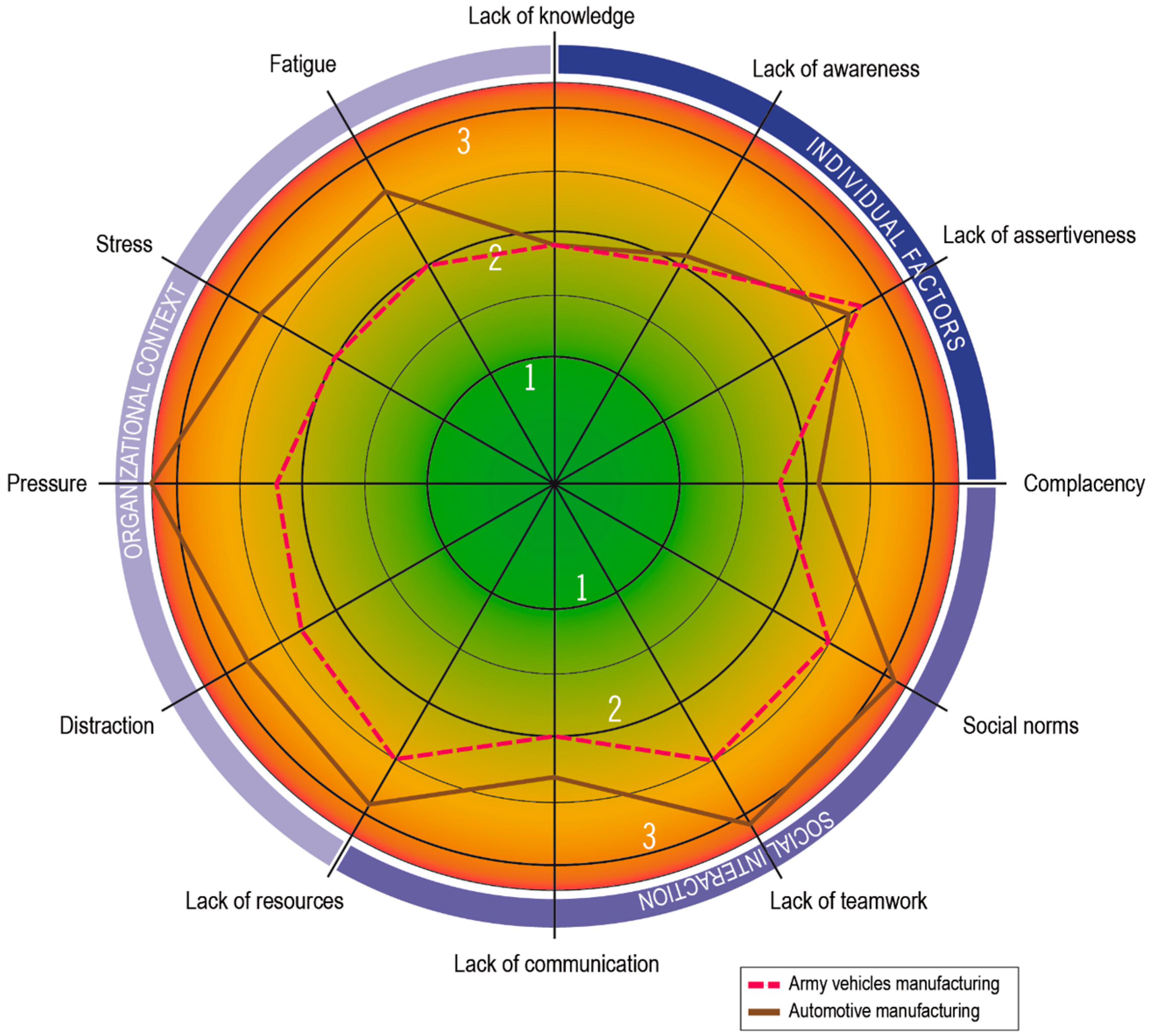

3.1. Descriptive Statistics of Error Causation Categories

3.2. Factor Analysis of Error Causation Categories

4. Discussion

4.1. Discussion of Results

4.2. Strengths, Weaknesses, and Future Research

5. Practical Implications

5.1. Error Causation Screening

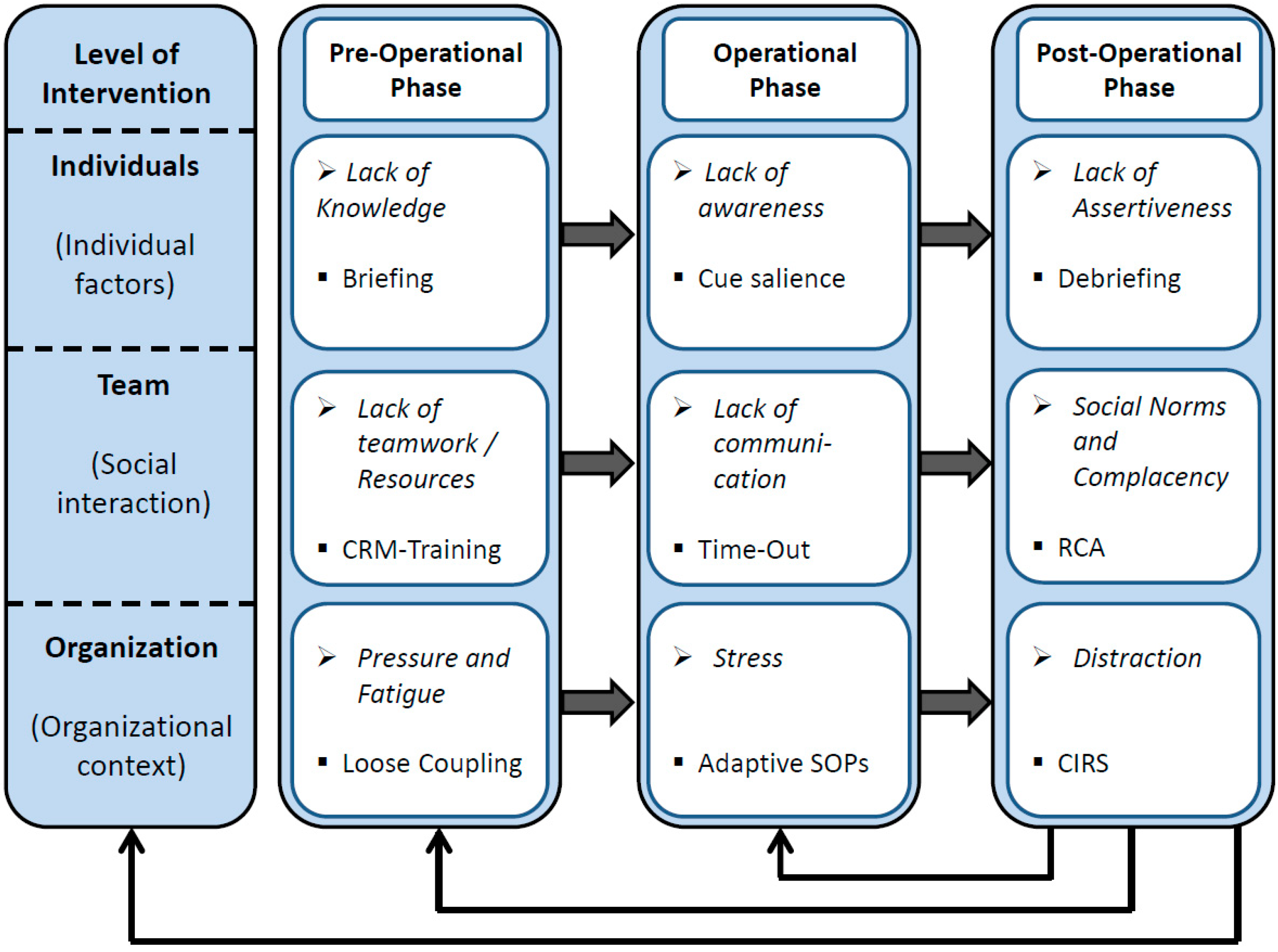

5.2. Adaptive Error Management System

5.3. Adaptive Error Management System—Individual Level

5.4. Adaptive Error Management System—Team Level

5.5. Adaptive Error Management System—Organizational Level

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Dirty Dozen Category | Description | Example Items (HEQ) |
|---|---|---|
| Lack of knowledge | The workers do not know enough about their tasks, duties, or the corporate work process. | I am well aware of the duties of all those involved in my work process. (-) |
| Complacency | Due to overconfidence, the workers are careless in the execution of their tasks. | I sometimes do not check my work, because I am convinced of my own infallibility. |
| Lack of teamwork | The workers cooperate poorly or have conflicts on a personal level. | Conflicts within the team often remain unsolved. |
| Lack of communication | The workers have deficits in sharing information due to insufficient listening and talking. | Sometimes, I do not understand my supervisors. |
| Social norms | Due to informal group rules, the workers do not always follow safety regulations. | I believe safety regulations often disturb an efficient work environment. |
| Lack of resources | The organization is short of manuals, checklists, technical support, other materials, or staff. | Because of missing technical assistance, there are often delays. |
| Pressure | Due to ambitious goals, tasks have to be performed under high time or economic pressure. | Due to financial pressure, even high-risk tasks have to be performed under an increasing time demand. |
| Lack of awareness | The workers do not recognize dangers in critical situations in time. | I recognize dangers in my work environment promptly. (-) |
| Stress | The workers have a high mental or physical workload. | There are too many tasks I have to do. |
| Fatigue | Due to fatigue, the workers have less capacity to concentrate on their tasks. | I am often tired during the day. |
| Distraction | Due to various contextual factors, the workers do not focus their attention on the current task. | Because of the noise level, our efficiency is negatively affected. |
| Lack of assertiveness | The workers do not refuse demands to compromise safety standards. | I often make constructive suggestions to solve a problem. (-) |

Items marked (-) are reverse-coded.
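Some example items above are marked (-): agreeing with them indicates less of the error cause, so they would need to be reverse-scored before being averaged into a scale score. A minimal Python sketch of that step follows. It assumes a 1 to 5 response format, which the source does not state; `item_score`, `scale_score`, and the example responses are hypothetical and not taken from the study.

```python
from statistics import mean

# Hypothetical response format: the source does not give the Likert range,
# so a 1-5 scale is assumed here purely for illustration.
SCALE_MIN, SCALE_MAX = 1, 5


def item_score(response: int, reverse: bool) -> int:
    """Score one item; reverse-coded items (marked (-)) are flipped on the scale."""
    if not SCALE_MIN <= response <= SCALE_MAX:
        raise ValueError(f"response {response} outside {SCALE_MIN}-{SCALE_MAX}")
    return (SCALE_MIN + SCALE_MAX) - response if reverse else response


def scale_score(responses: list[int], reverse_flags: list[bool]) -> float:
    """Average the scored items so that higher values mean more of the error cause."""
    if len(responses) != len(reverse_flags):
        raise ValueError("one reverse flag is needed per item")
    return mean([item_score(r, f) for r, f in zip(responses, reverse_flags)])


# Hypothetical three-item "Lack of awareness" scale whose first item
# ("I recognize dangers in my work environment promptly.") is reverse-coded.
print(scale_score([5, 2, 1], [True, False, False]))
```

Flipping with `(min + max) - response` keeps reversed items on the same range as the others, so the scale mean stays interpretable.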

| Dirty Dozen Scale | Mean | SD | Reliability (Cronbach’s α) |
|---|---|---|---|
| Lack of teamwork | 2.72 | 0.73 | .71 |
| Pressure | 2.53 | 0.78 | .74 |
| Lack of resources | 2.57 | 0.77 | .79 |
| Lack of assertiveness | 2.69 | 0.65 | .71 |
| Social norms | 2.70 | 0.66 | .65 |
| Lack of communication | 2.01 | 0.60 | .73 |
| Stress | 2.36 | 0.75 | .73 |
| Distraction | 2.50 | 0.65 | .63 |
| Fatigue | 2.07 | 0.74 | .81 |
| Lack of awareness | 2.05 | 0.47 | .64 |
| Complacency | 1.90 | 0.52 | .58 |
| Lack of knowledge | 1.90 | 0.50 | .59 |
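The reliability column reports Cronbach's α, computed as α = k/(k − 1) · (1 − Σσᵢ²/σ_X²), where k is the number of items, σᵢ² the variance of item i, and σ_X² the variance of the summed scale score. The Python sketch below implements that standard formula for a respondents-by-items matrix; it is not the authors' analysis code, and the function name and example data are hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix of scored responses.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    Sample variances (n - 1 denominator) are used for both terms, so the
    choice of denominator cancels out in the ratio.
    """
    n_items = len(responses[0])
    if n_items < 2:
        raise ValueError("alpha needs at least two items")
    if any(len(row) != n_items for row in responses):
        raise ValueError("every respondent must answer every item")
    item_vars = [variance([row[j] for row in responses]) for j in range(n_items)]
    total_var = variance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return n_items / (n_items - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical responses of four people to a three-item scale (not study data).
example = [[2, 3, 2], [1, 2, 1], [3, 3, 4], [2, 2, 2]]
print(f"alpha = {cronbach_alpha(example):.2f}")
```

Items should be reverse-scored before this step; otherwise negatively keyed items lower α artificially.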

Loadings of the human error categories on the three error-causing factors (components):

| Human Error Categories | Individual Factors | Social Interaction | Organizational Context |
|---|---|---|---|
| Lack of knowledge | 0.544 | 0.522 | 0.195 |
| Lack of awareness | 0.587 | 0.180 | 0.347 |
| Lack of assertiveness | 0.792 | −0.043 | −0.060 |
| Complacency | 0.171 | 0.679 | 0.050 |
| Stress | −0.077 | 0.357 | 0.775 |
| Fatigue | 0.258 | 0.211 | 0.753 |
| Social norms | −0.058 | 0.699 | 0.409 |
| Lack of teamwork | −0.051 | 0.723 | 0.255 |
| Lack of communication | 0.205 | 0.763 | 0.262 |
| Lack of resources | 0.243 | 0.657 | 0.367 |
| Pressure | −0.083 | 0.450 | 0.694 |
| Distraction | 0.293 | 0.134 | 0.752 |
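Each category in the loading table can be grouped under the component on which it loads most strongly, and categories with more than one substantial loading can be flagged as cross-loading. The Python sketch below does this for the values in the table. The 0.40 cut-off for a "salient" loading is an assumption chosen for illustration (the source does not state one), and the names `assign` and `primary_component` are hypothetical.

```python
COMPONENTS = ("Individual Factors", "Social Interaction", "Organizational Context")

# Loadings copied from the table above, one tuple per Dirty Dozen category.
LOADINGS = {
    "Lack of knowledge": (0.544, 0.522, 0.195),
    "Lack of awareness": (0.587, 0.180, 0.347),
    "Lack of assertiveness": (0.792, -0.043, -0.060),
    "Complacency": (0.171, 0.679, 0.050),
    "Stress": (-0.077, 0.357, 0.775),
    "Fatigue": (0.258, 0.211, 0.753),
    "Social norms": (-0.058, 0.699, 0.409),
    "Lack of teamwork": (-0.051, 0.723, 0.255),
    "Lack of communication": (0.205, 0.763, 0.262),
    "Lack of resources": (0.243, 0.657, 0.367),
    "Pressure": (-0.083, 0.450, 0.694),
    "Distraction": (0.293, 0.134, 0.752),
}

# Assumed cut-off for a salient loading; not given in the source.
SALIENT = 0.40


def primary_component(loadings: tuple[float, ...]) -> int:
    """Index of the component with the largest absolute loading."""
    return max(range(len(loadings)), key=lambda j: abs(loadings[j]))


def assign(loadings_by_item: dict, threshold: float = SALIENT):
    """Group items by primary component and list items salient on several components."""
    groups = {name: [] for name in COMPONENTS}
    cross = []
    for item, loadings in loadings_by_item.items():
        groups[COMPONENTS[primary_component(loadings)]].append(item)
        if sum(abs(x) >= threshold for x in loadings) > 1:
            cross.append(item)
    return groups, cross


if __name__ == "__main__":
    groups, cross = assign(LOADINGS)
    for name, items in groups.items():
        print(f"{name}: {', '.join(items)}")
    print("Cross-loading:", ", ".join(cross))
```

Under this assumed cut-off, the highest-loading grouping reproduces the three columns of the table, and the flagged categories are those whose loadings exceed 0.40 on two components.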

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Marquardt, N.; Gades-Büttrich, R.; Brandenberg, T.; Schürmann, V. A Model of Adaptive Error Management Practices Addressing the Higher-Order Factors of the Dirty Dozen Error Classification—Implications for Organizational Resilience in Sociotechnical Systems. Safety 2024, 10, 64. https://doi.org/10.3390/safety10030064
