Research on Measuring the Bodies of Underwater Fish with Inclined Positions Using the YOLOv8 Model and a Line-Laser System

Abstract

1. Introduction

- Using a self-made experimental device, this study collects and constructs an underwater fish-body measurement dataset and an underwater camera distance calibration dataset.

- This study is the first to integrate line-laser measurement with deep learning and to apply the combination to underwater fish body measurement.

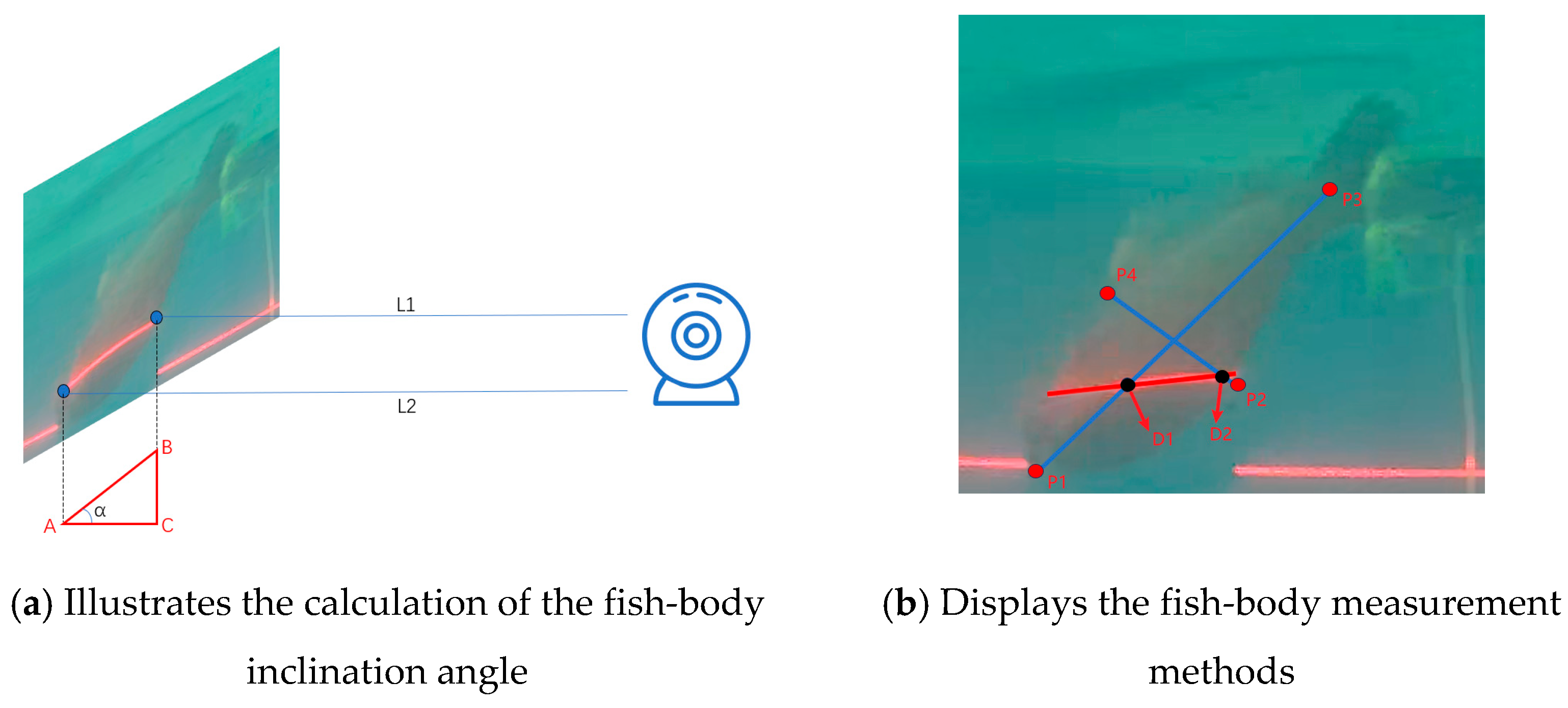

- This study is the first to mathematically model and calculate the inclination, body length, and body width of underwater fish from images captured by a single camera with a line laser. The results show that, compared with manual measurement, this method has smaller errors, introducing a new technique to fish body measurement in aquaculture.
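As a rough illustration of how inclination and body length can be recovered from a single camera once per-point distances are known, the sketch below back-projects two key points with a pinhole model. It is not the model used in this study: the intrinsics (`fx`, `fy`, `cx`, `cy`), the key-point pixel coordinates, and the per-point depths are hypothetical inputs, assumed here to come from camera calibration, key-point detection, and laser-based distance calibration, respectively.

```python
import math
from typing import Tuple

Point3D = Tuple[float, float, float]


def back_project(u: float, v: float, depth: float,
                 fx: float, fy: float, cx: float, cy: float) -> Point3D:
    """Map a pixel (u, v) at a known depth to camera coordinates (pinhole model)."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def length_and_inclination(head: Point3D, tail: Point3D) -> Tuple[float, float]:
    """Return (3D length, inclination in degrees relative to the image plane)."""
    dx, dy, dz = (t - h for h, t in zip(head, tail))
    in_plane = math.hypot(dx, dy)                 # extent parallel to the image plane
    length = math.sqrt(dx * dx + dy * dy + dz * dz)
    inclination = math.degrees(math.atan2(abs(dz), in_plane))
    return length, inclination


# Hypothetical values: intrinsics in pixels, depths in centimetres.
fx = fy = 1000.0
cx = cy = 500.0
head = back_project(500.0, 500.0, 100.0, fx, fy, cx, cy)
tail = back_project(800.0, 500.0, 140.0, fx, fy, cx, cy)
length_cm, inclination_deg = length_and_inclination(head, tail)
print(f"length = {length_cm:.1f} cm, inclination = {inclination_deg:.1f} deg")
```

Measuring the distance in 3D rather than in the image plane is what keeps the estimate from shrinking when the fish is tilted toward or away from the camera.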

2. Materials and Methods

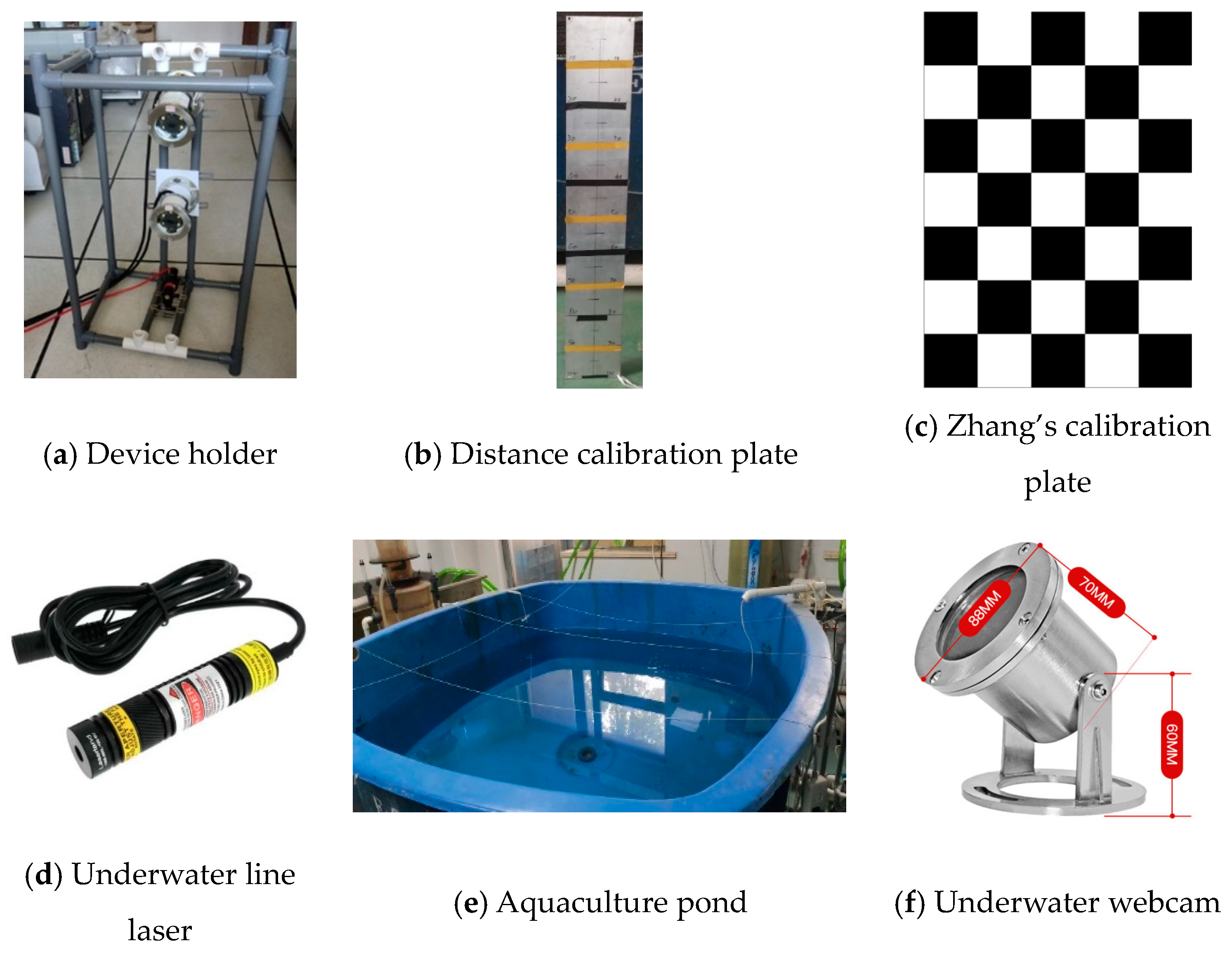

2.1. Experimental Equipment

2.2. Data Acquisition and Processing

2.3. Research Methodology

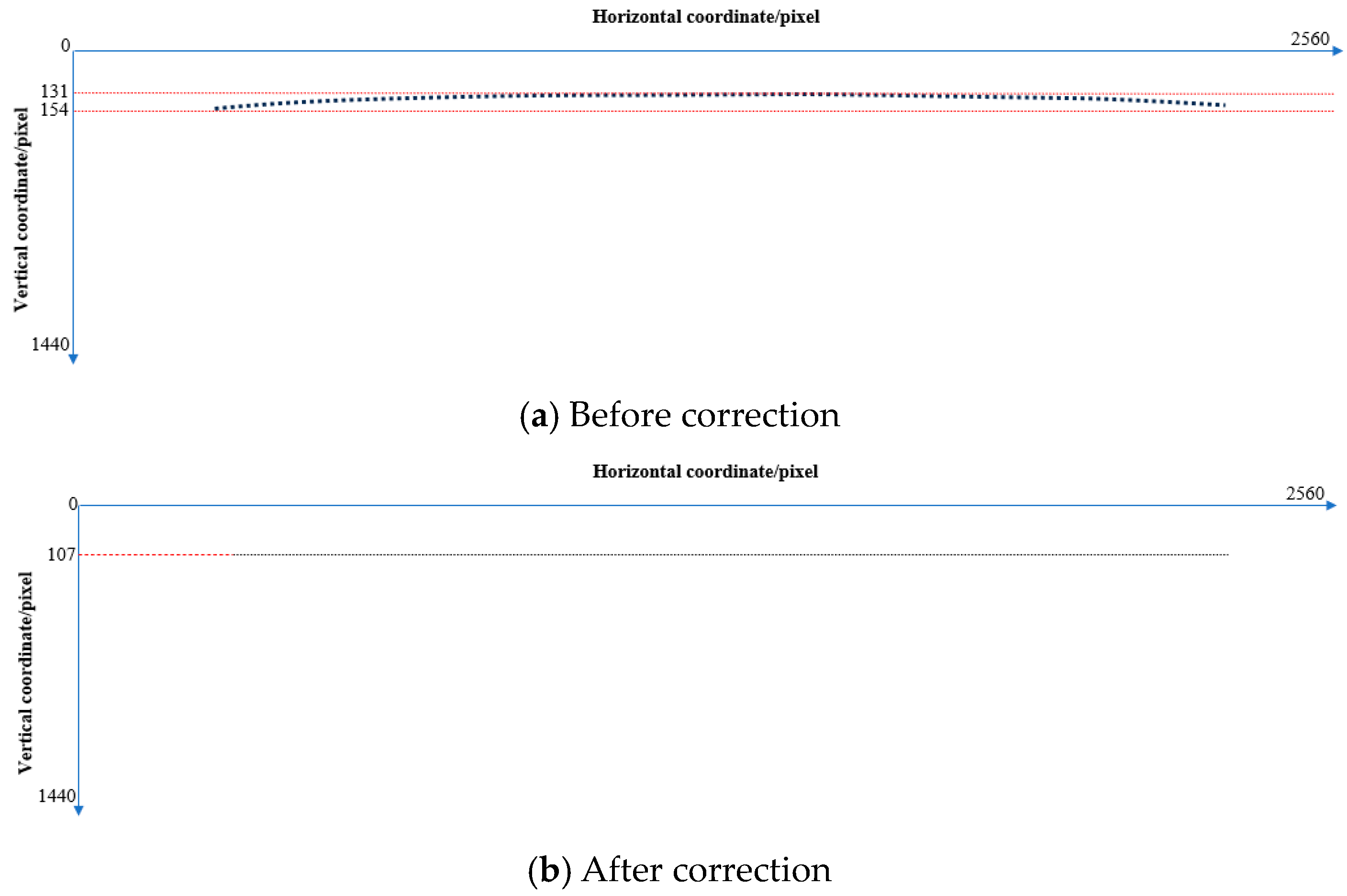

2.3.1. Camera Calibration

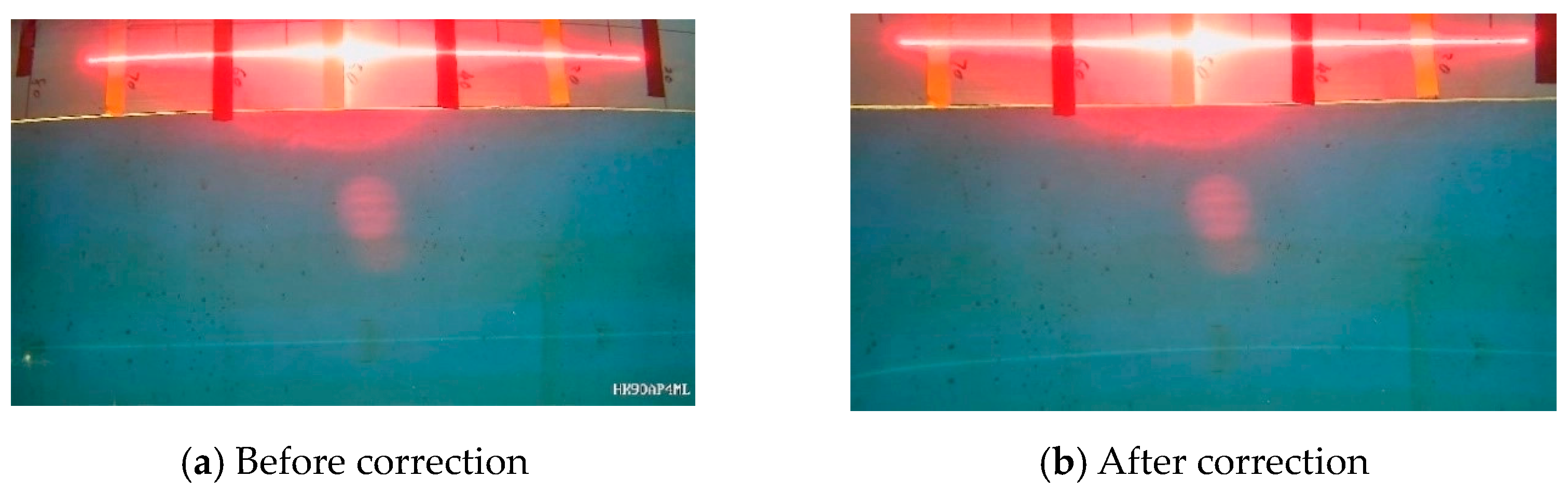

2.3.2. Underwater Laser-Line Inspection

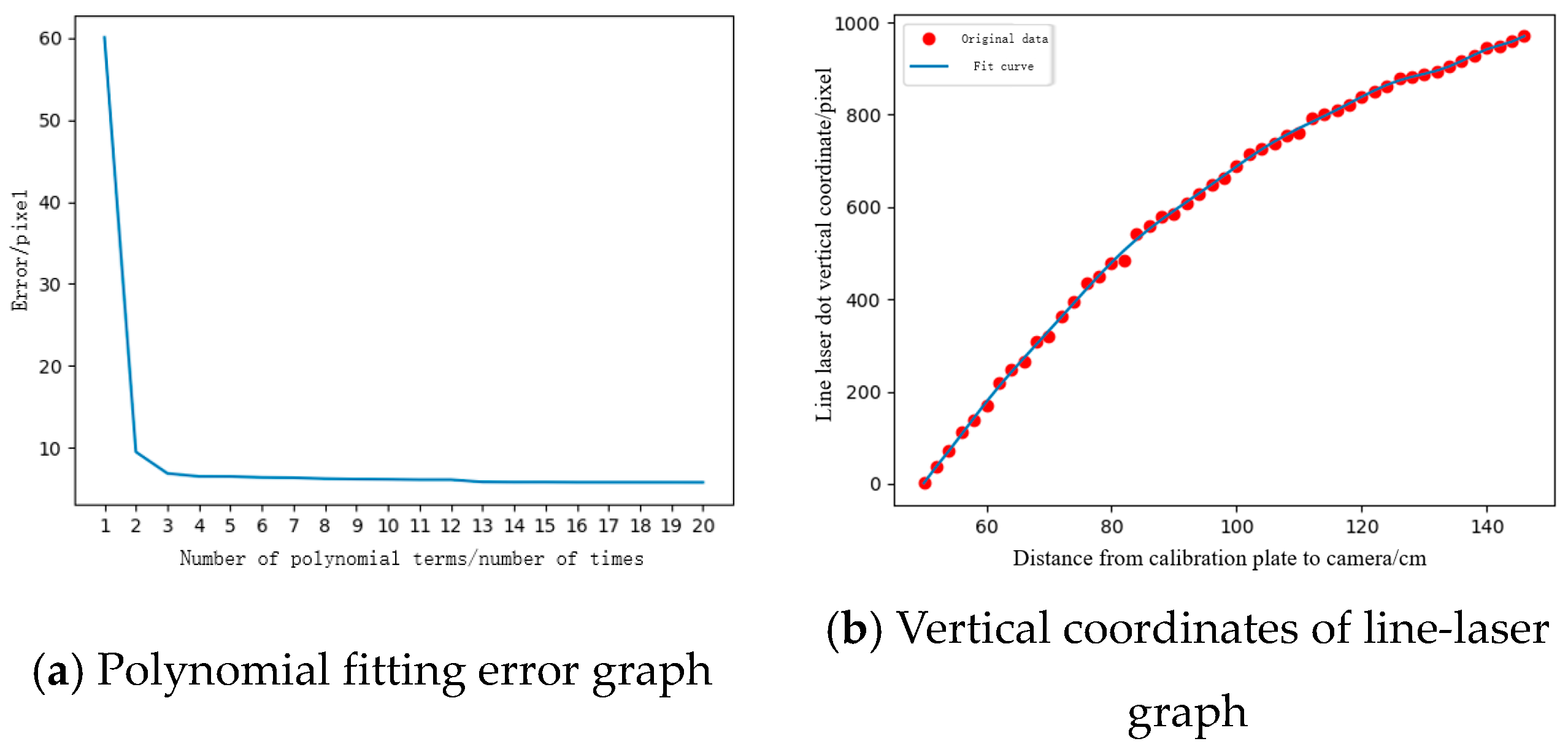

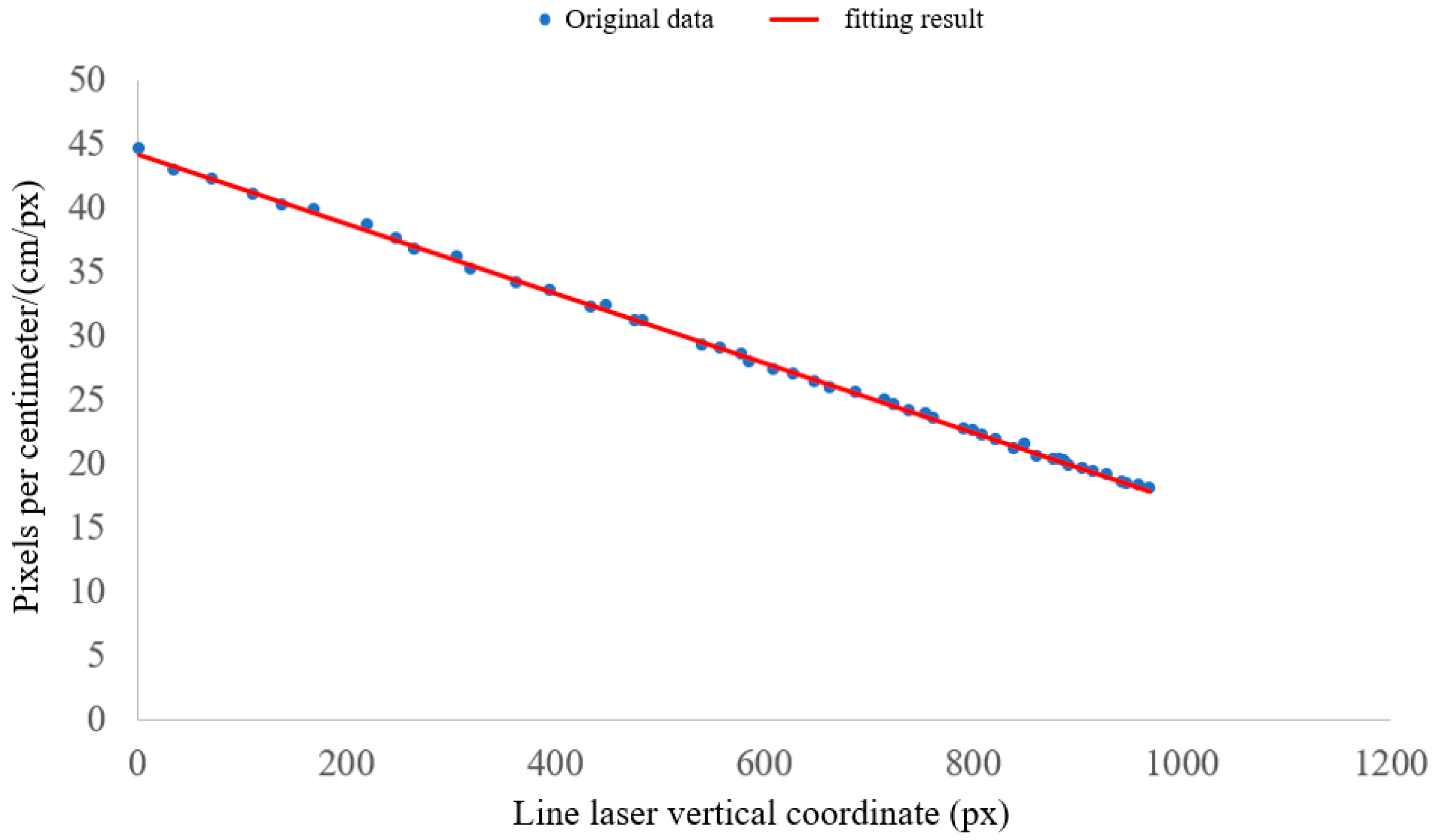

2.3.3. Underwater Camera Distance Calibration

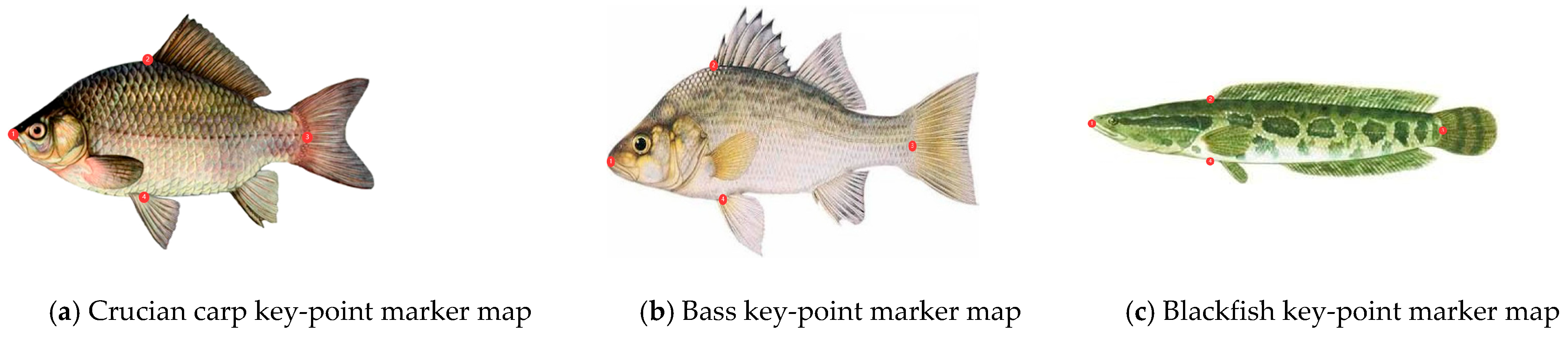

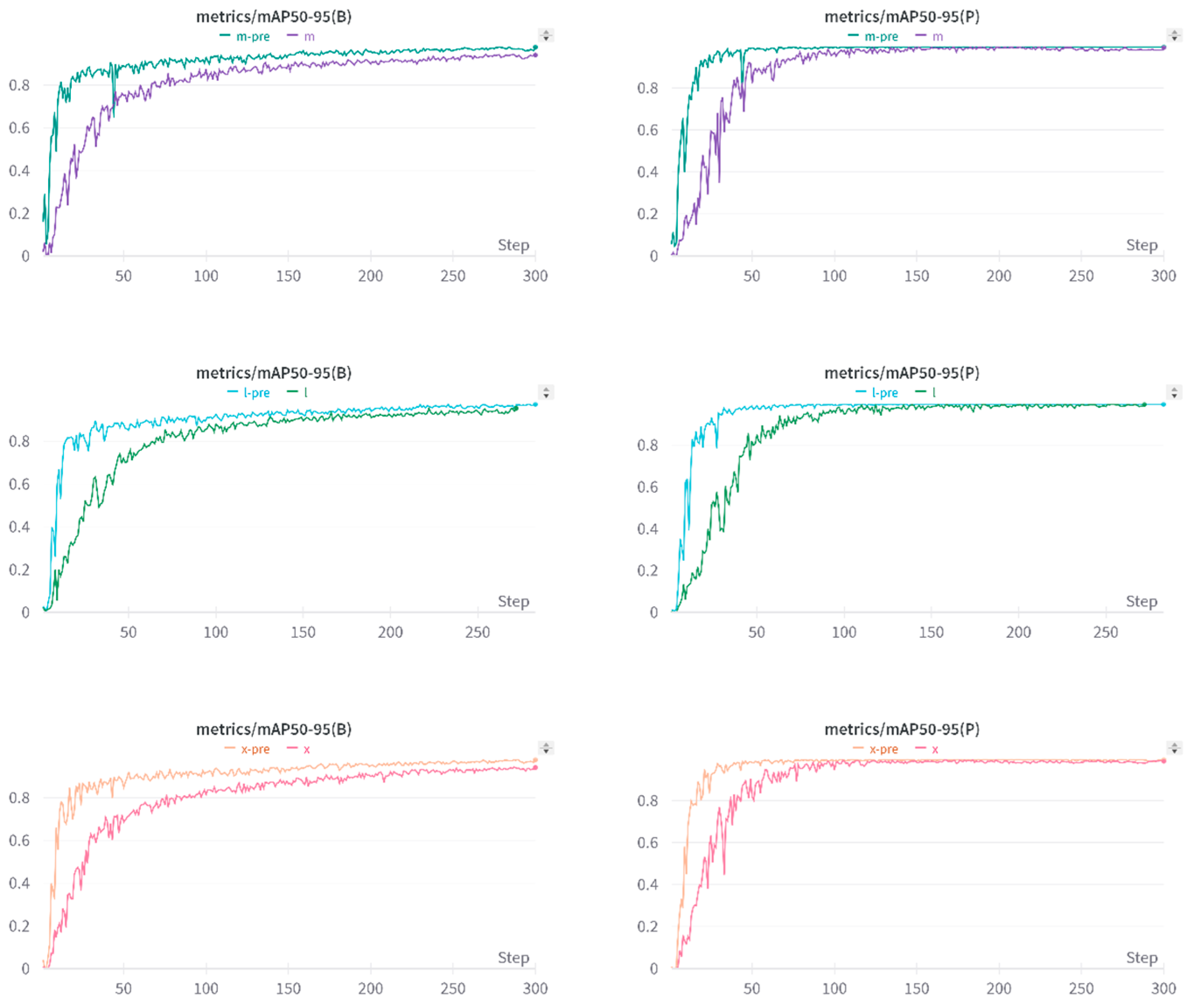

2.3.4. YOLOv8 Key-Point Detection Algorithm

2.3.5. Fish Measurement Calculations

2.4. Experimental Evaluation Indicators

2.4.1. Distance Calibration Evaluation Indicator

2.4.2. YOLOv8 Model Evaluation Metrics

2.4.3. Indicators for Evaluating Fish Body Measurements

3. Results

3.1. Camera Calibration and Distance Calibration Results

3.2. YOLOv8 Model Test Results

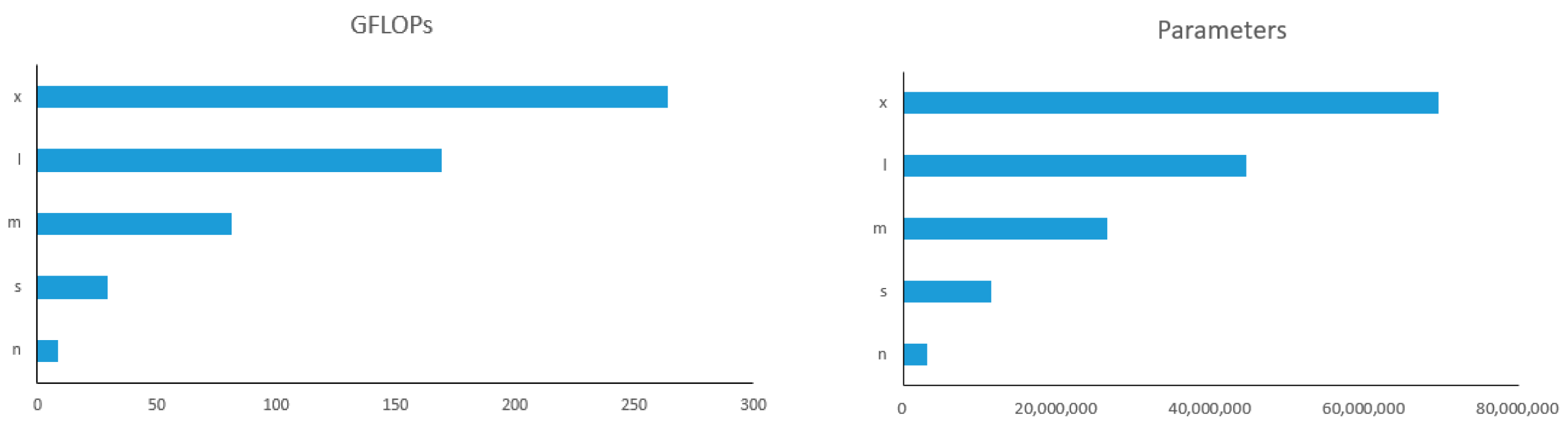

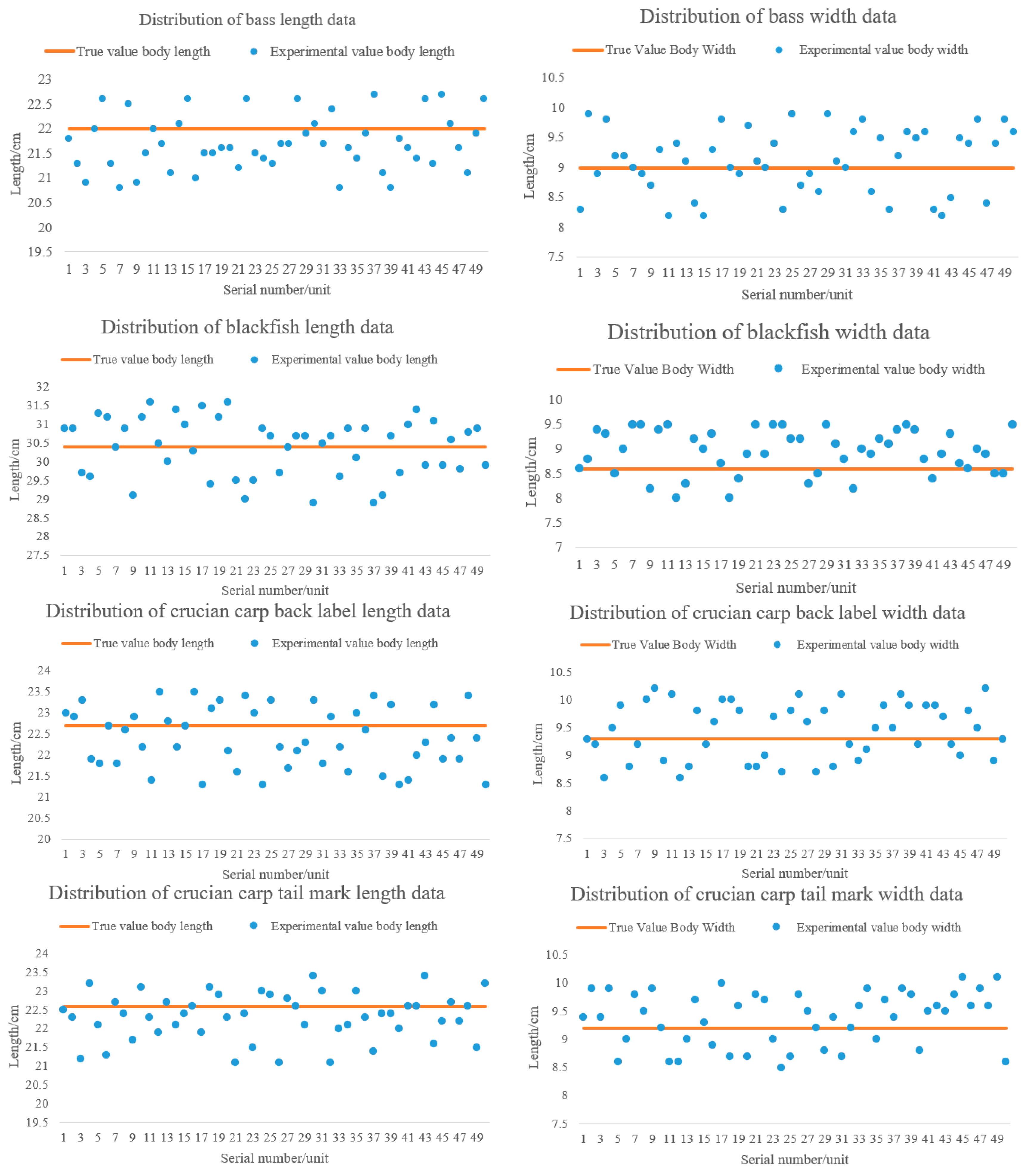

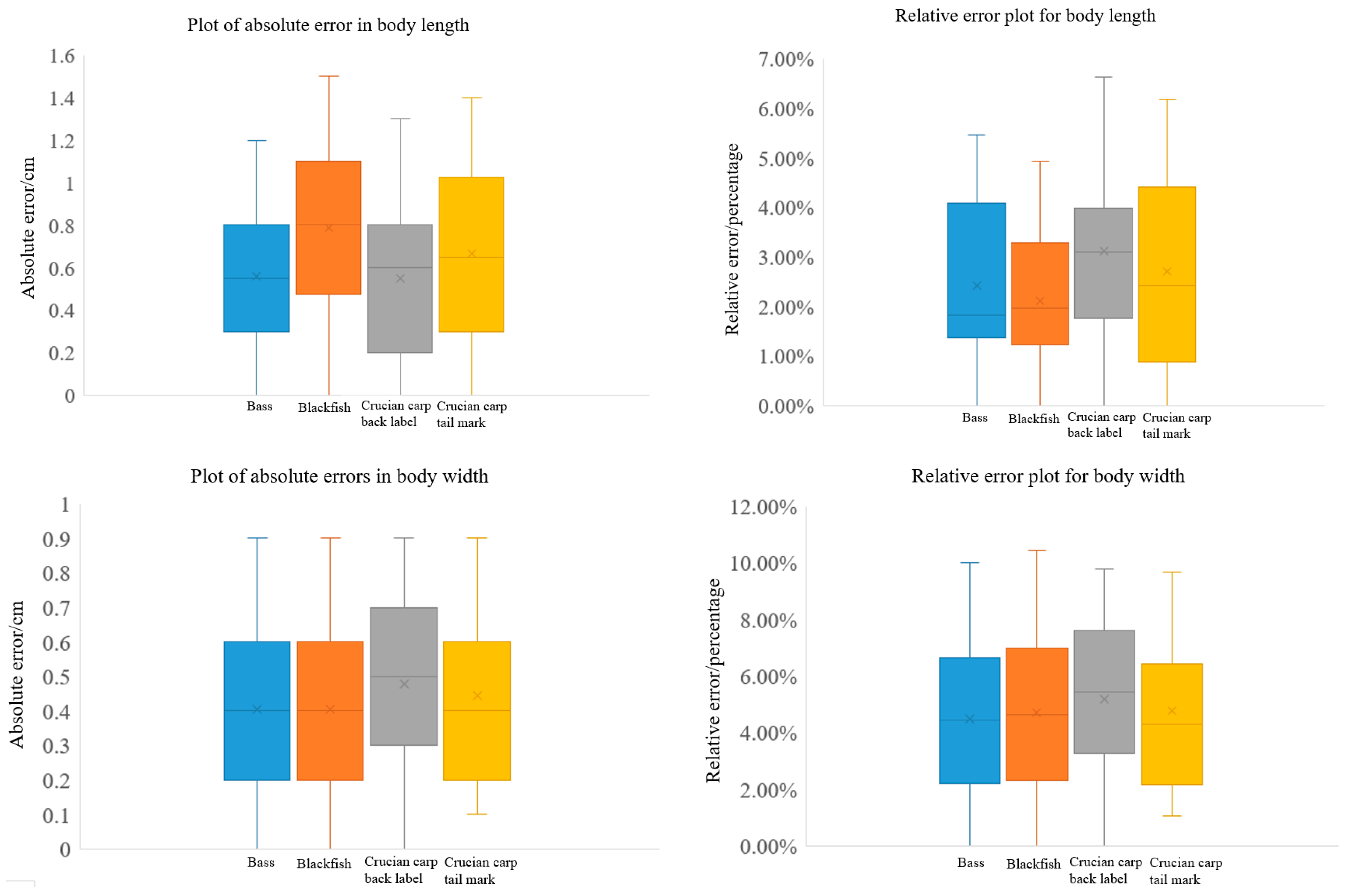

3.3. Fish Body Measurements

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Mark Name | Number |
|---|---|
| Bass | 171 |
| Blackfish | 145 |
| Crucian carp back label | 165 |
| Crucian carp tail mark | 180 |

| Model | Size (MB) | Accuracy (Box) | mAP50-95 (Box) | Accuracy (Points) | mAP50-95 (Points) |
|---|---|---|---|---|---|
| n-pre | 6.5 | 98.7% | 0.966 | 98.7% | 0.993 |
| s-pre | 23.1 | 99.5% | 0.951 | 99.5% | 0.991 |
| m-pre | 53.2 | 99.7% | 0.966 | 99.7% | 0.995 |
| l-pre | 89.4 | 99.6% | 0.951 | 99.6% | 0.992 |
| x-pre | 139.4 | 99.6% | 0.961 | 99.6% | 0.993 |

| Category | Absolute Error (cm) | Mean Absolute Error (cm) | Relative Error | Average Relative Error | Standard Deviation |
|---|---|---|---|---|---|
| All categories | 0–1.5 | 0.58 | 0%–6.64% | 2.46% | - |
| Bass | 0–1.2 | 0.50 | 0%–5.5% | 2.50% | 0.60 |
| Blackfish | 0–1.5 | 0.65 | 0%–4.93% | 2.16% | 0.77 |
| Crucian carp back label | 0–1.4 | 0.64 | 0%–6.17% | 2.85% | 0.70 |
| Crucian carp tail mark | 0–1.5 | 0.52 | 0%–6.64% | 2.33% | 0.62 |

| Category | Absolute Error (cm) | Mean Absolute Error (cm) | Relative Error | Average Relative Error | Standard Deviation |
|---|---|---|---|---|---|
| All categories | 0–0.9 | 0.46 | 0%–10.47% | 5.11% | - |
| Bass | 0–0.9 | 0.46 | 0%–10.0% | 5.13% | 0.53 |
| Blackfish | 0–0.9 | 0.48 | 0%–10.47% | 5.60% | 0.45 |
| Crucian carp back label | 0–0.9 | 0.45 | 0%–9.68% | 4.84% | 0.50 |
| Crucian carp tail mark | 0–0.9 | 0.44 | 0%–9.78% | 4.87% | 0.48 |
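The error columns reported in the measurement tables can be computed from paired automatic and manual measurements. The following is a minimal sketch under stated assumptions: the function name `error_summary` and the sample values are hypothetical, and the standard deviation is taken here as the sample standard deviation of the signed errors, which the tables do not specify.

```python
import statistics
from typing import Dict, Sequence


def error_summary(predicted_cm: Sequence[float],
                  manual_cm: Sequence[float]) -> Dict[str, object]:
    """Summarize measurement errors against manual reference values (in cm)."""
    if len(predicted_cm) != len(manual_cm) or len(manual_cm) < 2:
        raise ValueError("need two equally sized samples with at least 2 values")

    signed = [p - m for p, m in zip(predicted_cm, manual_cm)]
    abs_err = [abs(e) for e in signed]
    rel_err = [a / m for a, m in zip(abs_err, manual_cm)]  # fraction of manual value

    return {
        "abs_error_range_cm": (min(abs_err), max(abs_err)),
        "mean_abs_error_cm": statistics.fmean(abs_err),
        "rel_error_range": (min(rel_err), max(rel_err)),
        "mean_rel_error": statistics.fmean(rel_err),
        # Assumption: SD of signed errors; the source does not state the definition.
        "std_cm": statistics.stdev(signed),
    }


# Hypothetical body lengths in centimetres, not data from this study.
summary = error_summary([20.5, 24.0, 30.0], [20.0, 25.0, 30.0])
print(f"MAE = {summary['mean_abs_error_cm']:.2f} cm, "
      f"mean relative error = {summary['mean_rel_error']:.2%}")
```

Reporting both absolute and relative error matters because the same absolute error is a larger fraction of a small dimension than of a large one.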

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, J.; Zhang, S.; Li, P.; Dai, Y.; Wu, Z. Research on Measuring the Bodies of Underwater Fish with Inclined Positions Using the YOLOv8 Model and a Line-Laser System. Fishes 2024, 9, 206. https://doi.org/10.3390/fishes9060206
