Built Environment Evaluation in Virtual Reality Environments—A Cognitive Neuroscience Approach

Abstract

1. Introduction

2. Current Research and Needs

2.1. The Cognitive Neuroscience Approach and Selection of Electroencephalography (EEG)

2.2. Use of EEG in the Study of the Built Environment

2.3. The Need for Virtual Reality for the Design and Research of the Built Environment

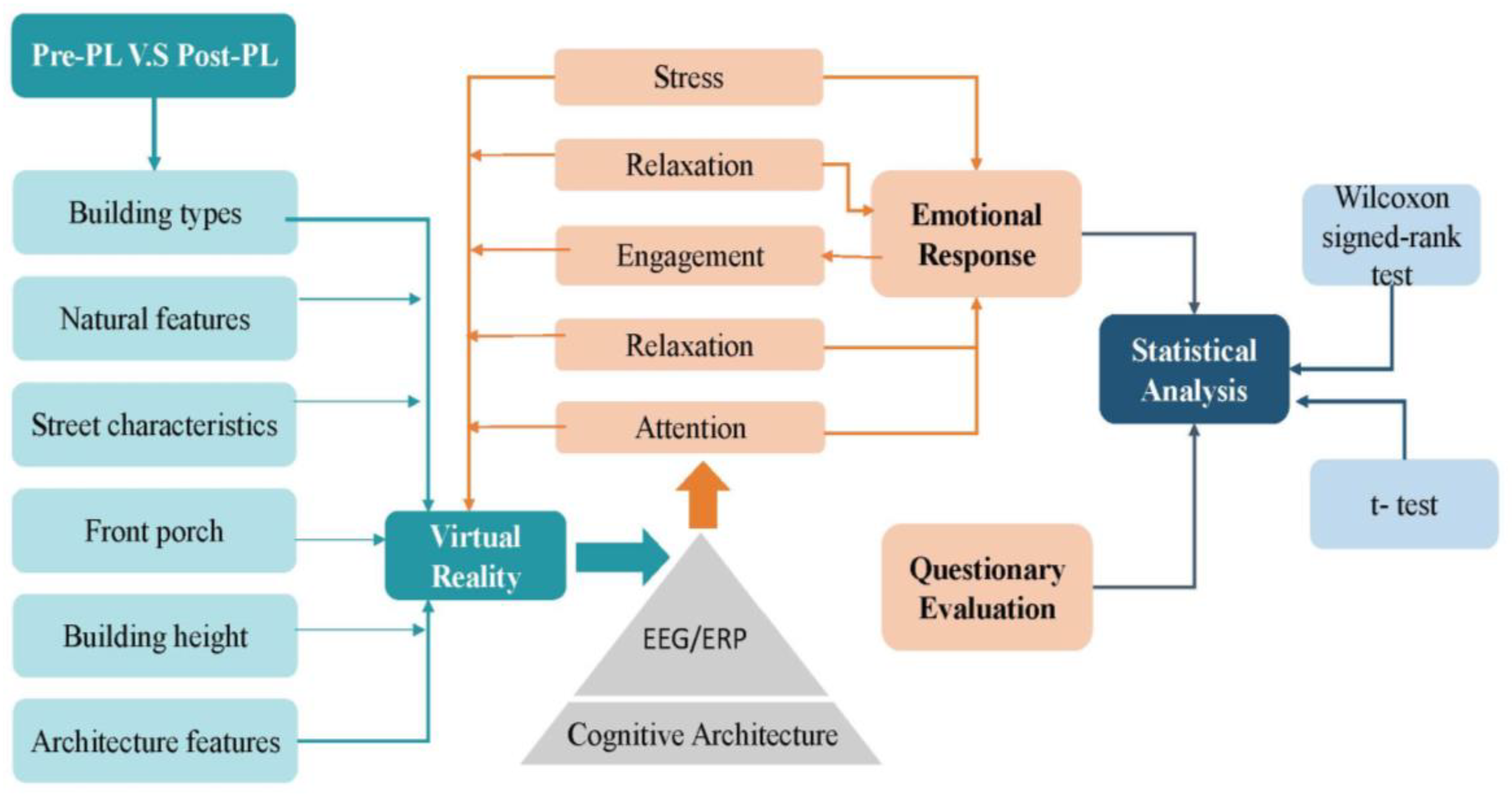

3. Methodology and Materials: Sample, Experiment, and Setup

3.1. Neuroscience Framework: ERPs and Cognition Architecture (CA)

3.2. Sample Size

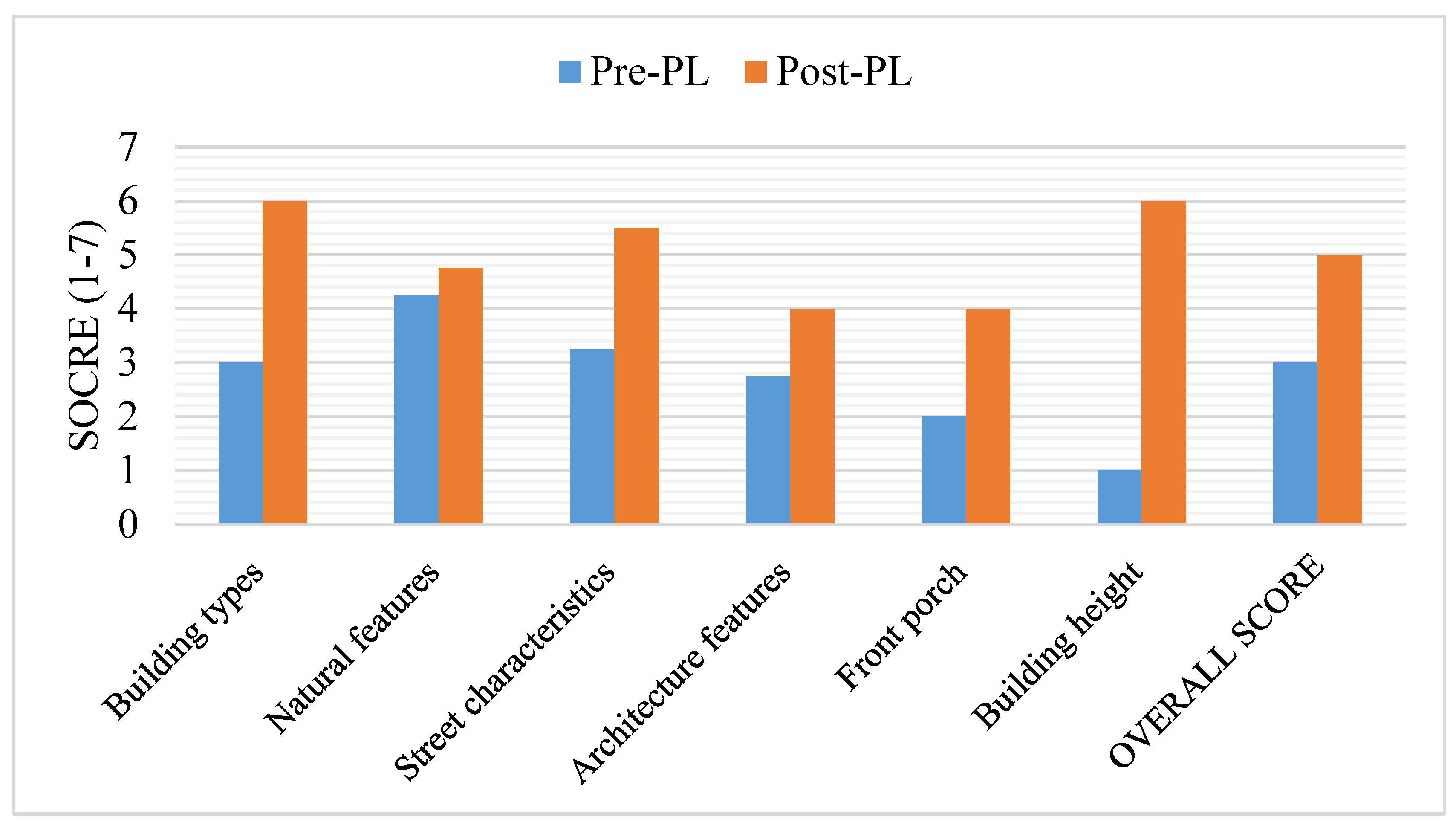

3.3. Pre-PL Data Collection

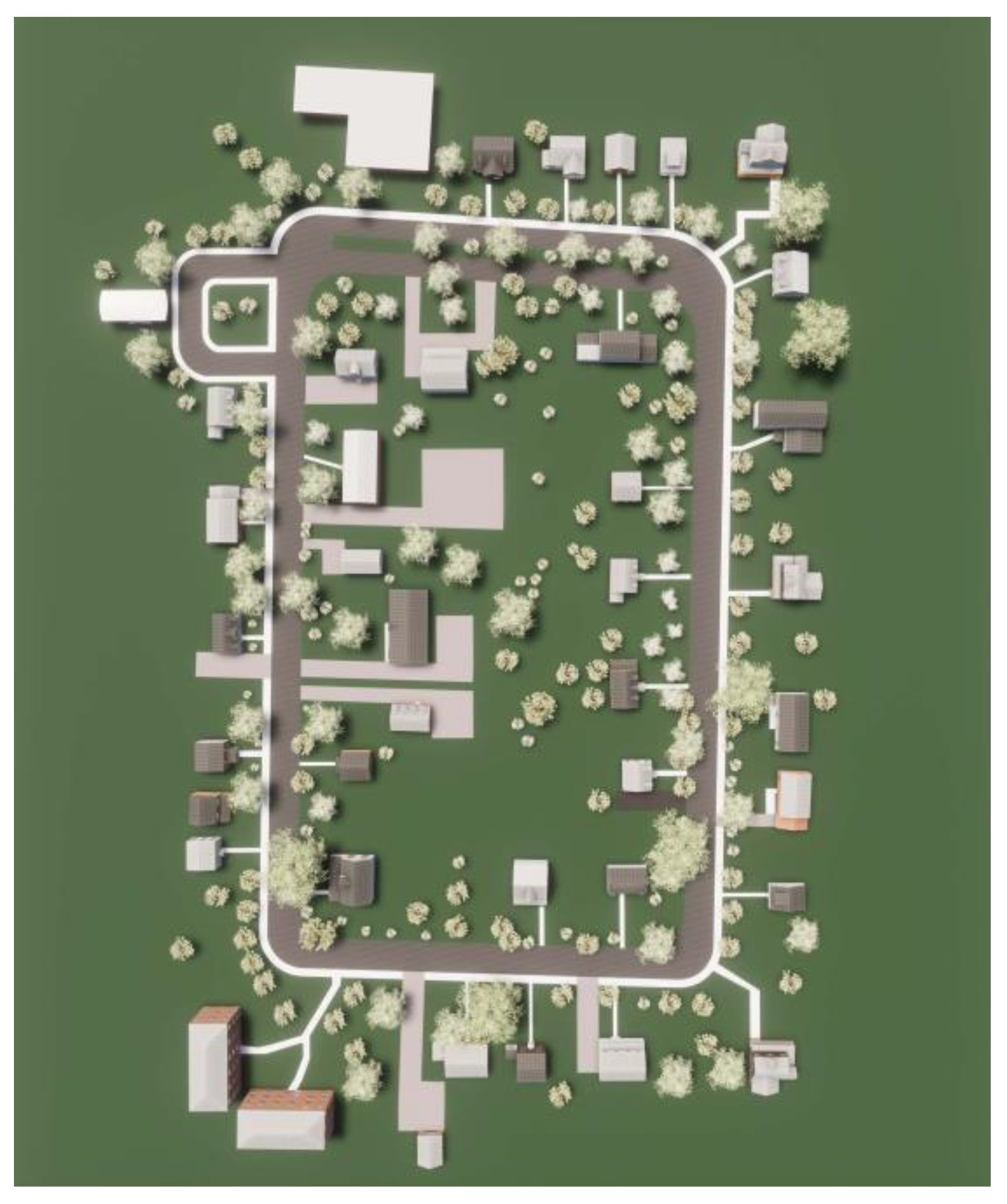

3.4. Pre-PL Scenario Reconstruction and Post-PL Scenario Creation in Virtual Reality (VR)

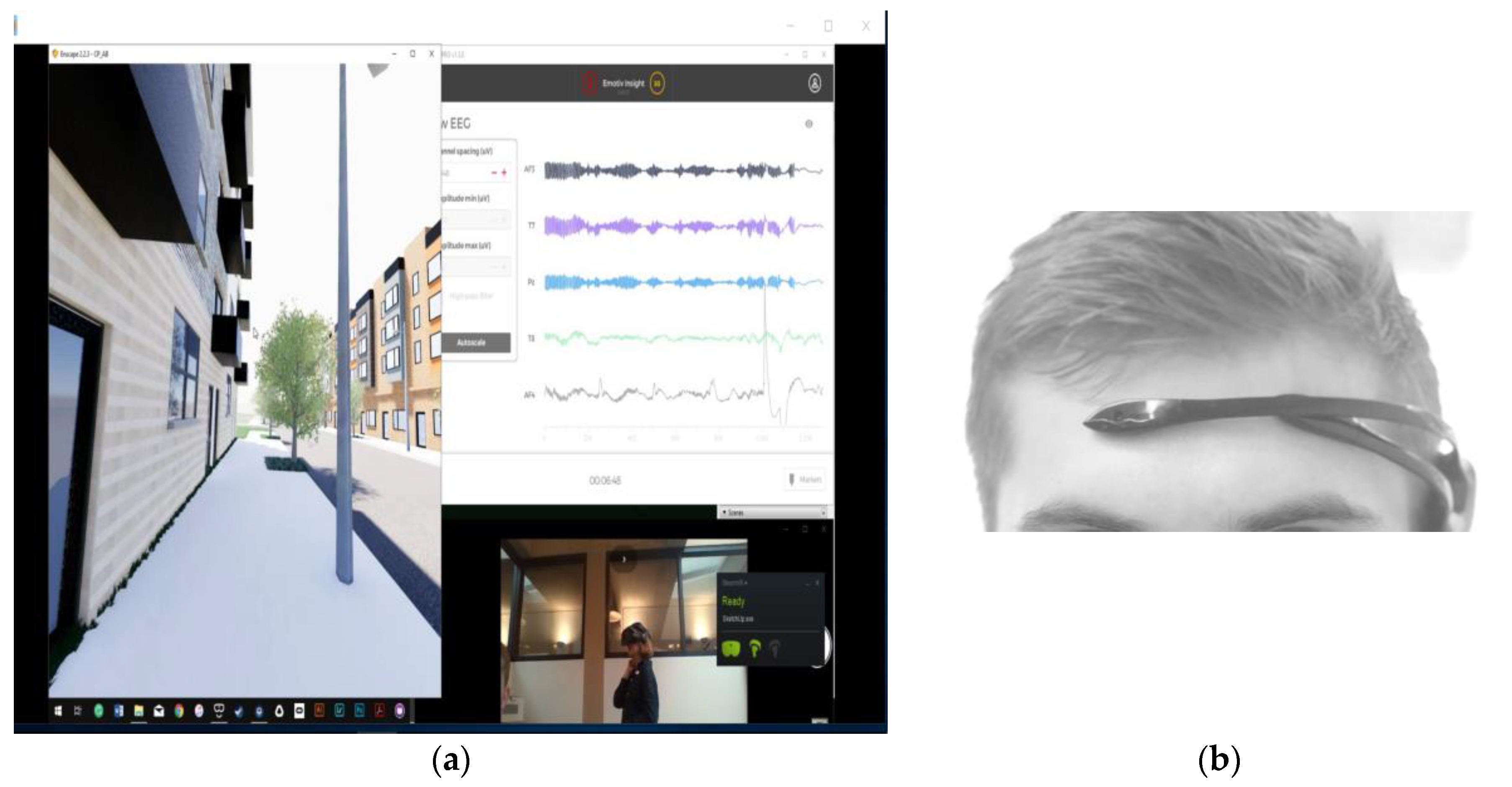

3.5. VR Headset and Mobile Electroencephalograph (Mobile EEG) Setup

3.6. Subjective Evaluation

4. Data Analysis and Findings

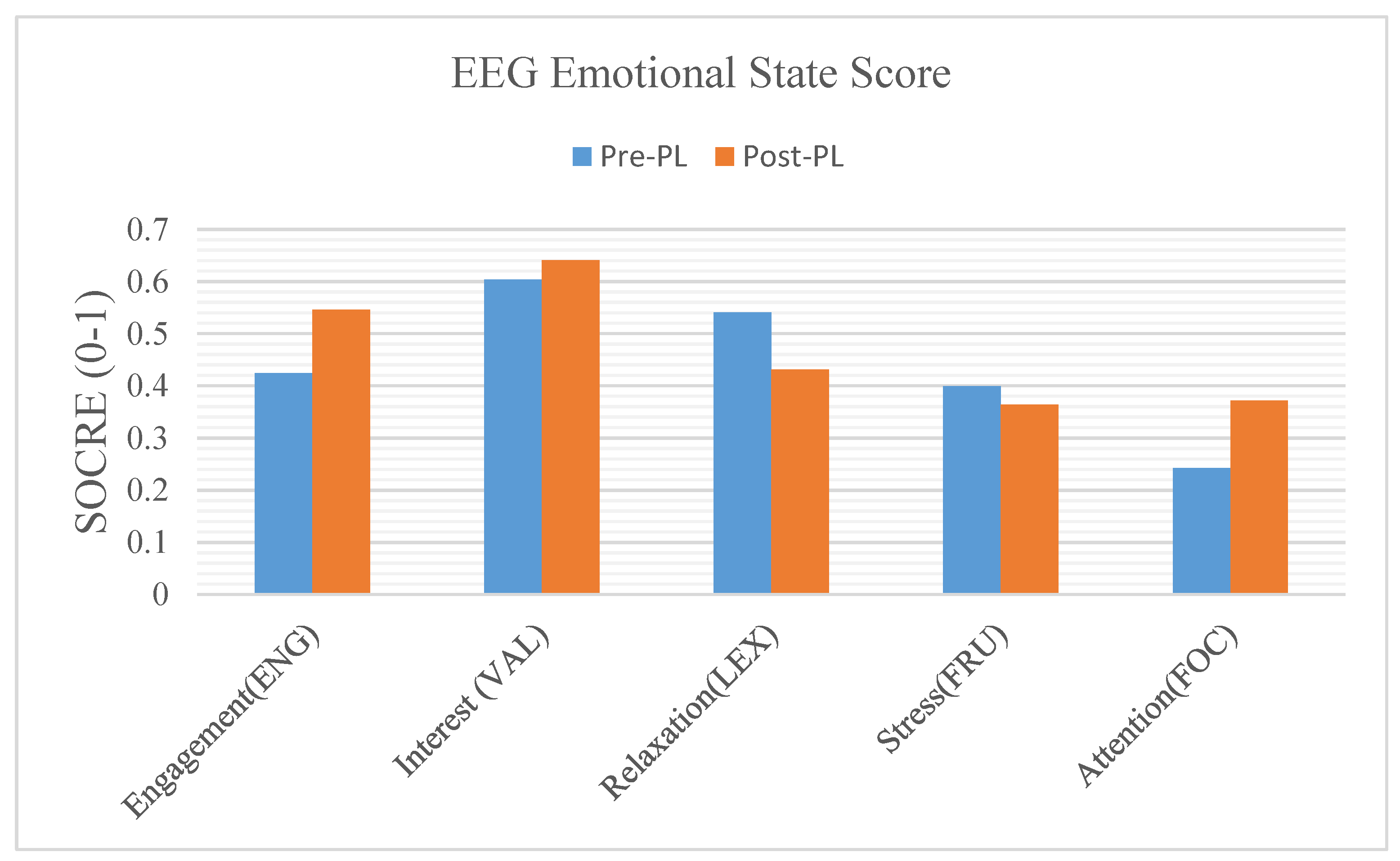

4.1. Questionnaires and EEG Results

4.2. Statistical Analysis: Wilcoxon Signed-Rank Test

4.3. Statistical Analysis: T-Test

5. Discussion

5.1. Emotion as Latent Attributes

5.2. Limitations

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest


| Time Step | Pre-PL | Post-PL | Difference | Sign | Abs. Difference | Rank | Signed Rank | α = 0.05 |
|---|---|---|---|---|---|---|---|---|
| Response 1 | 0.760 | 0.047 | 0.71 | 1 | 0.71 | 8 | 8 | |
| Response 2 | 0.377 | 0.636 | −0.26 | −1 | 0.26 | 5 | −5 | |
| Response 3 | 0.725 | 0.657 | 0.07 | 1 | 0.07 | 1 | 1 | |
| Response 4 | 0.871 | 0.472 | 0.40 | 1 | 0.40 | 6 | 6 | |
| Response 5 | 0.886 | 0.418 | 0.47 | 1 | 0.47 | 7 | 7 | |
| Response 6 | 0.441 | 0.650 | −0.21 | −1 | 0.21 | 4 | −4 | |
| Response 7 | 0.336 | 0.169 | 0.17 | 1 | 0.17 | 2 | 2 | |
| Response 8 | 0.323 | 0.148 | 0.18 | 1 | 0.18 | 3 | 3 | |
| Positive sum | | | | | | | 27 | |
| Negative sum | | | | | | | −9 | |
| Test statistic (W) | | | | | | | 27 | |
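The signed ranks and rank sums in the table can be re-derived from the Pre-PL and Post-PL columns alone. The Python sketch below is a minimal, hypothetical illustration, not the authors' analysis code: the function names `signed_ranks` and `exact_two_sided_p` are our own, and it assumes a two-sided test with no zero differences and no ties among the absolute differences (both conditions hold for these eight rows). It reports W as the positive rank sum, matching the table; many textbooks and packages instead report min(W+, |W−|) = 9, which leads to the same exact p-value.

```python
from itertools import product

# Pre-PL and post-PL values for Responses 1-8, copied from the table above.
PRE = [0.760, 0.377, 0.725, 0.871, 0.886, 0.441, 0.336, 0.323]
POST = [0.047, 0.636, 0.657, 0.472, 0.418, 0.650, 0.169, 0.148]


def signed_ranks(pre, post):
    """Return the signed rank of each paired difference (pre - post).

    Assumption: no zero differences and no ties in |difference|, which
    holds for the table's eight pairs. A general implementation would
    drop zero differences and give tied values their average rank.
    """
    diffs = [a - b for a, b in zip(pre, post)]
    # Indices ordered from smallest to largest absolute difference.
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0] * len(diffs)
    for rank, i in enumerate(order, start=1):
        # Attach the sign of the original difference to its rank.
        ranks[i] = rank if diffs[i] > 0 else -rank
    return ranks


def exact_two_sided_p(n, w_plus):
    """Exact two-sided p-value for the positive rank sum W+.

    Enumerates all 2**n equally likely sign assignments of ranks 1..n
    under the null hypothesis and counts those at least as extreme as
    the observed W+ in either tail.
    """
    total = n * (n + 1) // 2
    w_low = min(w_plus, total - w_plus)
    extreme = 0
    for signs in product((0, 1), repeat=n):
        w = sum(r for r, s in zip(range(1, n + 1), signs) if s)
        if w <= w_low or w >= total - w_low:
            extreme += 1
    return extreme / 2 ** n


ranks = signed_ranks(PRE, POST)
w_plus = sum(r for r in ranks if r > 0)
w_minus = sum(r for r in ranks if r < 0)
print(ranks)                                  # [8, -5, 1, 6, 7, -4, 2, 3]
print(w_plus, w_minus)                        # 27 -9
print(exact_two_sided_p(len(ranks), w_plus))  # 0.25
```

Enumerating all 2^8 = 256 sign patterns is cheap at this sample size and avoids the normal approximation, which is less accurate for n = 8. Under the stated assumptions, the exact two-sided p-value for these eight pairs is 0.25, which is above α = 0.05. For larger samples, a library routine such as `scipy.stats.wilcoxon` would be the usual choice.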

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hu, M.; Roberts, J. Built Environment Evaluation in Virtual Reality Environments—A Cognitive Neuroscience Approach. Urban Sci. 2020, 4, 48. https://doi.org/10.3390/urbansci4040048
