Frac-Vector: Better Category Representation

Abstract

1. Introduction

2. Related Work

3. Method

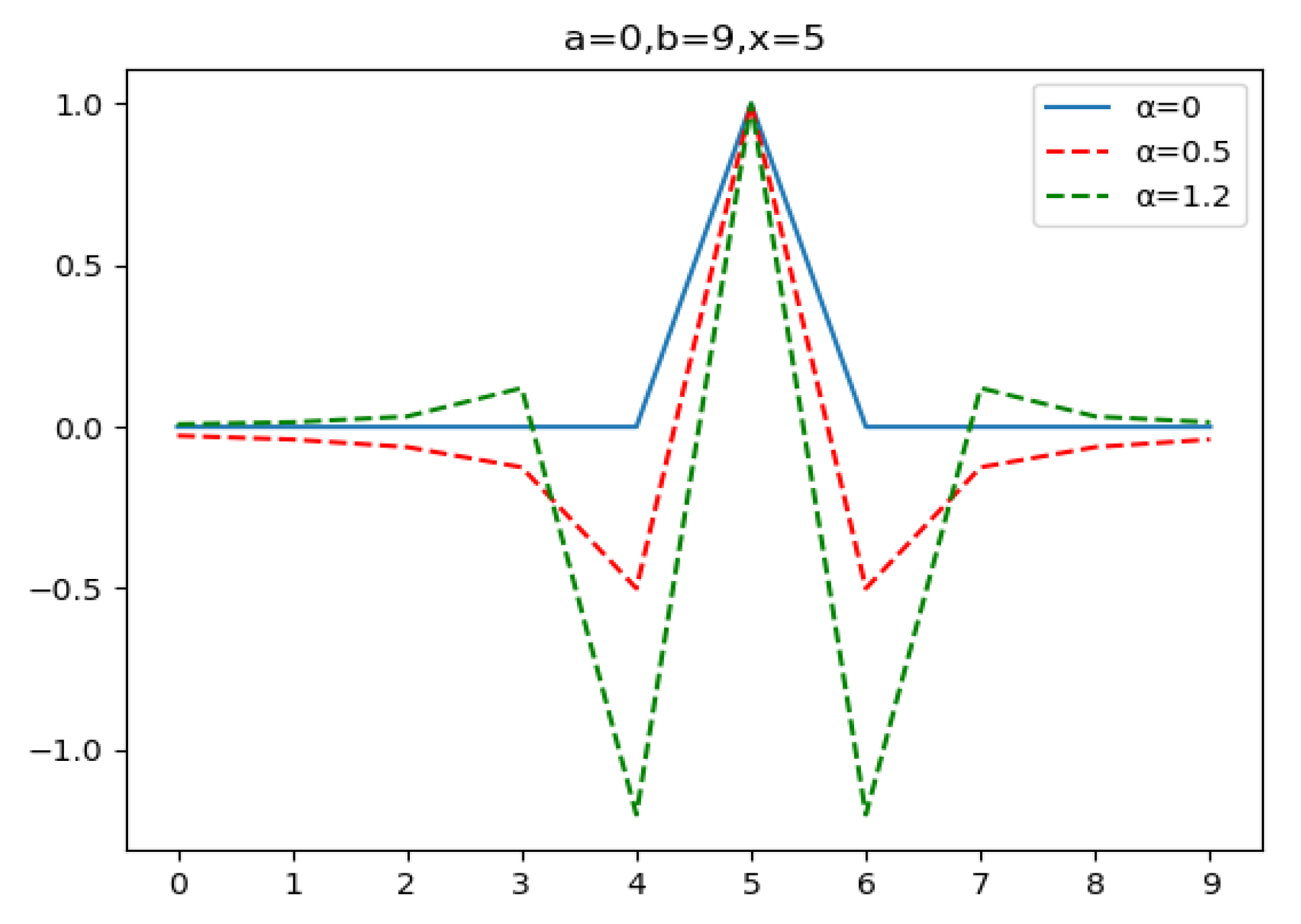

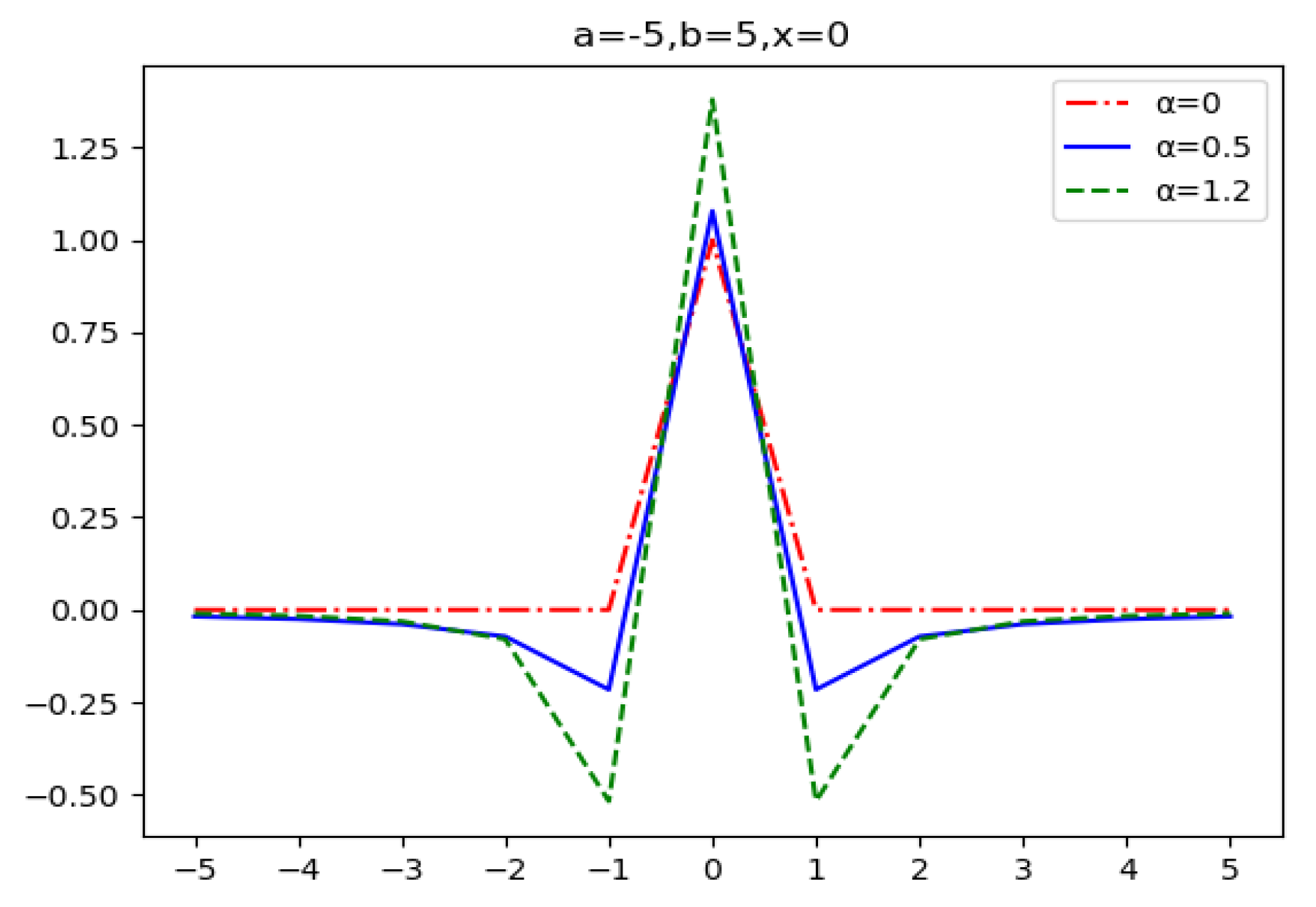

3.1. About Grünwald–Letnikov Fractional-Order Derivative
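
For reference, a commonly used form of the Grünwald–Letnikov derivative of order $\alpha$ on an interval $[a, x]$ is sketched below. The symbols $h$ (step size), $a$ (lower terminal), and $w_k^{(\alpha)}$ (coefficient weights) are notation assumed here for illustration and may differ from the paper's own.

$$
{}^{GL}_{a}D^{\alpha}_{x} f(x) = \lim_{h \to 0} \frac{1}{h^{\alpha}} \sum_{k=0}^{\lfloor (x-a)/h \rfloor} w_k^{(\alpha)} \, f(x - kh),
\qquad
w_k^{(\alpha)} = (-1)^k \binom{\alpha}{k}
$$

The weights satisfy the recurrence

$$
w_0^{(\alpha)} = 1, \qquad w_k^{(\alpha)} = \left(1 - \frac{\alpha + 1}{k}\right) w_{k-1}^{(\alpha)}, \quad k \ge 1,
$$

which is the same update that appears in the `lateral_index` listing of Algorithm 1.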

3.2. From Coefficient Vectors to Category Representation Vectors

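Algorithm 1: Code for the sequential calculation method used to design FVs

    def lateral_index(index, alpha):
        # Return the first `index` coefficients for fractional order `alpha`.
        x = []
        for i in range(index):
            if i == 0:
                tmp = 1  # the first coefficient is always 1
            else:
                # each coefficient follows from the previous one
                tmp = (1 - (alpha + 1) / i) * tmp
            x.append(tmp)
        return x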
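
The recurrence in Algorithm 1 has the same form as the standard recursion for the Grünwald–Letnikov weights $(-1)^k \binom{\alpha}{k}$. The following minimal sketch checks that numerically. The helper `gl_coefficient` and the order `alpha = 0.5` are assumptions introduced here for illustration; they are not taken from the paper.

```python
import math


def lateral_index(index, alpha):
    """Return the first `index` coefficients from the Algorithm 1 recurrence."""
    x = []
    tmp = 1.0
    for i in range(index):
        if i > 0:
            tmp = (1 - (alpha + 1) / i) * tmp
        x.append(tmp)
    return x


def gl_coefficient(k, alpha):
    """Hypothetical helper: closed form (-1)**k * binom(alpha, k)."""
    binom = 1.0
    for j in range(1, k + 1):
        # generalized binomial coefficient built up as a running product
        binom *= (alpha - j + 1) / j
    return (-1) ** k * binom


alpha = 0.5  # illustrative order, not a value from the paper
coeffs = lateral_index(5, alpha)
closed = [gl_coefficient(k, alpha) for k in range(5)]

# The recurrence and the closed form should agree term by term.
assert all(math.isclose(a, b, abs_tol=1e-12) for a, b in zip(coeffs, closed))
print(coeffs)  # → [1.0, -0.5, -0.125, -0.0625, -0.0390625]
```

With `alpha = 1.0` the same function returns `1, -1, 0, 0, ...`, which is the ordinary first-order backward difference. How these coefficients are then turned into the category representation vectors is not shown in this sketch.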

4. Experiments

4.1. CIFAR-10

4.2. CIFAR-100

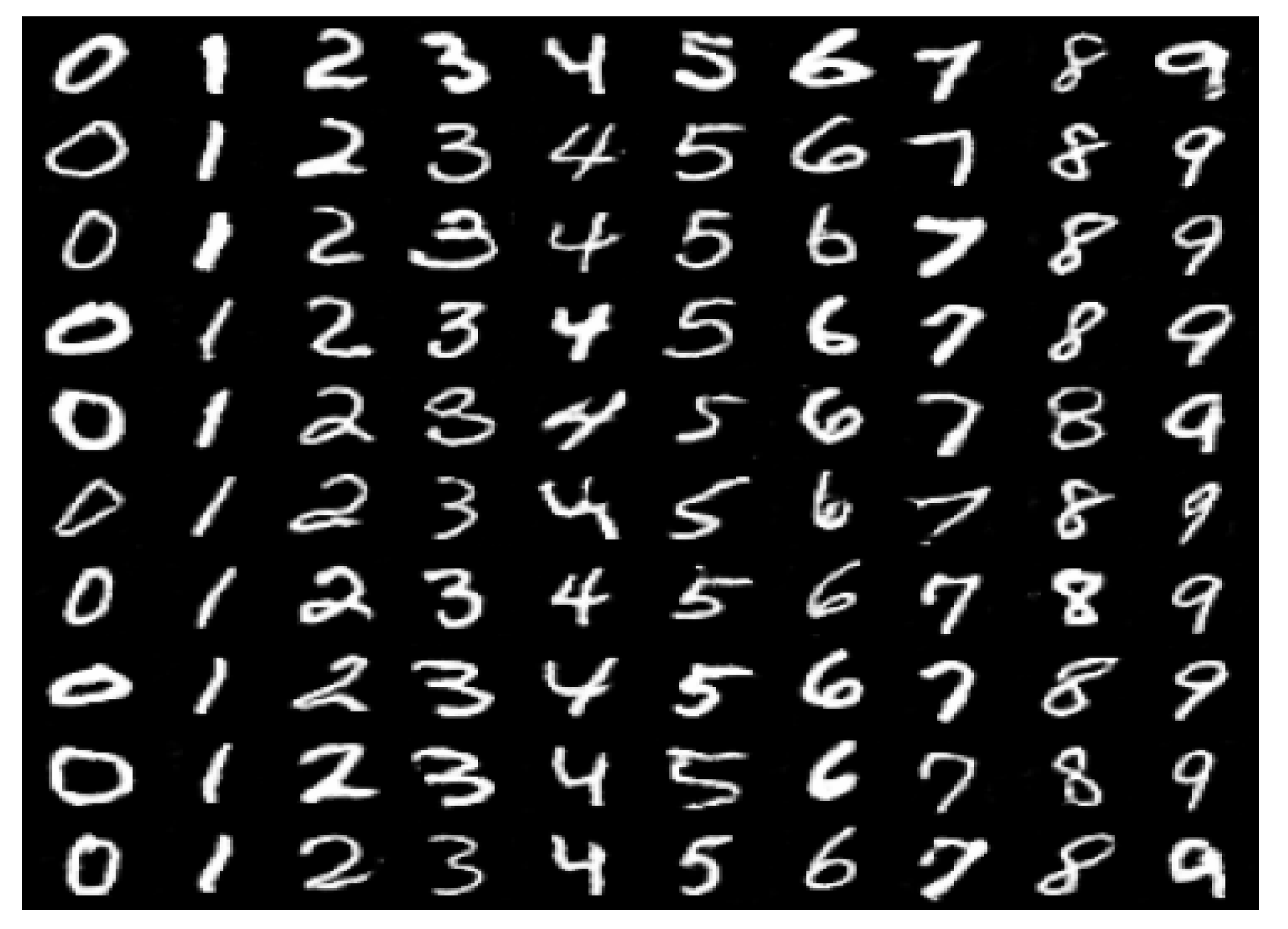

4.3. MNIST for InfoGAN

5. Discussion and Future Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | −0.6644 | 0.0283 | 0.0182 | 0.0129 | 0.0096 | 0.0074 | 0.0057 | 0.0039 | 0.0000 |
| 1 | 1 | −0.5636 | 0.0461 | 0.0292 | 0.0205 | 0.0152 | 0.0116 | 0.0086 | 0.0039 | |
| 2 | 1 | −0.5552 | 0.0488 | 0.0310 | 0.0217 | 0.0160 | 0.0116 | 0.0057 | | |
| 3 | 1 | −0.5530 | 0.0496 | 0.0314 | 0.0217 | 0.0152 | 0.0074 | | | |
| 4 | 1 | −0.5525 | 0.0496 | 0.0310 | 0.0205 | 0.0096 | | | | |
| 5 | 1 | −0.5530 | 0.0488 | 0.0292 | 0.0129 | | | | | |
| 6 | 1 | −0.5552 | 0.0461 | 0.0182 | | | | | | |
| 7 | 1 | −0.5636 | 0.0283 | | | | | | | |
| 8 | 1 | −0.6644 | | | | | | | | |
| 9 | 1 | | | | | | | | | |

| Plus | Train_acc | Test_acc | Best_acc | Top5_acc |
|---|---|---|---|---|
| ResNet18 | 1.0 | 0.1439 | 0.1500 | 0.5359 |
| Label Smoothing | 1.0 | 0.4030 | 0.4100 | 0.8282 |
| FVs | 0.9990 | 0.8648 | 0.9050 | 0.9451 |
| mix-up | 0.7577 | 0.5194 | 0.5600 | 0.9256 |
| FVs+mix-up | 0.8137 | 0.9520 | 0.9559 | 0.9888 |
| ResNet101 | 0.9983 | 0.9441 | 0.9557 | 0.9986 |
| FVs | 0.9236 | 0.8838 | 0.8883 | 0.9697 |
| FVs+mix-up | 0.9987 | 0.9450 | 0.9450 | 0.9871 |

| Plus | Train_acc | Top1_acc | Best_acc | Top5_acc |
|---|---|---|---|---|
| DenseNet | 0.9711 | 0.5769 | 0.6328 | 0.8290 |
| Label Smoothing | 0.9777 | 0.5786 | 0.6172 | 0.8255 |
| FVs | 0.7777 | 0.5833 | 0.6719 | 0.7058 |
| mix-up | 0.5717 | 0.5543 | 0.6442 | 0.6833 |
| DenseNet121 | 0.7124 | 0.5764 | 0.6328 | 0.6847 |
| FVs | 0.9930 | 0.6283 | 0.7344 | 0.7530 |
| mix-up | 0.6247 | 0.5897 | 0.6014 | 0.6731 |
| FVs+mix-up | 0.6912 | 0.6628 | 0.7412 | 0.8216 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tan, S.; Pu, Y. Frac-Vector: Better Category Representation. Fractal Fract. 2023, 7, 132. https://doi.org/10.3390/fractalfract7020132
