Abstract

Using a Donsker-type construction, we prove the existence of a new class of processes, which we call the self-stabilizing processes. These processes have a particular property: the “local intensities of jumps” vary with the values. Moreover, we also show that the self-stabilizing processes have many other good properties, such as stochastic Hölder continuity and strong localizability. Such a self-stabilizing process is simultaneously a Markov process, a martingale (when the local index of stability is greater than 1), a self-scaling process and a self-regulating process.

1. Introduction

Stable Lévy motions owe their importance in both theory and practice, among other factors, to their use in modeling price fluctuations. The apparent departure from normality, along with the demand for a self-similar model for financial data (i.e., one in which the shape of the distribution of yearly asset price changes resembles that of the constituent daily or monthly price changes), led Benoît Mandelbrot to propose that cotton prices follow an $\alpha$-stable Lévy motion with $\alpha$ equal to $1.7$. The high variability of stable Lévy motions means that they are much more likely to take values far away from the median, and this is one of the reasons why they play an important role in modeling. Stable Lévy motions have been used to model such diverse phenomena as gravitational fields of stars, temperature distributions in nuclear reactors and stresses in crystalline lattices, as well as stock market prices, gold prices and other financial data.

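Recall that a stochastic process $\{L(t), t \geq 0\}$ is called a (standard) $\alpha$-stable Lévy motion if the following three conditions hold:

- (C1) $L(0) = 0$ almost surely;
- (C2) $L$ has independent increments;
- (C3) $L(t) - L(s) \sim S_\alpha\big((t-s)^{1/\alpha}, \beta, 0\big)$ for any $0 \leq s < t < \infty$ and for some $0 < \alpha \leq 2$, $-1 \leq \beta \leq 1$.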

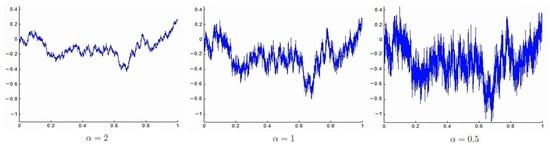

Here, $S_\alpha(\sigma, \beta, \mu)$ stands for a stable random variable with index of stability $\alpha$, scale parameter $\sigma$, skewness parameter $\beta$ and shift parameter $\mu$ equal to $0$. When $\beta = 0$, we denote $L$ as $L_\alpha$ for clarity. Such processes have stationary increments, and they are $1/\alpha$-self-similar (unless $\alpha = 1$ and $\beta \neq 0$); that is, for all $c > 0$, the processes $\{L(ct), t \geq 0\}$ and $\{c^{1/\alpha} L(t), t \geq 0\}$ have the same finite-dimensional distributions. An $\alpha$-stable Lévy motion is symmetric when $\beta = 0$. Recall that $\alpha$ governs the intensity of jumps: when $\alpha$ is small, the intensity of jumps is large; conversely, when $\alpha$ is large, the intensity of jumps is small; see Figure 1.

Figure 1. The figure exhibits how $\alpha$ governs the intensity of jumps. When $\alpha$ is small, the intensity of jumps is large. Conversely, when $\alpha$ is large, the intensity of jumps is small.
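The role of $\alpha$ can be explored numerically. The following Python sketch is only an illustration and is not part of the original construction: it builds a path of a standard symmetric $\alpha$-stable Lévy motion $L_\alpha$ on the grid $k/n$ directly from conditions (C1) to (C3), drawing each increment from $S_\alpha(n^{-1/\alpha}, 0, 0)$. The sampler is the Chambers–Mallows–Stuck method for $\beta = 0$; the function names `sym_stable` and `stable_levy_path` are hypothetical.

```python
import math
import random


def sym_stable(alpha: float, rng: random.Random) -> float:
    """One draw from S_alpha(1, 0, 0) via the Chambers-Mallows-Stuck method (beta = 0)."""
    v = rng.uniform(-math.pi / 2, math.pi / 2)  # V ~ Uniform(-pi/2, pi/2)
    w = rng.expovariate(1.0)                    # W ~ Exp(1), independent of V
    if alpha == 1.0:
        return math.tan(v)                      # standard Cauchy case
    return (math.sin(alpha * v) / math.cos(v) ** (1.0 / alpha)
            * (math.cos((1.0 - alpha) * v) / w) ** ((1.0 - alpha) / alpha))


def stable_levy_path(alpha: float, n: int, rng: random.Random) -> list[float]:
    """Values L(k/n), k = 0..n, of a standard symmetric alpha-stable Levy motion."""
    # (C3): L(k/n) - L((k-1)/n) ~ S_alpha(n^(-1/alpha), 0, 0), obtained by rescaling a unit draw
    step_scale = (1.0 / n) ** (1.0 / alpha)
    path = [0.0]                                # (C1): L(0) = 0
    for _ in range(n):
        # (C2): a fresh, independent draw for every increment
        path.append(path[-1] + step_scale * sym_stable(alpha, rng))
    return path
```

For instance, plotting `stable_levy_path(0.8, 1000, random.Random(0))` next to `stable_levy_path(1.8, 1000, random.Random(0))` should display the contrast described in Figure 1, with much more pronounced jumps for the smaller index.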


However, the stationarity of their increments restricts the use of stable Lévy motions in some situations, and generalizations are needed, for instance, to model real-world phenomena such as annual temperature (rainfall, wind speed), epileptic episodes in EEG, stock market prices over long periods and daily internet traffic. A significant feature of these cases is that the “local intensity of jumps” varies with time $t$; that is, $\alpha$ varies with time $t$.

One way to deal with such a variation is to set up a class of processes whose stability index is a function $\alpha(t)$ of $t$. More precisely, one aims at defining processes with non-stationary increments that are, at each time $t$, “tangent” (in a sense explained below) to a stable process with stability index $\alpha(t)$. Such processes are called multistable Lévy motions (MsLM); they are extensions of stable Lévy motions with non-stationary increments.

Formally, one says that a stochastic process $\{X(t), t \in \mathbb{R}\}$ is multistable if, for almost all $t$, $X$ is localizable at $t$ with tangent process $X'_t$ an $\alpha(t)$-stable process, $\alpha(t) \in (0, 2)$. Recall that $X$ is said to be $h$-localizable at $t$ (cf. Falconer [,]), with $h > 0$, if there exists a non-trivial process $X'_t$, called the tangent process of $X$ at $t$, such that

$$\lim_{r \searrow 0} \frac{X(t + ru) - X(t)}{r^{h}} = X'_t(u), \qquad (1)$$

where convergence is in finite-dimensional distributions. By (1), with $h = 1/\alpha(t)$, a multistable process is also a multifractional process (cf. [,] for such processes). Two such extensions exist []:

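1. The field-based MsLM admit the following series representation:

$$X(t) = C_{\alpha(t)}^{1/\alpha(t)} \sum_{(\mathsf{X}, \mathsf{Y}) \in \Pi} \mathbf{1}_{[0,t]}(\mathsf{X})\, \mathsf{Y}^{\langle -1/\alpha(t) \rangle}, \qquad (2)$$

where $\Pi$ is a Poisson point process on $\mathbb{R}^+ \times \mathbb{R}$ with the Lebesgue measure $\mathcal{L}$ as mean measure, $y^{\langle a \rangle} = \operatorname{sign}(y)\,|y|^{a}$, and

$$C_u = \left( \int_0^\infty x^{-u} \sin x \, dx \right)^{-1}. \qquad (3)$$

Their joint characteristic function is as follows:

$$\mathbb{E}\exp\Big(i \sum_{j=1}^{d} \theta_j X(t_j)\Big) = \exp\Big(-2 \int_{\mathbb{R}^+} \int_{\mathbb{R}} \sin^2\Big(\frac{1}{2} \sum_{j=1}^{d} \theta_j\, C_{\alpha(t_j)}^{1/\alpha(t_j)}\, \mathbf{1}_{[0,t_j]}(x)\, y^{\langle -1/\alpha(t_j) \rangle}\Big)\, dy\, dx\Big) \qquad (4)$$

for $d \in \mathbb{N}$, $(\theta_1, \ldots, \theta_d) \in \mathbb{R}^d$ and $(t_1, \ldots, t_d) \in (\mathbb{R}^+)^d$. These processes have correlated increments, and they are localizable as soon as the function $\alpha$ is Hölder continuous.

2. The independent-increments MsLM admit the following series representation:

$$Y(t) = \sum_{(\mathsf{X}, \mathsf{Y}) \in \Pi} \mathbf{1}_{[0,t]}(\mathsf{X})\, C_{\alpha(\mathsf{X})}^{1/\alpha(\mathsf{X})}\, \mathsf{Y}^{\langle -1/\alpha(\mathsf{X}) \rangle}. \qquad (5)$$

As their name indicates, they have independent increments, and their joint characteristic function is as follows:

$$\mathbb{E}\exp\Big(i \sum_{j=1}^{d} \theta_j Y(t_j)\Big) = \exp\Big(-\int \Big|\sum_{j=1}^{d} \theta_j \mathbf{1}_{[0,t_j]}(x)\Big|^{\alpha(x)} dx\Big) \qquad (6)$$

for $d \in \mathbb{N}$, $(\theta_1, \ldots, \theta_d) \in \mathbb{R}^d$ and $(t_1, \ldots, t_d) \in (\mathbb{R}^+)^d$. These processes are localizable as soon as the function $\alpha$ satisfies the following condition uniformly for all $x$ in a finite interval as $t \to 0$; see []:

$$|\alpha(x) - \alpha(x + t)| = o\big(1/|\log t|\big). \qquad (7)$$

In particular, independent-increments MsLM are, at each time $t$, “tangent” to a stable Lévy process with stability index $\alpha(t)$.

Of course, when $\alpha(t) = \alpha$ is a constant for all $t$, both $X$ and $Y$ are simply the Poisson representation of the $\alpha$-stable Lévy motion $L_\alpha$. In general, $X$ and $Y$ are semi-martingales. For more properties of $X$ and $Y$, such as Ferguson-Klass-LePage series representations, Hölder exponents, stochastic Hölder continuity, strong localizability, functional central limit theorem and the Hausdorff dimension of the range, we refer to [,,]. See also [,] for wavelet series representations of multifractional multistable processes.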
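The independent-increments case lends itself to a simple simulation. The Python sketch below is our own illustration and not the Poisson series method of []: it assumes a left-endpoint discretization in which the increment over $[(k-1)/n, k/n]$ is drawn from $S_{a}(n^{-1/a}, 0, 0)$ with $a = \alpha((k-1)/n)$. Since the increments are independent, $Y(1)$ then has characteristic function $\exp\big(-\frac{1}{n}\sum_k |\theta|^{\alpha((k-1)/n)}\big)$, a Riemann sum for the one-dimensional case $\exp\big(-\int_0^1 |\theta|^{\alpha(x)}\,dx\big)$ of the joint characteristic function of independent-increments MsLM. The names `sym_stable` and `ii_mslm_path` are hypothetical.

```python
import math
import random


def sym_stable(alpha: float, rng: random.Random) -> float:
    """One draw from S_alpha(1, 0, 0) via the Chambers-Mallows-Stuck method (beta = 0)."""
    v = rng.uniform(-math.pi / 2, math.pi / 2)
    w = rng.expovariate(1.0)
    if alpha == 1.0:
        return math.tan(v)
    return (math.sin(alpha * v) / math.cos(v) ** (1.0 / alpha)
            * (math.cos((1.0 - alpha) * v) / w) ** ((1.0 - alpha) / alpha))


def ii_mslm_path(alpha_fn, n: int, rng: random.Random) -> list[float]:
    """Approximate Y(k/n), k = 0..n, of an independent-increments MsLM on [0, 1].

    The stability index of each increment is frozen at the left end point
    (a hypothetical discretization chosen for illustration only).
    """
    path = [0.0]
    for k in range(1, n + 1):
        a = alpha_fn((k - 1) / n)             # local index alpha((k-1)/n)
        # independent increment with law S_a(n^(-1/a), 0, 0)
        path.append(path[-1] + (1.0 / n) ** (1.0 / a) * sym_stable(a, rng))
    return path
```

With, say, `alpha_fn = lambda x: 1.2 + 0.6 * x`, the early part of the path should jump more violently than the late part, in line with the time-varying “local intensity of jumps”.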

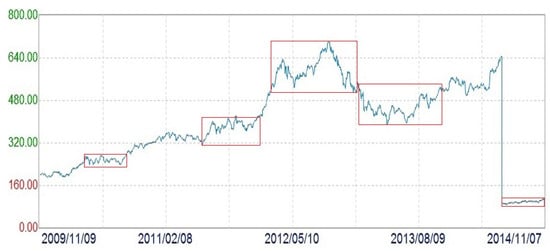

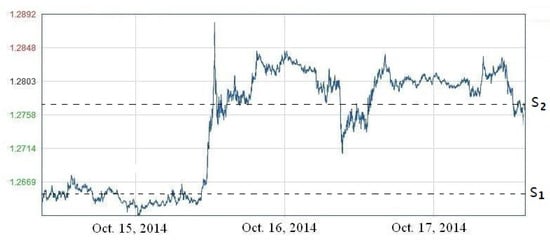

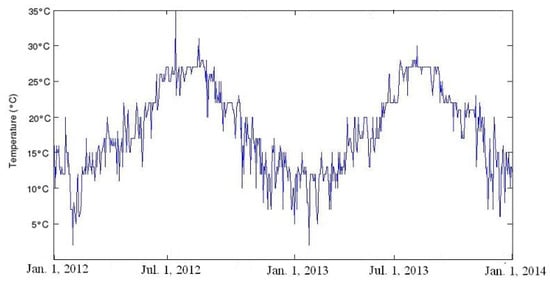

Similar to the MsLM, some stochastic processes have the property that the “local intensity of jumps” varies with the values of the process. For instance, when one analyzes records such as stock market prices (see Figure 2), exchange rates (see Figure 3) or annual temperature (see Figure 4), there seems to be a relation between the local value of the records, denoted by $S(t)$, and the local intensity of jumps, measured by the index of stability $\alpha(t)$.

Figure 2.

The figure exhibits the fluctuations in the stock market price of Apple; see the blue curve. We can see that the “local intensities of jumps” (i.e., of decreases or increases) vary with the price. When the price is low, the corresponding “local intensities of jumps” are small. Conversely, when the price is high, the corresponding local intensities of jumps are large.

Figure 3.

The figure exhibits the fluctuations in the exchange rate of the Euro against the US dollar. We can see that the local intensities of jumps vary with the values. When the exchange rate is small, the corresponding local intensity of jumps is small. Conversely, when the exchange rate is large, the corresponding local intensity of jumps is large. The local intensities of jumps vary with the level of values.

Figure 4.

Here is a graph of daytime temperatures in Nice, France. We find that the local intensities of jumps vary with the values. When the temperature is low, the corresponding local intensity of jumps is large. Conversely, when the temperature is high, the corresponding local intensity of jumps is small. When the temperatures are at the same level, the local intensities of jumps coincide.

This calls for the development of self-stabilizing models, i.e., a class of stochastic processes $S$ satisfying a functional equation of the form $\alpha(t) = g(S(t))$ almost surely for all $t$, where $g$ is a smooth deterministic function. All the information concerning the future evolution of $\alpha$ is then incorporated in $g$, which may be estimated from historical data under the assumption that the relation between $S$ and $\alpha$ does not vary in time. This class of models is in a sense analogous to local volatility models: instead of the local volatility depending on $S$, it is the local intensity of jumps that does so. This class of models is also in a sense analogous to MsLM: instead of depending on time $t$, the “local intensity of jumps” depends on the values of $S$.

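The main aim of this paper is to establish the self-stabilizing models, called the self-stabilizing processes (cf. Falconer and Lévy Véhel []), via a Donsker-type construction. Formally, one says that a stochastic process $\{S(t), t \geq 0\}$ is a self-stabilizing process if, for almost all $t$, $S$ is localizable at $t$ with tangent process a $g(S(t))$-stable process, with respect to the conditional probability measure $\mathbb{P}_{S(t)} = \mathbb{P}(\,\cdot \mid S(t))$. In formula, it holds

$$\lim_{r \searrow 0} \frac{S(t + ru) - S(t)}{r^{1/g(S(t))}} = L_{g(S(t))}(u), \qquad (8)$$

where convergence is in finite-dimensional distributions with respect to $\mathbb{P}_{S(t)}$. Formula (8) states that the “local intensity of jumps” varies with the values of $S(t)$, instead of time $t$. In particular, taking $u = 1$, equality (8) implies that

$$S(t + r) - S(t) \approx r^{1/g(S(t))}\, L_{g(S(t))}(1) \quad \text{in distribution under } \mathbb{P}_{S(t)},$$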

provided that r is small. Thus, it is natural to define where

which illustrates the use of our method to prove the existence of these self-stabilizing processes. The main difficulty associated with this method is to prove the weak convergence of the approximating sequence. To this end, we make use of the Arzelà–Ascoli theorem and a generalization of it.

The paper is organized as follows. In Section 2, we prove the existence of a self-stabilizing and self-scaling process. In Section 3, we show that it has several further properties, such as stochastic Hölder continuity and strong localizability. In particular, it is simultaneously a Markov process, a martingale (when the local index of stability is greater than 1) and a self-regulating process. Conclusions are presented in Section 4. In Appendix A, we give the Arzelà–Ascoli theorem and its generalization.

2. Existence of Self-Stabilizing and Self-Scaling Process

In this section, we make use of the general version of the Arzelà–Ascoli theorem to prove the existence of this self-stabilizing process. Moreover, our self-stabilizing process is also a self-scaling process. We call a random process self-scaling if the scale parameter is also a function of the value of the process.

Definition 1.

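We call the sequence $(f_n)_{n \geq 1}$ subequicontinuous on $I$ if for any $\varepsilon > 0$ there exist $\delta > 0$ and a sequence of nonnegative numbers $\varepsilon_n \to 0$ as $n \to \infty$ such that, for all functions $f_n$ in the sequence,

$$|f_n(x) - f_n(y)| \leq \varepsilon + \varepsilon_n$$

whenever $|x - y| < \delta$. In particular, if $\varepsilon_n = 0$ for all $n$, then $(f_n)$ is equicontinuous.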

The following lemma gives a general version of the Arzelà–Ascoli theorem, whose proof is given in Appendix A.

Lemma 1.

Assume that $(f_n)$ is a sequence of real-valued continuous functions defined on a closed and bounded set $I \subset \mathbb{R}^d$. If this sequence is uniformly bounded and subequicontinuous, then there exists a subsequence that converges uniformly.

We give an approximation for a self-stabilizing and self-scaling process via Markov processes. The main idea behind this method is that the unknown stability index and scale parameter of the process at point are replaced by the predictable values and , respectively. When it is obvious that in distribution, provided that g is a Hölder function and that almost surely. The same argument holds for
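The approximation described above, made precise in Step 1 of the proof below, can be mimicked numerically. The Python sketch is only our reading of the construction, since the defining equations of Step 1 are not reproduced here: we assume that the stability index and the scale are frozen at the current (predictable) value of the chain, so that $S_n(k/n) = S_n((k-1)/n) + \sigma(S_n((k-1)/n))\, n^{-1/g(S_n((k-1)/n))}\, X_k$, with $X_k$ a symmetric $g(S_n((k-1)/n))$-stable variable of unit scale drawn independently of the past. The names `self_stabilizing_chain` and `sym_stable` are hypothetical.

```python
import math
import random


def sym_stable(alpha: float, rng: random.Random) -> float:
    """One draw from S_alpha(1, 0, 0) via the Chambers-Mallows-Stuck method (beta = 0)."""
    v = rng.uniform(-math.pi / 2, math.pi / 2)
    w = rng.expovariate(1.0)
    if alpha == 1.0:
        return math.tan(v)
    return (math.sin(alpha * v) / math.cos(v) ** (1.0 / alpha)
            * (math.cos((1.0 - alpha) * v) / w) ** ((1.0 - alpha) / alpha))


def self_stabilizing_chain(g, sigma, n: int, rng: random.Random,
                           s0: float = 0.0) -> list[float]:
    """Markov chain S_n(k/n), k = 0..n (hypothetical reading of the Donsker-type step).

    The index g(s) and the scale sigma(s) are evaluated at the current value s
    of the chain before the next increment is drawn.
    """
    path = [s0]
    for _ in range(n):
        s = path[-1]
        a = g(s)                                  # local stability index at the current value
        step = sigma(s) * (1.0 / n) ** (1.0 / a) * sym_stable(a, rng)
        path.append(s + step)                     # next value depends only on s: Markov chain
    return path
```

For example, `self_stabilizing_chain(lambda x: 1.5 + 0.4 * math.tanh(x), lambda x: 1.0, 1000, random.Random(0))` uses a hypothetical index function with values in $(1.1, 1.9)$: high values of the chain then come with calmer moves and low values with wilder ones. With $g \equiv 2$ and $\sigma$ constant, the recursion reduces to a Gaussian random walk, in line with Donsker's construction.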

Theorem 1.

Let be a Hölder function defined on with values in the range .

Let be a positive Hölder function defined on and assume that lies within the range There exists a self-stabilizing and self-scaling process such that it is tangent at u to a stable Lévy process under the conditional expectation given as .

Proof.

The theorem can be proved in four steps.

Step 1. Donsker’s construction: For all and all set

where is a symmetric –stable random variable with unit scale parameter and is independent of the random variables for a given . Then, we define a sequence of partial sums In particular, when and , this method is known as Donsker’s construction (cf. Theorem 16.1 of Billingsley [] for instance). Define the processes , where

Then, for given n, is a Markov process. For simplicity of notation, denote this as

According to the construction of we have , and, for all

It is easy to see that, for all satisfying ,

where the last line follows from (10). In particular, it can be rewritten in the following form:

where as before. More generally, we have, for all and ,

where the last line follows from (12). Since is a Markov process, equality (13) also holds if is replaced by where and

Set

and set

for all and It is worth noting that the following estimation holds:

Recall and Then, we may assume that lies in the range By (11), it is easy to see that the following inequalities hold for all

and

Thus, for all

By a similar argument, we have, for all

The inequalities (14) and (15) can be rewritten in the following form: for all

Step 2. Subequicontinuity of : Denote by Next, we prove that for given the sequence is subequicontinuous on By Theorem A2 and (14), it is easy to see that is equicontinuous with respect to However, we can prove an even better result, namely that is Hölder equicontinuous of order with respect to By (14) and the Billingsley inequality (cf. p. 47 of []), it is easy to see that, for all with and all

Similarly, by (15), for all with and all

For all we have

and

The random variable is dominated by the constant 2. Therefore, (19) and (17) imply that

where C is a constant only depending on M and Thus, is Hölder equicontinuous of order with respect to Similarly, the inequalities (20) and (18) imply that

where C is a constant depending on and The last inequality implies that for all is Hölder subequicontinuous of order with respect to Notice that

Thus, the sequence is subequicontinuous on

Step 3. Convergence for a subsequence: Denote by For every given and , by Lemma 1, there exists a subsequence of and a function defined on such that uniformly on By induction, the following relation holds:

Hence, the diagonal subsequence converges to on Moreover, by (14) and (15), the following inequalities hold for all :

and, for all

By the Lévy continuity theorem, there exists a random process such that converges to in distribution for any as

Similarly, by (13) instead of by (11), we can prove that for all there exists a random process such that converges to in finite dimensional distribution, where is a subsequence of Letting by (13) and the dominated convergence theorem, we have, for all

Equality (23) also holds if is replaced by where and

Step 4. Self-stabilization of the limiting process: Next, we prove that S is a self-stabilizing process, that is, S is localizable at u to a –stable Lévy motion under the conditional expectation given as . For any and , from equality (23), it is easy to see that

Setting , we find that

From equality (23), by an argument similar to (14), we obtain, for all and all with ,

By an argument similar to that of (18), it follows that, for and ,

where C is a constant depending on and Since g and are both Hölder functions, by (25), we get

and

in probability with respect to Hence, using the dominated convergence theorem, we have

which means that S is localizable at u to a –stable Lévy motion under the conditional expectation given as , where is the standard symmetric –stable Lévy motion. This completes the proof of the theorem. □

Remark 1.

Let us comment on Theorem 1.

1. A slightly different method by which to approximate the self-stabilizing and self-scaling process S can be described as follows. Define

Now, let

By an argument similar to the proof of Theorem 1, there exists a subsequence of such that converges to the process S in finite dimensional distribution. Notice that with this method, all of the random variables are changed from to even if is a constant. By contrast, in the proof of Theorem 1, only one random variable is added from to when is a constant.

2. Notice that if we define for all then, by an argument similar to that of Theorem 1, we can define the self-stabilizing and self-scaling process on the whole positive half-line via the limit of

3. An interesting question is whether converges to in finite dimensional distribution. To answer this question, we use the following fact: if every subsequence of a sequence $(x_n)$ has a further subsequence that converges to $x$, then $x_n$ converges to $x$. According to the proof of Theorem 1, every subsequence of has a further subsequence that converges to S in finite dimensional distribution, where S is defined by (23). Thus, the question reduces to proving that (23) defines a unique process S.

3. Properties of the Self-Stabilizing and Self-Scaling Process

In this section, we consider the properties of the self-stabilizing and self-scaling process S established in the proof of Theorem 1. It seems that the process S shares many properties with the multistable Lévy motions (cf. Falconer and Liu []). Assume that lies in the range

3.1. Tail Probabilities

Notice that the estimation for the characteristic function of under the probability measure is given by (24). By an argument similar to that of (17) and (18), for all such that the following two inequalities hold for all :

and, for all

Thus, the following inequalities are obvious:

Property 1.

For with and all it holds

where C is a constant depending on M and In particular, it implies that, for all

For more precise bounds on tail probabilities, we have the following inequalities:

Property 2.

For with and all it holds

where

In particular, it implies that, for all

Proof.

Remark 2.

Let us comment on Property 2.

1. The order in Property 2 is the best possible. Indeed, assume and set where is defined by (3). By an argument similar to that of Ayache [], we can prove that Since the two functions and are bounded, is of the order as which represents the best possible order of convergence.

2. Property 2 indicates that if stock-market prices are self-stabilizing processes, then investments originating from points with larger stability indices are safer than those originating from points with smaller stability indices. In mathematical terms, if then we have for any and any Indeed, if then it is obvious that for any Otherwise, since S is continuous in probability (cf. Property 5) and is a Hölder function, there almost surely exists a point such that and for any Hence, it holds for all By the construction of it is obvious that Hence, for
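The tail behaviour behind Property 2 and Remark 2 is governed by the constant $C_\alpha$, which we take to be the one defined in (3). As a sanity check that is not part of the original argument, the Python sketch below evaluates $C_\alpha$ through the closed form $C_\alpha = (1-\alpha)/\big(\Gamma(2-\alpha)\cos(\pi\alpha/2)\big)$ for $\alpha \neq 1$ and $C_1 = 2/\pi$ (cf. Samorodnitsky and Taqqu []), and estimates $\mathbb{P}(|X| > x)$ by Monte Carlo for $X \sim S_\alpha(1, 0, 0)$, using the standard fact that $x^{\alpha}\,\mathbb{P}(|X| > x) \to C_\alpha$ as $x \to \infty$. The function names are hypothetical.

```python
import math
import random


def c_alpha(alpha: float) -> float:
    """C_alpha = (int_0^inf x^(-alpha) sin x dx)^(-1) in closed form, for 0 < alpha < 2."""
    if not 0.0 < alpha < 2.0:
        raise ValueError("the closed form used here requires 0 < alpha < 2")
    if alpha == 1.0:
        return 2.0 / math.pi
    return (1.0 - alpha) / (math.gamma(2.0 - alpha) * math.cos(math.pi * alpha / 2.0))


def sym_stable(alpha: float, rng: random.Random) -> float:
    """One draw from S_alpha(1, 0, 0) via the Chambers-Mallows-Stuck method (beta = 0)."""
    v = rng.uniform(-math.pi / 2, math.pi / 2)
    w = rng.expovariate(1.0)
    if alpha == 1.0:
        return math.tan(v)
    return (math.sin(alpha * v) / math.cos(v) ** (1.0 / alpha)
            * (math.cos((1.0 - alpha) * v) / w) ** ((1.0 - alpha) / alpha))


def empirical_tail(alpha: float, x: float, samples: int, rng: random.Random) -> float:
    """Monte Carlo estimate of P(|X| > x) for X ~ S_alpha(1, 0, 0)."""
    hits = sum(1 for _ in range(samples) if abs(sym_stable(alpha, rng)) > x)
    return hits / samples
```

Comparing `empirical_tail(alpha, x, 100000, random.Random(0))` with `c_alpha(alpha) * x ** -alpha` for moderately large `x` should show agreement up to Monte Carlo error and a lower-order correction; the smaller $\alpha$, the heavier the tail.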

3.2. Absolute Moments

The following property gives some sharp estimations for the absolute moments of the self-stabilizing and self-scaling process.

Property 3.

Let be the positive value such that

For all and all such that it holds

where C is a constant depending on a and In particular, it implies that

and

Proof.

By Property 2, it is easy to see that, for all and all such that

where C and are constants depending on a and □

When and is a constant, without loss of generality, let . Then, is a standard symmetric –stable Lévy motion. Thus, we have

and

where is defined by (3) and is a constant depending only on a (cf. Property 1.2.18 of Samorodnitsky and Taqqu []). Thus, the exponent in inequality (35) cannot be equal to or larger than
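The dichotomy above (finite absolute moments of order $p < \alpha$, infinite ones of order $p \geq \alpha$ when $\alpha < 2$) can be checked numerically. The Python sketch below is an illustration, not the constant appearing in (35): it assumes the known closed form $\mathbb{E}|X|^p = 2^p\,\Gamma\big(\tfrac{1+p}{2}\big)\,\Gamma\big(1 - \tfrac{p}{\alpha}\big) / \big(\sqrt{\pi}\,\Gamma\big(1 - \tfrac{p}{2}\big)\big)$ for $X \sim S_\alpha(1, 0, 0)$ and $0 < p < \alpha$, together with self-similarity, $L_\alpha(t) \overset{d}{=} t^{1/\alpha} L_\alpha(1)$, which gives $\mathbb{E}|L_\alpha(t)|^p = t^{p/\alpha}\,\mathbb{E}|X|^p$. Function names are hypothetical.

```python
import math
import random


def sym_stable(alpha: float, rng: random.Random) -> float:
    """One draw from S_alpha(1, 0, 0) via the Chambers-Mallows-Stuck method (beta = 0)."""
    v = rng.uniform(-math.pi / 2, math.pi / 2)
    w = rng.expovariate(1.0)
    if alpha == 1.0:
        return math.tan(v)
    return (math.sin(alpha * v) / math.cos(v) ** (1.0 / alpha)
            * (math.cos((1.0 - alpha) * v) / w) ** ((1.0 - alpha) / alpha))


def stable_abs_moment(alpha: float, p: float, t: float = 1.0) -> float:
    """E|L_alpha(t)|^p for a standard symmetric alpha-stable Levy motion, p > 0."""
    if p <= 0.0:
        raise ValueError("this sketch only handles p > 0")
    if alpha < 2.0 and p >= alpha:
        return math.inf                     # absolute moments of order >= alpha diverge
    if alpha == 2.0 and p >= 2.0:
        raise ValueError("Gaussian case with p >= 2 is not handled by this closed form")
    base = (2.0 ** p * math.gamma((1.0 + p) / 2.0) * math.gamma(1.0 - p / alpha)
            / (math.sqrt(math.pi) * math.gamma(1.0 - p / 2.0)))
    return t ** (p / alpha) * base          # self-similarity: L(t) has the law of t^(1/alpha) L(1)


def mc_abs_moment(alpha: float, p: float, samples: int, rng: random.Random) -> float:
    """Monte Carlo estimate of E|X|^p for X ~ S_alpha(1, 0, 0)."""
    return sum(abs(sym_stable(alpha, rng)) ** p for _ in range(samples)) / samples
```

For $\alpha = 1$ and $p = 1/2$ the closed form gives $\sqrt{2}$, and for $\alpha = 2$, $p = 1$ it gives $2/\sqrt{\pi}$, the first absolute moment of $N(0, 2)$.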

Property 4.

For all and all it holds

where is the gamma function. In particular, it implies that

Proof.

Notice that, for all and all

Recall that S is localizable at u to a –stable Lévy motion under the conditional expectation given . Thus

By Property 1, for x large enough,

Hence, by Lebesgue's dominated convergence theorem, we have

We refer to page 18 of Samorodnitsky and Taqqu [] for the last line. □

3.3. Stochastic Hölder Continuity

We say that a random process , u in an interval , is stochastic Hölder continuous of exponent if it holds

for a positive constant It is obvious that if is stochastic Hölder continuous of exponent then is stochastic Hölder continuous of exponent Stochastic Hölder continuity implies continuity in probability, that is, for any and it holds that

Example 1.

Assume that a random process satisfies the following condition: there exist three strictly positive constants such that

Then, is stochastic Hölder continuous of exponent Indeed, it is easy to see that, for

which implies our claim.

The following property shows that the process S is stochastic Hölder continuous.

Property 5.

For all and all , it holds

where C is a constant depending on and In particular, it implies that

which means S is stochastic Hölder continuous of exponent .

3.4. Markov Process

It is easy to see that in the proof of Theorem 1 is a Markov process. Thus, it is natural to guess that the self-stabilizing and self-scaling process is also a Markov process. To determine whether this is the case, we need the following theorem:

Theorem 2.

Assume that is a sequence of Markov processes. If converges to a process in finite dimensional distributions, then S is a Markov process.

Proof.

We only need to show that for any and it holds for any Borel set

or Notice that is a Markov process. Thus

For any two Borel sets and such that

by (43), it is easy to see that

which means □

Since there exists a subsequence of such that

in distribution. Thus, by Theorem 2, we have the following property.

Property 6.

The process is a Markov process.

3.5. Strong Localizability

In the proof of Theorem 1, we proved that S is localizable under certain conditional expectations. In the following property, we prove that S is strongly localizable under the conditional expectation given as

Let be the set of càdlàg functions on , that is, functions which are right-continuous and have left limits at all points, endowed with the Skorohod metric []. If X and have versions in and the convergence in (1) is in distribution with respect to , then X is said to be strongly localizable at t with strong local form .

Property 7.

The process is strongly localizable at u to a –stable Lévy motion under the conditional expectation given as .

Proof.

For any define

We only need to prove the tightness. By Theorem 15.6 of Billingsley [], it suffices to show that, for some and ,

for and , where C is a positive constant. Since is a Markov process with respect to x, is a Markov process with respect to x. Hence, it follows that

By the Billingsley inequality (cf. p. 47 of []), (29) and (30), we have

where and is a positive constant depending only on and By a similar argument, we have

Thus

Using the inequality we have

which gives inequality (44). □

3.6. Self-Regulating Process

The pointwise Hölder exponent at t of a stochastic process (or continuous function) is the number such that

A random process is called a self-regulating process if there exists a function such that, at each point t, almost surely,

Self-regulating processes were introduced by Barrière, Echelard and Lévy Véhel [], who constructed two self-regulating multifractional Brownian motions (srmBm) via fixed-point theory and random midpoint displacement, respectively.

From Property 7, we see that, for

in distribution with respect to ; thus, the following property is obvious.

Property 8.

The process is a self-regulating process.

3.7. Martingale

Since $L_\alpha$ is a martingale when $\alpha > 1$, it is natural to expect that the process S is also a martingale when its local index of stability is greater than 1. We prove this claim in the following property.

Property 9.

If , then the process is a martingale.

Proof.

By Property 3, it follows that, for any

where C is a constant depending on and We only need to verify that for any and it holds

By an argument similar to that of (23), we have, for all

Taking the derivative with respect to on both sides of the last equality, it follows that

Notice that Taking in equality (52), we obtain (51). □

4. Conclusions

Using a general version of the Arzelà–Ascoli theorem and a Donsker-type construction, we prove the existence of a self-stabilizing and self-scaling process. This type of process is localizable at t, with a $g(S(t))$-stable tangent process under the conditional probability given $S(t)$. Thus, the “local intensities of jumps” vary with the values of the process. Moreover, we also show that the self-stabilizing processes have several other properties, such as stochastic Hölder continuity and strong localizability. In particular, this type of self-stabilizing process is simultaneously a Markov process, a martingale (when the local index of stability is greater than 1), a self-scaling process and a self-regulating process.

Author Contributions

Conceptualization, J.L.V.; methodology, X.F.; writing—original draft preparation, X.F.; writing—review and editing, X.F. and J.L.V.; project administration, J.L.V.; funding acquisition, X.F. and J.L.V. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been partially supported by the Natural Science Foundation of Hebei Province (Grant No. A2025501005).

Data Availability Statement

Data available on request from the authors.

Conflicts of Interest

Author Jacques Lévy Véhel was employed by Case Law Analytics. The remaining author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Appendix A

In this appendix, we give sufficient conditions for a sequence of random vectors (random processes) to have a subsequence that converges in distribution (in finite dimensional distribution). The proofs of our theorems are based on the Arzelà–Ascoli theorem. The Arzelà–Ascoli theorem is a fundamental result of mathematical analysis and gives necessary and sufficient conditions to decide whether every sequence of a given family of real-valued continuous functions defined on a closed and bounded interval has a uniformly convergent subsequence. The main condition is the equicontinuity of the family of functions. A sequence $(f_n)$ of continuous functions defined on $I$ is equicontinuous with respect to $x$ if for every $\varepsilon > 0$ there exists $\delta > 0$ such that, for all functions $f_n$,

$$|f_n(x) - f_n(y)| < \varepsilon$$

whenever $|x - y| < \delta$. Succinctly, a sequence is equicontinuous if and only if all of its elements admit the same modulus of continuity. It is worth noting that $(f_n)$ is equicontinuous if and only if $(f_n)$ is equicontinuous with respect to every coordinate.

Recall that a sequence of functions $(f_n)$ defined on $I$ is uniformly bounded if there is a number $M$ such that

$$|f_n(x)| \leq M$$

for all $n$ and all $x \in I$.

The Arzelà–Ascoli theorem states that

Lemma A1

(The Arzelà–Ascoli theorem). Assume that $(f_n)$ is a sequence of real-valued continuous functions defined on a closed and bounded set $I \subset \mathbb{R}^d$. If this sequence is uniformly bounded and equicontinuous, then there exists a subsequence that converges uniformly.

Next, we give two examples in which the conditions of the Arzelà–Ascoli theorem are satisfied.

Example A1.

A sequence of uniformly bounded functions on a closed and bounded interval, with uniformly bounded derivatives.

Example A2.

A sequence $(f_n)$ of uniformly bounded functions on a closed and bounded interval $I$ satisfying a Hölder condition of order $\alpha$ with a fixed constant $M$:

$$|f_n(x) - f_n(y)| \leq M\,|x - y|^{\alpha}$$

for all $n$ and all $x, y \in I$.

Appendix A.1. Weak Convergence Theorems for Random Vectors

Using the Arzelà–Ascoli theorem and the Lévy continuity theorem, we obtain the following two sufficient conditions for a sequence of random vectors to have a subsequence that converges in distribution.

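Denote by $\langle x, y \rangle$ the scalar product of two vectors $x, y \in \mathbb{R}^d$, i.e.,

$$\langle x, y \rangle = \sum_{i=1}^{d} x_i y_i,$$

and by $|x|$ the magnitude of a vector $x \in \mathbb{R}^d$, i.e., $|x| = \sqrt{\langle x, x \rangle}$.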

Theorem A1.

Let be a sequence of real-valued random vectors with values in . Assume that

uniformly for all Denote by

the characteristic function of Then, is equicontinuous. Therefore, there exist a subsequence and a random vector X such that in distribution.

Proof.

We first give a proof for the case of For all it is easy to see that

Since

by Lebesgue’s dominated convergence theorem and (A1), we find that is equicontinuous. Let for all For all by the Arzelà–Ascoli theorem, there exists a subsequence of denoted as and a function defined on such that uniformly on By induction, the following relation holds:

Define the diagonal subsequence whose Nth term is the Nth term in the Nth subsequence . Then, the diagonal subsequence converges to on Condition (A1) implies that for any there exists a such that

uniformly for all n and all In particular, since can be arbitrarily small, inequality (A3) implies that

Moreover, by (A4),

which means is continuous at By the Lévy continuity theorem, there exists a random variable X such that converges to X in distribution as

The proof for the case of is very simple. Note the fact that, for all

Recall that is equicontinuous if and only if is equicontinuous with respect to every coordinate. Hence is equicontinuous on By an argument similar to the case of there exists a subsequence that converges to on By condition (A1),

which means is continuous at By the Lévy continuity theorem again, there exists a random vector X such that converges to X in distribution as This completes the proof of the theorem. □

As an application of Theorem A1, consider the following example, which shows that every sequence of uniformly bounded random vectors has a subsequence that converges in distribution.

Example A3.

Let be a sequence of real-valued random vectors with values in . If for a constant C and uniformly for all then there exist a subsequence and a random vector X such that in distribution. Indeed, it is easy to see that

uniformly for all Our conclusion follows from Theorem A1.
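For bounded random vectors, the equicontinuity of the characteristic functions can also be seen directly. A short derivation, assuming $|X_n| \leq C$ almost surely for all $n$ and writing $\varphi_n(t) = \mathbb{E}\, e^{i\langle t, X_n \rangle}$ for the characteristic function of $X_n$:

```latex
\begin{aligned}
|\varphi_n(t) - \varphi_n(s)|
  &= \big|\mathbb{E}\big[e^{i\langle s, X_n\rangle}\big(e^{i\langle t-s, X_n\rangle} - 1\big)\big]\big| \\
  &\le \mathbb{E}\,\big|e^{i\langle t-s, X_n\rangle} - 1\big| \\
  &\le \mathbb{E}\,|\langle t-s, X_n\rangle| \\
  &\le |t-s|\,\mathbb{E}|X_n| \;\le\; C\,|t-s| .
\end{aligned}
```

The second inequality uses $|e^{iu} - 1| \leq |u|$ for real $u$, and the third uses the Cauchy–Schwarz inequality. The family $(\varphi_n)$ is therefore uniformly Lipschitz, hence equicontinuous, with the same modulus $C|t - s|$ for every $n$.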

Since condition (A1) may not be easy to verify, we introduce a second sufficient condition, which could be easier to check.

Theorem A2.

Let be a sequence of real-valued random vectors with values in . Denote the characteristic function of by Assume that

uniformly for all Then, is equicontinuous. Therefore, there exist a subsequence and a random vector X such that in distribution.

Proof.

We first give a proof for the case of By the Billingsley inequality (cf. page 47 of []), it is easy to see that, for all

Given any by (A7), there is a constant such that for all

Thus, for all

For all we have

The random variable is dominated by the constant 2. Therefore, by the inequalities (A9) and (A10), it follows that, for all

For this fixed from (A11), it is easy to see that

uniformly for all n, whenever Hence, the family is equicontinuous. By the Arzelà–Ascoli theorem, with an argument similar to the proof of Theorem A1, there exist a subsequence and a continuous function such that Since for all n, inequality (A12) implies that whenever Thus is continuous at By the Lévy continuity theorem, there exists a random variable X such that converges to X in distribution as

For the case where the proof is similar to that of Theorem A1. This completes the proof of the theorem. □
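The Billingsley inequality used in this proof (and in Step 2 of the proof of Theorem 1) bounds a tail probability by the behaviour of the characteristic function near the origin. We assume it is the familiar form $\mathbb{P}(|X| \geq 2/\tau) \leq \tau^{-1} \int_{-\tau}^{\tau} (1 - \varphi(t))\, dt$ for $\tau > 0$. The hypothetical helper below evaluates the right-hand side with the trapezoidal rule for a real-valued (i.e., symmetric) $\varphi$ and compares it with the exact Cauchy tail $\mathbb{P}(|X| \geq a) = 1 - \tfrac{2}{\pi}\arctan a$ for $X \sim S_1(1, 0, 0)$.

```python
import math


def billingsley_rhs(phi, tau: float, steps: int = 2000) -> float:
    """(1/tau) * integral over [-tau, tau] of (1 - phi(t)), by the trapezoidal rule.

    phi must be real-valued, i.e. the characteristic function of a symmetric law.
    """
    h = 2.0 * tau / steps
    total = 0.0
    for k in range(steps + 1):
        t = -tau + k * h
        weight = 0.5 if k in (0, steps) else 1.0   # trapezoidal end-point weights
        total += weight * (1.0 - phi(t))
    return total * h / tau


def cauchy_tail(a: float) -> float:
    """Exact P(|X| >= a) for a standard Cauchy variable, i.e. S_1(1, 0, 0)."""
    return 1.0 - 2.0 / math.pi * math.atan(a)


def cauchy_phi(t: float) -> float:
    """Characteristic function exp(-|t|) of S_1(1, 0, 0)."""
    return math.exp(-abs(t))


if __name__ == "__main__":
    for tau in (0.05, 0.5, 2.0):
        # left: exact tail P(|X| >= 2/tau); right: Billingsley upper bound
        print(tau, cauchy_tail(2.0 / tau), billingsley_rhs(cauchy_phi, tau))
```

For the Cauchy case the right-hand side also has the closed form $\frac{2}{\tau}(\tau - 1 + e^{-\tau})$, which the numerical value should reproduce. For small $\tau$ the bound behaves like $\tau$ while the true tail behaves like $\tau/\pi$, so the inequality is not sharp but has the correct order.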

To illustrate Theorem A2, consider the following two examples.

Example A4.

Let be a sequence of real-valued random vectors with values in . Assume

Then, there exist a subsequence and a random vector X such that in distribution. Indeed, it is easy to see that

uniformly for all Our conclusion follows from Theorem A2.

Example A5.

Assume that is a sequence of –stable symmetric random variables with unit scale parameter, i.e., If then there exists a subsequence and a random variable X such that in distribution. Indeed, it is obvious that

uniformly for all Thus, the conclusion follows immediately from Theorem A2.

The same conclusion can also be obtained from the Bolzano–Weierstrass theorem. Indeed, by the Bolzano–Weierstrass theorem, there exists a subsequence such that It follows that

which means in distribution.

Appendix A.2. Weak Convergence Theorems for Random Processes

In the previous subsection, we considered the case of random vectors. Now, we consider the case of random processes.

Theorem A3.

Let be a sequence of random processes with values in . Assume that

uniformly for all and all and that for any

uniformly for all and all Denote by

Then, is equicontinuous with respect to and therefore, there exist a subsequence and a random process such that, for any

in distribution.

Moreover, if and all the processes and have independent increments, then in finite dimensional distribution.

By Theorem A1, condition (A15) is sufficient to prove (A18). However, with this method, the subsequence may vary with and The role of condition (A16) is to ensure that the same subsequence can be used when and vary in the range

Proof.

By Theorem A1, condition (A15) implies that is equicontinuous with respect to Hence we need only to prove that is equicontinuous with respect to For any one has

By (A16), we find that for any is equicontinuous with respect to Similarly, is equicontinuous with respect to Thus, is equicontinuous with respect to With an argument similar to the proof of Theorem A1, by the Arzelà–Ascoli theorem and the Lévy continuity theorem, there exist a subsequence and a random process such that for any given

in distribution.

Assume that has independent increments. For any satisfying it is easy to see that, for any

Letting by the hypothesis that has independent increments, we find that

which means in finite dimensional distribution. □

Example A6.

Let be a sequence of random processes with values in . Assume that for a constant C and uniformly for all x and all If

in distribution uniformly for all and all then the conclusion of Theorem A3 holds.

The following sufficient condition may be easier to check than those of Theorem A3.

Theorem A4.

Let be a sequence of processes with values in . Denote by

Assume that

uniformly for all and all and that for any ,

uniformly for all and all Then, the conclusion of Theorem A3 holds.

Proof.

By Theorem A2, condition (A21) implies that is equicontinuous with respect to Next, we prove that is equicontinuous with respect to For any one has

The random variable is dominated by the constant 2. Hence, for all

Given any and any it holds, for all and all ,

By (A22), for the given there exists a such that if then

uniformly for all n and all By the Billingsley inequality, it is easy to see that if then

From (A24), for all and all such that

Thus, for any is equicontinuous with respect to Similarly, is equicontinuous with respect to Thus, is equicontinuous with respect to The rest of the proof is similar to the proof of Theorem A3. □
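The Billingsley inequality invoked above is presumably the standard truncation inequality P(|X| ≥ 2/u) ≤ (1/u)∫_{-u}^{u}(1 - φ(θ))dθ, which turns control of a characteristic function φ near the origin into a tail bound. The sketch below checks it for the standard Cauchy law (the symmetric 1-stable law with unit scale, φ(θ) = exp(-|θ|)), chosen only because its tail has a closed form; the helper names are illustrative.

```python
import math


def cauchy_chf(theta: float) -> float:
    """Characteristic function of the standard Cauchy law."""
    return math.exp(-abs(theta))


def cauchy_tail(x: float) -> float:
    """P(|X| >= x) for a standard Cauchy variable X."""
    return 1.0 - (2.0 / math.pi) * math.atan(x)


def truncation_bound(chf, u: float, steps: int = 10000) -> float:
    """(1/u) * int_{-u}^{u} (1 - chf(theta)) dtheta, by the midpoint rule."""
    h = 2 * u / steps
    return h / u * sum(1.0 - chf(-u + (k + 0.5) * h) for k in range(steps))


for u in (0.01, 0.1, 1.0, 10.0):
    tail, bound = cauchy_tail(2.0 / u), truncation_bound(cauchy_chf, u)
    assert tail <= bound  # the truncation inequality
    print(f"u = {u:5}: P(|X| >= 2/u) = {tail:.4f} <= {bound:.4f}")
```

For this law the right-hand side equals 2 - 2(1 - e^{-u})/u in closed form, which the midpoint rule reproduces closely.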

Example A7.

Assume that the functions and that is a sequence of (localizable) independent-increments –multistable Lévy motions (cf. Falconer and Liu). Then, there exist a subsequence and a random process such that in distribution for any In particular, if has independent increments, then converges to in finite dimensional distribution.

Indeed, by the work of Falconer and Liu, the joint characteristic functions of read as follows:

for and It is obvious that

uniformly for all and all and that for any

uniformly for all and all Hence, the conclusion follows immediately from Theorem A4.

This conclusion can also be obtained from the Arzelà–Ascoli theorem. Since are localizable, by (7), the functions are equicontinuous. Thus, there exist a subsequence and a continuous function satisfying condition (7) such that as It is obvious that

for and which means converges to an independent-increments –multistable Lévy motion, in finite dimensional distribution.
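Once α_{n_k} converges uniformly to α, the Arzelà–Ascoli route reduces to convergence of the exponents ∫|θ_j|^{α(u)}du. The sketch below assumes the Falconer–Liu form exp(-Σ_j ∫_{t_{j-1}}^{t_j} |θ_j|^{α(u)} du) for the joint characteristic function of the increments L(t_j) - L(t_{j-1}). The functions `alpha_limit` and `alpha_n`, the times and the θ-values are hypothetical. Here the whole sequence already converges, so no subsequence needs to be extracted.

```python
import math


def alpha_limit(u: float) -> float:
    """Hypothetical limiting stability function, with values in [1.2, 1.8]."""
    return 1.5 + 0.3 * math.sin(2 * math.pi * u)


def alpha_n(u: float, n: int) -> float:
    """Hypothetical sequence converging uniformly to alpha_limit (error 0.2 / n)."""
    return alpha_limit(u) + 0.2 / n


def integral(f, a: float, b: float, steps: int = 2000) -> float:
    """Composite midpoint rule for the integral of f over [a, b]."""
    h = (b - a) / steps
    return h * sum(f(a + (k + 0.5) * h) for k in range(steps))


def increments_chf(thetas, times, alpha) -> float:
    """Assumed joint characteristic function of the increments
    L(t_j) - L(t_{j-1}): exp(-sum_j int_{t_{j-1}}^{t_j} |theta_j|**alpha(u) du)."""
    exponent = 0.0
    for theta, a, b in zip(thetas, times[:-1], times[1:]):
        exponent += integral(lambda u: abs(theta) ** alpha(u), a, b)
    return math.exp(-exponent)


times = [0.0, 0.3, 0.7, 1.0]
thetas = [0.5, -2.0, 1.2]
target = increments_chf(thetas, times, alpha_limit)

gaps = [
    abs(increments_chf(thetas, times, lambda u, n=n: alpha_n(u, n)) - target)
    for n in (1, 10, 100, 1000)
]
print([f"{g:.2e}" for g in gaps])
```

The gaps shrink with n because |θ|^{α_n(u)} converges to |θ|^{α(u)} uniformly in u for each fixed θ.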

The condition that the sequence is equicontinuous fails in some cases, for instance, in the construction of the self-stabilizing process. Fortunately, in the Arzelà–Ascoli theorem, this condition can be replaced by a weaker one.

Definition A1.

We call the sequence subequicontinuous on if for any there exist and a sequence of nonnegative numbers as such that, for all functions in the sequence,

whenever In particular, if for all then is equicontinuous.

Notice that, by definition, if is subequicontinuous on then for any there exist and N such that for all and all

whenever

Example A8.

Assume that is a sequence of equicontinuous functions. Set where is a sequence of functions that satisfies for a constant C. Then, it is easy to see that is a sequence of subequicontinuous functions. In particular, if is the Dirichlet function, then is a sequence of subequicontinuous, but not equicontinuous, functions.
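The displayed inequality of Definition A1 did not survive, so the sketch below assumes the reading |f_n(x) - f_n(y)| ≤ ε + ε_n whenever |x - y| < δ, which reduces to equicontinuity when ε_n = 0, as stated above. It mimics Example A8 with the hypothetical choice g_n = sin + h/n on [-1, 1], where h is a unit step function (so C = 1 and ε_n = 1/n). The step replaces the Dirichlet function, which cannot be sampled meaningfully on floating-point numbers, since every float is rational.

```python
import math


def step(x: float) -> float:
    """Bounded discontinuous perturbation (|step| <= 1); a stand-in for the
    Dirichlet function, which is not meaningful on floating-point inputs."""
    return 1.0 if x >= 0 else 0.0


def g(n: int, x: float) -> float:
    """Hypothetical sequence g_n = sin + step / n on [-1, 1]."""
    return math.sin(x) + step(x) / n


def max_oscillation(n: int, delta: float, grid) -> float:
    """Largest |g_n(x) - g_n(y)| over grid pairs with |x - y| < delta."""
    worst = 0.0
    for i, x in enumerate(grid):
        for y in grid[i + 1:]:
            if y - x >= delta:
                break  # the grid is sorted, so later points are farther away
            worst = max(worst, abs(g(n, x) - g(n, y)))
    return worst


grid = [k / 200 for k in range(-200, 201)]  # sorted points of [-1, 1]
eps = 0.05
delta = eps  # enough because sin is 1-Lipschitz

# Assumed subequicontinuity bound with eps_n = 1 / n.
for n in (1, 10, 100, 1000):
    assert max_oscillation(n, delta, grid) <= eps + 1.0 / n

# Not equicontinuous: g_1 jumps by about 1 at x = 0 however small delta is.
print(max_oscillation(1, 0.01, grid))
```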

Similarly, we can define Hölder subequicontinuity. A sequence is called Hölder subequicontinuous of order on if there exist a constant and a sequence of nonnegative numbers as such that, for all functions in the sequence,

whenever In particular, if for all then is Hölder equicontinuous of order It is obvious that if is Hölder subequicontinuous, then is subequicontinuous.

Appendix A.3. Generalization of the Arzelà–Ascoli Theorem

Next, we give a general version of the Arzelà–Ascoli theorem.

Lemma A2.

Assume that is a sequence of real-valued continuous functions defined on a closed and bounded set If this sequence is uniformly bounded and subequicontinuous, then there exists a subsequence that converges uniformly.

Proof.

The proof is essentially based on a diagonalization argument and is similar to the proof of the Arzelà–Ascoli theorem. We give a proof for the case of For the argument follows by applying the –dimensional version of our theorem d times.

Let be a closed and bounded interval. If is a finite set of functions, then there is a subsequence such that for all Thus, without loss of generality, we may assume that is an infinite set of functions. Fix an enumeration of all rational numbers in Since is uniformly bounded, the set of points is bounded. By the Bolzano–Weierstrass theorem, there is a sequence of the functions in such that converges. Repeating the same argument for the sequence of points , there is a subsequence of such that converges. By induction, this process can be continued forever, and so there is a chain of subsequences such that, for each the subsequence converges at Moreover, the following relation holds:

Define the diagonal subsequence whose mth term is the mth term in the mth subsequence . By construction, converges at every rational point of

Therefore, given any and rational in , there is an integer such that

Since the family is subequicontinuous, for this fixed and for every x in , there is an open interval containing x and such that

for all and all The collection of intervals forms an open cover of . Since is compact, this covering admits a finite subcover There exists an integer K such that each open interval contains a rational with Finally, for any there are j and k so that t and belong to the same interval Set then for all and

Hence, the sequence is a uniform Cauchy sequence, and therefore it converges uniformly to a continuous function, as claimed. This completes the proof. □
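The diagonal construction in the proof of Lemma A2 can be mimicked on finitely many terms. In this sketch, a hypothetical family f_n(x) = sin(nx), uniformly bounded by 1, is sampled at a few points standing in for the enumerated rationals r_1, r_2, .... At each point a bisection step keeps the half-interval holding more of the remaining terms, a finite proxy for keeping a half with infinitely many terms. The m-th term of the m-th subsequence then gives an increasing index sequence whose values at r_j lie in the j-th final interval for every m ≥ j. Only the extraction step is illustrated, not the subequicontinuity estimate.

```python
import math


def bisect_refine(indices, value_of, lo, hi, depth):
    """Halve [lo, hi] `depth` times, each time keeping the half that holds more
    of the remaining terms (the proof keeps a half with infinitely many)."""
    for _ in range(depth):
        mid = (lo + hi) / 2
        left = [n for n in indices if value_of(n) <= mid]
        right = [n for n in indices if value_of(n) > mid]
        if len(left) >= len(right):
            indices, hi = left, mid
        else:
            indices, lo = right, mid
    return indices, (lo, hi)


def diagonal_subsequence(f, n_terms, points, bound, depth):
    """Build the chain of nested subsequences, one per point, then take the
    m-th term of the m-th subsequence."""
    indices = list(range(n_terms))
    chain, boxes = [], []
    for r in points:
        indices, box = bisect_refine(indices, lambda n: f(n, r), -bound, bound, depth)
        chain.append(indices)
        boxes.append(box)
    diagonal = []
    for m, sub in enumerate(chain):
        if m >= len(sub):
            break  # the finite proxy has run out of terms
        diagonal.append(sub[m])
    return diagonal, boxes


def f(n: int, x: float) -> float:
    """Hypothetical uniformly bounded family f_n(x) = sin(n x)."""
    return math.sin(n * x)


points = [0.1, 0.5, 1.0, 1.5]  # stand-ins for the enumerated rationals
diag, boxes = diagonal_subsequence(f, 20000, points, 1.0, 3)
print(diag)
print(boxes)
```

Because each subsequence is contained in the previous one, the diagonal indices are strictly increasing, exactly as in the proof.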

Appendix A.4. Some Applications

With this general version of the Arzelà–Ascoli theorem, we can easily obtain the generalizations of Theorems A1–A4. For instance, we have the following generalization of Theorem A4.

Theorem A5.

Let be a sequence of random processes with values in . Denote by

Assume that for any there exists a sequence of nonnegative numbers , depending only on M, such that

uniformly for all and that

uniformly for all Denote by

Then, is subequicontinuous with respect to and therefore, there exist a subsequence and a random process such that, for any

in distribution.

Moreover, if and all the processes and have independent increments, then in finite dimensional distribution.

The proof of Theorem A5 is omitted, since it is similar to that of Theorem A4. Using Theorem A5, we obtain the following corollary.

Denote by the greatest integer not exceeding

Corollary A1.

Let be a sequence of Markov processes with values in . Assume that there exists a sequence of Lebesgue measurable functions such that, for all k and all

Assume that satisfies the following two conditions:

- (A)

- The limit holds uniformly for all

- (B)

- There exists a function such that for any holds uniformly for all and all

Define There exist a subsequence and a random process such that, for any it converges

in distribution.

Proof.

For simplicity of notation, denote by

It is easy to see that, for all satisfying ,

In particular, it can be rewritten in the following form:

where, here and hereafter, we denote

which no longer represents the coordinate of Set

Notice that By the conditions (A) and (B), it is easy to verify that

uniformly for all and all and that

uniformly for all Notice that Thus, by Theorem A5, we obtain the conclusion of Corollary A1. □

The following example shows how to apply Corollary A1.

Example A9.

Assume that the function satisfies condition (7). Let be a nonnegative integrable function such that for a constant Let be a sequence of real-valued processes with independent increments. Assume that, for all k and

i.e., Then, the conditions (A) and (B) of Corollary A1 are satisfied with and Define There exist a subsequence and a random process such that, for any it converges

in distribution.

In fact, when , this example gives the integral of with respect to a multistable Lévy measure. In particular, when this example gives a functional central limit theorem for the independent-increments MsLM, that is, tends in distribution to in where is the Skorohod metric.
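One concrete instance of this functional central limit theorem can be checked at the level of one-dimensional characteristic functions. The sketch assumes, hypothetically, that L_n(t) = Σ_{k ≤ [nt]} n^{-1/α(k/n)} ξ_k with independent symmetric α(k/n)-stable ξ_k of unit scale. Each term then contributes |θ|^{α(k/n)}/n to the exponent, so the characteristic function of L_n(t) is exp(-(1/n)Σ_k |θ|^{α(k/n)}), the exponential of a Riemann sum for the MsLM exponent ∫_0^t |θ|^{α(u)} du. The stability function `alpha` is hypothetical, and only a fixed t is checked, not tightness in the Skorohod space.

```python
import math


def alpha(u: float) -> float:
    """Hypothetical stability function on [0, 1] with values in [1.2, 1.8]."""
    return 1.5 + 0.3 * math.sin(2 * math.pi * u)


def partial_sum_exponent(theta: float, t: float, n: int) -> float:
    """-log E exp(i theta L_n(t)) for the assumed partial sums
    L_n(t) = sum_{k <= floor(n t)} n**(-1/alpha(k/n)) * xi_k.
    Each term contributes |theta * n**(-1/a)|**a = |theta|**a / n."""
    return sum(abs(theta) ** alpha(k / n) / n for k in range(1, math.floor(n * t) + 1))


def mslm_exponent(theta: float, t: float, steps: int = 20000) -> float:
    """int_0^t |theta|**alpha(u) du (midpoint rule): -log of the assumed
    MsLM characteristic function at time t."""
    h = t / steps
    return h * sum(abs(theta) ** alpha((k + 0.5) * h) for k in range(steps))


theta, t = 1.7, 0.5
ns = (10, 100, 1000, 10000)
target = math.exp(-mslm_exponent(theta, t))
gaps = [abs(math.exp(-partial_sum_exponent(theta, t, n)) - target) for n in ns]
for n, gap in zip(ns, gaps):
    print(n, f"{gap:.2e}")
```

The value t = 0.5 is chosen so that n·t is computed exactly in floating point, which keeps math.floor from losing a term.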

Applying Theorem A5 again, we obtain the following corollary. An application of this corollary is given in the next section.

Corollary A2.

Let be a sequence of Markov processes with values in . Assume that there exists a sequence of Lebesgue measurable functions such that, for all k and all

Assume that satisfies the conditions (A) and (B) of Corollary A1. Define Then, there exist a subsequence and a random process such that, for any it converges

in distribution.

Proof.

By an argument similar to the proof of Corollary A1, for all satisfying , it holds that

Set

Then, (A35) implies that

uniformly for all Thus, it is easy to verify that

uniformly for all and all and that, from (A36),

uniformly for all Notice that Thus, by Theorem A5, we obtain the conclusion of Corollary A2. □

References

- Falconer, K.J. Tangent fields and the local structure of random fields. J. Theoret. Probab. 2002, 15, 731–750.

- Falconer, K.J. The local structure of random processes. J. London Math. Soc. 2003, 67, 657–672.

- Li, M. Multi-fractional generalized Cauchy process and its application to teletraffic. Phys. A Stat. Mech. Appl. 2020, 550, 123982.

- Li, M. Modified multifractional Gaussian noise and its application. Phys. Scr. 2021, 96, 125002.

- Falconer, K.J.; Liu, L. Multistable processes and localisability. Stoch. Model. 2012, 28, 503–526.

- Le Guével, R.; Lévy Véhel, J. A Ferguson-Klass-LePage series representation of multistable multifractional processes and related processes. Bernoulli 2012, 18, 1099–1127.

- Le Guével, R.; Lévy Véhel, J. Incremental moments and Hölder exponents of multifractional multistable processes. ESAIM Probab. Stat. 2013, 17, 135–178.

- Le Guével, R. The Hausdorff dimension of the range of the Lévy multistable processes. J. Theoret. Probab. 2019, 32, 765–780.

- Ayache, A.; Hamonier, J. Wavelet series representation for multifractional multistable Riemann-Liouville process. arXiv 2020, arXiv:2004.05874.

- Ayache, A.; Jaffard, S.; Taqqu, M.S. Wavelet construction of generalized multifractional processes. Rev. Mat. Iberoam. 2007, 23, 327–370.

- Falconer, K.J.; Lévy Véhel, J. Self-stabilizing processes based on random signs. J. Theoret. Probab. 2020, 33, 134–152.

- Billingsley, P. Convergence of Probability Measures; John Wiley & Sons: New York, NY, USA, 1968.

- Ayache, A. Sharp estimates on the tail behavior of a multistable distribution. Statist. Probab. Lett. 2013, 83, 680–688.

- Samorodnitsky, G.; Taqqu, M.S. Stable Non-Gaussian Random Processes; Chapman and Hall: London, UK, 1994.

- Barrière, O.; Echelard, A.; Lévy Véhel, J. Self-regulating processes. Electron. J. Probab. 2012, 17, 1–30.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).