1. Introduction

Emerging technology is rapidly being adopted in sport to improve performance and gain a competitive advantage over rivals. Performance enhancement requires an understanding of the perception-action cycle [1]. One technology with the potential to substantially alter sports training is virtual reality (VR). VR is an umbrella term for the visual, computer-based simulation of a real or imaginary environment, in which technology is used both to display the environment and to interact with it [2]. VR experiences allow users to interact intuitively with 3D computer-generated models in real time and are designed to entertain, explain, or train [3]. In sport, there is a desire to use VR as a training mechanism to improve sensorimotor skills, rather than purely to explain (for example, for tactical or decision-making optimization) or to entertain [3].

VR systems have several potential advantages for athletic training: environments can be precisely controlled and scenarios standardized, augmented information can be incorporated to guide performance, and the environment can be dynamically altered to create different competitive situations [3]. The advent of high-frame-rate head-mounted displays (HMDs), such as the Oculus Rift (Oculus, Irvine, CA, USA), has allowed bespoke sports training software to be developed. Current consumer HMD sports training systems are primarily video-based, for example the American-football-focused STRIVR product [4]. This software allows users to watch pre-recorded plays from an egocentric viewpoint, giving athletes immersive familiarization without needing to be at training. In this context, VR offers benefits over 2D video playback that stem from its stereoscopic 360° field of view [1]. These benefits may relate either to educating athletes about the plays, and hence building confidence, or to entertainment [2].

It could be reasoned that for a VR environment to create strong positive transfer, realistic opportunities for action, with appropriate sensory information, must be coupled with natural functional movement fidelity. VR has been used as a training simulator across a range of sports, including basketball free-throw shooting [5], rugby side-stepping [1,2], handball goalkeeping [1], soccer goalkeeping [2], and fly-ball catching [6], with varying degrees of success. Covaci et al. [5] used a basketball free-throw shooting task. Experienced athletes first shot on a virtual simulator, achieving an average accuracy of 47 ± 1% with terminal feedback after each shot, and then completed a real-court transfer test in which they achieved 53 ± 1%. These numbers provide evidence neither of a transfer of training nor of success in the VR environment, which could be due to shooting a virtual ball without haptic feedback. Decoupling perception and action by eliminating natural haptic inputs in training situations could cause maladaptive training adaptations [7].

Miles et al. [3] developed criteria for evaluating the efficacy of sports virtual environments for training sports skills, which included high functional fidelity, affordability, and validation that the system works as intended. One marker of functional fidelity is presence, the subjective feeling of "being there" [8]; in this case, the athlete feeling physically located in the virtual sporting environment [2]. Presence is made possible through both the perceptual and the functional realism of the system. Perceptual fidelity, defined as how closely the system reproduces the audio-visual appearance of the real environment, is not nearly as important for skill acquisition as functional fidelity, defined as the elicitation of realistic movement behaviours [2,3]. Validity can be assessed by capturing behaviour in the virtual environment and correlating it with behaviour in the real world, ensuring that results and learnings are transferable [6]. As VR technology has become more widely adopted, affordability has become less of an issue, with one of the most popular HMDs, the Oculus Rift, available for under USD 500. Furthermore, rapid technological advances have addressed the concern of Miles et al. [3] that HMDs are too cumbersome to wear; users can now wear these devices in relative comfort while performing active movement tasks.

Bideau et al. [

1] created a three step framework for creating VR sports simulators utilizing a perception action coupled task (handball goalkeeping) and a de-coupled perception task (rugby side-stepping). The framework encompasses an initial capture of the athlete’s actions (using motion capture), computer based animation of the virtual humanoids and, finally, the virtual environment presentation, an application specific process. The design framework is useful for the applications discussed, however is not generalizable to other sports, such as track cycling where motion capturing the entire performance to drive the humanoid models, is complicated due to the size of the capture volume. The aim of this paper was to extrapolate the Bideau et al. [

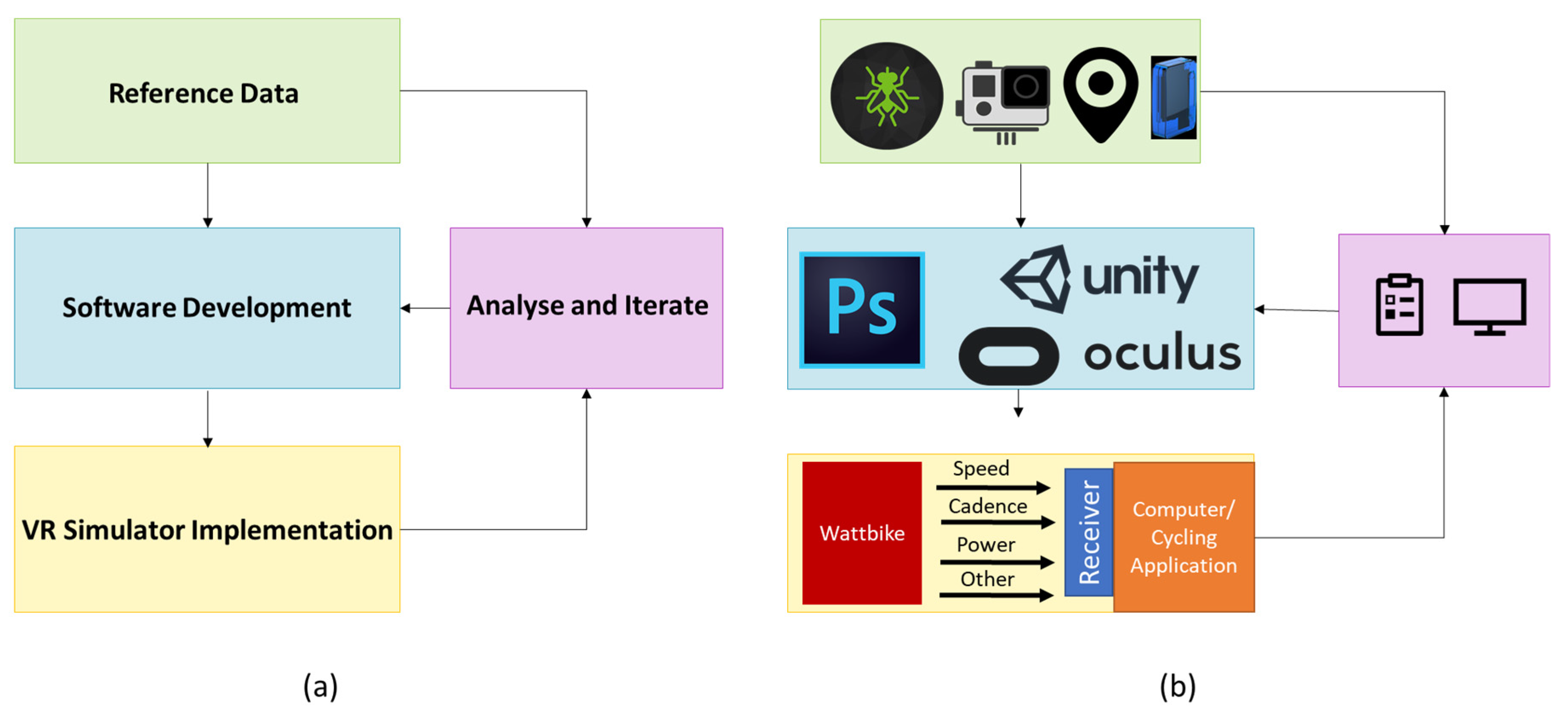

1] concept to create a more generalizable VR sports training simulator technological design framework, which includes an additional step allowing incremental modification, shown in

Figure 1a. The stepwise process includes;

Capturing Reference Data

Software Development

Hardware Development

Analysis, and Iteration.

The fourth step uses a two-part criterion adapted from Miles et al. [3], which asks: is the simulator realistic (in terms of functional and perceptual fidelity)? And do the behaviours elicited in the simulator represent those in the real environment?

2. Materials and Methods

The simulator was designed to replicate the experience of competing in a track cycling event in the 2018 Commonwealth Games stadium in Chandler, Queensland. To achieve this, a stepwise design process was implemented, as illustrated in Figure 1.

Figure 1.

Implementation guideline for the creation and iteration of a VR sports simulator. (a) Generalized guidelines; (b) detailed implementation for the creation of this simulator.

2.1. Reference Data

One elite male cyclist, a previous Commonwealth Games gold medallist in the men's track sprint, gave informed consent to participate in the research study (Ethics Number: GU2016/746). The athlete was instrumented with three nine-degree-of-freedom (9DOF) inertial sensors (SABEL Sense, Brisbane, Australia) mounted with Velcro straps on the right shank, the right wrist, and between the shoulder blades (250 Hz, ±16 g accel., ±2000°/s gyro., ±7 Gauss mag., weight = 23 g). These sensors [9] logged data to an SD card and were calibrated before the trial [10]. A helmet-mounted 360-degree camera (360Fly, Pittsburgh, PA, USA) collected video at 60 Hz, and a fixed sports action camera (GoPro, San Mateo, CA, USA) collected additional video, also at 60 Hz. The athlete used a bike computer (Garmin, Olathe, KS, USA) and wore a GPS sports watch (Polar M400) and a chest-strap heart rate monitor (Polar, Kempele, Finland). The athlete was instructed to perform ten slow laps on the race line, followed by three flying 200 m efforts at maximal intensity, and finally a three-lap shadow race in which he was asked to visualize being 'off the front', match racing an invisible opponent. The placement of these sensors and other equipment is shown in Figure 2. A fixed camera (Panasonic HC-V750M, Osaka, Japan) was also used to record reference data at 50 Hz, and a microphone (Rode NT, Sydney, Australia) was placed next to the track to capture ambient track sound.

Additional documentation, including the UCI track dimension regulations and the stadium layout, was also obtained to complete the reference files.
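The text later states that temporal data from the IMUs helped drive the animated models, but not how. As one illustrative possibility only, pedalling cadence could be estimated from the 250 Hz shank-mounted sensor by timing successive crank revolutions. The sketch below assumes (our assumption, not the authors') that one gyroscope axis of the shank sensor oscillates once per crank revolution; the function `estimate_cadence_rpm` is hypothetical, and the input is a synthetic sine wave rather than recorded data.

```python
import math


def estimate_cadence_rpm(gyro_axis, fs_hz=250.0):
    """Estimate pedalling cadence (rpm) from one shank gyroscope axis.

    Hypothetical helper: assumes the chosen axis oscillates once per crank
    revolution, so each upward zero crossing of the mean-removed signal
    marks one revolution.
    """
    mean = sum(gyro_axis) / len(gyro_axis)
    centred = [g - mean for g in gyro_axis]
    # Sample indices where the signal crosses zero going upwards.
    crossings = [
        i for i in range(1, len(centred))
        if centred[i - 1] < 0.0 <= centred[i]
    ]
    if len(crossings) < 2:
        raise ValueError("need at least two revolutions to estimate cadence")
    # Mean revolution period between the first and last crossing (seconds).
    period_s = (crossings[-1] - crossings[0]) / fs_hz / (len(crossings) - 1)
    return 60.0 / period_s


# Synthetic 10 s signal: a 1.5 Hz (90 rpm) sine sampled at 250 Hz.
fs = 250.0
signal = [300.0 * math.sin(2 * math.pi * 1.5 * n / fs) for n in range(int(10 * fs))]
print(round(estimate_cadence_rpm(signal, fs)))  # 90
```

Averaging over all revolutions between the first and last crossing reduces the quantization error of any single crossing; a real recording would likely need low-pass filtering first to avoid spurious crossings.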

2.2. Software Development

The reference video data from the sports action camera and the 360-degree camera were used to create high-fidelity models in 3ds Max (Autodesk, Montreal, QC, Canada) and Photoshop (Adobe, San Jose, CA, USA). The temporal and spatial data captured by the IMU sensors were used to help drive these models. The models were animated in Unity (Unity, San Francisco, CA, USA) and optimised for the Oculus Rift HMD. The software uses gaze input for menu selection. To create the simulator's soundscape, the captured bike noises were augmented with open-source crowd noises to increase fidelity and aid presence.
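The software is described as using gaze input for menu selection, without further detail. A common pattern for head-gaze menus is dwell-time selection, in which an item is activated once the user has looked at it continuously for a set time. The Python sketch below shows that logic in engine-agnostic form; the class `GazeDwellSelector`, its 1.5 s default dwell, and the idea that a per-frame raycast supplies `gazed_item` are all assumptions for illustration, not the simulator's actual Unity implementation.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class GazeDwellSelector:
    """Hypothetical dwell-time gaze selector for a VR menu.

    Assumption: an item is selected once the head-gaze ray has rested on it
    continuously for `dwell_s` seconds; looking away resets the timer.
    The timer starts on the first frame an item is gazed at.
    """
    dwell_s: float = 1.5
    _target: Optional[str] = field(default=None, init=False)
    _elapsed: float = field(default=0.0, init=False)

    def update(self, gazed_item: Optional[str], dt: float) -> Optional[str]:
        """Advance one frame; return the item id when a selection fires."""
        if gazed_item is None or gazed_item != self._target:
            # Gaze moved to a new item (or to nothing): restart the timer.
            self._target = gazed_item
            self._elapsed = 0.0
            return None
        self._elapsed += dt
        if self._elapsed >= self.dwell_s:
            self._elapsed = 0.0  # require a fresh dwell before re-selecting
            return gazed_item
        return None
```

For example, with `dwell_s=1.0` and 0.25 s frames, gazing at the same item fires a selection on the fifth frame; any frame spent looking elsewhere restarts the count.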

2.3. Simulator Implementation

The simulator consists of a stationary exercise bike (Wattbike Pro, 2016) that transmits real-time performance data wirelessly, via the ANT+ protocol, to a connected PC, with an Oculus Rift HMD presenting the audio-visual simulated environment; the setup is shown in Figure 3a. Sprint performance on this cycling ergometer has been shown to be reproducible and highly related to track cycling performance [11]. The simulation is experienced from an egocentric viewpoint on the Pursuiter's Line. A competing avatar rides against the user on the Sprinter's Line. For competition scalability, the virtual opponent matches the rider's speed for the first three quarters of the final lap, then maintains the average speed for the remainder of the race. This segmentation is shown in Figure 3b.
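The opponent's pacing rule can be expressed as a small per-frame function. The sketch below is a hedged interpretation: the text specifies neither the lap length nor which "average speed" the avatar maintains, so it assumes a 250 m lap and takes the average to be the rider's mean speed over the first three quarters of the final lap. `OpponentPacer` and its parameters are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class OpponentPacer:
    """Sketch of the avatar speed rule for the final lap.

    Assumptions (not stated in the text): a 250 m lap, and the "average
    speed" the avatar holds is the rider's mean speed over the first three
    quarters of the final lap.
    """
    lap_length_m: float = 250.0
    switch_fraction: float = 0.75
    _rider_distance_m: float = field(default=0.0, init=False)
    _elapsed_s: float = field(default=0.0, init=False)
    _locked_speed: Optional[float] = field(default=None, init=False)

    def speed(self, rider_speed_mps: float, lap_progress_m: float, dt_s: float) -> float:
        """Return the avatar speed (m/s) for this frame of the final lap."""
        if lap_progress_m < self.switch_fraction * self.lap_length_m:
            # Phase 1: mirror the rider and accumulate their distance and time.
            self._rider_distance_m += rider_speed_mps * dt_s
            self._elapsed_s += dt_s
            return rider_speed_mps
        if self._locked_speed is None:
            # Phase 2 begins: freeze the rider's mean speed so far.
            self._locked_speed = (self._rider_distance_m / self._elapsed_s
                                  if self._elapsed_s > 0 else rider_speed_mps)
        return self._locked_speed
```

Mirroring the rider in the first phase keeps the race close whatever the rider's fitness, which is what lets the opponent scale to any user; in the second phase, a rider who fades below their own earlier mean speed falls behind.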

2.4. User Testing

In total, 210 non-elite riders with a range of cycling experience gave informed consent to participate in the research study (Ethics Number GU2016/746). Riders were given two laps: a warm-up lap and the final sprint lap. They were instructed to try to beat the competitor but did not know that the competitor's speed depended on their own. Data were captured at 15 Hz, including the track positions of the rider and the opponent, speed, power, cadence, lap times, and the position and rotation of the head in three axes. The data were processed using a custom MATLAB (MathWorks, Natick, MA, USA) script. Functional fidelity was assessed using the following criteria: whether the riders completed the two laps, whether the rider beat the avatar, and the number of times the rider looked directly at the avatar for longer than 0.5 s, termed unique gazes (Ug).
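The unique-gaze criterion (looking directly at the avatar for longer than 0.5 s, with data sampled at 15 Hz) can be computed by counting runs of consecutive samples. Because the text does not define "looking directly", the sketch assumes that a sample counts as a gaze when head yaw lies within a tolerance (10° here, an arbitrary choice) of the bearing from the rider's head to the avatar; head pitch and roll are ignored for simplicity. `unique_gazes` is a hypothetical helper, not the authors' MATLAB script.

```python
from typing import Sequence


def unique_gazes(head_yaw_deg: Sequence[float],
                 bearing_to_avatar_deg: Sequence[float],
                 fs_hz: float = 15.0,
                 angle_tol_deg: float = 10.0,
                 min_duration_s: float = 0.5) -> int:
    """Count unique gazes (Ug): runs of looking at the avatar longer than min_duration_s.

    Assumption: a sample is a gaze when head yaw is within angle_tol_deg of
    the bearing from the rider's head to the avatar (yaw only).
    """
    count = 0
    run = 0  # length of the current gaze run, in samples
    for yaw, bearing in zip(head_yaw_deg, bearing_to_avatar_deg):
        # Smallest signed angular difference, wrapped to [-180, 180).
        diff = (yaw - bearing + 180.0) % 360.0 - 180.0
        if abs(diff) <= angle_tol_deg:
            run += 1
            continue
        if run / fs_hz > min_duration_s:
            count += 1
        run = 0
    # Close a gaze run that lasts until the end of the recording.
    if run / fs_hz > min_duration_s:
        count += 1
    return count
```

At 15 Hz, a run must span at least 8 samples (about 0.53 s) to exceed 0.5 s, since 7 samples last only about 0.47 s.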

4. Discussion

Under the adapted success criteria of Miles et al. [3], the visual fidelity is adequate to keep riders immersed, as evidenced by Figure 4 and user feedback. To further improve visual fidelity, technologies such as laser scanning or real-world footage could be used to maximise the visual acuity of the environment. However, improving the visual fidelity of the simulated environment may not be imperative for a transfer of training if immersion is maintained [2].

Whether the rider beat the competing avatar was used as a marker of behavioural fidelity. A high percentage of non-elite riders would be expected to fatigue towards the end of the race and, because the avatar holds a steady speed over the final quarter of the lap, therefore lose against the opponent. However, as indicated in Table 1, the win percentage was 43.33 ± 0.5%, implying that a behavioural change occurred as a result of the virtual environment. As the VR simulator created the affordances for this behavioural change, it could be reasoned that it has a sufficient level of behavioural fidelity. The factors behind this change can be examined through VR because the simulation is both controlled and measured.
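For a binary outcome (win = 1, loss = 0), the mean is the win proportion and the standard deviation is close to 0.5 whenever that proportion is near one half. One possible reading of the "± 0.5" reported alongside the 43.33% win rate is therefore the standard deviation of this binary outcome expressed as a proportion. The sketch below illustrates the arithmetic with a hypothetical cohort of 91 wins among 210 riders, chosen only because 91/210 = 43.33%; the actual counts behind Table 1 may differ (for example, if only riders who completed both laps were counted).

```python
import statistics


def win_rate_summary(outcomes):
    """Return (mean, sample SD) of binary race outcomes (1 = beat avatar, 0 = lost)."""
    return statistics.mean(outcomes), statistics.stdev(outcomes)


# Hypothetical cohort, for illustration only: 91 wins out of 210 riders.
outcomes = [1] * 91 + [0] * 119
mean, sd = win_rate_summary(outcomes)
print(f"{mean:.4f} ± {sd:.2f}")  # 0.4333 ± 0.50
```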

This is a major benefit of VR simulators, as they allow practitioners to understand why a behavioural change has occurred, which could create actionable insights into performance. To illustrate this, one performance feature extracted was the number of times a participant looked at the opponent (unique gazes) during the final lap. A weak correlation was evident, with the winning percentage increasing as the number of gazes increased. Assessing whether this pattern is present in elite track cyclists, in both the simulated and the real-world environments, will determine whether it is an influential factor in athletes' performance. The simulator could then be iterated to encourage optimal visual search strategies, which could be transferred into the performance domain. To further investigate behavioural fidelity, the athletes' actions could also be assessed. Future work will perform a thorough biomechanical analysis to contrast the cyclist's movements in the reference data from the velodrome, in the simulator, and when riding the Wattbike without VR augmentation. This will determine whether the virtual environment improves the biomechanical validity of ergometer training.
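The relationship between unique gazes and winning pairs a binary outcome with a count, so one suitable statistic is the point-biserial correlation, which is simply Pearson's r with one variable coded 0/1. The text does not state which correlation coefficient was used; the helper `point_biserial` and the toy data in its usage note are hypothetical.

```python
import math
from typing import Sequence


def point_biserial(wins: Sequence[int], gazes: Sequence[float]) -> float:
    """Pearson correlation between a 0/1 outcome and a count (point-biserial r)."""
    n = len(wins)
    if n != len(gazes) or n < 2:
        raise ValueError("need two equal-length sequences with n >= 2")
    mean_w = sum(wins) / n
    mean_g = sum(gazes) / n
    cov = sum((w - mean_w) * (g - mean_g) for w, g in zip(wins, gazes))
    var_w = sum((w - mean_w) ** 2 for w in wins)
    var_g = sum((g - mean_g) ** 2 for g in gazes)
    if var_w == 0 or var_g == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / math.sqrt(var_w * var_g)
```

For example, `point_biserial([0, 0, 1, 1], [1, 2, 3, 4])` returns about 0.894 (that is, 2/√5); a positive r means that winners tended to make more unique gazes.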