PRISMA Review: Drones and AI in Inventory Creation of Signage

Abstract

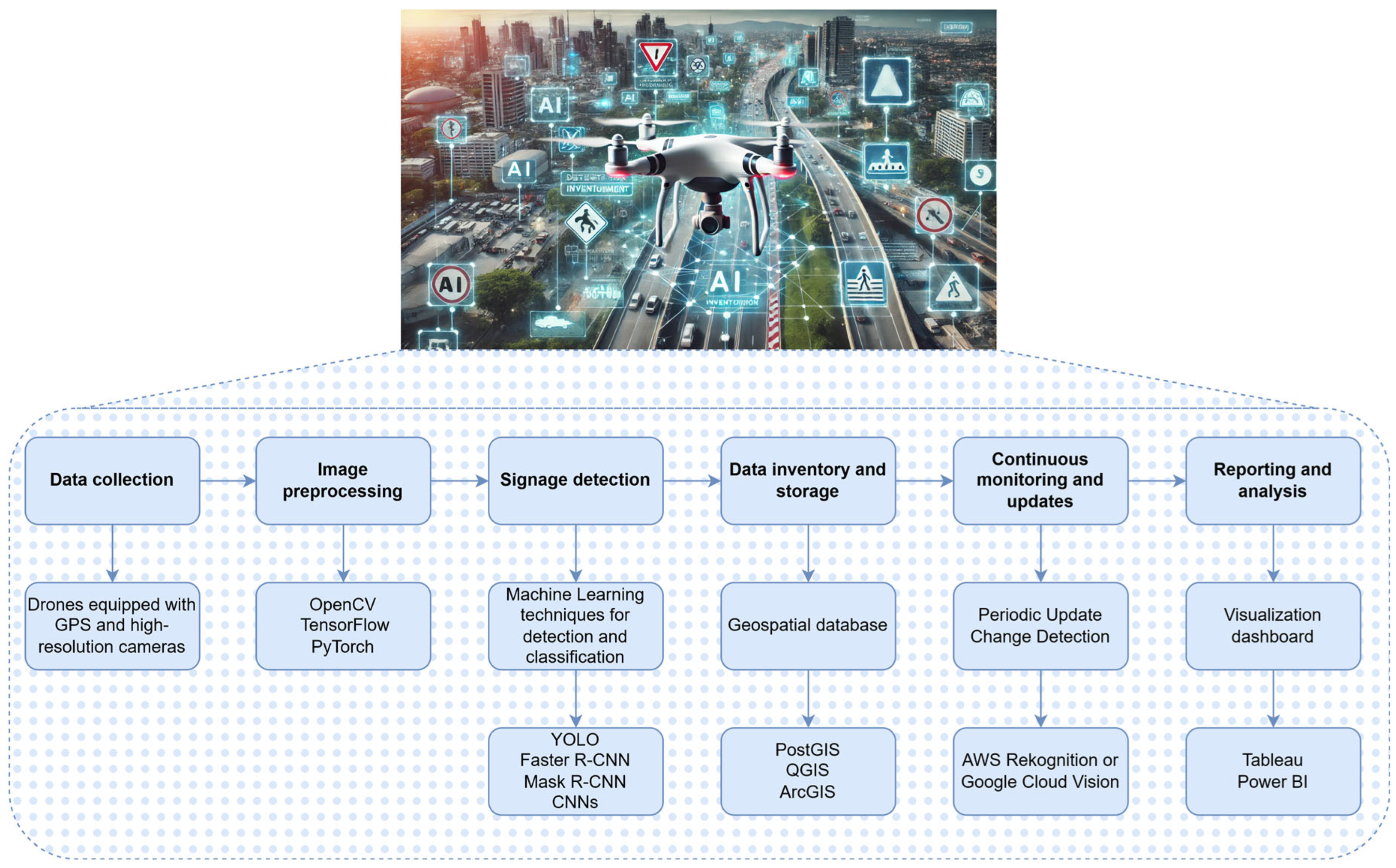

1. Introduction

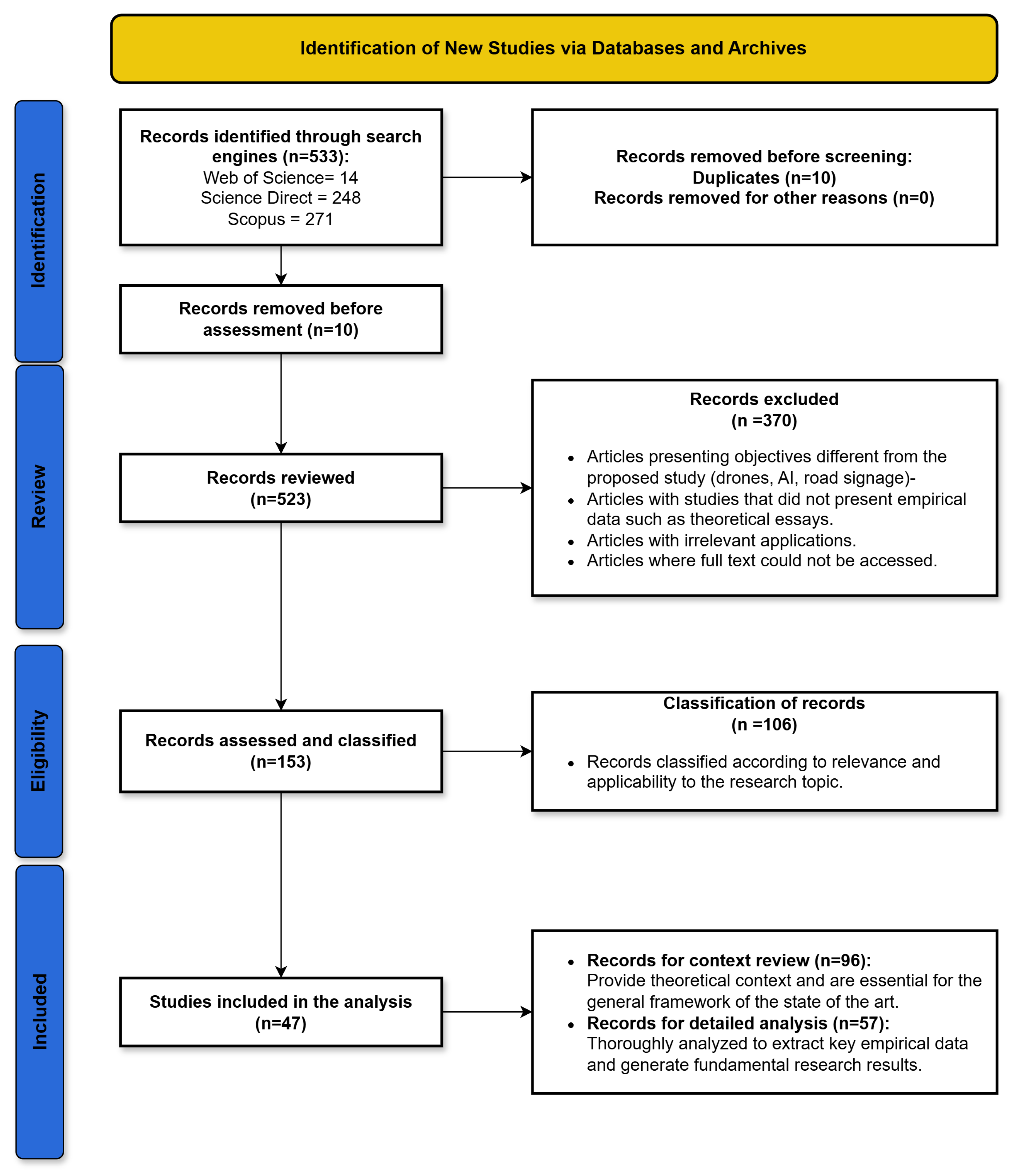

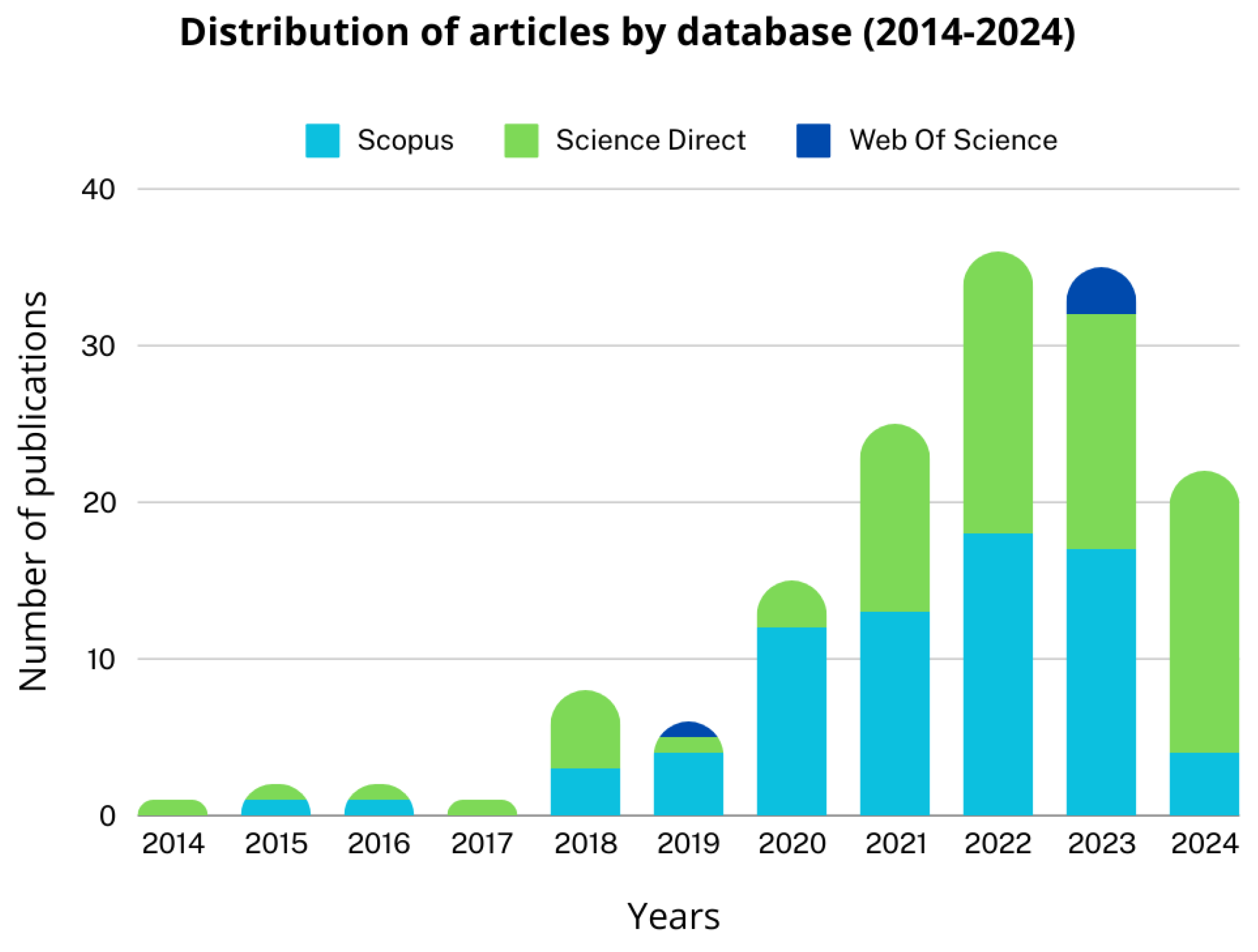

2. Systematic Review Methodology

2.1. Identification

2.2. Study Review

2.2.1. Inclusion and Exclusion Criteria

2.2.2. Application of Criteria

3. State of the Art

3.1. UAVs for Signage Detection

3.2. Applications of UAVs for Road Safety

3.3. Automated Inventory Systems in Road Infrastructure

3.4. Detection Technologies

3.5. Surface and Object Detection in Urban Applications

| Detection Technique | Operating Principle | Advantages | Disadvantages |
|---|---|---|---|
| Radar-based | Utilizes radio waves to detect and locate nearby objects | | |
| Radio Frequency-based | Captures wireless signals to detect UAV radio frequency signatures | | |
| Acoustic-based | Detects UAVs by their unique sound signatures | | |
| Vision-based | Captures visual data of the UAVs using camera sensors | | |

3.6. Advances in Small and Hidden Object Detection

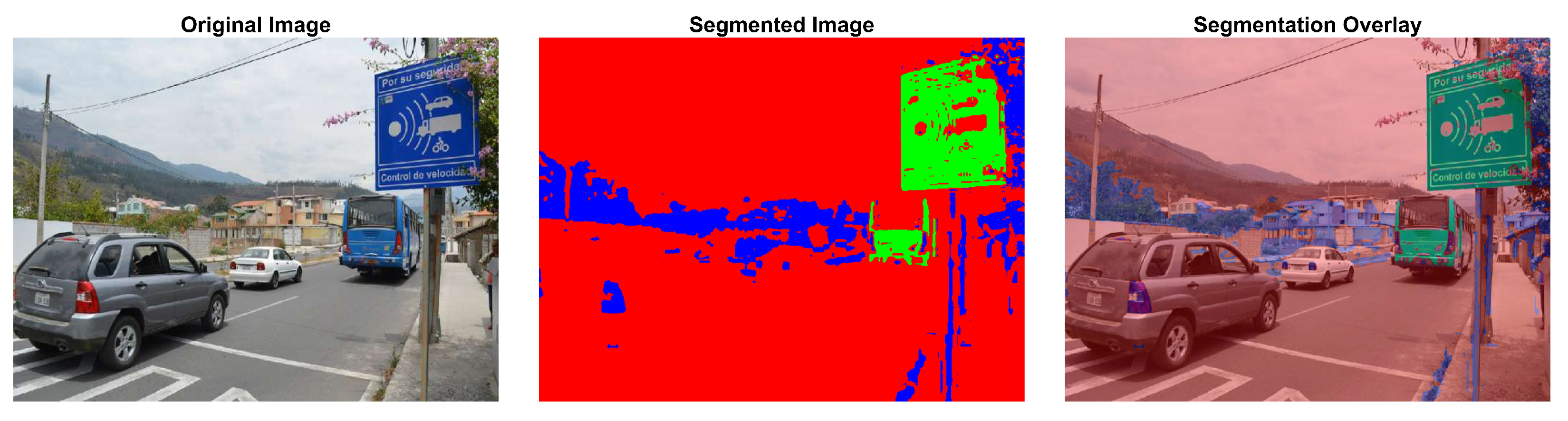

3.7. Semantic Segmentation for Object Detection

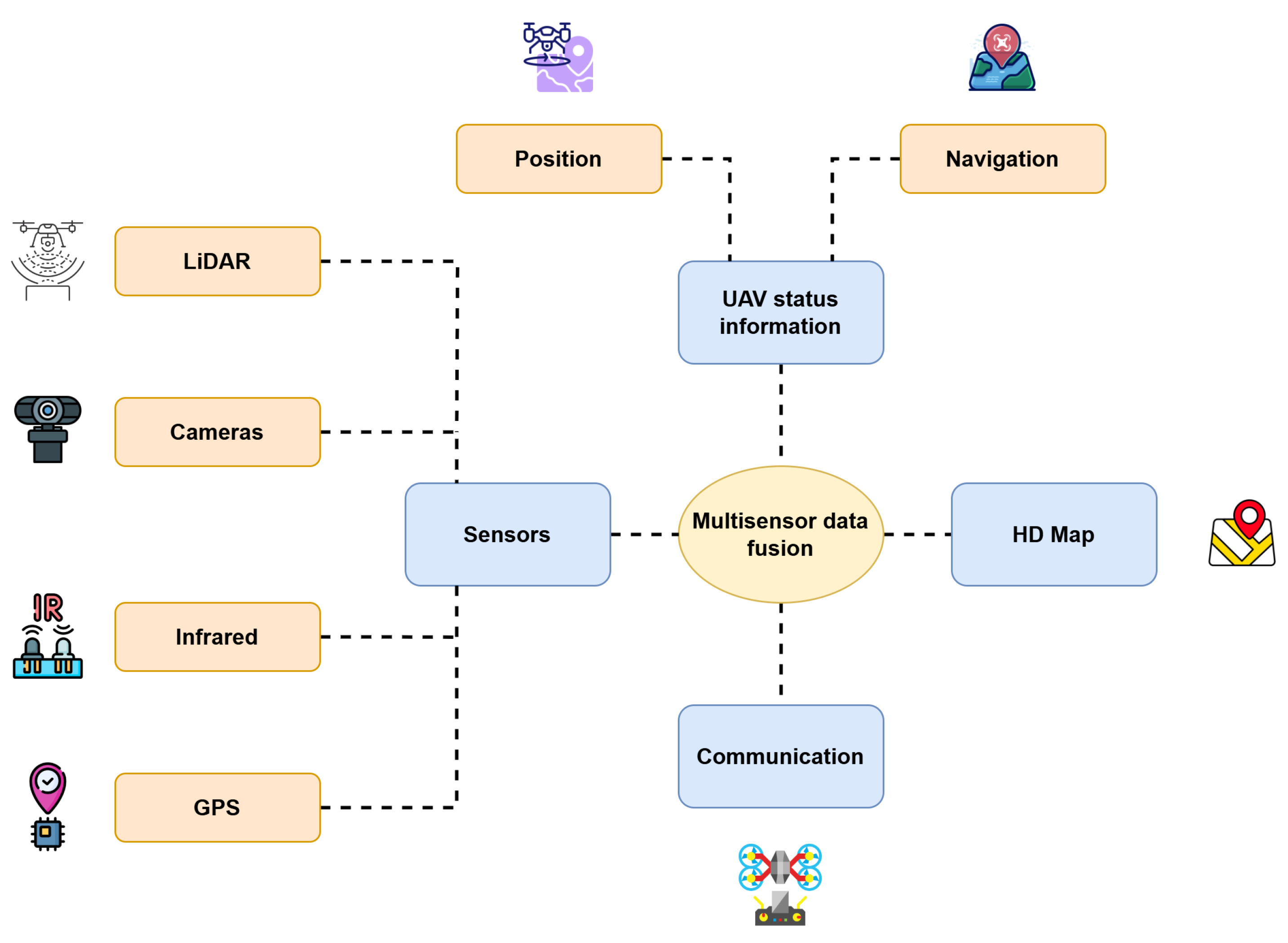

3.8. Multisensor Data Fusion

3.9. Data Fusion and Automatic Registration in Urban Applications

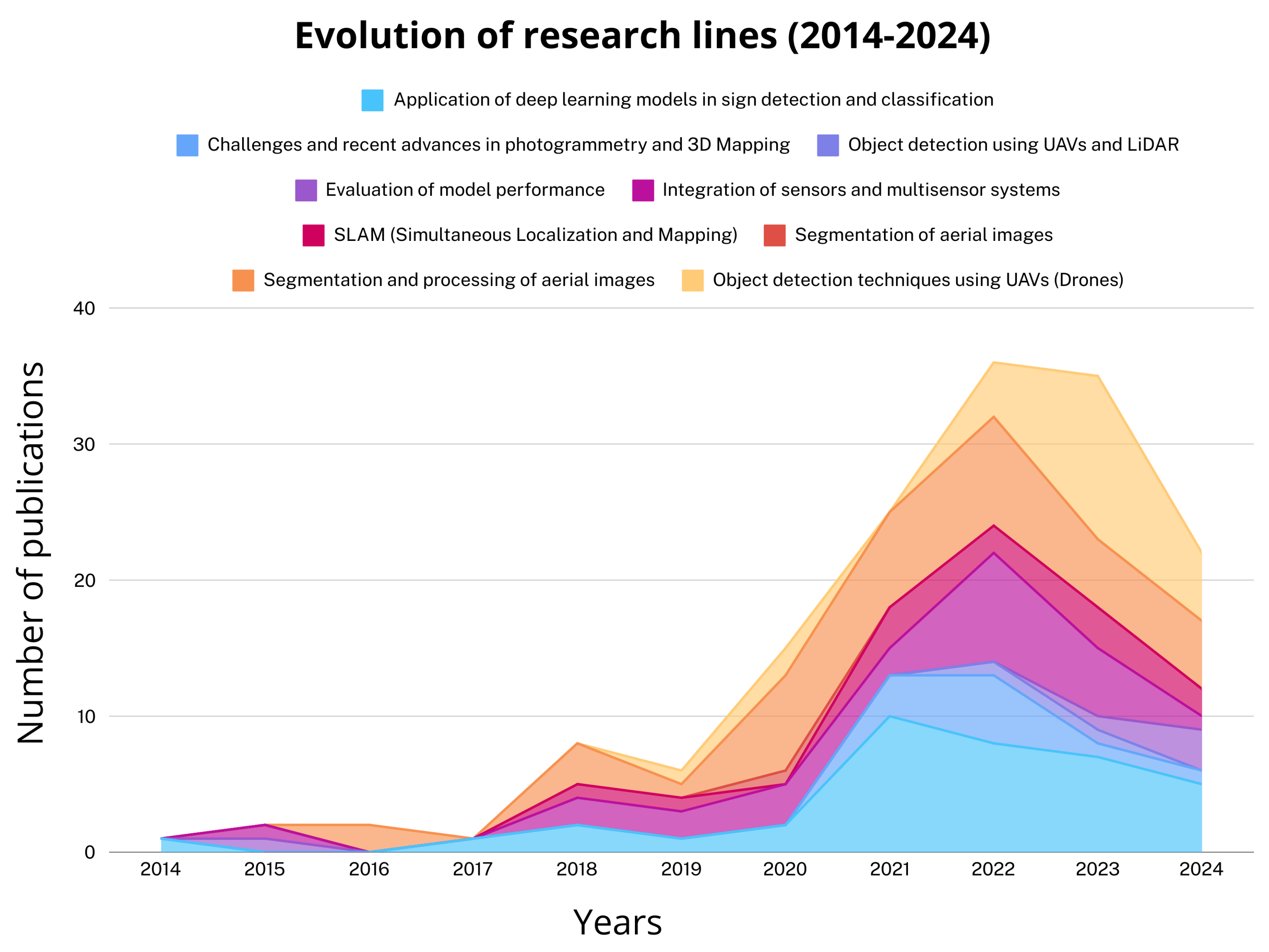

4. Quantitative Results

4.1. Performance of Detection Algorithms

4.2. Comparison Between Segmentation Techniques

4.3. Identified Quantitative Challenges

4.4. Data Extraction from Relevant Studies

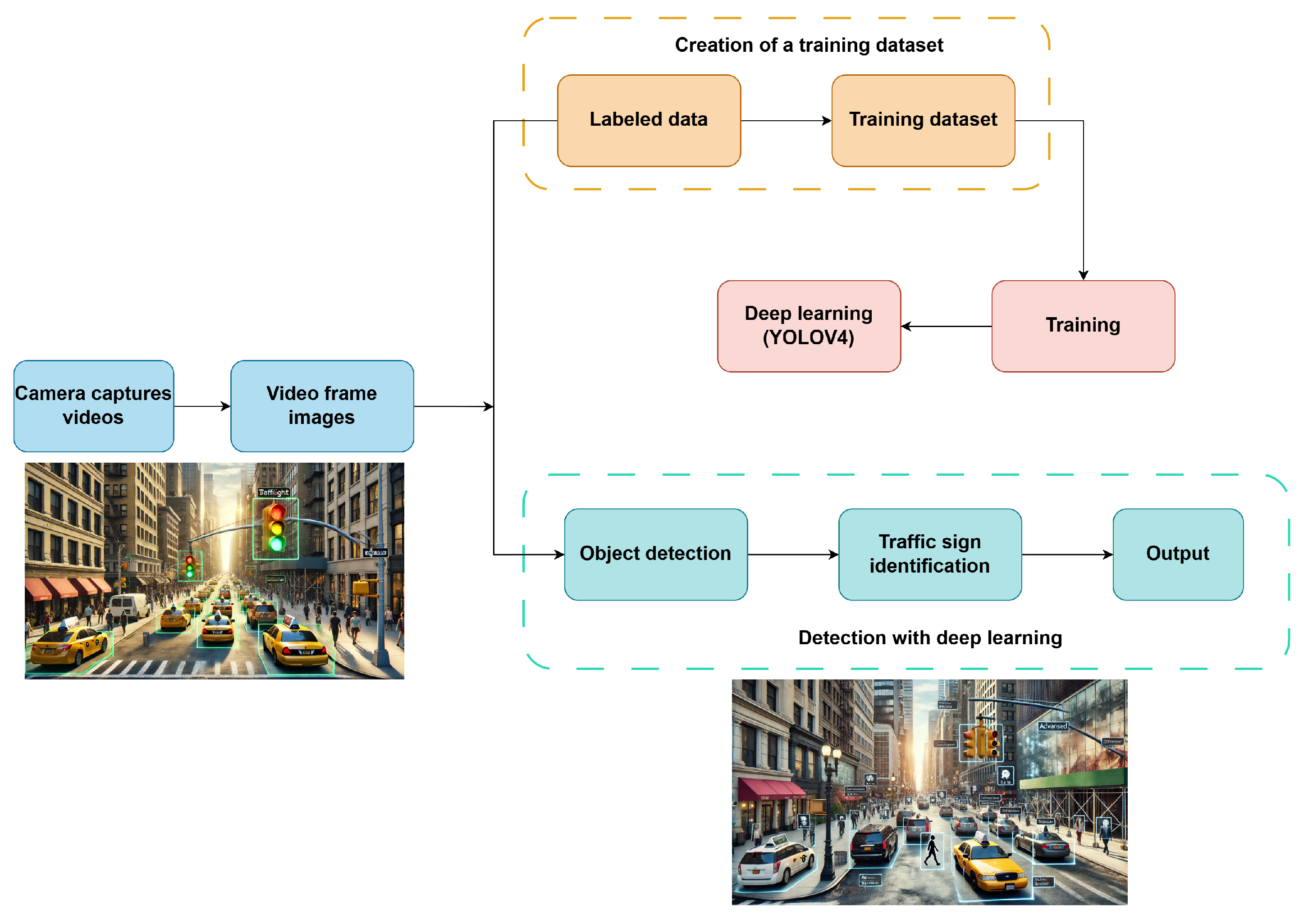

4.4.1. Application of DLMs in Traffic Sign Detection and Classification

4.4.2. Segmentation and Processing of Aerial Images

4.4.3. Integration of Sensors and Multisensor Systems

4.4.4. SLAM (Simultaneous Localization and Mapping)

4.4.5. Object Detection Techniques Using UAVs

4.4.6. Performance Evaluation of Detection and Classification Models

4.4.7. Challenges and Recent Advances in Photogrammetry and 3D Mapping

4.4.8. Aerial Image Segmentation

4.4.9. Object Detection with UAVs and LiDAR

5. Discussion

5.1. Comparative Efficiency of AI Algorithms in Road Signage Detection

5.2. Impact and Practical Applications of UAV and Advanced Sensor Integration

5.3. Future Directions for the Optimization and Adoption of UAVs in Automated Road Management

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Cai, H.; Wang, Y.; Lin, Y.; Li, S.; Wang, M.; Teng, F. Systematic Comparison of Objects Classification Methods Based on ALS and Optical Remote Sensing Images in Urban Areas. Electronics 2022, 11, 3041. [Google Scholar] [CrossRef]

- Arief, R.W.; Nurtanio, I.; Samman, F.A. Traffic Signs Detection and Recognition System Using the YOLOv4 Algorithm. In Proceedings of the 2021 International Conference on Artificial Intelligence and Mechatronics Systems (AIMS), Bandung, Indonesia, 28–30 April 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Jiang, X.; Cui, Q.; Wang, C.; Wang, F.; Zhao, Y.; Hou, Y.; Zhuang, R.; Mei, Y.; Shi, G. A Model for Infrastructure Detection along Highways Based on Remote Sensing Images from UAVs. Sensors 2023, 23, 3847. [Google Scholar] [CrossRef]

- Houben, S.; Stallkamp, J.; Salmen, J.; Schlipsing, M.; Igel, C. Detection of traffic signs in real-world images: The German traffic sign detection benchmark. In Proceedings of the The 2013 International Joint Conference on Neural Networks (IJCNN), Dallas, TX, USA, 4–9 August 2013; pp. 1–8. [Google Scholar] [CrossRef]

- Li, W.; Li, H.; Wu, Q.; Chen, X.; Ngan, K.N. Simultaneously Detecting and Counting Dense Vehicles From Drone Images. IEEE Trans. Ind. Electron. 2019, 66, 9651–9662. [Google Scholar] [CrossRef]

- Outay, F.; Mengash, H.A.; Adnan, M. Applications of unmanned aerial vehicle (UAV) in road safety, traffic and highway infrastructure management: Recent advances and challenges. Transp. Res. Part A Policy Pract. 2020, 141, 116–129. [Google Scholar] [CrossRef] [PubMed]

- Fernández-Sanjurjo, M.; Bosquet, B.; Mucientes, M.; Brea, V.M. Real-time visual detection and tracking system for traffic monitoring. Eng. Appl. Artif. Intell. 2019, 85, 410–420. [Google Scholar] [CrossRef]

- Padilla, R.; Netto, S.L.; da Silva, E.A.B. A Survey on Performance Metrics for Object-Detection Algorithms. In Proceedings of the 2020 International Conference on Systems, Signals and Image Processing (IWSSIP), Niteroi, Brazil, 1–3 July 2020; pp. 237–242. [Google Scholar] [CrossRef]

- Butilă, E.V.; Boboc, R.G. Urban Traffic Monitoring and Analysis Using Unmanned Aerial Vehicles (UAVs): A Systematic Literature Review. Remote Sens. 2022, 14, 620. [Google Scholar] [CrossRef]

- Chen, H.; Hou, L.; Wu, S.; Zhang, G.; Zou, Y.; Moon, S.; Bhuiyan, M. Augmented reality, deep learning and vision-language query system for construction worker safety. Autom. Constr. 2024, 157, 105158. [Google Scholar] [CrossRef]

- Huang, D.; Qin, R.; Elhashash, M. Bundle adjustment with motion constraints for uncalibrated multi-camera systems at the ground level. ISPRS J. Photogramm. Remote Sens. 2024, 211, 452–464. [Google Scholar] [CrossRef]

- Di Benedetto, A.; Fiani, M. Integration of LiDAR Data into a Regional Topographic Database for the Generation of a 3D City Model. In Proceedings of the Geomatics for Green and Digital Transition; Borgogno-Mondino, E., Zamperlin, P., Eds.; Springer: Cham, Switzerland, 2022; pp. 193–208. [Google Scholar] [CrossRef]

- Rashdi, R.; Martínez-Sánchez, J.; Arias, P.; Qiu, Z. Scanning Technologies to Building Information Modelling: A Review. Infrastructures 2022, 7, 49. [Google Scholar] [CrossRef]

- Shao, Y.; Huang, Q.; Mei, Y.; Chu, H. MOD-YOLO: Multispectral object detection based on transformer dual-stream YOLO. Pattern Recognit. Lett. 2024, 183, 26–34. [Google Scholar] [CrossRef]

- Wan, M.; Gu, G.; Qian, W.; Ren, K.; Maldague, X.; Chen, Q. Unmanned Aerial Vehicle Video-Based Target Tracking Algorithm Using Sparse Representation. IEEE Internet Things J. 2019, 6, 9689–9706. [Google Scholar] [CrossRef]

- Zhang, X.; Izquierdo, E.; Chandramouli, K. Dense and Small Object Detection in UAV Vision Based on Cascade Network. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Republic of Korea, 27–28 October 2019; pp. 118–126. [Google Scholar] [CrossRef]

- Naranjo, M.; Fuentes, D.; Muelas, E.; Díez, E.; Ciruelo, L.; Alonso, C.; Abenza, E.; Gómez-Espinosa, R.; Luengo, I. Object Detection-Based System for Traffic Signs on Drone-Captured Images. Drones 2023, 7, 112. [Google Scholar] [CrossRef]

- Wei, J.; Liu, G.; Liu, S.; Xiao, Z. A novel algorithm for small object detection based on YOLOv4. PeerJ Comput. Sci. 2023, 9, e1314. [Google Scholar] [CrossRef]

- Garcia-Garcia, A.; Orts-Escolano, S.; Oprea, S.; Villena-Martinez, V.; Martinez-Gonzalez, P.; Garcia-Rodriguez, J. A survey on deep learning techniques for image and video semantic segmentation. Appl. Soft Comput. 2018, 70, 41–65. [Google Scholar] [CrossRef]

- Hsieh, M.R.; Lin, Y.L.; Hsu, W.H. Drone-Based Object Counting by Spatially Regularized Regional Proposal Network. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; IEEE: Piscataway, NJ, USA, 2017. [Google Scholar] [CrossRef]

- Peng, D.; Bruzzone, L.; Zhang, Y.; Guan, H.; He, P. SCDNET: A novel convolutional network for semantic change detection in high resolution optical remote sensing imagery. Int. J. Appl. Earth Obs. Geoinf. 2021, 103, 102465. [Google Scholar] [CrossRef]

- Kumar, A.; Kashiyama, T.; Maeda, H.; Omata, H.; Sekimoto, Y. Real-time citywide reconstruction of traffic flow from moving cameras on lightweight edge devices. ISPRS J. Photogramm. Remote Sens. 2022, 192, 115–129. [Google Scholar] [CrossRef]

- Li, X.; Li, X.; Pan, H. Multi-Scale Vehicle Detection in High-Resolution Aerial Images with Context Information. IEEE Access 2020, 8, 208643–208657. [Google Scholar] [CrossRef]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. [Google Scholar] [CrossRef]

- Maxwell, A.E.; Pourmohammadi, P.; Poyner, J.D. Mapping the Topographic Features of Mining-Related Valley Fills Using Mask R-CNN Deep Learning and Digital Elevation Data. Remote Sens. 2020, 12, 547. [Google Scholar] [CrossRef]

- Wang, L.; Liao, J.; Xu, C. Vehicle Detection Based on Drone Images with the Improved Faster R-CNN. In Proceedings of the 2019 11th International Conference on Machine Learning and Computing (ICMLC’19), Zhuhai China, 22–24 February 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 466–471. [Google Scholar] [CrossRef]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the Advances in Neural Information Processing Systems; Cortes, C., Lawrence, N., Lee, D., Sugiyama, M., Garnett, R., Eds.; Curran Associates, Inc.: New York, NY, USA, 2015; Volume 28. [Google Scholar] [CrossRef]

- Weinmann, M.; Weinmann, M. Geospatial Computer Vision Based on Multi-Modal Data—How Valuable Is Shape Information for the Extraction of Semantic Information? Remote Sens. 2018, 10, 2. [Google Scholar] [CrossRef]

- Dhulipudi, D.P.; KS, R. Multiclass Geospatial Object Detection using Machine Learning-Aviation Case Study. In Proceedings of the 2020 AIAA/IEEE 39th Digital Avionics Systems Conference (DASC), San Antonio, TX, USA, 11–15 October 2020; pp. 1–6. [Google Scholar] [CrossRef]

- Balamuralidhar, N.; Tilon, S.; Nex, F. MultEYE: Monitoring System for Real-Time Vehicle Detection, Tracking and Speed Estimation from UAV Imagery on Edge-Computing Platforms. Remote Sens. 2021, 13, 573. [Google Scholar] [CrossRef]

- Wang, Y.; Lin, Y.; Huang, H.; Wang, S.; Wen, S.; Cai, H. A Weak Sample Optimisation Method for Building Classification in a Semi-Supervised Deep Learning Framework. Remote Sens. 2023, 15, 4432. [Google Scholar] [CrossRef]

- Susetyo, D.B.; Rizaldy, A.; Hariyono, M.I.; Purwono, N.; Hidayat, F.; Windiastuti, R.; Rachma, T.R.N.; Hartanto, P. A Simple But Effective Approach of Building Footprint Extraction in Topographic Mapping Acceleration. Indones. J. Geosci. 2021, 8, 329–343. [Google Scholar] [CrossRef]

- Rastiveis, H.; Shams, A.; Sarasua, W.A.; Li, J. Automated extraction of lane markings from mobile LiDAR point clouds based on fuzzy inference. ISPRS J. Photogramm. Remote Sens. 2020, 160, 149–166. [Google Scholar] [CrossRef]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71. [Google Scholar] [CrossRef]

- Specht, O.; Specht, M.; Stateczny, A.; Specht, C. Concept of an Innovative System for Dimensioning and Predicting Changes in the Coastal Zone Topography Using UAVs and USVs (4DBatMap System). Electronics 2023, 12, 4112. [Google Scholar] [CrossRef]

- Liu, H.; Ge, J.; Liu, B.; Yu, W. Multi-feature combination method for point cloud intensity feature image and UAV optical image matching. In Proceedings of the Fourth International Conference on Geoscience and Remote Sensing Mapping (GRSM 2022), Changchun, China, 4–6 November 2022; Lohani, T.K., Ed.; International Society for Optics and Photonics, SPIE: Bellingham, WA, USA, 2023; Volume 12551, p. 125511B. [Google Scholar] [CrossRef]

- Kim, S.Y.; Yun Kwon, D.; Jang, A.; Ju, Y.K.; Lee, J.S.; Hong, S. A review of UAV integration in forensic civil engineering: From sensor technologies to geotechnical, structural and water infrastructure applications. Measurement 2024, 224, 113886. [Google Scholar] [CrossRef]

- Fu, Y.; Li, C.; Yu, F.R.; Luan, T.H.; Zhang, Y. A Survey of Driving Safety With Sensing, Vehicular Communications, and Artificial Intelligence-Based Collision Avoidance. IEEE Trans. Intell. Transp. Syst. 2022, 23, 6142–6163. [Google Scholar] [CrossRef]

- Zhang, J.S.; Cao, J.; Mao, B. Application of deep learning and unmanned aerial vehicle technology in traffic flow monitoring. In Proceedings of the 2017 International Conference on Machine Learning and Cybernetics (ICMLC), Ningbo, China, 9–12 July 2017; Volume 1, pp. 189–194. [Google Scholar] [CrossRef]

- Bisio, I.; Haleem, H.; Garibotto, C.; Lavagetto, F.; Sciarrone, A. Performance Evaluation and Analysis of Drone-Based Vehicle Detection Techniques From Deep Learning Perspective. IEEE Internet Things J. 2022, 9, 10920–10935. [Google Scholar] [CrossRef]

- Bisio, I.; Garibotto, C.; Haleem, H.; Lavagetto, F.; Sciarrone, A. A Systematic Review of Drone Based Road Traffic Monitoring System. IEEE Access 2022, 10, 101537–101555. [Google Scholar] [CrossRef]

- Kahraman, S.; Bacher, R. A comprehensive review of hyperspectral data fusion with lidar and sar data. Annu. Rev. Control 2021, 51, 236–253. [Google Scholar] [CrossRef]

- Analytics, C. Web of Science Core Collection. 2024. Available online: https://www.webofscience.com (accessed on 24 October 2012).

- Elsevier. ScienceDirect Home Page. 2024. Available online: https://www.sciencedirect.com (accessed on 24 October 2012).

- Elsevier. Scopus Document Search. 2024. Available online: https://www.scopus.com (accessed on 24 October 2012).

- Gajjar, H.; Sanyal, S.; Shah, M. A comprehensive study on lane detecting autonomous car using computer vision. Expert Syst. Appl. 2023, 233, 120929. [Google Scholar] [CrossRef]

- Soilán, M.; González-Aguilera, D.; del Campo-Sánchez, A.; Hernández-López, D.; Del Pozo, S. Road marking degradation analysis using 3D point cloud data acquired with a low-cost Mobile Mapping System. Autom. Constr. 2022, 141, 104446. [Google Scholar] [CrossRef]

- Wang, C.; Wen, C.; Dai, Y.; Yu, S.; Liu, M. Urban 3D modeling using mobile laser scanning: A review. Virtual Real. Intell. Hardw. 2020, 2, 175–212. [Google Scholar] [CrossRef]

- Movia, A.; Beinat, A.; Crosilla, F. Shadow detection and removal in RGB VHR images for land use unsupervised classification. ISPRS J. Photogramm. Remote Sens. 2016, 119, 485–495. [Google Scholar] [CrossRef]

- Lin, Y.; Zhang, H.; Li, G.; Wang, T.; Wan, L.; Lin, H. Improving Impervious Surface Extraction With Shadow-Based Sparse Representation From Optical, SAR, and LiDAR Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 2417–2428. [Google Scholar] [CrossRef]

- Eslamizade, F.; Rastiveis, H.; Zahraee, N.K.; Jouybari, A.; Shams, A. Decision-level fusion of satellite imagery and LiDAR data for post-earthquake damage map generation in Haiti. Arab. J. Geosci. 2021, 14, 1120. [Google Scholar] [CrossRef]

- Dalla Mura, M.; Prasad, S.; Pacifici, F.; Gamba, P.; Chanussot, J.; Benediktsson, J.A. Challenges and Opportunities of Multimodality and Data Fusion in Remote Sensing. Proc. IEEE 2015, 103, 1585–1601. [Google Scholar] [CrossRef]

- Han, Y.; Qin, R.; Huang, X. Assessment of dense image matchers for digital surface model generation using airborne and spaceborne images—An update. Photogramm. Rec. 2020, 35, 58–80. [Google Scholar] [CrossRef]

- Ryu, K.B.; Kang, S.J.; Jeong, S.I.; Jeong, M.S.; Park, K.R. CN4SRSS: Combined network for super-resolution reconstruction and semantic segmentation in frontal-viewing camera images of vehicle. Eng. Appl. Artif. Intell. 2024, 130, 107673. [Google Scholar] [CrossRef]

- Hüthwohl, P.; Brilakis, I. Detecting healthy concrete surfaces. Adv. Eng. Inform. 2018, 37, 150–162. [Google Scholar] [CrossRef]

- Jian, L.; Li, Z.; Yang, X.; Wu, W.; Ahmad, A.; Jeon, G. Combining Unmanned Aerial Vehicles With Artificial-Intelligence Technology for Traffic-Congestion Recognition: Electronic Eyes in the Skies to Spot Clogged Roads. IEEE Consum. Electron. Mag. 2019, 8, 81–86. [Google Scholar] [CrossRef]

- Shawky, M.; Alsobky, A.; Al Sobky, A.; Hassan, A. Traffic safety assessment for roundabout intersections using drone photography and conflict technique. Ain Shams Eng. J. 2023, 14, 102115. [Google Scholar] [CrossRef]

- Gupta, A.; Mhala, P.; Mangal, M.; Yadav, K.; Sharma, S. Traffic Sign Sensing: A Deep Learning approach for enhanced Road Safety. Preprint (Version 1). 2024. Available online: https://www.researchsquare.com/article/rs-3889986/v1 (accessed on 12 March 2025). [CrossRef]

- Tian, B.; Yao, Q.; Gu, Y.; Wang, K.; Li, Y. Video processing techniques for traffic flow monitoring: A survey. In Proceedings of the 2011 14th International IEEE Conference on Intelligent Transportation Systems (ITSC), Washington, DC, USA, 5–7 October 2011; pp. 1103–1108. [Google Scholar] [CrossRef]

- Ke, R.; Li, Z.; Tang, J.; Pan, Z.; Wang, Y. Real-Time Traffic Flow Parameter Estimation From UAV Video Based on Ensemble Classifier and Optical Flow. IEEE Trans. Intell. Transp. Syst. 2019, 20, 54–64. [Google Scholar] [CrossRef]

- Eskandari Torbaghan, M.; Sasidharan, M.; Reardon, L.; Muchanga-Hvelplund, L.C. Understanding the potential of emerging digital technologies for improving road safety. Accid. Anal. Prev. 2022, 166, 106543. [Google Scholar] [CrossRef]

- Hou, Y.; Biljecki, F. A comprehensive framework for evaluating the quality of street view imagery. Int. J. Appl. Earth Obs. Geoinf. 2022, 115, 103094. [Google Scholar] [CrossRef]

- Yun, T.; Li, J.; Ma, L.; Zhou, J.; Wang, R.; Eichhorn, M.P.; Zhang, H. Status, advancements and prospects of deep learning methods applied in forest studies. Int. J. Appl. Earth Obs. Geoinf. 2024, 131, 103938. [Google Scholar] [CrossRef]

- Bayomi, N.; Fernandez, J.E. Eyes in the Sky: Drones Applications in the Built Environment under Climate Change Challenges. Drones 2023, 7, 637. [Google Scholar] [CrossRef]

- Tran, T.H.P.; Jeon, J.W. Accurate Real-Time Traffic Light Detection Using YOLOv4. In Proceedings of the 2020 IEEE International Conference on Consumer Electronics—Asia (ICCE-Asia), Seoul, Republic of Korea, 1–3 November 2020; pp. 1–4. [Google Scholar] [CrossRef]

- Singh, R.; Danish, M.; Purohit, V.; Siddiqui, A. Traffic Sign Detection using YOLOv4. Int. J. Creat. Res. Thoughts 2021, 9, i891–i897. [Google Scholar]

- Wang, Z.; Men, S.; Bai, Y.; Yuan, Y.; Wang, J.; Wang, K.; Zhang, L. Improved Small Object Detection Algorithm CRL-YOLOv5. Sensors 2024, 24, 6437. [Google Scholar] [CrossRef]

- Feng, F.; Hu, Y.; Li, W.; Yang, F. Improved YOLOv8 algorithms for small object detection in aerial imagery. J. King Saud Univ.-Comput. Inf. Sci. 2024, 36, 102113. [Google Scholar] [CrossRef]

- Flores-Calero, M.; Astudillo, C.A.; Guevara, D.; Maza, J.; Lita, B.S.; Defaz, B.; Ante, J.S.; Zabala-Blanco, D.; Armingol Moreno, J.M. Traffic Sign Detection and Recognition Using YOLO Object Detection Algorithm: A Systematic Review. Mathematics 2024, 12, 297. [Google Scholar] [CrossRef]

- Cao, J.; Li, P.; Zhang, H.; Su, G. An Improved YOLOv4 Lightweight Traffic Sign Detection Algorithm. IAENG Int. J. Comput. Sci. 2023, 50, 825–831. [Google Scholar]

- Nepal, U.; Eslamiat, H. Comparing YOLOv3, YOLOv4 and YOLOv5 for Autonomous Landing Spot Detection in Faulty UAVs. Sensors 2022, 22, 464. [Google Scholar] [CrossRef]

- Elamin, A.; El-Rabbany, A. UAV-Based Multi-Sensor Data Fusion for Urban Land Cover Mapping Using a Deep Convolutional Neural Network. Remote Sens. 2022, 14, 4298. [Google Scholar] [CrossRef]

- Jiang, F.; Ma, L.; Broyd, T.; Chen, W.; Luo, H. Building digital twins of existing highways using map data based on engineering expertise. Autom. Constr. 2022, 134, 104081. [Google Scholar] [CrossRef]

- Truong-Hong, L.; Lindenbergh, R. Automatically extracting surfaces of reinforced concrete bridges from terrestrial laser scanning point clouds. Autom. Constr. 2022, 135, 104127. [Google Scholar] [CrossRef]

- Gao, Q.; Shen, X. ThickSeg: Efficient semantic segmentation of large-scale 3D point clouds using multi-layer projection. Image Vis. Comput. 2021, 108, 104161. [Google Scholar] [CrossRef]

- Kaijaluoto, R.; Kukko, A.; El Issaoui, A.; Hyyppä, J.; Kaartinen, H. Semantic segmentation of point cloud data using raw laser scanner measurements and deep neural networks. ISPRS Open J. Photogramm. Remote Sens. 2022, 3, 100011. [Google Scholar] [CrossRef]

- Li, W.; Zheng, T.; Yang, Z.; Li, M.; Sun, C.; Yang, X. Classification and detection of insects from field images using deep learning for smart pest management: A systematic review. Ecol. Inform. 2021, 66, 101460. [Google Scholar] [CrossRef]

- Gao, X.; Bai, X.; Zhou, K. Research on the Application of Drone Remote Sensing and Target Detection in Civilian Fields. In Proceedings of the 2023 International Conference on High Performance Big Data and Intelligent Systems (HDIS), Macau, China, 6–8 December 2023; pp. 108–112. [Google Scholar] [CrossRef]

- Boudiaf, A.; Sumaiti, A.A.; Dias, J. Image-based Obstacle Avoidance using 3DConv Network for Rocky Environment. In Proceedings of the 2022 IEEE International Symposium on Robotic and Sensors Environments (ROSE), Abu Dhabi, United Arab Emirates, 14–15 November 2022; pp. 01–07. [Google Scholar] [CrossRef]

- Wang, Y.; Chen, Q.; Zhu, Q.; Liu, L.; Li, C.; Zheng, D. A Survey of Mobile Laser Scanning Applications and Key Techniques over Urban Areas. Remote Sens. 2019, 11, 1540. [Google Scholar] [CrossRef]

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Los Alamitos, CA, USA, 27–30 June 2016; pp. 3213–3223. [Google Scholar] [CrossRef]

- Le, X.; Wang, Y.; Jo, J. Combining Deep and Handcrafted Image Features for Vehicle Classification in Drone Imagery. In Proceedings of the 2018 Digital Image Computing: Techniques and Applications (DICTA), Canberra, ACT, Australia, 10–13 December 2018; pp. 1–6. [Google Scholar] [CrossRef]

- Acatay, O.; Sommer, L.; Schumann, A.; Beyerer, J. Comprehensive Evaluation of Deep Learning based Detection Methods for Vehicle Detection in Aerial Imagery. In Proceedings of the 2018 15th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Auckland, New Zealand, 27–30 November 2018; pp. 1–6. [Google Scholar] [CrossRef]

- Sommer, L.W.; Schuchert, T.; Beyerer, J. Fast Deep Vehicle Detection in Aerial Images. In Proceedings of the 2017 IEEE Winter Conference on Applications of Computer Vision (WACV), Santa Rosa, CA, USA, 24–31 March 2017; pp. 311–319. [Google Scholar] [CrossRef]

- Seidaliyeva, U.; Ilipbayeva, L.; Taissariyeva, K.; Smailov, N.; Matson, E.T. Advances and Challenges in Drone Detection and Classification Techniques: A State-of-the-Art Review. Sensors 2024, 24, 125. [Google Scholar] [CrossRef]

- Xiuling, Z.; Huijuan, W.; Yu, S.; Gang, C.; Suhua, Z.; Quanbo, Y. Starting from the structure: A review of small object detection based on deep learning. Image Vis. Comput. 2024, 146, 105054. [Google Scholar] [CrossRef]

- Ruan, J.; Cui, H.; Huang, Y.; Li, T.; Wu, C.; Zhang, K. A review of occluded objects detection in real complex scenarios for autonomous driving. Green Energy Intell. Transp. 2023, 2, 100092. [Google Scholar] [CrossRef]

- Akshatha, K.R.; Karunakar, A.K.; Satish Shenoy, B.; Phani Pavan, K.; Chinmay, V.D. Manipal-UAV person detection dataset: A step towards benchmarking dataset and algorithms for small object detection. ISPRS J. Photogramm. Remote Sens. 2023, 195, 77–89. [Google Scholar] [CrossRef]

- Yasmine, G.; Maha, G.; Hicham, M. Anti-drone systems: An attention based improved YOLOv7 model for a real-time detection and identification of multi-airborne target. Intell. Syst. Appl. 2023, 20, 200296. [Google Scholar] [CrossRef]

- Souza, B.J.; Stefenon, S.F.; Singh, G.; Freire, R.Z. Hybrid-YOLO for classification of insulators defects in transmission lines based on UAV. Int. J. Electr. Power Energy Syst. 2023, 148, 108982. [Google Scholar] [CrossRef]

- Bhavsar, Y.M.; Zaveri, M.S.; Raval, M.S.; Zaveri, S.B. Vision-based investigation of road traffic and violations at urban roundabout in India using UAV video: A case study. Transp. Eng. 2023, 14, 100207. [Google Scholar] [CrossRef]

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475. [Google Scholar] [CrossRef]

- Tabernik, D.; Muhovič, J.; Skočaj, D. Dense center-direction regression for object counting and localization with point supervision. Pattern Recognit. 2024, 153, 110540. [Google Scholar] [CrossRef]

- Koelle, M.; Laupheimer, D.; Walter, V.; Haala, N.; Soergel, U. Which 3D data representation does the crowd like best? Crowd-based active learning for coupled semantic segmentation of point clouds and textured meshes. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 2, 93–100. [Google Scholar] [CrossRef]

- Lo Bianco, L.C.; Beltrán, J.; López, G.F.; García, F.; Al-Kaff, A. Joint semantic segmentation of road objects and lanes using Convolutional Neural Networks. Robot. Auton. Syst. 2020, 133, 103623. [Google Scholar] [CrossRef]

- Villareal, M.K.; Tongco, A.F. Multi-sensor Fusion Workflow for Accurate Classification and Mapping of Sugarcane Crops. Eng. Technol. Appl. Sci. Res. 2019, 9, 4085–4091. [Google Scholar] [CrossRef]

- Quan, Y.; Tong, Y.; Feng, W.; Dauphin, G.; Huang, W.; Zhu, W.; Xing, M. Relative Total Variation Structure Analysis-Based Fusion Method for Hyperspectral and LiDAR Data Classification. Remote Sens. 2021, 13, 1143. [Google Scholar] [CrossRef]

- Ahmed, N.; Islam, M.N.; Tuba, A.S.; Mahdy, M.; Sujauddin, M. Solving visual pollution with deep learning: A new nexus in environmental management. J. Environ. Manag. 2019, 248, 109253. [Google Scholar] [CrossRef]

- Alizadeh Kharazi, B.; Behzadan, A.H. Flood depth mapping in street photos with image processing and deep neural networks. Comput. Environ. Urban Syst. 2021, 88, 101628. [Google Scholar] [CrossRef]

- Kwan, C.; Gribben, D.; Ayhan, B.; Li, J.; Bernabe, S.; Plaza, A. An Accurate Vegetation and Non-Vegetation Differentiation Approach Based on Land Cover Classification. Remote Sens. 2020, 12, 3880. [Google Scholar] [CrossRef]

- Fernández-Alvarado, J.; Fernández-Rodríguez, S. 3D environmental urban BIM using LiDAR data for visualisation on Google Earth. Autom. Constr. 2022, 138, 104251. [Google Scholar] [CrossRef]

- Xiao, W.; Cao, H.; Tang, M.; Zhang, Z.; Chen, N. 3D urban object change detection from aerial and terrestrial point clouds: A review. Int. J. Appl. Earth Obs. Geoinf. 2023, 118, 103258. [Google Scholar] [CrossRef]

- Lugo, G.; Li, R.; Chauhan, R.; Wang, Z.; Tiwary, P.; Pandey, U.; Patel, A.; Rombough, S.; Schatz, R.; Cheng, I. LiSurveying: A high-resolution TLS-LiDAR benchmark. Comput. Graph. 2022, 107, 116–130. [Google Scholar] [CrossRef]

- Balali, V.; Jahangiri, A.; Machiani, S.G. Multi-class US traffic signs 3D recognition and localization via image-based point cloud model using color candidate extraction and texture-based recognition. Adv. Eng. Inform. 2017, 32, 263–274. [Google Scholar] [CrossRef]

- Gao, C.; Guo, M.; Zhao, J.; Cheng, P.; Zhou, Y.; Zhou, T.; Guo, K. An automated multi-constraint joint registration method for mobile LiDAR point cloud in repeated areas. Measurement 2023, 222, 113620. [Google Scholar] [CrossRef]

- Bolkas, D.; Guthrie, K.; Durrutya, L. sUAS LiDAR and Photogrammetry Evaluation in Various Surfaces for Surveying and Mapping. J. Surv. Eng. 2024, 150, 04023021. [Google Scholar] [CrossRef]

- Hasanpour Zaryabi, E.; Saadatseresht, M.; Ghanbari Parmehr, E. An object-based classification framework for als point cloud in urban areas. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, 10, 279–286. [Google Scholar] [CrossRef]

- Fang, L.; You, Z.; Shen, G.; Chen, Y.; Li, J. A joint deep learning network of point clouds and multiple views for roadside object classification from lidar point clouds. ISPRS J. Photogramm. Remote Sens. 2022, 193, 115–136. [Google Scholar] [CrossRef]

- Suleymanoglu, B.; Gurturk, M.; Yilmaz, Y.; Soycan, A.; Soycan, M. Comparison of Unmanned Aerial Vehicle-LiDAR and Image-Based Mobile Mapping System for Assessing Road Geometry Parameters via Digital Terrain Models. Transp. Res. Rec. 2023, 2677, 617–632. [Google Scholar] [CrossRef]

- Naimaee, R.; Saadatseresht, M.; Omidalizarandi, M. Automatic extraction of control points from 3D Lidar mobile mapping and UAV imagery for aerial triangulation. In ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Proceedings of the GeoSpatial Conference 2022—Joint 6th SMPR and 4th GIResearch Conferences, Tehran, Iran, 19–22 February 2023; Curran: Red Hook, NY, USA, 2023; Volume X-4/W1-2022, pp. 581–588. [Google Scholar] [CrossRef]

- Ma, H.; Liu, Y.; Ren, Y.; Wang, D.; Yu, L.; Yu, J. Improved CNN Classification Method for Groups of Buildings Damaged by Earthquake, Based on High Resolution Remote Sensing Images. Remote Sens. 2020, 12, 260. [Google Scholar] [CrossRef]

- Zhu, P.; Wen, L.; Du, D.; Bian, X.; Fan, H.; Hu, Q.; Ling, H. Detection and Tracking Meet Drones Challenge. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 7380–7399. [Google Scholar] [CrossRef]

- Pi, Y.; Nath, N.D.; Behzadan, A.H. Convolutional neural networks for object detection in aerial imagery for disaster response and recovery. Adv. Eng. Inform. 2020, 43, 101009. [Google Scholar] [CrossRef]

- Zhou, T.; Hasheminasab, S.M.; Ravi, R.; Habib, A. LiDAR-Aided Interior Orientation Parameters Refinement Strategy for Consumer-Grade Cameras Onboard UAV Remote Sensing Systems. Remote Sens. 2020, 12, 2268. [Google Scholar] [CrossRef]

- Bakirci, M. Utilizing YOLOv8 for enhanced traffic monitoring in intelligent transportation systems (ITS) applications. Digit. Signal Process. 2024, 152, 104594. [Google Scholar] [CrossRef]

- Lin, Y.C.; Habib, A. Semantic segmentation of bridge components and road infrastructure from mobile LiDAR data. ISPRS Open J. Photogramm. Remote Sens. 2022, 6, 100023.

- Vassilev, H.; Laska, M.; Blankenbach, J. Uncertainty-aware point cloud segmentation for infrastructure projects using Bayesian deep learning. Autom. Constr. 2024, 164, 105419.

- Guan, H.; Lei, X.; Yu, Y.; Zhao, H.; Peng, D.; Marcato Junior, J.; Li, J. Road marking extraction in UAV imagery using attentive capsule feature pyramid network. Int. J. Appl. Earth Obs. Geoinf. 2022, 107, 102677.

- Balado, J.; González, E.; Arias, P.; Castro, D. Novel Approach to Automatic Traffic Sign Inventory Based on Mobile Mapping System Data and Deep Learning. Remote Sens. 2020, 12, 442.

- Sun, Z.L.; Wang, H.; Lau, W.S.; Seet, G.; Wang, D. Application of BW-ELM model on traffic sign recognition. Neurocomputing 2014, 128, 153–159.

- Cheng, J.C.; Wang, M. Automated detection of sewer pipe defects in closed-circuit television images using deep learning techniques. Autom. Constr. 2018, 95, 155–171.

- Firmansyah, H.R.; Sarli, P.W.; Twinanda, A.P.; Santoso, D.; Imran, I. Building typology classification using convolutional neural networks utilizing multiple ground-level image process for city-scale rapid seismic vulnerability assessment. Eng. Appl. Artif. Intell. 2024, 131, 107824.

- Buján, S.; Guerra-Hernández, J.; González-Ferreiro, E.; Miranda, D. Forest Road Detection Using LiDAR Data and Hybrid Classification. Remote Sens. 2021, 13, 393.

- Ma, X.; Li, X.; Tang, X.; Zhang, B.; Yao, R.; Lu, J. Deconvolution Feature Fusion for traffic signs detection in 5G driven unmanned vehicle. Phys. Commun. 2021, 47, 101375.

- Qiu, Z.; Martínez-Sánchez, J.; Brea, V.M.; López, P.; Arias, P. Low-cost mobile mapping system solution for traffic sign segmentation using Azure Kinect. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102895.

- Saovana, N.; Yabuki, N.; Fukuda, T. Automated point cloud classification using an image-based instance segmentation for structure from motion. Autom. Constr. 2021, 129, 103804.

- Zheng, J.; Chen, L.; Wang, J.; Chen, Q.; Huang, X.; Jiang, L. Knowledge distillation with T-Seg guiding for lightweight automated crack segmentation. Autom. Constr. 2024, 166, 105585.

- Wei, W.; Shu, Y.; Liu, J.; Dong, L.; Jia, L.; Wang, J.; Guo, Y. Research on a hierarchical feature-based contour extraction method for spatial complex truss-like structures in aerial images. Eng. Appl. Artif. Intell. 2024, 127, 107313.

- Zhang, Y.; Carballo, A.; Yang, H.; Takeda, K. Perception and sensing for autonomous vehicles under adverse weather conditions: A survey. ISPRS J. Photogramm. Remote Sens. 2023, 196, 146–177.

- Aslan, M.F.; Durdu, A.; Yusefi, A.; Yilmaz, A. HVIOnet: A deep learning based hybrid visual–inertial odometry approach for unmanned aerial system position estimation. Neural Netw. 2022, 155, 461–474.

- White, C.T.; Reckling, W.; Petrasova, A.; Meentemeyer, R.K.; Mitasova, H. Rapid-DEM: Rapid Topographic Updates through Satellite Change Detection and UAS Data Fusion. Remote Sens. 2022, 14, 1718.

- Chen, S.; Shi, W.; Zhou, M.; Zhang, M.; Chen, P. Automatic Building Extraction via Adaptive Iterative Segmentation With LiDAR Data and High Spatial Resolution Imagery Fusion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 2081–2095.

- Chow, J.K.; Liu, K.F.; Tan, P.S.; Su, Z.; Wu, J.; Li, Z.; Wang, Y.H. Automated defect inspection of concrete structures. Autom. Constr. 2021, 132, 103959.

- Dong, H.; Chen, X.; Särkkä, S.; Stachniss, C. Online pole segmentation on range images for long-term LiDAR localization in urban environments. Robot. Auton. Syst. 2023, 159, 104283.

- Gupta, A.; Anpalagan, A.; Guan, L.; Khwaja, A.S. Deep learning for object detection and scene perception in self-driving cars: Survey, challenges, and open issues. Array 2021, 10, 100057.

- Xie, C.; Liu, Q.; Chen, B.; Hao, Z. Evaluation and analysis of feature point detection methods based on vSLAM systems. Image Vis. Comput. 2024, 146, 105015.

- Wen, L.H.; Jo, K.H. Deep learning-based perception systems for autonomous driving: A comprehensive survey. Neurocomputing 2022, 489, 255–270.

- Balaska, V.; Bampis, L.; Gasteratos, A. Self-localization based on terrestrial and satellite semantics. Eng. Appl. Artif. Intell. 2022, 111, 104824.

- Zhang, P.; Du, P.; Lin, C.; Wang, X.; Li, E.; Xue, Z.; Bai, X. A Hybrid Attention-Aware Fusion Network (HAFNet) for Building Extraction from High-Resolution Imagery and LiDAR Data. Remote Sens. 2020, 12, 3764.

- Benedek, C.; Majdik, A.; Nagy, B.; Rozsa, Z.; Sziranyi, T. Positioning and perception in LIDAR point clouds. Digit. Signal Process. 2021, 119, 103193.

- Luo, S.; Wen, S.; Zhang, L.; Lan, Y.; Chen, X. Extraction of crop canopy features and decision-making for variable spraying based on unmanned aerial vehicle LiDAR data. Comput. Electron. Agric. 2024, 224, 109197.

- Behley, J.; Stachniss, C. Efficient Surfel-Based SLAM using 3D Laser Range Data in Urban Environments. In Robotics: Science and Systems XIV; 2018; Available online: https://www.roboticsproceedings.org/rss14/p16.pdf (accessed on 12 March 2025).

- Ouattara, I.; Korhonen, V.; Visala, A. LiDAR-odometry based UAV pose estimation in young forest environment. IFAC-PapersOnLine 2022, 55, 95–100.

- Häne, C.; Heng, L.; Lee, G.H.; Fraundorfer, F.; Furgale, P.; Sattler, T.; Pollefeys, M. 3D visual perception for self-driving cars using a multi-camera system: Calibration, mapping, localization, and obstacle detection. Image Vis. Comput. 2017, 68, 14–27.

- Narazaki, Y.; Hoskere, V.; Chowdhary, G.; Spencer, B.F. Vision-based navigation planning for autonomous post-earthquake inspection of reinforced concrete railway viaducts using unmanned aerial vehicles. Autom. Constr. 2022, 137, 104214.

- Cong, Y.; Chen, C.; Yang, B.; Liang, F.; Ma, R.; Zhang, F. CAOM: Change-aware online 3D mapping with heterogeneous multi-beam and push-broom LiDAR point clouds. ISPRS J. Photogramm. Remote Sens. 2023, 195, 204–219.

- Qian, B.; Al Said, N.; Dong, B. New technologies for UAV navigation with real-time pattern recognition. Ain Shams Eng. J. 2024, 15, 102480.

- Chouhan, R.; Dhamaniya, A.; Antoniou, C. Analysis of driving behavior in weak lane disciplined traffic at the merging and diverging sections using unmanned aerial vehicle data. Phys. A Stat. Mech. Its Appl. 2024, 646, 129865.

- Fu, R.; Chen, C.; Yan, S.; Heidari, A.A.; Wang, X.; Escorcia-Gutierrez, J.; Mansour, R.F.; Chen, H. Gaussian similarity-based adaptive dynamic label assignment for tiny object detection. Neurocomputing 2023, 543, 126285.

- Cano-Ortiz, S.; Lloret Iglesias, L.; Martinez Ruiz del Árbol, P.; Lastra-González, P.; Castro-Fresno, D. An end-to-end computer vision system based on deep learning for pavement distress detection and quantification. Constr. Build. Mater. 2024, 416, 135036.

- Buters, T.M.; Bateman, P.W.; Robinson, T.; Belton, D.; Dixon, K.W.; Cross, A.T. Methodological Ambiguity and Inconsistency Constrain Unmanned Aerial Vehicles as A Silver Bullet for Monitoring Ecological Restoration. Remote Sens. 2019, 11, 1180.

- Alsadik, B.; Remondino, F. Flight Planning for LiDAR-Based UAS Mapping Applications. ISPRS Int. J. Geo-Inf. 2020, 9, 378.

- Bakuła, K.; Pilarska, M.; Salach, A.; Kurczyński, Z. Detection of Levee Damage Based on UAS Data—Optical Imagery and LiDAR Point Clouds. ISPRS Int. J. Geo-Inf. 2020, 9, 248.

- Yasin Yiğit, A.; Uysal, M. Virtual reality visualisation of automatic crack detection for bridge inspection from 3D digital twin generated by UAV photogrammetry. Measurement 2025, 242, 115931.

- Nath, N.D.; Cheng, C.S.; Behzadan, A.H. Drone mapping of damage information in GPS-Denied disaster sites. Adv. Eng. Inform. 2022, 51, 101450.

- Hyyppä, E.; Muhojoki, J.; Yu, X.; Kukko, A.; Kaartinen, H.; Hyyppä, J. Efficient coarse registration method using translation- and rotation-invariant local descriptors towards fully automated forest inventory. ISPRS Open J. Photogramm. Remote Sens. 2021, 2, 100007.

- Soilán, M.; Truong-Hong, L.; Riveiro, B.; Laefer, D. Automatic extraction of road features in urban environments using dense ALS data. Int. J. Appl. Earth Obs. Geoinf. 2018, 64, 226–236.

- Fu, Y.; Niu, Y.; Wang, L.; Li, W. Individual-Tree Segmentation from UAV–LiDAR Data Using a Region-Growing Segmentation and Supervoxel-Weighted Fuzzy Clustering Approach. Remote Sens. 2024, 16, 608.

- Guan, H.; Sun, X.; Su, Y.; Hu, T.; Wang, H.; Wang, H.; Peng, C.; Guo, Q. UAV-lidar aids automatic intelligent powerline inspection. Int. J. Electr. Power Energy Syst. 2021, 130, 106987.

- Li, X.; Liu, C.; Wang, Z.; Xie, X.; Li, D.; Xu, L. Airborne LiDAR: State-of-the-art of system design, technology and application. Meas. Sci. Technol. 2020, 32, 032002.

- Tang, H.; Kamei, S.; Morimoto, Y. Data Augmentation Methods for Enhancing Robustness in Text Classification Tasks. Algorithms 2023, 16, 59.

- AbuKhait, J. US Road Sign Detection and Visibility Estimation using Artificial Intelligence Techniques. Int. J. Adv. Comput. Sci. Appl. 2024, 15.

- Wang, W.; He, H.; Ma, C. An improved Deeplabv3+ model for semantic segmentation of urban environments targeting autonomous driving. Int. J. Comput. Commun. Control 2023, 18, e5879.

- Zayani, H.M. Unveiling the Potential of YOLOv9 through Comparison with YOLOv8. Int. J. Intell. Syst. Appl. Eng. 2024, 12, 2845–2854.

- Mei, J.; Zhu, W. BGF-YOLOv10: Small Object Detection Algorithm from Unmanned Aerial Vehicle Perspective Based on Improved YOLOv10. Sensors 2024, 24, 6911.

- Cheng, S.; Han, Y.; Wang, Z.; Liu, S.; Yang, B.; Li, J. An Underwater Object Recognition System Based on Improved YOLOv11. Electronics 2025, 14, 201.

- Mukhamediev, R.I.; Symagulov, A.; Kuchin, Y.; Zaitseva, E.; Bekbotayeva, A.; Yakunin, K.; Assanov, I.; Levashenko, V.; Popova, Y.; Akzhalova, A.; et al. Review of Some Applications of Unmanned Aerial Vehicles Technology in the Resource-Rich Country. Appl. Sci. 2021, 11, 10171.

- Ma, L.; Li, Y.; Li, J.; Wang, C.; Wang, R.; Chapman, M.A. Mobile Laser Scanned Point-Clouds for Road Object Detection and Extraction: A Review. Remote Sens. 2018, 10, 1531.

- Shah, S.F.A.; Mazhar, T.; Al Shloul, T.; Shahzad, T.; Hu, Y.C.; Mallek, F.; Hamam, H. Applications, challenges, and solutions of unmanned aerial vehicles in smart city using blockchain. PeerJ Comput. Sci. 2024, 10, e1776.

| Database | Search Equation |

|---|---|

| Web of Science | TS= ((“traffic sign*” OR “road sign” OR “signage”) AND (“detect” OR “inventor” OR “manage”) AND (“drone” OR “UAV” OR “unmanned aerial vehicle”) AND (“artificial intelligence” OR “machine learning” OR “deep learning”) NOT (“education” OR “medical”)) |

| ScienceDirect | (“traffic sign” OR “road sign” OR “signage”) AND (“detection” OR “inventory”) AND (“drones” OR “UAV”) AND (“artificial intelligence” OR “machine learning”) |

| Scopus | TITLE-ABS-KEY ((signage AND detection) OR (traffic AND sign AND inventory) OR (lidar)) AND (drone OR uav) AND (“machine learning” OR “deep learning”) AND (geospatial AND analysis OR gis OR “geographic information systems”) AND (LIMIT-TO (LANGUAGE, “English”)) |
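The Web of Science and ScienceDirect equations above share one pattern: synonyms are joined with OR inside a concept group, groups are joined with AND, and unwanted topics are excluded with NOT. A minimal Python sketch of that pattern follows. The function name `build_query` and its parameters are hypothetical, and the sketch emits straight quotes and no database-specific field tags such as `TS=` or `TITLE-ABS-KEY`, which would have to be added per database.

```python
def build_query(groups, excluded=()):
    """Assemble a boolean search string (hypothetical helper, not from the review).

    groups   -- list of concept groups; terms in a group are joined with OR
    excluded -- optional terms appended as a single NOT (...) block
    """
    def or_block(terms):
        # Quote every term so multi-word phrases are searched as phrases.
        return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"

    query = " AND ".join(or_block(g) for g in groups)
    if excluded:
        query += " NOT " + or_block(excluded)
    return query


if __name__ == "__main__":
    # Term groups copied from the ScienceDirect row of the table.
    print(build_query([
        ["traffic sign", "road sign", "signage"],
        ["detection", "inventory"],
        ["drones", "UAV"],
        ["artificial intelligence", "machine learning"],
    ]))
```

Keeping the concept groups as data makes it easier to apply the same terms consistently across databases and to document any change to the search strategy.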

| Model | Urban Accuracy (%) | Rural Accuracy (%) | Urban Recall (%) | Rural Recall (%) | Ref. |

|---|---|---|---|---|---|

| Faster R-CNN | 87 | 90 | 83 | 86 | [1] |

| YOLOv4 | 92 | 88 | 85 | 82 | [2] |

| | YOLOv3 | YOLOv4 | YOLOv5 |

|---|---|---|---|

| Neural network type | Fully convolutional | Fully convolutional | Fully convolutional |

| Backbone feature extractor | Darknet-53 | CSPDarknet53 | CSPDarknet53 |

| Loss function | Binary cross-entropy | Binary cross-entropy | Binary cross-entropy and Logits loss function |

| Neck | FPN | SPP and PANet | PANet |

| Head | YOLO layer | YOLO layer | YOLO layer |

| UAVs Type | Sensors Used | AI Algorithm | Metric | Application Domain | Key Results | Ref. |

|---|---|---|---|---|---|---|

| DJI Zenmuse P1 | High-resolution camera | MSA-CenterNet | mAP: 86.7%; Precision: 89.2%; Recall: 90.6% | Detection of infrastructure along roads | Significant improvement in small object detection; outperformed other algorithms like SSD, Faster R-CNN, RetinaNet, and YOLOv5 in most categories | [3] |

| DJI Matrice 600 replica | RGB camera | Faster R-CNN | mAP: 56.30%; AP: 43.8% (all areas), 60.5% (small areas), 51.8% (medium areas) | Traffic sign detection in civil infrastructures | Better performance on signs; creation of new dataset with greater sign variety | [17] |

| Multirotor | RGB camera | Improved YOLOv4 | mAP: 52.76% (VisDrone2019); mAP: 96.98% (S2TLD) | Small object detection in aerial images and urban traffic | Reduces parameters; improves small object detection; outperforms original YOLOv4 | [18] |

| Multirotor | LiDAR and Multispectral Camera | SCDNET | 80% | Rural roads | Improvement in signage detection accuracy | [21] |

| Multirotor | RGB camera | Shadow detection, EAOP | mAP: 85% | Various | Segmentation of complex images | [49] |

| Multirotor | Front camera | DeepLab v3+ | Accuracy: 93.14% | Autonomous vehicles | Accuracy in segmentation of low-resolution images | [54] |

| UAVs | High-resolution cameras (implied) | Improved YOLOv8 | Improvements in mAP@0.5 and mAP@0.5:0.95 | Drone aerial target detection | Enhanced precision; lighter model; superior detection; optimized efficiency | [68] |

| Multirotor | LiDAR | CRG/VRG | F1-Score: 0.93 | Bridges and roads | Automatic segmentation and detection of road infrastructure | [74] |

| Custom UAVs | LiDAR | 3D CNNs (SS-3DCNN) | mIoU: 80.1% | Forest environments | Semantic segmentation of point clouds applicable to road signage | [75] |

| DJI Inspire 2 | Cameras | CNNs | 95%; 85% | Visual pollution classification | Model achieved high accuracy; applicable to live videos/images | [98] |

| UAVs-LiDAR and MPS | LiDAR; GNSS; IMU; GoPro Hero 7; GNSS Topcon HyperPro | CSF; SfM | RMSE: 1.8–2.3 cm; Average deviation: 0.18–0.19% | Road geometry analysis | Precise extraction of geometric parameters; MPS as viable alternative to LiDAR | [109] |

| DJI Phantom 4 Pro | DJI camera; Velodyne Puck LiDAR; SBG Ellipse-D IMU | SIFT; SfM; PCA; Sparse Bundle Adjustment | RMSE: 0.5 pixels; RMSE: 5 cm | UAVs photogrammetry; Mobile LiDAR mapping | Automatic extraction of control points; improved accuracy; reduced acquisition time | [110] |

| N/A | High-resolution aerial images | CNNs Inception V3 | Accuracy: 90.07%, Kappa: 0.81 | Post-earthquake building group damage classification | Effective classification of building damage at block level | [111] |

| Various UAVs | Cameras | Various algorithms | , , | Object detection in images | 10,209 images; 6471 training, 548 validation, 1580 test-challenge, 1610 test-dev | [112] |

| UAVs and helicopters | RGB cameras | YOLOv2 | 80.69% mAP for helicopters; 74.48% mAP for UAVs | Object detection in post-disaster aerial images | Better performance with balanced data and pre-training on VOC; generalizable model to new disasters | [113] |

| Multi-rotor | RGB, RF, Audio, LiDAR | CNNs, YOLO, SSD, Faster R-CNN | Accuracy: 70–93% | UAVs detection, infrastructure inspection, forestry | Improved detection and classification; real-time processing | [114] |

| UAVs | High-resolution cameras | YOLOv8 | Increased precision, faster processing speeds | Traffic monitoring and ITS | YOLOv8 achieved higher accuracy and speed in processing images for vehicle detection compared to YOLOv5 | [115] |
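Several rows above report mAP@0.5, precision, recall, or F1-score. As a reminder of how these quantities relate, the following Python sketch computes the intersection over union (IoU) of two axis-aligned boxes, which is the overlap measure behind the 0.5 threshold in mAP@0.5, and the F1-score as the harmonic mean of precision and recall. The function names and the `(x_min, y_min, x_max, y_max)` box layout are illustrative assumptions and are not taken from any of the reviewed studies.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both as fractions in [0, 1])."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # A detection counts as a true positive at mAP@0.5 when IoU >= 0.5.
    print(box_iou((0, 0, 2, 2), (1, 1, 3, 3)))
    # Precision and recall taken from the MSA-CenterNet row [3].
    print(f1_score(0.892, 0.906))
```

For the MSA-CenterNet row, the reported precision of 89.2% and recall of 90.6% correspond to an F1-score of about 0.899; this value is derived here for illustration only and is not reported in the table.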

| Challenges | Segmentation Technique | AI Algorithm | Advantages | Ref. |

|---|---|---|---|---|

| Data management and processing of large 3D volumes | Semantic segmentation with LiDAR | CNNs | High precision in capturing 3D point clouds, even in motion | [48] |

| Limitation to frontal images | Semantic segmentation and super-resolution | CN4SRSS, DeepLab v3+ | High accuracy in segmentation of low-resolution images | [54] |

| Complexity in iterative processing | Semantic segmentation of point clouds | Self-Sorting 3D Convolutional Neural Network (SS-3DCNN) | High efficiency in label assignment for point clouds | [75] |

| High computational cost and processing time | Mobile LiDAR, Semantic Segmentation | CNNs | Precision in segmentation of infrastructure components | [116] |

| Requires high computational power | Point cloud segmentation | Bayesian deep learning | Improved handling of uncertainty in complex scenarios | [117] |

| Complex processing in densely populated urban areas | Road marking segmentation | Feature pyramid networks | Improves accuracy in detecting objects such as road signs | [118] |

| Quantitative Challenge | Description | Ref. |

|---|---|---|

| Dependence on large datasets | The performance of DLMs relies on the availability of large and diverse datasets, which are not always accessible | [49] |

| Environmental condition limitations | Variability in lighting and weather conditions can affect the quality of data collected by UAVs, reducing detection accuracy | [116] |

| Lack of evaluation standards | The absence of standardized protocols for evaluating AI model performance hinders comparison between studies and affects replicability | [116] |

| Data processing capacity | AI algorithms used, such as YOLOv4, require robust computational processing to handle the large volumes of generated data | [117] |

| Real-time data processing | Multi-sensor systems present a significant challenge in requiring real-time data synchronization, which is costly and complex | [117] |

| High implementation costs | The integration of LiDAR sensors and RGB cameras significantly increases UAVs costs, limiting their application in real-world scenarios | [119] |

| Techniques | Advantages | Challenges | mAP (%) | Accuracy (%) | Ref. |

|---|---|---|---|---|---|

| Hybrid-YOLO (YOLOv5x + ResNet-18) | Better detection and low computational effort with small dataset and adaptable to embedded systems | Similarity between components and dataset limitations | 0.99262 | 0.99441 | [90] |

| CNNs; data augmentation; RMSprop optimization; L2 regularization | Automated classification of visual pollutants | Limitation due to dataset size | N/A | 85 | [98] |

| Mask R-CNN, Canny detector; Hough transform for sign and pole detection | Simple and scalable method for estimating depth using signs as reference | Errors due to reflection; unusual shapes and sign inclination | N/A | 100 | [99] |

| HOG + Color, SVM, SfM, RANSAC | Accurate 3D detection, automatic cleaning, efficient reconstruction | Sign variability, occlusions, noise | N/A | 90.15 | [104] |

| HOG + BW-ELM | Efficient; accurate | Memory limitation | N/A | 97.19 | [120] |

| Faster R-CNN with ZF, VGG_CNN_M_1024 and VGG16 networks; data augmentation; hyperparameter tuning | Automatic and accurate detection of multiple defects with less preprocessing and automatic feature extraction | Balancing accuracy and speed when handling images with multiple similar defects | 0.83 | N/A | [121] |

| CNN, GSV API, GradCAM, Oversampling, Data augmentation | Automation of seismic vulnerability assessment; reduction of costs and time | GSV limitations in rural areas; possible classification errors | N/A | 88.2 | [122] |

| YOLOv8 for aerial vehicle detection | Higher accuracy and speed; better detection of small vehicles and improved architecture | Difficulties in shadows, at high altitude, and in congestion; confusion between similar types | 79.7 | 80.3 | [115] |

| DFF-YOLOv3 | Improves detection of distant signs; maintains wide vision | Balancing accuracy and speed | 74.8 | N/A | [124] |

| Traffic sign segmentation using Azure Kinect | Low cost; no training required; processes high-resolution images; performs instance segmentation | Limited to speeds of 30–40 km/h; maximum distance of 16.2 m; low sensitivity to lateral signs | N/A | 82.73 | [125] |

| Techniques | Advantages | Challenges | mAP (%) | Accuracy (%) | Ref. |

|---|---|---|---|---|---|

| Siamese UNet; MAC; deep supervision attention; combined loss | Pixel-level semantic change detection; multi-scale capture; effective fusion; gradient vanishing mitigation | Limited annotations; extreme class imbalance, small-scale changes | N/A | 0.7306 | [21] |

| PC1+C3 detection; EAOP, ObP and Cholesky removal | Higher accuracy without NIR; effective for irregular shadows; good grass reconstruction | False positives on dark surfaces; incomplete reconstruction; roof issues; classifier variability | N/A | 97.54 | [49] |

| CN4SRSS with ARNet | Improves segmentation in LR images; reduces computational cost and focuses on important regions | Inference speed affected with large input data | N/A | 93.14 | [54] |

| Comprehensive SVI quality evaluation framework with selected metrics and multi-scale analysis | Applicable to commercial and crowdsourced SVI with holistic evaluation and open-source code | Difficulty in automatically measuring certain metrics and potential bias in manual review | N/A | N/A | [62] |

| Semantic segmentation with superpoint graphs; cross-labeling; transfer learning; geometric quality control | Reduction of manual training data; adaptation to different systems and scenes; refinement of results | Classification of abutments and scanning artifacts; limited ability to identify buildings | N/A | 87 | [116] |

| KPConv with Variational Inference (VI) and Monte Carlo (MC) Dropout | Improvement in uncertainty estimation and out-of-distribution example detection | Significant increase in execution time and slight decrease in segmentation accuracy | N/A | 77.52 | [117] |

| Attentive Capsule Feature Pyramid Network (ACapsFPN) | Multi-scale feature extraction and fusion; improved feature representation through attention | Severe occlusions and highly eroded road markings | N/A | 0.7366 | [118] |

| Hierarchical feature-based contour extraction | Better accuracy in complex backgrounds; less sensitive to annotation errors; modular and transferable | Loss of contextual information in patch division | 95.7 | 99.0 | [128] |

| Techniques | Advantages | Challenges | mAP (%) | Accuracy (%) | Ref. |

|---|---|---|---|---|---|

| 4DBatMap System (UAVs and USVs with LiDAR, cameras and echo sounders) | Complete coverage; integration of aerial and marine data; coastal change prediction | Processing and integration of multisensor data; weather conditions | N/A | 0.16–0.24 [m] GNSS RTK | [35] |

| Stepwise minimum spanning tree matching | Automatic registration of VLS and BLS point clouds; robust to differences in point density | Dependency on tree distribution; sensitivity to parameters | N/A | Rotation error ; Translation error m | [129] |

| CNN + BiLSTM for visual-inertial fusion | Does not require camera calibration; raw data processing; low computational cost | Generalization to real-world environments; real-time implementation | N/A | 0.167 | [130] |

| Land cover classification with random forest; fusion of UAVs and LiDAR DEMs | Rapid and targeted DEM updates; low cost; use of multiple data sources | Vertical alignment of DEMs; UAV flight limitations | N/A | 89–91% | [131] |

| Iterative adaptive segmentation with LiDAR and HSRI data fusion | Overcomes shadow occlusion; improves horizontal accuracy | Variability in building shapes; complexity of urban environments | N/A | Completeness: 94.1%; Correctness: 90.3%; Quality: 85.5% | [132] |
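The completeness, correctness, and quality values in the last row can be cross-checked against the definitions commonly used in building-extraction evaluation: completeness = TP/(TP+FN), correctness = TP/(TP+FP), and quality = TP/(TP+FP+FN). That [132] uses exactly these definitions is an assumption made here, and the function names below are hypothetical. Under that assumption, quality follows from the other two values without needing the raw counts.

```python
def extraction_metrics(tp, fp, fn):
    """Completeness, correctness, and quality from detection counts."""
    completeness = tp / (tp + fn)   # share of reference objects found
    correctness = tp / (tp + fp)    # share of detections that are right
    quality = tp / (tp + fp + fn)   # penalizes both misses and false alarms
    return completeness, correctness, quality


def quality_from_rates(completeness, correctness):
    """Quality derived from completeness and correctness (as fractions).

    Follows from 1/completeness + 1/correctness - 1 = (TP + FP + FN) / TP.
    """
    return 1.0 / (1.0 / completeness + 1.0 / correctness - 1.0)


if __name__ == "__main__":
    # Values reported for [132]: completeness 94.1%, correctness 90.3%.
    print(round(quality_from_rates(0.941, 0.903), 3))
```

With the reported completeness and correctness, the derived quality is about 0.855, which agrees with the 85.5% listed in the table.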

| Techniques | Advantages | Challenges | mAP (%) | Accuracy (%) | Ref. |

|---|---|---|---|---|---|

| Motion constraints for BA in uncalibrated multi-camera systems | Works without overlapping FoVs or precise synchronization | Sensitive to dense traffic and complex long trajectories | N/A | ≤86.12% improvement in MAE | [11] |

| Automated inspection with camera, LiDAR, and deep learning | Flexible data acquisition; automatic defect detection; 3D reconstruction; BIM integration | Sensor calibration; data alignment; false positives | N/A | 90.8–93.2% | [133] |

| Image-based pole extraction for LiDAR localization | Rapid pole extraction; works in various environments; generates pseudo-labels for learning | Balance between accuracy and speed | N/A | 76.5% (geometric) 67.5% (learning) | [134] |

| Evaluation of feature point detection methods in vSLAM systems | Comprehensive analysis on complex datasets; comparison of traditional and neural network-based methods | Performance variability depending on environment; trade-off between accuracy and real-time operation | N/A | Varies by method and scenario | [136] |

| Semantic localization with enhanced satellite map and particle filter | Combines satellite and ground data; semantic and metric descriptors; robust in absence of GNSS | Requires prior area mapping; computational cost | N/A | 5.24 [m] RMSE | [138] |

| LiDAR for perception and positioning | High 3D precision; works in low light | High cost; sensitive to adverse weather | N/A | 90.3% (segmentation) | [140] |

| UAVs with LiDAR and variable spraying system | Reduces spraying volume; improves application efficiency; saves pesticides | Real-time processing of large point clouds | N/A | 76.04% (average coverage) | [141] |

| Surfel-based Mapping (SuMa) | Dense and real-time mapping with 3D laser; detecting loop closures and optimizing pose graph for consistent maps | Management of environments with few references, distinguishing between static and dynamic objects in ambiguous situations | N/A | 1.4% (average translational error) | [142] |

| LiDAR odometry; NDT; ISAM2; IMU preintegration | Real-time estimation; robust without GNSS; updatable local map | Young forest environment; limited UAV resources | N/A | APE: 0.2471–0.3123; RPE: 0.1521–0.2646 | [143] |

| Multi-camera fisheye system for 3D perception in autonomous vehicles | Full FOV coverage with few cameras; low cost; automatic calibration; dense and sparse mapping; visual localization; obstacle detection | Fisheye lens distortion; frequent calibration required; fusion of data from multiple cameras | N/A | ∼7 cm (mapping) cm (obstacles) | [144] |

| Online detection of bridge columns using UAVs | Robust recognition of structural components without prior 3D model | Computational optimization for real-time processing | N/A | 96.0% (structural components) | [145] |

| Deep learning-based object detection | Improved accuracy in small object detection | High computational complexity | 91.7% | N/A | [146] |

| Techniques | Advantages | Challenges | mAP (%) | Accuracy (%) | Ref. |

|---|---|---|---|---|---|

| MSA-CenterNet with ResNet50 multiscale fusion and attention | Improved detection of small objects and scale variation | Computational complexity | 86.7 | 89.2 | [3] |

| MOD-YOLO | Efficient multispectral fusion | Speed–memory–accuracy balance | 46.0 | 86.3 (mAP50) | [14] |

| Faster R-CNN with InceptionResnetV2 | Detection and georeferencing of traffic signs in UAVs images | Lack of labeled UAV images and class imbalance | 56.30 | N/A | [17] |

| CSP in YOLOv4 neck + SiLU + New detection head + Simplified BiFPN + Coordinated attention | Reduces parameters; improves accuracy; extracts more location information; focuses on spatial relationships | Slight speed reduction | 52.76 (VisDrone) 96.98 (S2TLD) | 62.71 (VisDrone) 93.35 (S2TLD) | [18] |

| CeDiRNet (center direction regression) | Support from surrounding pixels; domain-agnostic locator; point annotation | Occlusions between objects; very dense scenes | N/A | 98.74 (Acacia-06) | [93] |

| Modified MaskRCNN with 9 convolutional layers, 4 max-pooling, 1 detection | Real-time change detection; zoom for greater detail | Extended training time | N/A | 94.3 (F1-score) | [147] |

| UAVs + vehicle trajectories | High-quality data; simultaneous capture | Processing large data volume | N/A | MAPE < 6 | [148] |

| ADAS-GPM | Improves small object detection; dynamic label assignment; Gaussian similarity metric | IoU sensitivity for small objects; sample imbalance | 27.1 | AP50 58.6 | [149] |

| Techniques | Advantages | Challenges | mAP (%) | Accuracy (%) | Ref. |

|---|---|---|---|---|---|

| YOLOv5l with rule-based post-processing | Multiple defect detection; pavement condition index; low cost | Limitations with small defects | 59 | 61 | [150] |

| UAS LiDAR flight planning | Point density estimation; LiDAR sensor comparison | Weather factors not considered | N/A | 88.9–92.4 | [152] |

| UAS LiDAR + RGB for levee damage detection | High resolution; low cost; frequent acquisition | Variable GRVI threshold; manual verification required | N/A | 95–99 (GRVI) | [153] |

| Techniques | Advantages | Challenges | mAP (%) | Accuracy (%) | Ref. |

|---|---|---|---|---|---|

| Digital twin of roads using map data | Uses existing data without field survey; follows road engineering representation; detects road components; eliminates defects from low-quality data | Implemented only in flat areas; does not model side ditches | N/A | 6.7 [cm] | [73] |

| CRG and VRG | Automatic surface extraction; efficient processing of large datasets | Sensitive to input parameters; requires adjustment for complex bridges | N/A | 0.932–0.998 | [74] |

| ThickSeg | Preserves 3D geometry; efficient; versatile | Loss of details in projection | N/A | 53.4 [%] mIoU | [75] |

| Semantic segmentation of point cloud data using raw laser scanner measurements and deep neural networks | Works with non-georeferenced data; avoids trajectory error issues | Real-time classification; misclassification of branches as trunks; less spatial context than point cloud-based methods | N/A | 80.1 [%] mIoU | [76] |

| PointNet++ | Captures local features at multiple scales | Sensitive to the number of input points | N/A | 86.75 [%] | [103] |

| PCIS (point cloud classification based on image-based instance segmentation) | Uses 2D images to classify 3D point cloud | Occlusions and multiclass segmentation | 48.41 | 82.8 [%] | [126] |

| UAVs photogrammetry + AI for crack detection + digital twin augmented by damage + VR | Remote inspection; automatic detection; interactive 3D visualization | Image quality; environmental conditions; data size | N/A | 0.391 [cm] | [154] |

| Progressive homography with SIFT and RANSAC + Deep-SORT + Kalman + ICP | Does not require GPS; works with crowdsourced videos; projects countable and massive objects | Error accumulation over time; requires initial reference points | 74.48 | 32.7–36.9 [ft] | [155] |
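Two rows above report mean intersection over union (mIoU) for point cloud segmentation. A minimal pure-Python sketch of the usual definition is given below: per-class IoU is computed from per-point labels and then averaged over the classes that occur. The function name `mean_iou`, the flat label lists, and the choice to skip classes absent from both prediction and reference are assumptions for illustration; individual studies may handle absent classes differently.

```python
def mean_iou(y_true, y_pred, num_classes):
    """Mean IoU over classes for two equal-length label sequences."""
    ious = []
    for c in range(num_classes):
        # Points labelled c in both sequences (intersection)
        inter = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        # Points labelled c in at least one sequence (union)
        union = sum(1 for t, p in zip(y_true, y_pred) if t == c or p == c)
        if union > 0:  # skip classes that never occur
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


if __name__ == "__main__":
    reference = [0, 0, 1, 1, 2, 2]   # toy per-point ground truth
    predicted = [0, 1, 1, 1, 2, 0]   # toy per-point prediction
    print(mean_iou(reference, predicted, num_classes=3))
```

In the toy example the per-class IoUs are 1/3, 2/3, and 1/2, so the mean is 0.5. Because every class contributes equally, mIoU is less dominated by large classes such as ground or vegetation than overall accuracy is.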

| Techniques | Advantages | Challenges | mAP (%) | Accuracy (%) | Ref. |

|---|---|---|---|---|---|

| Hybrid method combining CHM-based segmentation and point-based clustering with multiscale adaptive LM filter and supervoxel-weighted fuzzy clustering | Superior overall performance compared to existing methods, enhanced computational efficiency, and accurate individual tree segmentation | Parameter adjustment for diverse forest types and tree densities | N/A | 88.27 | [158] |

| Techniques | Advantages | Challenges | mAP (%) | Accuracy (%) | Ref. |

|---|---|---|---|---|---|

| Radiometric analysis of VLP-32C laser scanner, road marking degradation model based on 3D point cloud intensity, generation of degradation maps | Reliable estimation of retroreflectivity without dedicated equipment, detection of highly degraded areas, intuitive visualization of degradation | Robust segmentation of highly degraded road markings, noise in individual measurements | N/A | N/A | [47] |

| UAVs–LiDAR and mobile photogrammetric system (MPS) for road geometric parameter extraction | Acquisition of precise longitudinal and cross-sectional profiles, efficient extraction of road geometric parameters, MPS as a cost-effective alternative to LiDAR systems | Non-ground point filtering, temporal synchronization of multiple sensors, processing of large-scale datasets | N/A | N/A | [109] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Satama-Bermeo, G.; Lopez-Guede, J.M.; Rahebi, J.; Teso-Fz-Betoño, D.; Boyano, A.; Akizu-Gardoki, O. PRISMA Review: Drones and AI in Inventory Creation of Signage. Drones 2025, 9, 221. https://doi.org/10.3390/drones9030221
