Combined Web-Based Visualisation of 3D Point Clouds and Acoustic Descriptors: An Interdisciplinary Challenge

Abstract

1. Introduction

- From the operational point of view, it questions the integration of visualisation practices and promotes a vision of sensemaking in large 3D datasets through the use of visualisation paradigms that complement scientific visualisation approaches per se.

- From an interdisciplinary point of view, it questions the way 3D environments can be adapted to the analysis of acoustic datasets, where parameters such as time and frequency play a central role.

- From the societal point of view, it reports on deliberately frugal approaches: methods, protocols, and tools are adapted to small-scale buildings that major funding sources tend to overlook, which encourages us to reflect on the utility and added value of our results for local communities.

- Finally, from a methodological point of view, it places the notion of comparability and reproducibility at the heart of the research.

2. Background and Strategy

- Scientific visualisation, where what is primarily seen relates to and represents visually a physical “thing” [17].

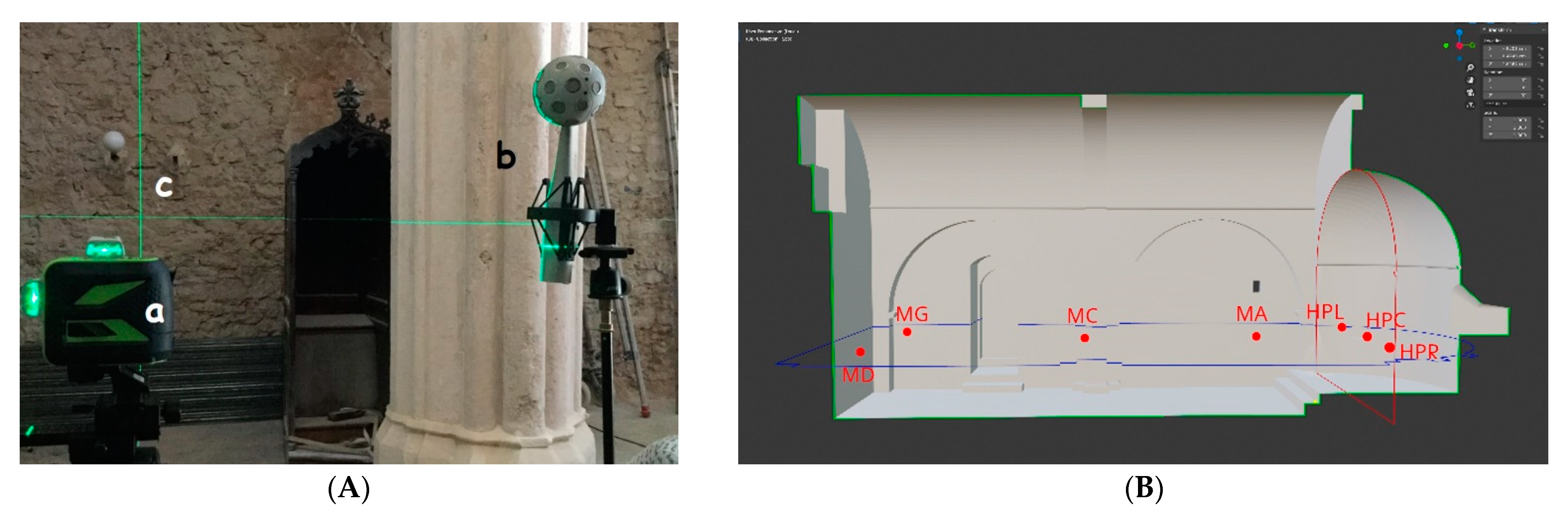

- Two self-levelling laser levels are used to project laser beams onto the building surfaces (Figure 1a).

- A systematic spatial grid comprising three speakers and four microphones (Figure 1b) is positioned with respect to the primary function of the chapels (celebrant vs. listener opposition).

- Intersections of the laser beams with the surfaces and the positions of the eight grid components are registered using a laser rangefinder that outputs point-to-point distances.

- The acoustic survey consists of recording, at each of the four microphone positions, a sine sweep emitted from each of the speakers (repeated several times to detect and eliminate outliers).

- The photogrammetric acquisition is carried out with a 360° panoramic camera (pyramidal sequence of shots at each position).
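The protocol above registers positions from point-to-point distances only. As an illustration of how two such distances can fix a position in plan, here is a minimal 2D trilateration sketch. It assumes a hypothetical local frame in which reference point A sits at the origin and reference point B lies on the x-axis at a measured distance `d_ab`; the function name and the frame are illustrative and do not describe the authors' registration procedure.

```python
import math


def trilaterate_2d(d_ab, r_a, r_b):
    """Locate a point P from its distances to A (0, 0) and B (d_ab, 0).

    Hypothetical helper: d_ab is the measured A-B distance, r_a and r_b
    the measured distances A-P and B-P, all in the same unit.
    """
    # Intersecting the two circles gives x directly.
    x = (r_a ** 2 - r_b ** 2 + d_ab ** 2) / (2 * d_ab)
    y_sq = r_a ** 2 - x ** 2
    if y_sq < -1e-9:
        raise ValueError("inconsistent distances: the circles do not intersect")
    # Two mirror solutions exist; this sketch returns the one with y >= 0.
    return x, math.sqrt(max(y_sq, 0.0))
```

Because two circles meet in two mirror-image points, the side of the A-B baseline on which P lies has to be fixed by the operator; the sketch always returns the solution with y ≥ 0.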

3. Components of the Prototype

3.1. Visualisation of the Survey’s Raw Outputs

3.2. Measurements and Naming (DXF Points)
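DXF is a plain-text format made of alternating group-code and value lines, so named measurement points can be exported with very little code. The sketch below writes each point as a DXF `POINT` entity plus a `TEXT` entity carrying its name. This is an assumption about how naming could be handled, not a description of the authors' export; the function name, the layer name, and the point names are hypothetical.

```python
def points_to_dxf(named_points, layer="SURVEY", text_height=0.05):
    """Return a minimal ASCII DXF string (ENTITIES section only).

    named_points: iterable of (name, (x, y, z)) pairs, e.g. ("M1", (1.2, 3.4, 1.5)).
    """
    lines = ["0", "SECTION", "2", "ENTITIES"]
    for name, (x, y, z) in named_points:
        # POINT entity: group 8 = layer, 10/20/30 = X/Y/Z coordinates.
        lines += ["0", "POINT", "8", layer,
                  "10", str(x), "20", str(y), "30", str(z)]
        # TEXT entity at the same location: 40 = text height, 1 = text string.
        lines += ["0", "TEXT", "8", layer,
                  "10", str(x), "20", str(y), "30", str(z),
                  "40", str(text_height), "1", name]
    lines += ["0", "ENDSEC", "0", "EOF"]
    return "\n".join(lines) + "\n"
```

For example, `points_to_dxf([("M1", (1.2, 3.45, 1.5)), ("S1", (0.8, 7.1, 1.7))])` can be written straight to a `.dxf` file. An ENTITIES-only file follows the old R12 convention that many CAD readers accept; stricter readers may also expect a HEADER section.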

3.3. Visualisation of the Laser Beams

3.4. Volume Calculation

3.4.1. Voxel-Based Volume Estimation
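One minimal way to obtain a voxel-based estimate is sketched below, assuming an interior scan in which every plan column of the room contains both a floor point and a ceiling or vault point. Points are binned into cubic voxels of side `voxel_size`, and each vertical column is filled between its lowest and highest occupied voxel. The function name and this column-filling rule are illustrative assumptions, not the authors' procedure.

```python
from math import floor


def voxel_volume(points, voxel_size):
    """Estimate an interior volume from a point cloud by column-filled voxelisation.

    Assumption: each occupied (x, y) column contains both a floor point and a
    ceiling/vault point, so every voxel between them is counted as interior.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    columns = {}  # (i, j) column index -> (lowest k, highest k) occupied
    for x, y, z in points:
        i, j, k = (floor(c / voxel_size) for c in (x, y, z))
        low, high = columns.get((i, j), (k, k))
        columns[(i, j)] = (min(low, k), max(high, k))
    filled = sum(high - low + 1 for low, high in columns.values())
    return filled * voxel_size ** 3
```

The result depends on the voxel size: coarse voxels overestimate the volume by up to roughly one voxel per column, because the boundary voxels are counted whole, while very fine voxels leave unsampled columns empty where the scan is sparse and so underestimate it.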

3.4.2. Convex Hull Method Volume Estimation
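A convex hull gives an upper bound on the volume enclosed by a point cloud, since it bridges every concavity. The sketch below is a brute-force, pure-Python illustration (O(n⁴), so usable only on a few dozen points). It finds every supporting plane through three points, treats the points lying on that plane as a facet, and sums the pyramids from an interior point to each facet. It is an illustrative stand-in with hypothetical names, not the authors' implementation.

```python
from itertools import combinations


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _polygon_area_2d(pts):
    """Area of the 2D convex hull of pts (Andrew's monotone chain + shoelace)."""
    pts = sorted(set(pts))
    if len(pts) < 3:
        return 0.0

    def turn(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and turn(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and turn(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    hull = lower[:-1] + upper[:-1]
    return abs(sum(hull[i][0] * hull[i - 1][1] - hull[i - 1][0] * hull[i][1]
                   for i in range(len(hull)))) / 2.0


def convex_hull_volume(points, eps=1e-9):
    """Volume of the convex hull of a small 3D point set (brute force)."""
    pts = [tuple(float(c) for c in p) for p in points]
    n = len(pts)
    if n < 4:
        return 0.0
    centre = tuple(sum(p[k] for p in pts) / n for k in range(3))  # inside the hull
    seen, volume = set(), 0.0
    for a, b, c in combinations(range(n), 3):
        normal = _cross(_sub(pts[b], pts[a]), _sub(pts[c], pts[a]))
        length = _dot(normal, normal) ** 0.5
        if length < eps:
            continue  # collinear (or duplicate) triple: no plane
        normal = tuple(x / length for x in normal)
        offset = _dot(normal, pts[a])
        dists = [_dot(normal, p) - offset for p in pts]
        if max(dists) > eps and min(dists) < -eps:
            continue  # plane cuts through the cloud: not a supporting plane
        face = frozenset(i for i, d in enumerate(dists) if abs(d) <= eps)
        if face in seen:
            continue  # facet already counted from another triple
        seen.add(face)
        # Orthonormal basis (u, v) of the facet plane, then project to 2D.
        u = _sub(pts[b], pts[a])
        u_len = _dot(u, u) ** 0.5
        u = tuple(x / u_len for x in u)
        v = _cross(normal, u)
        flat = [(_dot(_sub(pts[i], pts[a]), u), _dot(_sub(pts[i], pts[a]), v))
                for i in face]
        # Pyramid from the interior centre over the facet: area * height / 3.
        height = abs(_dot(normal, centre) - offset)
        volume += _polygon_area_2d(flat) * height / 3.0
    return volume
```

For real point clouds a Qhull binding is the practical choice; SciPy, for instance, exposes the hull volume as `scipy.spatial.ConvexHull(points).volume`.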

3.5. Acoustic Descriptors

- Energy descriptors: Strength Factor, Lateral Strength, Root Mean Square (RMS);

- Reverberation descriptors: Reverberation Time, Early Decay Time, Central Time, Bass Ratio, Treble Ratio, Frequency Barycenter, Schroeder Frequency;

- Intelligibility descriptors: Direct-to-Reverberant Ratio, Clarity (C50 and C80), Speech Transmission Index;

- Spatial descriptors: Interaural Cross-Correlation Coefficient, Lateral Fraction.

3.5.1. C50 Computation
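Clarity C50 is conventionally defined (ISO 3382-1) as C50 = 10 log10(E_early / E_late), where E_early is the impulse-response energy in the first 50 ms after the arrival of the direct sound and E_late is the energy arriving afterwards; C80 uses an 80 ms limit. The sketch below applies that definition to one channel of a sampled impulse response. The onset rule (first sample within 20 dB of the peak) is a common convention assumed here, no octave-band filtering is applied, and the function names are hypothetical rather than taken from the authors' processing chain.

```python
import math


def onset_index(ir, threshold_db=-20.0):
    """Index of the first sample whose magnitude reaches threshold_db relative to the peak."""
    peak = max(abs(x) for x in ir)
    limit = peak * 10 ** (threshold_db / 20.0)
    return next(i for i, x in enumerate(ir) if abs(x) >= limit)


def clarity_db(ir, fs, limit_ms=50.0):
    """Clarity index in dB: 10*log10(early energy / late energy).

    limit_ms=50 gives C50 and limit_ms=80 gives C80. Time zero is the
    detected arrival of the direct sound; fs is the sample rate in Hz.
    """
    start = onset_index(ir)
    split = start + int(round(limit_ms * 1e-3 * fs))
    early = sum(x * x for x in ir[start:split])
    late = sum(x * x for x in ir[split:])
    if late == 0.0:
        return math.inf  # no late energy: clarity is unbounded
    return 10.0 * math.log10(early / late)
```

For a multichannel recording, `[clarity_db(ch, fs) for ch in channels]` yields one value per channel, where `channels` is a hypothetical list of per-capsule or per-beam impulse responses.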

3.5.2. RMS Computation through PWD

- 6 time frames of length 5 ms from t = 0 ms to t = 30 ms;

- 6 time frames of length 10 ms from t = 30 ms to t = 90 ms;

- 6 time frames of length 20 ms from t = 90 ms to t = 210 ms;

- 1 time frame from t = 210 ms to the end of the impulse response (the length of the time frame depends on the length of the impulse response).

- The time t = 0 is defined as the time of arrival of the direct sound.
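The frame layout listed above can be generated and applied as follows. This is a minimal sketch, assuming the signal to analyse (for instance one output of the PWD) is already available as a list of samples at rate `fs`, and that the onset index of the direct sound has been determined beforehand; the decomposition itself is not shown, and the function names are hypothetical.

```python
import math


def frame_edges_ms():
    """Frame boundaries in ms after the direct sound: 6 x 5 ms, 6 x 10 ms, 6 x 20 ms."""
    edges = [0.0]
    for count, length_ms in ((6, 5.0), (6, 10.0), (6, 20.0)):
        for _ in range(count):
            edges.append(edges[-1] + length_ms)
    return edges  # 0, 5, ..., 30, 40, ..., 90, 110, ..., 210


def frame_rms(signal, fs, onset):
    """RMS of each of the 19 frames; the last frame runs from 210 ms to the end."""
    starts = [onset + int(round(ms * 1e-3 * fs)) for ms in frame_edges_ms()]
    bounds = list(zip(starts[:-1], starts[1:])) + [(starts[-1], len(signal))]
    values = []
    for lo, hi in bounds:
        segment = signal[lo:hi]
        # An empty frame (signal shorter than the layout) is reported as 0.0.
        values.append(math.sqrt(sum(x * x for x in segment) / len(segment))
                      if segment else 0.0)
    return values
```

The progressively longer frames give fine time resolution around the direct sound and early reflections while still summarising the late tail in a single value.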

3.6. Visualisation of Acoustic Descriptors

3.6.1. Visualisation of the 32-Channel C50 Clarity Descriptor

3.6.2. Visualisation of the PWD Descriptor

4. Results and Interpretation

4.1. On Visual Metaphors

4.2. On the Need to Complement the 3D Visualisations

4.3. The C50 Clarity Descriptor Visualisations: Use Cases and Interpretation

4.4. The PWD Descriptor Visualisations: Use Cases and Interpretation

4.5. Limitations and Future Works

- Only two out of the dozen acoustic descriptors resulting from the acquisition campaigns have been visualised: there is clearly room for future work here.

- Existing solutions prove relatively efficient at identifying patterns within a given interior, but comparing behaviours across interiors is not sufficiently supported. Two visual solutions have been implemented that allow a global reading of collections, contrasts, and resemblances. Yet more can be done to enhance comparative analyses, and this is a key point for our research programme. The work conducted so far has helped us pinpoint families of patterns (intensity, geometric distribution, variation, and consistency patterns) that we intend to re-examine using other visual solutions.

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Overview of the Components Combined in the 3D Integrator

Appendix B. Extraction of Dimensional Features

References

- Schütz, M. Potree: Rendering Large Point Clouds in Web Browsers. Ph.D. Thesis, Vienna University of Technology, Vienna, Austria, 2016.

- Blaise, J.Y.; Dudek, I.; Pamart, A.; Bergerot, L.; Vidal, A.; Fargeot, S.; Aramaki, M.; Ystad, S.; Kronland-Martinet, R. Space & sound characterisation of small-scale architectural heritage: An interdisciplinary, lightweight workflow. In Proceedings of the International Conference on Metrology for Archaeology and Cultural Heritage, Virtual Conference, 22–24 October 2020; Available online: https://halshs.archives-ouvertes.fr/halshs-02981084 (accessed on 27 November 2022).

- Henwood, R.; Pell, S.; Straub, K. Combining Acoustic Scanner Data with In-Pit Mapping Data to Aid in Determining Endwall Stability: A Case Study from an Operating Coal Mine in Australia. McElroy Bryan Geological Services. Available online: https://mbgs.com.au/wp-content/uploads/2022/03/Combining-acoustic-scanner-data-with-in-pit-mapping-data-to-aid-in-determining-endwall-stability-a-case-study-from-an-operating-coal-mine-in-Australia.pdf (accessed on 27 November 2022).

- Chemisky, B.; Nocerino, E.; Menna, F.; Nawaf, M.M.; Drap, P. A portable opto-acoustic survey solution for mapping of underwater targets. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2021, XLIII-B2-2021, 651–658.

- Ceravolo, R.; Pistone, G.; Fragonara, L.Z.; Massetto, S.; Abbiati, G. Vibration-Based Monitoring and Diagnosis of Cultural Heritage: A Methodological Discussion in Three Examples. Int. J. Archit. Herit. 2016, 10, 375–395.

- Pallarés, F.J.; Betti, M.; Bartoli, G.; Pallarés, L. Structural Health Monitoring (SHM) and Nondestructive Testing (NDT) of Slender Masonry Structures: A Practical Review. Constr. Build. Mater. 2021, 297, 123768.

- Aparicio Secanellas, S.; Liébana Gallego, J.C.; Anaya Catalán, G.; Martín Navarro, R.; Ortega Heras, J.; García Izquierdo, M.Á.; González Hernández, M.; Anaya Velayos, J.J. An Ultrasonic Tomography System for the Inspection of Columns in Architectural Heritage. Sensors 2022, 22, 6646.

- Fais, S.; Casula, G.; Cuccuru, F.; Ligas, P.; Bianchi, M.G. An Innovative Methodology for the Non-Destructive Diagnosis of Architectural Elements of Ancient Historical Buildings. Sci. Rep. 2018, 8, 4334.

- Dokmanić, I.; Parhizkar, R.; Walther, A.; Lu, Y.M.; Vetterli, M. Acoustic Echoes Reveal Room Shape. Proc. Natl. Acad. Sci. USA 2013, 110, 12186–12191.

- Bertocci, S.; Lumini, A.; Cioli, F. Digital Survey and 3D Modeling to Support the Auralization and Virtualization Processes of Three European Theater Halls: Berlin Konzerthaus, Lviv Opera House, and Teatro Del Maggio Musicale in Florence. A Methodological Framework. In Proceedings of the 2nd Symposium: The Acoustics of Ancient Theatres, Verona, Italy, 6–8 July 2022; Available online: https://flore.unifi.it/retrieve/e398c382-5f94-179a-e053-3705fe0a4cff/sat_170.pdf (accessed on 27 November 2022).

- Lavagna, L.; Shtrepi, L.; Farina, A.; Bevilacqua, A.; Astolfi, A. Virtual Reality inside the Greek-Roman Theatre of Tyndaris: Comparison between Existing Conditions and Original Architectural Features. In Proceedings of the 2nd Symposium: The Acoustics of Ancient Theatres, Verona, Italy, 6–8 July 2022; p. 3.

- Thivet, M.; Verriez, Q.; Vurpillot, D. Aspectus: A flexible collaborative tool for 3D data exploitation in the field of archaeology and cultural heritage. In Situ 2019, 39.

- Barazzetti, L.; Previtali, M.; Roncoroni, F. Can we use low-cost 360 degree cameras to create accurate 3d models? In Proceedings of the ISPRS TC II Mid-term Symposium “Towards Photogrammetry 2020”, Riva del Garda, Italy, 4–7 June 2018; pp. 69–75.

- MH Acoustics. em32 Eigenmike Microphone Array Release Notes (v17.0): Notes for Setting Up and Using the MH Acoustics em32 Eigenmike Microphone Array; MH Acoustics: Summit, NJ, USA, 2013.

- Binelli, M.; Venturi, A.; Amendola, A.; Farina, A. Experimental Analysis of Spatial Properties of the Sound Field Inside a Car Employing a Spherical Microphone Array. In Proceedings of the 130th Audio Engineering Society Convention, London, UK, 13–16 May 2011.

- Farina, A.; Amendola, A.; Capra, A.; Varani, C. Spatial analysis of room impulse responses captured with a 32-capsule microphone array. In Proceedings of the 130th Audio Engineering Society Convention, London, UK, 13–16 May 2011.

- Spence, R. Information Visualization; Addison Wesley: Harlow, UK, 2001.

- Kienreich, W. Information and knowledge visualisation: An oblique view. MIA J. 2006.

- Aigner, W.; Miksch, S.; Schumann, H.; Tominski, C. Visualization of Time-Oriented Data; Human-Computer Interaction Series; Springer: London, UK, 2011.

- Blaise, J.Y.; Dudek, I.; Pamart, A.; Bergerot, L.; Vidal, A.; Fargeot, S.; Aramaki, M.; Ystad, S.; Kronland-Martinet, R. Acquisition & integration of spatial and acoustic features: A workflow tailored to small-scale heritage architecture. Acta IMEKO 2022, 11, 1–14. Available online: http://doi.org/10.21014/acta_imeko.v11i2.1082 (accessed on 27 November 2022).

- Cerdá, S.; Giménez, A.; Romero, J.; Cibrián, R.; Miralles, J. Room acoustical parameters: A factor analysis approach. Appl. Acoust. 2009, 70, 97–109.

- Daniel, J. Représentation de Champs Acoustiques, Application à la Transmission et à la Reproduction de scènes Sonores Complexes dans un Contexte Multimédia. Ph.D. Thesis, University of Paris VI, Paris, France, 2000.

- Alon, D.L.; Rafaely, B. Spatial decomposition by spherical array processing. In Parametric Time-Frequency Domain Spatial Audio; Wiley Online Library: New York, NY, USA, 2017; pp. 25–48.

- Politis, A. Microphone Array Processing for Parametric Spatial Audio Techniques. Ph.D. Thesis, Department of Signal Processing and Acoustics, Aalto University, Espoo, Finland, 2016.

- Sabol, V. (TU Graz, Graz, Austria) Visual Analysis of Relatedness in Dynamically Changing Repositories. Coupling Visualization with Machine Processing for Gaining Insights into Massive Data. 2012. Available online: http://www.gamsau.map.cnrs.fr/modys/abstracts/Sabol.pdf (accessed on 27 November 2022).

- Tufte, E.R. Visual Explanations; Graphics Press: Cheshire, CT, USA, 2001.

- Adavanne, S.; Politis, A.; Virtanen, T. A Multi-room Reverberant Dataset for Sound Event Localization and Detection. In Proceedings of the Detection and Classification of Acoustic Scenes and Events 2019 Workshop (DCASE2019), New York University, New York, NY, USA, 25–26 October 2019; pp. 10–14.

- Fekete, J.D.; Van Wijk, J.; Stasko, J.T.; North, C. The Value of Information Visualization. In Information Visualization: Human-Centered Issues and Perspectives; Lecture Notes in Computer Science; Springer: Berlin, Germany, 2008; pp. 1–18. ISBN 978-3-540-70955-8.

- Nishimura, K.; Hirose, M. The Study of Past Working History Visualization for Supporting Trial and Error Approach in Data Mining. In Human Interface and the Management of Information. Methods, Techniques and Tools in Information Design. Human Interface 2007; Lecture Notes in Computer Science; Smith, M.J., Salvendy, G., Eds.; Springer: Berlin/Heidelberg, Germany, 2007; Volume 4557.

- Grilli, E.; Menna, F.; Remondino, F. A review of point clouds segmentation and classification algorithms. ISPRS Arch. 2017, XLII-2/W3, 339–344.

- Kearney, G.; Daffern, H.; Cairns, P.; Hunt, A.; Lee, B.; Cooper, J.; Tsagkarakis, P.; Rudzki, T.; Johnston, D. Measuring the Acoustical Properties of the BBC Maida Vale Recording Studios for Virtual Reality. Acoustics 2022, 4, 783–799.

- Accès Cartographique au Corpus d’étude du Projet SESAMES. 15 Chapelles Rurales en Région PACA. Schémas de Plan et de Section. Available online: https://halshs.archives-ouvertes.fr/halshs-03070251 (accessed on 27 November 2022).

- Grille D’analyse Dimensionnelle Systématique—Corpus Chapelles Rurales. Available online: http://anr-sesames.map.cnrs.fr/docs/Sesames_dimensionsGrid.pdf (accessed on 27 November 2022).

- Cohen, M.A. Conclusion: Ten Principles for the Study of Proportional Systems in the History of Architecture. Archit. Hist. 2014, 2, 7.

- Blaise, J.Y.; Dudek, I. Identifying and Visualizing Universal Features for Architectural Mouldings. IJCISIM Int. J. Comput. Inf. Syst. Ind. Manag. Appl. 2012, 4, 130–143.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bergerot, L.; Blaise, J.-Y.; Dudek, I.; Pamart, A.; Aramaki, M.; Fargeot, S.; Kronland-Martinet, R.; Vidal, A.; Ystad, S. Combined Web-Based Visualisation of 3D Point Clouds and Acoustic Descriptors: An Interdisciplinary Challenge. Heritage 2022, 5, 3819-3845. https://doi.org/10.3390/heritage5040197
