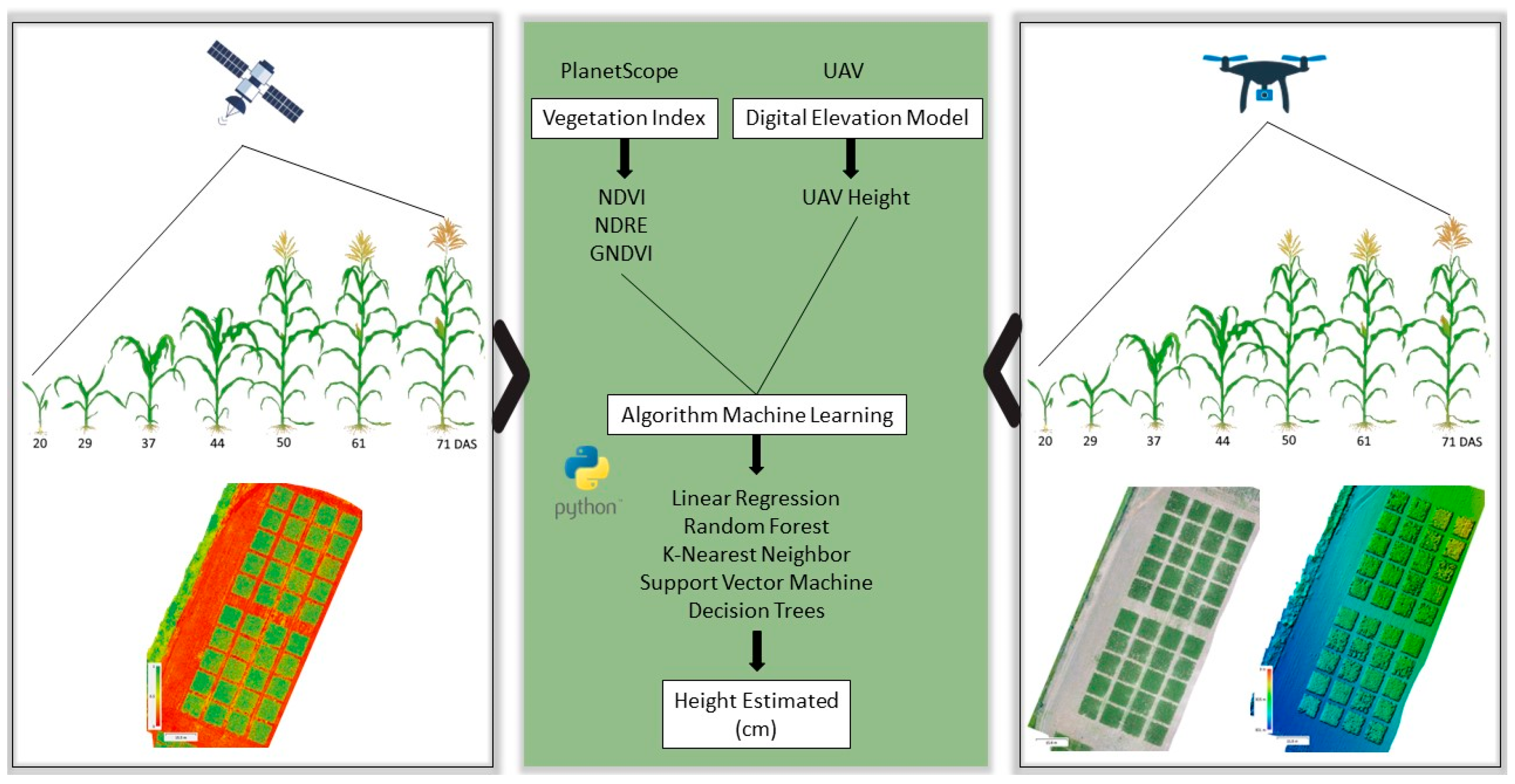

Integrating Satellite and UAV Technologies for Maize Plant Height Estimation Using Advanced Machine Learning

Abstract

1. Introduction

2. Materials and Methods

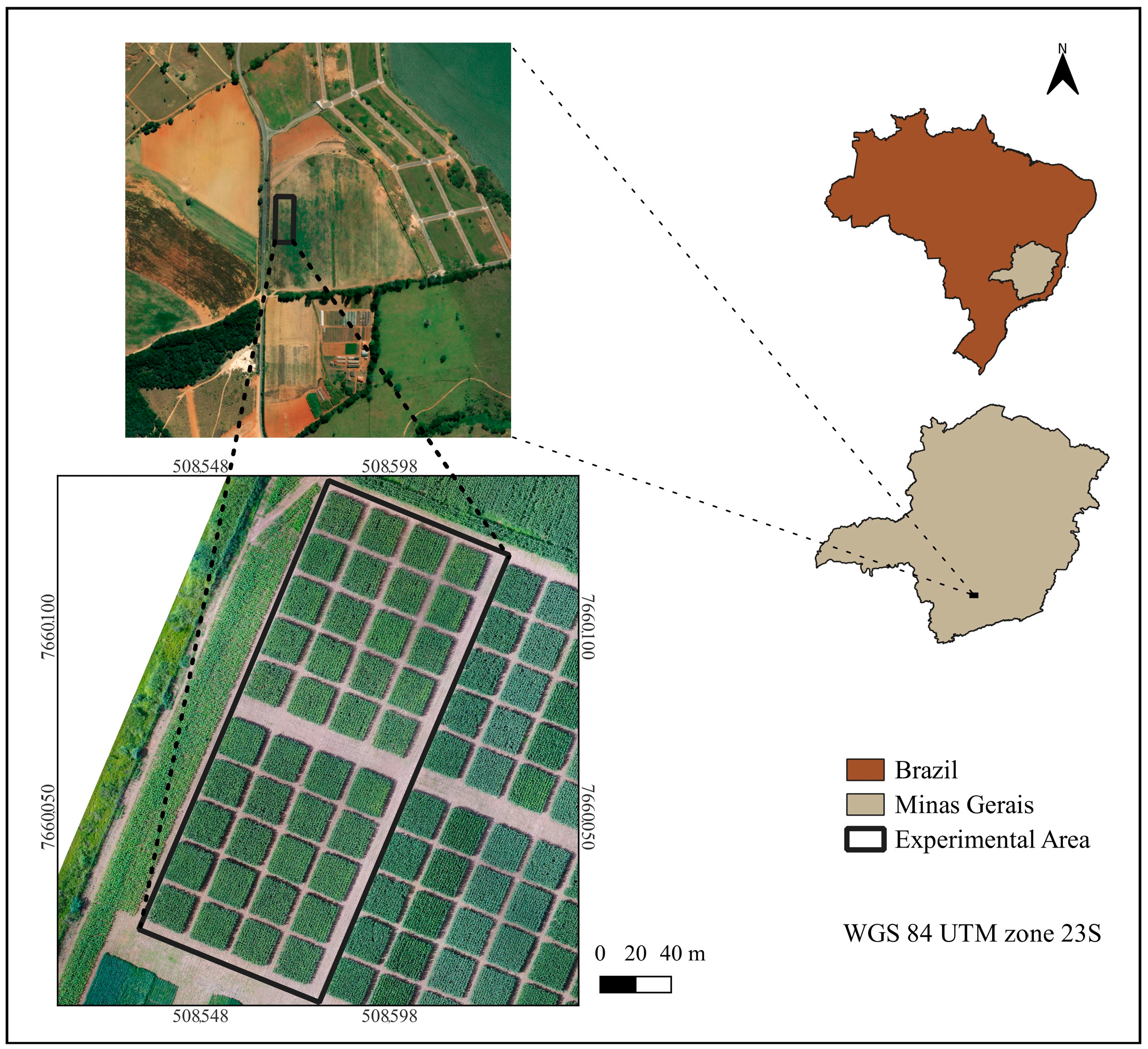

2.1. Study Area

2.2. Data Acquisition in the Field

2.3. Image Acquisition and Processing

2.4. Extraction of Vegetation Indices and Digital Elevation Model

2.5. Machine Learning Algorithms for Plant Height Estimation

2.6. Pre-Processing of Data and Statistical Analysis

3. Results

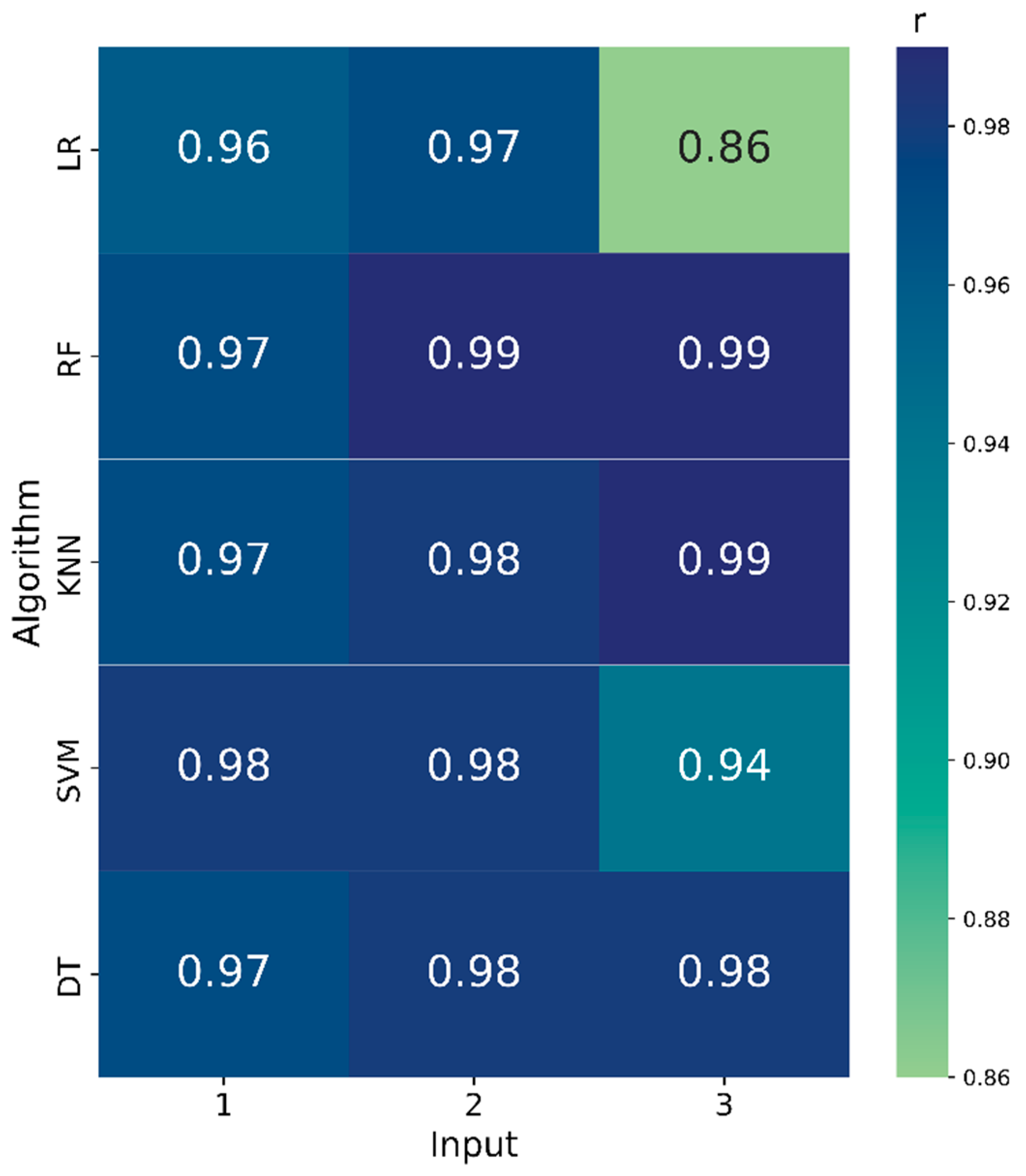

3.1. Correlation between Observed and Estimated Height Values

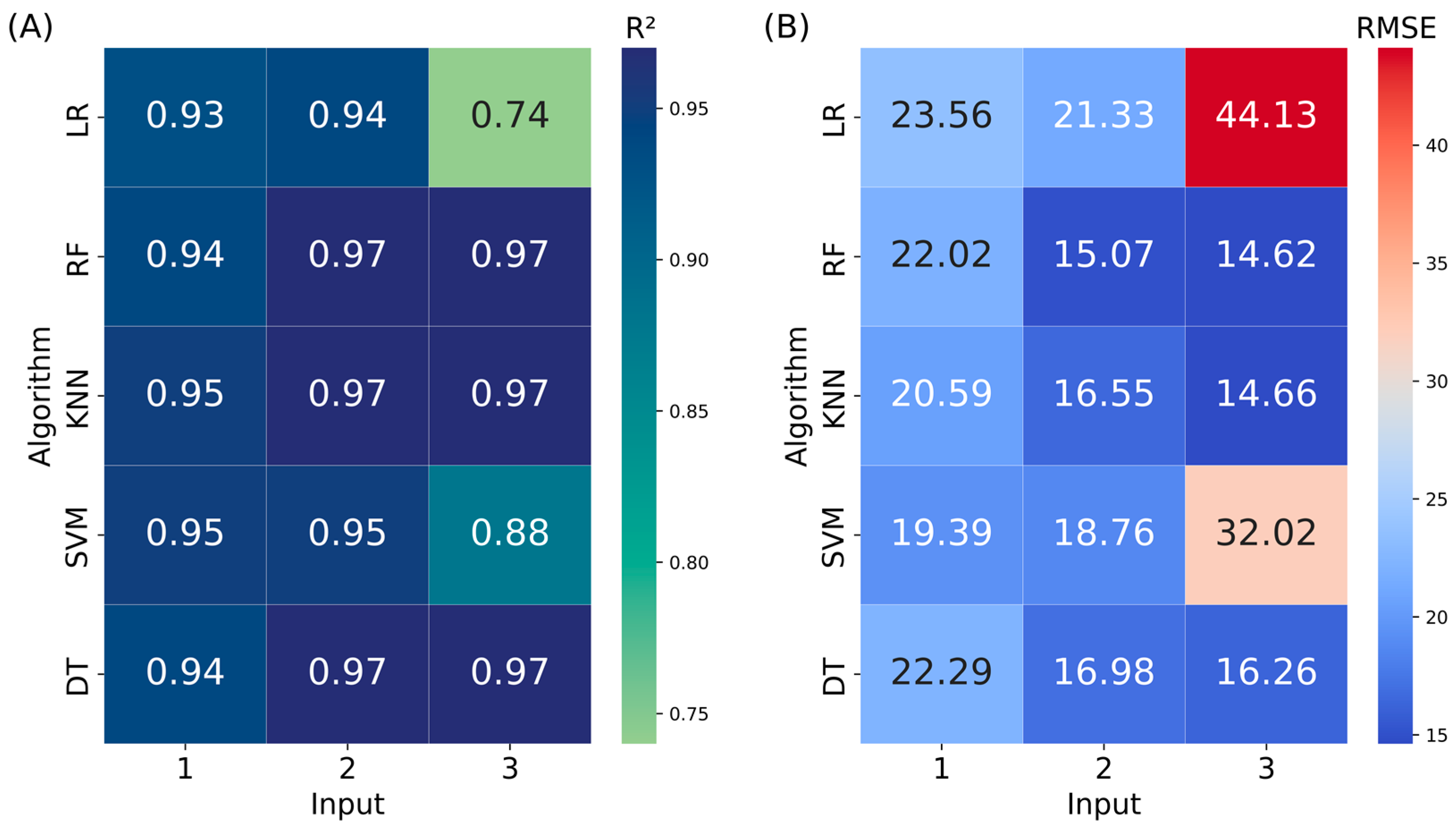

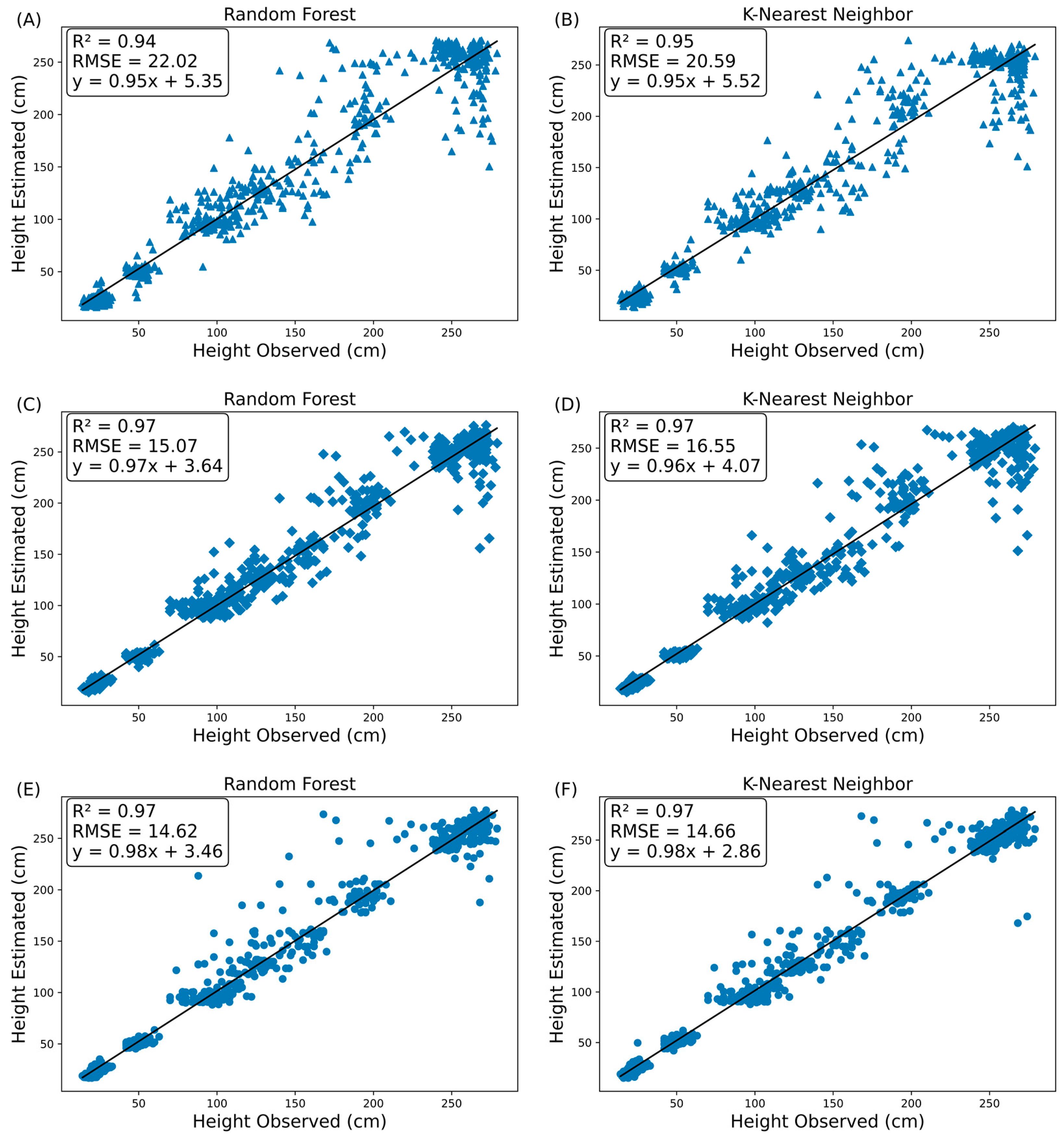

3.2. Comparison and Performance of Machine Learning Algorithms

3.3. Estimation of Plant Height in the Field

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Du, L.; Yang, H.; Song, X.; Wei, N.; Yu, C.; Wang, W.; Zhao, Y. Estimating leaf area index of maize using UAV-based digital imagery and machine learning methods. Sci. Rep. 2022, 12, 24.

- Luo, S.; Liu, W.; Zhang, Y.; Wang, C.; Xi, X.; Nie, S.; Ma, D.; Lin, Y.; Zhou, G. Maize and soybean heights estimation from unmanned aerial vehicle (UAV) LiDAR data. Comput. Electron. Agric. 2021, 182, 106005.

- Jung, J.; Maeda, M.; Chang, A.; Bhandari, M.; Ashapure, A.; Landivar-Bowles, J. The potential of remote sensing and artificial intelligence as tools to improve the resilience of agriculture production systems. Curr. Opin. Biotechnol. 2021, 70, 15–22.

- Li, F.; Miao, Y.; Chen, X.; Sun, Z.; Stueve, K.; Yuan, F. In-Season Prediction of Maize Grain Yield through PlanetScope and Sentinel-2 Images. Agronomy 2022, 12, 3176.

- Schwalbert, R.A.; Amado, T.; Corassa, G.; Pott, L.P.; Prasad, P.V.V.; Ciampitti, I.A. Satellite-based soybean yield forecast: Integrating machine learning and weather data for improving crop yield prediction in southern Brazil. Agric. For. Meteorol. 2020, 284, 107886.

- Souza, J.B.C.; de Almeida, S.L.H.; de Oliveira, M.F.; dos Santos, A.F.; de Brito Filho, A.L.; Meneses, M.D.; da Silva, R.P. Integrating Satellite and UAV Data to Predict Peanut Maturity upon Artificial Neural Networks. Agronomy 2022, 12, 1512.

- Liu, W.; Li, Y.; Liu, J.; Jiang, J. Estimation of Plant Height and Aboveground Biomass of Toona sinensis under Drought Stress Using RGB-D Imaging. Forests 2021, 12, 1747.

- Ji, Y.; Liu, R.; Xiao, Y.; Cui, Y.; Chen, Z.; Zong, X.; Yang, T. Faba bean above-ground biomass and bean yield estimation based on consumer-grade unmanned aerial vehicle RGB images and ensemble learning. Precis. Agric. 2023, 28, 1439–1460.

- Che, Y.; Wang, Q.; Xie, Z.; Zhou, L.; Li, S.; Hui, F.; Wang, X.; Li, B.; Ma, Y. Estimation of maize plant height and leaf area index dynamics using an unmanned aerial vehicle with oblique and nadir photography. Ann. Bot. 2020, 126, 765–773.

- Gilliot, J.M.; Michelin, J.; Hadjard, D.; Houot, S. An accurate method for predicting spatial variability of maize yield from UAV-based plant height estimation: A tool for monitoring agronomic field experiments. Precis. Agric. 2020, 22, 897–921.

- Osco, L.P.; Marcato Junior, J.; Ramos, A.P.M.; Furuya, D.E.G.; Santana, D.C.; Teodoro, L.P.R.; Gonçalves, W.N.; Baio, F.H.R.; Pistori, H.; da Silva Junior, C.A.; et al. Leaf Nitrogen Concentration and Plant Height Prediction for Maize Using UAV-Based Multispectral Imagery and Machine Learning Techniques. Remote Sens. 2020, 12, 3237.

- Tao, H.; Feng, H.; Xu, L.; Miao, M.; Yang, G.; Yang, X.; Fan, L. Estimation of the Yield and Plant Height of Winter Wheat Using UAV-Based Hyperspectral Images. Sensors 2020, 20, 1231.

- Guo, T.; Fang, Y.; Cheng, T.; Tian, Y.; Zhu, Y.; Chen, Q.; Qiu, X.; Yao, X. Detection of wheat height using optimized multi-scan mode of LiDAR during the entire growth stages. Comput. Electron. Agric. 2019, 165, 104959.

- Yu, J.; Wang, J.; Leblon, B. Evaluation of Soil Properties, Topographic Metrics, Plant Height, and Unmanned Aerial Vehicle Multispectral Imagery Using Machine Learning Methods to Estimate Canopy Nitrogen Weight in Maize. Remote Sens. 2021, 13, 3105.

- Zhang, H.; Sun, Y.; Chang, L.; Qin, Y.; Chen, J.; Qin, Y.; Du, J.; Yi, S.; Wang, Y. Estimation of Grassland Canopy Height and Aboveground Biomass at the Quadrat Scale Using Unmanned Aerial Vehicle. Remote Sens. 2018, 10, 851.

- Qiao, L.; Gao, D.; Zhao, R.; Tang, W.; An, L.; Li, M.; Sun, H. Improving estimation of LAI dynamic by fusion of morphological and vegetation indices based on UAV imagery. Comput. Electron. Agric. 2022, 192, 106603.

- Liakos, K.; Busato, P.; Moshou, D.; Pearson, S.; Bochtis, D. Machine Learning in Agriculture: A review. Sensors 2018, 18, 2674.

- Yoosefzadeh-Najafabadi, M.; Earl, H.J.; Tulpan, D.; Sulik, J.; Eskandari, M. Application of Machine Learning Algorithms in Plant Breeding: Predicting yield from hyperspectral reflectance in soybean. Front. Plant Sci. 2021, 11, 9–99.

- Corte, A.P.D.; Souza, D.V.; Rex, F.E.; Sanquetta, C.R.; Mohan, M.; Silva, C.A.; Zambrano, A.M.A.; Prata, G.; de Almeida, D.R.A.; Trautenmuller, J.W.; et al. Forest inventory with high-density UAV-Lidar: Machine learning approaches for predicting individual tree attributes. Comput. Electron. Agric. 2020, 179, 105815.

- Li, M.; Zhao, J.; Yang, X. Building a new machine learning-based model to estimate county-level climatic yield variation for maize in Northeast China. Comput. Electron. Agric. 2021, 191, 106557.

- Feng, L.; Zhang, Z.; Ma, Y.; Du, Q.; Williams, P.; Drewry, J.; Luck, B. Alfalfa Yield Prediction Using UAV-Based Hyperspectral Imagery and Ensemble Learning. Remote Sens. 2020, 12, 2028.

- Garcia, E.M.; Alberti, M.G.; Álvarez, A.A.A. Measurement-While-Drilling Based Estimation of Dynamic Penetrometer Values Using Decision Trees and Random Forests. Appl. Sci. 2022, 12, 4565.

- Liu, B.; Liu, Y.; Huang, G.; Jiang, X.; Liang, Y.; Yang, C.; Huang, L. Comparison of yield prediction models and estimation of the relative importance of main agronomic traits affecting rice yield formation in saline-sodic paddy fields. Eur. J. Agron. 2023, 148, 126870.

- Rodriguez-Puerta, F.; Ponce, R.A.; Pérez-Rodríguez, F.; Águeda, B.; Martín-García, S.; Martínez-Rodrigo, R.; Lizarralde, I. Comparison of Machine Learning Algorithms for Wildland-Urban Interface Fuelbreak Planning Integrating ALS and UAV-Borne LiDAR Data and Multispectral Images. Drones 2020, 4, 21.

- Zamri, N.; Pairan, M.A.; Azman, W.N.A.W.; Abas, S.S.; Abdullah, L.; Naim, S.; Tarmudi, Z.; Gao, M. A comparison of unsupervised and supervised machine learning algorithms to predict water pollutions. Procedia Comput. Sci. 2022, 204, 172–179.

- Phinzi, K.; Abriha, D.; Szabó, S. Classification Efficacy Using K-Fold Cross-Validation and Bootstrapping Resampling Techniques on the Example of Mapping Complex Gully Systems. Remote Sens. 2021, 13, 2980.

- Köppen, W. Climatologia: Com un Estúdio de los Climas de la Tierra; Fondo de Cultura Economica: Mexico, Mexico, 1948; 478 p.

- Foloni, J.S.S.; Calonego, J.C.; Catuchi, T.A.; Belleggia, N.A.; Tiritan, C.S.; De Barbosa, A.M. Cultivares de milho em diferentes populações de plantas com espaçamento reduzido na safrinha. Rev. Bras. Milho E Sorgo 2014, 13, 312–325. Available online: https://ainfo.cnptia.embrapa.br/digital/bitstream/item/128140/1/cultivares-de-milho.pdf (accessed on 9 April 2023).

- He, F.; Zhou, T.; Xiong, W.; Hasheminnasab, S.; Habib, A. Automated Aerial Triangulation for UAV-Based Mapping. Remote Sens. 2018, 10, 1952.

- ESA, European Space Agency. PlanetScope. 2023. Available online: https://earth.esa.int/eogateway/missions/planetscope (accessed on 27 February 2023).

- Planet. Planet Imagery Product Specification. 2020. Available online: https://assets.planet.com/marketing/PDF/Planet_Surface_Reflectance_Technical_White_Paper.pdf (accessed on 27 February 2023).

- Jurgiel, B. Point Sampling Tool [Github Repository]. Available online: https://github.com/borysiasty/pointsamplingtool (accessed on 27 February 2023).

- Barboza, T.O.C.; Ardigueri, M.; Souza, G.F.C.; Ferraz, M.A.J.; Gaudencio, J.R.F.; Dos Santos, A.F. Performance of Vegetation Indices to Estimate Green Biomass Accumulation in Common Bean. AgriEngineering 2023, 5, 840–854.

- Tedesco, D.; De Oliveira, M.F.; Dos Santos, A.F.; Silva, E.H.C.; De Rolim, G.S.; Da Silva, R.P. Use of remote sensing to characterize the phenological development and to predict sweet potato yield in two growing seasons. Eur. J. Agron. 2021, 129, 126337.

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the Great Plains with ERTS. In Proceedings of the Third Earth Resource Technology Satellite (ERTS) Symposium, Washington, DC, USA, 10–14 December 1974.

- Gitelson, A.; Merzlyak, M.N. Quantitative estimation of chlorophyll-a using reflectance spectra: Experiments with autumn chestnut and maple leaves. J. Photochem. Photobiol. B Biol. 1994, 22, 247–252.

- Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

- Letsoin, S.M.A.; Guth, D.; Herak, D.; Purwestri, R.C. Analysing Maize Plant Height Using Unmanned Aerial Vehicle (UAV) RGB based on Digital Surface Models (DSM). IOP Conf. Ser. Earth Environ. Sci. 2023, 1187, 012028.

- Trevisan, L.R.; Brichi, L.; Gomes, T.M.; Rossi, F. Estimating Black Oat Biomass Using Digital Surface Models and a Vegetation Index Derived from RGB-Based Aerial Images. Remote Sens. 2023, 15, 1363.

- Wang, X.; Zhang, R.; Song, W.; Han, L.; Liu, X.; Sun, X.; Luo, M.; Chen, K.; Zhang, Y.; Yang, H.; et al. Dynamic plant height QTL revealed in maize through remote sensing phenotyping using a high-throughput unmanned aerial vehicle (UAV). Sci. Rep. 2019, 9, 3458.

- Karasiak, N. Dzetsaka: Classification Tool [Github Repository]. 2020. Available online: https://github.com/nkarasiak/dzetsaka/ (accessed on 27 February 2023).

- Cavalcanti, V.P.; Dos Santos, A.F.; Rodrigues, F.A.; Terra, W.C.; Araujo, R.C.; Ribeiro, C.R.; Campos, V.P.; Rigobelo, E.C.; Medeiros, F.H.V.; Dória, J. Use of RGB images from unmanned aerial vehicle to estimate lettuce growth in root-knot nematode infested soil. Smart Agric. Technol. 2023, 3, 100100.

- Nutt, A.T.; Batsell, R.R. Multiple linear regression: A realistic reflector. Data Anal. 1973, 19, 21.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

- Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297.

- Rokach, L.; Maimon, O. Decision Trees. In Data Mining and Knowledge Discovery Handbook; Springer: Berlin/Heidelberg, Germany, 2005; pp. 165–192.

- James, G.; Witten, D.; Hastie, T.; Tibshirani, R. An Introduction to Statistical Learning: With Applications in R, 1st ed.; Springer: Berlin/Heidelberg, Germany, 2013.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Müller, A.; Nothman, J.; Louppe, G. Scikit-learn: Machine learning in Python. arXiv 2012, arXiv:1201.0490.

- Scikit-Learn. 2011. Available online: https://scikit-learn.org/stable/ (accessed on 27 February 2023).

- Yu, D.; Zha, Y.; Sun, Z.; Li, J.; Jin, X.; Zhu, W.; Bian, J.; Ma, L.; Zeng, Y.; Su, Z. Deep convolutional neural networks for estimating maize above-ground biomass using multi-source UAV images: A comparison with traditional machine learning algorithms. Precis. Agric. 2022, 24, 92–113.

- Rueda-Ayala, V.; Pena, J.; Hoglind, M.; Bengochea-Guevara, J.; Andojar, D. Comparing UAV-Based Technologies and RGB-D Reconstruction Methods for Plant Height and Biomass Monitoring on Grass Ley. Sensors 2019, 19, 535.

- Zhao, J.; Pan, F.; Xiao, X.; Hu, L.; Wang, X.; Yan, Y.; Zhang, S.; Tian, B.; Yu, H.; Lan, Y. Summer Maize Growth Estimation Based on Near-Surface Multi-Source Data. Agronomy 2023, 13, 532.

- Wang, D.; Wan, B.; Liu, J.; Su, Y.; Guo, Q.; Qiu, P.; Wu, X. Estimating aboveground biomass of the mangrove forests on northeast Hainan Island in China using an upscaling method from field plots, UAV-LiDAR data and Sentinel-2 imagery. Int. J. Appl. Earth Obs. Geoinf. 2020, 85, 101986.

- Zhou, L.; Gu, X.; Cheng, S.; Yang, G.; Shu, M.; Sun, Q. Analysis of Plant Height Changes of Lodged Maize Using UAV-LiDAR Data. Agriculture 2020, 10, 146.

- Bai, D.; Li, D.; Zhao, C.; Wang, Z.; Shao, M.; Guo, B.; Liu, Y.; Wang, Q.; Li, J.; Guo, S.; et al. Estimation of soybean yield parameters under lodging conditions using RGB information from unmanned aerial vehicles. Front. Plant Sci. 2022, 13, 1012293.

- Kraus, K.; Waldhausl, P. Manuel de Photogrammétrie: Principes et Procédés Fondamentaux; Hermes: Paris, France, 1998.

- Hu, P.; Chapman, S.C.; Wang, X.; Potgieter, A.; Duan, T.; Jordan, D.; Guo, Y.; Zheng, B. Estimation of plant height using a high throughput phenotyping platform based on unmanned aerial vehicle and self-calibration: Example for sorghum breeding. Eur. J. Agron. 2018, 95, 24–32.

- Niu, Y.; Zhang, L.; Zhang, H.; Han, W.; Peng, X. Estimating Above-Ground Biomass of Maize Using Inputs Derived from UAV-Based RGB Imagery. Remote Sens. 2019, 11, 1261.

- Walter, J.D.C.; Edwards, J.; McDonald, G.; Kuchel, H. Estimating Biomass and Canopy Height With LiDAR for Field Crop Breeding. Front. Plant Sci. 2019, 10, 1145.

- Geipel, J.; Link, J.; Claupein, W. Combined Spectral and Spatial Modeling of Maize Yield Based on Aerial Images and Crop Surface Models Acquired with an Unmanned Aircraft System. Remote Sens. 2014, 6, 10335.

- Teodoro, P.E.; Teodoro, L.P.R.; Baio, F.H.R.; Da Silva Junior, C.A.; Dos Santos, R.G.; Ramos, A.P.M.; Pinheiro, M.M.F.; Osco, L.P.; Gonçalves, W.N.; Carneiro, A.M.; et al. Predicting Days to Maturity, Plant Height, and Grain Yield in Soybean: A machine and deep learning approach using multispectral data. Remote Sens. 2021, 13, 4632.

- Li, W.; Niu, Z.; Chen, H.; Li, D.; Wu, M.; Zhao, W. Remote estimation of canopy height and aboveground biomass of maize using high-resolution stereo images from a low-cost unmanned aerial vehicle system. Ecol. Indic. 2016, 67, 637–648.

| VI | Equation | Reference |
|---|---|---|
| NDVI ¹ | (NIR − Red)/(NIR + Red) | [35] |
| NDRE | (NIR − RedEdge)/(NIR + RedEdge) | [36] |
| GNDVI | (NIR − Green)/(NIR + Green) | [37] |
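
The three indices in the table share the same normalized-difference form, so they can be computed with one helper. The following is a minimal sketch, assuming the bands are available as NumPy arrays of surface reflectance; the function names and the sample reflectance values are hypothetical and are not taken from the study.

```python
import numpy as np


def normalized_difference(band_a, band_b):
    """Return (band_a - band_b) / (band_a + band_b); NaN where the sum is zero."""
    a = np.asarray(band_a, dtype=float)
    b = np.asarray(band_b, dtype=float)
    total = a + b
    out = np.full(total.shape, np.nan)
    # Divide only where the denominator is non-zero; other cells stay NaN.
    np.divide(a - b, total, out=out, where=total != 0)
    return out


def vegetation_indices(nir, red, green, red_edge):
    """Compute the three indices listed in the table from reflectance bands."""
    return {
        "NDVI": normalized_difference(nir, red),
        "NDRE": normalized_difference(nir, red_edge),
        "GNDVI": normalized_difference(nir, green),
    }


# Hypothetical reflectance values for three pixels (the last one has no signal).
nir = np.array([0.50, 0.40, 0.0])
red = np.array([0.10, 0.20, 0.0])
green = np.array([0.10, 0.10, 0.0])
red_edge = np.array([0.30, 0.20, 0.0])

indices = vegetation_indices(nir, red, green, red_edge)
for name, values in indices.items():
    print(name, np.round(values, 3))
```

Masking the zero-denominator pixels keeps no-data cells from raising warnings or producing infinities when the same function is applied to whole rasters.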

| Algorithm | Input ¹ | Training R² | Training RMSE (cm) | Test R² | Test RMSE (cm) |
|---|---|---|---|---|---|
| Linear Regression | 1 | 0.93 | 24.56 | 0.93 | 23.56 |
| Linear Regression | 2 | 0.93 | 22.71 | 0.94 | 21.33 |
| Linear Regression | 3 | 0.74 | 45.01 | 0.74 | 44.13 |
| Random Forest | 1 | 0.94 | 24.49 | 0.94 | 22.02 |
| Random Forest | 2 | 0.97 | 16.49 | 0.97 | 15.07 |
| Random Forest | 3 | 0.97 | 15.76 | 0.97 | 14.62 |
| K-Nearest Neighbor | 1 | 0.95 | 18.74 | 0.95 | 20.59 |
| K-Nearest Neighbor | 2 | 0.97 | 14.10 | 0.97 | 16.55 |
| K-Nearest Neighbor | 3 | 0.97 | 11.84 | 0.97 | 14.66 |
| Support Vector Machine | 1 | 0.95 | 17.81 | 0.95 | 19.39 |
| Support Vector Machine | 2 | 0.95 | 15.86 | 0.95 | 18.76 |
| Support Vector Machine | 3 | 0.87 | 30.86 | 0.88 | 32.02 |
| Decision Trees | 1 | 0.94 | 19.76 | 0.94 | 22.29 |
| Decision Trees | 2 | 0.98 | 15.60 | 0.97 | 16.98 |
| Decision Trees | 3 | 0.97 | 14.84 | 0.97 | 16.26 |
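
A comparison of this kind (five regressors, each scored by R² and RMSE on training and test sets) can be sketched with scikit-learn, which the reference list cites. The example below is illustrative only: the data are synthetic, and the 70/30 split, the hyperparameters, and all variable names are assumptions rather than the settings used in the study.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR
from sklearn.tree import DecisionTreeRegressor

# Synthetic stand-in data: three predictors and a plant height in cm.
rng = np.random.default_rng(0)
n = 300
X = rng.uniform(0.1, 0.9, size=(n, 3))
y = 50 + 250 * X[:, 0] + 30 * X[:, 1] ** 2 + rng.normal(0, 10, n)

# Assumed 70/30 split; the split used in the study is not stated here.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# Assumed hyperparameters, chosen only to make the sketch run sensibly.
models = {
    "Linear Regression": LinearRegression(),
    "Random Forest": RandomForestRegressor(n_estimators=100, random_state=0),
    "K-Nearest Neighbor": make_pipeline(StandardScaler(), KNeighborsRegressor(5)),
    "Support Vector Machine": make_pipeline(StandardScaler(), SVR(C=100.0)),
    "Decision Trees": DecisionTreeRegressor(max_depth=5, random_state=0),
}


def evaluate(model, X_eval, y_eval):
    """Return (R2, RMSE) of a fitted model on the given data."""
    pred = model.predict(X_eval)
    rmse = float(np.sqrt(mean_squared_error(y_eval, pred)))
    return float(r2_score(y_eval, pred)), rmse


results = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    results[name] = {
        "train": evaluate(model, X_train, y_train),
        "test": evaluate(model, X_test, y_test),
    }
    (r2_tr, rmse_tr), (r2_te, rmse_te) = results[name]["train"], results[name]["test"]
    print(f"{name:24s} train R2={r2_tr:.2f} RMSE={rmse_tr:.2f} | "
          f"test R2={r2_te:.2f} RMSE={rmse_te:.2f}")
```

Reporting both splits side by side, as the table does, makes overfitting visible: a model whose training RMSE is far below its test RMSE is fitting noise rather than signal.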

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ferraz, M.A.J.; Barboza, T.O.C.; Arantes, P.d.S.; Von Pinho, R.G.; Santos, A.F.d. Integrating Satellite and UAV Technologies for Maize Plant Height Estimation Using Advanced Machine Learning. AgriEngineering 2024, 6, 20-33. https://doi.org/10.3390/agriengineering6010002
