Augmented Reality Applied to Identify Aromatic Herbs Using Mobile Devices

Abstract

1. Introduction

- Morphological similarity: Many aromatic herbs share visual characteristics, such as leaf shape, color, and texture, which can lead to misidentification. Misidentification compromises food safety and causes economic losses through incorrect classification. Augmented reality has been reported to perform well on problems involving morphological similarity while consuming fewer computational and network resources [12].

- Lack of technical knowledge: Consumers, and even some professionals in the food sector, may lack the technical knowledge needed to distinguish between species of aromatic herbs. This gap leads to errors in identification and classification that affect food safety and quality, and it creates an opportunity for mobile devices with augmented reality [13].

- Economic impact: Incorrect identification of herbs can lead to economic losses, such as withdrawal of products from specific locations, waste, and damage to the companies’ reputation. Accurate identification is essential to prevent these economic consequences [14].

- Technological limitations: Current herb identification methods are often limited and depend on an Internet connection, which is not available in every setting. At CEAGESP, the proximity of a provisional detention center limits Internet connectivity through electromagnetic interference and security restrictions, which rules out the cloud-based systems commonly used in augmented reality applications [15].

- Efficiency and user experience: Without advanced technological solutions, the current methods of identifying aromatic herbs are inefficient and do not provide a satisfactory user experience. The traditional printed leaflet method is prone to error and cannot offer detailed, real-time information about the herbs [16].

2. Background

2.1. Food Security Challenges Involving Aromatic Herbs

2.2. Augmented Reality and Its Applications in the Food Chain

2.3. Computer Vision Associated with Augmented Reality

2.4. Related Works

3. Materials and Methods

3.1. Problem Description

3.2. Define Requirements for Artifacts

- Cineole, linalool, camphor, and 4-terpinenol

- Diallyl organosulfurs

- α-Pinene, carvone, limonene, linalool, and myristicin

- Caryophyllene, thymol, and terpinene

- Estragole and anethole

- Menthyl acetate

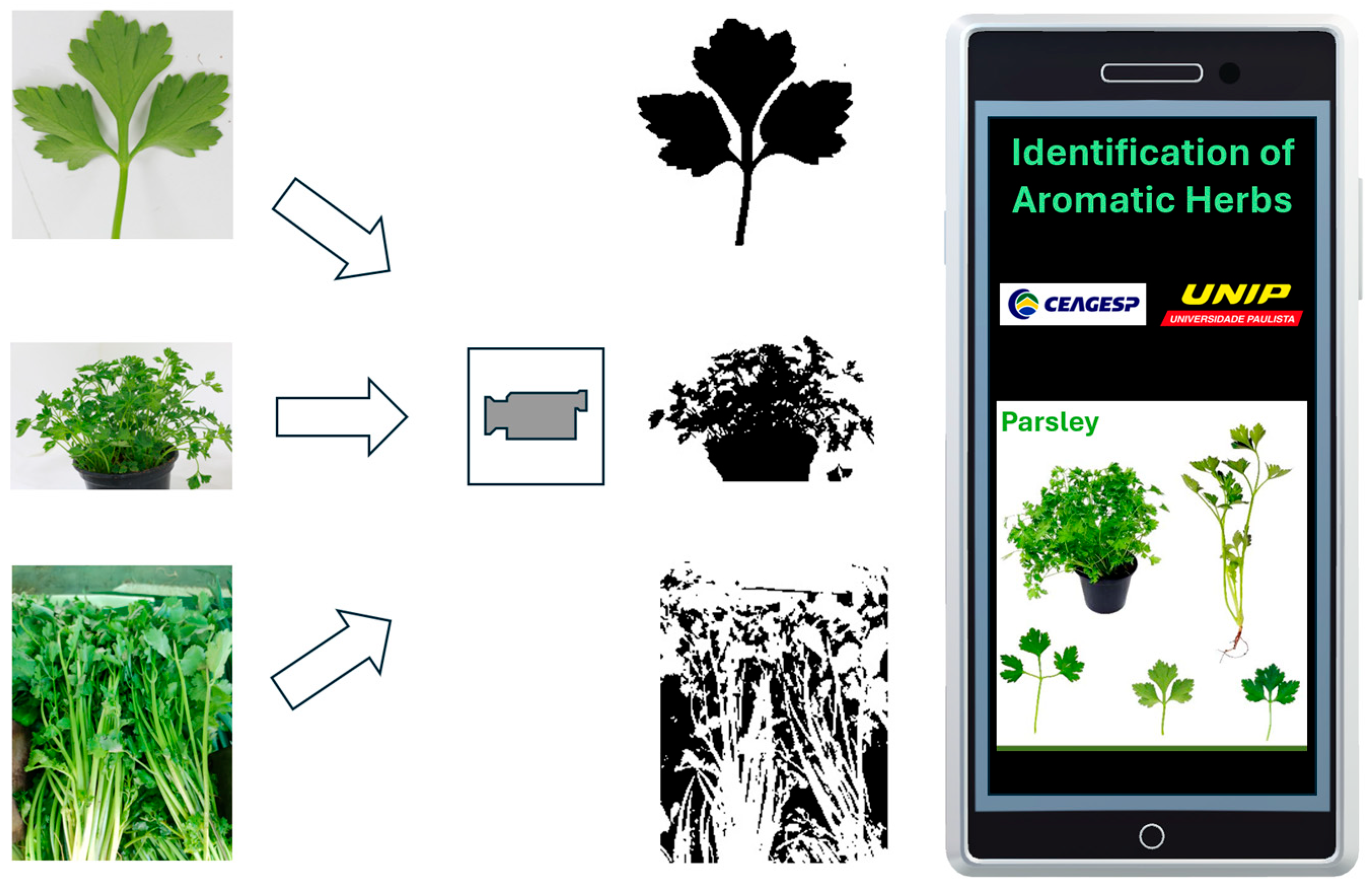

3.3. Design and Development of the Artifact

3.3.1. Design

3.3.2. Artifact Development

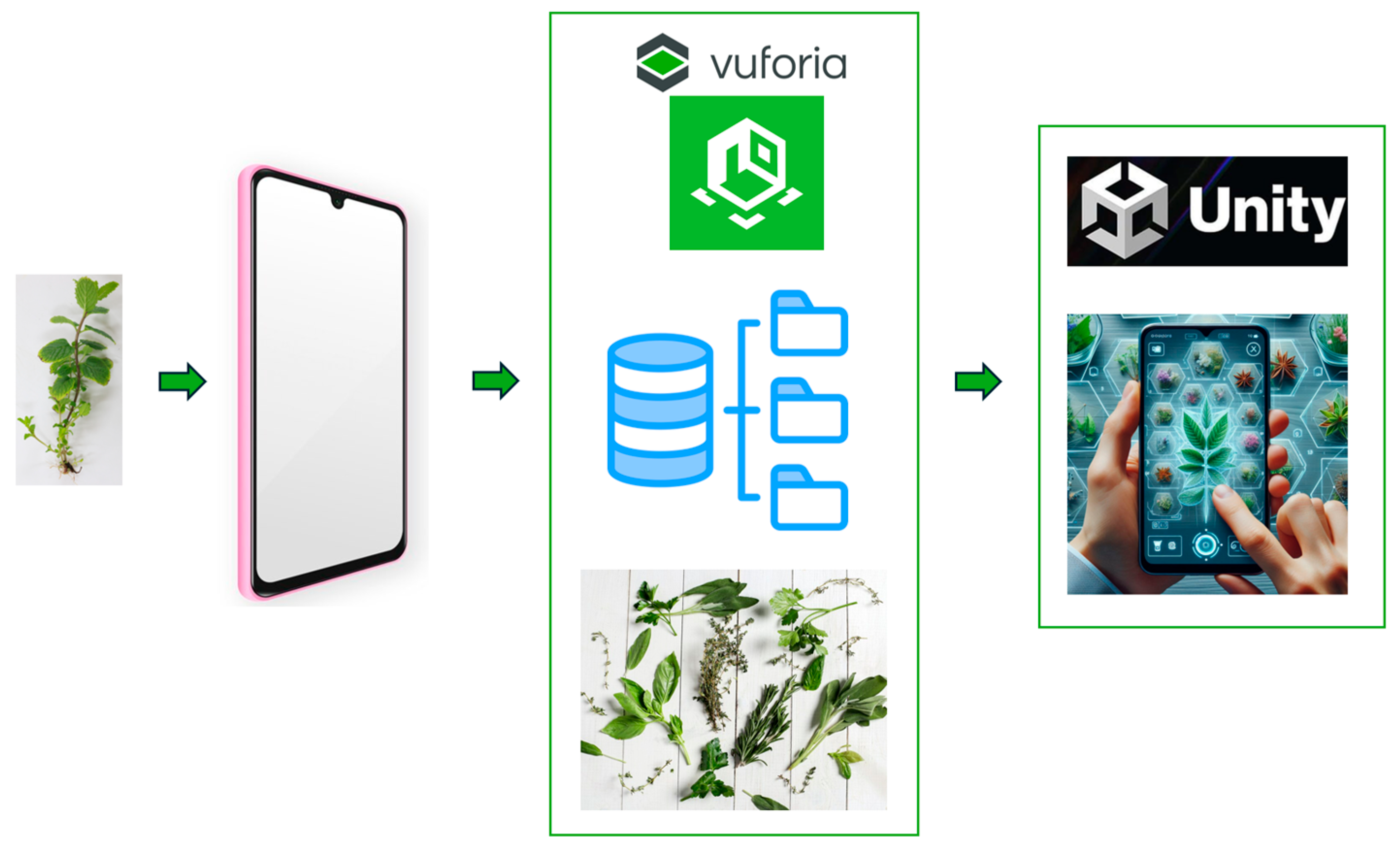

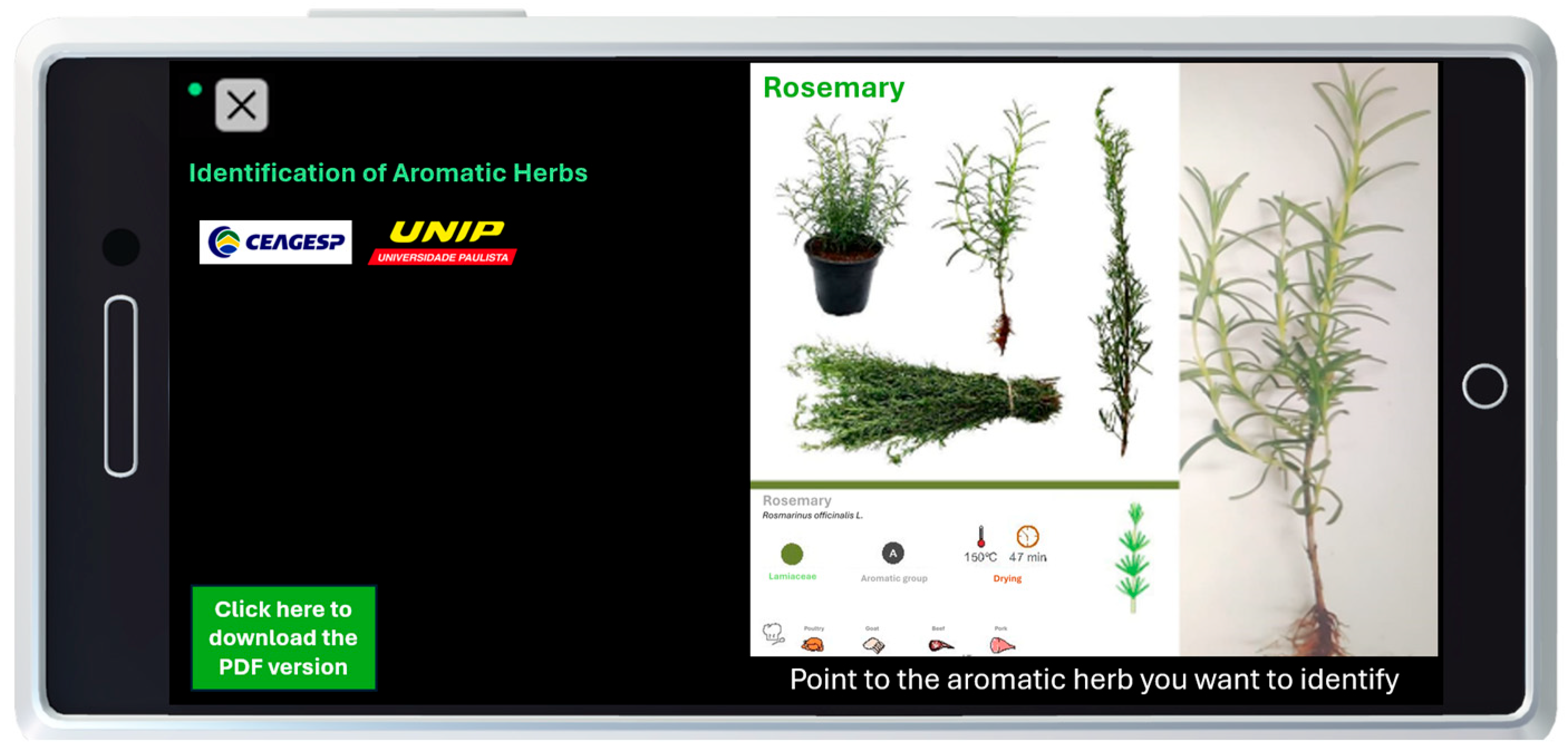

- Image tracking: The application uses Vuforia Engine 10.6 to track images in real time. The mobile device's camera captures frames, which are compared with a local database of images of known herbs. When a match is found, virtual objects and information are overlaid on the real-world image.
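Vuforia's internal matching pipeline is proprietary, so the following is only a minimal sketch of the general idea of identifying a herb offline against an on-device database. It swaps in a generic technique: binary feature descriptors (packed into Python integers) are matched by Hamming distance with Lowe's ratio test, and a herb is accepted only when enough descriptors match. The names `ReferenceImage`, `identify`, `ratio`, and `min_matches`, and their default values, are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ReferenceImage:
    """One entry of a hypothetical on-device reference database."""
    herb: str
    descriptors: list[int]  # binary feature descriptors packed into ints


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def count_good_matches(query: list[int], reference: list[int], ratio: float = 0.8) -> int:
    """Count query descriptors that pass Lowe's ratio test against one reference image."""
    good = 0
    for q in query:
        dists = sorted(hamming(q, r) for r in reference)
        # Accept only if the best neighbour is clearly closer than the runner-up.
        if len(dists) >= 2 and dists[0] < ratio * dists[1]:
            good += 1
    return good


def identify(query: list[int], database: list[ReferenceImage], min_matches: int = 3) -> str | None:
    """Return the herb with the most good matches, or None if nothing matches well enough."""
    best_herb, best_score = None, 0
    for ref in database:
        score = count_good_matches(query, ref.descriptors)
        if score > best_score:
            best_herb, best_score = ref.herb, score
    return best_herb if best_score >= min_matches else None
```

Because both the database and the matching live on the device, no network round trip is needed, which is the property the connectivity constraint at CEAGESP calls for.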

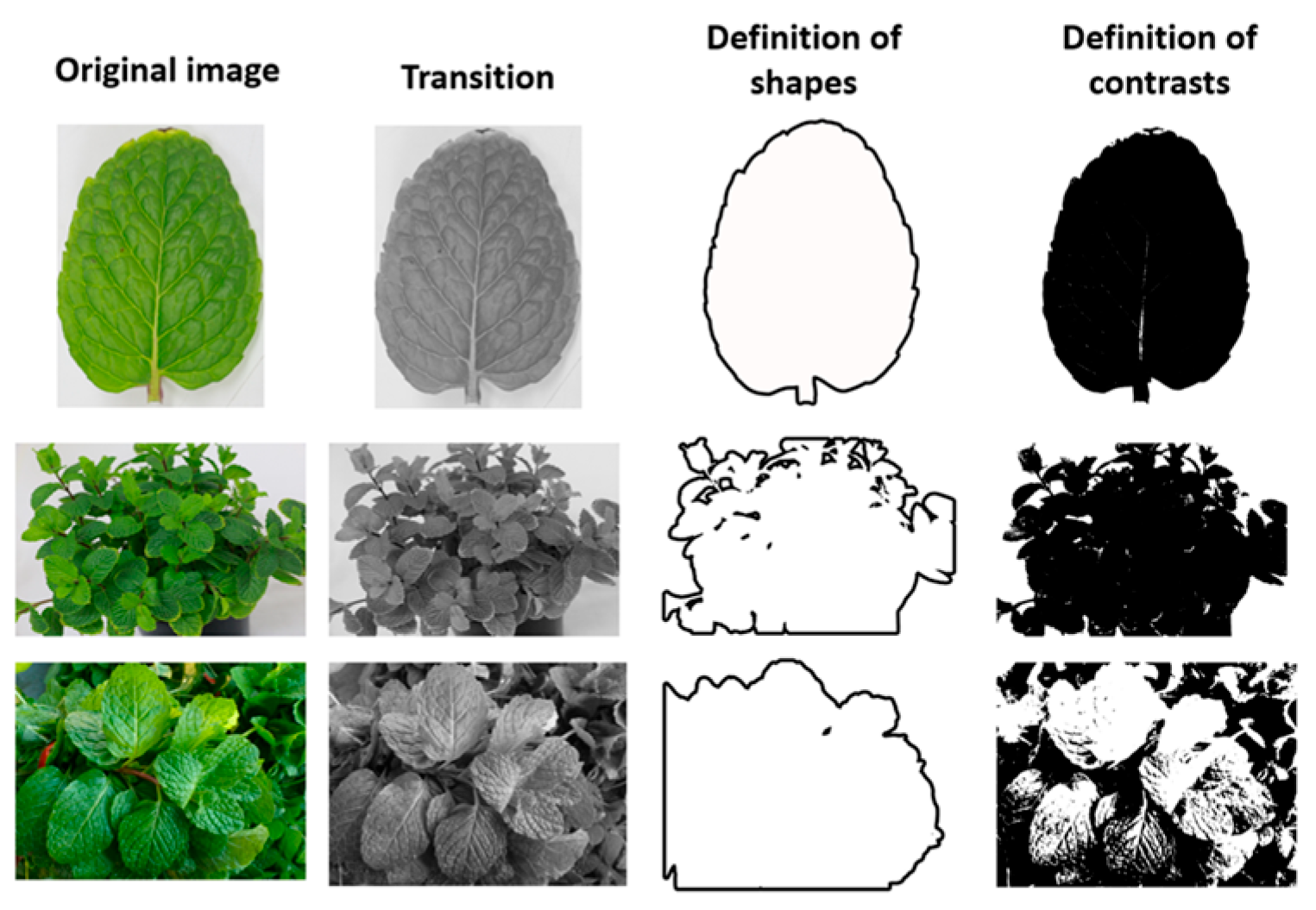

- Pattern recognition: Vuforia uses pattern recognition algorithms to identify known images in the camera feed. This involves analyzing the shapes, contrasts, and geometries of the herbs to support precise identification.

- Feature tracking: The application uses feature tracking to identify characteristic points in images, allowing the precise overlay of virtual information on physical images of the herb.

- Depth tracking: Depth tracking algorithms measure the distance between the camera and the herbs, ensuring accurate positioning of augmented reality elements.
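For a planar image target of known physical size, the camera-to-target distance follows from the pinhole camera model by similar triangles: Z = f · W / w, where f is the focal length in pixels, W the real width of the target in metres, and w its apparent width in pixels. Vuforia estimates the full pose of a target internally; the sketch below only illustrates this relation, and the function names and example values (a 10 cm target, a 1500 px focal length) are hypothetical.

```python
def distance_to_target(focal_length_px: float, target_width_m: float, target_width_px: float) -> float:
    """Pinhole-model distance (m) to a planar target of known width: Z = f * W / w."""
    if focal_length_px <= 0 or target_width_m <= 0 or target_width_px <= 0:
        raise ValueError("focal length and both widths must be positive")
    return focal_length_px * target_width_m / target_width_px


def overlay_width_px(focal_length_px: float, overlay_width_m: float, distance_m: float) -> float:
    """Apparent width (px) for drawing a virtual label of a given real width at distance Z."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return focal_length_px * overlay_width_m / distance_m


if __name__ == "__main__":
    # Hypothetical example: a 10 cm target spanning 300 px, seen with a 1500 px focal length.
    z = distance_to_target(1500.0, 0.10, 300.0)
    print(f"distance: {z:.2f} m")
    # A 5 cm virtual label placed on the target at that distance.
    print(f"label width: {overlay_width_px(1500.0, 0.05, z):.0f} px")
```

The same relation run in reverse keeps a virtual label at a constant physical size: as the user moves the phone away from the herb, the label shrinks on screen in proportion to 1/Z.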

3.4. Demonstrate Artifact

3.5. Validation

4. Results and Discussions

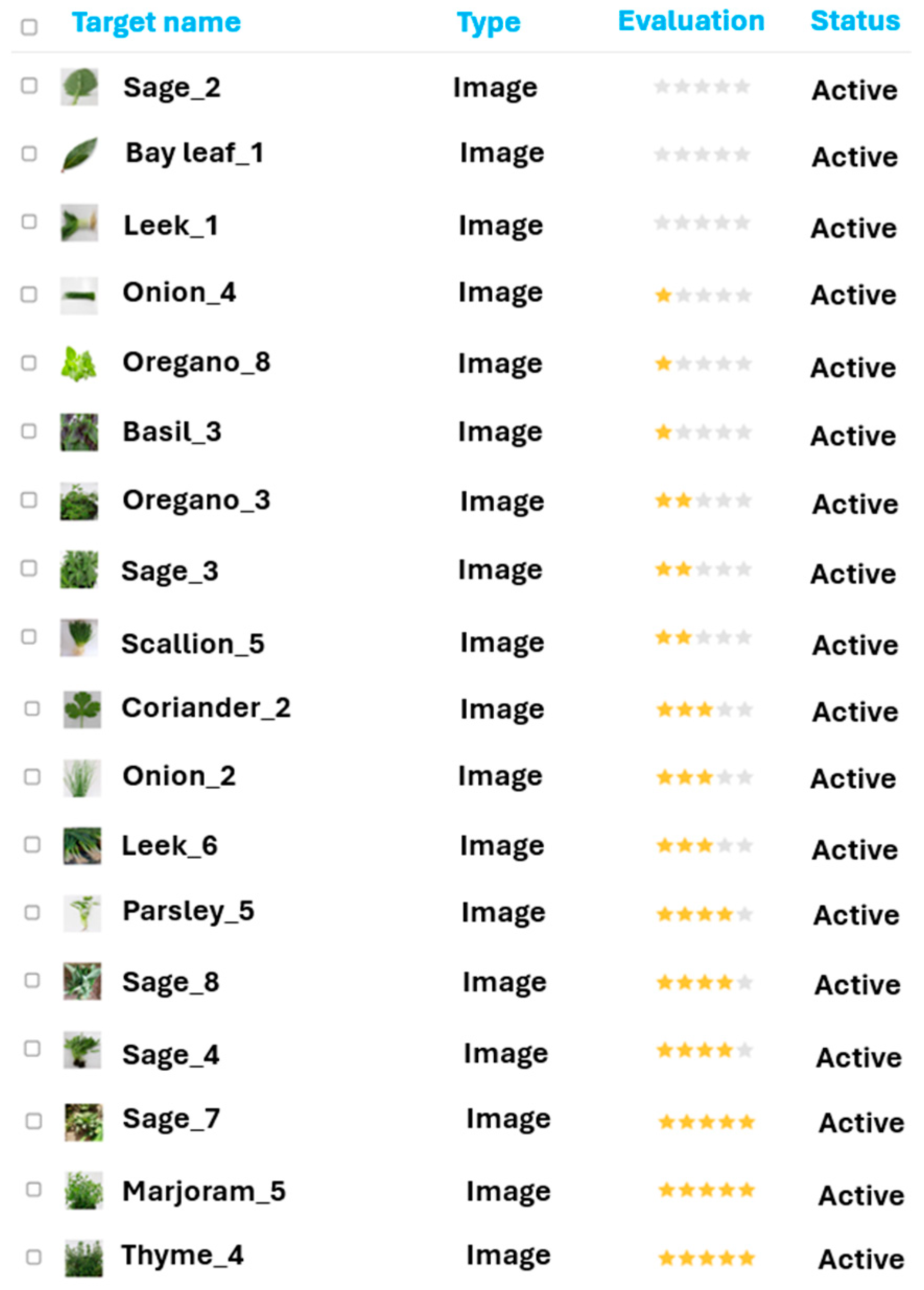

- Increased identification accuracy: By keeping only high-quality images rated five stars (the highest rating on the zero-to-five scale), the new database gives the recognition system in Unity a robust reference base, which increases its accuracy in identifying aromatic herbs.

- Improvement in system efficiency: With an optimized database containing only high-quality images, the system processes images more efficiently, resulting in shorter response times and more responsive interaction with the application.

- Reduction in errors: Restricting the database to well-rated images reduces errors in recognizing herbs, because the selected images represent the characteristics of each species clearly and distinctly, which helps the system classify them correctly.

- Quality of user experience: With a well-structured and accurate database, the user experience is significantly improved. The augmented reality application offers fast and reliable responses, increasing user satisfaction and the system’s overall usability.
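The rating table in this article reports how many candidate images received each star rating. Assuming the new database consists exactly of the five-star images, the sketch below shows the filter and the share of images it keeps (192 of 601, roughly 32%). The record layout (`name`, `stars`) and the function names are hypothetical.

```python
from __future__ import annotations

# Image counts per star rating, copied from the rating table in this article.
RATING_COUNTS = {5: 192, 4: 138, 3: 54, 2: 59, 1: 25, 0: 133}


def filter_by_rating(images: list[dict], min_stars: int = 5) -> list[dict]:
    """Keep only image records whose star rating is at least `min_stars`."""
    return [img for img in images if img["stars"] >= min_stars]


def retained_share(counts: dict[int, int], min_stars: int = 5) -> tuple[int, int, float]:
    """Return (kept, total, kept / total) for a given minimum star rating."""
    total = sum(counts.values())
    kept = sum(n for stars, n in counts.items() if stars >= min_stars)
    return kept, total, kept / total


if __name__ == "__main__":
    kept, total, share = retained_share(RATING_COUNTS)
    print(f"{kept} of {total} images kept ({share:.1%})")
```

Lowering `min_stars` to 4 would keep 330 images; the threshold trades database coverage against the reliability of each reference image.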

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. UNDP. United Nations Development Programme. Goal 2: Zero Hunger. Sustainable Development Goals. Available online: https://www.undp.org/sustainable-development-goals/zero-hunger (accessed on 12 December 2023).

2. Chauhan, C.; Dhir, A.; Akram, M.U.; Salo, J. Food loss and waste in food supply chains. A systematic literature review and framework development approach. J. Clean. Prod. 2021, 295, e126438.

3. Pages-Rebull, J.; Pérez-Ràfols, C.; Serrano, N.; del Valle, M.; Díaz-Cruz, J.M. Classification and authentication of spices and aromatic herbs using HPLC-UV and chemometrics. Food Biosci. 2023, 52, e102401.

4. Galanakis, C.M.; Rizou, M.; Aldawoud, T.M.S.; Ucak, I.; Rowan, N.J. Innovations and technology disruptions in the food sector within the COVID-19 pandemic and post-lockdown era. Trends Food Sci. Technol. 2021, 110, 193–200.

5. Kayikci, Y.; Subramanian, N.; Dora, M.; Bhatia, M.S. Food supply chain in the era of Industry 4.0: Blockchain technology implementation opportunities and impediments from the perspective of people, process, performance, and technology. Prod. Plan. Control. 2022, 33, 301–321.

6. Ghobakhloo, M.; Fathi, M.; Iranmanesh, M.; Maroufkhani, P.; Morales, M.E. Industry 4.0 ten years on: A bibliometric and systematic review of concepts, sustainability value drivers, and success determinants. J. Clean. Prod. 2021, 302, 127052.

7. Dubey, S.R.; Jalal, A.S. Application of Image Processing in Fruit and Vegetable Analysis: A Review. J. Intell. Syst. 2015, 24, 405–424.

8. Mohanty, S.P.; Hughes, D.P.; Salathé, M. Using deep learning for image-based plant disease detection. Front. Plant Sci. 2016, 7, e215232.

9. Jatnika, R.D.A.; Medyawati, I.; Hustinawaty, H. Augmented Reality Design of Indonesia Fruit Recognition. Int. J. Electr. Comput. Eng. 2018, 8, 4654–4662.

10. Kalinaki, K.; Shafik, W.; Gutu, T.J.L.; Malik, O.A. Computer Vision and Machine Learning for Smart Farming and Agriculture Practices. In Artificial Intelligence Tools and Technologies for Smart Farming and Agriculture Practices; IGI Global: Hershey, PA, USA, 2023; pp. 79–100.

11. Department of Economic and Social Affairs. Food Security, Nutrition, and Sustainable Agriculture. Available online: https://sdgs.un.org/topics/food-security-and-nutrition-and-sustainable-agriculture (accessed on 12 December 2023).

12. Cheok, A.D.; Karunanayaka, K. Virtual Taste and Smell Technologies for Multisensory Internet and Virtual Reality; Springer International Publishing: Cham, Switzerland, 2018.

13. Liberty, T.J.; Sun, S.; Kucha, C.; Adedeji, A.A.; Agidi, G.; Ngadi, M.O. Augmented reality for food quality assessment: Bridging the physical and digital worlds. J. Food Eng. 2024, 367, 111893.

14. Velázquez, R.; Rodríguez, A.; Hernández, A.; Casquete, R.; Benito, M.J.; Martín, A. Spice and Herb Frauds: Types, Incidence, and Detection: The State of the Art. Foods 2023, 12, 3373.

15. Hossain, M.F.; Jamalipour, A.; Munasinghe, K. A Survey on Virtual Reality over Wireless Networks: Fundamentals, QoE, Enabling Technologies, Research Trends and Open Issues. TechRxiv 2023.

16. Ding, H.; Tian, J.; Yu, W.; Wilson, D.I.; Young, B.R.; Cui, X.; Xin, X.; Wang, Z.; Li, W. The Application of Artificial Intelligence and Big Data in the Food Industry. Foods 2023, 12, 4511.

17. Feng, Y.; Wang, Y.; Beykal, B.; Qiao, M.; Xiao, Z.; Luo, Y. A mechanistic review on machine learning-supported detection and analysis of volatile organic compounds for food quality and safety. Trends Food Sci. Technol. 2024, 143, 104297.

18. Johannesson, P.; Perjons, E. Systems Development and the Method Framework for Design Science Research. In An Introduction to Design Science; Johannesson, P., Perjons, E., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2014; pp. 157–165.

19. Moradkhani, H.; Ahmadalipour, A.; Moftakhari, H.; Abbaszadeh, P.; Alipour, A. A review of the 21st century challenges in the food-energy-water security in the Middle East. Water 2019, 11, 682.

20. Naylor, R.L.; Hardy, R.W.; Buschmann, A.H.; Bush, S.R.; Cao, L.; Klinger, D.H.; Little, D.C.; Lubchenco, J.; Shumway, S.E.; Troell, M. A 20-year retrospective review of global aquaculture. Nature 2021, 591, 551–563.

21. Chapman, J.A.; Bernstein, I.L.; Lee, R.E.; Oppenheimer, J.; Nicklas, R.A.; Portnoy, J.M.; Sicherer, S.H.; Schuller, D.E.; Spector, S.L.; Khan, D.; et al. Food allergy: A practice parameter. Ann. Allergy Asthma Immunol. 2006, 96, S1–S68.

22. Gremillion, T.M. Food Safety and Consumer Expectations. In Encyclopedia of Food Safety, 2nd ed.; Smithers, G.W., Ed.; Academic Press: Oxford, UK, 2024; pp. 547–550.

23. Salgueiro, L.; Martins, A.P.; Correia, H. Raw materials: The importance of quality and safety. A review. Flavour Fragr. J. 2010, 25, 253–271.

24. Guiné, R.P.F.; Gonçalves, F.J. Bioactive compounds in some culinary aromatic herbs and their effects on human health. Mini-Rev. Med. Chem. 2016, 16, 855–866.

25. Kindlovits, S.; Gonçalves, F.J. Effect of weather conditions on the morphology, production and chemical composition of two cultivated medicinal and aromatic species. Eur. J. Hortic. Sci. 2014, 79, 76–83.

26. Raffi, J.; Yordanov, N.D.; Chabane, S.; Douifi, L.; Gancheva, V.; Ivanova, S. Identification of irradiation treatment of aromatic herbs, spices and fruits by electron paramagnetic resonance and thermoluminescence. Spectrochim. Acta-Part A Mol. Biomol. Spectrosc. 2000, 56, 409–416.

27. Rocha, R.P.; Melo, E.C.; Radünz, L.L. Influence of drying process on the quality of medicinal plants: A review. J. Med. Plant Res. 2011, 5, 7076–7084.

28. Lacis-Lee, J.; Brooke-Taylor, S.; Clark, L. Allergens as a Food Safety Hazard: Identifying and Communicating the Risk. In Encyclopedia of Food Safety, 2nd ed.; Smithers, G.W., Ed.; Academic Press: Oxford, UK, 2024; pp. 700–710.

29. Husin, Z.; Shakaff, A.; Aziz, A.; Farook RS, M.; Jaafar, M.N.; Hashim, U.; Harun, A. Embedded portable device for herb leaves recognition using image processing techniques and neural network algorithm. Comput. Electron. Agric. 2012, 89, 18–29.

30. Senevirathne, L.; Shakaff, A.; Aziz, A.; Farook, R.; Jaafar, M.; Hashim, U.; Harun, A. Mobile-based Assistive Tool to Identify & Learn Medicinal Herbs. In Proceedings of the 2nd International Conference on Advancements in Computing (ICAC), Colombo, Sri Lanka, 10–11 December 2020; Volume 1, pp. 97–102.

31. Lan, K.; Tsai, T.; Hu, M.; Weng, J.-C.; Zhang, J.-X.; Chang, Y.-S. Toward Recognition of Easily Confused TCM Herbs on the Smartphone Using Hierarchical Clustering Convolutional Neural Network. Evid.-Based Complement. Altern. Med. 2023, 2023, e9095488.

32. Weerasinghe, N.C.; AVGHS, A.; Fernando, W.W.R.S.; Rajapaksha, P.R.K.N.; Siriwardana, S.E.; Nadeeshani, M. HABARALA—A Comprehensive Solution for Food Security and Sustainable Agriculture through Alternative Food Resources and Technology. In Proceedings of the 2023 IEEE 17th International Conference on Industrial and Information Systems (ICIIS), Peradeniya, Sri Lanka, 25–26 August 2023; pp. 175–180.

33. How augmented reality (AR) is transforming the restaurant sector: Investigating the impact of “Le Petit Chef” on customers’ dining experiences. Technol. Forecast. Soc. Change 2021, 172, 121013.

34. Reif, R.; Walch, D. Augmented & Virtual Reality applications in the field of logistics. Vis. Comput. 2008, 24, 987–994.

35. Chai, J.J.K.; O’Sullivan, C.; Gowen, A.A.; Rooney, B.; Xu, J.-L. Augmented/mixed reality technologies for food: A review. Trends Food Sci. Technol. 2022, 124, 182–194.

36. Domhardt, M.; Tiefengrabner, M.; Dinic, R.; Fötschl, U.; Oostingh, G.J.; Stütz, T.; Stechemesser, L.; Weitgasser, R.; Ginzinger, S.W. Training of Carbohydrate Estimation for People with Diabetes Using Mobile Augmented Reality. J. Diabetes Sci. Technol. 2015, 9, 516–524.

37. Musa, H.S.; Krichen, M.; Altun, A.A.; Ammi, M. Survey on Blockchain-Based Data Storage Security for Android Mobile Applications. Sensors 2023, 23, 8749.

38. Graney-Ward, C.; Issac, B.; Ketsbaia, L.; Jacob, S.M. Detection of Cyberbullying Through BERT and Weighted Ensemble of Classifiers. TechRxiv 2022.

39. Dunkel, E.R.; Swope, J.; Candela, A.; West, L.; Chien, S.A.; Towfic, Z.; Buckley, L.; Romero-Cañas, J.; Espinosa-Aranda, J.L.; Hervas-Martin, E.; et al. Benchmarking Deep Learning Models on Myriad and Snapdragon Processors for Space Applications. J. Aerosp. Inf. Syst. 2023, 20, 660–674.

40. Arroba, P.; Buyya, R.; Cárdenas, R.; Risco-Martín, J.L.; Moya, J.M. Sustainable edge computing: Challenges and future directions. Softw. Pract. Exp. 2024, 1–25.

41. Velesaca, H.O.; Suárez, P.L.; Mira, R.; Sappa, A.D. Computer vision based food grain classification: A comprehensive survey. Comput. Electron. Agric. 2021, 187, e106287.

42. Poonja, H.A.; Shirazi, M.A.; Khan, M.J.; Javed, K. Engagement detection and enhancement for STEM education through computer vision, augmented reality, and haptics. Image Vis. Comput. 2023, 136, 104720.

43. Vuforia Engine Developer Portal. SDK Download. 2022. Available online: https://developer.vuforia.com/downloads/SDK (accessed on 23 November 2023).

44. Unity Technologies. Unity 2020.3.33, Unity: Beijing, China, 2022. Available online: https://unity.com/releases/editor/whats-new/2020.3.33 (accessed on 20 November 2023).

45. Sladojevic, S.; Arsenovic, M.; Anderla, A.; Culibrk, D.; Stefanovic, D. Deep Neural Networks Based Recognition of Plant Diseases by Leaf Image Classification. Comput. Intell. Neurosci. 2016, 2016, e3289801.

46. Wang, G.; Sun, Y.; Wang, J. Automatic Image-Based Plant Disease Severity Estimation Using Deep Learning. Comput. Intell. Neurosci. 2017, 2017, e2917536.

47. Patrício, D.I.; Rieder, R. Computer vision and artificial intelligence in precision agriculture for grain crops: A systematic review. Comput. Electron. Agric. 2018, 153, 69–81.

48. Antunes, S.N.; Okano, M.T.; Nääs, I.D.A.; Lopes, W.A.C.; Aguiar, F.P.L.; Vendrametto, O.; Fernandes, M.E. Model Development for Identifying Aromatic Herbs Using Object Detection Algorithm. AgriEngineering 2024, 6, 1924–1936.

49. Mustafa, M.S.; Husin, Z.; Tan, W.K.; Mavi, M.F.; Farook, R.S.M. Development of automated hybrid intelligent system for herbs plant classification and early herbs plant disease detection. Neural Comput. Appl. 2020, 32, 11419–11441.

50. Chaivivatrakul, S.; Moonrinta, J.; Chaiwiwatrakul, S. Convolutional neural networks for herb identification: Plain background and natural environment. Int. J. Adv. Sci. Eng. Inf. Technol. 2022, 12, 1244–1252.

51. Zhao, Y.; Sun, Z.; Tian, E.; Hu, C.; Zong, H.; Yang, F. A CNN Model for Herb Identification Based on Part Priority Attention Mechanism. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020; pp. 2565–2571.

52. Sinha, J.; Chachra, P.; Biswas, S.; Jayswal, A.K. Ayurvedic Herb Classification using Transfer Learning based CNNs. In Proceedings of the 2024 IEEE 2nd International Conference on Advancement in Computation & Computer Technologies (InCACCT), Punjab, India, 2–3 May 2024; pp. 628–634.

53. Senan, N.; Najib, N.A.M. UTHM Herbs Garden Application Using Augmented Reality. Appl. Inf. Technol. Comput. Sci. 2020, 1, 181–191.

54. Permana, R.; Tosida, E.T.; Suriansyah, M.I. Development of augmented reality portal for medicinal plants introduction. Int. J. Glob. Oper. Res. 2022, 3, 52–63.

55. Zhu, Q.; Xie, Y.; Ye, F.; Gao, Z.; Che, B.; Chen, Z.; Yu, D. Chinese herb medicine in augmented reality. arXiv 2023, arXiv:2309.13909.

56. Angeles, J.M.; Calanda, F.B.; Bayon-on, T.V.V.; Morco, R.C.; Avestro, J.; Corpuz, M.J.S. AR Plants: Herbal plant mobile application utilizing augmented reality. In Proceedings of the 2017 International Conference on Computer Science and Artificial Intelligence, Jakarta, Indonesia, 5–7 December 2017; pp. 43–48.

57. Gerber, A.; Baskerville, R. (Eds.) Design Science Research for a New Society: Society 5.0: 18th International Conference on Design Science Research in Information Systems and Technology, DESRIST 2023, Pretoria, South Africa, 31 May–2 June 2023, Proceedings; Springer: Cham, Switzerland, 2023.

58. Peffers, K.; Tuunanen, T.; Rothenberger, M.A.; Chatterjee, S. A Design Science Research Methodology for Information Systems Research. J. Manag. Inf. Syst. 2007, 24, 45–77.

59. Sadeghi-Niaraki, A.; Choi, S.-M. A Survey of Marker-Less Tracking and Registration Techniques for Health & Environmental Applications to Augmented Reality and Ubiquitous Geospatial Information Systems. Sensors 2020, 20, 2997.

60. Arena, F.; Collotta, M.; Pau, G.; Termine, F. An Overview of Augmented Reality. Computers 2022, 11, 28.

| Number of Stars | Number of Images |
|---|---|
| 5 | 192 |
| 4 | 138 |
| 3 | 54 |
| 2 | 59 |
| 1 | 25 |
| 0 | 133 |

| Name | Original Image | Transition | Definition of Shapes | Definition of Contrasts | Number of Stars | Processing Time (s) |
|---|---|---|---|---|---|---|
| Mint |  |  |  |  |  | 2 |
| Parsley |  |  |  |  |  | 3 |
| Oregano |  |  |  |  |  | 8 |
| Anise |  |  |  |  |  | 20 |
| Nira |  |  |  |  |  | 30 |
| Leek |  |  |  |  | 0 | Not processed |
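The table scores each herb image on how well its shapes and contrasts are defined. The formula behind the star rating is not given here, so the following is only a sketch of two generic, commonly used image measures that capture the same intuition: RMS contrast (the standard deviation of normalised intensities) and edge density (the fraction of pixels whose forward-difference gradient exceeds a threshold). Both functions, the threshold of 50 grey levels, and the toy images are hypothetical; this is not Vuforia's rating method.

```python
from __future__ import annotations

from statistics import pstdev


def rms_contrast(gray: list[list[int]]) -> float:
    """Population standard deviation of 8-bit intensities scaled to [0, 1]."""
    values = [p / 255 for row in gray for p in row]
    return pstdev(values)


def edge_density(gray: list[list[int]], threshold: int = 50) -> float:
    """Fraction of pixels whose |dx| + |dy| (forward differences) exceeds `threshold`."""
    h, w = len(gray), len(gray[0])
    if h < 2 or w < 2:
        return 0.0
    strong = 0
    for y in range(h - 1):
        for x in range(w - 1):
            dx = gray[y][x + 1] - gray[y][x]
            dy = gray[y + 1][x] - gray[y][x]
            if abs(dx) + abs(dy) > threshold:
                strong += 1
    return strong / ((h - 1) * (w - 1))
```

A flat grey image scores zero on both measures, while a black-and-white checkerboard scores 0.5 and 1.0; images with sharp, well-distributed detail generally make more reliable AR targets than smooth, uniform ones.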

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lopes, W.A.C.; Fernandes, J.C.L.; Antunes, S.N.; Fernandes, M.E.; Nääs, I.d.A.; Vendrametto, O.; Okano, M.T. Augmented Reality Applied to Identify Aromatic Herbs Using Mobile Devices. AgriEngineering 2024, 6, 2824-2844. https://doi.org/10.3390/agriengineering6030164


Lopes, William Aparecido Celestino, João Carlos Lopes Fernandes, Samira Nascimento Antunes, Marcelo Eloy Fernandes, Irenilza de Alencar Nääs, Oduvaldo Vendrametto, and Marcelo Tsuguio Okano. 2024. "Augmented Reality Applied to Identify Aromatic Herbs Using Mobile Devices" AgriEngineering 6, no. 3: 2824-2844. https://doi.org/10.3390/agriengineering6030164