Image Analysis Artificial Intelligence Technologies for Plant Phenotyping: Current State of the Art

Abstract

1. Introduction

- i. To investigate the latest developments, benefits, limitations, and future directions of image analysis phenotyping technologies based on AI, ML, 3D imaging, and software solutions.

- ii. To investigate the challenges associated with the use of different image analysis phenotyping technologies based on AI, ML, 3D imaging, and software solutions.

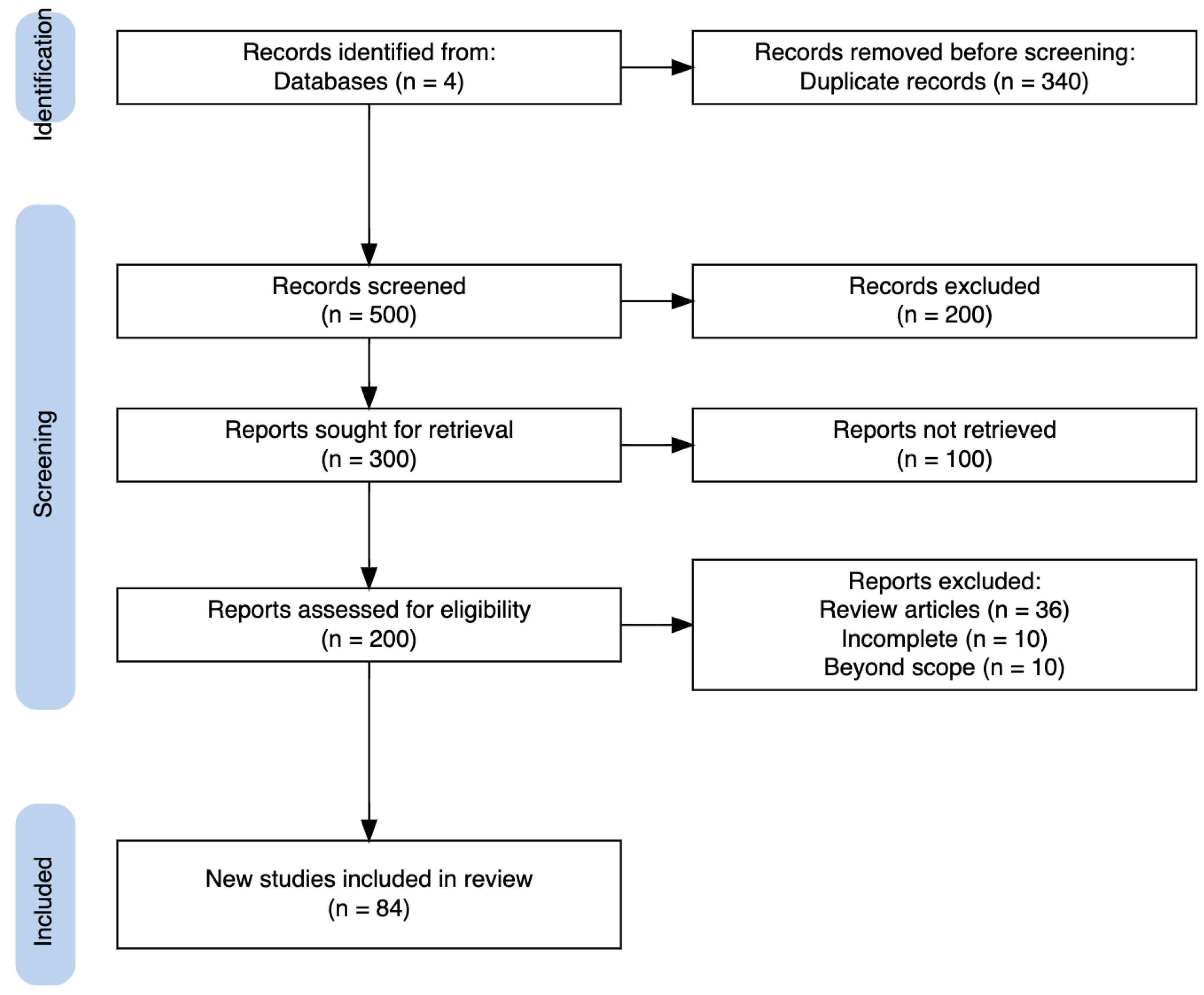

2. Materials and Methods

2.1. Literature Search

- “advanced” AND “AI algorithms” AND “plant phenotyping” AND “challenges”

- “future directions” AND “AI algorithms” AND “image analysis” OR “plant phenotyping”

- “challenges” AND “AI algorithms” AND “image analysis” OR “plant phenotyping”
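
The search strings listed here combine quoted phrases with Boolean operators. As a minimal sketch, strings of this kind could be assembled programmatically as shown below. The helper names `quote` and `build_query` are hypothetical, straight quotes are used instead of typographic ones, and the parentheses around the OR group are an assumption, since the source does not state how the operators were grouped.

```python
def quote(term: str) -> str:
    """Wrap a search phrase in double quotes for exact-phrase matching."""
    return f'"{term}"'


def build_query(required, alternatives=()):
    """Join required phrases with AND and append alternatives as one OR group.

    Assumption: the OR terms form a single parenthesised group; the source
    does not specify operator precedence.
    """
    parts = [quote(term) for term in required]
    if alternatives:
        group = " OR ".join(quote(term) for term in alternatives)
        # Parenthesise only when there is more than one alternative.
        parts.append(f"({group})" if len(alternatives) > 1 else group)
    return " AND ".join(parts)


queries = [
    build_query(["advanced", "AI algorithms", "plant phenotyping", "challenges"]),
    build_query(["future directions", "AI algorithms"],
                ["image analysis", "plant phenotyping"]),
    build_query(["challenges", "AI algorithms"],
                ["image analysis", "plant phenotyping"]),
]

for query in queries:
    print(query)
```

Keeping the phrases in lists makes it easy to rerun the same search with an added or removed term across several databases.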

2.2. Study Selection

2.3. Reporting the Findings

3. Results

3.1. Overview of Methods Used in the Selected Articles

3.2. Latest Techniques Using AI and ML Algorithms in Plant Phenotyping

3.2.1. AI (ML) Algorithms

3.2.2. Unspecified AI Algorithms

3.2.3. AI Algorithms (Software)

3.2.4. HTP Analysis

3.2.5. HSI Analysis

3.2.6. 3D Image Reconstruction

4. Discussion

5. Conclusions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Summary of the Selected Articles in the Review Article

| Article | Focus | Data Processing Methods | Image Sensing Tools | Target Crop | Main Findings | Relevance |

| Yu et al. [33] | Using deep learning to assess RGB images showcasing lettuce growth cycle to identify and assess phenotypic indices | Not Specified AI(ML) | Not Specified | Lettuce | The model demonstrated high accuracy in predicting lettuce phenotypic indices with an average error of less than 0.55% for geometric indices and less than 1.7% for color and texture indices. | The study demonstrates the use of deep learning models in plant phenotypic applications. |

| Tu et al. [43] | To test the relevance of AIseed, which captures and analyzes plant traits, including the color, texture, and shape of individual seeds | Not Specified | AIseed | Rice, wheat, maize, Scutellaria baicalensis, Platycodon grandiflorum | AIseed performs well in extracting phenotypic features and testing seed quality from images of seeds of different plants and sizes. | The study reveals a non-destructive method for seed phenotyping and seed quality assessment. |

| Ji et al. [62] | To demonstrate a labor-free and automated method for isolating crop leaf pixels from RGB imagery using a random forest algorithm | Random Forest | Not Specified | Tea, maize, rice, soybean, tomato, Arabidopsis | The algorithm’s performance was comparable to or exceeded that of the latest methods, with evaluation indicators improving by 9% to 310%. | The study showed that the methods were relevant in extracting crop leaf pixels from multisource RGB imagery captured using multiple platforms, including cameras, smartphones, and unmanned aerial vehicles. |

| Skobalski et al. [18] | To investigate the transferability and generalization capabilities of yield prediction models for crop breeding using different machine-learning techniques | Random Forest (RF) and Gradient Boosting (GB) | Not Specified | Soybean | The results showed that datasets from Argentina and the US representing different climate regimes had the highest performance with R2 of 0.76 using Random Forest (RF) and Gradient Boosting (GB) algorithms. | The use of transfer learning in real-world breeding scenarios improved decision-making for agricultural productivity. |

| Wang et al. [17] | To investigate lodging phenotypes in the field reconstructed from UAV images using a geometric model | Not Specified 3D image reconstruction | Not Specified | rapeseed | The results showed a high accuracy of 95.4% in classifying lodging types, where the rapeseed cultivars Zhongshuang 11 and Dadi 199 were the most dominant cultivars | The study showed that the lodging phenotyping method had the potential to enhance mechanized harvesting; hence, accurate low-yield estimates were obtained |

| Niu et al. [52] | To investigate the influence of fractional vegetation cover (FVC) on digital terrain model (DTM) reconstruction accuracy | Not Specified 3D image reconstruction | | maize | The results showed that the accuracy of DTM constructed using an inverse distance weighted algorithm was influenced by FVC conditions | The results demonstrated the effectiveness of DTM reconstruction and the impact of view angle and spatial resolution on PH estimation based on UAV RGB images |

| Haghshenas and Emam [63] | To evaluate the quantitative characterization of shading patterns using a green-gradient-based canopy segmentation model | Not Specified HTP | Not Specified | Wheat | The generated graph could be used for accurate prediction of different canopy properties, including canopy coverage | The model demonstrates the effectiveness of a multipurpose high throughput phenotyping (HTP) platform. |

| Haque et al. [64] | To demonstrate the effectiveness of high throughput imagery in quantifying the shape and size features of sweet potato | Not Specified HTP | Not Specified | Sweet potato | Results showed that the model had 84.59% accuracy in predicting the shape features of sweet potato cultivars. | The study demonstrated the effectiveness of big data analytics in industrial sweet potato agriculture. |

| Xie et al. [53] | To compare four rapeseed height estimation methods using UAV images obtained at three growth stages using complete and incomplete data | Not Specified AI(ML) | Not Specified | Rapeseed | Results showed that where complete data was used, optimal results were obtained with an R2 of 0.932. | The study demonstrated that systematic strategies were adopted to select appropriate methods to acquire crop height with reasonable accuracy. |

| Mingxuan et al. [23] | To demonstrate the effectiveness of an anti-gravity stem-seeking (AGSS) root image restoration algorithm to repair root images and extract root phenotype information for different resistant maize seeds | AGSS | Not Specified | maize | The results showed high detection accuracy higher than 90% for root length and diameter but negatively with lateral root number | The AGSS algorithm is relevant in quick and effective repairs of root images in small embedded systems |

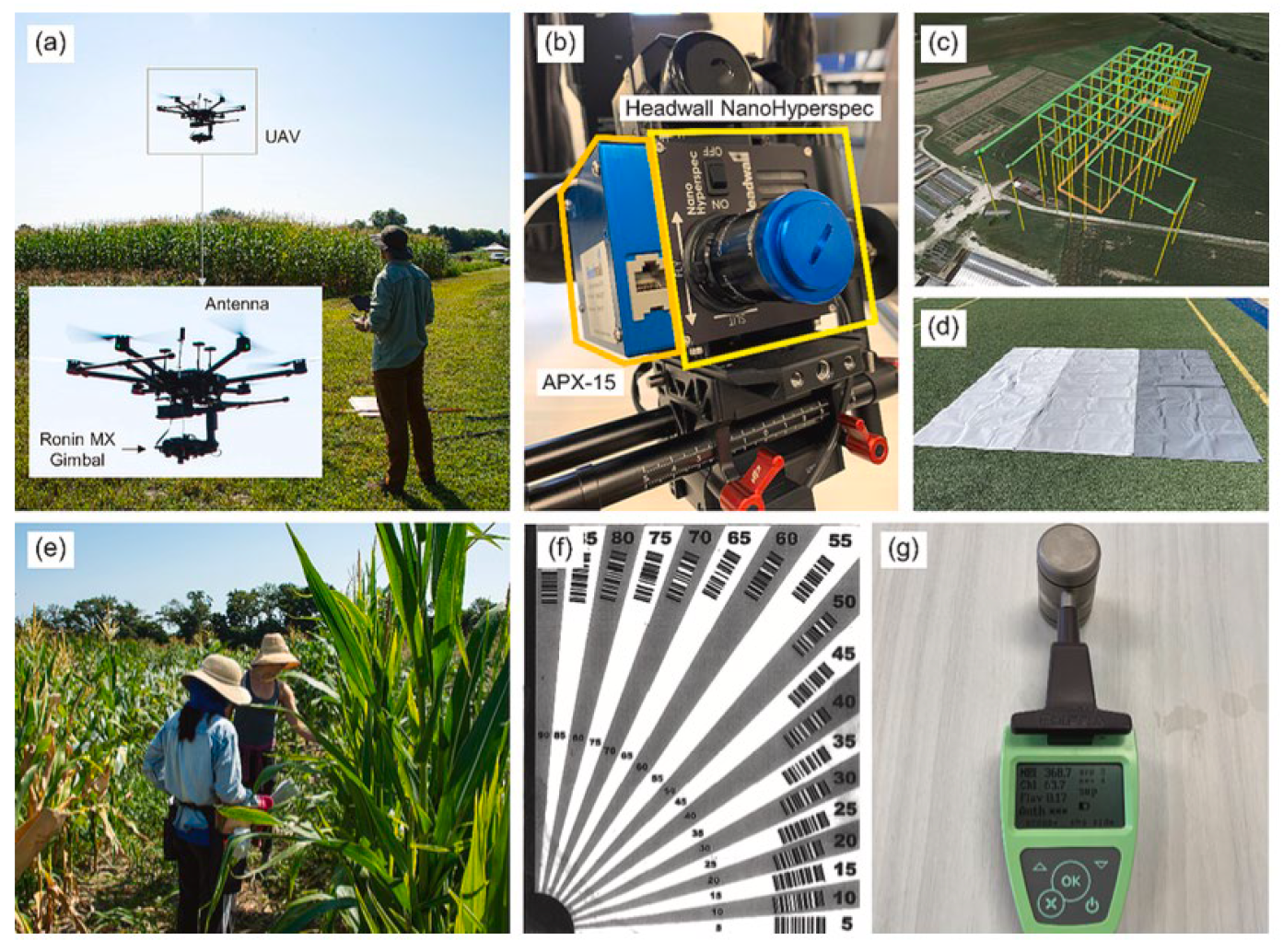

| Teshome et al. [21] | To evaluate the effectiveness of combining unmanned aerial vehicles (UAV)-based imaging and machine learning (ML) for monitoring sweet corn yield, height, biomass | kNN and SVM | Not Specified | Sweet corn | The results showed that the UAV-based imaging was effective in estimating plant height. The kNN and SVM algorithms outperformed other models | The study results showed that UAV imaging and ML models were effective in monitoring plant phenotypic features, including height, yield, and biomass |

| Bolouri et al. [44] | To investigate the effectiveness of CottonSense high-throughput phenotyping (HTP) in assessing multiple growth phases of cotton | CottonSense | Not Specified | Cotton | The results showed that the model had an average AP score of 79% in segmentation across fruit categories and an R2 value of 0.94. | The proposed HTP system was cost-effective and power-efficient, hence effective in high-yield cotton breeding and crop improvement |

| Zhu et al. [19] | To demonstrate the effectiveness of hyperspectral imaging (HSI) in mitigating illumination influences of complex canopies | Random Forest | Not Specified | basil | The results showed that the HSI pipeline permitted the mitigation of influences and enabled in situ detection of canopy chlorophyll distribution. | The method demonstrates the importance of monitoring chlorophyll status in whole canopies, hence enhancing planting management strategies. |

| Shi et al. [24] | To investigate automated detection of seed melons and cotton plants using a U-Net established with double-depth convolutional and fusion block (DFU-Net) | DFU-Net | Not Specified | Seed melons, cotton | The results indicated that the DFU-Net model had an accuracy exceeding 92% for the dataset. | The approach is novel in optimizing crop-detection algorithms, hence providing valuable technical support for intelligent crop management. |

| Jayasuriya et al. [56] | To demonstrate a machine learning vision-based plant height estimation system for protected crop facilities | Not Specified AI(ML) | Not Specified | Capsicum | The results showed that there were similar growing patterns between imaged and manually measured plant heights with an R2 score of 0.87, 0.96, and 0.79 under unfiltered ambient light, smart glass film, and shifted light | The method was feasible in a vertically supported capsicum crop in a commercial-scale protected crop facility |

| Zhuang et al. [25] | To investigate the effectiveness of a solution based on a convolutional neural network and field rail-based phenotyping platform in obtaining the maize seedling emergence rate and leaf emergence speed | R-CNN | Not Specified | Maize | The results showed that the box mAP of the maize seedling detection model was 0.969 with an accuracy rate of 99.53%, which outperformed the manual counting model. | The study showed that the developed model was relevant in exploring the genotypic differences affecting seed emergence and leafing. |

| Yang et al. [54] | To demonstrate a phenotyping platform used in the 3D reconstruction of complex plants using multi-view images and a joint evaluation criterion for the reconstruction algorithm and platform | Not Specified 3D Image Reconstruction | Not Specified | Carex cabbage and kale | The proposed algorithm had minimum average track length, minimum reprojection error, and the highest points. | The proposed platform provided a cost-effective, automated, and integrated solution to enhance fine-scale plant 3D reconstruction. |

| Varghese et al. [22] | To demonstrate ML in photosynthetic research and photosynthetic pigment studies | Regression Trees, ANN | Not Specified | Zea mays, Brassica oleracea | The results showed that ML algorithms were pivotal in improving crop yield by correlating hyperspectral data with photosynthetic parameters. | The use of ML in photosynthesis led to sustainable crop development. |

| Krämer et al. [47] | To develop and test two spatial aggregation approaches to make airborne SIF data usable in experimental settings. | Not Specified HTP | Not Specified | Wheat, barley | The selected approaches led to a better representation of ground truth on SIF. | Spatial aggregation techniques were effective in extracting remotely sensed SIF in crop phenotyping applications. |

| Teixeira et al. [45] | To demonstrate the effectiveness of high-throughput field phenotyping to quantify canopy development rates through aerial photographs | Not Specified HTP | | Oats | The results showed that a decline in plant trait effects occurred across different production system components accompanied by high response variability. | The combination of high throughput phenotyping, crop physiology field experiments, and biophysical modeling led to an increased understanding of plant trait benefits. |

| Hoffman, Singels and Joshi [46] | To evaluate the feasibility of using aerial phenotyping in rapidly identifying genotypes with superior yield traits | Not Specified | NDVI | Ratoon crop | The results revealed the potential for using water treatment differences in canopy temperatures and stalk dry mass in identifying drought-tolerant genotypes. | The aerial phenotyping methods had the potential to enhance breeding efficiency and genetic gains toward productive sugarcane cultivars. |

| Bhadra et al. [55] | To demonstrate the use of a transfer learning-based dual stream neural network (PROSAIL) in estimating leaf chlorophyll concentrations (LCC) and average leaf angle (ALA) in corn | PROSAIL | Not Specified | Corn | The results showed that PROSAIL outperformed all other modeling scenarios in predicting LCC and ALA. | The use of large amounts of PROSAIL-simulated data combined with transfer learning and multi-angular UAV observations was effective in precision agriculture. |

| Kim et al. [48] | To demonstrate a low-light image-based crop and weed segmentation network (LCW-Net) for crop harvesting | LCW-Net | Not Specified | Sugar beet, dicot weeds, grass weeds, Carrot, weeds | The results showed that the mean intersection over union (mIoU) of segmentation for crops and weeds was 0.8718 and 0.8693 for the BoniRob dataset and 0.8337 and 0.8221 for the CWFID dataset | The findings showed that the LCW-Net outperformed the state-of-the-art methods |

| Bai et al. [49] | To examine the potential of using a large-scale cable-suspended field phenotyping system to quantify bidirectional reflectance distribution function (BRDF) | BRDF | NDVI | Maize, Soybean | A strong correlation emerged between reflectance, VIs, and Green Pixel Fraction. The hotspots were identified in the backscatter direction at visible and near-infrared (NIR) bands. | The developed system had the potential to generate rapid and detailed BRDF data at a high spatiotemporal resolution using multiple sensors. |

| Hao et al. [65] | To assess a method for evaluating the degree of wilting of cotton varieties based on phenotype | Not Specified AI(ML) | | Cotton | The results showed that the PointSegAt deep learning network model performed well in leaf and stem segmentation. | The model used demonstrated an effective approach in measuring the degree of wilting of cotton varieties based on phenotype. |

| Debnath et al. [37] | To demonstrate a method based on the Taylor-Coot algorithm to segment plant regions and biomass areas to detect emergence counting and estimate crop biomass | Taylor Coot | | Different crops | The results showed that the proposed model had minimal Mean Absolute Difference (MAD), Standard Absolute Difference (SDAD), and %difference (%D) as 0.0.73, 0.074, and 16.45 in emergence counting. | The proposed model was effective in phenotypic trait estimation of crops. |

| Hasan et al. [36] | To demonstrate a weed classification pipeline where a patch of the image was considered at a time to improve performance | Not Specified AI(ML) | Not Specified | Deep weeds, corn weeds, cotton weeds, tomato weeds | The results showed that the pipeline had significant performance improvement on all four datasets and addressed issues of intra-class dissimilarity and inter-class similarity in the dataset. | The proposed pipeline system is effective in crop and weed recognition in farming applications. |

| Fang et al. [50] | To evaluate an image-based semi-automatic root phenotyping method for field-grown crops | Not Specified HTP | | Maize | The results showed that interspecific advantages for maize occurred within 5 cm from the root base in the nodal roots, and the inhibition of soybean was reflected 20 cm from the root base | The proposed system had high accuracy for field research on root morphology and branching features |

| Zhao et al. [34] | To evaluate the continuous wavelet projections algorithm (CWPA) and successive projections algorithm (SPA) in generating optimal spectral feature sets for crop detection | CWPA and SPA Algorithms | | Tea | An overall accuracy of 98% was reported in classifying tea plant stresses, and a high coefficient of determination was reported in retrieving corn leaf chlorophyll content. | The novel algorithm demonstrated its potential in crop monitoring and phenotyping for hyperspectral data. |

| Yang and Cho [57] | To undertake automatic analysis of 3D image reconstruction of a red pepper plant | Not Specified | Visual SfM | red pepper | The results indicated that the proposed method had an error of 5 mm or less when reconstructing the 3D images and was suitable for phenotypic analysis. | The images and analysis employed in 3D reconstruction can be applied in different image processing studies. |

| Yu et al. [32] | To demonstrate high-throughput phenotyping methods based on UAV systems to monitor and quantitatively describe the development of soybean canopy populations | Not Specified AI(ML) | Not Specified | soybean | The results showed that multimodal image segmentation outperformed traditional deep-learning image segmentation network | The proposed method has high practical value in germplasm resource identification and can enhance genotypic differentiation analysis further. |

| Selvaraj et al. [51] | To evaluate an image-analysis framework called CIAT Pheno-i to extract plot-level vegetation canopy metrics | Not Specified HTP | | cassava | The results showed that the developed pheno-image analysis was easier and more rapid than manual methods. | The developed model can be adopted in the phenotype analysis of cassava’s below-ground traits. |

| Zhang et al. [66] | To evaluate multiple imaging sensors, image resolutions, and image processing techniques to monitor the flowering intensity of canola, chickpea, pea, and camelina | Not Specified AI(ML) | Not Specified | canola, chickpea, pea, and camelina | The results showed that comparable results were obtained where standard image processing using unsupervised machine learning and thresholds were used in flower detection and feature extraction. | The study demonstrated the feasibility of using imaging for monitoring flowering intensity in multiple varieties of evaluated crops. |

| Rossi et al. [67] | To test a 3D model in the early detection of plant responses to water stress | Not Specified | SfM | Tomato | The results showed that the proposed algorithm automatically detected and measured plant height, petioles inclination, and single-leaf area. | Plant height traits may be adopted in subsequent analyses to identify how plants respond to water stress. |

| Boogaard, Henten and Kootstra [68] | To evaluate the effectiveness of 3D data in measuring plants with a curved growing pattern | PointNet++ | Not Specified | cucumber | The results from the 3D method were more accurate than those from the 2D method | The results revealed the effectiveness of computer-vision methods to measure plant architecture and internode length |

| Cuevas-Velasquez, Gallego and Fisher [69] | To evaluate the segmentation and 3D reconstruction of rose plants from stereoscopic images | FSCN | Not Specified | Rose | The results showed that the segmentation accuracy improved on that of other methods, hence leading to robust reconstruction results. | The effectiveness of 3D reconstruction in plant phenomenology was showcased. |

| Chen et al. [70] | To test the 3D perception of orchard banana central stock improved by adaptive multi-vision technology | Not specified 3D Image Reconstruction | Not Specified | Banana | The results showed that the proposed method was accurate and led to stable performance. | The work showcased the relevance of 3D sensing of banana central stocks in complex environments. |

| Chen et al. [71] | To develop a 3D unstructured and real-time environment using VR and Kinect-based immersive teleoperation in agricultural field robots | Not specified 3D Image Reconstruction | | orchard | The results showed that the proposed algorithm and system had the potential applicability of immersive teleoperation within an unstructured environment | The study demonstrated that the proposed algorithms could be adopted to enhance teleoperation in agricultural environments. |

| Isachsen, Theoharis and Misimi [72] | To demonstrate a real-time ICP-based 3D registration algorithm for eye-in-hand configuration using an RGB-D camera | Not Specified 3D Image Reconstruction | Not Specified | Apple, banana, pear | The 3D reconstruction based on the GPU was faster than the CPU and eight times faster than similar library implementations. | The results indicated that the method was valid for eye-in-hand robotic scanning and 3D reconstruction of different agricultural food items. |

| Lin et al. [73] | To demonstrate a 3D reconstruction method to detect branches and fruits to avoid obstacles and plan paths for robots | Not specified 3D Image Reconstruction | Not Specified | Guava | The results revealed highly accurate results in fruit and branch reconstruction. | The results revealed that the proposed method could be adopted in reconstructing fruits and branches, hence planning obstacle avoidance paths for harvesting robots. |

| Ma et al. [74] | To test the automatic detection of branches of jujube trees based on 3D reconstruction for dormant pruning using deep learning methods | Not specified 3D Image Reconstruction | Not Specified | jujube trees | The results obtained showed that the proposed method was important in detecting significant information, including length of diameters, branch diameter | The proposed model could be adopted in developing automated robots for pruning jujube trees in orchard fields |

| Fan et al. [75] | To reconstruct root system architecture from the topological structure and geometric features of plants | Not specified 3D Image Reconstruction | Not Specified | Ash tree | The results showed that where roots had a diameter greater than 1 cm, high accuracy was observed between the reconstructed root and simulated root | The high similarity between the reconstructed and simulated roots showed that the method was feasible and effective. |

| Zhao et al. [76] | To 3D characterize water usage in crops and root activity in field agronomic research | Not specified 3D Image Reconstruction | Not Specified | Maize | The results showed that the method was highly accurate and cost-efficient in phenotyping root activity. | The proposed method is vital in understanding crop water usage. |

| Zhu et al. [77] | To compute phenotypic traits of a tomato canopy using 3D reconstruction | Not specified 3D Image Reconstruction | Not Specified | Tomato | The results showed that there was a high correlation between measured and calculated values. | The proposed method could be adopted for rapid detection of the quantitative indices of phenotypic traits of tomato canopy, hence supporting breeding and scientific cultivation. |

| Torres-Sánchez et al. [78] | To compare UAV digital aerial photogrammetry (DAP) and mobile terrestrial laser scanning (MTLS) in measuring geometric parameters of a vineyard, peach, and pear orchard | Not Specified HTP | | Peach, Pear | The results from the two methods were highly correlated. The MTLS had higher values than the UAV | The proposed model showed that 3D crop characterization could be adopted in precision fruticulture |

| Chen et al. [79] | To detail a global map involving eye-in-hand stereo vision and SLAM system | Not Specified AI(ML) | Not Specified | Passion, litchi, pineapple | The results showed that the constructed global map attained large-scale high-resolution. | The results showed that in the future, more stable and practical mobile fruit-picking robots can be developed. |

| Dong et al. [80] | To demonstrate a method to extract individual 3D apple traits and 3D mapping for apple training systems | Not specified 3D Image Reconstruction | Not Specified | Apple | The results showed high accuracy in estimating apple counting and apple volume estimation. | The proposed method combining 3D photography and 3D instance segmentation improved apple phenotypic traits from orchards. |

| Xiong et al. [81] | To propose a real-time localization and semantic map reconstruction method for unstructured citrus orchards | Not specified 3D Image Reconstruction | Not Specified | Citrus | The results indicated that the proposed method could achieve high accuracy and real-time performance in the reconstruction of the semantic map | The research contributes to the advancement of theoretical and technical work to support intelligent agriculture |

| Feng et al. [82] | To propose a voxel carving algorithm to reconstruct 3D models of maize and extract leaf traits for phenotyping | Probabilistic Voxel Carving Algorithm 3D Image Reconstruction | Not Specified | Maize | The results showed that the algorithm was robust and extracted plant traits automatically, including leaf angles and the number of leaves. | The results show that 3D reconstruction of plants from multi-view images can accurately extract multiple phenotyping traits. |

| Gao et al. [26] | To develop a clustering algorithm for corn population point clouds to accurately extract the 3D morphology of individual crops | Not Specified 3D Image Reconstruction | Not Specified | Corn | The results showed high accuracy of the improved quick-shift method for segmentation of the corn plants. | The proposed method demonstrated an automated and efficient solution to accurately measure crop phenotypic information. |

| Wu et al. [83] | To design and deploy a prototype for automatic and high throughput seed phenotyping based on Seedscreener | Not Specified | Seed Screener | Wheat | The results showed that the platform could achieve a 94% success rate in predicting the traits of wheat | The proposed method was effective in obtaining the endophenotype and exophenotype features of wheat seeds. |

| James et al. [84] | To demonstrate a method to predict grain count for sorghum panicles | Not Specified | YOLOv5 | Sorghum | The results showed that the models could predict grain counts for the high-resolution point cloud dataset with high accuracy. | The results showed that the multimodal approach was viable in estimating grain count per panicle and can be adopted in future crop development. |

| Wen et al. [85] | To present an accurate method for semantic 3D reconstruction of maize leaves | Not Specified 3D Image Reconstruction | Not Specified | Maize | The results showed that high accuracy was achieved in the reconstruction of maize leaves where high consistency appeared between the reconstructed mesh and the corresponding point cloud. | The technology can be adopted in crop phenomics to improve functional-structural plant modeling. |

| Gargiulo, Sorrentino and Mele [27] | To measure the internal traits of bean seeds using X-ray micro-CT imaging | Not Specified | X-ray microCT | Beans | The results showed that the micropyle was the most influential on the initial hydration of the bean seeds. | The approach can be adopted to study the morphological traits of beans. |

| Hu et al. [20] | To demonstrate the effectiveness of Neural Radiance Fields (NeRF) in 3D reconstruction of plants | NeRF 3D Image Reconstruction | Not Specified | Pitahaya, grape, fig, orange | The results show that NeRF is able to achieve reconstruction results that are comparable to Reality Capture, a commercial 3D reconstruction software. | The use of NeRF is identified as a novel paradigm in plant phenotyping in generating multiple representations from a single process. |

| Chang et al. [28] | To apply 3D and hyperspectral imaging (HSI) in investigating heterosis and cytoplasmic effects in pepper (Capsicum annuum) | Not Specified | NDVI | pepper (Capsicum annuum) | The results showed the potential utility of HSI data recorded throughout the plant life span in analyzing the cytoplasmic effects in crops. | The results demonstrated the potential of adopting 3D and HSI in evaluating the combining capability of crops. |

| Ni et al. [86] | To develop a complete framework of 3D segmentation for individual blueberries as they developed in clusters and to extract the cluster traits of the blueberries | R-CNN | Not Specified | blueberries | The results showed that a high accuracy of 97.3% was achieved in determining fruit number. | The study showed that 3D photogrammetry and 2D instance segmentation were effective in determining blueberry cluster traits, hence facilitating yield estimation and monitoring fruit development. |

| Xiao et al. [87] | To construct organ-scale traits of canopy structures in large-scale fields | CCO | Not Specified | Maize, cotton, sugar beet | The results demonstrated high accuracy in obtaining complete canopy structures throughout the growth stages of the crops. | The CCO method offered high affordability, accuracy, and efficiency in accelerating precision agriculture and plant breeding. |

| Xiao et al. [88] | To examine the capabilities of UAV platforms in executing precise yield estimation of 3D cotton bolls | CCO | Not Specified | Cotton | The results showed that the CCO results surpassed the nadir-derived cotton boll route. | The study demonstrated the effectiveness of CCO combined with UAV images in the high-throughput acquisition of organ-scale traits. |

| Shomali et al. [31] | To demonstrate the effectiveness of ANN-based algorithms for high light stress phenotyping of tomato genotypes using chlorophyll fluorescence features | SVM, RFE | Not Specified | Tomato | The results showed that the algorithms were reliable in high-light stress screening. | The use of deep learning algorithms was advocated for high-light stress phenotyping using chlorophyll fluorescence features. |

| Ma et al. [29] | To explore 3D reconstruction in soybean organ segmentation and phenotypic growth simulation | ISS-CPD, ICP, DFSP | Not Specified | Soybean | The results showed that an accuracy of 79.99% was achieved by using the distance-field-based segmentation pipeline algorithm (DFSP) | The method is highly accurate and reliable in 3D reconstruction of soybean canopy, phenotype calculation, and growth simulation. |

| Mon and ZarAung [30] | To propose an image processing algorithm to estimate the volume and 3D shape of mango fruit | Not Specified 3D Image Reconstruction | Not Specified | Mango | The results showed that the proposed method was 96.8% accurate to the measured structures of mango fruits | The proposed method of reconstructing mango shapes closely agreed with measured shapes |

| Liu et al. [89] | To test a 3D image analysis method for counting potato eyes and estimation of eye depth based on the evaluation of curvature | Not Specified | SfM | Potato | The results demonstrated high accuracy in estimating the number of potato eyes and their depth. | The proposed method was effective in phenotyping potato traits. |

| Zhou et al. [90] | To demonstrate the effectiveness of the soybean plant phenotype extractor (SPP-extractor) algorithm in acquiring phenotypic traits | Not Specified | YOLOv5 | Soybean | The results showed that the developed model accurately identified pods and stems and could count the entire pod set of plants in a single scan. | The proposed method could be adopted in the phenotype extraction of soybean plants. |

| Liu et al. [91] | To evaluate the effectiveness of an improved watershed algorithm for bean image segmentation | Watershed Algorithm | Not Specified | Bean | The results showed that the proposed algorithm performed better than the traditional watershed algorithm. | The proposed improved watershed algorithm could be adopted in future bean image segmentation applications. |

| Li et al. [92] | To demonstrate a non-destructive measurement method for the canopy phenotype of watermelon plug seedlings | Mask R-CNN | YOLOv5 | Watermelon | The results showed that the non-destructive measurement algorithm achieved good measurement performance for the watermelon plug seedlings from the one true-leaf to three true-leaf stages. | The proposed deep learning algorithm provided an effective solution for non-destructive measurement of the canopy phenotype of plug seedlings. |

| Zhou et al. [93] | To propose a phenotyping monitoring technology based on an internal gradient algorithm to acquire the target region and diameter of maize stems | Otsu Internal Gradient Algorithm | Not Specified | Maize | The results showed that the internal gradient algorithm could accurately obtain the target region of maize stems. | The adoption of the internal gradient algorithm is feasible in potential smart agriculture applications to assess field conditions. |

| Liu et al. [94] | To propose a wheat point cloud generation and 3D reconstruction method based on SfM and MVS using sequential wheat crop images | Not Specified | SfM | Wheat | The results showed that the method achieved non-invasive reconstruction of the 3D phenotypic structure of realistic objects, with accuracy improved by 43.3% and overall value enhanced by 14.3%. | The proposed model can be adopted in future virtual 3D digitization applications. |

| Sun et al. [95] | To propose a deep learning semantic segmentation technology to preprocess images of soybean plants | Not Specified AI(ML) | Not Specified | Soybean | The results showed that semantic segmentation addressed the challenges of image preprocessing and long reconstruction time, hence improving robustness to noisy input. | The proposed model can be adopted in future semantic segmentation for the preprocessing of 3D reconstruction in other crops. |

| Begot et al. [96] | To implement micro-computed tomography (micro-CT) to study floral morphology and honey bees in the context of nectar-related traits | Not Specified | X-ray micro-computed tomography | Male and female flowers | The results showed that microcomputed tomography allowed for easy and rapid generation of 3D volumetric data on nectar, nectary, flower, and honey bee body sizes. | The protocol can be adopted to evaluate flower accessibility to pollinators at high resolution; hence, comparative analysis can be conducted to identify nectar-pollination-related traits. |

| Li et al. [97] | To subject maize seedlings to 3D reconstruction using imaging technology to assess their phenotypic traits | Not Specified 3D Image Reconstruction | Not Specified | Maize | The results from the model were highly correlated with manually measured values, showing that the method was accurate in nondestructive extraction. | The proposed model accurately constructed the 3D morphology of maize plants, hence extracting the phenotypic parameters of the maize plants. |

| Zhu et al. [98] | To combine 3D plant architecture with a radiation model to quantify and assess the impact of differences in planting patterns and row orientations on canopy light interception | Not Specified 3D Image Reconstruction | Not Specified | Maize, soybean | The results showed good agreement between measured and calculated phenotypic traits. | The study demonstrated that high throughput 3D phenotyping technology could be adopted to gain a better understanding of the association between the light environment and canopy architecture. |

| Chang et al. [99] | To develop a detecting and characterizing method for individual sorghum panicles using a 3D point cloud from UAV images | Not Specified 3D Image Reconstruction | Not Specified | Sorghum | The results showed a high correlation between UAV-based and ground measurements. | The study demonstrated that the 3D point cloud derived from UAV images provided reliable and consistent individual sorghum panicle parameters that were highly correlated with the ground measurements of panicle weight. |

| Varela, Pederson and Leakey [100] | To implement spatio-temporal 3D CNN and UAV time series to predict lodging damage in sorghum | CNN | Not Specified | Sorghum | The results showed that the 3D-CNN outperformed the 2D-CNN with high accuracy and precision. | The study demonstrated that using spatiotemporal CNN based on UAV time series images can enhance plant phenotyping capabilities in crop breeding and precision agriculture. |

| Varela et al. [101] | To use deep CNN with UAV time-series imagery to determine flowering time, biomass yield traits, and culm length | CNN | Not Specified | Miscanthus | The results showed that the 3D spatiotemporal CNN architecture outperformed the 2D spatial CNN architectures. | The results demonstrated that integrating high-spatiotemporal-resolution UAV imagery with 3D-CNN enhanced monitoring of the key phenological and yield-related crop traits. |

| Nguyen et al. [102] | To demonstrate the versatility of aerial remote sensing in the diagnosis of yellow rust infection in spring wheat in a timely manner | CNN | Not Specified | Wheat | The results showed that a high correlation emerged between the proposed method and 3D-CNN. | The study demonstrated that low-cost multispectral UAVs could be adopted in crop breeding and pathology applications. |

| Okamoto et al. [103] | To test the applicability of the stereo-photogrammetry (SfM-MVS) method in the morphological measurement of tree root systems | Not Specified | SfM | Black Pine | The results showed that 3D reconstructions of the dummy and root systems were successful. | SfM-MVS is a new tool that can be adopted to obtain the 3D structure of tree root systems. |

| Liu et al. [104] | To demonstrate a fast reconstruction method of a 3D model based on dual RGB-D cameras for peanut plants | Not Specified 3D Image Reconstruction | Not Specified | Peanut | The results showed that the average accuracy of the reconstructed peanut plant 3D model was 93.42%, which was higher than the iterative closest point (ICP) method. | The 3D reconstruction model can be adopted in future modeling applications to identify plant traits. |

| Zhu et al. [105] | To propose a method based on 3D reconstruction to evaluate phenotype development during the whole growth period | Not Specified 3D Image Reconstruction | Not Specified | Soybean | The results showed that phenotypic fingerprints of the soybean plant varieties could be established to identify patterns in phenotypic changes. | The proposed method could be adopted in future breeding and field management of soybeans and other crops. |

| Yang and Han [38] | To develop a novel approach for the determination of the 3D phenotype of vegetables by recording video clips using smartphones | Not Specified | SfM | Leafy vegetables | The results showed that highly accurate results were obtained compared to other photogrammetry methods. | The proposed methods can be adopted to reconstruct high-quality point cloud models by recording crop videos. |

| Sampaio, Silva and Marengoni [59] | To present a 3D model of non-rigid corn plants that can be adopted in phenotyping processes | Not Specified 3D Image Reconstruction | Not Specified | Corn | The results showed high accuracy in plant structural measurements and mapping the plant’s environment, hence providing higher crop efficiency. | The proposed solution can be adopted in future phenotyping applications for non-rigid plants. |

| Tagarakis et al. [35] | To investigate the use of RGB-D cameras and unmanned ground vehicles in mapping commercial orchard environments to provide information about tree height and canopy volume | Not Specified 3D Image Reconstruction | Not Specified | Tree Orchards | The results showed that the sensing methods provided similar height measurements and that tree volumes were also calculated accurately. | The proposed method, which uses UAV and UGV systems, could be adopted in future mapping applications. |

References

- United Nations Sustainable Development. Available online: https://www.un.org/sustainabledevelopment/hunger/#:~:text=Goal%202%20is%20about%20creating (accessed on 10 May 2024).

- Meraj, T.; Sharif, M.I.; Raza, M.; Alabrah, A.; Kadry, S.; Gandomi, A.H. Computer vision-based plant phenotyping: A comprehensive survey. iScience 2024, 27, 108709.

- Sharma, V.; Honkavaara, E.; Hayden, M.; Kant, S. UAV Remote Sensing Phenotyping of Wheat Collection for Response to Water Stress and Yield Prediction Using Machine Learning. Plant Stress 2024, 12, 100464.

- Chiuyari, W.N.; Cruvinel, P.E. Method for maize plant counting and crop evaluation based on multispectral image analysis. Comput. Electron. Agric. 2024, 216, 108470.

- Graf, L.V.; Merz, Q.N.; Walter, A.; Aasen, H. Insights from field phenotyping improve satellite remote sensing based in-season estimation of winter wheat growth and phenology. Remote. Sens. Environ. 2023, 299, 113860.

- Zahid, A.; Mahmud, M.S.; He, L.; Choi, D.; Heinemann, P.; Schupp, J. Development of an integrated 3R end-effector with a cartesian manipulator for pruning apple trees. Comput. Electron. Agric. 2020, 179, 105837.

- Waqas, M.A.; Wang, X.; Zafar, S.A.; Noor, M.A.; Hussain, H.A.; Azher Nawaz, M.; Farooq, M. Thermal Stresses in Maize: Effects and Management Strategies. Plants 2021, 10, 293.

- Mangalraj, P.; Cho, B.-K. Recent trends and advances in hyperspectral imaging techniques to estimate solar induced fluorescence for plant phenotyping. Ecol. Indic. 2022, 137, 108721.

- Pappula-Reddy, S.-P.; Kumar, S.; Pang, J.; Chellapilla, B.; Pal, M.; Millar, A.H.; Siddique, K.H.M. High-throughput phenotyping for terminal drought stress in chickpea (Cicer arietinum L.). Plant Stress 2024, 11, 100386.

- Geng, Z.; Lu, Y.; Duan, L.; Chen, H.; Wang, Z.; Zhang, J.; Liu, Z.; Wang, X.; Zhai, R.; Ouyang, Y.; et al. High-throughput phenotyping and deep learning to analyze dynamic panicle growth and dissect the genetic architecture of yield formation. Crop Environ. 2024, 3, 1–11.

- Jiang, Y.; Li, C. Convolutional Neural Networks for Image-Based High-Throughput Plant Phenotyping: A Review. Plant Phenomics 2020, 2020, 4152816.

- Zhang, H.; Wang, L.; Jin, X.; Bian, L.; Ge, Y. High-throughput phenotyping of plant leaf morphological, physiological, and biochemical traits on multiple scales using optical sensing. Crop J. 2023, 11, 1303–1318.

- Guo, W.; Carroll, M.E.; Singh, A.; Swetnam, T.L.; Merchant, N.; Sarkar, S.; Singh, A.K.; Ganapathysubramanian, B. UAS-Based Plant Phenotyping for Research and Breeding Applications. Plant Phenomics 2021, 2021, 9840192.

- Carrera-Rivera, A.; Ochoa, W.; Larrinaga, F.; Lasa, G. How-to Conduct a Systematic Literature review: A Quick Guide for Computer Science Research. MethodsX 2022, 9, 101895. Available online: https://www.sciencedirect.com/science/article/pii/S2215016122002746 (accessed on 10 May 2024).

- Gusenbauer, M.; Haddaway, N.R. Which Academic Search Systems Are Suitable for Systematic Reviews or meta-analyses? Evaluating Retrieval Qualities of Google Scholar, PubMed, and 26 Other Resources. Res. Synth. Methods 2020, 11, 181–217.

- Grewal, A.; Kataria, H.; Dhawan, I. Literature Search for Research Planning and Identification of Research Problem. Indian J. Anaesth. 2016, 60, 635–639.

- Wang, C.; Xu, S.; Yang, C.; You, Y.; Zhang, J.; Kuai, J.; Xie, J.; Zuo, Q.; Yan, M.; Du, H.; et al. Determining rapeseed lodging angles and types for lodging phenotyping using morphological traits derived from UAV images. Eur. J. Agron. 2024, 155, 127104.

- Skobalski, J.; Sagan, V.; Alifu, H.; Al Akkad, O.; Lopes, F.A.; Grignola, F. Bridging the gap between crop breeding and GeoAI: Soybean yield prediction from multispectral UAV images with transfer learning. ISPRS J. Photogramm. Remote. Sens. 2024, 210, 260–281.

- Zhu, F.; Qiao, X.; Zhang, Y.; Jiang, J. Analysis and mitigation of illumination influences on canopy close-range hyperspectral imaging for the in situ detection of chlorophyll distribution of basil crops. Comput. Electron. Agric. 2024, 217, 108553.

- Hu, K.; Ying, W.; Pan, Y.; Kang, H.; Chen, C. High-fidelity 3D reconstruction of plants using Neural Radiance Fields. Comput. Electron. Agric. 2024, 220, 108848.

- Teshome, F.T.; Bayabil, H.K.; Hoogenboom, G.; Schaffer, B.; Singh, A.; Ampatzidis, Y. Unmanned aerial vehicle (UAV) imaging and machine learning applications for plant phenotyping. Comput. Electron. Agric. 2023, 212, 108064.

- Varghese, R.; Cherukuri, A.K.; Doddrell, N.H.; Priya, G.; Simkin, A.J.; Ramamoorthy, S. Machine learning in photosynthesis: Prospects on sustainable crop development. Plant Sci. 2023, 335, 111795.

- Mingxuan, Z.; Wei, L.; Hui, L.; Ruinan, Z.; Yiming, D. Anti-gravity stem-seeking restoration algorithm for maize seed root image phenotype detection. Comput. Electron. Agric. 2022, 202, 107337.

- Shi, H.; Shi, D.; Wang, S.; Li, W.; Wen, H.; Deng, H. Crop plant automatic detecting based on in-field images by lightweight DFU-Net model. Comput. Electron. Agric. 2024, 217, 108649.

- Zhuang, L.; Wang, C.; Hao, H.; Li, J.; Xu, L.; Liu, S.; Guo, X. Maize emergence rate and leaf emergence speed estimation via image detection under field rail-based phenotyping platform. Comput. Electron. Agric. 2024, 220, 108838.

- Gao, Y.; Wang, Q.; Rao, X.; Xie, L.; Ying, Y. OrangeStereo: A navel orange stereo matching network for 3D surface reconstruction. Comput. Electron. Agric. 2024, 217, 108626.

- Gargiulo, L.; Sorrentino, G.; Mele, G. 3D imaging of bean seeds: Correlations between hilum region structures and hydration kinetics. Food Res. Int. 2020, 134, 109211.

- Chang, S.; Lee, U.; Kim, J.-B.; Jo, Y.D. Application of 3D-volumetric analysis and hyperspectral imaging systems for investigation of heterosis and cytoplasmic effects in pepper. Sci. Hortic. 2022, 302, 111150.

- Ma, X.; Wei, B.; Guan, H.; Cheng, Y.; Zhuo, Z. A method for calculating and simulating phenotype of soybean based on 3D reconstruction. Eur. J. Agron. 2024, 154, 127070.

- Mon, T.; ZarAung, N. Vision based volume estimation method for automatic mango grading system. Biosyst. Eng. 2020, 198, 338–349.

- Shomali, A.; Aliniaeifard, S.; Bakhtiarizadeh, M.R.; Lotfi, M.; Mohammadian, M.; Sadegh, M.; Rastogi, A. Artificial neural network (ANN)-based algorithms for high light stress phenotyping of tomato genotypes using chlorophyll fluorescence features. Plant Physiol. Biochem. 2023, 201, 107893.

- Yu, H.; Weng, L.; Wu, Q.; He, J.; Yuan, Y.; Wang, J.; Xu, X.; Feng, X. Time-Series & High-Resolution UAV Data for Soybean Growth Analysis by Combining Multimodal Deep Learning and Dynamic Modelling. Plant Phenomics 2024, 6, 0158.

- Yu, H.; Dong, M.; Zhao, R.; Zhang, L.; Sui, Y. Research on precise phenotype identification and growth prediction of lettuce based on deep learning. Environ. Res. 2024, 252, 118845.

- Zhao, X.; Zhang, J.; Pu, R.; Shu, Z.; He, W.; Wu, K. The continuous wavelet projections algorithm: A practical spectral-feature-mining approach for crop detection. Crop J. 2022, 10, 1264–1273.

- Tagarakis, A.C.; Filippou, E.; Kalaitzidis, D.; Benos, L.; Busato, P.; Bochtis, D. Proposing UGV and UAV Systems for 3D Mapping of Orchard Environments. Sensors 2022, 22, 1571.

- Hasan, A.; Diepeveen, D.; Laga, H.; Jones, K.; Sohel, F. Image patch-based deep learning approach for crop and weed recognition. Ecol. Inform. 2023, 78, 102361.

- Debnath, S.; Preetham, A.; Vuppu, S.; Prasad, N. Optimal weighted GAN and U-Net based segmentation for phenotypic trait estimation of crops using Taylor Coot algorithm. Appl. Soft Comput. 2023, 144, 110396.

- Yang, Z.; Han, Y. A Low-Cost 3D Phenotype Measurement Method of Leafy Vegetables Using Video Recordings from Smartphones. Sensors 2020, 20, 6068.

- Wang, L.; Zhang, H.; Bian, L.; Zhou, L.; Wang, S.; Ge, Y. Poplar seedling varieties and drought stress classification based on multi-source, time-series data and deep learning. Ind. Crop. Prod. 2024, 218, 118905.

- Camilo, J.; Bunn, C.; Rahn, E.; Little-Savage, D.; Schimidt, P.; Ryo, M. Geographic-scale coffee cherry counting with smartphones and deep learning. Plant Phenomics 2024, 6, 0165.

- Liu, L.; Yu, L.; Wu, D.; Ye, J.; Feng, H.; Liu, Q.; Yang, W. PocketMaize: An Android-Smartphone Application for Maize Plant Phenotyping. Front. Plant Sci. 2021, 12, 770217.

- Röckel, F.; Schreiber, T.; Schüler, D.; Braun, U.; Krukenberg, I.; Schwander, F.; Peil, A.; Brandt, C.; Willner, E.; Gransow, D.; et al. PhenoApp: A mobile tool for plant phenotyping to record field and greenhouse observations. F1000Research 2022, 11, 12.

- Tu, K.; Wu, W.; Cheng, Y.; Zhang, H.; Xu, Y.; Dong, X.; Wang, M.; Sun, Q. AIseed: An automated image analysis software for high-throughput phenotyping and quality non-destructive testing of individual plant seeds. Comput. Electron. Agric. 2023, 207, 107740.

- Bolouri, F.; Kocoglu, Y.; Lorraine, I.; Ritchie, G.L.; Sari-Sarraf, H. CottonSense: A high-throughput field phenotyping system for cotton fruit segmentation and enumeration on edge devices. Comput. Electron. Agric. 2024, 216, 108531.

- Teixeira, E.; George, M.; Johnston, P.; Malcolm, B.; Liu, J.; Ward, R.; Brown, H.; Cichota, R.; Kersebaum, K.C.; Richards, K.; et al. Phenotyping early-vigour in oat cover crops to assess plant-trait effects across environments. Field Crop. Res. 2023, 291, 108781.

- Hoffman, N.; Singels, A.; Joshi, S. Aerial phenotyping for sugarcane yield and drought tolerance. Field Crop. Res. 2024, 308, 109275.

- Krämer, J.; Siegmann, B.; Kraska, T.; Muller, O.; Rascher, U. The potential of spatial aggregation to extract remotely sensed sun-induced fluorescence (SIF) of small-sized experimental plots for applications in crop phenotyping. Int. J. Appl. Earth Obs. Geoinf. 2021, 104, 102565.

- Kim, Y.H.; Lee, S.J.; Yun, C.; Im, S.J.; Park, K.R. LCW-Net: Low-light-image-based crop and weed segmentation network using attention module in two decoders. Eng. Appl. Artif. Intell. 2023, 126, 106890.

- Bai, G.; Ge, Y.; Leavitt, B.; Gamon, J.A.; Scoby, D. Goniometer in the air: Enabling BRDF measurement of crop canopies using a cable-suspended plant phenotyping platform. Biosyst. Eng. 2023, 230, 344–360.

- Fang, H.; Xie, Z.; Li, H.; Guo, Y.; Li, B.; Liu, Y.; Ma, Y. Image-based root phenotyping for field-grown crops: An example under maize/soybean intercropping. J. Integr. Agric. 2022, 21, 1606–1619.

- Selvaraj, M.G.; Valderrama, M.; Guzman, D.; Valencia, M.; Ruiz, H.; Acharjee, A. Machine learning for high-throughput field phenotyping and image processing provides insight into the association of above and below-ground traits in cassava (Manihot esculenta Crantz). Plant Methods 2020, 16, 87.

- Niu, Y.; Han, W.; Zhang, H.; Zhang, L.; Chen, H. Estimating maize plant height using a crop surface model constructed from UAV RGB images. Biosyst. Eng. 2024, 241, 56–67.

- Xie, T.; Li, J.; Yang, C.; Jiang, Z.; Chen, Y.; Guo, L.; Zhang, J. Crop height estimation based on UAV images: Methods, errors, and strategies. Comput. Electron. Agric. 2021, 185, 106155.

- Yang, D.; Yang, H.; Liu, D.; Wang, X. Research on automatic 3D reconstruction of plant phenotype based on Multi-View images. Comput. Electron. Agric. 2024, 220, 108866.

- Bhadra, S.; Sagan, V.; Sarkar, S.; Braud, M.; Mockler, T.C.; Eveland, A.L. PROSAIL-Net: A transfer learning-based dual stream neural network to estimate leaf chlorophyll and leaf angle of crops from UAV hyperspectral images. ISPRS J. Photogramm. Remote. Sens. 2024, 210, 1–24.

- Jayasuriya, N.; Guo, Y.; Hu, W.; Ghannoum, O. Machine vision based plant height estimation for protected crop facilities. Comput. Electron. Agric. 2024, 218, 108669.

- Yang, M.; Cho, S.-I. High-Resolution 3D Crop Reconstruction and Automatic Analysis of Phenotyping Index Using Machine Learning. Agriculture 2021, 11, 1010.

- Maraveas, C.; Asteris, P.G.; Arvanitis, K.G.; Bartzanas, T.; Loukatos, D. Application of Bio and Nature-Inspired Algorithms in Agricultural Engineering. Arch. Comput. Methods Eng. 2023, 30, 1979–2012.

- Sampaio, G.S.; Silva, L.A.; Marengoni, M. 3D Reconstruction of Non-Rigid Plants and Sensor Data Fusion for Agriculture Phenotyping. Sensors 2021, 21, 4115.

- Maraveas, C. Incorporating Artificial Intelligence Technology in Smart Greenhouses: Current State of the Art. Appl. Sci. 2023, 13, 14.

- Anderegg, J.; Zenkl, R.; Walter, A.; Hund, A.; McDonald, B.A. Combining high-resolution imaging, deep learning, and dynamic modelling to separate disease and senescence in wheat canopies. Plant Phenomics 2023, 5, 0053.

- Ji, X.; Zhou, Z.; Gouda, M.; Zhang, W.; He, Y.; Ye, G.; Li, X. A novel labor-free method for isolating crop leaf pixels from RGB imagery: Generating labels via a topological strategy. Comput. Electron. Agric. 2024, 218, 108631.

- Haghshenas, A.; Emam, Y. Green-gradient based canopy segmentation: A multipurpose image mining model with potential use in crop phenotyping and canopy studies. Comput. Electron. Agric. 2020, 178, 105740.

- Haque, S.; Lobaton, E.; Nelson, N.; Yencho, G.C.; Pecota, K.V.; Mierop, R.; Kudenov, M.W.; Boyette, M.; Williams, C.M. Computer vision approach to characterize size and shape phenotypes of horticultural crops using high-throughput imagery. Comput. Electron. Agric. 2021, 182, 106011.

- Hao, H.; Wu, S.; Li, Y.; Wen, W.; Fan, J.; Zhang, Y.; Zhuang, L.; Xu, L.; Li, H.; Guo, X.; et al. Automatic acquisition, analysis and wilting measurement of cotton 3D phenotype based on point cloud. Biosyst. Eng. 2024, 239, 173–189.

- Zhang, C.; Craine, W.; McGee, R.; Vandemark, G.; Davis, J.; Brown, J.; Hulbert, S.; Sankaran, S. Image-Based Phenotyping of Flowering Intensity in Cool-Season Crops. Sensors 2020, 20, 1450.

- Rossi, R.; Costafreda-Aumedes, S.; Leolini, L.; Leolini, C.; Bindi, M.; Moriondo, M. Implementation of an algorithm for automated phenotyping through plant 3D-modeling: A practical application on the early detection of water stress. Comput. Electron. Agric. 2022, 197, 106937.

- Boogaard, F.P.; van Henten, E.J.; Kootstra, G. The added value of 3D point clouds for digital plant phenotyping—A case study on internode length measurements in cucumber. Biosyst. Eng. 2023, 234, 1–12.

- Cuevas-Velasquez, H.; Gallego, A.; Fisher, R.B. Segmentation and 3D reconstruction of rose plants from stereoscopic images. Comput. Electron. Agric. 2020, 171, 105296.

- Chen, M.; Tang, Y.; Zou, X.; Huang, K.; Huang, Z.; Zhou, H.; Wang, C.; Lian, G. Three-dimensional perception of orchard banana central stock enhanced by adaptive multi-vision technology. Comput. Electron. Agric. 2020, 174, 105508.

- Chen, Y.; Zhang, B.; Zhou, J.; Wang, K. Real-time 3D unstructured environment reconstruction utilizing VR and Kinect-based immersive teleoperation for agricultural field robots. Comput. Electron. Agric. 2020, 175, 105579.

- Isachsen, U.J.; Theoharis, T.; Misimi, E. Fast and accurate GPU-accelerated, high-resolution 3D registration for the robotic 3D reconstruction of compliant food objects. Comput. Electron. Agric. 2021, 180, 105929.

- Lin, G.; Tang, Y.; Zou, X.; Wang, C. Three-dimensional reconstruction of guava fruits and branches using instance segmentation and geometry analysis. Comput. Electron. Agric. 2021, 184, 106107.

- Ma, B.; Du, J.; Wang, L.; Jiang, H.; Zhou, M. Automatic branch detection of jujube trees based on 3D reconstruction for dormant pruning using the deep learning-based method. Comput. Electron. Agric. 2021, 190, 106484.

- Fan, G.; Liang, H.; Zhao, Y.; Li, Y. Automatic reconstruction of three-dimensional root system architecture based on ground penetrating radar. Comput. Electron. Agric. 2022, 197, 106969.

- Zhao, D.; Eyre, J.X.; Wilkus, E.; de Voil, P.; Broad, I.; Rodriguez, D. 3D characterization of crop water use and the rooting system in field agronomic research. Comput. Electron. Agric. 2022, 202, 107409.

- Zhu, T.; Ma, X.; Guan, H.; Wu, X.; Wang, F.; Yang, C.; Jiang, Q. A calculation method of phenotypic traits based on three-dimensional reconstruction of tomato canopy. Comput. Electron. Agric. 2023, 204, 107515.

- Torres-Sánchez, J.; Escolà, A.; Isabel de Castro, A.; López-Granados, F.; Rosell-Polo, J.R.; Sebé, F.; Manuel Jiménez-Brenes, F.; Sanz, R.; Gregorio, E.; Peña, J.M.; et al. Mobile terrestrial laser scanner vs. UAV photogrammetry to estimate woody crop canopy parameters—Part 2: Comparison for different crops and training systems. Comput. Electron. Agric. 2023, 212, 108083.

- Chen, M.; Tang, Y.; Zou, X.; Huang, Z.; Zhou, H.; Chen, S. 3D global mapping of large-scale unstructured orchard integrating eye-in-hand stereo vision and SLAM. Comput. Electron. Agric. 2021, 187, 106237.

- Dong, X.; Kim, W.-Y.; Zheng, Y.; Oh, J.-Y.; Ehsani, R.; Lee, K.-H. Three-dimensional quantification of apple phenotypic traits based on deep learning instance segmentation. Comput. Electron. Agric. 2023, 212, 108156.

- Xiong, J.; Liang, J.; Zhuang, Y.; Hong, D.; Zheng, Z.; Liao, S.; Hu, W.; Yang, Z. Real-time localization and 3D semantic map reconstruction for unstructured citrus orchards. Comput. Electron. Agric. 2023, 213, 108217.

- Feng, J.; Saadati, M.; Jubery, T.; Jignasu, A.; Balu, A.; Li, Y.; Attigala, L.; Schnable, P.S.; Sarkar, S.; Ganapathysubramanian, B.; et al. 3D reconstruction of plants using probabilistic voxel carving. Comput. Electron. Agric. 2023, 213, 108248.

- Wu, T.; Dai, J.; Shen, P.; Liu, H.; Wei, Y. Seedscreener: A novel integrated wheat germplasm phenotyping platform based on NIR-feature detection and 3D-reconstruction. Comput. Electron. Agric. 2023, 215, 108378.

- James, C.; Smith, D.; He, W.; Chandra, S.S.; Chapman, S.C. GrainPointNet: A deep-learning framework for non-invasive sorghum panicle grain count phenotyping. Comput. Electron. Agric. 2024, 217, 108485.

- Wen, W.; Wu, S.; Lu, X.; Liu, X.; Gu, S.; Guo, X. Accurate and semantic 3D reconstruction of maize leaves. Comput. Electron. Agric. 2024, 217, 108566.

- Ni, X.; Li, C.; Jiang, H.; Takeda, F. Three-dimensional photogrammetry with deep learning instance segmentation to extract berry fruit harvestability traits. ISPRS J. Photogramm. Remote. Sens. 2021, 171, 297–309.

- Xiao, S.; Ye, Y.; Fei, S.; Chen, H.; Zhang, B.; Li, Q.; Cai, Z.; Che, Y.; Wang, Q.; Ghafoor, A.; et al. High-throughput calculation of organ-scale traits with reconstructed accurate 3D canopy structures using a UAV RGB camera with an advanced cross-circling oblique route. ISPRS J. Photogramm. Remote. Sens. 2023, 201, 104–122.

- Xiao, S.; Fei, S.; Ye, Y.; Xu, D.; Xie, Z.; Bi, K.; Guo, Y.; Li, B.; Zhang, R.; Ma, Y. 3D reconstruction and characterization of cotton bolls in situ based on UAV technology. ISPRS J. Photogramm. Remote. Sens. 2024, 209, 101–116.

- Liu, J.; Xu, X.; Liu, Y.; Rao, Z.; Smith, M.L.; Jin, L.; Li, B. Quantitative potato tuber phenotyping by 3D imaging. Biosyst. Eng. 2021, 210, 48–59.

- Zhou, W.; Chen, Y.; Li, W.; Zhang, C.; Xiong, Y.; Zhan, W.; Huang, L.; Wang, J.; Qiu, L. SPP-extractor: Automatic phenotype extraction for densely grown soybean plants. Crop J. 2023, 11, 1569–1578.

- Liu, H.; Zhang, W.; Wang, F.; Sun, X.; Wang, J.; Wang, C.; Wang, X. Application of an improved watershed algorithm based on distance map reconstruction in bean image segmentation. Heliyon 2023, 9, e15097.

- Li, L.; Bie, Z.; Zhang, Y.; Huang, Y.; Peng, C.; Han, B.; Xu, S. Nondestructive Detection of Key Phenotypes for the Canopy of the Watermelon Plug Seedlings Based on Deep Learning. Hortic. Plant J. 2023, in press.

- Zhou, J.; Cui, M.; Wu, Y.; Gao, Y.; Tang, Y.; Chen, Z.; Hou, L.; Tian, H. Maize (Zea mays L.) Stem Target Region Extraction and Stem Diameter Measurement Based on an Internal Gradient Algorithm in Field Conditions. Agronomy 2023, 13, 1185.

- Liu, H.; Xin, C.; Lai, M.; He, H.; Wang, Y.; Wang, M.; Li, J. RepC-MVSNet: A Reparameterized Self-Supervised 3D Reconstruction Algorithm for Wheat 3D Reconstruction. Agronomy 2023, 13, 1975.

- Sun, Y.; Miao, L.; Zhao, Z.; Pan, T.; Wang, X.; Guo, Y.; Xin, D.; Chen, Q.; Zhu, R. An Efficient and Automated Image Preprocessing Using Semantic Segmentation for Improving the 3D Reconstruction of Soybean Plants at the Vegetative Stage. Agronomy 2023, 13, 2388. [Google Scholar] [CrossRef]

- Begot, L.; Slavkovic, F.; Oger, M.; Pichot, C.; Morin, H.; Boualem, A.; Favier, A.-L.; Bendahmane, A. Precision Phenotyping of Nectar-Related Traits Using X-ray Micro Computed Tomography. Cells 2022, 11, 3452. [Google Scholar] [CrossRef] [PubMed]

- Li, Y.; Liu, J.; Zhang, B.; Wang, Y.; Yao, J.; Zhang, X.; Fan, B.; Li, X.; Hai, Y.; Fan, X. Three-dimensional reconstruction and phenotype measurement of maize seedlings based on multi-view image sequences. Front. Plant Sci. 2022, 13, 974339. [Google Scholar] [CrossRef]

- Zhu, B.; Liu, F.; Xie, Z.; Guo, Y.; Li, B.; Ma, Y. Quantification of light interception within image-based 3-D reconstruction of sole and intercropped canopies over the entire growth season. Ann. Bot. 2020, 126, 701–712. [Google Scholar] [CrossRef]

- Chang, A.; Jung, J.; Yeom, J.; Landivar, J. 3D Characterization of Sorghum Panicles Using a 3D Point Cloud Derived from UAV Imagery. Remote Sens. 2021, 13, 282. [Google Scholar] [CrossRef]

- Varela, S.; Pederson, T.L.; Leakey, A.D.B. Implementing Spatio-Temporal 3D-Convolution Neural Networks and UAV Time Series Imagery to Better Predict Lodging Damage in Sorghum. Remote Sens. 2022, 14, 733. [Google Scholar] [CrossRef]

- Varela, S.; Zheng, X.; Njuguna, J.N.; Sacks, E.J.; Allen, D.P.; Ruhter, J.; Leakey, A.D.B. Deep Convolutional Neural Networks Exploit High-Spatial- and -Temporal-Resolution Aerial Imagery to Phenotype Key Traits in Miscanthus. Remote Sens. 2022, 14, 5333. [Google Scholar] [CrossRef]

- Nguyen, C.; Sagan, V.; Skobalski, J.; Severo, J.I. Early Detection of Wheat Yellow Rust Disease and Its Impact on Terminal Yield with Multi-Spectral UAV-Imagery. Remote Sens. 2023, 15, 3301. [Google Scholar] [CrossRef]

- Okamoto, Y.; Ikeno, H.; Hirano, Y.; Tanikawa, T.; Yamase, K.; Todo, C.; Dannoura, M.; Ohashi, M. 3D reconstruction using Structure-from-Motion: A new technique for morphological measurement of tree root systems. Plant Soil 2022, 477, 829–841. [Google Scholar] [CrossRef]

- Liu, Y.; Yuan, H.; Zhao, X.; Fan, C.; Cheng, M. Fast reconstruction method of three-dimension model based on dual RGB-D cameras for peanut plant. Plant Methods 2023, 19, 17. [Google Scholar] [CrossRef]

- Zhu, R.; Sun, K.; Yan, Z.; Xue-hui, Y.; Jiang-lin, Y.; Shi, J.; Hu, Z.; Jiang, H.; Xin, D.; Zhang, Z.; et al. Analysing the phenotype development of soybean plants using low-cost 3D reconstruction. Sci. Rep. 2020, 10, 7055.

| Focus | Inclusion | Exclusion |
|---|---|---|
| Scope | Studies focused on the latest developments, benefits, limitations, and future directions of image analysis phenotyping technologies based on AI, ML, 3D imaging, and software solutions | Studies not focused on algorithms used in plant phenotyping |
| Period | 2020–2024 | Before 2020 |
| Language | English | All non-English languages |
| Design | Primary experimental studies | Secondary reviews |
| Type | Peer-reviewed journal articles | Grey literature, blogs |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Maraveas, C. Image Analysis Artificial Intelligence Technologies for Plant Phenotyping: Current State of the Art. AgriEngineering 2024, 6, 3375-3407. https://doi.org/10.3390/agriengineering6030193
