Automated Windrow Profiling System in Mechanized Peanut Harvesting

Abstract

1. Introduction

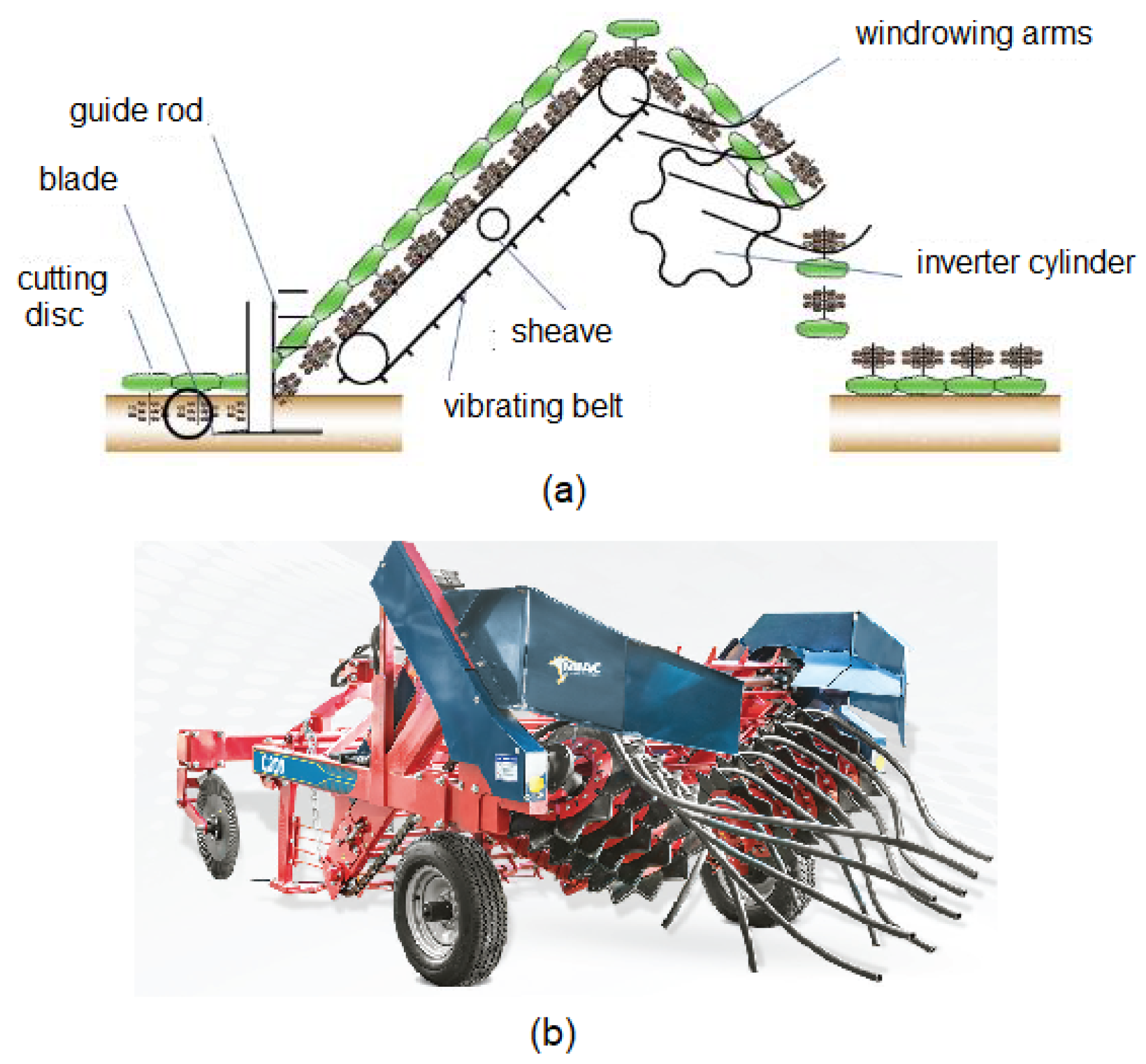

2. Mechanized Harvesting and Losses in Peanut Cultivation

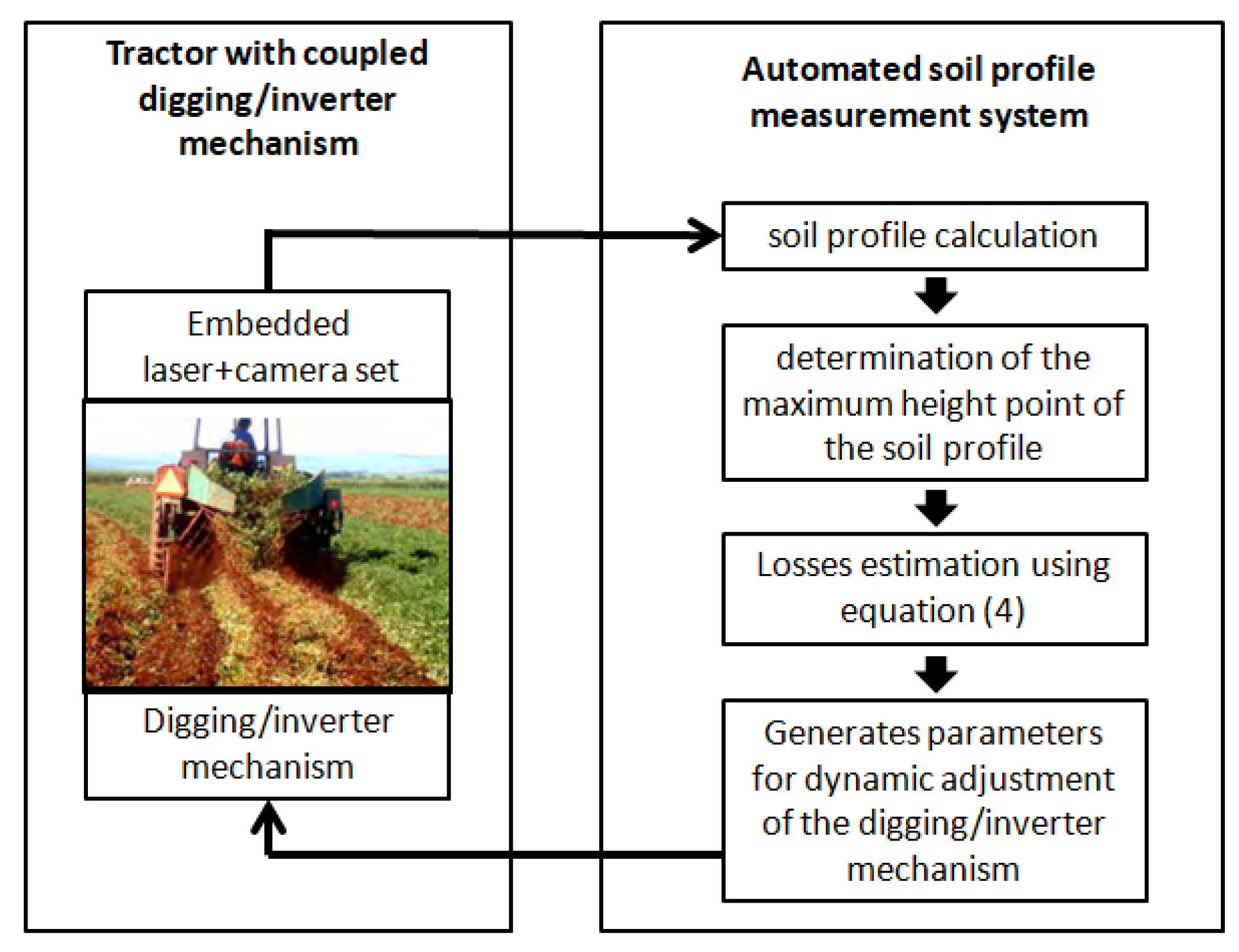

3. Materials and Methods

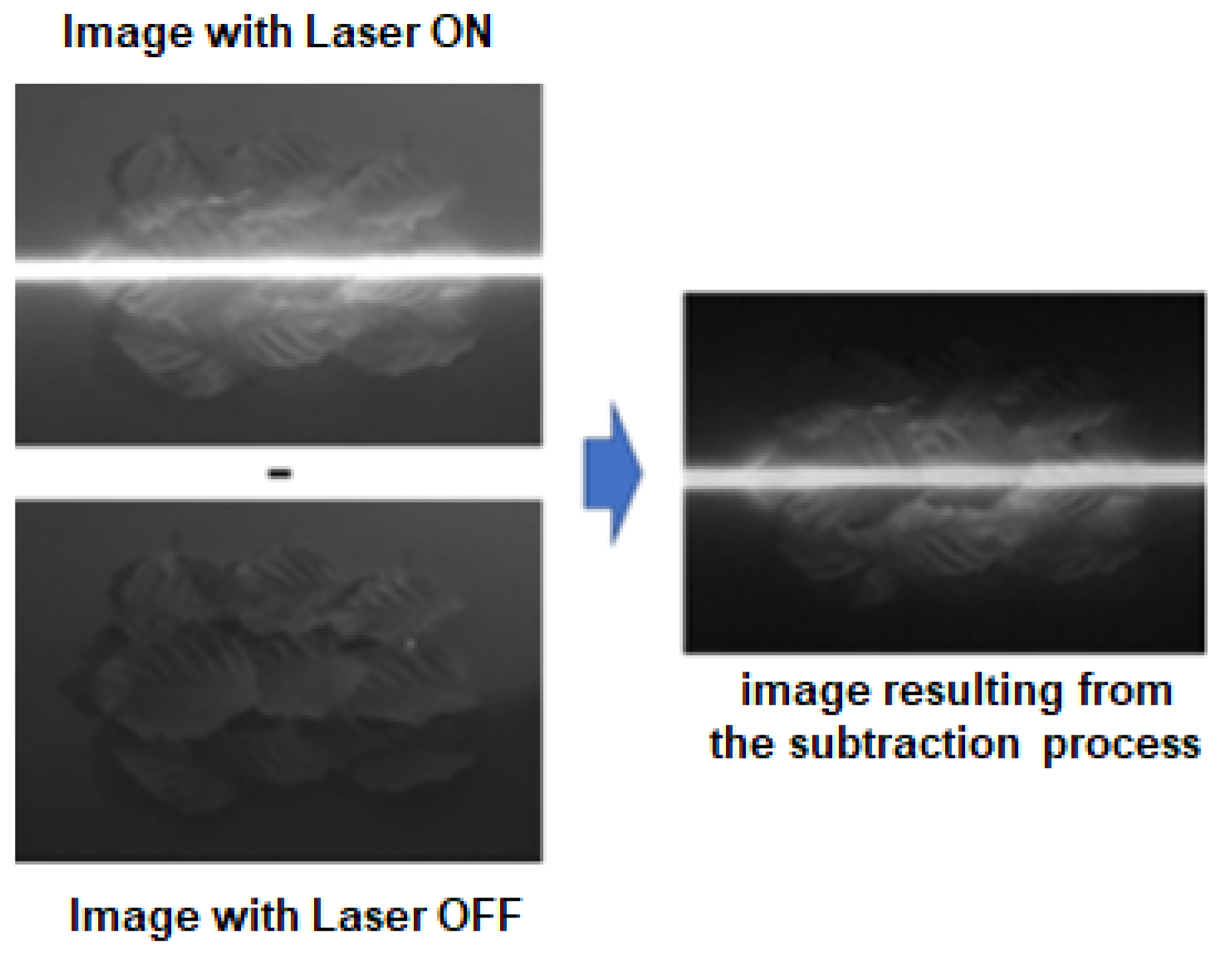

3.1. Acquisition of Images

3.2. Segmentation

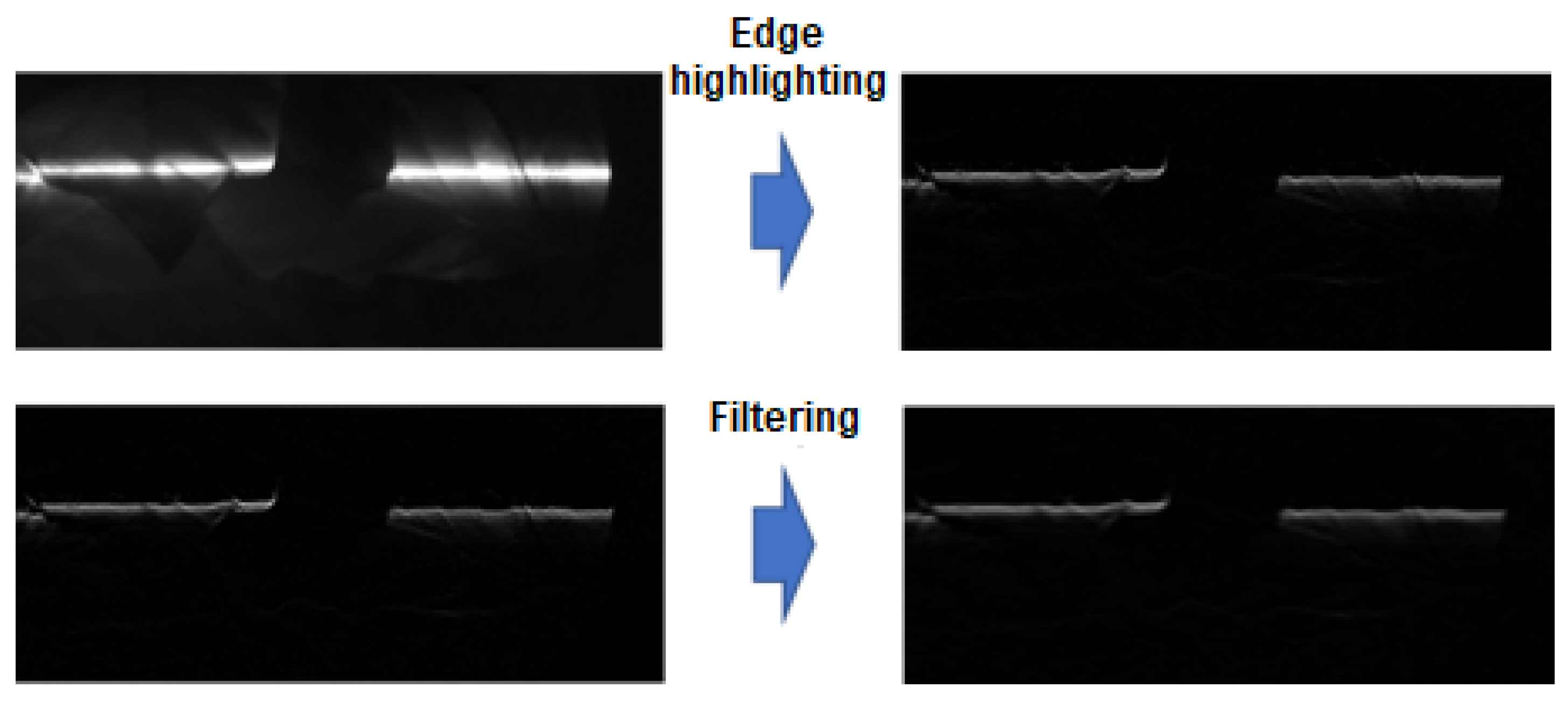

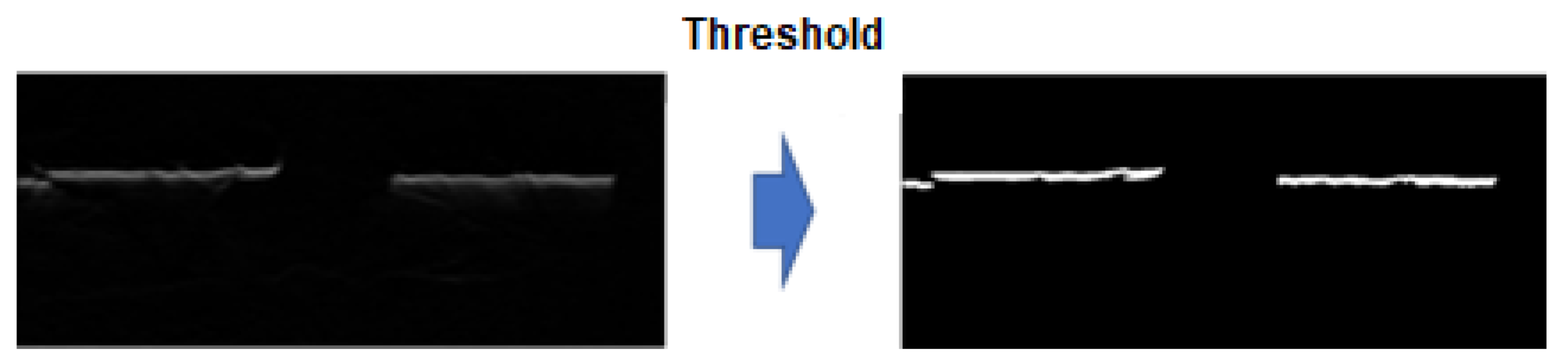

3.2.1. Adaptive Segmentation

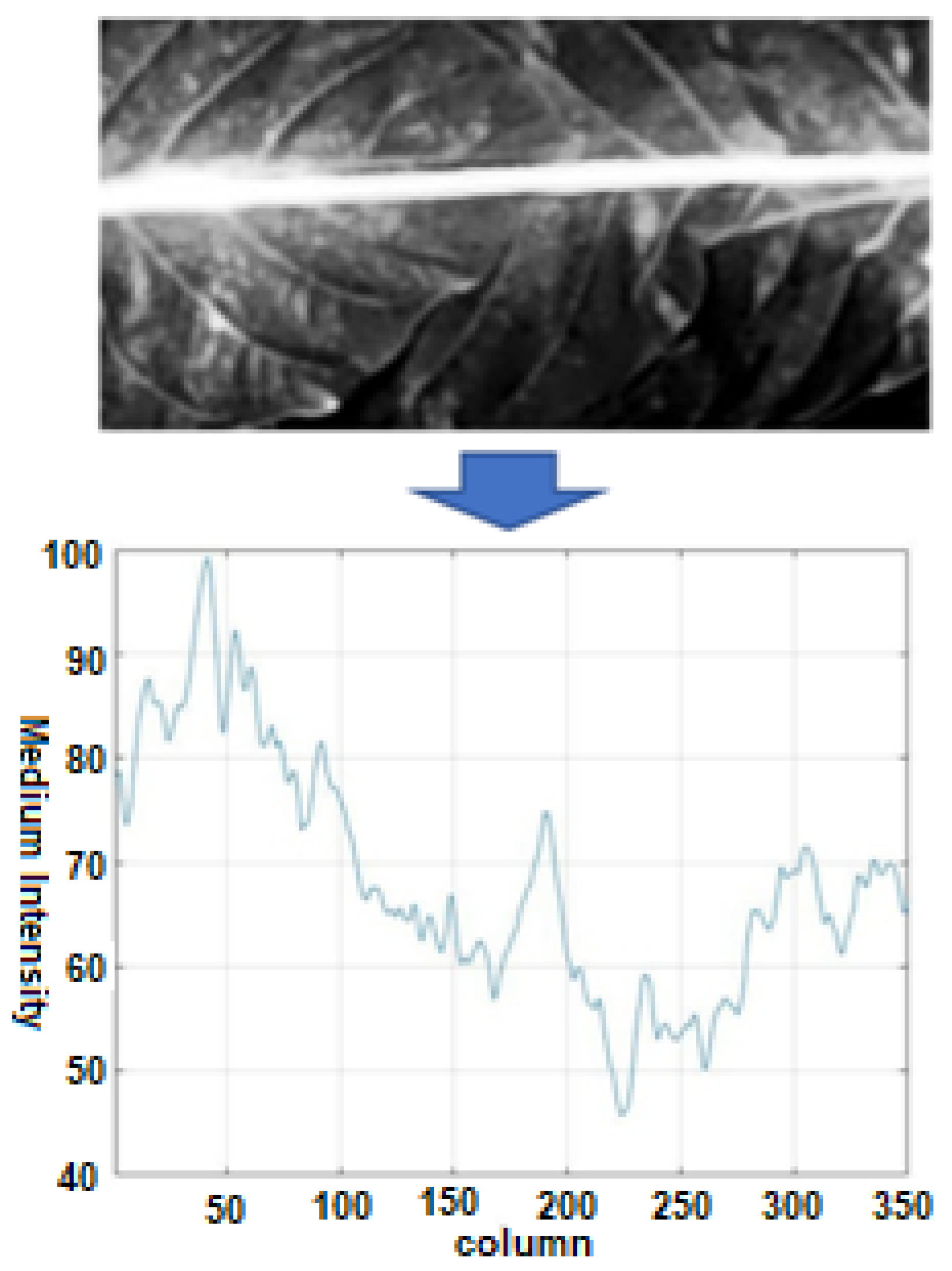

3.2.2. Detection and Removal of Regions without Contrast

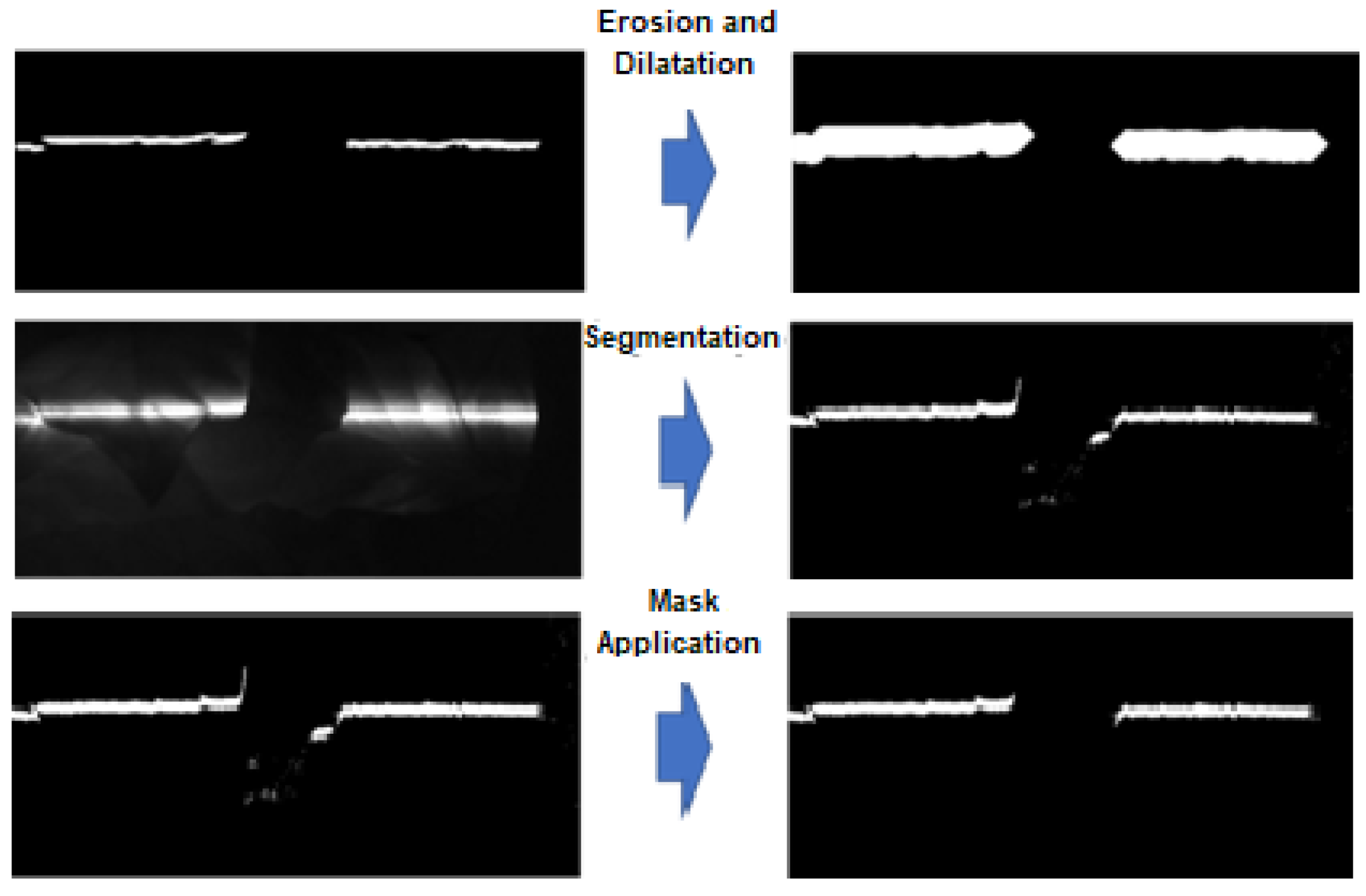

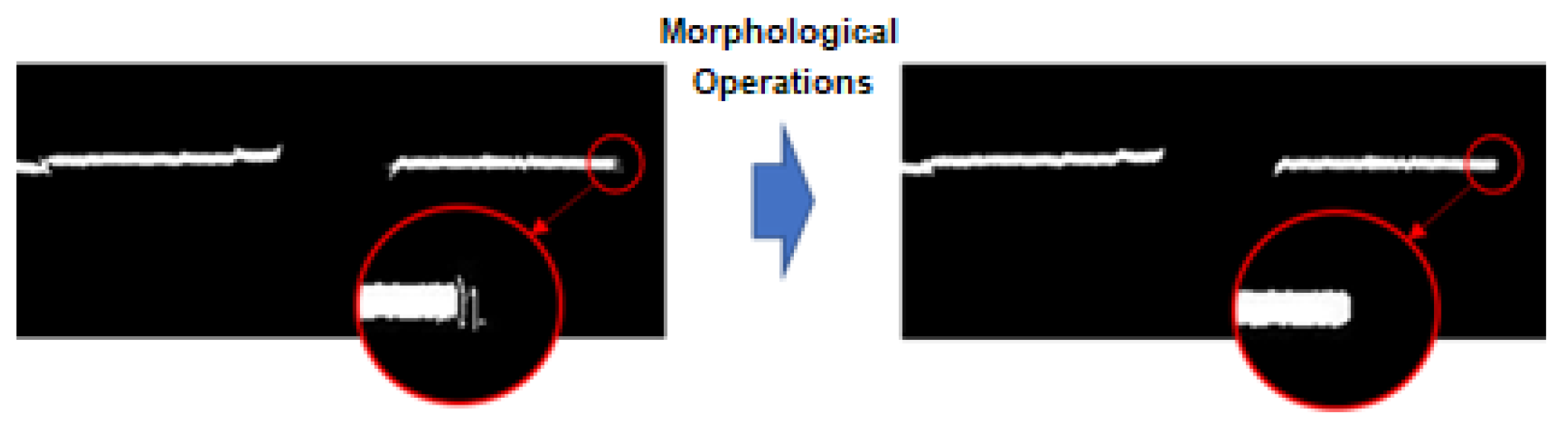

3.2.3. Morphological Operations

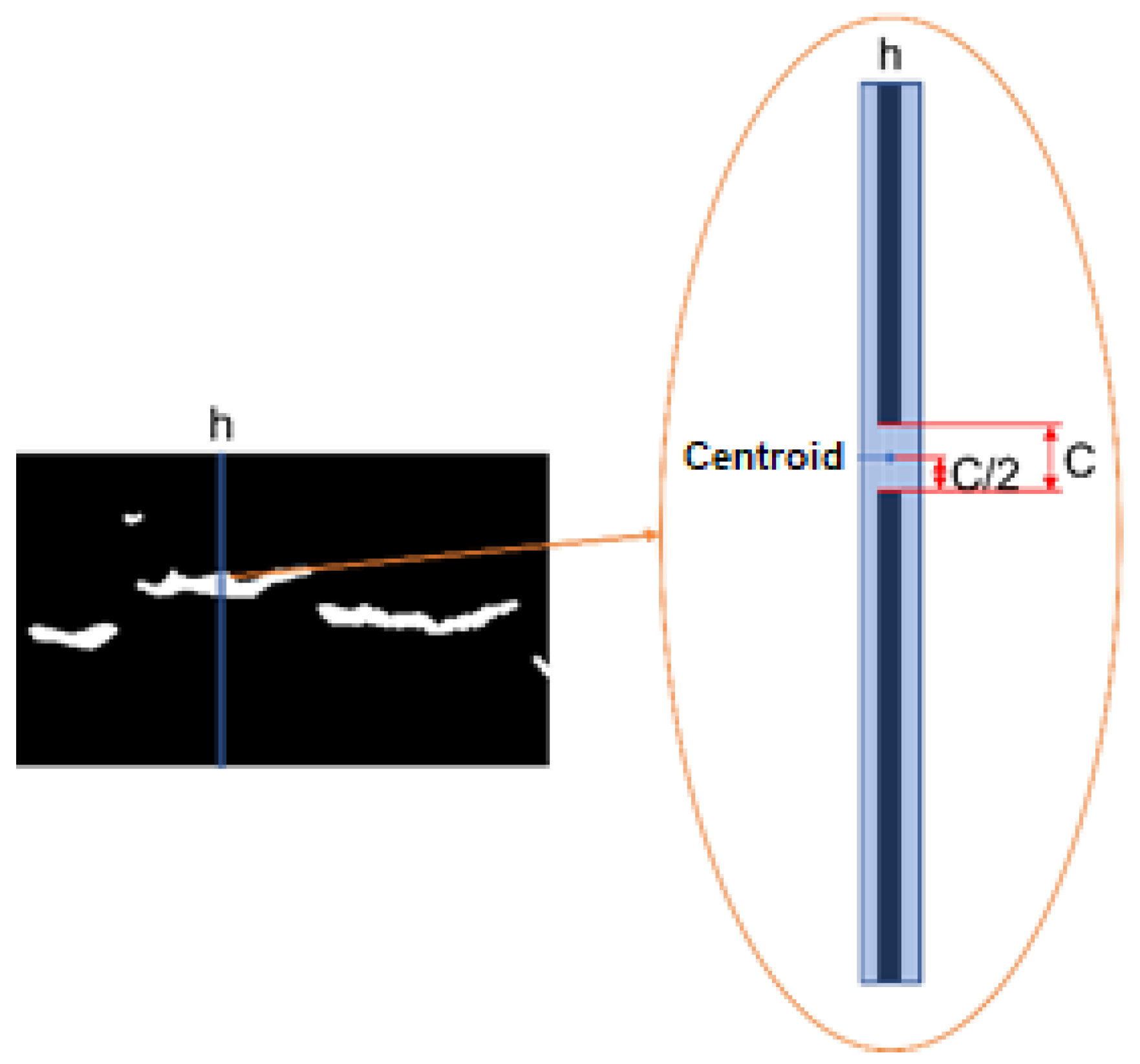

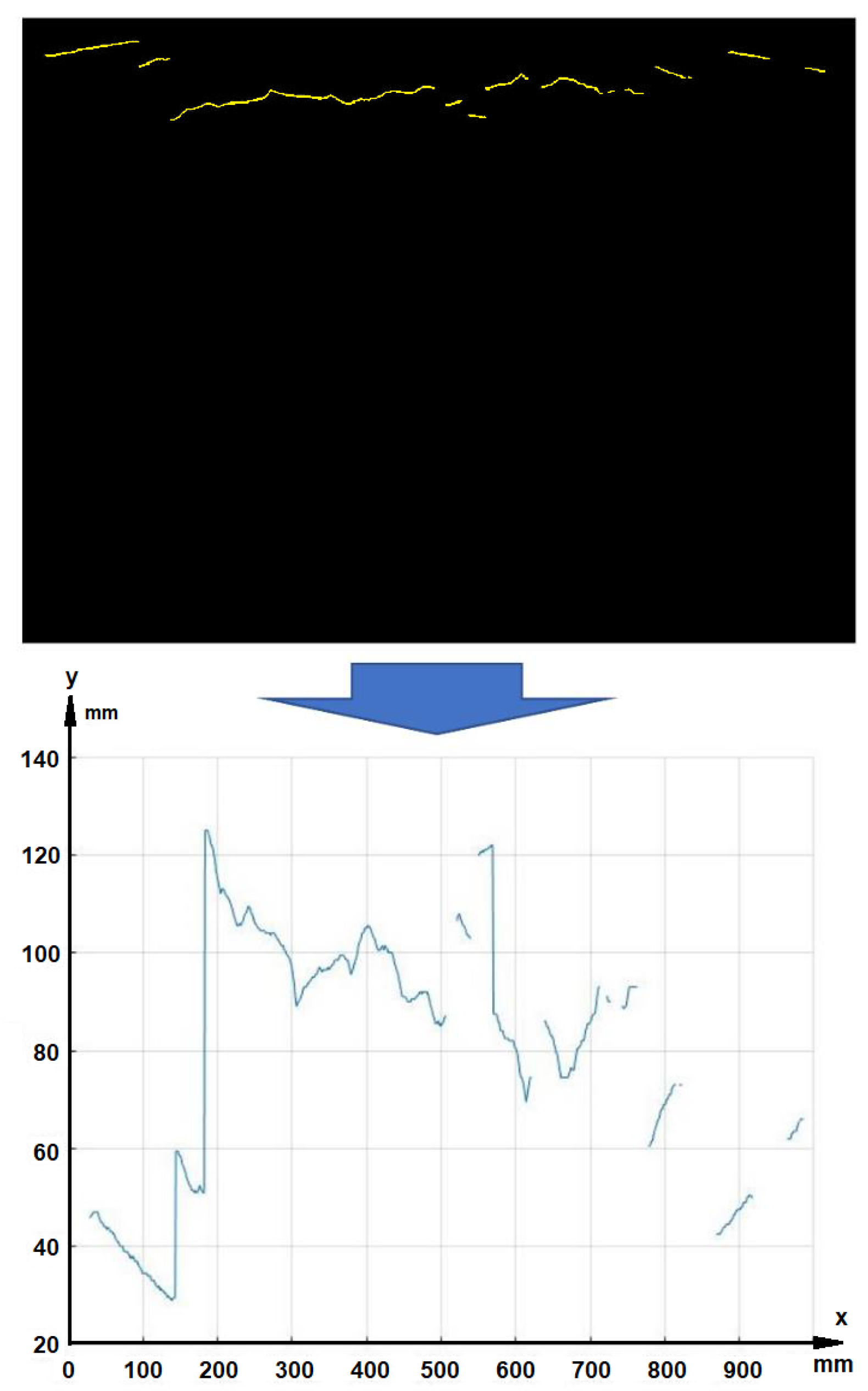

3.3. Beam Detection

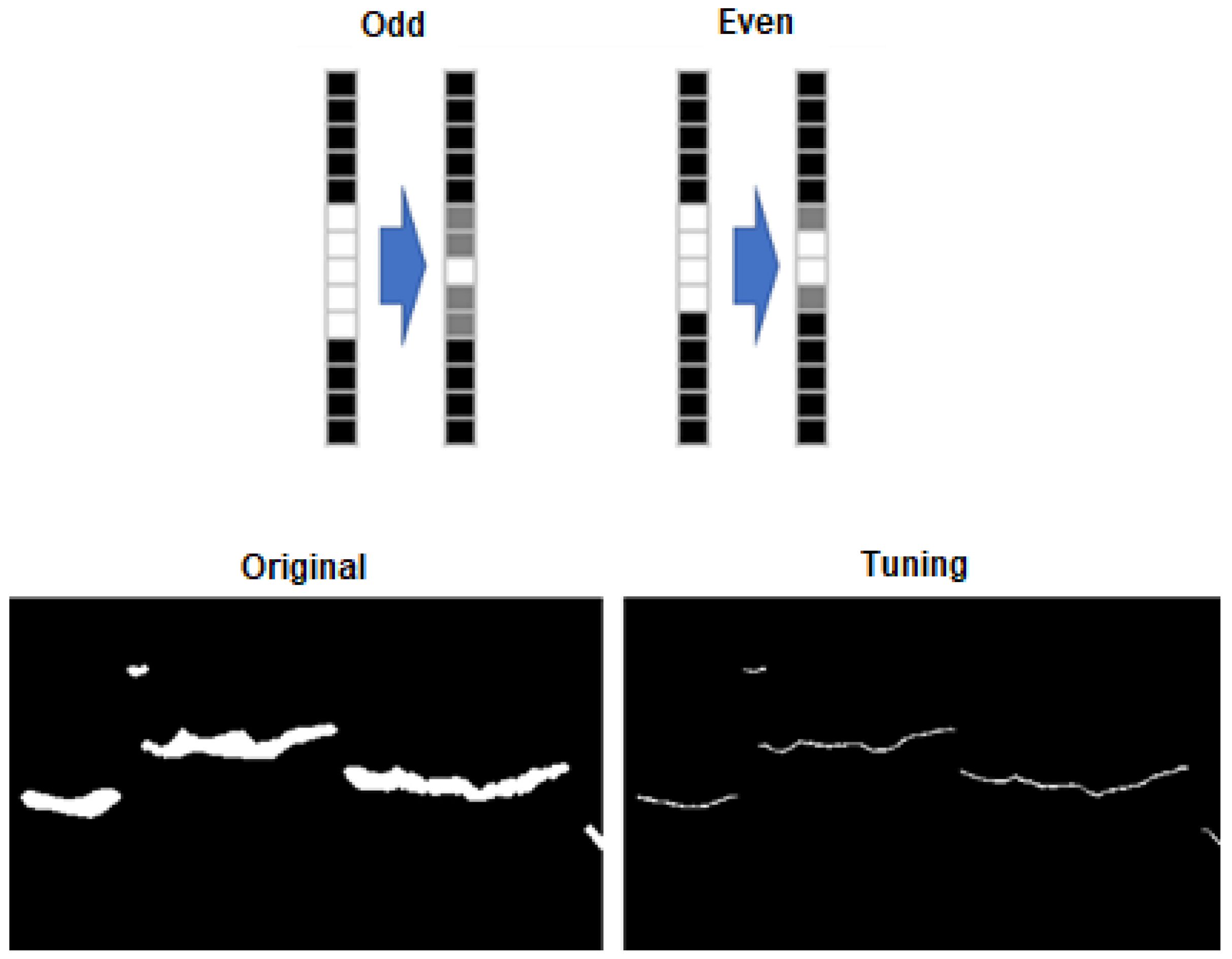

3.3.1. Column Thinning

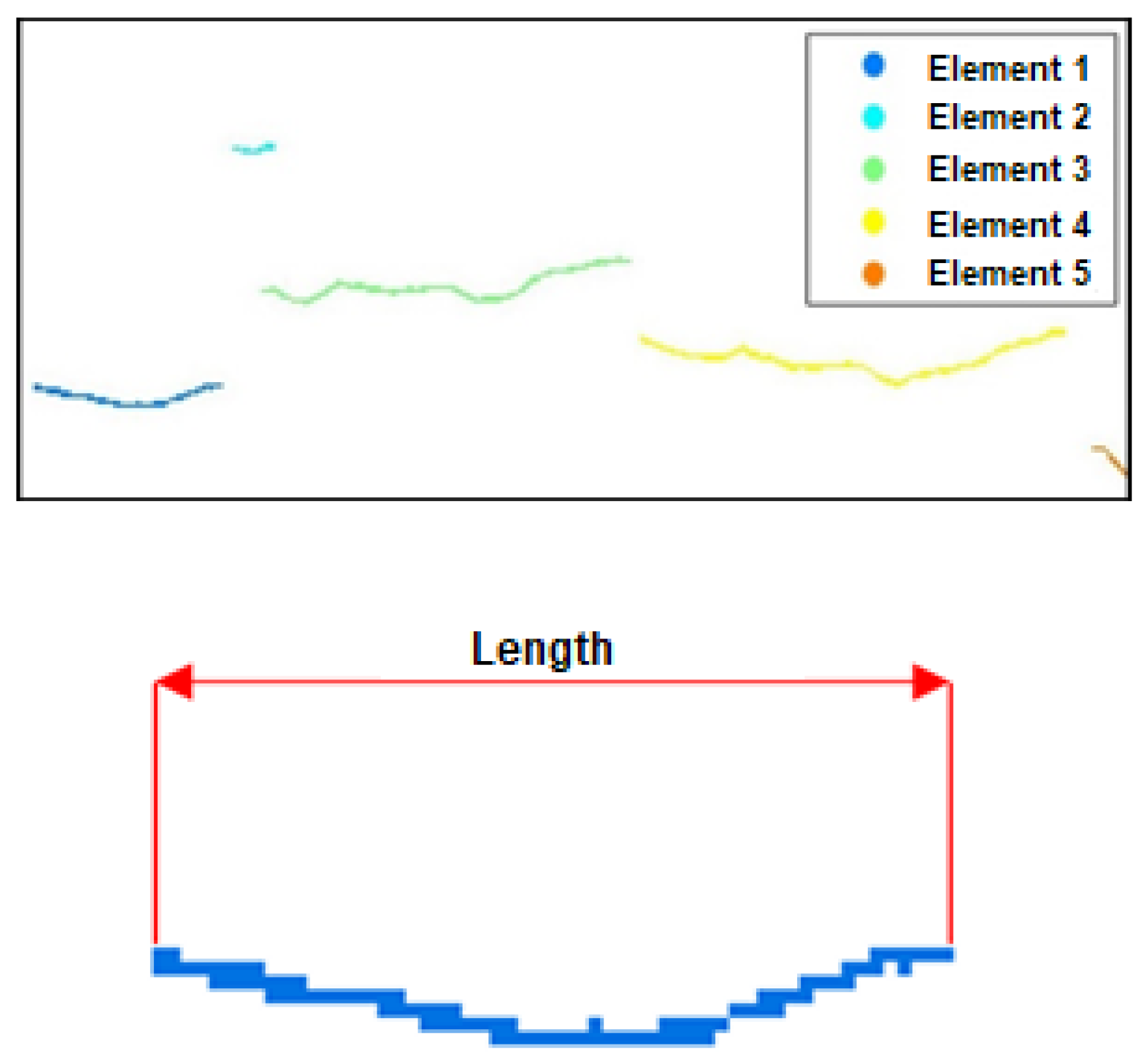

3.3.2. Extraction of Connected Elements

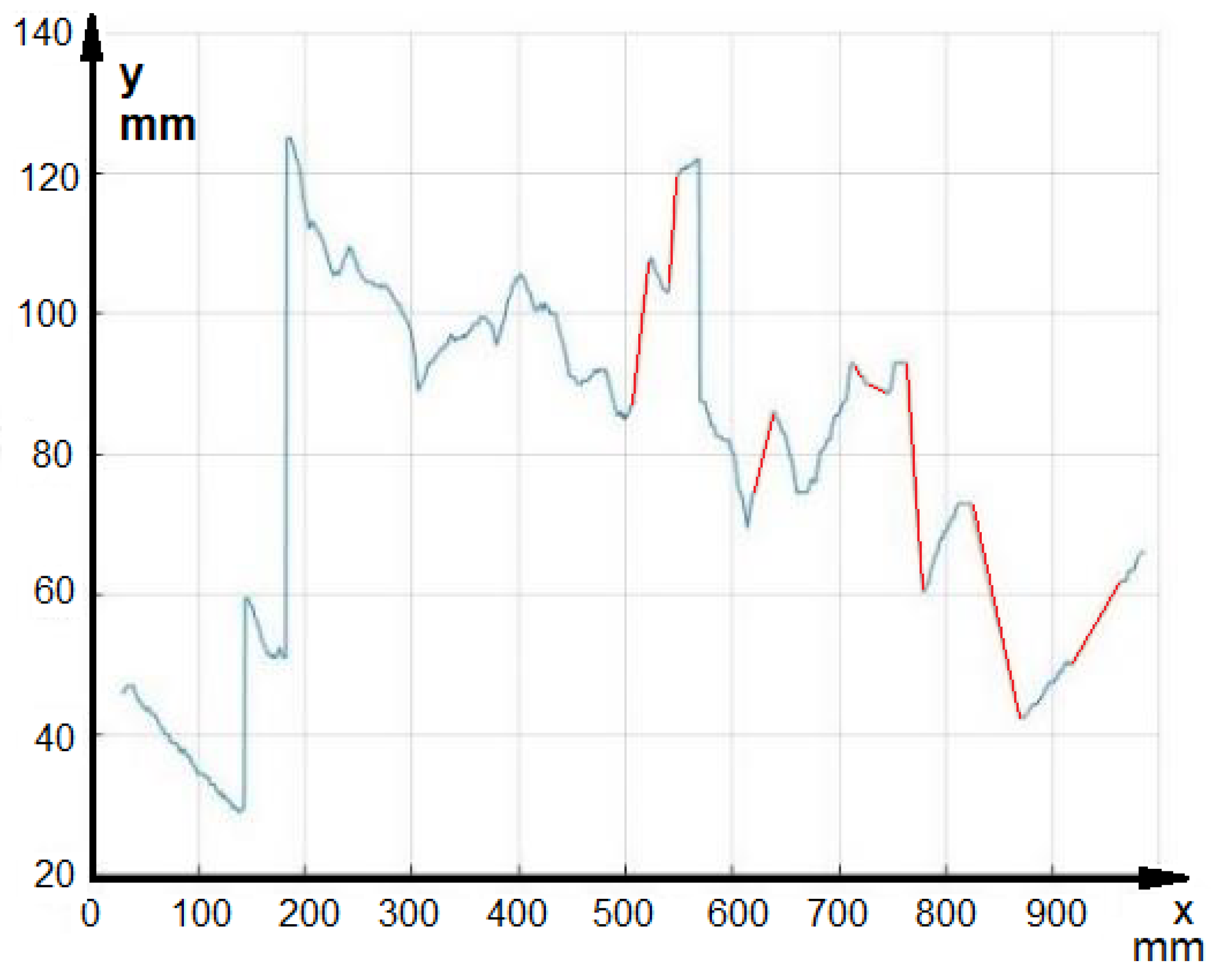

3.3.3. Vertical Overlap Removal

3.3.4. Interpolation

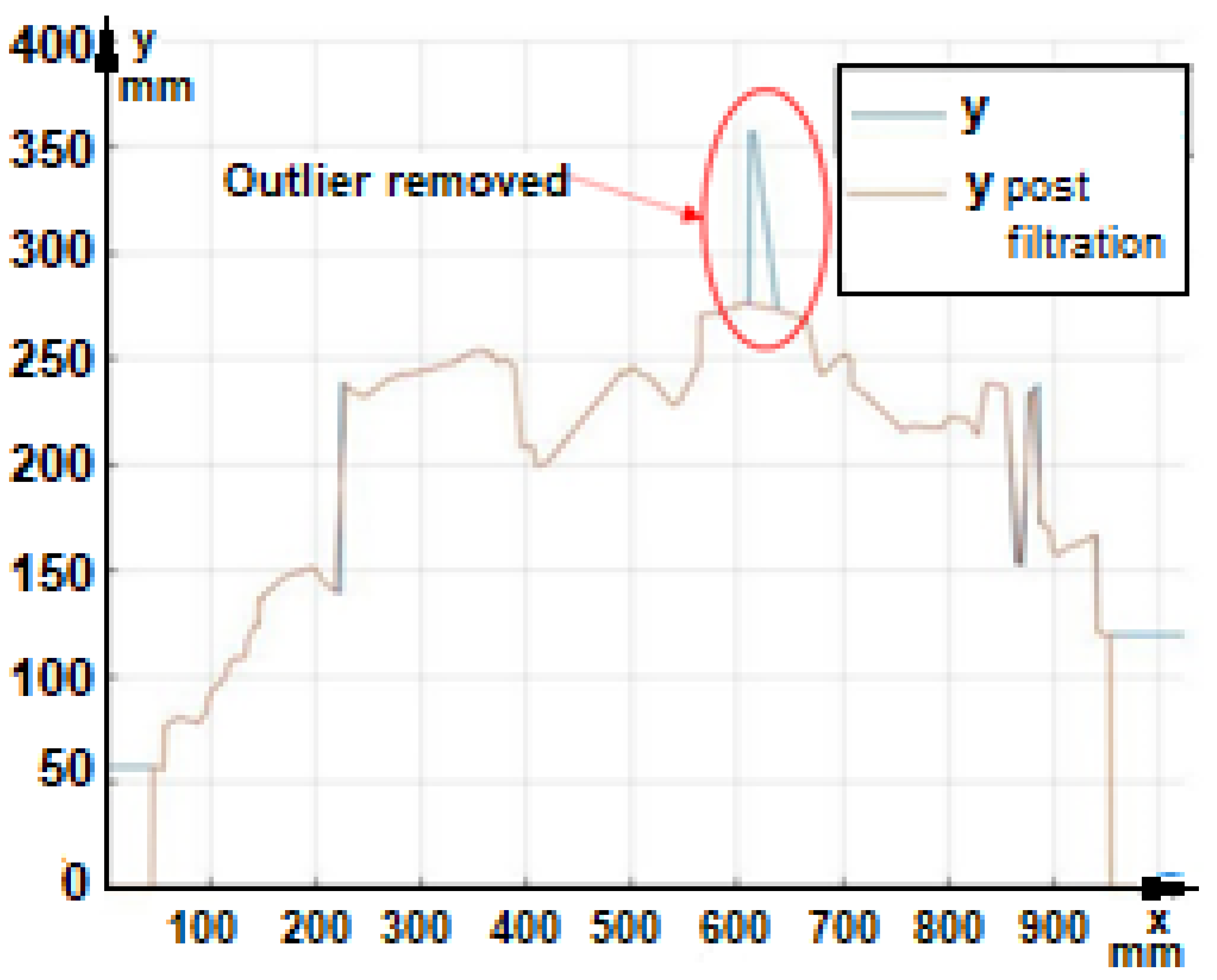

3.3.5. Filtering

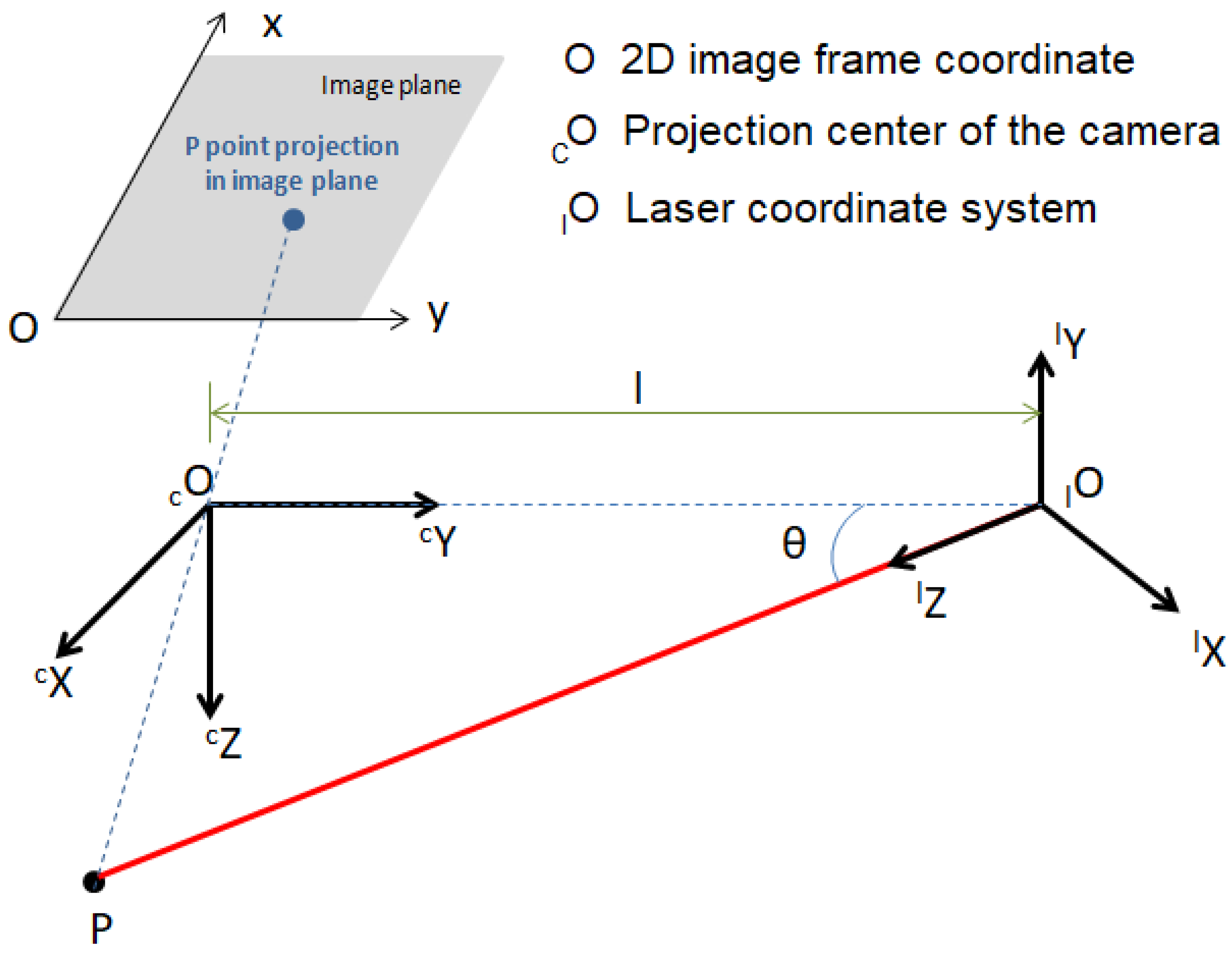

3.4. Triangulation

4. Results

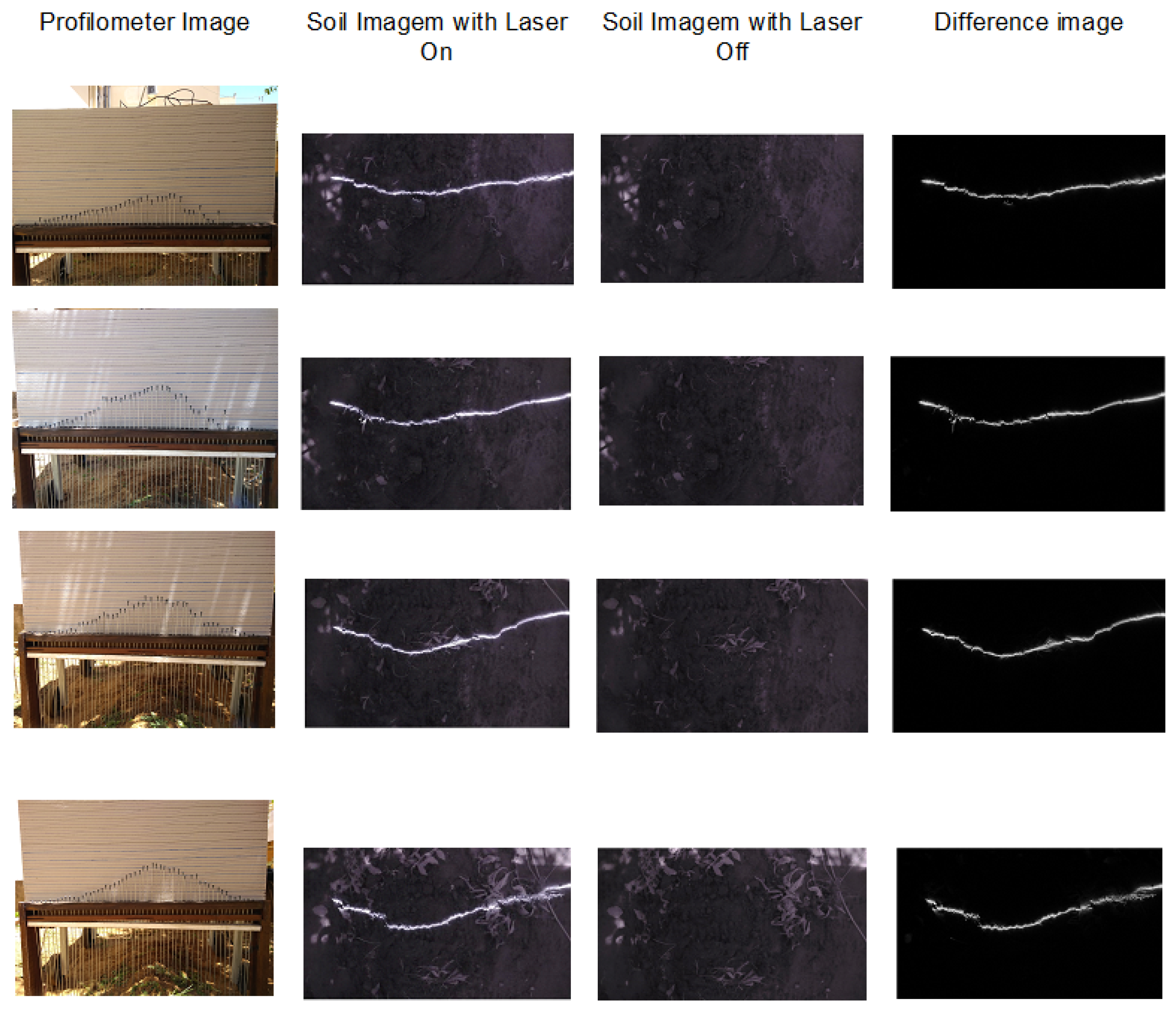

4.1. Image Acquisition

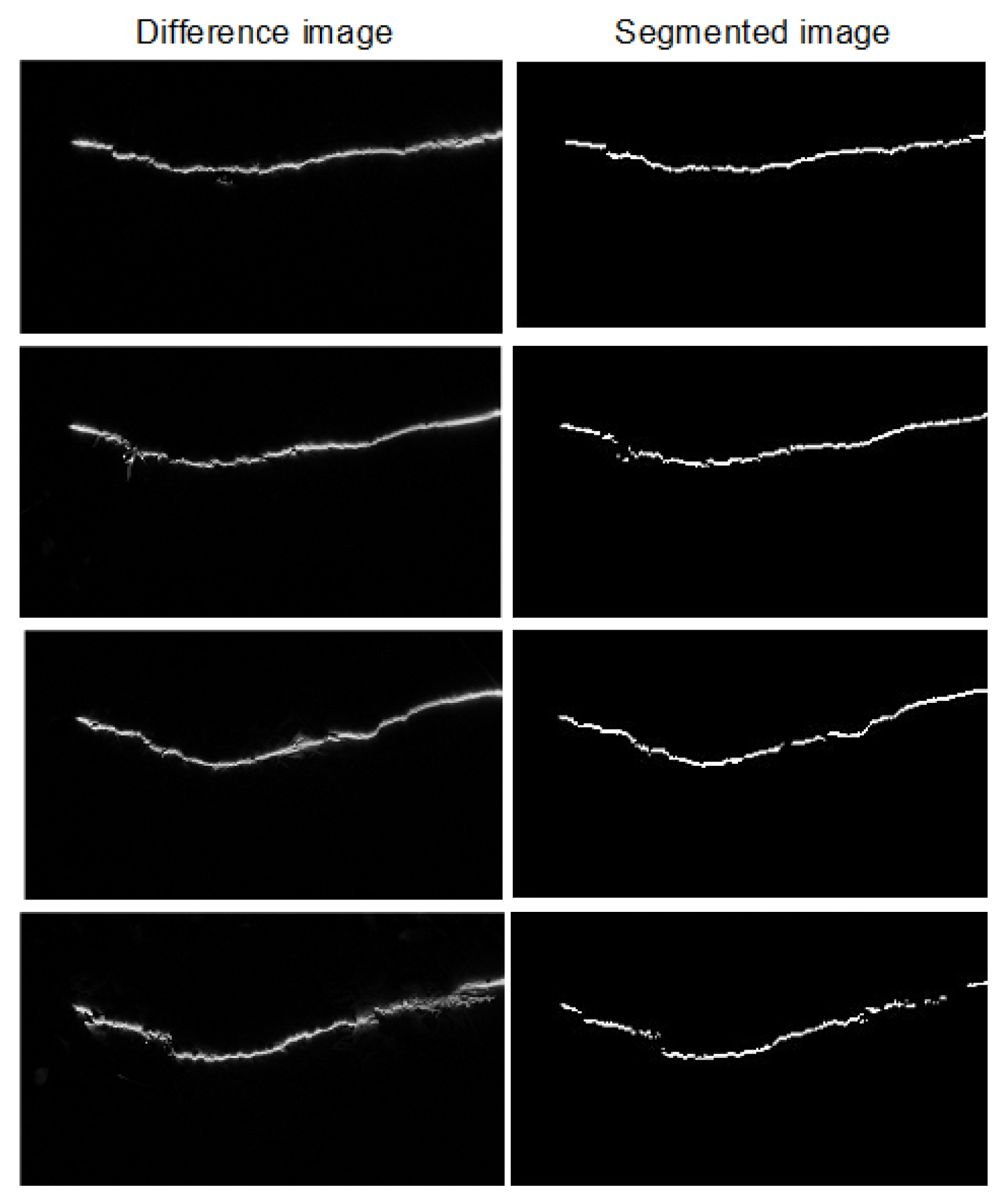

4.2. Segmentation

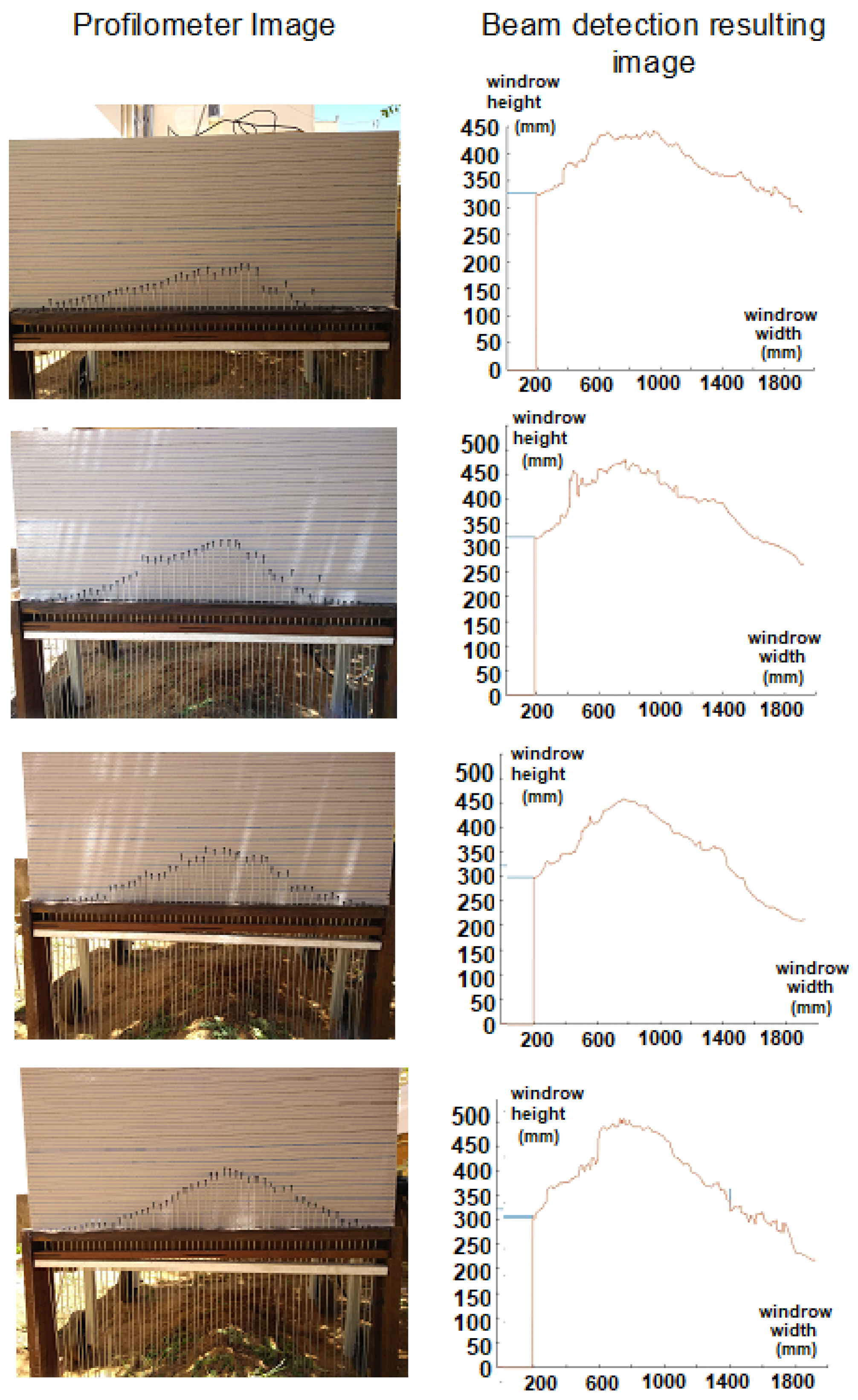

4.3. Beam Detection

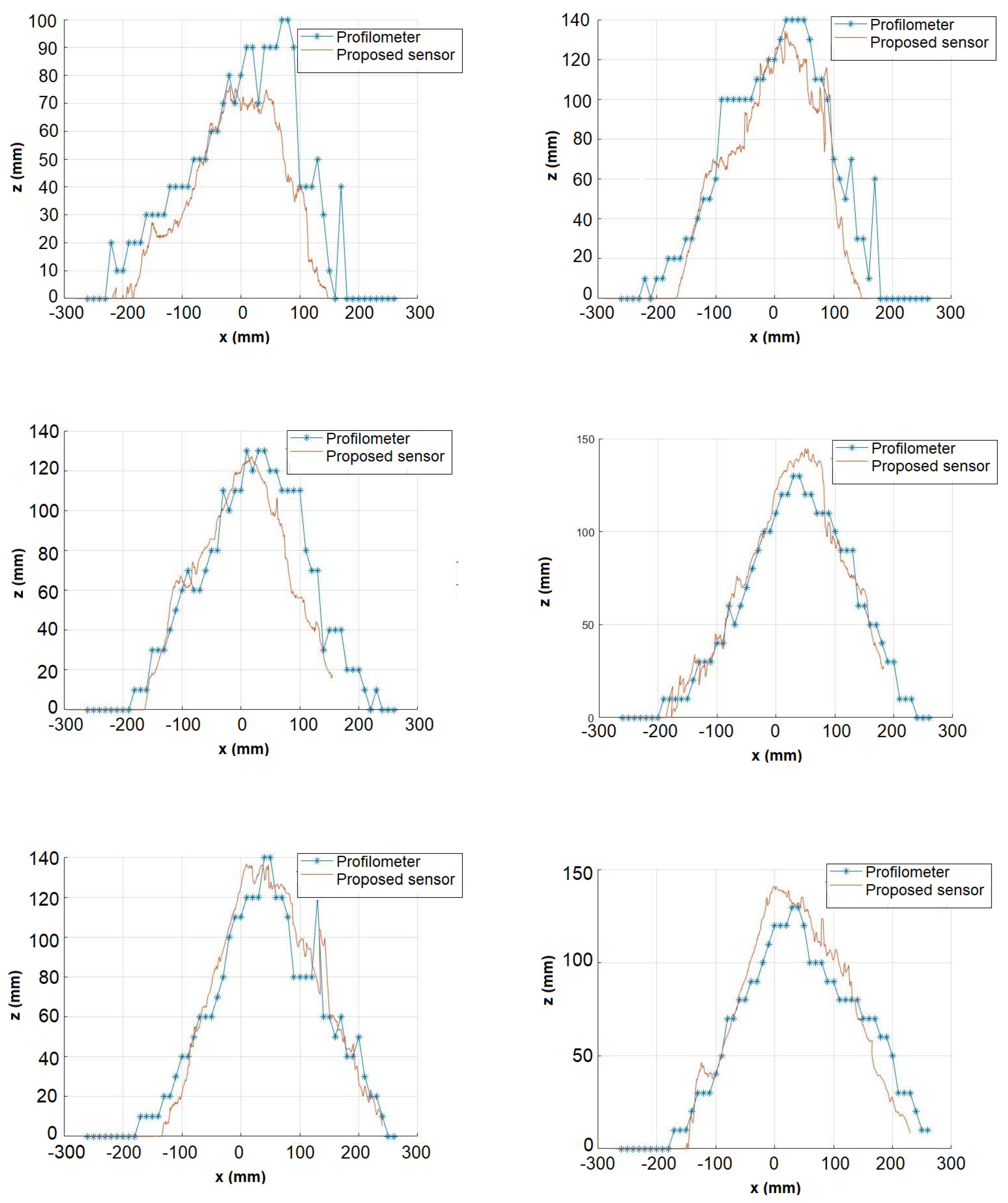

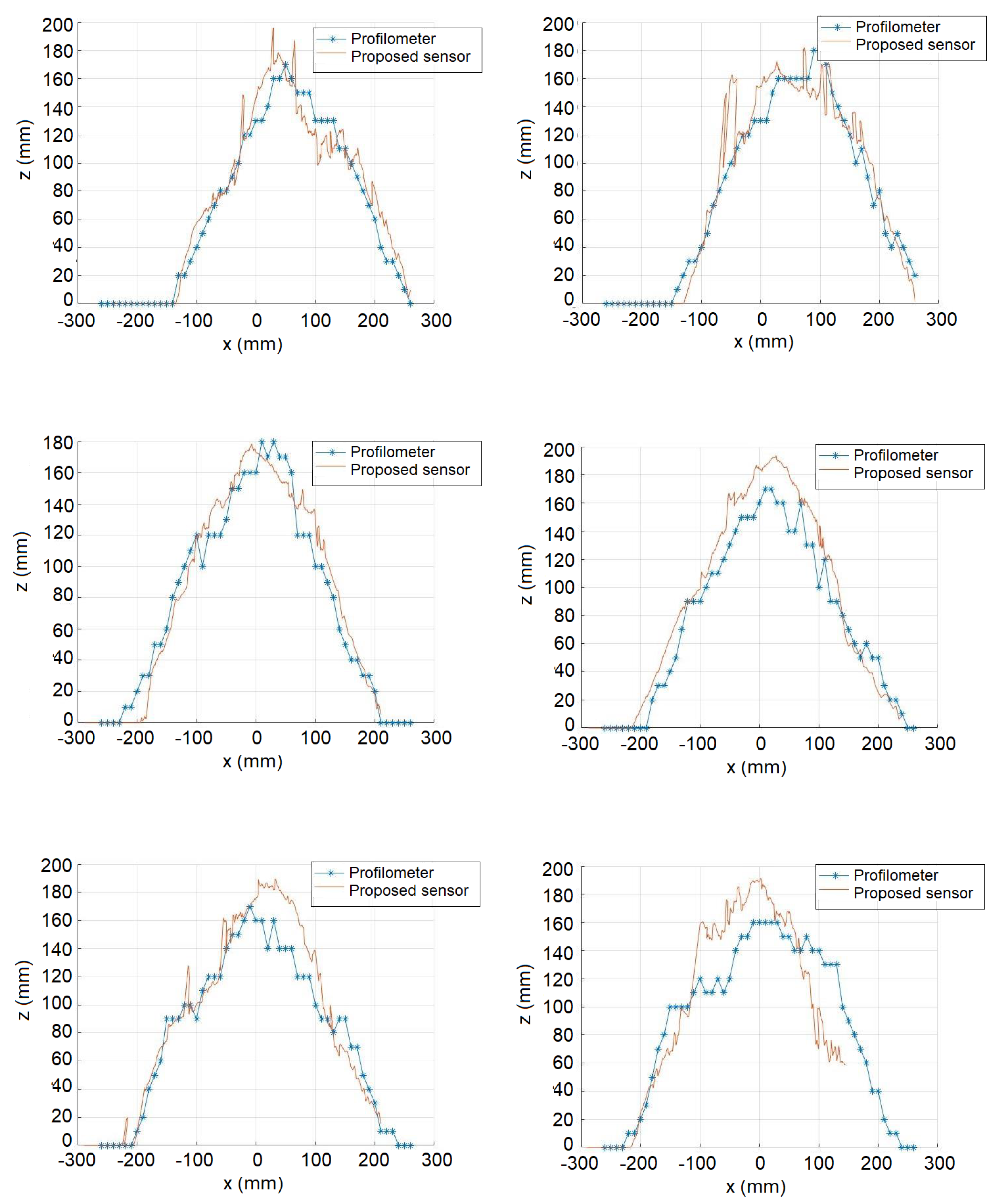

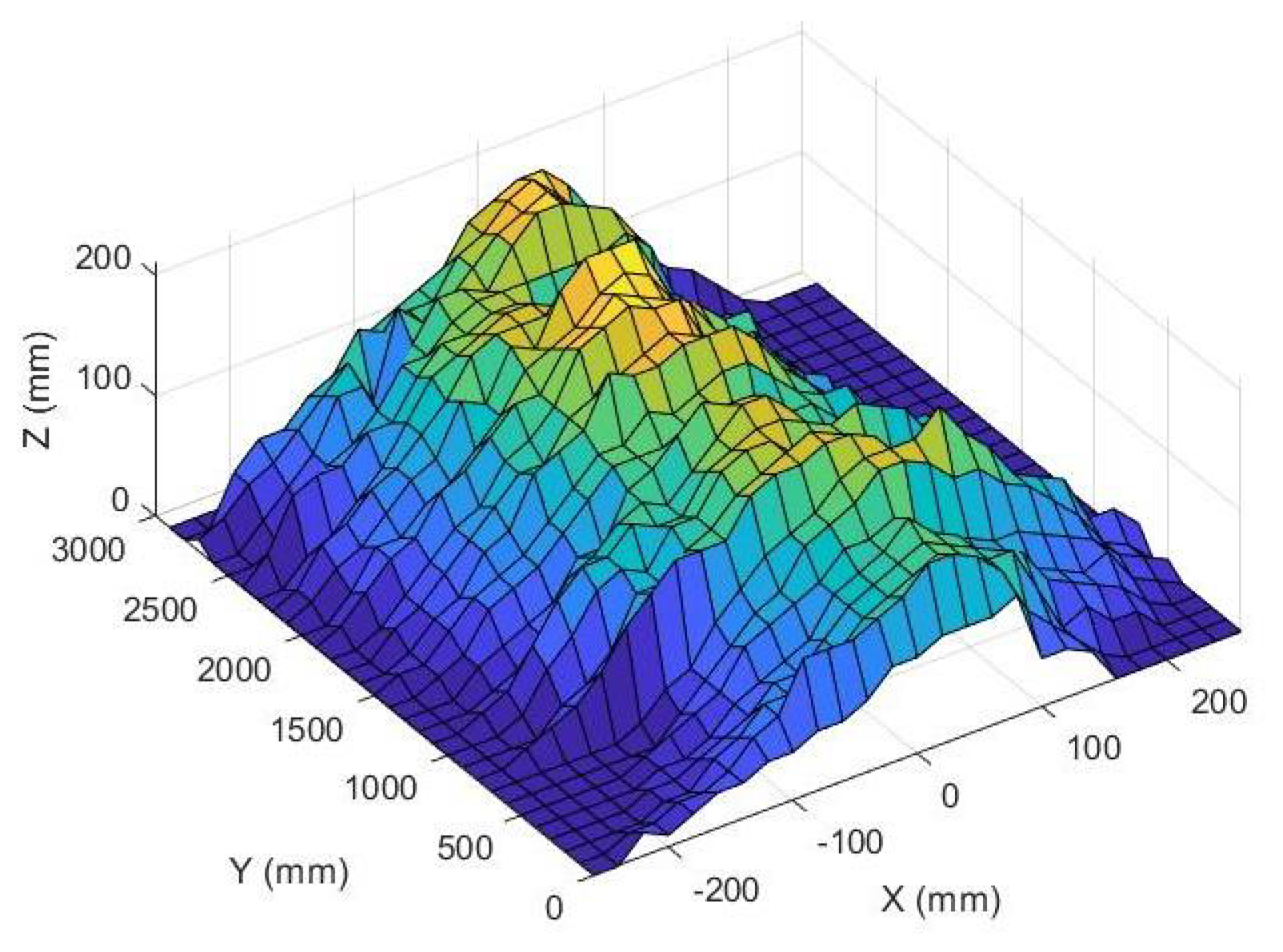

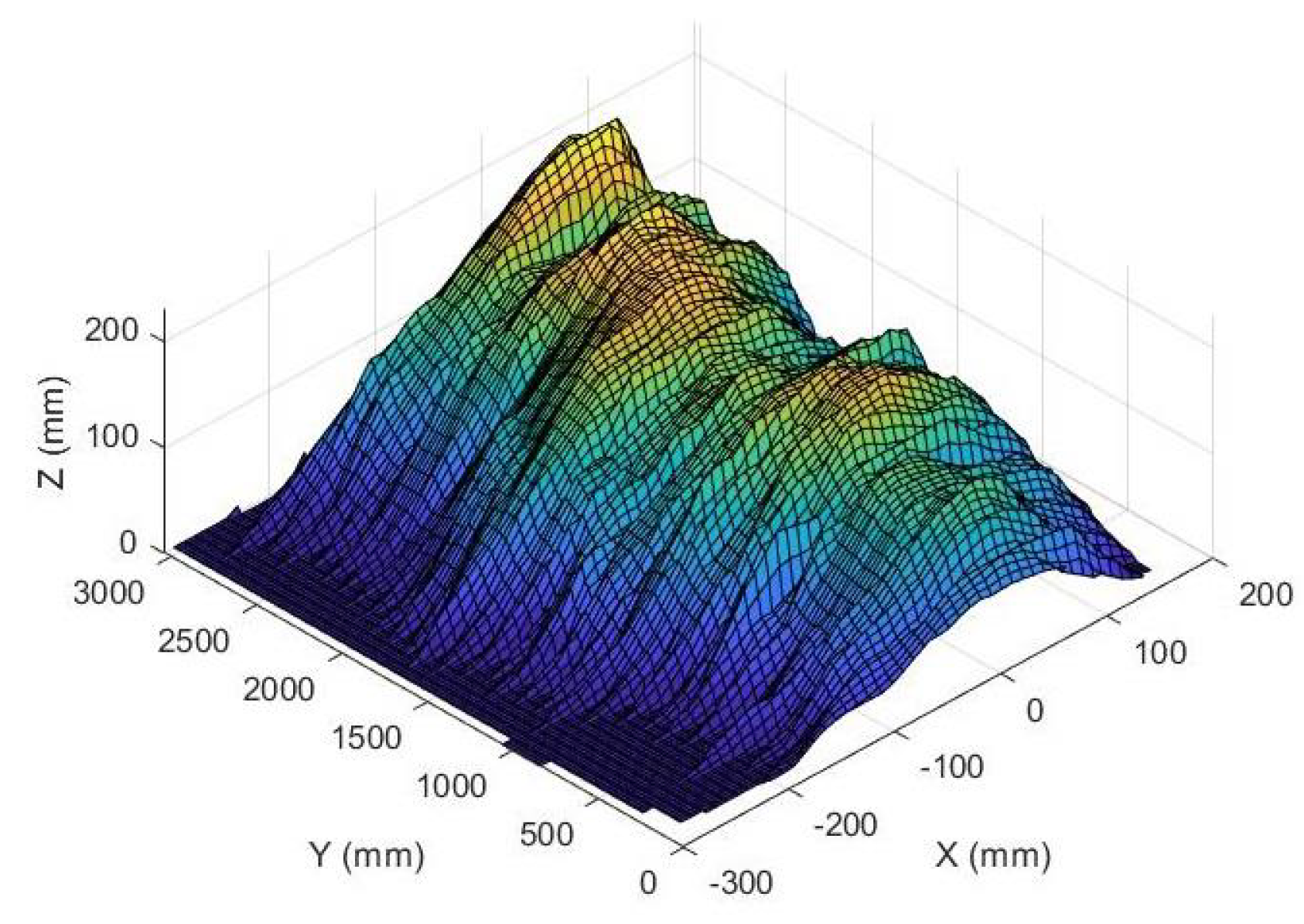

4.4. Triangulation

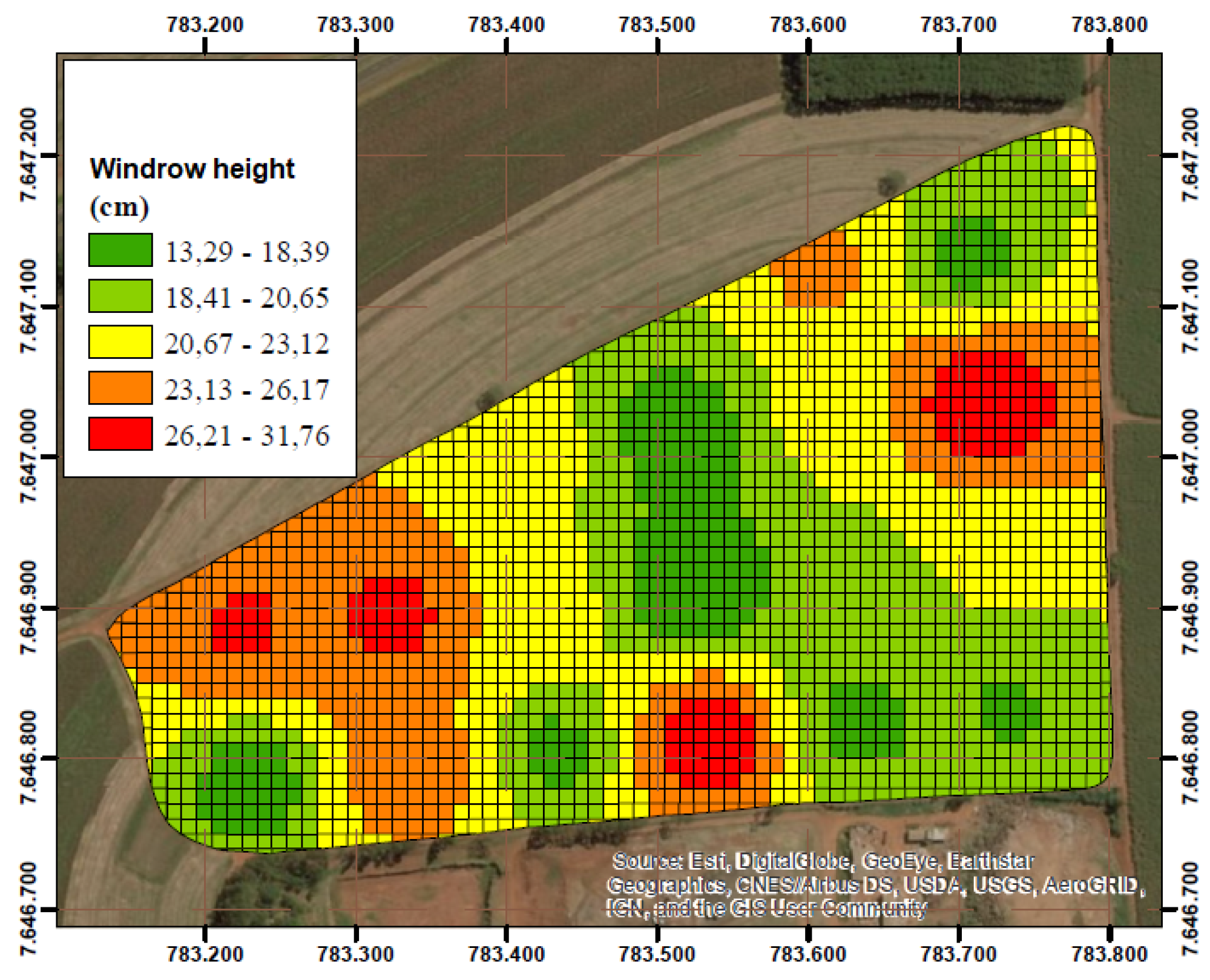

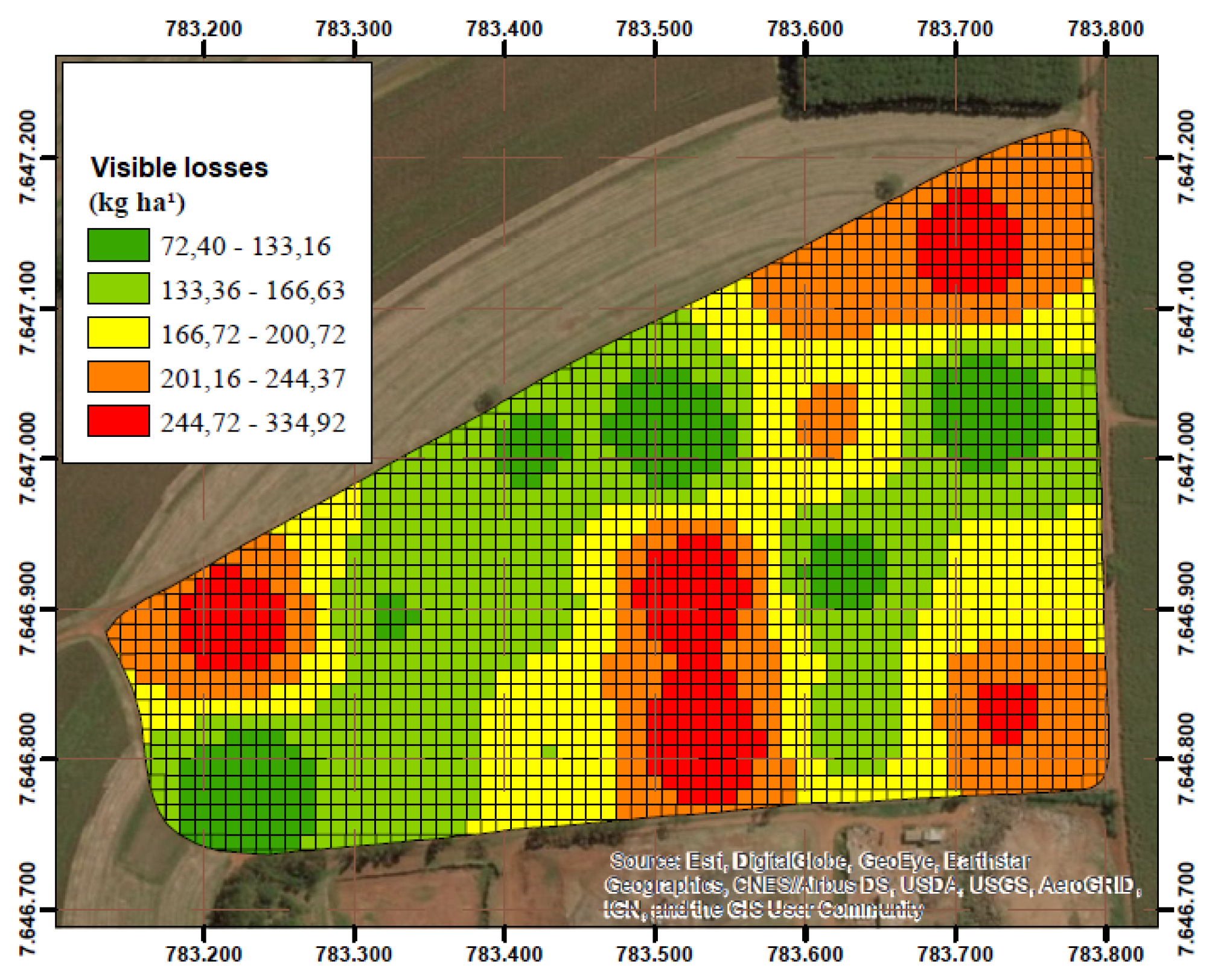

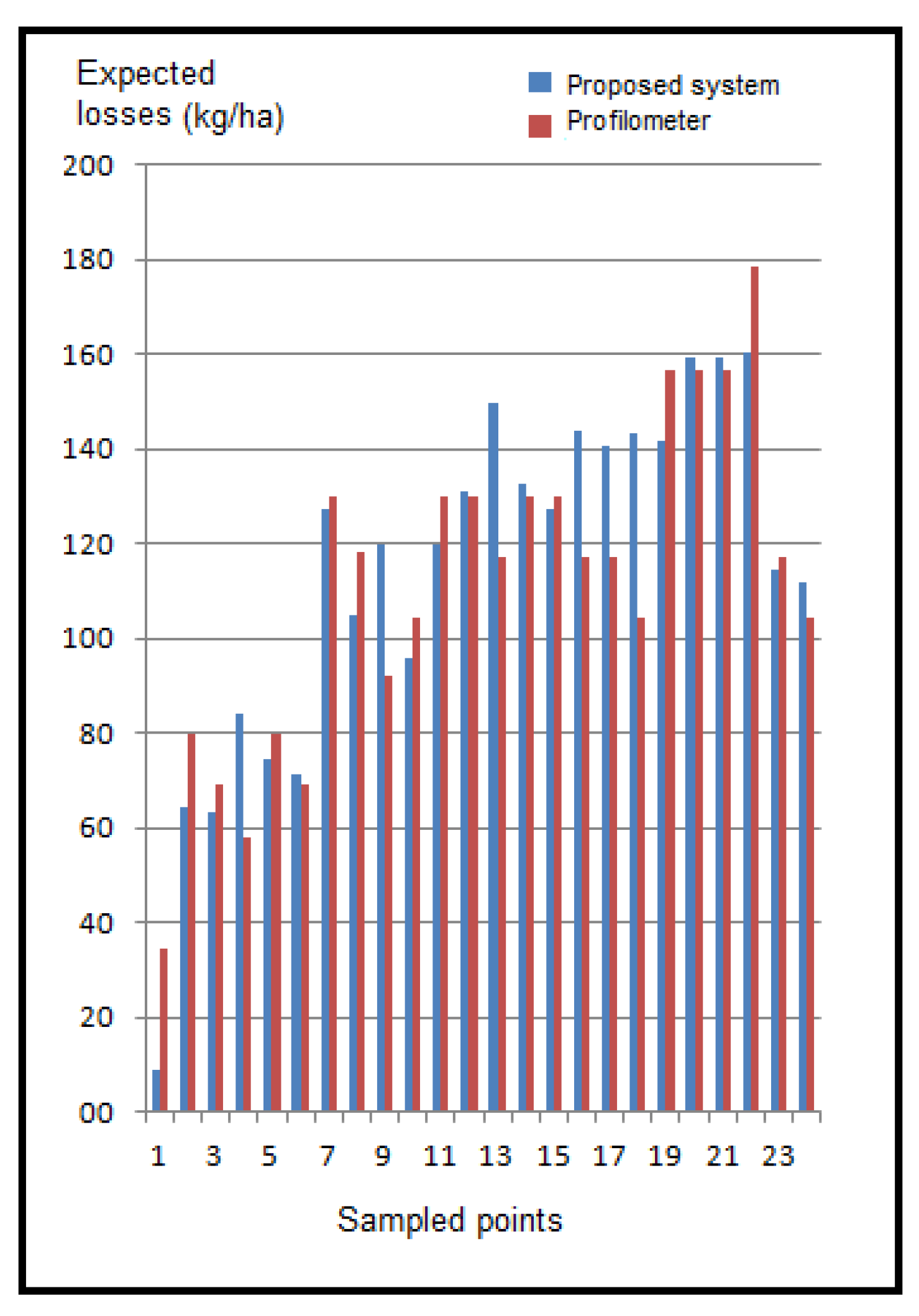

4.5. Estimated Losses

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Fasiolo, D.T.; Scalera, L.; Maset, E.; Gasparetto, A. Towards autonomous mapping in agriculture: A review of supportive technologies for ground robotics. Robot. Auton. Syst. 2023, 169, 104514.

- Manish, R.; Lin, Y.-C.; Ravi, R.; Hasheminasab, S.M.; Zhou, T.; Habib, A. Development of a miniaturized mobile mapping system for in-row, under-canopy phenotyping. Remote Sens. 2021, 13, 276.

- Baek, E.-T.; Im, D.-Y. ROS-based unmanned mobile robot platform for agriculture. Appl. Sci. 2022, 12, 4335.

- Garrido, M.; Paraforos, D.S.; Reiser, D.; Arellano, M.V.; Griepentrog, H.W.; Valero, C. 3D maize plant reconstruction based on georeferenced overlapping LiDAR point clouds. Remote Sens. 2015, 7, 17077–17096.

- Chebrolu, N.; Lottes, P.; Schaefer, A.; Winterhalter, W.; Burgard, W.; Stachniss, C. Agricultural robot dataset for plant classification, localization and mapping on sugar beet fields. Int. J. Robot. Res. 2017, 36, 1045–1052.

- Cubero, S.; Marco-Noales, E.; Aleixos, N.; Barbé, S.; Blasco, J. Robhortic: A field robot to detect pests and diseases in horticultural crops by proximal sensing. Agriculture 2020, 10, 276.

- Gasparino, M.V.; Higuti, V.A.; Velasquez, A.E.; Becker, M. Improved localization in a corn crop row using a rotated laser rangefinder for three-dimensional data acquisition. J. Braz. Soc. Mech. Sci. Eng. 2020, 42, 1–10.

- Pire, T.; Mujica, M.; Civera, J.; Kofman, E. The Rosario dataset: Multisensor data for localization and mapping in agricultural environments. Int. J. Robot. Res. 2019, 38, 633–641.

- Underwood, J.; Wendel, A.; Schofield, B.; McMurray, L.; Kimber, R. Efficient in-field plant phenomics for row-crops with an autonomous ground vehicle. J. Field Robot. 2017, 34, 1061–1083.

- Kragh, M.F.; Christiansen, P.; Laursen, M.S.; Larsen, M.; Steen, K.A.; Green, O.; Karstoft, H.; Jørgensen, R.N. Fieldsafe: Dataset for obstacle detection in agriculture. Sensors 2017, 17, 2579.

- Krus, A.; Van Apeldoorn, D.; Valero, C.; Ramirez, J.J. Acquiring plant features with optical sensing devices in an organic strip-cropping system. Agronomy 2020, 10, 197.

- Grimstad, L.; From, P.J. The Thorvald II agricultural robotic system. Robotics 2017, 6, 24.

- Shafiekhani, A.; Kadam, S.; Fritschi, F.B.; DeSouza, G.N. Vinobot and vinoculer: Two robotic platforms for high-throughput field phenotyping. Sensors 2017, 17, 214.

- de Silva, R.; Cielniak, G.; Gao, J. Towards agricultural autonomy: Crop row detection under varying field conditions using deep learning. arXiv 2021, arXiv:2109.08247.

- Brito Filho, A.L.P.; Carneiro, F.M.; Souza, J.B.C.; Almeida, S.L.H.; Lena, B.P.; Silva, R.P. Does the Soil Tillage Affect the Quality of the Peanut Picker? Agronomy 2023, 13, 1024.

- Santos, E. Produtividade e Perdas em Função da Antecipação do Arranquio Mecanizado de Amendoim; Universidade Estadual Paulista: Jaboticabal, Brazil, 2011.

- Cavichioli, F.A.; Zerbato, C.; Bertonha, R.S.; da Silva, R.P.; Silva, V.F.A. Perdas quantitativas de amendoim nos períodos do dia em sistemas mecanizados de colheita. Científica 2014, 42, 211–215.

- Zerbato, C.; Furlani, C.E.A.; Ormond, A.T.S.; Gírio, L.A.S.; Carneiro, F.M.; da Silva, R.P. Statistical process control applied to mechanized peanut sowing as a function of soil texture. PLoS ONE 2017, 12, e0180399.

- Anco, D.J.; Thomas, J.S.; Jordan, D.L.; Shew, B.B.; Monfort, W.S.; Mehl, H.L.; Campbell, H.L. Peanut Yield Loss in the Presence of Defoliation Caused by Late or Early Leaf Spot. Plant Dis. 2020, 104, 1390–1399.

- Shen, H.; Yang, H.; Gao, Q.; Gu, F.; Hu, Z.; Wu, F.; Chen, Y.; Cao, M. Experimental Research for Digging and Inverting of Upright Peanuts by Digger-Inverter. Agriculture 2023, 13, 847.

- dos Santos, A.F.; Oliviera, L.P.; Oliveira, B.R.; Ormond, A.T.S.; da Silva, R.P. Can digger blades wear affect the quality of peanut digging? Rev. Eng. Agric. 2021, 29, 49–57.

- Ortiz, B.V.; Balkcom, K.B.; Duzy, L.; van Santen, E.; Hartzog, D.L. Evaluation of agronomic and economic benefits of using RTK-GPS-based auto-steer guidance systems for peanut digging operations. Precis. Agric. 2013, 14, 357–375.

- Yang, H.; Cao, M.; Wang, B.; Hu, Z.; Xu, H.; Wang, S.; Yu, Z. Design and Test of a Tangential-Axial Flow Picking Device for Peanut Combine Harvesting. Agriculture 2022, 12, 179.

- Shi, L.; Wang, B.; Hu, Z.; Yang, H. Mechanism and Experiment of Full-Feeding Tangential-Flow Picking for Peanut Harvesting. Agriculture 2022, 12, 1448.

- Azmoode-Mishamandani, A.; Abdollahpoor, S.; Navid, H.; Vahed, M.M. Performance evaluation of a peanut harvesting machine in Guilan province, Iran. Int. J. Biosci. (IJB) 2014, 5, 94–101.

- Ferezin, E.; Voltarelli, M.A.; Silva, R.P.; Zerbato, C.; Cassia, M.T. Power take-off rotation and operation quality of peanut mechanized digging. Afr. J. Agric. Res. 2015, 10, 2486–2493.

- Bunhola, T.M.; de Paula Borba, M.A.; de Oliveira, D.T.; Zerbato, C.; da Silva, R.P. Mapas temáticos para perdas no recolhimento em função da altura da leira. In Conference: 14º Encontro sobre a cultura do Amendoim; Anais: Jaboticabal, Brazil, 2021.

- Li, L.; He, F.; Fan, R.; Fan, B.; Yan, X. 3D reconstruction based on hierarchical reinforcement learning with transferability. Integr. Comput.-Aided Eng. 2023, 30, 327–339.

- Hu, X.; Xiong, N.; Yang, L.T.; Li, D. A surface reconstruction approach with a rotated camera. In Proceedings of the International Symposium on Computer Science and Its Applications, CSA 2008, Hobart, TAS, Australia, 13–15 October 2008; pp. 72–77.

- Li, D.; Zhang, H.; Song, Z.; Man, D.; Jones, M.W. An automatic laser scanning system for accurate 3D reconstruction of indoor scenes. In Proceedings of the 2017 IEEE International Conference on Information and Automation (ICIA), Macao, China, 18–20 July 2017; pp. 826–831.

- Shan, P.; Jiang, X.; Du, Y.; Ji, H.; Li, P.; Lyu, C.; Yang, W.; Liu, Y. A laser triangulation based on 3D scanner used for an autonomous interior finishing robot. In Proceedings of the 2017 IEEE International Conference on Robotics and Biomimetics, ROBIO 2017, Macau, China, 5–8 December 2017; pp. 1–6.

- Hadiyoso, S.; Musaharpa, G.T.; Wijayanto, I. Prototype implementation of dual laser 3D scanner system using cloud to cloud merging method. In Proceedings of the APWiMob 2017, IEEE Asia Pacific Conference on Wireless and Mobile, Bandung, Indonesia, 28–29 November 2017; pp. 36–40.

- Schlosser, J.F.; Herzog, D.; Rodrigues, H.E.; Souza, D.C. Tratores do Brasil. Cultiv. Máquinas 2023, XXII, 20–28.

- Simões, R. Controle Estatístico Aplicado ao Processo de Colheita Mecanizada de Sementes de Amendoim; Universidade Estadual Paulista: Jaboticabal, Brazil, 2009.

- Ferezin, E. Sistema Eletrohidráulico para Acionamento da Esteira Vibratória do Invertedor de Amendoim; Universidade Estadual Paulista: Jaboticabal, Brazil, 2015.

| Windrow Height | Estimated Losses |
|---|---|
| 13.29–18.39 | 72.40–133.16 |
| 18.41–20.65 | 133.36–166.63 |
| 20.67–23.12 | 166.72–200.72 |
| 23.13–26.17 | 201.16–244.37 |
| 26.21–31.76 | 244.72–334.92 |
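The class boundaries in the table above can be applied to a newly measured windrow height with a simple range lookup. The sketch below is only an illustration, not the authors' procedure: the function name `loss_range_for_height` is hypothetical, units are left unspecified because the table does not state them, and any height that falls outside the tabulated span or in the small gaps between consecutive classes (for example, between 18.39 and 18.41) returns `None`.

```python
from typing import Optional, Tuple

# Class boundaries copied from the table: (height_min, height_max, loss_min, loss_max).
# Units are not stated in the source table, so none are assumed here.
HEIGHT_LOSS_CLASSES = [
    (13.29, 18.39, 72.40, 133.16),
    (18.41, 20.65, 133.36, 166.63),
    (20.67, 23.12, 166.72, 200.72),
    (23.13, 26.17, 201.16, 244.37),
    (26.21, 31.76, 244.72, 334.92),
]


def loss_range_for_height(height: float) -> Optional[Tuple[float, float]]:
    """Hypothetical helper: return the (min, max) estimated-loss range of the
    windrow-height class that contains `height`.

    Returns None when the height lies outside every tabulated class,
    including the narrow gaps between consecutive classes.
    """
    for h_min, h_max, loss_min, loss_max in HEIGHT_LOSS_CLASSES:
        if h_min <= height <= h_max:
            return (loss_min, loss_max)
    return None


if __name__ == "__main__":
    for h in (15.0, 21.0, 30.0, 35.0):
        print(h, loss_range_for_height(h))
```

Because the tabulated classes leave small gaps between them, a real application would have to decide how to assign heights that fall in those gaps; the sketch deliberately reports them as unclassified rather than guessing.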

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Senni, A.P.; Tronco, M.L.; Pedrino, E.C.; Silva, R.P.d. Automated Windrow Profiling System in Mechanized Peanut Harvesting. AgriEngineering 2024, 6, 3511-3537. https://doi.org/10.3390/agriengineering6040200


Senni, Alexandre Padilha, Mario Luiz Tronco, Emerson Carlos Pedrino, and Rouverson Pereira da Silva. 2024. "Automated Windrow Profiling System in Mechanized Peanut Harvesting." AgriEngineering 6, no. 4: 3511-3537. https://doi.org/10.3390/agriengineering6040200