Development of Pear Pollination System Using Autonomous Drones

Abstract

1. Introduction

- (A) Pollination labor issues:

- (B) Pollination work period issues:

2. Challenges in Building a Pollination Drone System

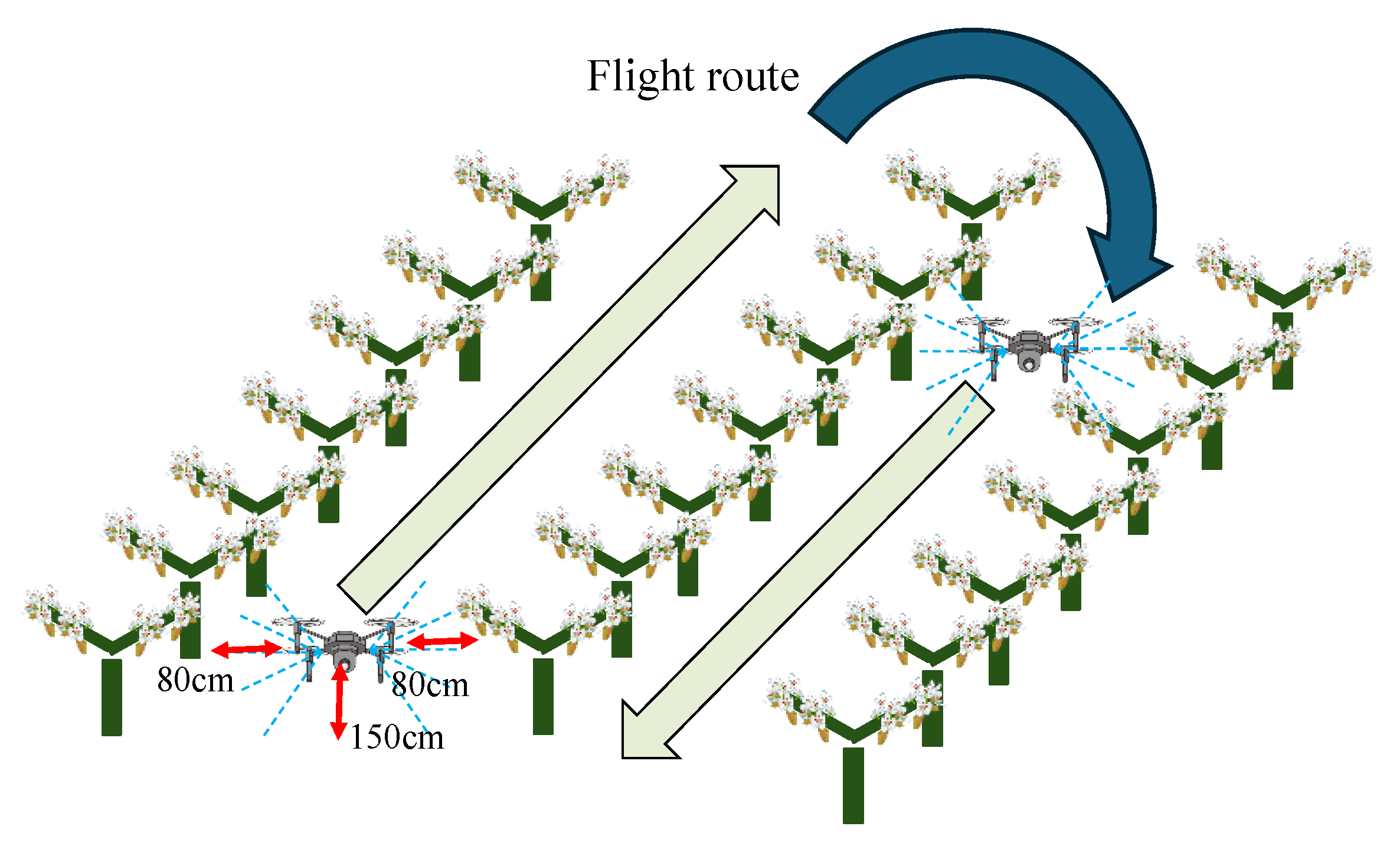

- Challenge (1): Construction of flight routes in the field

- Challenge (2): High-precision flight positioning

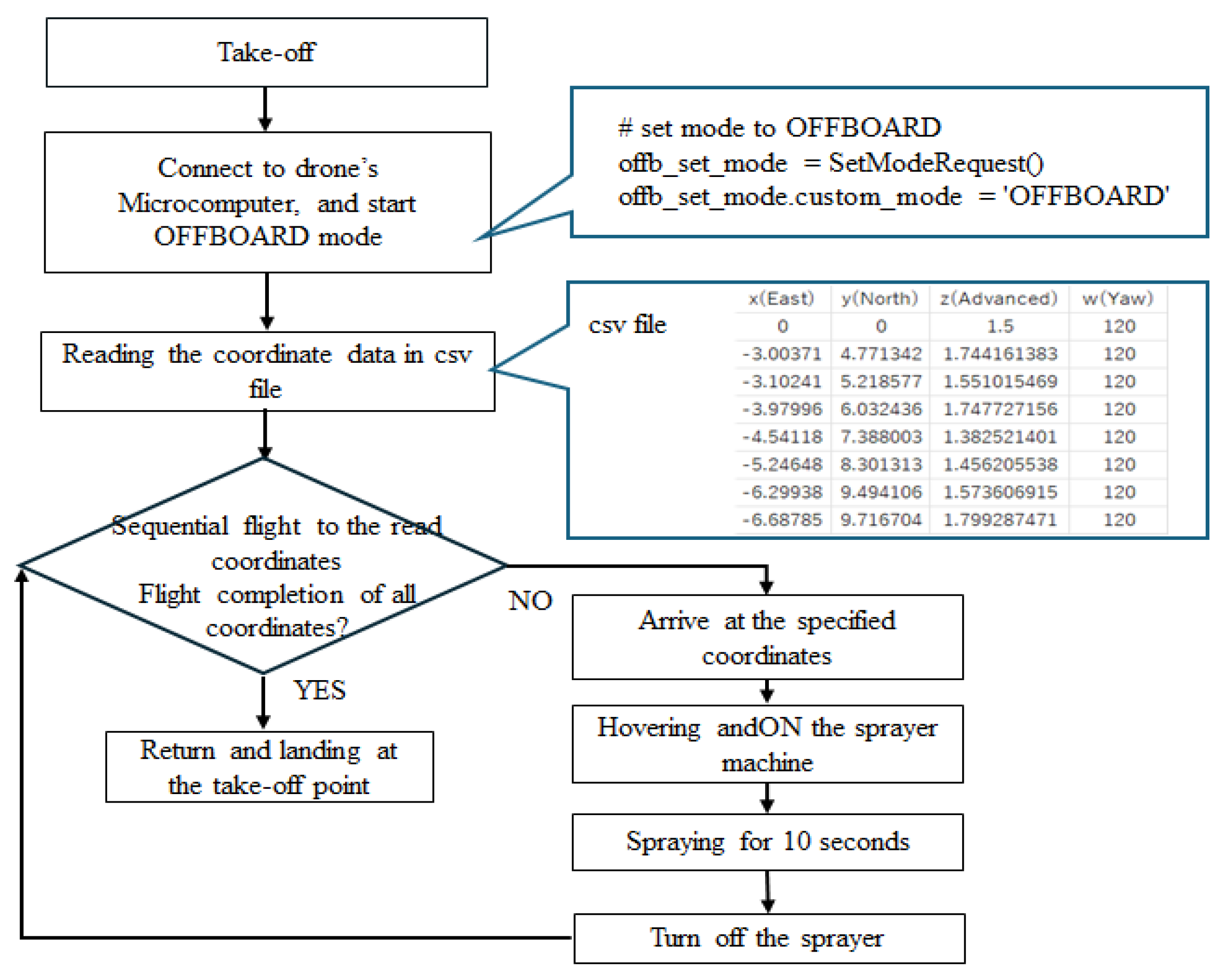

- Challenge (3): Establish a scenario to carry out pollination work

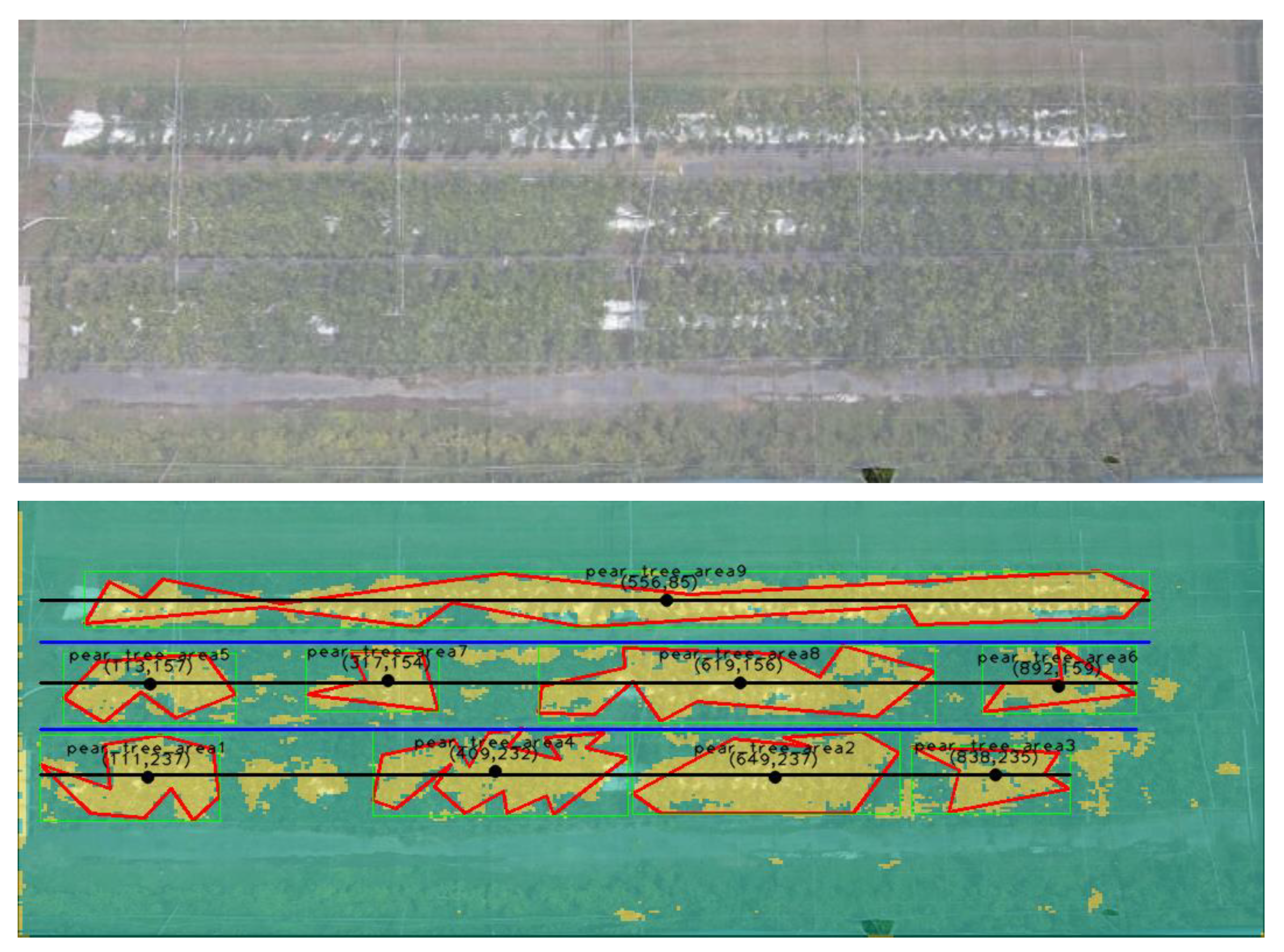

3. Method of Constructing Flight Routes in the Field

3.1. Area Extraction by Segmentation

3.2. Flight Route Coordinate Extraction Method

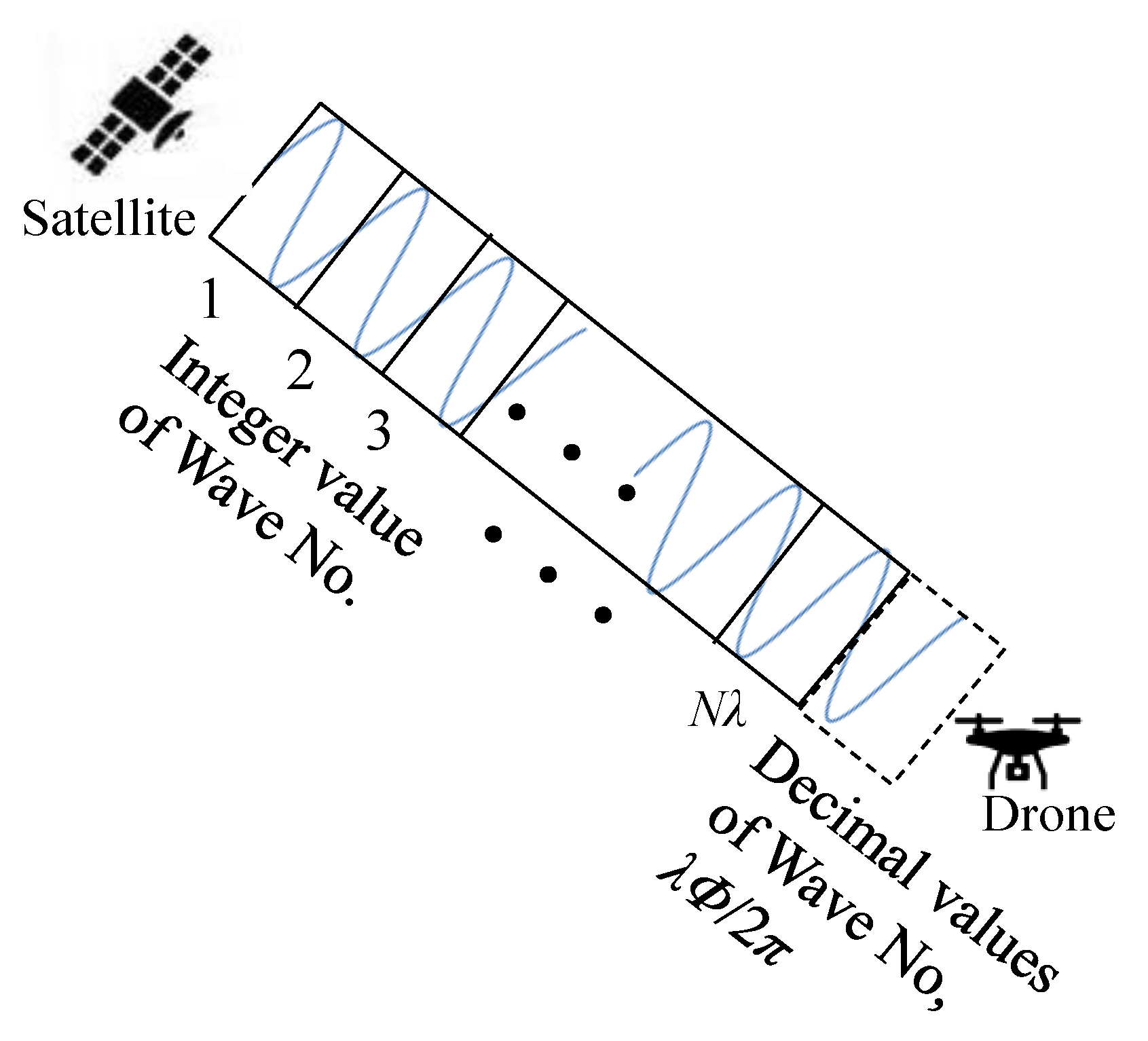

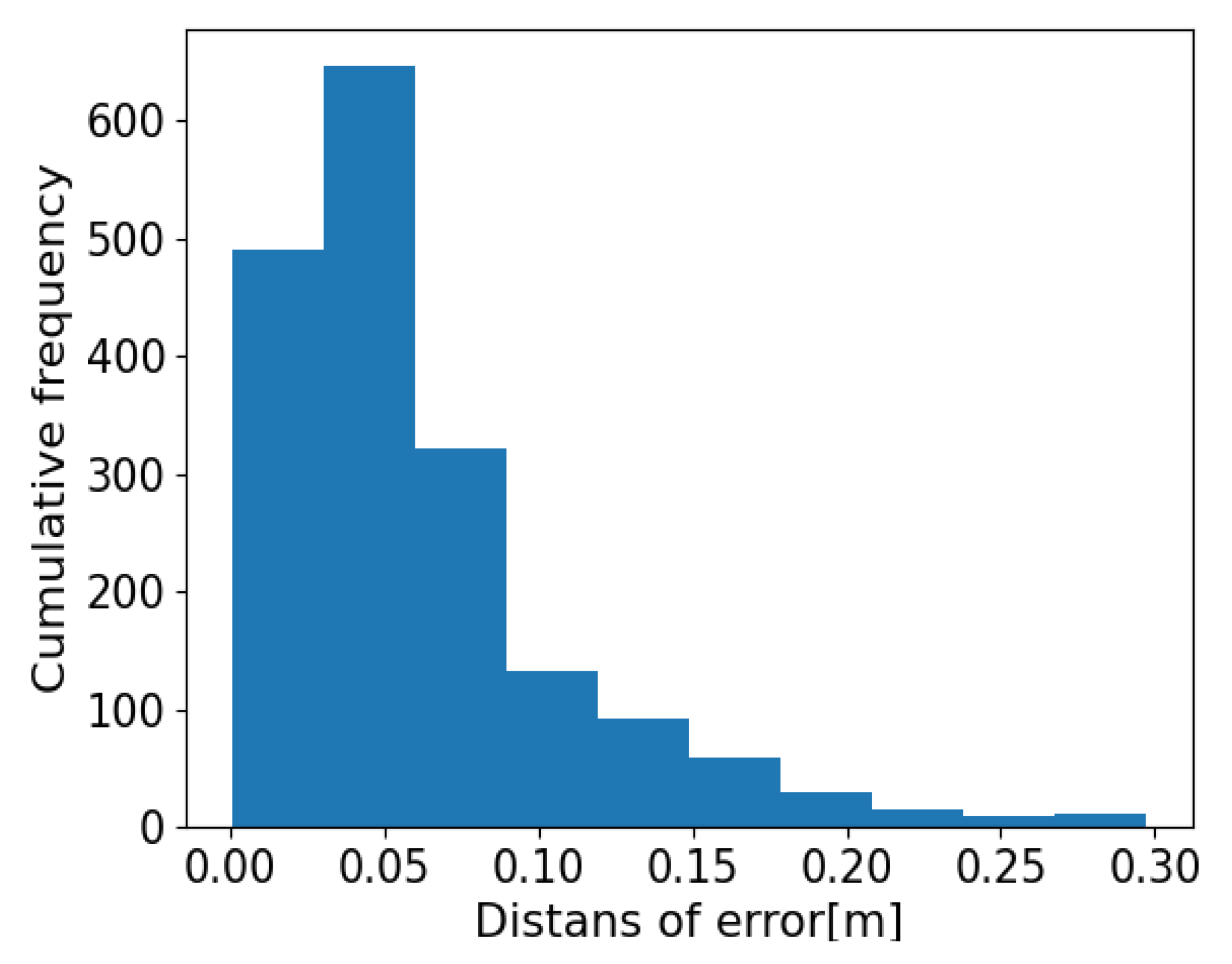

4. Positioning and Flight Accuracy

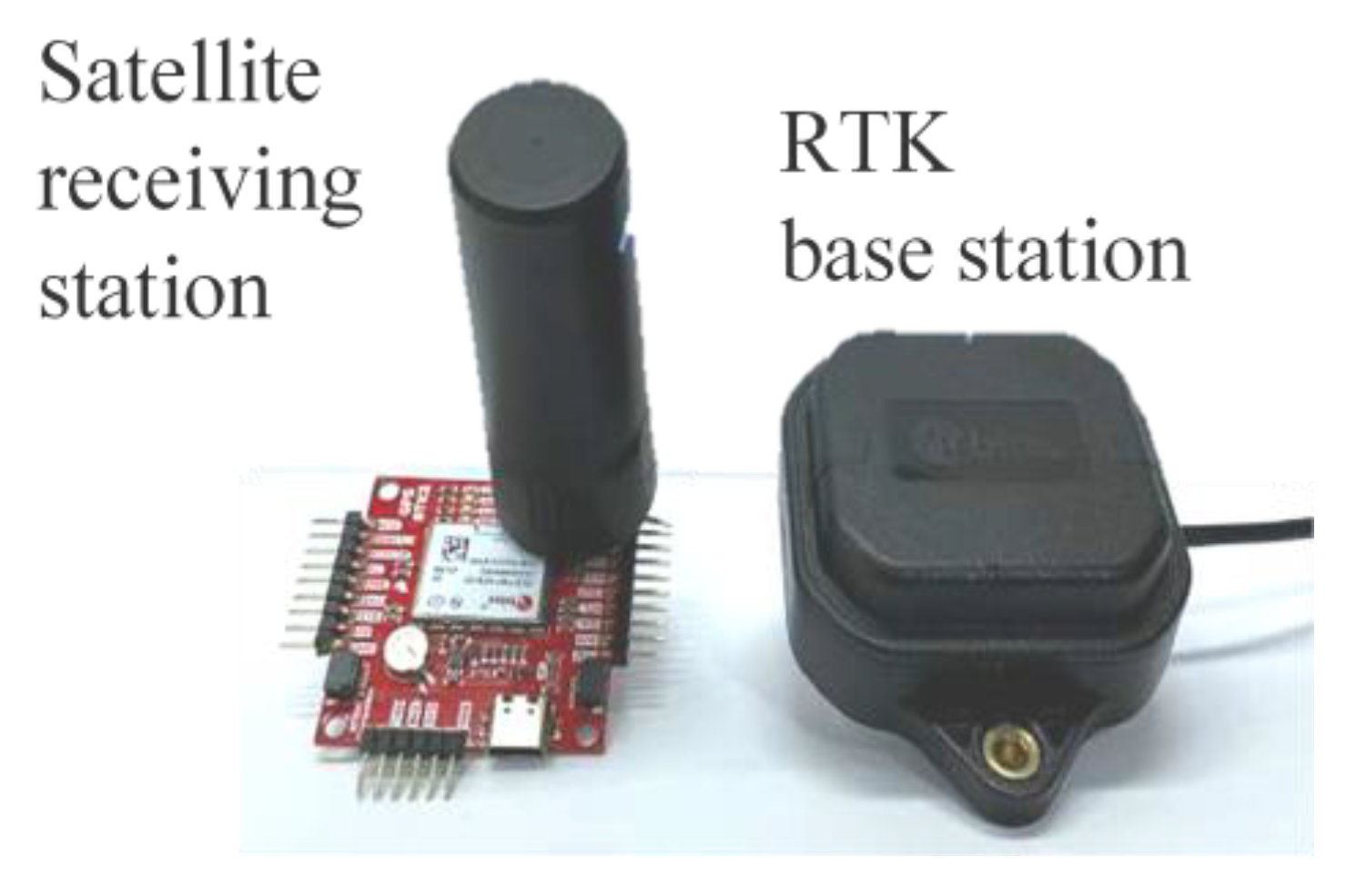

4.1. Overview of RTK-GNSS Positioning Methods

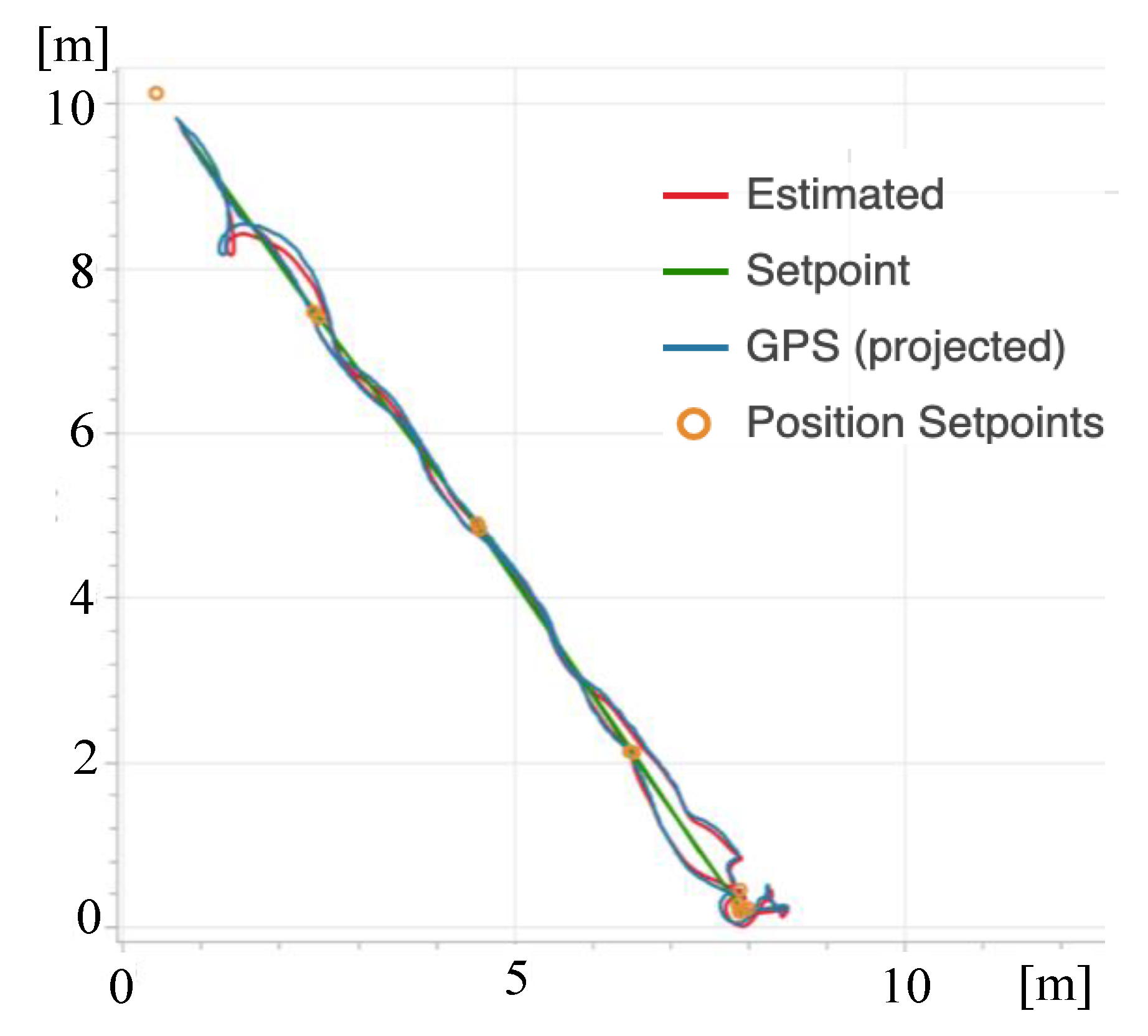

4.2. Verification of Positioning by Experiment

5. Pollination Drone System Configuration

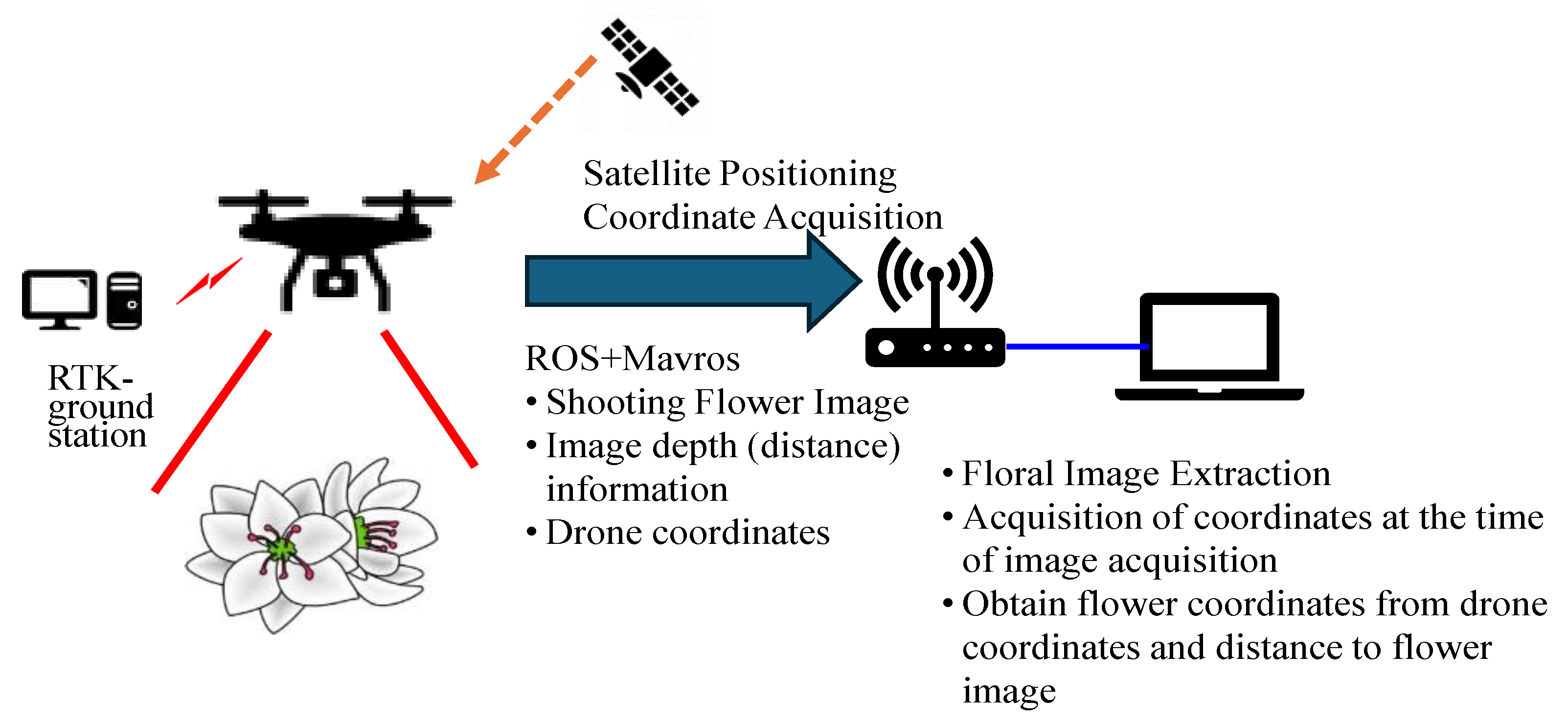

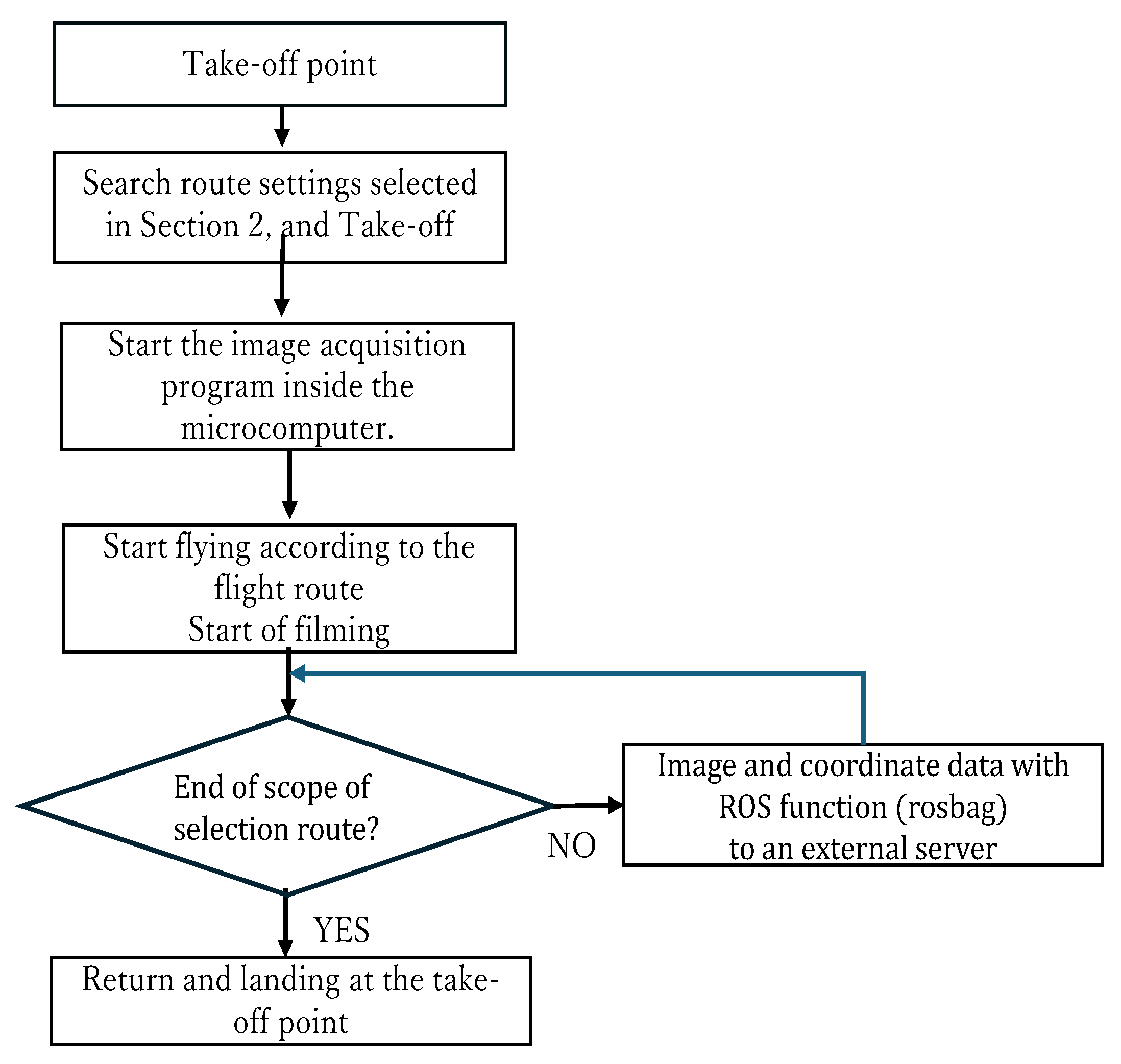

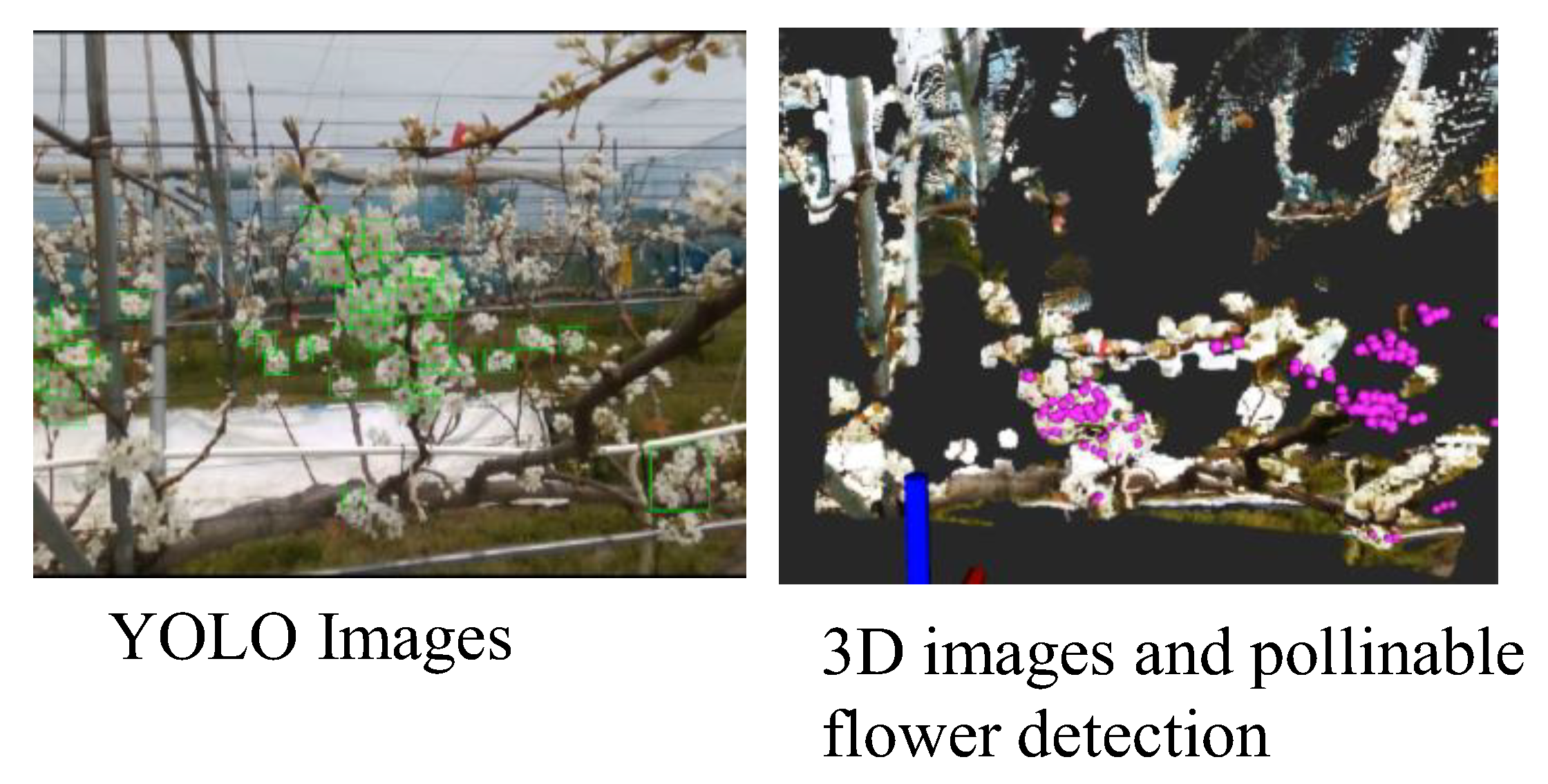

5.1. Flower Search Control

5.2. Method for Estimating Video Coordinates Using a Depth Camera

5.3. Pollination Means and Sprayer Operation

5.4. Drone Airframe Configuration and Pollinator Specifications

6. Results of Experiments

6.1. Verification of In-Field Flight Experiments

6.2. Results of Spraying Experiment

7. Discussion

8. Summary

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Item | Specification |
|---|---|
| Positioning calculation software | QGroundControl |
| Receiver | u-blox ZED-F9P |
| Satellite systems | GPS, GLONASS, Galileo, QZSS |
| Observation point | Nippon Institute of Technology campus ground |

| Item | Specification |
|---|---|
| Size of the aircraft | 61 × 61 × 31 cm |
| Load | 1500 g (excluding battery) |
| Flight time | 18 min (hovering) |
| Camera type | Intel RealSense Depth Camera D435i |
| Satellite systems used | GPS, GLONASS, Galileo, QZSS |

| Pollination Method | Pure Pollen Usage per 1 m of Tree Row (mg) | Powdering Operation Time per 1 m of Tree Row (s) | Number of Flowers per Inflorescence (Count) | Number of Fruits Set per Inflorescence (Count) | Fruiting Rate (%) |
|---|---|---|---|---|---|
| Puff pollination | 39.7 | 196 | 7.0 | 5.5 | 78.9 |
| Solution pollination | 25.9 | 25 | 6.9 | 5.6 | 81.8 |
| Drone pollination | 22.5 | 39 | 6.8 | 5.2 | 77.4 |
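To make the comparison in the table above easier to read, the following minimal sketch expresses each method's pollen usage and operation time (per 1 m of tree row) as a percentage change relative to puff pollination, and the fruiting rate as a difference in percentage points. The values are transcribed from the table; the dictionary layout and the function names `relative_change` and `compare_to` are illustrative assumptions, not part of the original study.

```python
# Values transcribed from the pollination comparison table
# (pollen usage and operation time are per 1 m of tree row).
RESULTS = {
    "Puff pollination": {"pollen_mg": 39.7, "time_s": 196, "fruiting_pct": 78.9},
    "Solution pollination": {"pollen_mg": 25.9, "time_s": 25, "fruiting_pct": 81.8},
    "Drone pollination": {"pollen_mg": 22.5, "time_s": 39, "fruiting_pct": 77.4},
}


def relative_change(value, baseline):
    """Percentage change of value relative to baseline (negative means a reduction)."""
    return (value - baseline) / baseline * 100.0


def compare_to(baseline_name, results=RESULTS):
    """Compare every other method against the named baseline method (hypothetical helper)."""
    base = results[baseline_name]
    summary = {}
    for method, row in results.items():
        if method == baseline_name:
            continue
        summary[method] = {
            "pollen_change_pct": round(relative_change(row["pollen_mg"], base["pollen_mg"]), 1),
            "time_change_pct": round(relative_change(row["time_s"], base["time_s"]), 1),
            # Difference in percentage points, not a relative change.
            "fruiting_diff_points": round(row["fruiting_pct"] - base["fruiting_pct"], 1),
        }
    return summary


if __name__ == "__main__":
    for method, row in compare_to("Puff pollination").items():
        print(method, row)
```

Under these figures, drone pollination uses roughly 43% less pure pollen and about 80% less operation time per metre of tree row than puff pollination, while its fruiting rate is 1.5 percentage points lower. This is simple arithmetic on the tabulated means and says nothing about statistical significance.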

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Miyoshi, K.; Hiraguri, T.; Shimizu, H.; Hattori, K.; Kimura, T.; Okubo, S.; Endo, K.; Shimada, T.; Shibasaki, A.; Takemura, Y. Development of Pear Pollination System Using Autonomous Drones. AgriEngineering 2025, 7, 68. https://doi.org/10.3390/agriengineering7030068